1, Algorithm efficiency

When we solve problems, there may be a variety of algorithms that are feasible. Which algorithm should we choose? Which algorithm is better?

In fact, to measure the quality of an algorithm, we look at the execution efficiency of the algorithm.

After the algorithm is written into an executable program, it needs time and space (memory) to run. Therefore, the efficiency of the algorithm is measured by the two dimensions of time and space, that is, the topic to be discussed in this paper - time complexity and space complexity

Time complexity mainly measures the running speed of an algorithm, while space complexity mainly measures the additional space required for the operation of an algorithm. In the early stage of computer development, the storage capacity of the computer is very small. So I care about the complexity of space. However, with the rapid development of computer industry, the storage capacity of computer has reached a high level. Therefore, we no longer need to pay special attention to the spatial complexity of an algorithm.

2, Time complexity

2.1 concept of time complexity

In computer science, the time complexity of an algorithm is a function (the function here refers to the function expression with unknowns in Mathematics), which quantitatively describes the running time of the algorithm. However, the time consumed by the execution of an algorithm cannot be calculated theoretically. It can only be known when the program is run on the machine, but the time spent must be different due to the different memory size and cpu of each machine. Therefore, we stipulate:

The execution times of basic operations in the algorithm is the time complexity of the algorithm.

In that case, let's see how many times the following example is actually executed (number of cycles):

void Func1(int N)

{

int count = 0;

//Cycle 1

for (int i = 0; i < N; ++i)

{

for (int j = 0; j < N; ++j)

{

++count;

}

}

//Cycle 2

for (int k = 0; k < 2 * N; ++k)

{

++count;

}

//Cycle 3

int M = 10;

while (M--)

{

++count;

}

printf("%d\n", count);

}

NN executed in loop 1

2N times in loop 2

Performed 10 times in loop 3

So we can get a function of time complexity

F(N) = N*N + 2 * N + 10

It can be found from this function that when N is large, the influence of the latter two terms on the result is smaller. Therefore, when N is large enough, the size of F(N) is mainly determined by N^2. In fact, when we calculate the time complexity, we do not have to calculate the exact execution times, but only the approximate execution times. Therefore, we need to use the asymptotic representation of large O here.

Next, let's look at what is the asymptotic representation of big O.

2.2 large O progressive representation

Generally speaking, it is actually the estimation that retains the item with the greatest impact. But let's look at the precise definition:

Big O notation: a mathematical symbol used to describe the asymptotic behavior of a function.

Big O progressive representation rules:

- Replace all addition constants in the run time with constant 1. (popular understanding: if the code execution times are constant times, the time complexity is O(1))

- In the modified run times function, only the highest order item is retained (popular understanding: the item that has the greatest impact on the result is retained)

- If the highest order term exists and is not 1, the constant multiplied by this item is removed. The result is large O-order. (popular understanding: if the coefficient of the highest order term is not 1, remove this coefficient)

Therefore, after using the progressive representation of big O, the time complexity of Func1 is:

O(N^2)In this way, we remove those items that have little impact on the results and show the execution times concisely.

In addition, the time complexity of some algorithms has the best, average and worst cases:

Worst case: maximum number of runs of any input scale (upper bound)

Average case: expected number of runs of any input scale

Best case: minimum number of runs of any input scale (lower bound)

Time complexity is a pessimistic expectation, so time complexity looks at the worst case.

2.3 calculation example of time complexity

From the above description rules, we may not know how to use the large O progressive representation to represent the time complexity. Let's look at the following examples

Example 1:

// Calculate the time complexity of Func2?

void Func2(int N)

{

int count = 0;

for (int k = 0; k < 2 * N; ++k)

{

++count;

}

int M = 10;

while (M--)

{

++count;

}

printf("%d\n", count);

}

Resolution:

The first loop is executed 2 * N times, and the second loop is executed 10 times.

So a total of 2 * N + 10 times were performed

The highest order term is 2 * N, so the time complexity is O(N)

Example 2:

// Calculate the time complexity of Func3?

void Func3(int N, int M)

{

int count = 0;

for (int k = 0; k < M; ++k)

{

++count;

}

for (int k = 0; k < N; ++k)

{

++count;

}

printf("%d\n", count);

}

Resolution:

The first cycle is executed M times, and the second cycle is executed N times. The total number of executions is M+N.

Since both M and N here are unknowns and are the highest terms, we don't know who is bigger and who is smaller, so the time complexity is O(M+N).

- If M is much larger than N, the time complexity is O(M)

- If N is much larger than M, the time complexity is O(N)

- If M and N are approximate (the size relationship between M and N is not explained), the time complexity is O(M+N)

Example 3:

// Calculate the time complexity of Func4?

void Func4(int N)

{

int count = 0;

for (int k = 0; k < 100; ++k)

{

++count;

}

printf("%d\n", count);

}

Resolution:

The loop was executed 100 times

So the time complexity is O(1)

O(1) does not mean that the algorithm runs once, but constant times

Example 4:

// The time complexity of calculating strchr? const char * strchr ( const char * str, int character );

Resolution:

The strchr function is a library function that finds a character in a string.

Find a character in a string, but since the length of the string received each time may change, the length is unknown. The unknown size in time complexity is represented by N.

Therefore, the time complexity can be divided into three cases:

The best is 1, the worst is N, and the average is N/2. Since the calculation of time complexity looks at the worst case, the time complexity is O(N).

Example 5:

// Calculate the time complexity of BubbleSort?

void BubbleSort(int* a, int n)

{

assert(a);

for (size_t end = n; end > 0; --end)

{

int exchange = 0;

for (size_t i = 1; i < end; ++i)

{

if (a[i - 1] > a[i])

{

Swap(&a[i - 1], &a[i]);

exchange = 1;

}

}

if (exchange == 0)

break;

}

}

Resolution:

After carefully understanding this code, we can know that the execution times of the loop are:

(n-1)+(n-2)+ ... +2+1

In fact, this is equivalent to the sum of an arithmetic sequence, and the result is: n * (n-1) / 2

The highest order term is: (n^2) / 2, so the time complexity is O(n^2)

Example 6:

// Calculate the time complexity of BinarySearch?

int BinarySearch(int* a, int n, int x)

{

assert(a);

int begin = 0;

int end = n;

while (begin < end)

{

int mid = begin + ((end - begin) >> 1);

if (a[mid] < x)

begin = mid + 1;

else if (a[mid] > x)

end = mid;

else

return mid;

}

return -1;

}

This is a binary search algorithm.

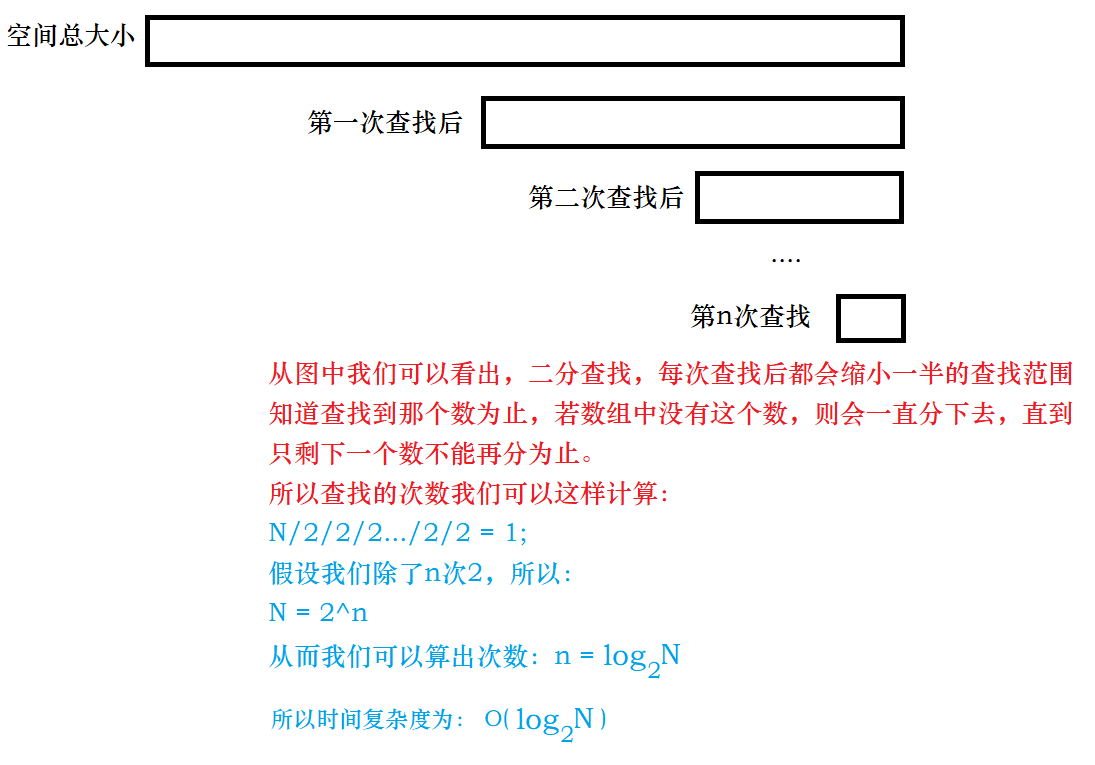

Here we need to pay attention to one thing: to calculate the time complexity, we should not only look at several layers of cycles, but also look at its ideas. Our binary search algorithm is to reduce the search range by half every time, as shown in the figure:

Example 7:

// The time complexity of computing factorial recursive Fac?

long long Fac(size_t N)

{

if (0 == N)

return 1;

return Fac(N - 1) * N;

}

Resolution:

Through calculation, we can know the number of recursive calls:

F(N) = N * F(N-1)

F(N-1) = (N-1) * F(N-2)

F(N-3) = (N-2) * F(N-4)

...

It recurses N times, and the time complexity is O(N)

Example 8:

// The time complexity of computing Fibonacci recursive Fib?

long long Fib(size_t N)

{

if (N < 3)

return 1;

return Fib(N - 1) + Fib(N - 2);

}

Resolution:

Fibonacci Number: starting from the third term, each term is equal to the sum of the first two terms

1,1,2,3,5,8,13...

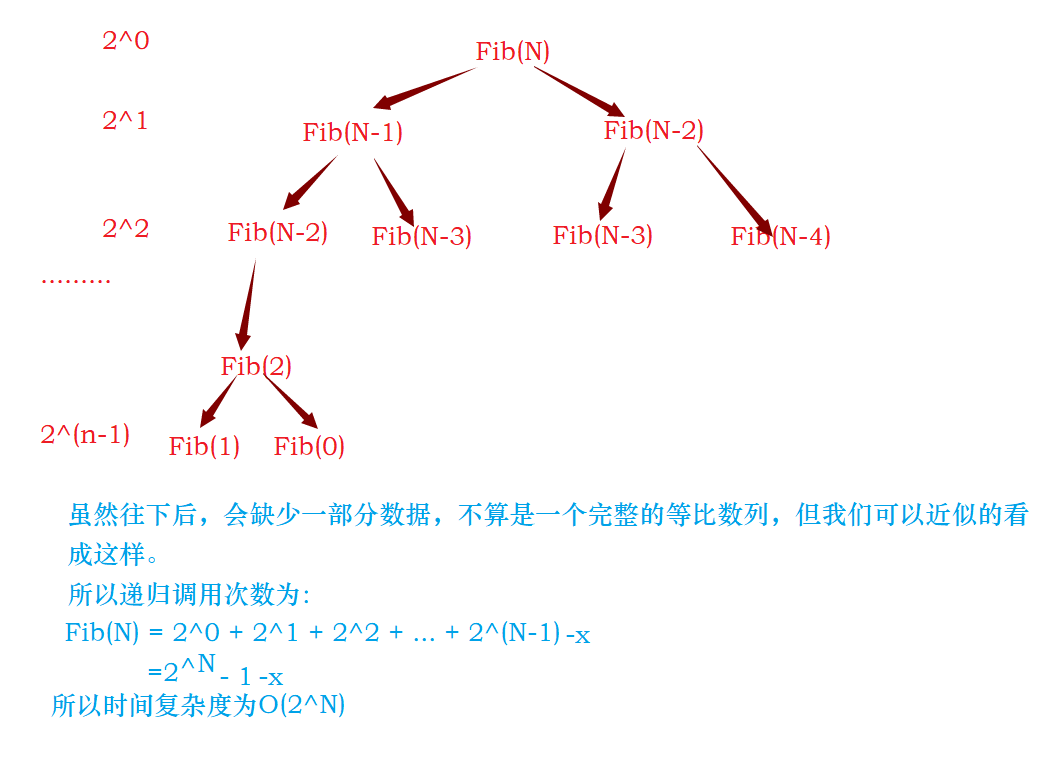

How to calculate the number of recursive calls?

As shown in the figure:

3, Spatial complexity

3.1 concept of spatial complexity

Space complexity is also a mathematical function expression, which is a measure of the temporary additional storage space occupied during the re execution of an algorithm.

Space complexity is not how many bytes of space the program occupies, because it doesn't make much sense, so space complexity is the number of variables.

The calculation rules of spatial complexity are basically similar to that of time complexity, and the large O asymptotic representation is also used.

Note: the stack space (storage parameters, local variables, some register information, etc.) required by the function during operation has been determined during compilation. Therefore, the space complexity is mainly determined by the additional space applied by the function during operation.

3.2 space complexity calculation example

Example 1:

// Calculate the spatial complexity of BubbleSort?

void BubbleSort(int* a, int n)

{

assert(a);

for (size_t end = n; end > 0; --end)

{

int exchange = 0;

for (size_t i = 1; i < end; ++i)

{

if (a[i - 1] > a[i])

{

Swap(&a[i - 1], &a[i]);

exchange = 1;

}

}

if (exchange == 0)

break;

}

}

Resolution:

The computational space complexity is determined by displaying the additional space requested by the function at run time.

Therefore, this function additionally opens up the space of exchange, end and i variables, that is, the constant space, so the space complexity is O(1)

Example 2:

// Calculate the spatial complexity of Fibonacci?

// Returns the first n items of the Fibonacci sequence

long long* Fibonacci(size_t n)

{

if (n == 0)

return NULL;

long long* fibArray = (long long*)malloc((n + 1) * sizeof(long long));

fibArray[0] = 0;

fibArray[1] = 1;

for (int i = 2; i <= n; ++i)

{

fibArray[i] = fibArray[i - 1] + fibArray[i - 2];

}

return fibArray;

}

Resolution:

This function requires to return the first n items of the Fibonacci sequence, rather than calculate the nth number of the Fibonacci sequence. So the actual calculation is an array of N numbers in the Fibonacci sequence

Here, a piece of (n+1) space is dynamically applied, and the others are constant, so the space complexity is O(N)

Example 3:

// Calculate the spatial complexity of factorial recursive Fac?

long long Fac(size_t N)

{

if (N == 0)

return 1;

return Fac(N - 1) * N;

}

Resolution:

Each recursion needs to open up the stack frame space. When calculating the time complexity above, we calculate the number of recursions as follows:

Fac(N)

Fac(N-1)

Fac(N-2)

...

Fac(1)

A total of N times. Each recursion needs to open up the function stack frame space

Therefore, a total of N function stack frame spaces are opened up recursively, and the space complexity is O(N).

Example 4:

// Calculate the spatial complexity of Fibonacci recursive Fib?

long long Fib(size_t N)

{

if (N < 3)

return 1;

return Fib(N - 1) + Fib(N - 2);

}

Resolution:

Note: space can be reused without accumulation; Time is gone forever, accumulated

When we explained the time complexity of this function above, we calculated that its time complexity is O(2^n) and the number of recursion is (2^n)-1, so will we establish a function stack frame of (2^n)-1 times, that is, the spatial complexity is also O(2^n). Obviously, it is impossible because the stack space is limited.

How is this calculated?

Here, because the space can be reused, during recursion, it will first recurse Fib(N-1). When Fib(N-1) recurses and returns, the space created by its recursion will be destroyed, and then recurse Fib(N-2), and so on. Therefore, the stack frame space opened by each recursion uses the same space, and the space of N function stack frames at most.

So the spatial complexity is O(N).