If you need to collect data from web pages for business or personal reasons, a Java crawler is worth learning. This is my first time working with a crawler framework. I found several WebMagic tutorials online and worked through them to learn how to use it.

The WebMagic framework consists of four components: PageProcessor, Scheduler, Downloader, and Pipeline.

These four components correspond to the processing, management, download, and persistence stages of the crawl life cycle.

All four are held as properties of Spider, and the crawler is started and managed through Spider.

Each of the four components in turn:

PageProcessor is responsible for parsing pages, extracting useful information, and discovering new links. You need to write this one yourself.

Scheduler manages the URLs waiting to be crawled and also handles deduplication. Normally you don't need to customize your own Scheduler.

Pipeline handles the extraction results, including calculations and persistence to files, databases, and so on.

Downloader is responsible for downloading pages from the Internet for subsequent processing. Normally you don't need to implement it yourself.
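To make the division of labour concrete, here is a minimal plain-Java sketch of how the four roles could cooperate in one crawl loop. It is only a simplified model: the names `MiniCrawler`, `Processor`, `ParsedPage` and the in-memory "web" are made up for illustration and are not WebMagic's real interfaces. It assumes Java 9 or later.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Queue;
import java.util.Set;

// Simplified model of the four roles; these are NOT WebMagic's real interfaces.
class MiniCrawler {

    /** Downloader role: turns a URL into page content. */
    interface Downloader { String download(String url); }

    /** PageProcessor role: extracts a result and discovers new links. */
    interface Processor { ParsedPage process(String url, String body); }

    /** Pipeline role: receives each extracted result. */
    interface Pipeline { void accept(String url, String result); }

    /** What a Processor hands back: one extracted value plus newly found URLs. */
    static final class ParsedPage {
        final String result;
        final List<String> links;
        ParsedPage(String result, List<String> links) {
            this.result = result;
            this.links = links;
        }
    }

    /** Scheduler role: a FIFO queue that drops URLs it has already seen. */
    static final class Scheduler {
        private final Queue<String> queue = new ArrayDeque<>();
        private final Set<String> seen = new HashSet<>();
        void push(String url) { if (seen.add(url)) queue.add(url); }
        String poll() { return queue.poll(); }
    }

    /** Spider role: owns the components and drives the loop. Returns URLs in visit order. */
    static List<String> crawl(String startUrl, Downloader downloader,
                              Processor processor, Pipeline pipeline) {
        Scheduler scheduler = new Scheduler();
        List<String> visited = new ArrayList<>();
        scheduler.push(startUrl);
        String url;
        while ((url = scheduler.poll()) != null) {
            String body = downloader.download(url);
            if (body == null) continue; // unknown page or failed download
            visited.add(url);
            ParsedPage parsed = processor.process(url, body);
            pipeline.accept(url, parsed.result);
            for (String link : parsed.links) scheduler.push(link);
        }
        return visited;
    }

    public static void main(String[] args) {
        // Fake "web": each page body is a comma-separated list of links.
        Map<String, String> web = Map.of("a", "b,c", "b", "a,c", "c", "");
        List<String> visited = crawl("a",
            web::get,
            (u, body) -> new ParsedPage("len=" + body.length(),
                body.isEmpty() ? List.<String>of() : List.of(body.split(","))),
            (u, result) -> System.out.println(u + " -> " + result));
        System.out.println(visited); // prints [a, b, c]
    }
}
```

Page `a` links to `b` and `c`, and `b` links back to `a`, but the seen-set in the scheduler means each page is visited only once. That is the deduplication job described above.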

See the code implementation below:

First, the WebMagic dependencies:

    <!-- WebMagic -->
    <dependency>
        <groupId>us.codecraft</groupId>
        <artifactId>webmagic-core</artifactId>
        <version>0.7.3</version>
    </dependency>
    <dependency>
        <groupId>us.codecraft</groupId>
        <artifactId>webmagic-extension</artifactId>
        <version>0.7.3</version>
        <exclusions>
            <exclusion>
                <groupId>org.slf4j</groupId>
                <artifactId>slf4j-log4j12</artifactId>
            </exclusion>
        </exclusions>
    </dependency>

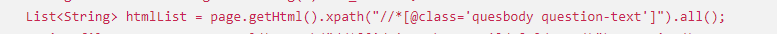

Here is a small demo I wrote, based on code shared online by more experienced developers. First I implement PageProcessor, which is the part that parses the page. The demo uses XPath to locate the data I need. I work out the XPath myself; in Chrome's developer tools you can also right-click an element and copy its XPath.

Here I select every element on the page that shares the same class. Once I have that List, I can process each item according to my business needs.
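The "select everything with the same class" idea can be tried on its own before involving a crawler. The sketch below uses the JDK's built-in `javax.xml.xpath` on a small well-formed snippet. That is my substitution for illustration, not what WebMagic uses internally, and the class name `XPathByClassDemo` and the sample markup are made up. Real pages are often not well-formed XML, so treat this purely as a way to experiment with the expression.

```java
import java.io.StringReader;
import java.util.ArrayList;
import java.util.List;
import javax.xml.xpath.XPath;
import javax.xml.xpath.XPathConstants;
import javax.xml.xpath.XPathExpressionException;
import javax.xml.xpath.XPathFactory;
import org.w3c.dom.NodeList;
import org.xml.sax.InputSource;

class XPathByClassDemo {

    /** Returns the trimmed text of every element whose class attribute equals cssClass exactly. */
    static List<String> textsByClass(String xhtml, String cssClass) {
        XPath xpath = XPathFactory.newInstance().newXPath();
        // Same expression shape as in the demo: //*[@class='...']
        // (cssClass must not contain a single quote in this simple sketch).
        String expression = "//*[@class='" + cssClass + "']";
        try {
            NodeList nodes = (NodeList) xpath.evaluate(
                expression, new InputSource(new StringReader(xhtml)), XPathConstants.NODESET);
            List<String> texts = new ArrayList<>();
            for (int i = 0; i < nodes.getLength(); i++) {
                texts.add(nodes.item(i).getTextContent().trim());
            }
            return texts;
        } catch (XPathExpressionException e) {
            throw new IllegalArgumentException("Could not evaluate " + expression, e);
        }
    }

    public static void main(String[] args) {
        String page =
            "<html><body>"
            + "<div class='quesbody question-text'>Question 1</div>"
            + "<div class='other'>Not a question</div>"
            + "<div class='quesbody question-text'>Question 2</div>"
            + "</body></html>";
        // prints [Question 1, Question 2]
        System.out.println(textsByClass(page, "quesbody question-text"));
    }
}
```

Note that `@class='quesbody question-text'` is an exact string comparison, so an element with the classes in a different order or with an extra class would not match.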

    package com.iShift.kachaschool;

    import java.io.File;
    import java.util.List;

    import gui.ava.html.image.generator.HtmlImageGenerator;
    import us.codecraft.webmagic.Page;
    import us.codecraft.webmagic.Site;
    import us.codecraft.webmagic.Spider;
    import us.codecraft.webmagic.processor.PageProcessor;

    public class WebMagic implements PageProcessor {

        // Retry failed requests 3 times, sleep 1 s between requests, time out after 10 s.
        private Site site = Site.me().setRetryTimes(3).setSleepTime(1000).setTimeOut(10000).addHeader("User-Agent",
                "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36");

        // Knowledge-point ID that forms part of the list URL.
        public static String knowledge = "zsd4774";
        // Current page number within the knowledge point.
        public static int pageNum = 1;
        // Running counter used to name the saved images.
        public static int i = 1;
        // Question list of the previous page, used to detect that the last page has been passed.
        public static List<String> htmlListLike;

        public void process(Page page) {
            // Every question block on the page shares this class attribute.
            List<String> htmlList = page.getHtml().xpath("//*[@class='quesbody question-text']").all();
            // The third breadcrumb link holds the knowledge-point name; it becomes the folder name.
            String fileName = page.getHtml().xpath("//*[@id='crumbCanvas']/a[3]/text()").toString();
            if (fileName == null) {
                // No breadcrumb found: fall back to the ID instead of a folder called "null".
                fileName = knowledge;
            }
            try {
                if (!htmlList.equals(htmlListLike)) {
                    for (String html : htmlList) {
                        makeHtmlImage(html, fileName);
                    }
                } else {
                    // Same content as the previous page: this knowledge point is finished.
                    System.err.println("Start next knowledge point");
                    knowledge = "zsd" + (Integer.parseInt(knowledge.substring(3)) + 1);
                    // main() adds 1 after run() returns, so 0 here makes the next request page 1.
                    pageNum = 0;
                }
                htmlListLike = htmlList;
            } catch (Exception e) {
                e.printStackTrace();
            }
        }

        public Site getSite() {
            return site;
        }

        // Renders one HTML fragment to a PNG inside D:\webMagic\<fileName>\.
        private void makeHtmlImage(String html, String fileName) throws Exception {
            HtmlImageGenerator imageGenerator = new HtmlImageGenerator();
            imageGenerator.loadHtml(html);
            File dir = new File("D:\\webMagic\\" + fileName);
            if (!dir.exists()) {
                // mkdirs() also creates D:\webMagic itself if it is missing.
                dir.mkdirs();
            }
            imageGenerator.saveAsImage("D:\\webMagic\\" + fileName + "\\" + i++ + ".png");
        }

        public static void main(String[] args) {
            // Stop once the ID reaches zsd4800 (that ID itself is not crawled).
            while (!knowledge.equals("zsd4800")) {
                String url = "http://zujuan.xkw.com/czsx/" + knowledge + "/o2p" + pageNum + "/";
                // run() blocks until this single page has been processed.
                Spider.create(new WebMagic()).addUrl(url).thread(1).run();
                pageNum += 1;
            }
        }
    }
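The paging logic in the demo is spread across static fields, `process` and `main`, so here is the same idea as a small self-contained sketch. It relies on the same assumption the demo makes, namely that asking for a page beyond the last one returns the same items as the previous page. The names `PaginationSketch`, `collect` and the fake site are hypothetical, and the sketch treats the upper topic bound as inclusive, which is a choice of mine rather than the demo's behaviour.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.BiFunction;

class PaginationSketch {

    /** Builds the list-page URL in the same shape as the demo's main method. */
    static String pageUrl(int topicId, int pageNum) {
        return "http://zujuan.xkw.com/czsx/zsd" + topicId + "/o2p" + pageNum + "/";
    }

    /**
     * Walks topics firstTopic..lastTopic (inclusive). Within a topic, pages are requested
     * from 1 upward until a page is empty or repeats the previous page's items, which is
     * taken as the signal that the last page has been passed.
     */
    static List<String> collect(int firstTopic, int lastTopic,
                                BiFunction<Integer, Integer, List<String>> fetch) {
        List<String> all = new ArrayList<>();
        for (int topic = firstTopic; topic <= lastTopic; topic++) {
            List<String> previous = null;
            for (int pageNum = 1; ; pageNum++) {
                List<String> items = fetch.apply(topic, pageNum);
                if (items == null || items.isEmpty() || items.equals(previous)) {
                    break; // nothing new: move on to the next topic
                }
                all.addAll(items);
                previous = items;
            }
        }
        return all;
    }

    public static void main(String[] args) {
        // Fake site: every topic has 2 real pages; higher page numbers repeat page 2.
        BiFunction<Integer, Integer, List<String>> fakeSite =
            (topic, pageNum) -> List.of("t" + topic + "p" + Math.min(pageNum, 2));
        System.out.println(pageUrl(4774, 1)); // prints http://zujuan.xkw.com/czsx/zsd4774/o2p1/
        System.out.println(collect(4774, 4775, fakeSite)); // prints [t4774p1, t4774p2, t4775p1, t4775p2]
    }
}
```

Keeping the page counter and the "previous page" list as local variables means each topic always starts from page 1, and the loop can be tested against a fake site without touching the network.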