Git address: https://gitee.com/jyq_18792721831/sparkmaven.git

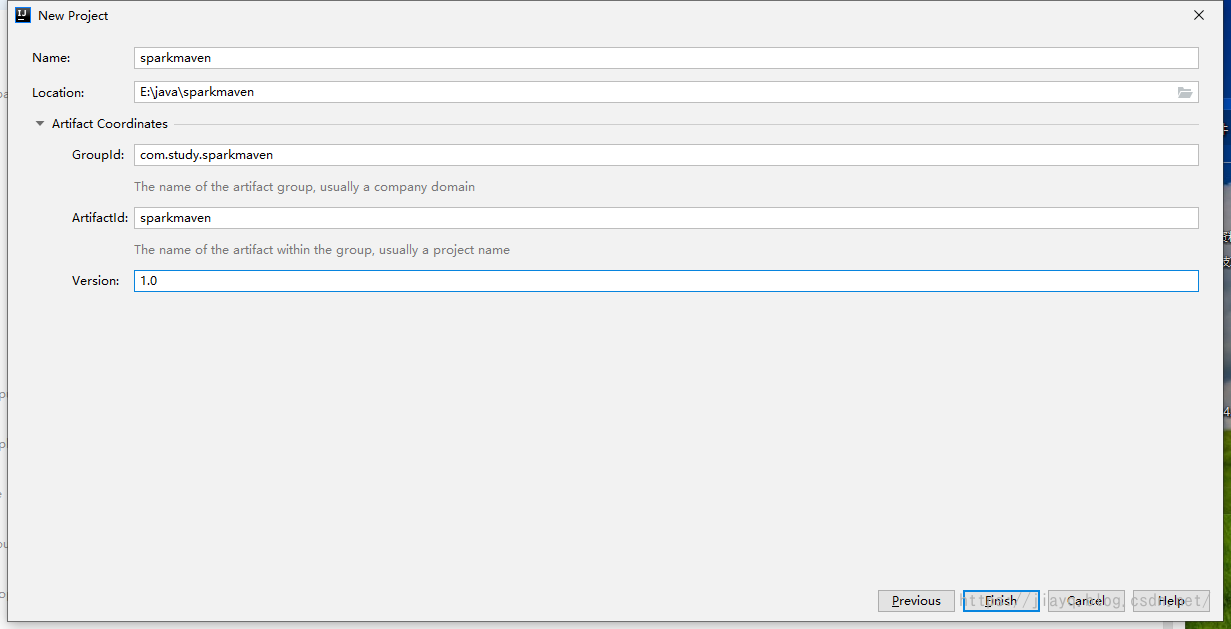

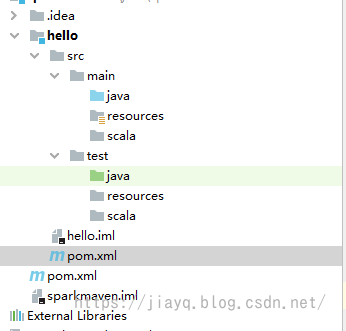

Create Project

Let's start by creating a generic maven project

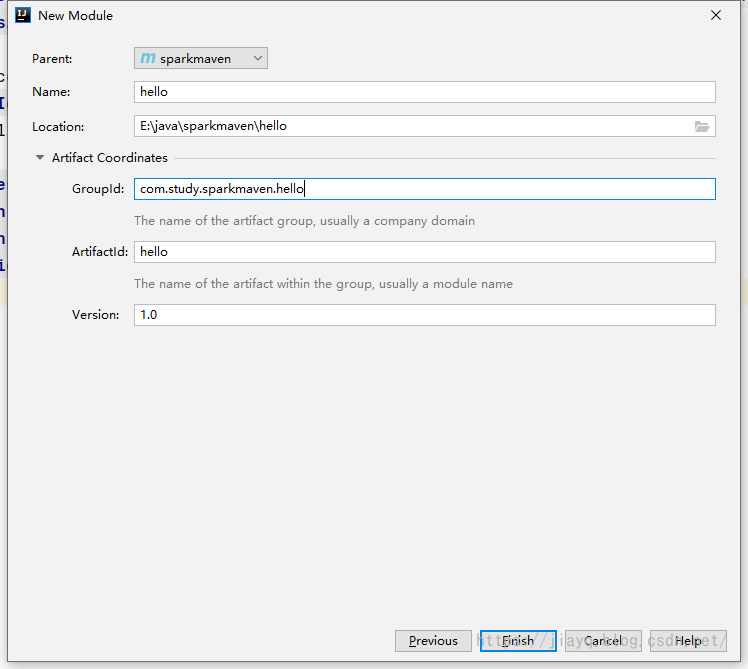

After creating the project, add a hello module.

It is also an ordinary Maven module.

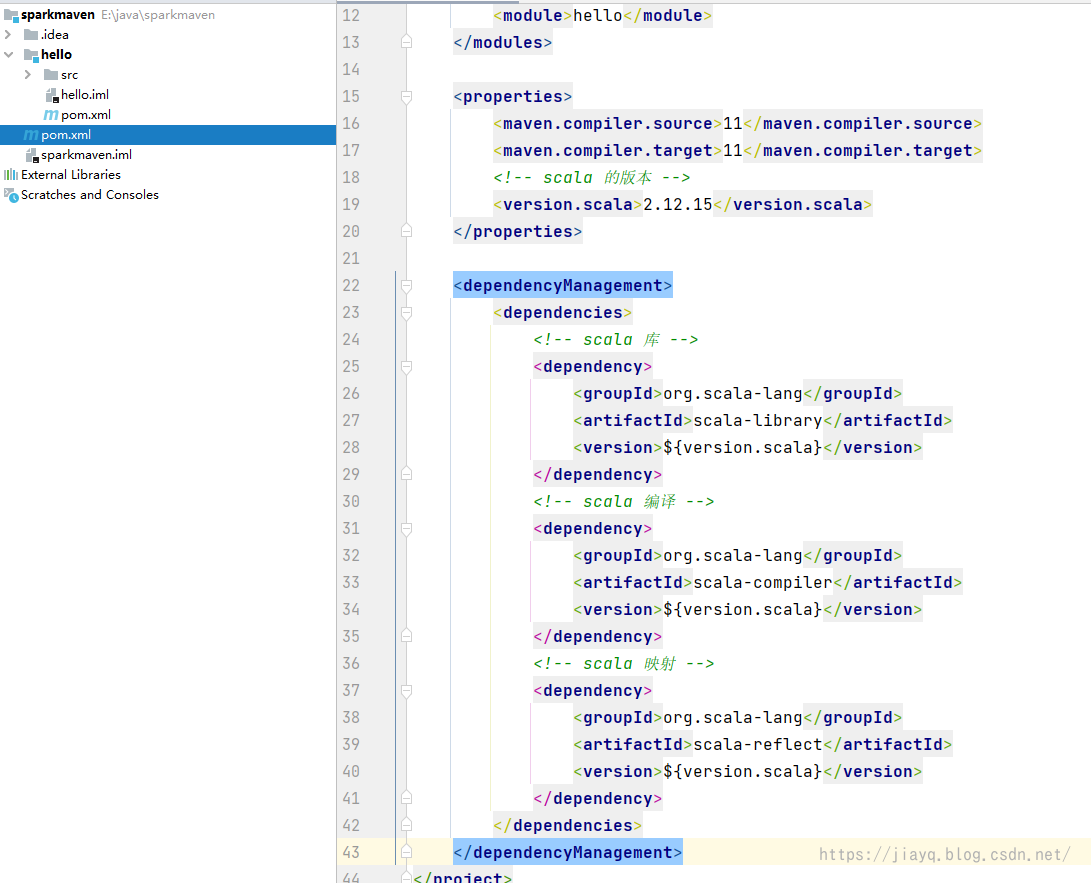

Add the Scala dependencies

We don't write code in the parent project; it exists only to manage the child modules, so the src directory of the parent project can be deleted.

In the parent project's pom.xml, add the dependencies as follows:

    <?xml version="1.0" encoding="UTF-8"?>
    <project xmlns="http://maven.apache.org/POM/4.0.0"
             xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
        <modelVersion>4.0.0</modelVersion>
        <groupId>com.study.sparkmaven</groupId>
        <artifactId>sparkmaven</artifactId>
        <packaging>pom</packaging>
        <version>1.0</version>
        <modules>
            <module>hello</module>
        </modules>
        <properties>
            <maven.compiler.source>11</maven.compiler.source>
            <maven.compiler.target>11</maven.compiler.target>
            <!-- Scala version -->
            <version.scala>2.12.15</version.scala>
        </properties>
        <dependencyManagement>
            <dependencies>
                <!-- Scala library -->
                <dependency>
                    <groupId>org.scala-lang</groupId>
                    <artifactId>scala-library</artifactId>
                    <version>${version.scala}</version>
                </dependency>
                <!-- Scala compiler -->
                <dependency>
                    <groupId>org.scala-lang</groupId>
                    <artifactId>scala-compiler</artifactId>
                    <version>${version.scala}</version>
                </dependency>
                <!-- Scala reflection -->
                <dependency>
                    <groupId>org.scala-lang</groupId>
                    <artifactId>scala-reflect</artifactId>
                    <version>${version.scala}</version>
                </dependency>
            </dependencies>
        </dependencyManagement>
    </project>

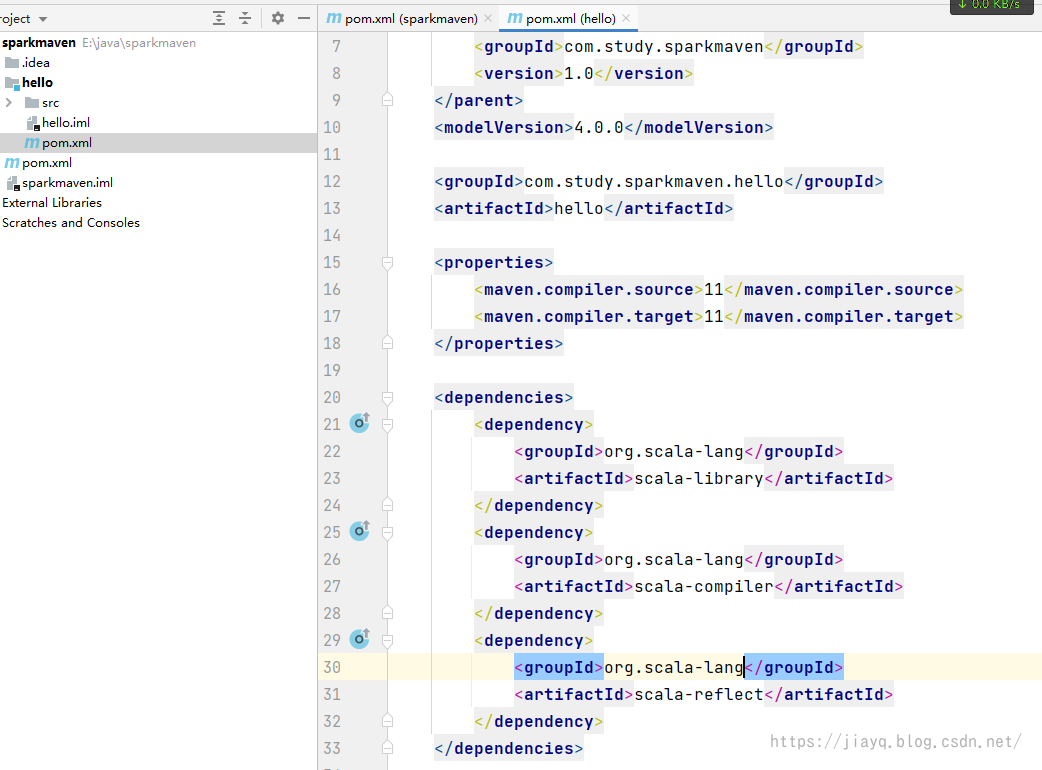

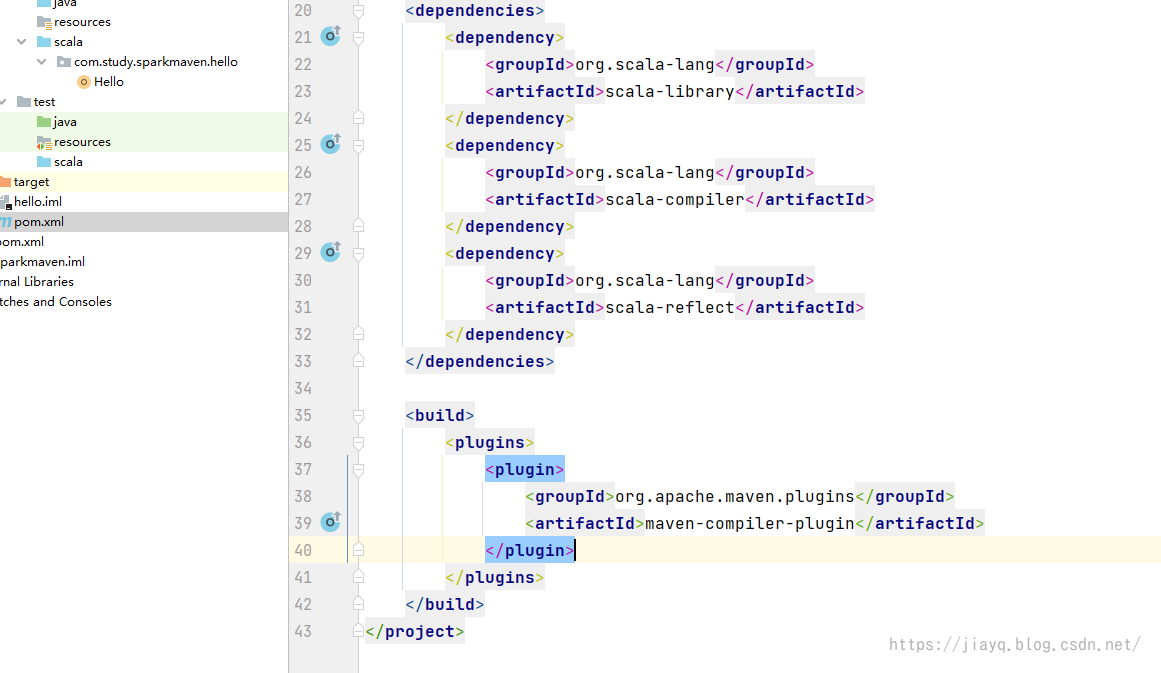

Next, add the Scala-related dependencies to the hello module's pom.xml.

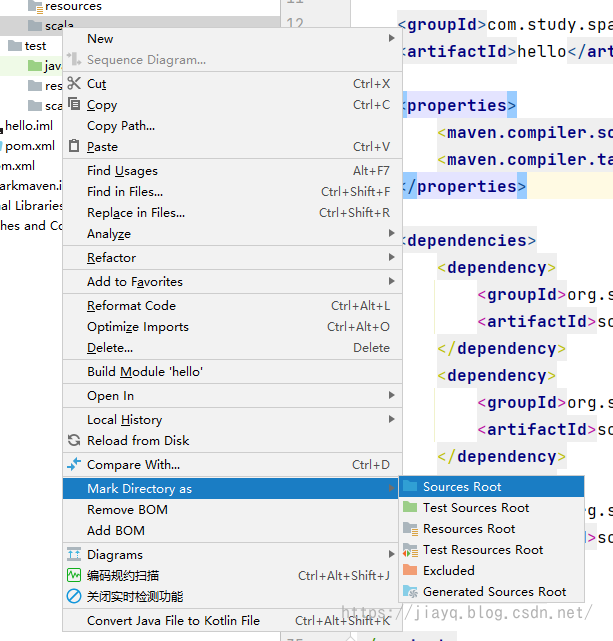
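The screenshot shows the author's actual file. As a rough sketch of what such a module POM might contain, assuming the module's artifactId is `hello` and that it inherits the parent coordinates shown earlier, the dependency can be declared without a version because the parent's dependencyManagement supplies it:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <!-- Inherit versions and shared configuration from the parent POM -->
    <parent>
        <groupId>com.study.sparkmaven</groupId>
        <artifactId>sparkmaven</artifactId>
        <version>1.0</version>
    </parent>

    <!-- Assumed module name; groupId and version come from the parent -->
    <artifactId>hello</artifactId>

    <dependencies>
        <!-- No <version> here: it is managed by the parent's dependencyManagement -->
        <dependency>
            <groupId>org.scala-lang</groupId>
            <artifactId>scala-library</artifactId>
        </dependency>
    </dependencies>
</project>
```

scala-compiler and scala-reflect can be added the same way if the module needs them.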

Create directory

We need to create our source and resource directories in the src directory

And right-click to label as source code and resources

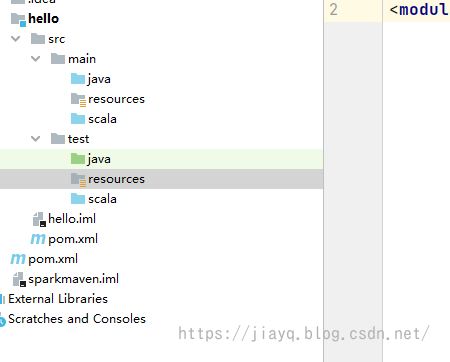

When the labeling is complete, the following

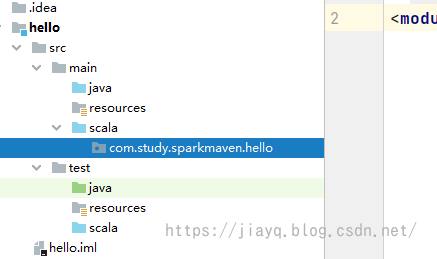

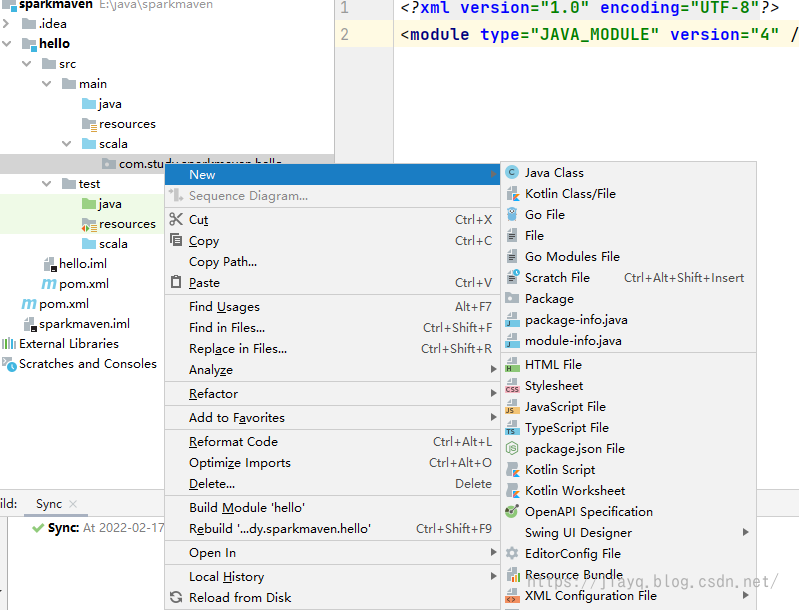

Next, create our package directory

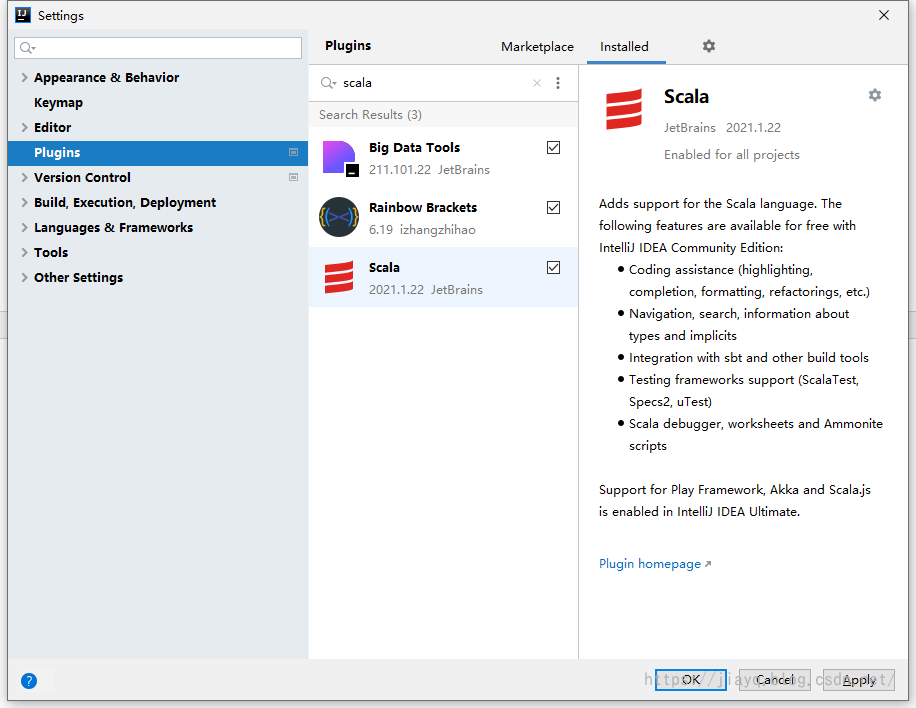

Install the Scala plug-in

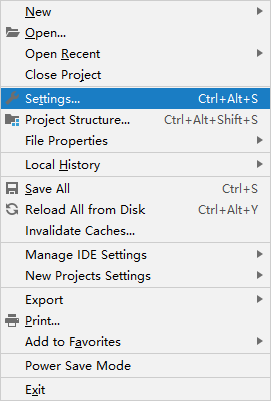

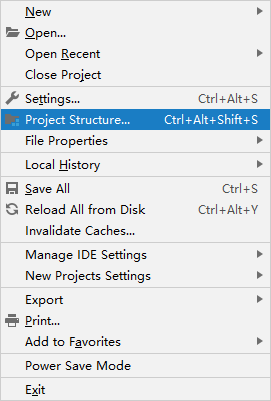

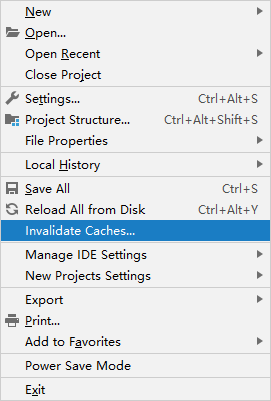

Open Settings first.

Search for the Scala plug-in on the Plugins page and install it. It may take several attempts.

The Scala plug-in is large, so the download may not succeed the first time. If it cannot be downloaded, you can download it from the IDEA plug-in marketplace and install it offline.

hello world of scala

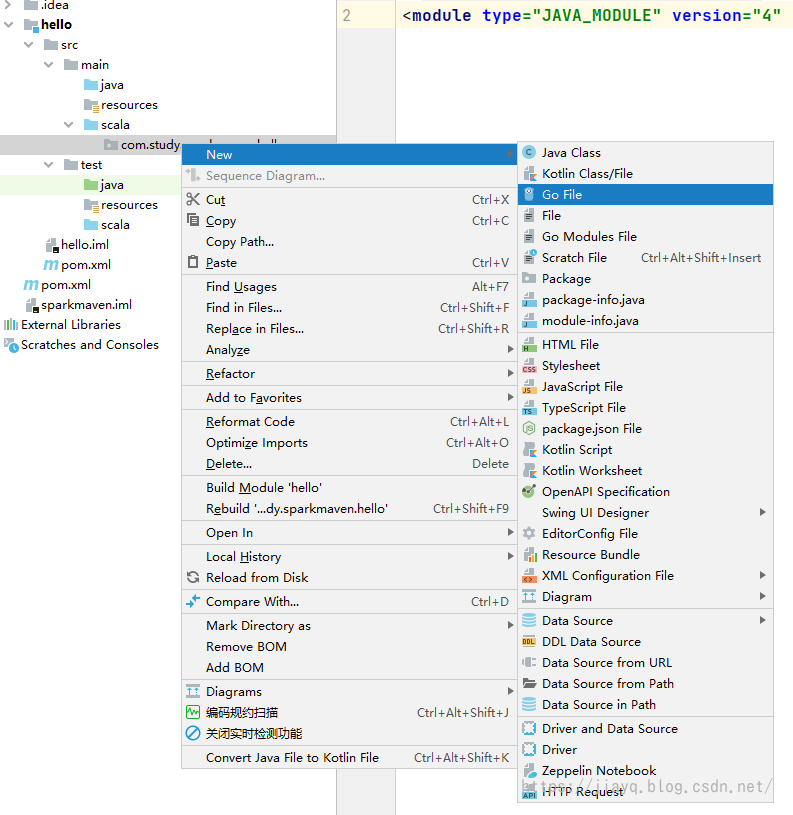

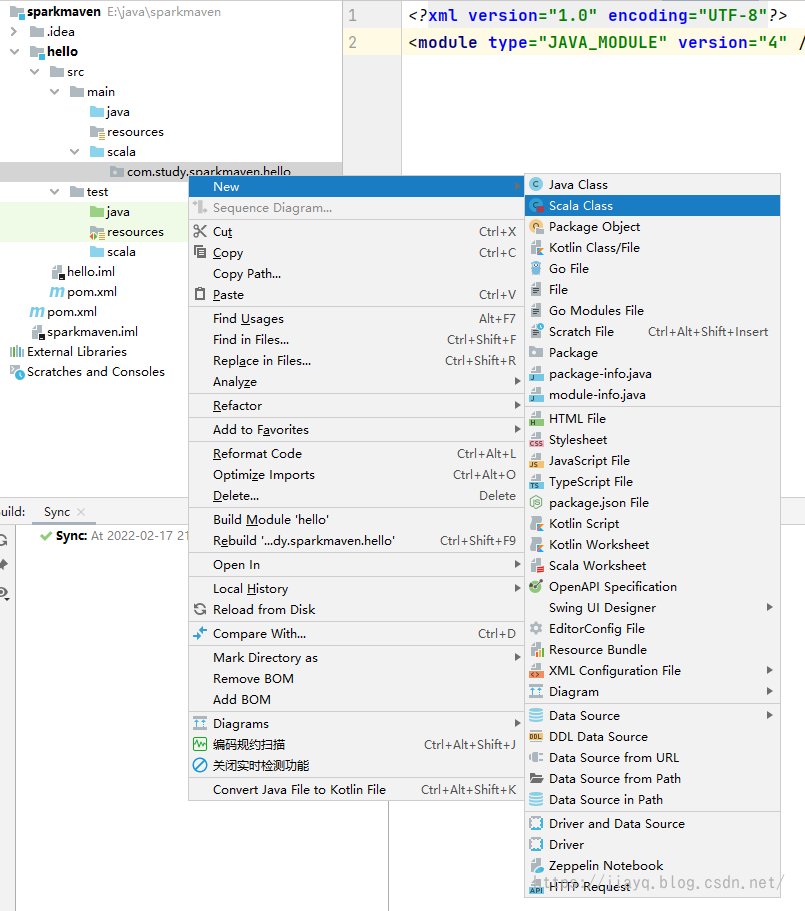

We're developing under scala's source code

No scala option was found at this time when creating a new file.

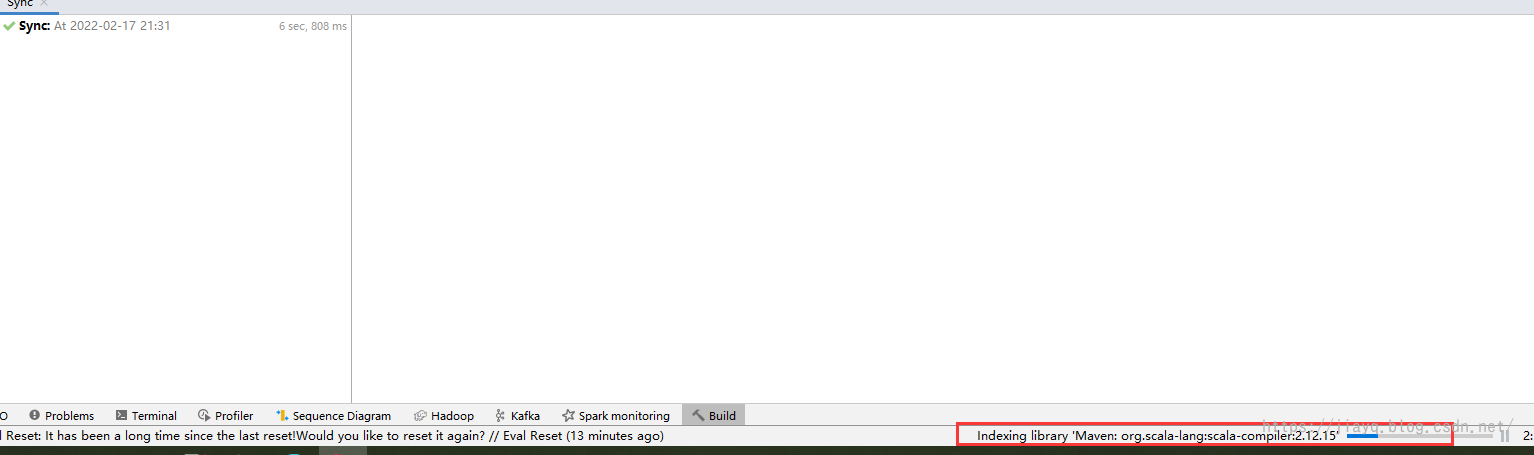

We refresh the entire maven project to make maven download dependent

Of course, a scala project cannot be created after refreshing

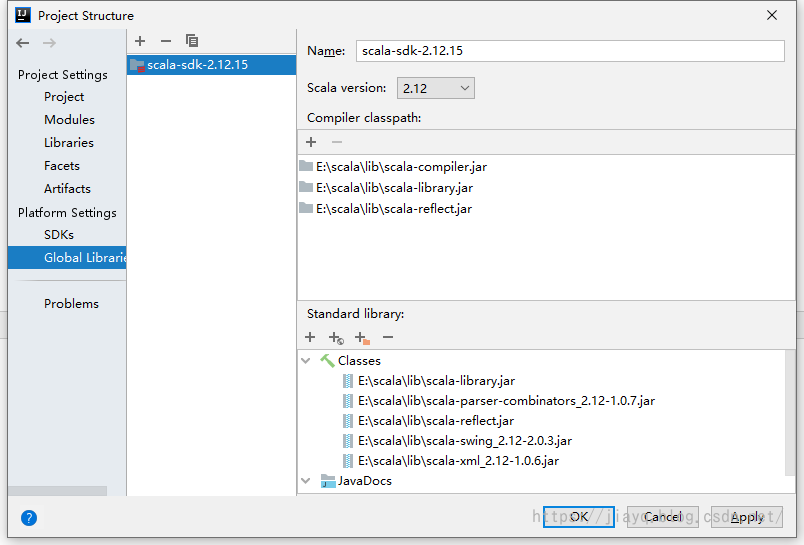

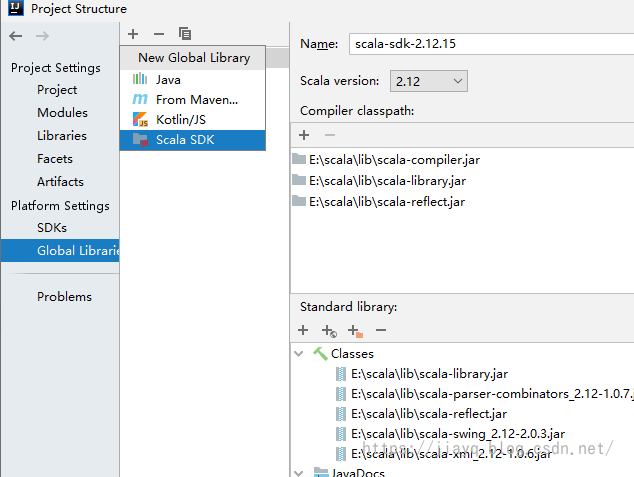

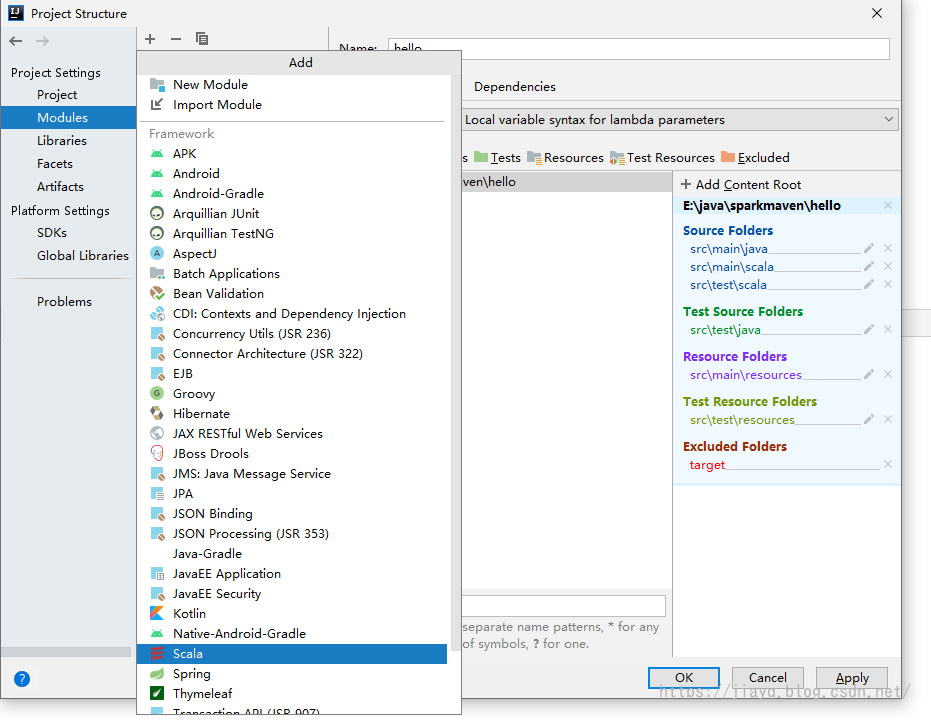

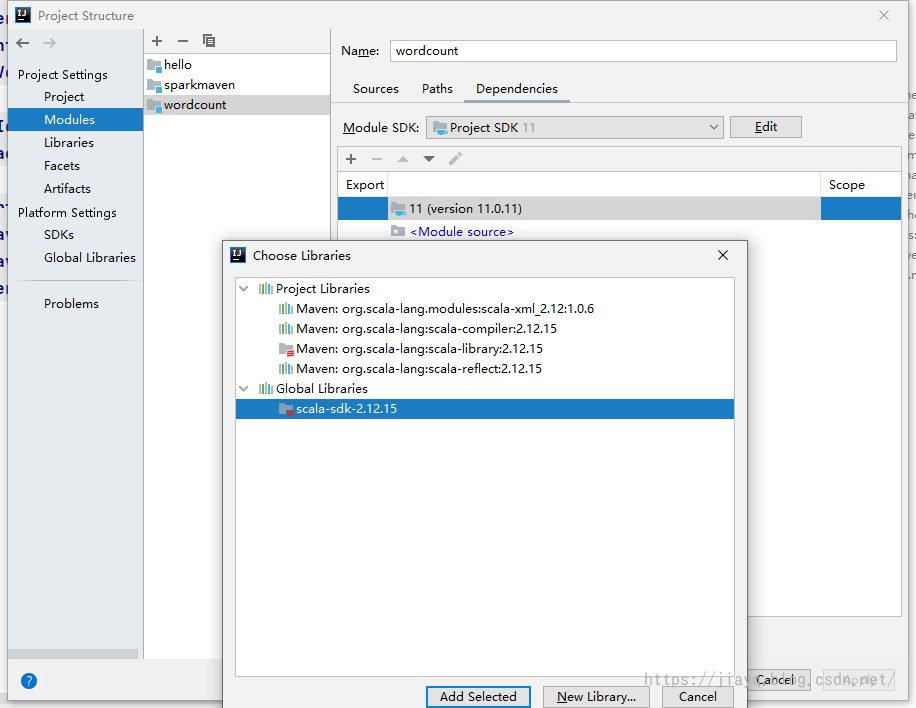

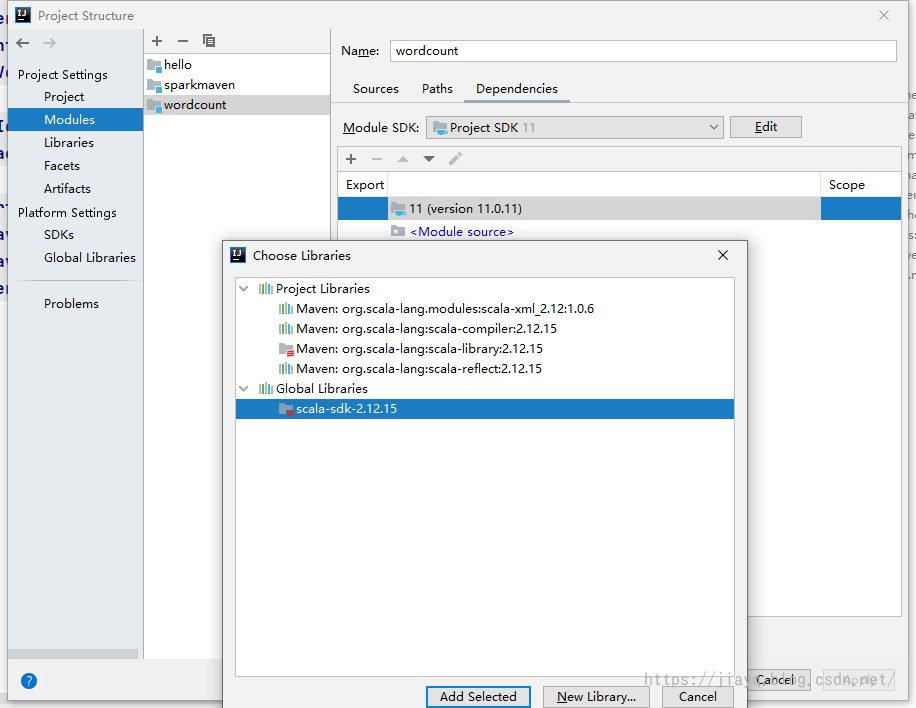

We need to tell idea that our project needs to support scala, so we need to add the sdk for Scala

First, make sure that your global sdk has scala if you don't need to click + increase

such as

Of course, the most important thing is that you need to install scala's plug-ins. Only if you install scala's plug-ins can you develop scala-related content.

We added scala in the module settings

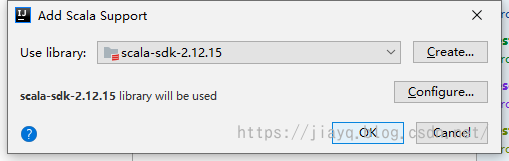

Choose our version of scala sdk. If you have multiple versions of scala's sdk, be aware of the version

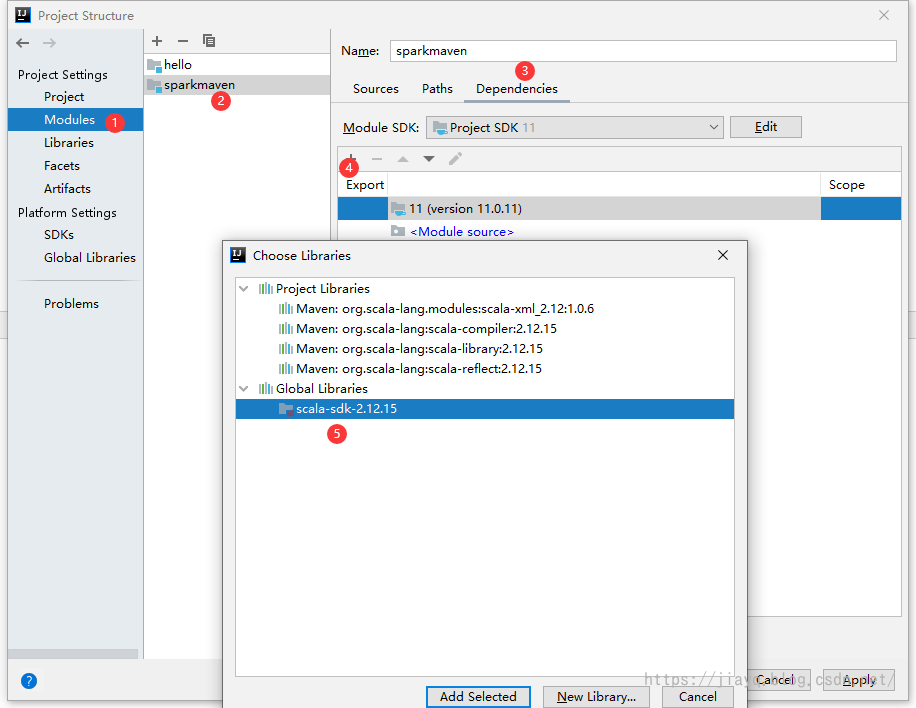

We can also add scala's sdk to the root project

Of course, you need to add one more time to the subproject after you join the root project

There is no conflict between our increased dependency here and maven's increased dependency on scala

We're increasing our dependency here, just telling idea that we need some scala functionality in our project

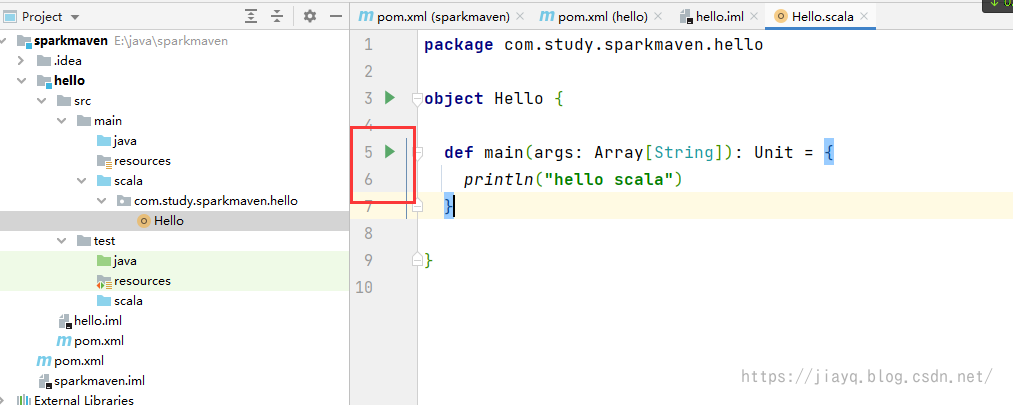

Create a new Scala object and write the following:

    package com.study.sparkmaven.hello

    object Hello {
      def main(args: Array[String]): Unit = {
        println("hello scala")
      }
    }

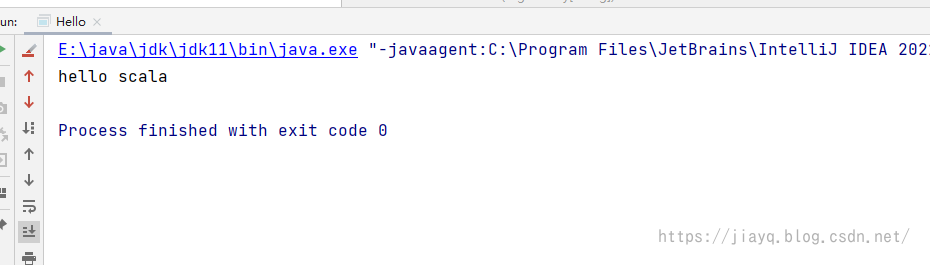

Then click Run.

Maven plug-ins

We've written a Scala hello world, but on its own that doesn't mean much: it only shows that IDEA now supports Scala syntax. We need some Maven plug-ins to help us with the rest.

As with dependencies, plug-ins are declared in the parent project's pom.xml, together with any configuration shared by all modules. Each submodule then references the dependencies and plug-ins it needs and adds its own module-specific configuration.

By convention, all version numbers are defined in properties.

Plug-ins are configured under build > pluginManagement > plugins.

Configure the repository

If no repository is configured, dependencies and plug-ins are downloaded from Maven Central by default, which can be slow. We can configure a mirror in China to speed up the downloads.

    <!-- Repository configuration -->
    <pluginRepositories>
        <pluginRepository>
            <id>maven-net-cn</id>
            <name>Maven China Mirror</name>
            <url>http://maven.aliyun.com/nexus/content/repositories/central/</url>
        </pluginRepository>
    </pluginRepositories>
    <repositories>
        <repository>
            <id>central</id>
            <name>Maven China Mirror</name>
            <url>http://maven.aliyun.com/nexus/content/repositories/central/</url>
        </repository>
    </repositories>

Configure this in the parent project's pom.xml.

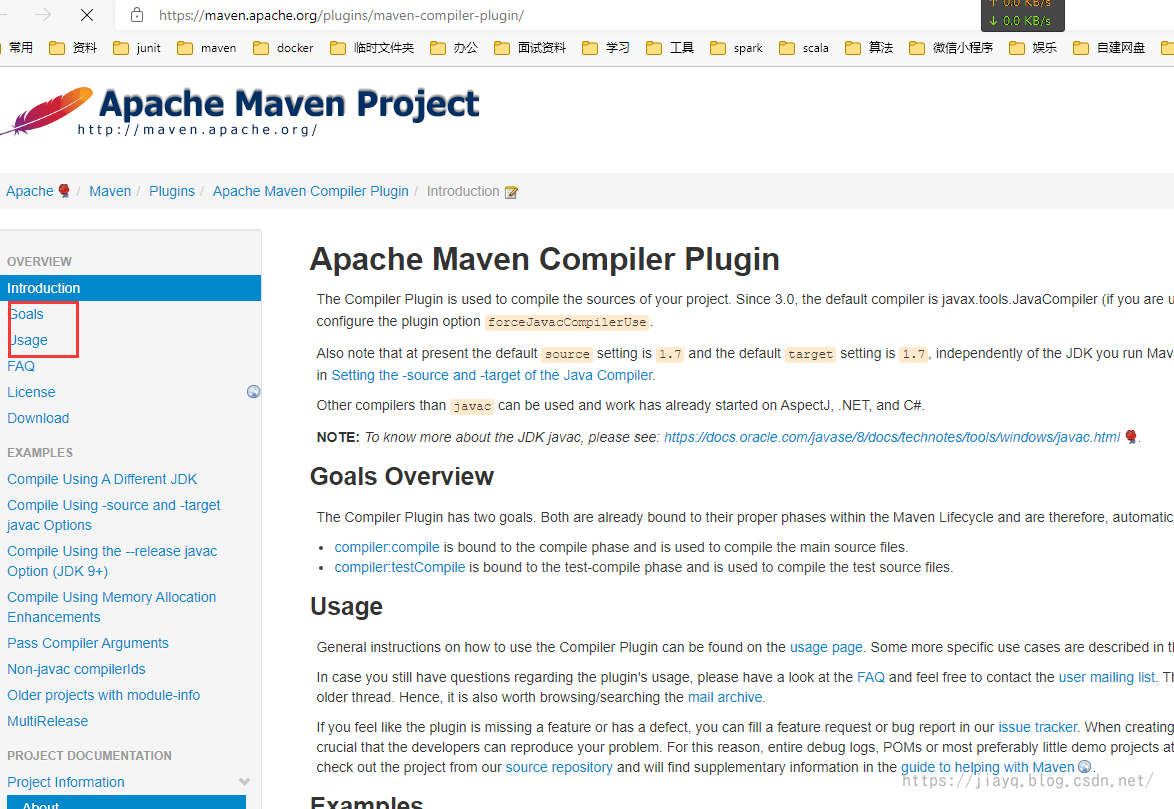
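As an alternative to declaring the repository in every project, a mirror can also be set once per machine in Maven's user settings file (~/.m2/settings.xml). The following is a minimal sketch that reuses the URL from the snippet above; the mirror id `aliyun-central` is a made-up name for illustration. Note that Maven 3.8.1 and later block plain http:// repositories by default, so an https:// URL may be needed with those versions.

```xml
<!-- ~/.m2/settings.xml: user-level Maven settings, not part of the project -->
<settings xmlns="http://maven.apache.org/SETTINGS/1.0.0"
          xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
          xsi:schemaLocation="http://maven.apache.org/SETTINGS/1.0.0 http://maven.apache.org/xsd/settings-1.0.0.xsd">
    <mirrors>
        <mirror>
            <!-- Hypothetical id; any unique name works -->
            <id>aliyun-central</id>
            <name>Maven China Mirror</name>
            <!-- Redirect requests for the "central" repository to the mirror -->
            <mirrorOf>central</mirrorOf>
            <url>http://maven.aliyun.com/nexus/content/repositories/central/</url>
        </mirror>
    </mirrors>
</settings>
```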

maven-compiler-plugin

Add maven-compiler-plugin to the parent project's pom.xml.

Define the version of the plug-in first:

    <properties>
        <maven.compiler.source>11</maven.compiler.source>
        <maven.compiler.target>11</maven.compiler.target>
        <!-- Scala version -->
        <version.scala>2.12.15</version.scala>
        <!-- maven-compiler-plugin version -->
        <version.maven.compile.plugin>3.9.0</version.maven.compile.plugin>
    </properties>

Next, configure the plug-in

    <build>
        <pluginManagement>
            <plugins>
                <!-- maven-compiler-plugin -->
                <plugin>
                    <groupId>org.apache.maven.plugins</groupId>
                    <artifactId>maven-compiler-plugin</artifactId>
                    <version>${version.maven.compile.plugin}</version>
                    <!-- Configuration -->
                    <configuration>
                        <!-- Source and target Java versions -->
                        <source>${maven.compiler.source}</source>
                        <target>${maven.compiler.target}</target>
                        <!-- Source file encoding -->
                        <encoding>UTF-8</encoding>
                        <!-- Include debug information -->
                        <debug>true</debug>
                    </configuration>
                </plugin>
            </plugins>
        </pluginManagement>
    </build>

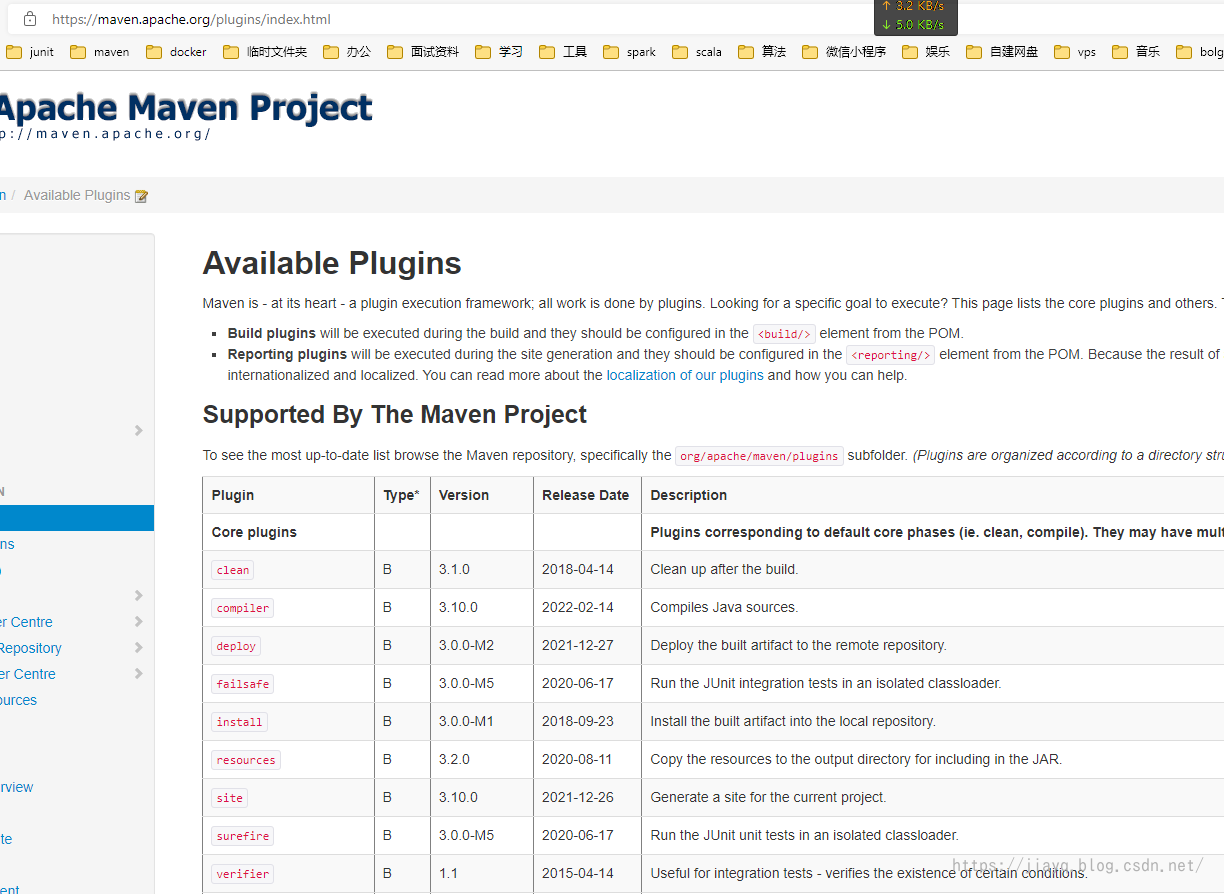

You may wonder how to find out which plug-ins are available and what versions they have.

You can look up all available plug-ins on the Maven "Available Plugins" page (apache.org). Click a plug-in's name to open its documentation, which lists the version numbers and explains how to use it.

Remember that after configuring a plug-in in the parent project, we also need to reference it in the child project.

Reference it in the hello project's pom.xml.

The benefit of this approach is that plug-in versions and similar information are unified in a single pom.xml, and shared configuration can also live in the parent project's pom.xml.
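A minimal sketch of that reference in the hello module's pom.xml follows. It assumes the parent's pluginManagement section shown above; the child only names the plug-in, and the version and shared configuration are inherited.

```xml
<build>
    <plugins>
        <!-- Version and configuration come from the parent's pluginManagement -->
        <plugin>
            <groupId>org.apache.maven.plugins</groupId>
            <artifactId>maven-compiler-plugin</artifactId>
        </plugin>
    </plugins>
</build>
```

A plug-in listed only under pluginManagement is not executed for a module until that module references it like this.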

maven-scala-plugin

Next, configure the Scala plug-in.

Define the version:

    <!-- maven-scala-plugin version -->
    <version.maven.scala.plugin>2.15.2</version.maven.scala.plugin>

Then declare and configure the plug-in:

    <plugin>
        <groupId>org.scala-tools</groupId>
        <artifactId>maven-scala-plugin</artifactId>
        <version>${version.maven.scala.plugin}</version>
        <configuration>
            <!-- Scala version -->
            <scalaVersion>${version.scala}</scalaVersion>
        </configuration>
        <!-- Executions bind plug-in goals to lifecycle phases -->
        <executions>
            <execution>
                <!-- With several executions, each needs a unique id -->
                <id>scala-compile</id>
                <!-- Lifecycle phase to bind to -->
                <phase>compile</phase>
                <!-- Goals to run in that phase -->
                <goals>
                    <goal>compile</goal>
                </goals>
            </execution>
            <execution>
                <id>scala-test-compile</id>
                <phase>test-compile</phase>
                <goals>
                    <goal>testCompile</goal>
                </goals>
            </execution>
        </executions>
    </plugin>

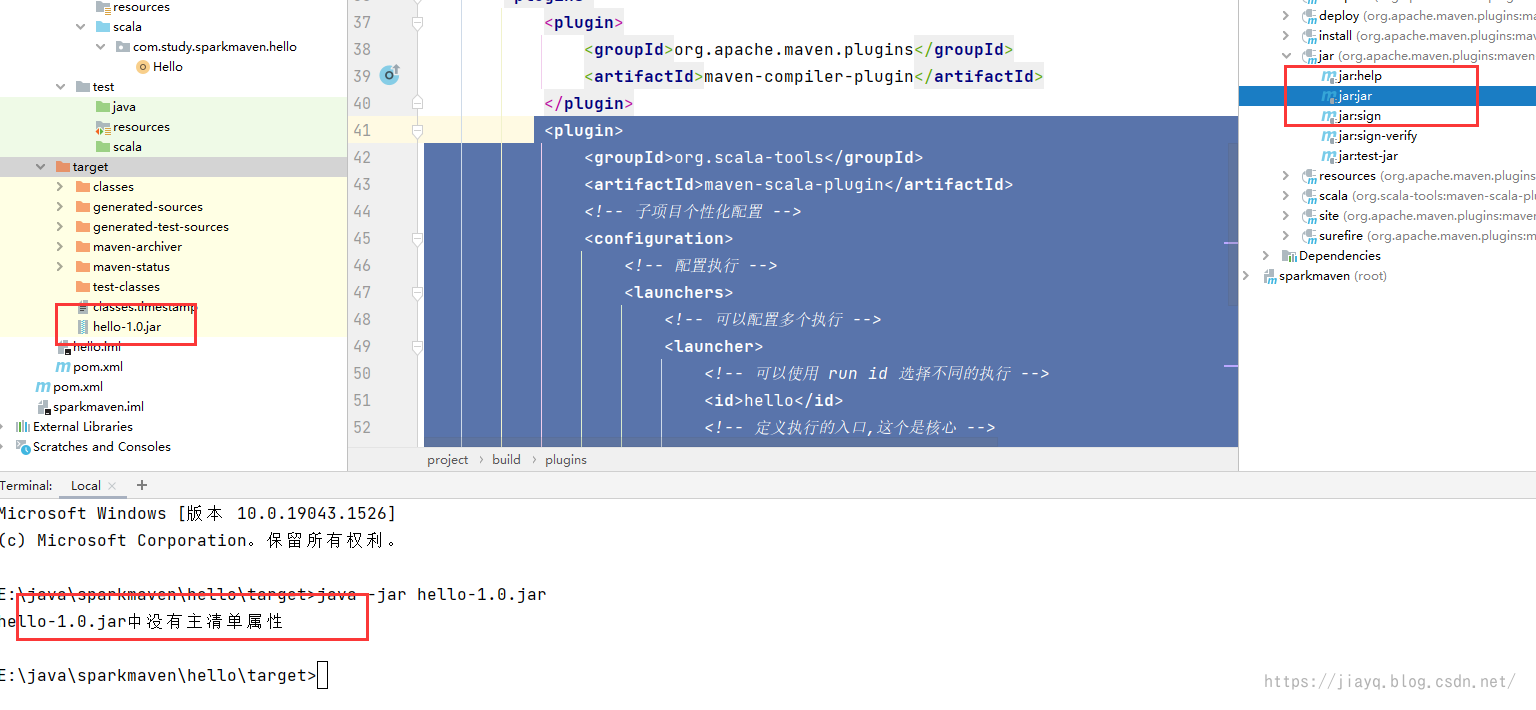

The plug-in is then referenced in the submodule's pom.xml, where the launcher is configured:

    <plugin>
        <groupId>org.scala-tools</groupId>
        <artifactId>maven-scala-plugin</artifactId>
        <!-- Module-specific configuration -->
        <configuration>
            <!-- Launchers used by scala:run -->
            <launchers>
                <!-- Several launchers can be configured -->
                <launcher>
                    <!-- The id is used to select which launcher to run -->
                    <id>hello</id>
                    <!-- Main class to run; this is the essential setting -->
                    <mainClass>com.study.sparkmaven.hello.Hello</mainClass>
                    <!-- Extra JVM arguments -->
                    <jvmArgs>
                        <jvmArg>-Xmx128m</jvmArg>
                        <jvmArg>-Djava.library.path=...</jvmArg>
                    </jvmArgs>
                </launcher>
            </launchers>
        </configuration>
    </plugin>

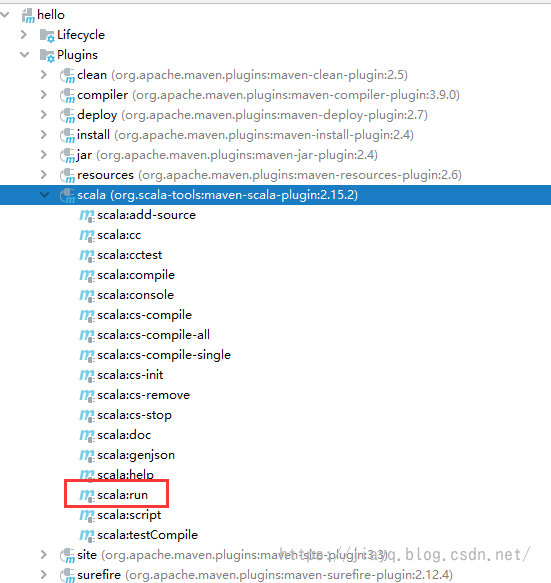

Now refresh Maven.

If everything is fine, some scala goals will appear.

Because we configured only one launcher, we can use scala:run directly. With a single launcher there is no need to specify its id. With several launchers, the first one is used when no id is specified.
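To illustrate the several-launcher case, here is a sketch with two launchers. The second launcher's id `other` and its class `com.study.sparkmaven.hello.Other` are hypothetical names that do not exist in the project. As far as I know the plug-in exposes a `launcher` user property for choosing one by id (for example `mvn scala:run -Dlauncher=other`), but check the plug-in documentation for the version you use.

```xml
<plugin>
    <groupId>org.scala-tools</groupId>
    <artifactId>maven-scala-plugin</artifactId>
    <configuration>
        <launchers>
            <!-- Listed first, so it is the one used when no id is given -->
            <launcher>
                <id>hello</id>
                <mainClass>com.study.sparkmaven.hello.Hello</mainClass>
            </launcher>
            <!-- Hypothetical second launcher, selected by its id -->
            <launcher>
                <id>other</id>
                <mainClass>com.study.sparkmaven.hello.Other</mainClass>
            </launcher>
        </launchers>
    </configuration>
</plugin>
```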

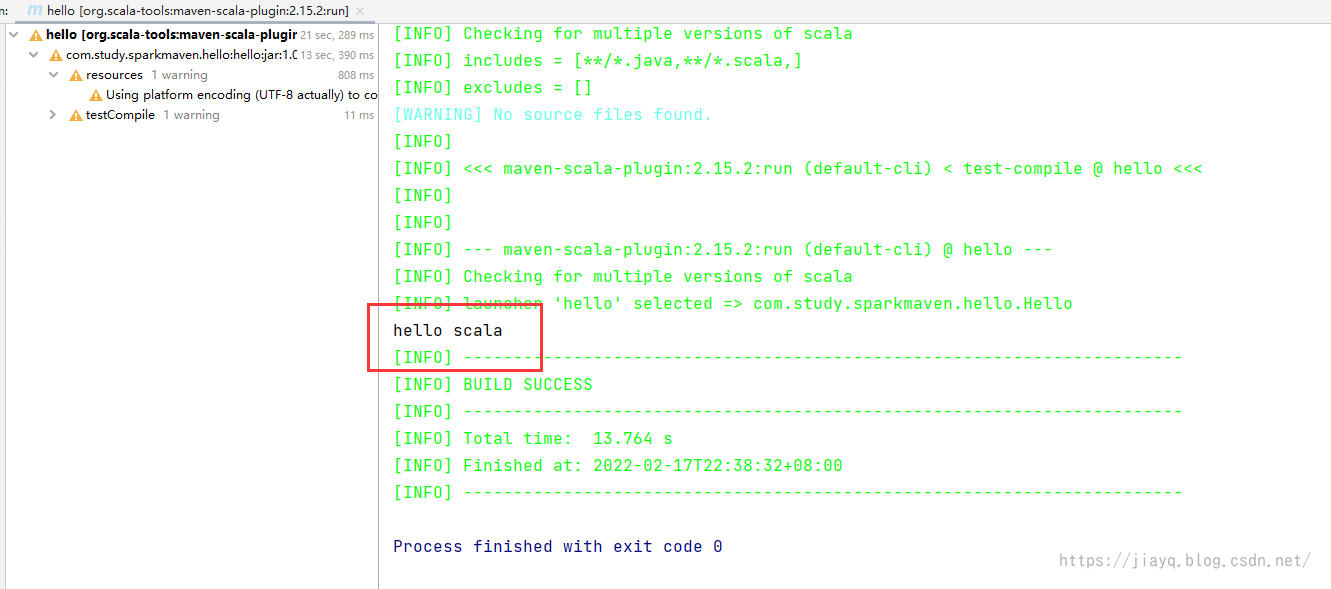

Double-click scala:run to run it.

The first run is slower because the dependencies need to be downloaded.

It works, but only from within IDEA. If we just build a jar and run it in a plain Java environment, it does not work.

We are told that no main class is configured.

maven-jar-plugin

This plug-in lets us specify the main class so that the packaged jar can be run.

Define the version:

    <!-- maven-jar-plugin version -->
    <version.maven.jar.plugin>3.2.2</version.maven.jar.plugin>

Configure the plug-in:

    <!-- maven-jar-plugin -->
    <plugin>
        <groupId>org.apache.maven.plugins</groupId>
        <artifactId>maven-jar-plugin</artifactId>
        <version>${version.maven.jar.plugin}</version>
        <executions>
            <execution>
                <!-- Bind to the package phase -->
                <phase>package</phase>
                <goals>
                    <!-- Run the jar goal during packaging -->
                    <goal>jar</goal>
                </goals>
                <configuration>
                    <!-- Classifier added to the extra jar's name so it does not clash with the default jar -->
                    <classifier>client</classifier>
                </configuration>
            </execution>
        </executions>
    </plugin>

Reference it in the submodule:

    <plugin>
        <groupId>org.apache.maven.plugins</groupId>
        <artifactId>maven-jar-plugin</artifactId>
        <configuration>
            <archive>
                <manifest>
                    <!-- Add a Class-Path entry to the manifest -->
                    <addClasspath>true</addClasspath>
                    <!-- Dependencies are expected in the lib/ directory -->
                    <classpathPrefix>lib/</classpathPrefix>
                    <!-- Main class -->
                    <mainClass>com.study.sparkmaven.hello.Hello</mainClass>
                </manifest>
            </archive>
        </configuration>
    </plugin>

Refresh the project, then run clean followed by package.

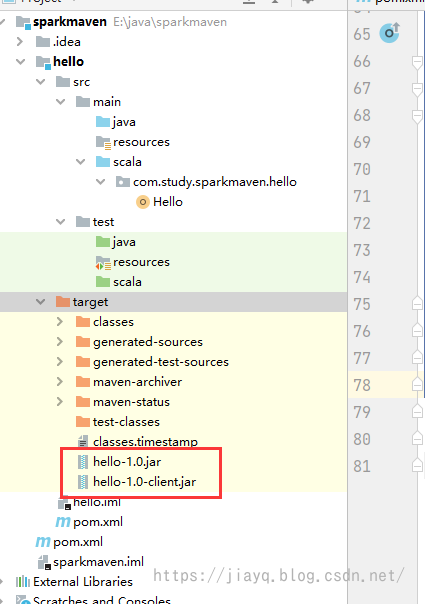

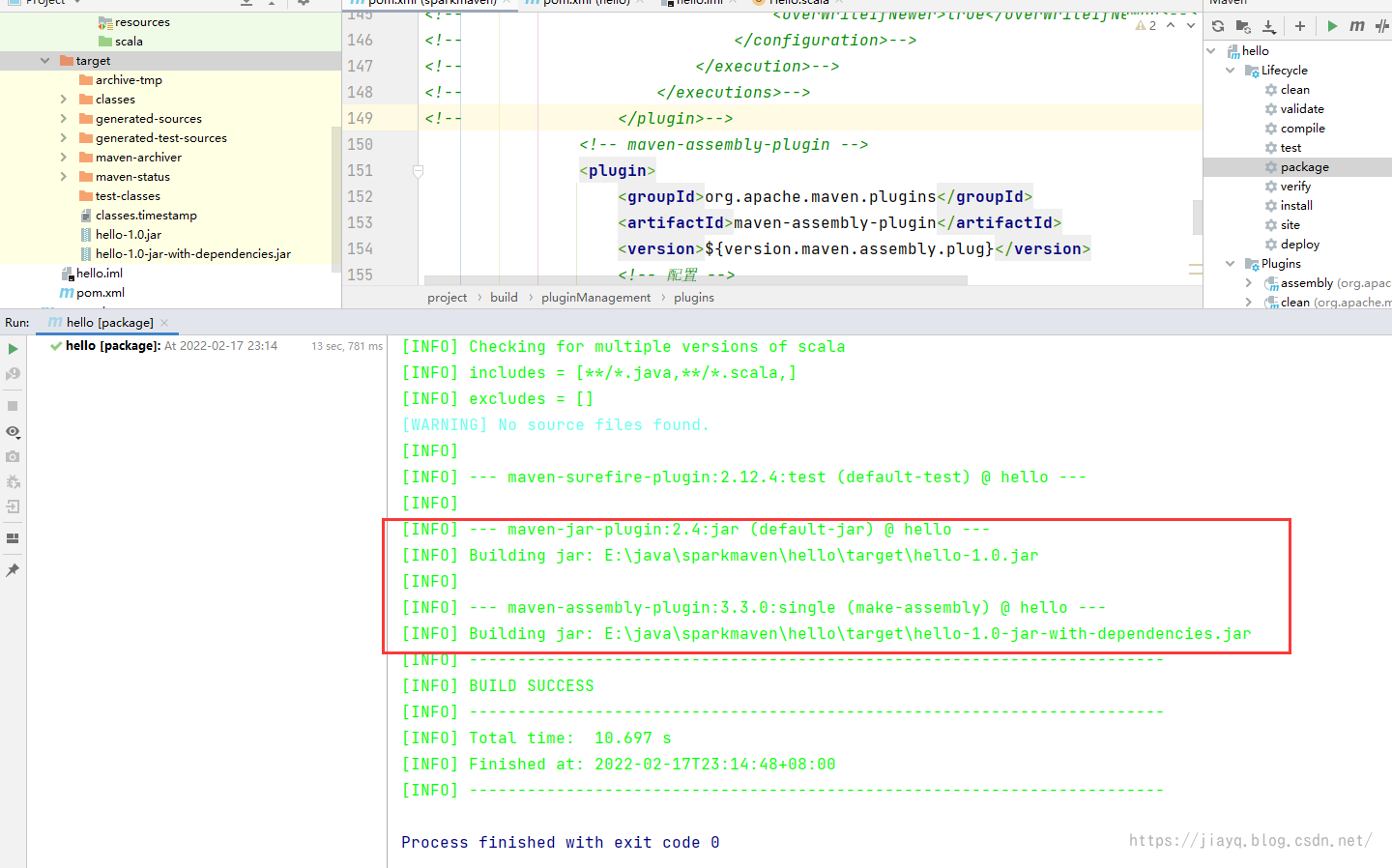

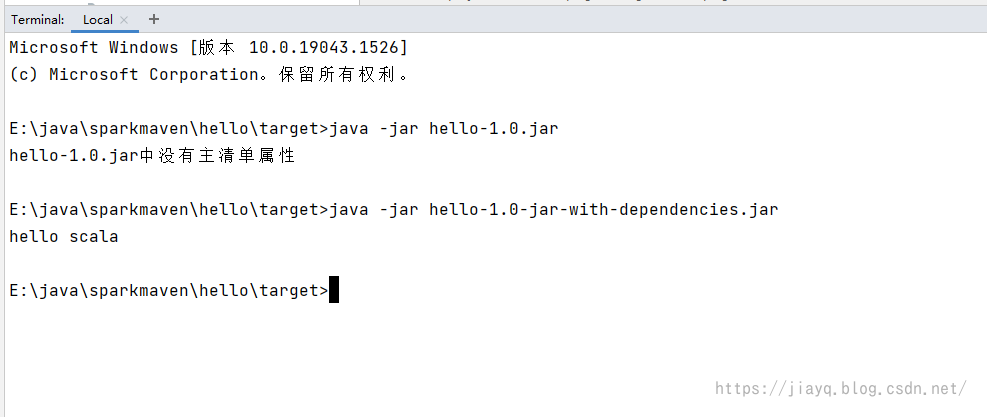

Two jar packages are generated after execution

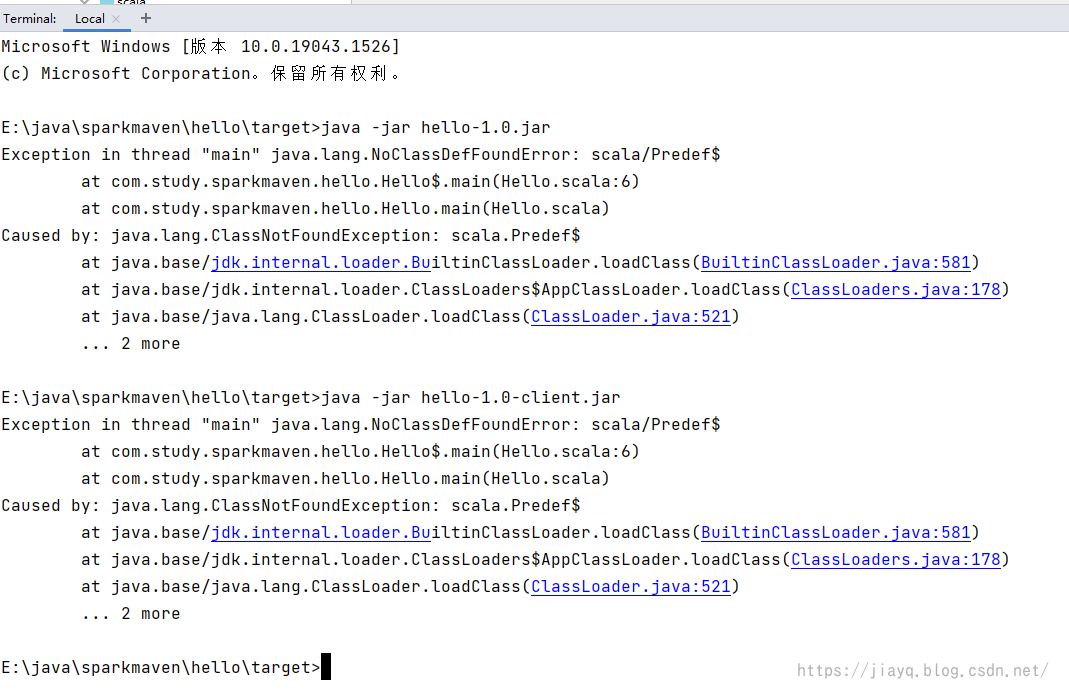

The second jar is the one generated by the plug-in. Let's try running them.

We ran the two jars in turn and neither could be executed. The error is no longer a missing main class, though: now the Scala library cannot be found.

This is because, although we pointed the classpath at the lib directory, that directory is still empty.

That is, there are no dependencies.

This is where the following plug-in comes in.

maven-dependency-plugin

The dependency plug-in can copy the project's dependencies to a specified location in preparation for packaging.

Earlier, the packaged jar was missing its dependencies because the lib directory was empty.

Let's first define the version:

    <!-- maven-dependency-plugin version -->
    <version.maven.dependency.plugin>3.2.0</version.maven.dependency.plugin>

Next, configure the plug-in:

    <plugin>
        <groupId>org.apache.maven.plugins</groupId>
        <artifactId>maven-dependency-plugin</artifactId>
        <version>${version.maven.dependency.plugin}</version>
        <executions>
            <execution>
                <id>dependency-copy</id>
                <!-- Bind to the package phase -->
                <phase>package</phase>
                <goals>
                    <!-- Copy the dependencies during packaging -->
                    <goal>copy-dependencies</goal>
                </goals>
                <configuration>
                    <!-- Destination directory for the copied dependencies -->
                    <outputDirectory>${project.build.directory}/lib</outputDirectory>
                    <!-- Whether to overwrite release dependencies -->
                    <overWriteReleases>false</overWriteReleases>
                    <!-- Whether to overwrite snapshot dependencies -->
                    <overWriteSnapshots>false</overWriteSnapshots>
                    <!-- Whether to overwrite when a newer file is available -->
                    <overWriteIfNewer>true</overWriteIfNewer>
                </configuration>
            </execution>
        </executions>
    </plugin>

Reference it in the submodule:

    <plugin>
        <groupId>org.apache.maven.plugins</groupId>
        <artifactId>maven-dependency-plugin</artifactId>
    </plugin>

Because copying dependencies is generic, the relevant settings live in the parent project's pom.xml.

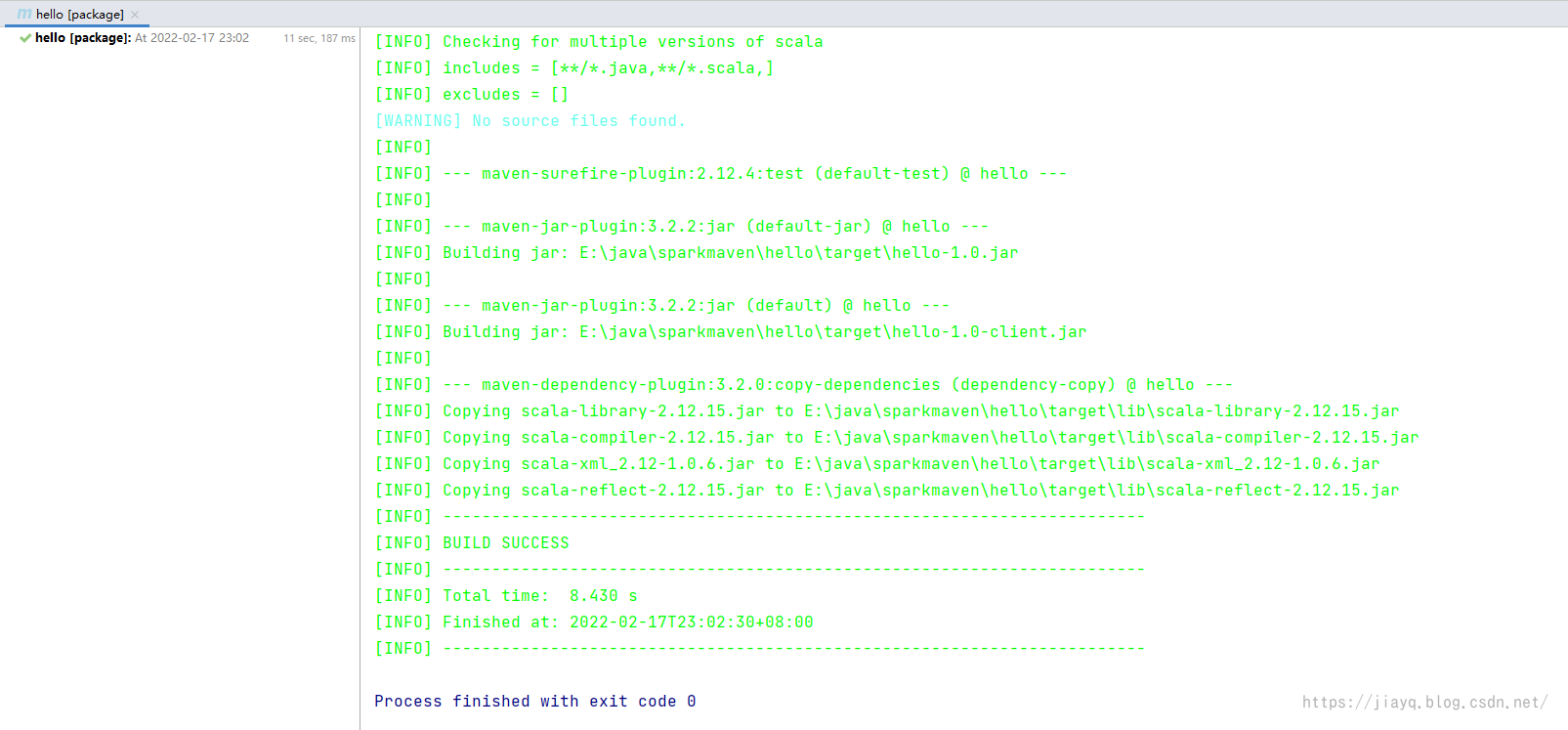

Refresh maven, clean and package

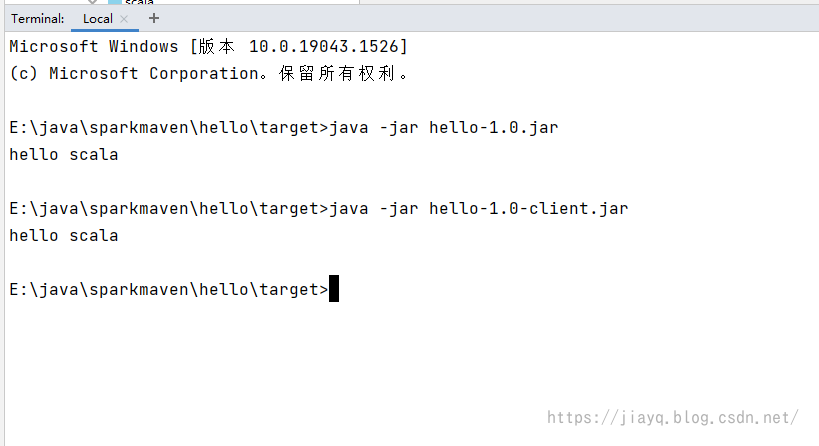

Next try executing the jar package

maven-assembly-plugin

In the steps above, two plug-ins work together: one copies the dependencies and the other builds the jar.

But that approach only builds jar packages. What if you want to build a war package, or something else?

There is a plug-in that can build not only jars but other package formats too, and that also combines the functions of the two plug-ins above.

It is the well-known assembly plug-in.

Define the version:

    <!-- maven-assembly-plugin version -->
    <version.maven.assembly.plug>3.3.0</version.maven.assembly.plug>

Define the plug-in:

    <!-- maven-assembly-plugin -->
    <plugin>
        <groupId>org.apache.maven.plugins</groupId>
        <artifactId>maven-assembly-plugin</artifactId>
        <version>${version.maven.assembly.plug}</version>
        <!-- Configuration -->
        <configuration>
            <!-- Built-in descriptors to use -->
            <descriptorRefs>
                <!-- Build a jar that bundles the dependencies -->
                <descriptorRef>jar-with-dependencies</descriptorRef>
            </descriptorRefs>
        </configuration>
        <executions>
            <execution>
                <id>make-assembly</id>
                <!-- Bind to the package phase -->
                <phase>package</phase>
                <goals>
                    <!-- Currently the only goal available -->
                    <goal>single</goal>
                </goals>
            </execution>
        </executions>
    </plugin>

In the submodule, remove the earlier maven-jar-plugin and maven-dependency-plugin entries (commented out here) and reference the assembly plug-in instead:

    <!-- <plugin>-->
    <!-- <groupId>org.apache.maven.plugins</groupId>-->
    <!-- <artifactId>maven-jar-plugin</artifactId>-->
    <!-- <configuration>-->
    <!-- <archive>-->
    <!-- <manifest>-->
    <!-- <!– Add a Class-Path entry to the manifest –>-->
    <!-- <addClasspath>true</addClasspath>-->
    <!-- <!– Dependencies are expected in the lib/ directory –>-->
    <!-- <classpathPrefix>lib/</classpathPrefix>-->
    <!-- <!– Main class –>-->
    <!-- <mainClass>com.study.sparkmaven.hello.Hello</mainClass>-->
    <!-- </manifest>-->
    <!-- </archive>-->
    <!-- </configuration>-->
    <!-- </plugin>-->
    <!-- <plugin>-->
    <!-- <groupId>org.apache.maven.plugins</groupId>-->
    <!-- <artifactId>maven-dependency-plugin</artifactId>-->
    <!-- </plugin>-->
    <plugin>
        <groupId>org.apache.maven.plugins</groupId>
        <artifactId>maven-assembly-plugin</artifactId>
        <configuration>
            <archive>
                <manifest>
                    <addClasspath>true</addClasspath>
                    <classpathPrefix>lib/</classpathPrefix>
                    <mainClass>com.study.sparkmaven.hello.Hello</mainClass>
                </manifest>
            </archive>
        </configuration>
    </plugin>

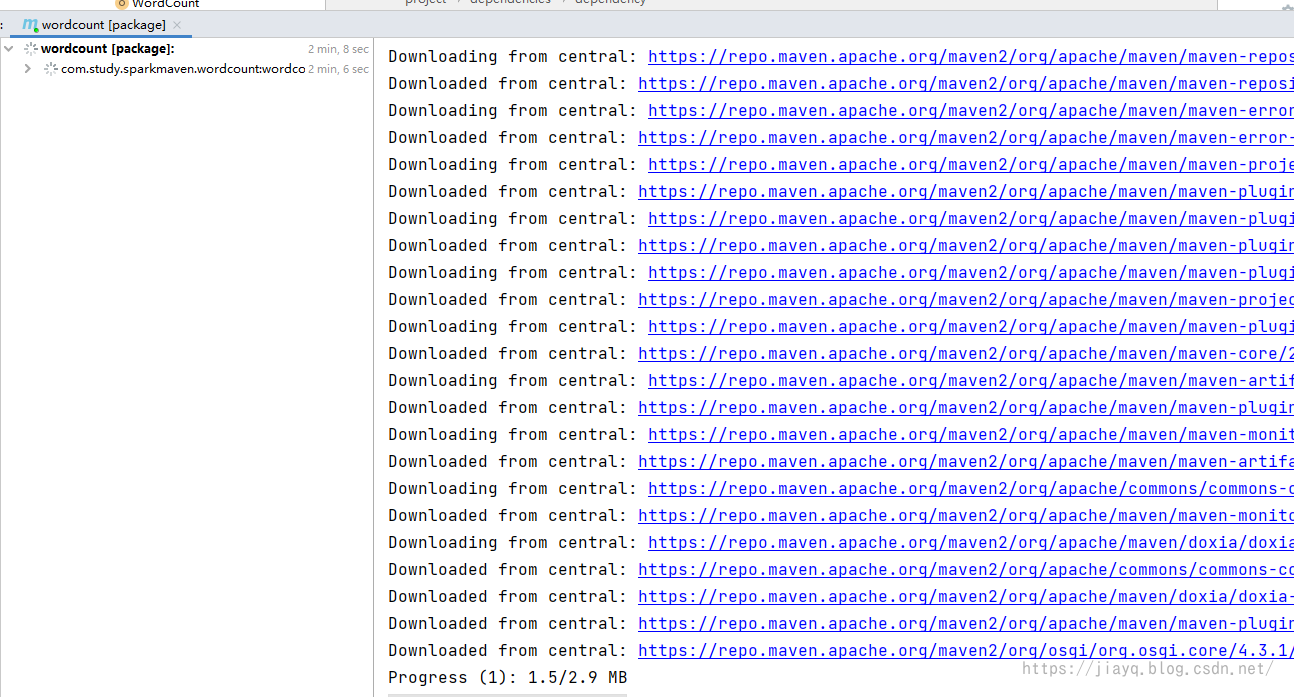

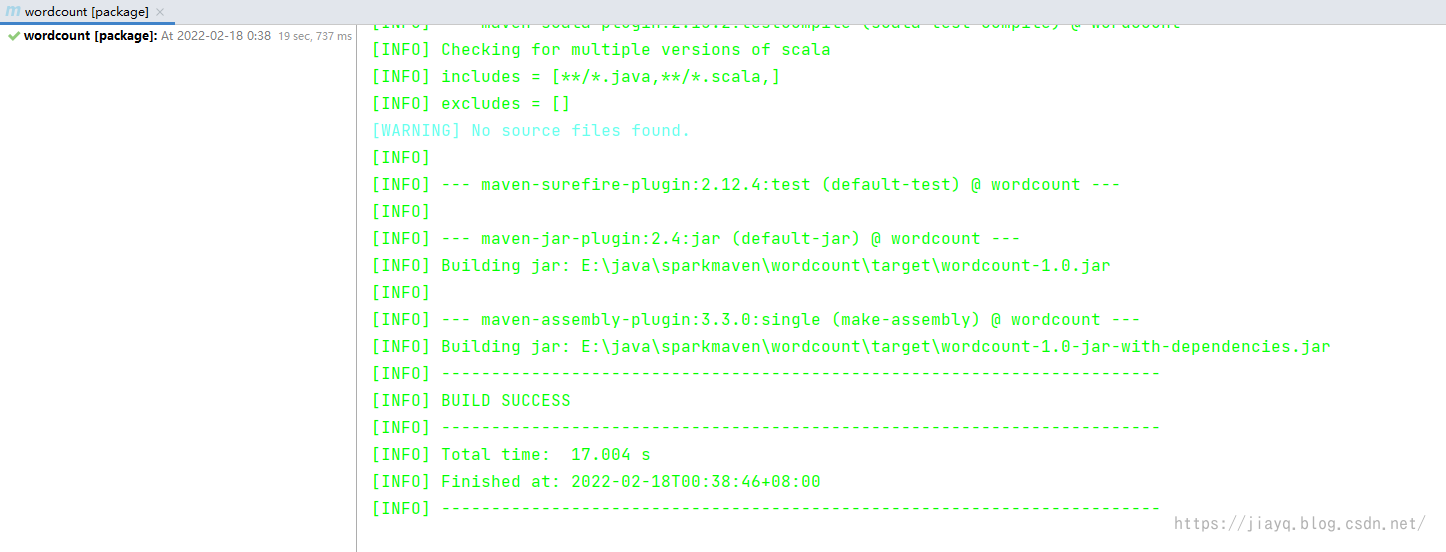

Refresh the project and execute clean and package

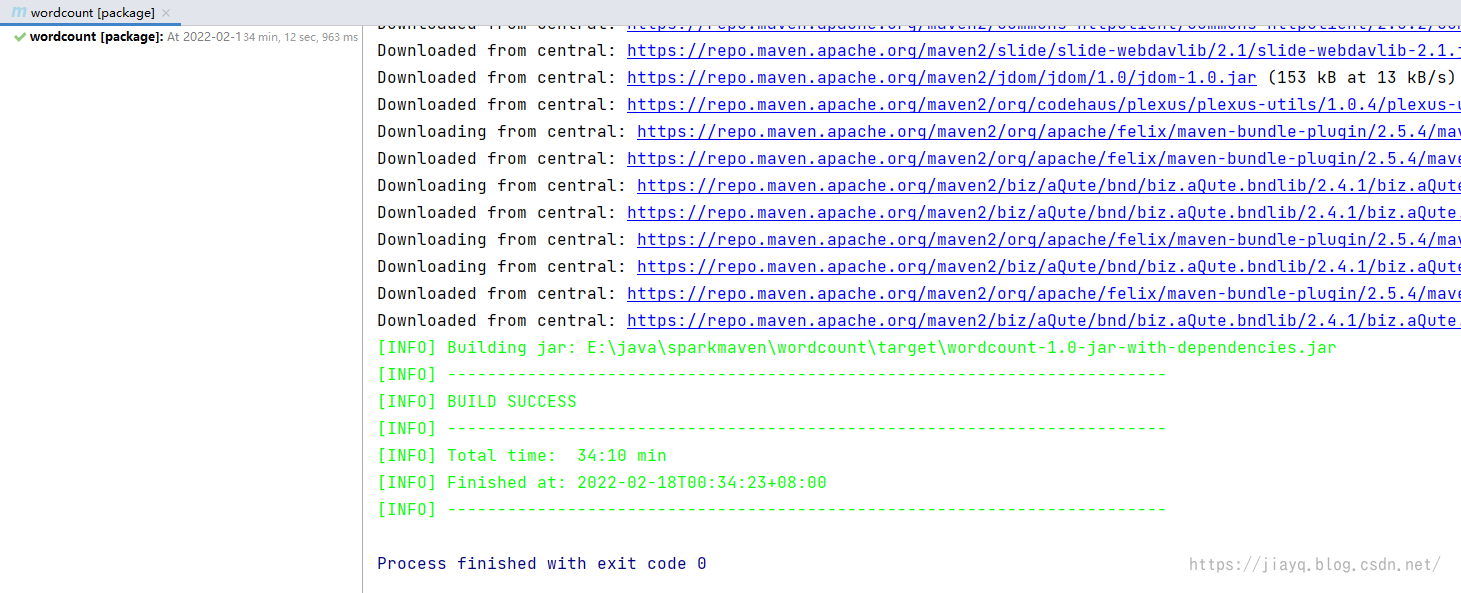

Let's try running them.

Because we commented out the maven-jar-plugin configuration, the default jar no longer has a main class configured, so the main class cannot be found.

For the jar built by maven-assembly-plugin, we configured the main class and also enabled dependency bundling, so the resulting jar contains both the main class and the Scala dependencies and can be run directly.
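Since the jar-with-dependencies descriptor unpacks the dependency classes into the assembled jar itself, the manifest probably does not need classpath entries pointing at lib/ for that jar to run. A minimal sketch of the submodule configuration under that assumption, keeping only the main class:

```xml
<plugin>
    <groupId>org.apache.maven.plugins</groupId>
    <artifactId>maven-assembly-plugin</artifactId>
    <configuration>
        <archive>
            <manifest>
                <!-- The dependencies are already inside the jar, so only the entry point is declared -->
                <mainClass>com.study.sparkmaven.hello.Hello</mainClass>
            </manifest>
        </archive>
    </configuration>
</plugin>
```

The descriptor and the execution bound to the package phase are still inherited from the parent's pluginManagement.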

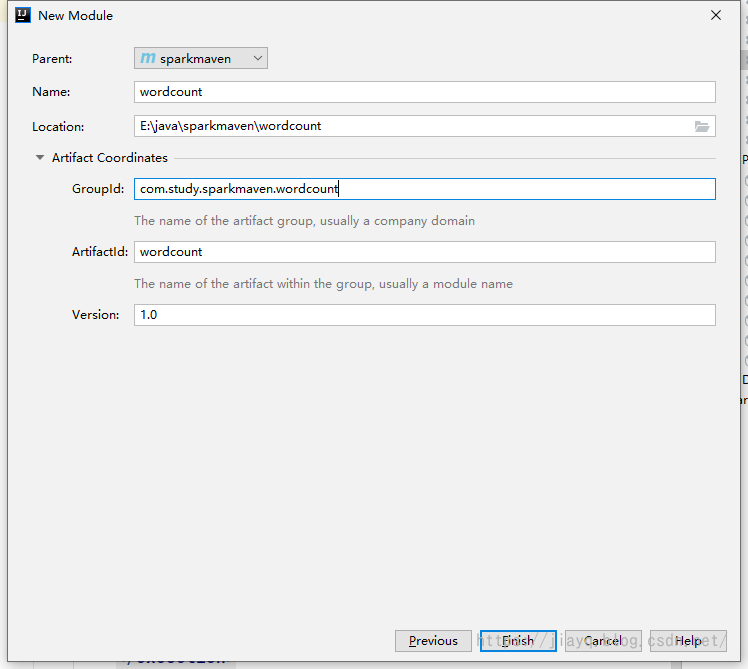

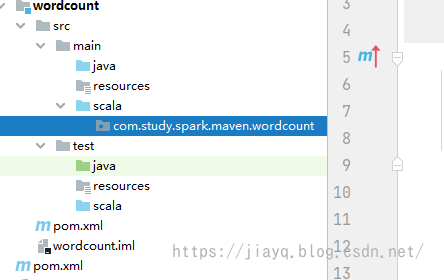

Spark development environment

Create an empty Maven project.

Don't forget to tell IDEA that we need the Scala environment.

Create directory

Package directory

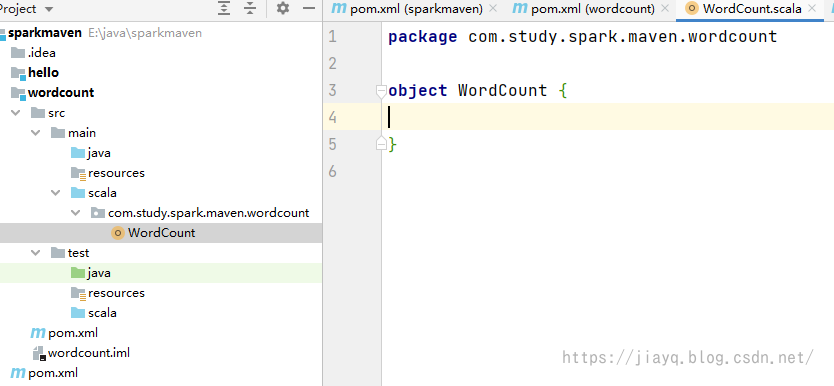

Main Class

Add the Spark and Scala dependencies and the related plug-ins

Spark version

I follow a convention when defining properties:

Dependency version properties end with .version

Plug-in version properties start with version. and end with .plugin

    <!-- Spark dependency version -->
    <spark.version>3.2.0</spark.version>

Add the spark-core_2.12 dependency. Different Scala versions are not binary compatible, so make sure the suffix matches your Scala version (2.12 here).

    <dependency>
        <groupId>org.apache.spark</groupId>
        <artifactId>spark-core_2.12</artifactId>
        <version>${spark.version}</version>
        <!-- Keep this commented out to run in IDEA; uncomment it when packaging -->
        <!-- <scope>provided</scope>-->
    </dependency>

Because the jar we build is ultimately submitted to Spark for execution, and the Spark environment already provides the Spark dependencies, we do not need to include them when packaging. Otherwise the jar becomes very large, and because Spark's own dependencies are complex, problems such as transitive dependency conflicts become harder to handle.
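Instead of commenting the scope in and out by hand, the scope can be driven by a property that a Maven profile overrides. This is a sketch of one possible arrangement and not the author's setup: the property name `spark.scope` and the profile id `cluster` are invented for illustration.

```xml
<project>
    <!-- Other POM elements omitted for brevity -->
    <properties>
        <spark.version>3.2.0</spark.version>
        <!-- Hypothetical property: default scope, so Spark is on the classpath when running in IDEA -->
        <spark.scope>compile</spark.scope>
    </properties>

    <dependencies>
        <dependency>
            <groupId>org.apache.spark</groupId>
            <artifactId>spark-core_2.12</artifactId>
            <version>${spark.version}</version>
            <scope>${spark.scope}</scope>
        </dependency>
    </dependencies>

    <profiles>
        <!-- Hypothetical profile: switches the scope to provided when packaging for the cluster -->
        <profile>
            <id>cluster</id>
            <properties>
                <spark.scope>provided</spark.scope>
            </properties>
        </profile>
    </profiles>
</project>
```

With this in place, running `mvn clean package -Pcluster` would build the jar without Spark, while a plain run from IDEA keeps the default compile scope.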

Plug-ins for subprojects

    <build>
        <plugins>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-compiler-plugin</artifactId>
            </plugin>
            <plugin>
                <groupId>org.scala-tools</groupId>
                <artifactId>maven-scala-plugin</artifactId>
                <configuration>
                    <launchers>
                        <launcher>
                            <id>wordcount</id>
                            <mainClass>com.study.spark.maven.wordcount.WordCount</mainClass>
                        </launcher>
                    </launchers>
                </configuration>
            </plugin>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-assembly-plugin</artifactId>
                <configuration>
                    <archive>
                        <manifest>
                            <addClasspath>true</addClasspath>
                            <classpathPrefix>lib/</classpathPrefix>
                            <mainClass>com.study.spark.maven.wordcount.WordCount</mainClass>
                        </manifest>
                    </archive>
                </configuration>
            </plugin>
        </plugins>
    </build>

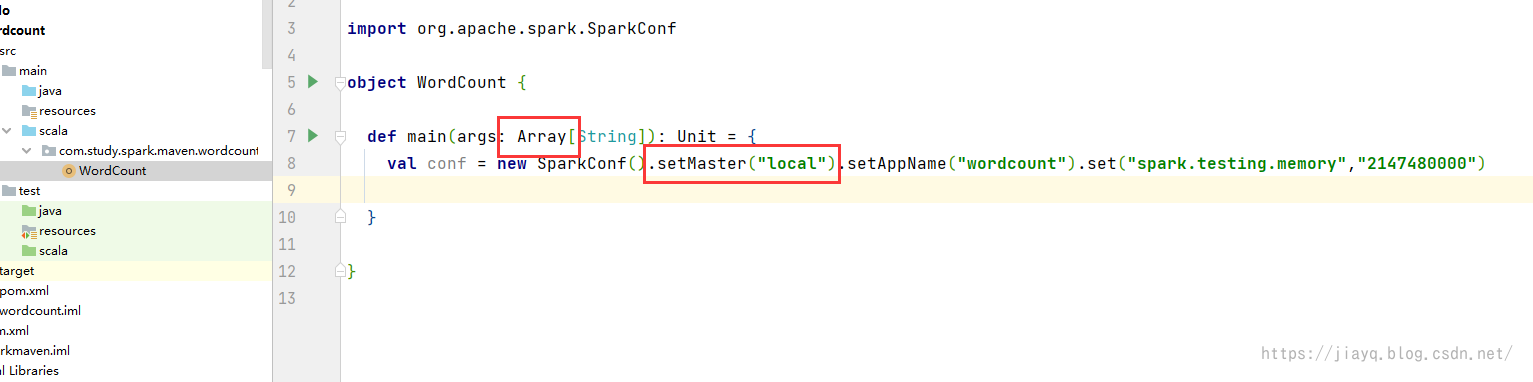

After refreshing the Maven project, you can write Spark code in the main class.

If, while writing code, you find that Scala keywords are not recognized, invalidate IDEA's caches.

Select this option and restart IDEA.

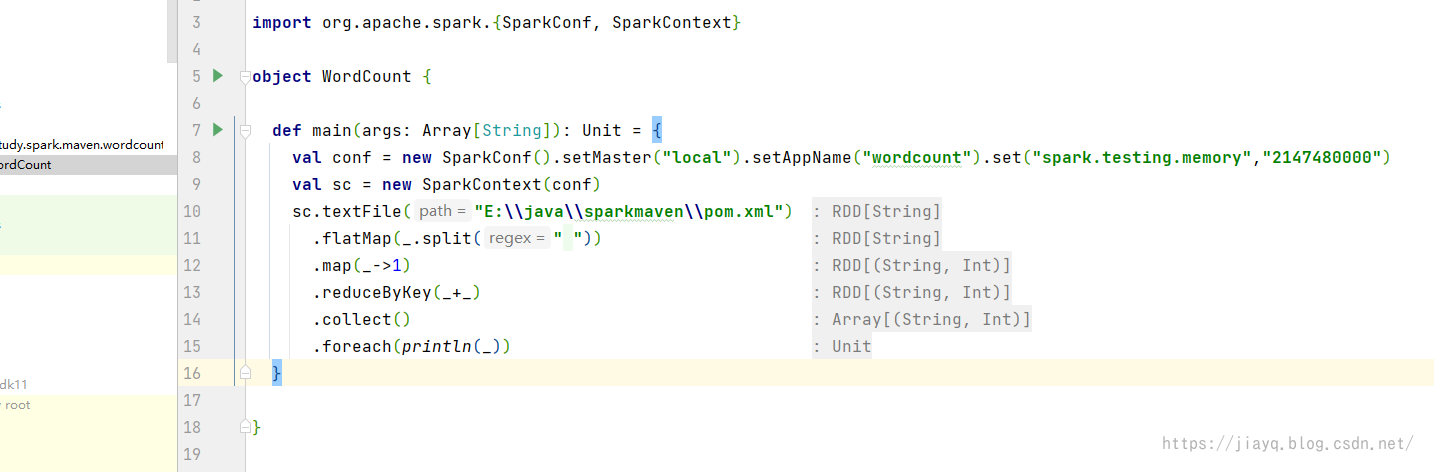

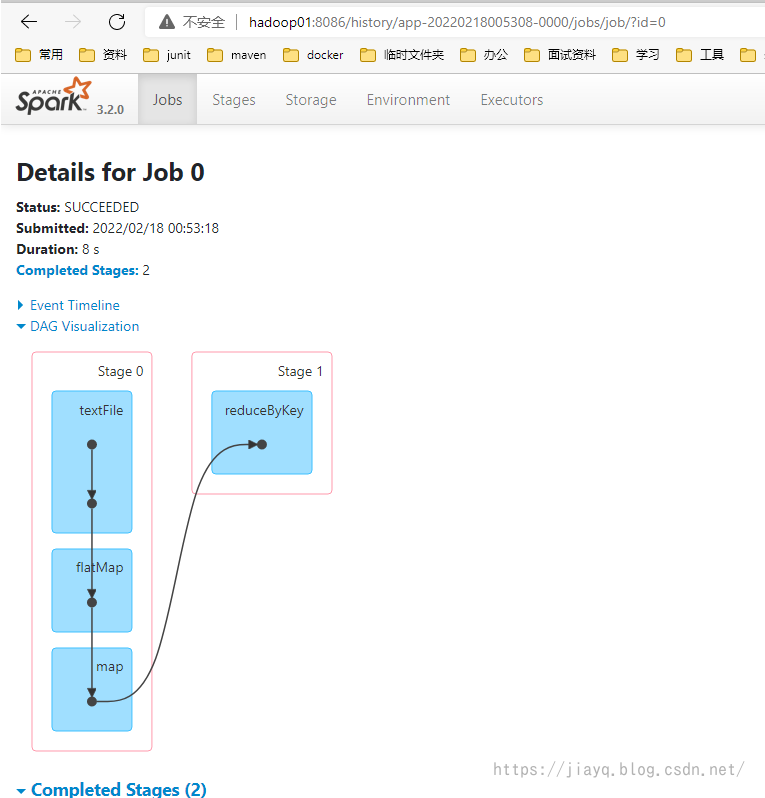

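The word count code is as follows:

    package com.study.spark.maven.wordcount

    import org.apache.spark.{SparkConf, SparkContext}

    object WordCount {
      def main(args: Array[String]): Unit = {
        // Run in local mode; spark.testing.memory sets the memory size Spark assumes is available
        val conf = new SparkConf()
          .setMaster("local")
          .setAppName("wordcount")
          .set("spark.testing.memory", "2147480000")
        val sc = new SparkContext(conf)
        sc.textFile("E:\\java\\sparkmaven\\pom.xml")
          .flatMap(_.split(" ")) // split each line into words
          .map(_ -> 1)           // pair each word with a count of 1
          .reduceByKey(_ + _)    // sum the counts per word
          .collect()
          .foreach(println(_))
        // Release the Spark resources when done
        sc.stop()
      }
    }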

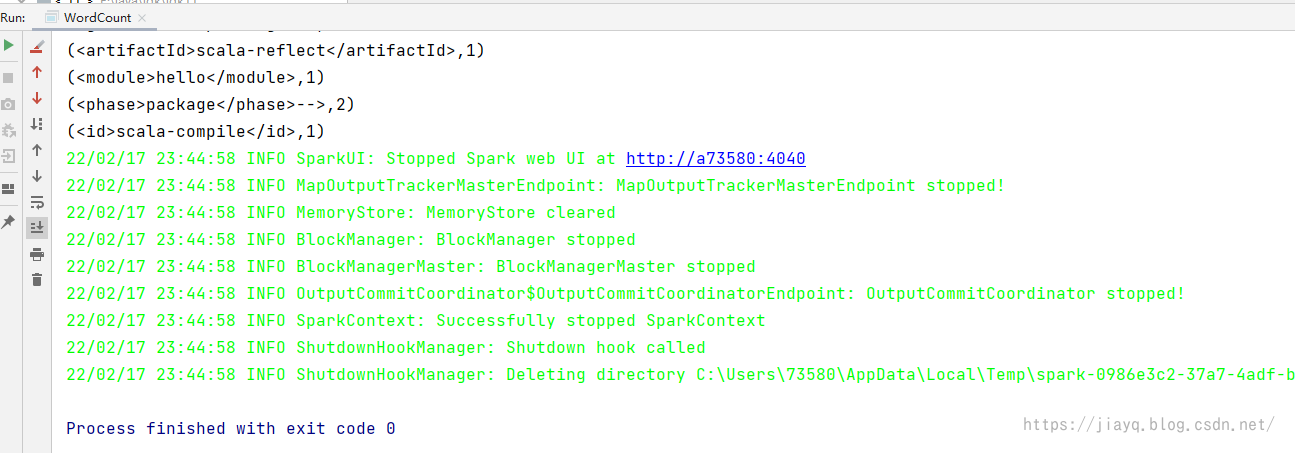

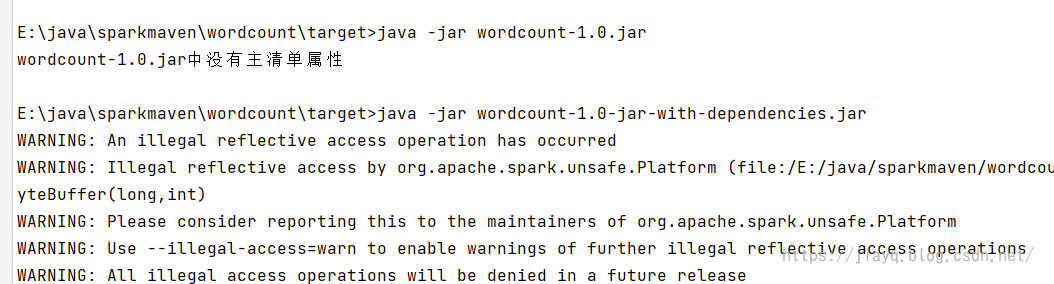

Let's start by clicking Run

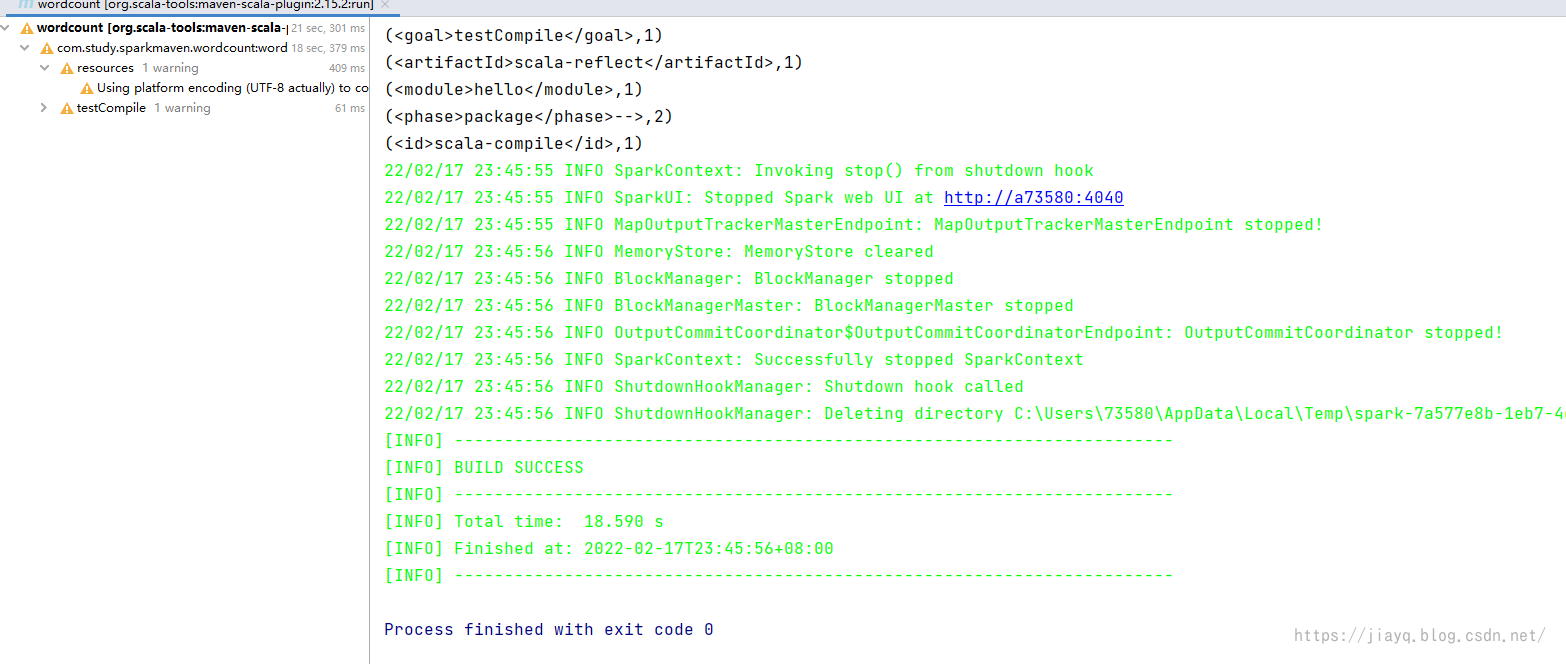

Then execute using scala:run

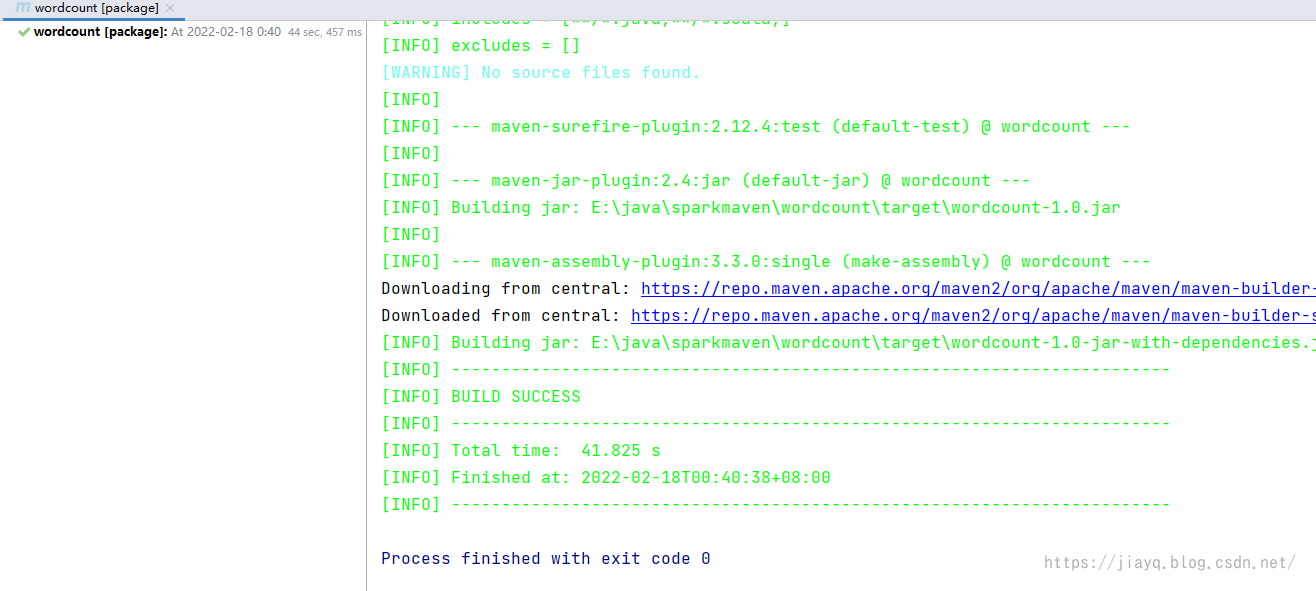

Then uncomment the scope line for spark-core and package it.

The first run is slower. We are using essentially the latest Spark dependencies, and the mirror may not have synchronized them yet, which makes the download slower.

It took more than 30 minutes before it finished.

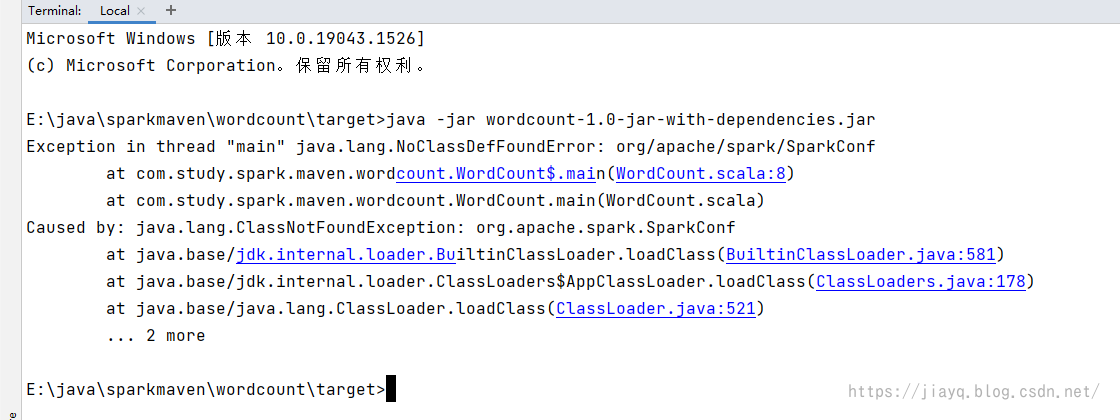

Check whether the resulting jar runs.

It does.

maven-assembly-plugin has bundled all of Spark into the jar.

If we did not bundle Spark, packaging should be much faster.

Running that jar directly, however, reports that a Spark class cannot be found.

The second packaging run is much faster, though.

Whether or not the Spark dependencies are bundled, submitting the jar to Spark for execution usually works.

Note, however, that the Spark version inside the jar may conflict with the version in the Spark environment, so bundling the Spark dependencies is not recommended.

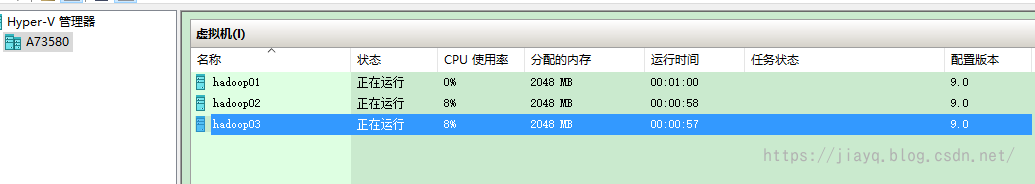

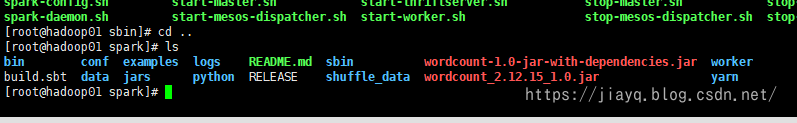

We tried to submit the jar package we typed to run in the spark cluster

Start the cluster environment first

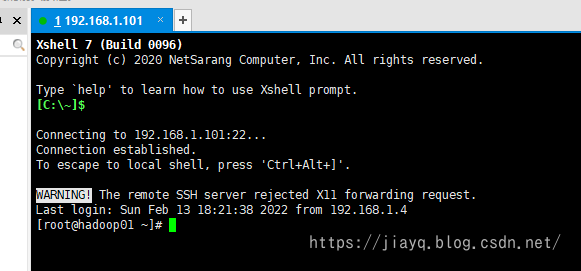

Then use the xshell link (free for home edition)

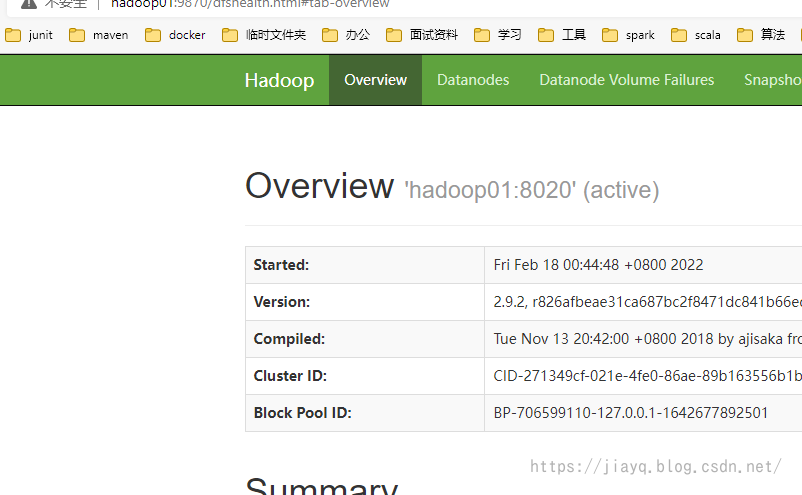

Start the hdfs cluster

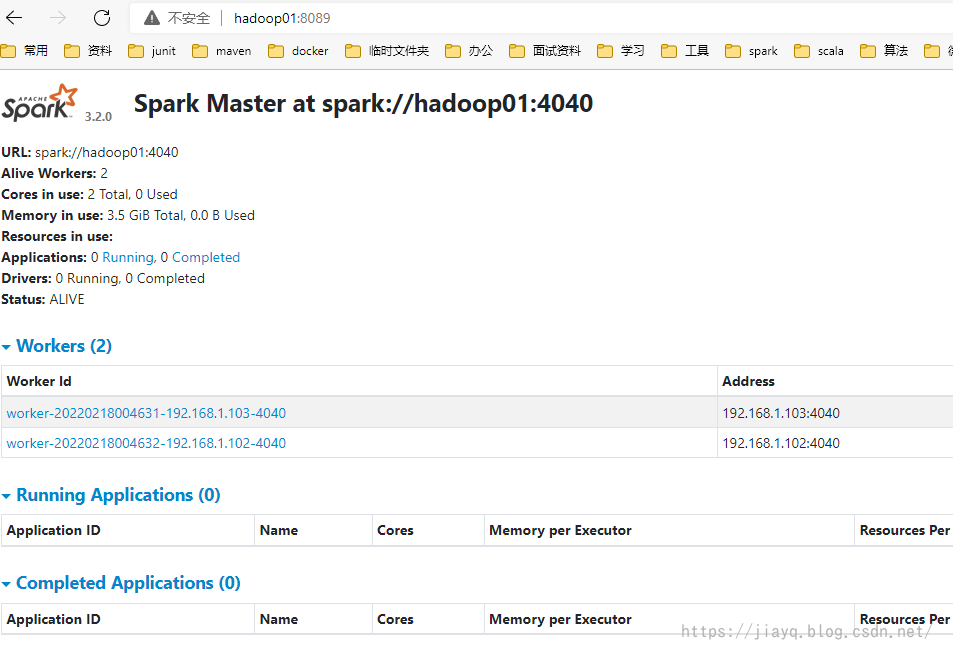

Then start the spark cluster

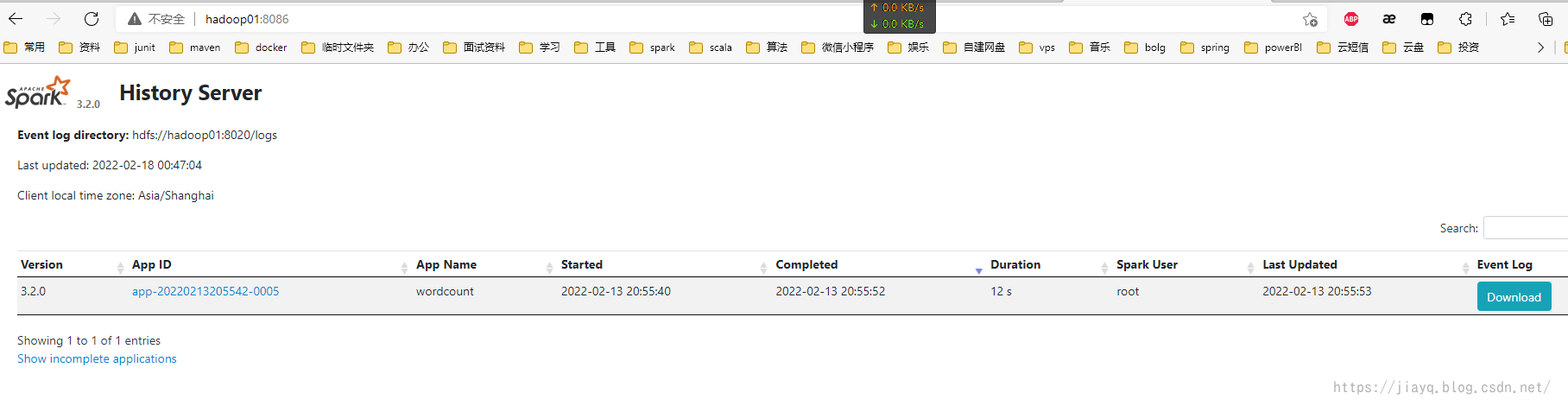

Start the spark history service

This word count was run earlier with the sbt build, as detailed in the post "spark source compilation and cluster deployment and sbt development environment integration in idea" (a18792721831's CSDN blog).

Since the file is already on HDFS, we modify the code to read the HDFS file instead of a local file, and to use the cluster master instead of local mode.

The modified WordCount is as follows:

    package com.study.spark.maven.wordcount

    import org.apache.spark.{SparkConf, SparkContext}

    object WordCount {
      def main(args: Array[String]): Unit = {
        // Connect to the cluster master instead of running in local mode
        val conf = new SparkConf()
          .setMaster("spark://hadoop01:4040")
          .setAppName("wordcount.maven")
        val sc = new SparkContext(conf)
        // Read the input from HDFS instead of the local file system
        sc.textFile("hdfs://hadoop01:8020/input/build.sbt")
          .flatMap(_.split(" "))
          .map(_ -> 1)
          .reduceByKey(_ + _)
          .collect()
          .foreach(println(_))
        // Release the Spark resources when done
        sc.stop()
      }
    }

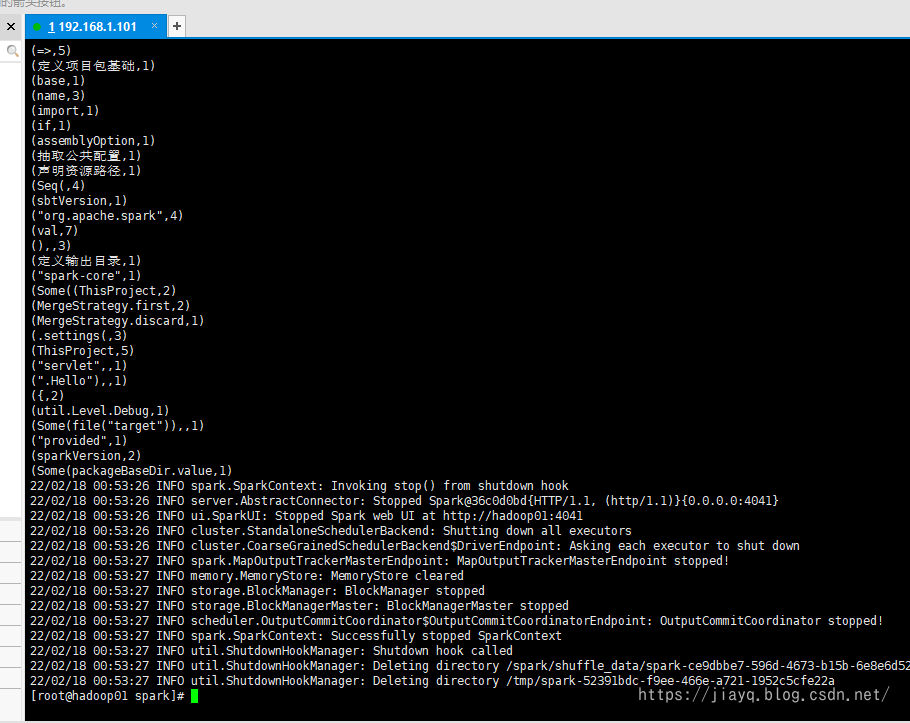

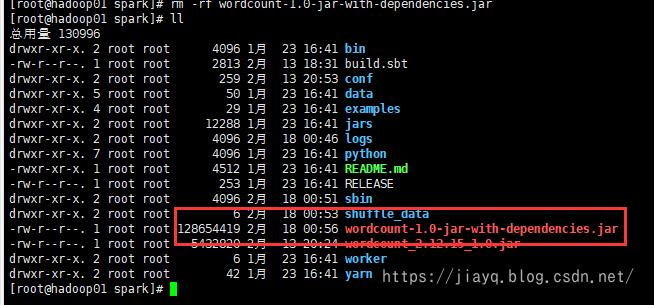

Pack and upload jar packages to the server, we don't need a spark dependency

Submit using the following command

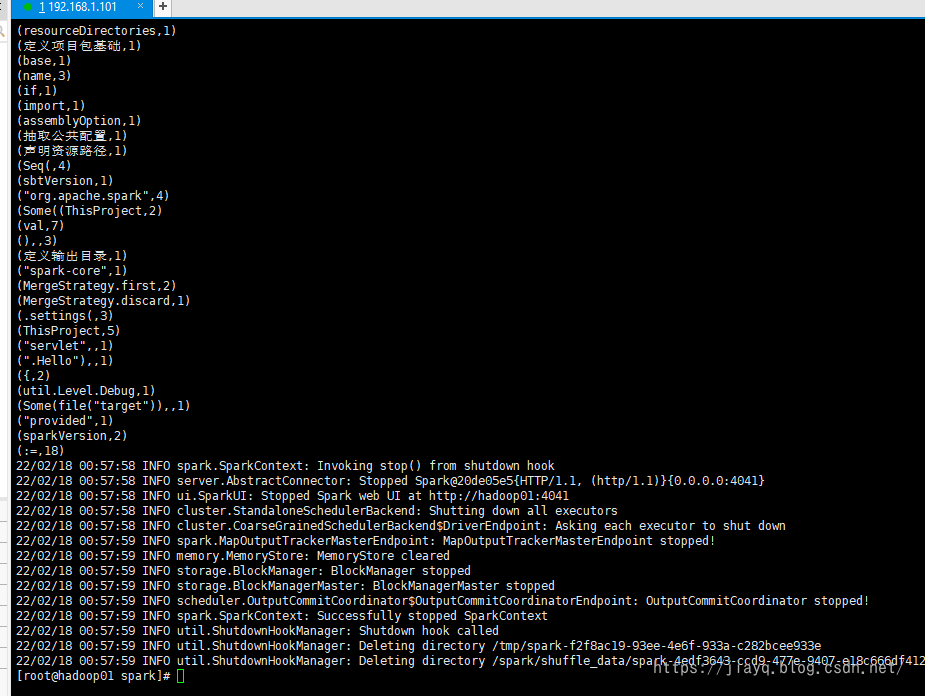

spark-submit --class com.study.spark.maven.wordcount.WordCount --master spark://hadoop01:4040 wordcount-1.0-jar-with-dependencies.jar

Successful execution

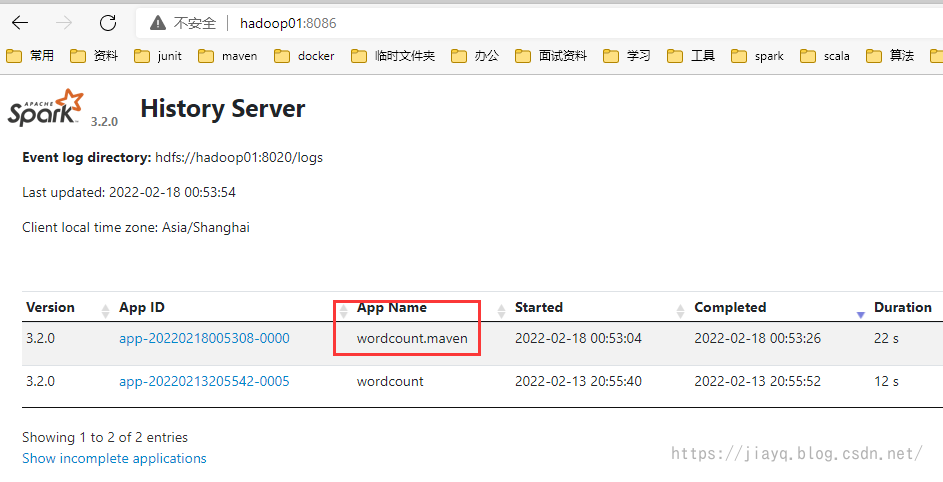

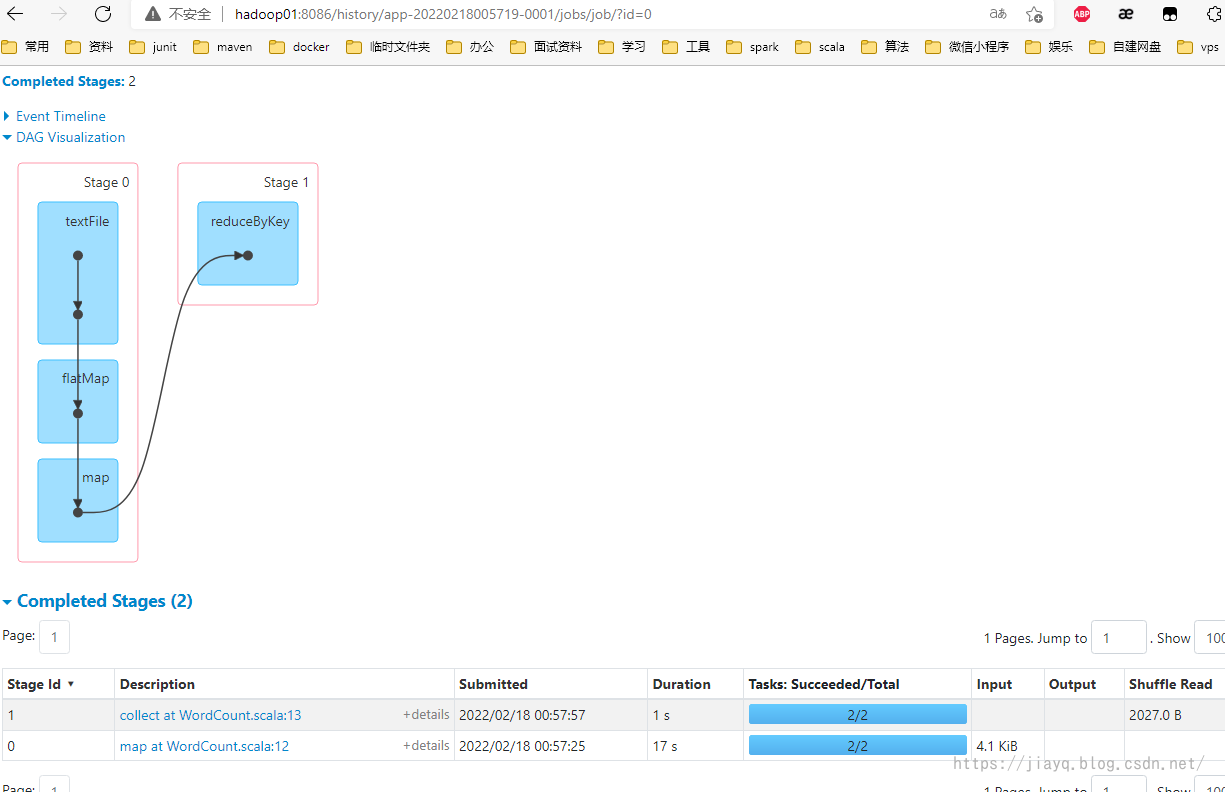

It can also be viewed in the Spark history server.

I also had a question: if we bundle the Spark dependencies, can the jar still be executed?

Let's try!

The jar with the Spark dependencies is 128 MB 😆

Execution:

No difference.

This is because my server also runs Spark 3.2.0, compiled from the latest source code, as described in the post "spark source compilation and cluster deployment and sbt development environment integration in idea" (a18792721831's CSDN blog). The local dependencies are also 3.2.0, so bundling the Spark dependencies in the jar works here.

If you do not know the exact Spark version on the server, or the Scala version it was built with, it is recommended not to bundle the Spark dependencies.