Running containers in a secure sandbox: introduction to gVisor

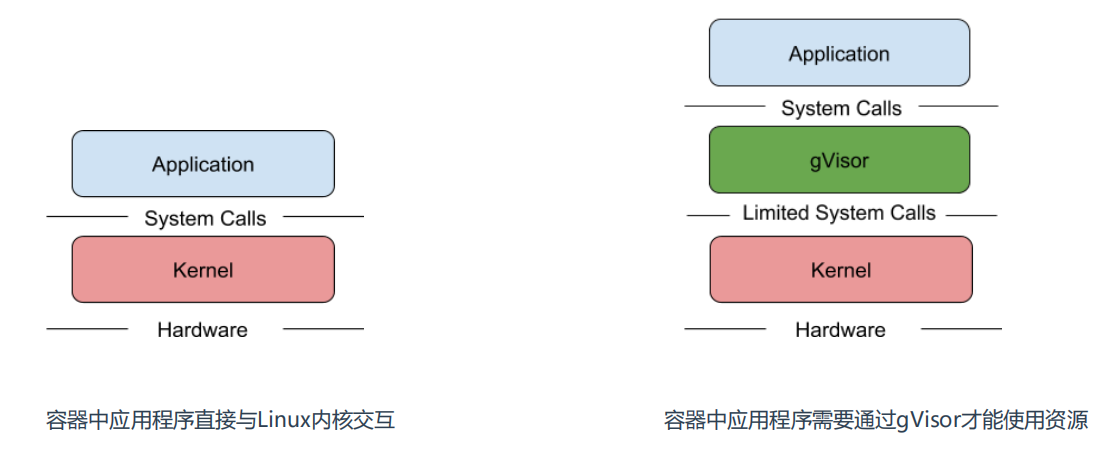

As the figure shows, a container's system calls must pass through gVisor before they can reach the Linux kernel. gVisor intercepts the system calls made by the application, handles them itself, and calls the host kernel on the application's behalf. A call that poses a security risk is caught by gVisor.

Moreover, any attack is confined to the gVisor sandbox, so it does not affect the host Linux kernel.

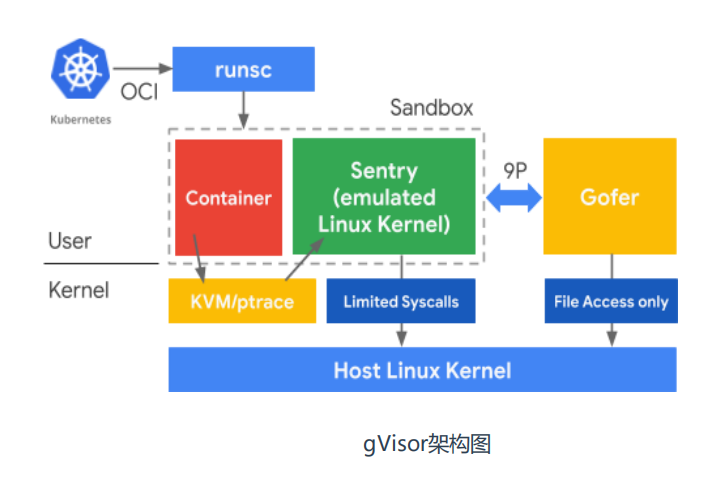

Running containers in a secure sandbox: gVisor architecture

Any intermediary layer adds some performance overhead. Virtualization, for example, inserts a hypervisor layer, so a virtual machine does not perform as well as a physical machine. The same goes for gVisor.
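One rough way to see this overhead is to time the same short-lived container under both runtimes. The sketch below is only an illustration: `elapsed_ms` is a hypothetical helper, it assumes GNU `date` (for `%N`), and a single wall-clock measurement is noisy, so treat the numbers as indicative at best.

```shell
# elapsed_ms COMMAND [ARGS...]
# Runs the command, discards its output, and prints the wall-clock time in milliseconds.
# Assumes GNU date, which supports %N (nanoseconds).
elapsed_ms() {
    em_start=$(date +%s%N)
    "$@" >/dev/null 2>&1
    em_end=$(date +%s%N)
    echo $(( (em_end - em_start) / 1000000 ))
}

# Usage on a host where both runtimes are registered with Docker:
#   elapsed_ms docker run --rm --runtime=runc  nginx true
#   elapsed_ms docker run --rm --runtime=runsc nginx true
```

Repeating each measurement several times and comparing the medians gives a fairer picture than one run.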

Running containers in a secure sandbox: gVisor and Docker integration

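The stock CentOS 7 kernel (3.10) is older than gVisor supports, so upgrade the kernel before installing gVisor. First check the current version:

[root@master ~]# uname -r

3.10.0-693.el7.x86_64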

rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org

rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-2.el7.elrepo.noarch.rpm

yum --enablerepo=elrepo-kernel install kernel-ml-devel kernel-ml -y

grub2-set-default 0

reboot

uname -r
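The upgrade matters because runsc will not run on a kernel that is too old. A minimal sketch of a pre-flight check follows. The helper name `kernel_at_least` is hypothetical, the 4.14.77 minimum used in the usage line is an assumption based on gVisor's published requirements and should be checked against the release you install, and `sort -V` assumes GNU coreutils.

```shell
# kernel_at_least CURRENT MINIMUM
# Succeeds (exit status 0) when CURRENT is the same as or newer than MINIMUM.
kernel_at_least() {
    # Strip the distribution suffix, e.g. "-693.el7.x86_64", leaving "3.10.0".
    kal_current="${1%%-*}"
    kal_minimum="$2"
    # sort -V orders version strings; if MINIMUM sorts first (or ties), CURRENT is new enough.
    [ "$(printf '%s\n%s\n' "$kal_minimum" "$kal_current" | sort -V | head -n 1)" = "$kal_minimum" ]
}

# Usage (the minimum version here is an assumption, see above):
#   kernel_at_least "$(uname -r)" 4.14.77 && echo "kernel is new enough for runsc"
```

With the two kernels that appear in this article, the check fails for 3.10.0-693.el7.x86_64 and passes for 5.13.1-1.el7.elrepo.x86_64.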

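With the runsc binary and its runsc.sha512 checksum file in the current directory, verify the download and install it:

sha512sum -c runsc.sha512

rm -f *.sha512

chmod a+x runsc

mv runsc /usr/local/bin

[root@k8s-master ~]# rm -f *.sha512

[root@k8s-master ~]# chmod a+x runsc

[root@k8s-master ~]# mv runsc /usr/local/bin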
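`sha512sum -c` recomputes the hash of the file named in runsc.sha512 and compares it with the recorded value. The sketch below spells that comparison out so a script can refuse to install a file that does not match. `checksum_matches` is a hypothetical helper, and it assumes the checksum file stores the hash as its first whitespace-separated field.

```shell
# checksum_matches FILE SUMFILE
# Succeeds when the SHA-512 of FILE equals the hash recorded in SUMFILE.
checksum_matches() {
    # The expected hash is the first field of the first line of the checksum file.
    cm_expected=$(awk '{ print $1; exit }' "$2")
    # sha512sum prints "HASH  FILENAME"; keep only the hash.
    cm_actual=$(sha512sum "$1" | awk '{ print $1 }')
    [ -n "$cm_expected" ] && [ "$cm_expected" = "$cm_actual" ]
}

# Usage:
#   checksum_matches runsc runsc.sha512 || { echo "checksum mismatch" >&2; exit 1; }
```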

runsc install # then check the configuration in /etc/docker/daemon.json

systemctl restart docker

[root@k8s-master ~]# runsc install

2021/07/14 04:34:46 Added runtime "runsc" with arguments [] to "/etc/docker/daemon.json".

[root@k8s-master ~]# systemctl restart docker

[root@k8s-master ~]# cat /etc/docker/daemon.json

{

"registry-mirrors": [

"https://b9pmyelo.mirror.aliyuncs.com"

],

"runtimes": {

"runsc": {

"path": "/usr/local/bin/runsc"

}

}

}
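Once Docker has restarted, the runtimes entry above should show up in what Docker reports. A small sketch of a scripted check follows. It assumes that `docker info` prints a `Runtimes:` line in its text output (the exact format can vary between Docker versions), and `runtime_registered` is a hypothetical helper that reads that text on standard input.

```shell
# runtime_registered NAME
# Reads `docker info` text on stdin and succeeds if NAME appears on the "Runtimes:" line.
runtime_registered() {
    # Select only the "Runtimes:" line (not "Default Runtime:"), then match NAME as a whole word.
    grep -E '^[[:space:]]*Runtimes:' | grep -qw -- "$1"
}

# Usage:
#   docker info 2>/dev/null | runtime_registered runsc && echo "runsc is registered"
```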

[root@k8s-node1 ~]# docker info

containerd version: 8fba4e9a7d01810a393d5d25a3621dc101981175

runc version: dc9208a3303feef5b3839f4323d9beb36df0a9dd

Runtimes: runc runsc

Default Runtime: runc

docker run -d --runtime=runsc nginx

[root@k8s-node1 ~]# docker run -d --runtime=runsc nginx

Unable to find image 'nginx:latest' locally

latest: Pulling from library/nginx

b4d181a07f80: Pull complete

66b1c490df3f: Pull complete

d0f91ae9b44c: Pull complete

baf987068537: Pull complete

6bbc76cbebeb: Pull complete

32b766478bc2: Pull complete

Digest: sha256:353c20f74d9b6aee359f30e8e4f69c3d7eaea2f610681c4a95849a2fd7c497f9

Status: Downloaded newer image for nginx:latest

25ba1c32b09fa04cad0d7397b21fcaac254ab9d539d28ab392ae90b1b38a3483

[root@k8s-node1 ~]# docker run --runtime=runsc -it nginx dmesg

[ 0.000000] Starting gVisor...

[ 0.257800] Committing treasure map to memory...

[ 0.323427] Digging up root...

[ 0.549272] Consulting tar man page...

[ 0.727058] Feeding the init monster...

[ 1.106393] Searching for needles in stacks...

[ 1.209076] Generating random numbers by fair dice roll...

[ 1.551108] Accelerating teletypewriter to 9600 baud...

[ 1.858969] Reading process obituaries...

[ 1.927673] Rewriting operating system in Javascript...

[ 2.180562] Verifying that no non-zero bytes made their way into /dev/zero...

[ 2.198676] Ready!

#Under the default runc runtime the same test does not show gVisor's log. Note that the command below is misspelled (demesg instead of dmesg), which is why the entrypoint reports "not found". With the correct spelling, Docker's default restrictions typically deny dmesg access to the host kernel log, whereas under runsc dmesg reads only gVisor's own log.

[root@k8s-node2 ~]# docker run nginx demesg

/docker-entrypoint.sh: 38: exec: demesg: not found

The kernel version also differs. A container started with runsc sees the virtual kernel emulated by gVisor rather than the host kernel.

#Container started with runsc

[root@k8s-node2 ~]# docker exec -it 6d6683fe1bab bash

root@6d6683fe1bab:/# uname -r

4.4.0

#Container started with runc

[root@k8s-node2 ~]# docker exec -it 0e30fdb6992e bash

root@0e30fdb6992e:/# uname -r

5.13.1-1.el7.elrepo.x86_64
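Going only by the output shown in this article, the dmesg banner is a convenient heuristic for telling from inside a container whether it is sandboxed. The sketch below assumes the "Starting gVisor" banner seen in the log earlier. That text is not a documented interface and may change, and `dmesg_is_gvisor` is a hypothetical helper name.

```shell
# dmesg_is_gvisor
# Reads dmesg text on stdin and succeeds if it contains gVisor's start-up banner.
# Heuristic only: the banner text comes from the log shown in this article.
dmesg_is_gvisor() {
    grep -q 'Starting gVisor'
}

# Usage inside a container:
#   dmesg 2>/dev/null | dmesg_is_gvisor && echo "running under gVisor"
```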

The process list shows that the sandboxed container is managed by runsc (the runsc-gofer and runsc-sandbox processes), whereas ordinary containers are managed by runc.

[root@k8s-node2 ~]# ps -ef | grep runsc

root 17114 1004 0 20:08 ? 00:00:00 containerd-shim -namespace moby -workdir /var/lib/containerd/io.containerd.runtime.v1.linux/moby/6d6683fe1bab8a122bdcc40b3ffe29e58c0534735b655db3bf5cb3b92c4416a0 -address /run/containerd/containerd.sock -containerd-binary /usr/bin/containerd -runtime-root /var/run/docker/runtime-runsc

root 17129 17114 0 20:08 ? 00:00:00 runsc-gofer --root=/var/run/docker/runtime-runsc/moby --log=/run/containerd/io.containerd.runtime.v1.linux/moby/6d6683fe1bab8a122bdcc40b3ffe29e58c0534735b655db3bf5cb3b92c4416a0/log.json --log-format=json --log-fd=3 gofer --bundle /run/containerd/io.containerd.runtime.v1.linux/moby/6d6683fe1bab8a122bdcc40b3ffe29e58c0534735b655db3bf5cb3b92c4416a0 --spec-fd=4 --mounts-fd=5 --io-fds=6 --io-fds=7 --io-fds=8 --io-fds=9 --apply-caps=false --setup-root=false

65534 17133 17114 0 20:08 ? 00:00:01 runsc-sandbox --root=/var/run/docker/runtime-runsc/moby --log=/run/containerd/io.containerd.runtime.v1.linux/moby/6d6683fe1bab8a122bdcc40b3ffe29e58c0534735b655db3bf5cb3b92c4416a0/log.json --log-format=json --log-fd=3 boot --bundle=/run/containerd/io.containerd.runtime.v1.linux/moby/6d6683fe1bab8a122bdcc40b3ffe29e58c0534735b655db3bf5cb3b92c4416a0 --controller-fd=4 --mounts-fd=5 --spec-fd=6 --start-sync-fd=7 --io-fds=8 --io-fds=9 --io-fds=10 --io-fds=11 --stdio-fds=12 --stdio-fds=13 --stdio-fds=14 --cpu-num 1 6d6683fe1bab8a122bdcc40b3ffe29e58c0534735b655db3bf5cb3b92c4416a0

[root@k8s-node2 ~]# ps -ef | grep runc

root 1826 1004 0 19:46 ? 00:00:00 containerd-shim -namespace moby -workdir /var/lib/containerd/io.containerd.runtime.v1.linux/moby/1513aca9d7cc807ebb8a48c999ffe18aba360d1b0dee6b18ccd8147244e36416 -address /run/containerd/containerd.sock -containerd-binary /usr/bin/containerd -runtime-root /var/run/docker/runtime-runc

root 1828 1004 0 19:46 ? 00:00:00 containerd-shim -namespace moby -workdir /var/lib/containerd/io.containerd.runtime.v1.linux/moby/77bc2b32c40eda885eca918d08efc6aafa1d921eb3a643215a062d84eff045ac -address /run/containerd/containerd.sock -containerd-binary /usr/bin/containerd -runtime-root /var/run/docker/runtime-runc

root 1901 1004 0 19:46 ? 00:00:00 containerd-shim -namespace moby -workdir /var/lib/containerd/io.containerd.runtime.v1.linux/moby/545aa9ffb5362589aa44bb9b55573ab4f6b53af9ef18222d7f13c3863f37edd4 -address /run/containerd/containerd.sock -containerd-binary /usr/bin/containerd -runtime-root /var/run/docker/runtime-runc

root 2221 1004 0 19:47 ? 00:00:00 containerd-shim -namespace moby -workdir /var/lib/containerd/io.containerd.runtime.v1.linux/moby/52a806b60e5a43f29c381c38fcdb0b11be33822963127fc1f617b6346513cb93 -address /run/containerd/containerd.sock -containerd-binary /usr/bin/containerd -runtime-root /var/run/docker/runtime-runc

root 2460 1004 0 19:47 ? 00:00:06 containerd-shim -namespace moby -workdir /var/lib/containerd/io.containerd.runtime.v1.linux/moby/c8049ebec32fa4f1b27dad6b4329877ac3c045ecb82f0210bace811810cdfaea -address /run/containerd/containerd.sock -containerd-binary /usr/bin/containerd -runtime-root /var/run/docker/runtime-runc

root 2607 1004 0 19:47 ? 00:00:00 containerd-shim -namespace moby -workdir /var/lib/containerd/io.containerd.runtime.v1.linux/moby/31e7f22f9f7f2cde04e3ddfe777d4adf851fe248d5f3bdd4b7bae16aebfec7f0 -address /run/containerd/containerd.sock -containerd-binary /usr/bin/containerd -runtime-root /var/run/docker/runtime-runc

root 2622 1004 0 19:47 ? 00:00:00 containerd-shim -namespace moby -workdir /var/lib/containerd/io.containerd.runtime.v1.linux/moby/1c430298d1a3d66919c1a9f763fa7aad897203a63f883f4a3a18b298a698db55 -address /run/containerd/containerd.sock -containerd-binary /usr/bin/containerd -runtime-root /var/run/docker/runtime-runc

root 3067 1004 0 19:47 ? 00:00:00 containerd-shim -namespace moby -workdir /var/lib/containerd/io.containerd.runtime.v1.linux/moby/53b80b36a96fa7c3b63935007d5426652829220c547f71cef903b910481a7a62 -address /run/containerd/containerd.sock -containerd-binary /usr/bin/containerd -runtime-root /var/run/docker/runtime-runc

root 3926 1004 0 19:48 ? 00:00:00 containerd-shim -namespace moby -workdir /var/lib/containerd/io.containerd.runtime.v1.linux/moby/4f730ddd354fe034acf48073c1fb4086932a99e9130612696c29c754785c0b14 -address /run/containerd/containerd.sock -containerd-binary /usr/bin/containerd -runtime-root /var/run/docker/runtime-runc

root 19149 1004 0 20:11 ? 00:00:00 containerd-shim -namespace moby -workdir /var/lib/containerd/io.containerd.runtime.v1.linux/moby/0e30fdb6992e5e457bfd157a4b59fd93a15cc2fd1de7f0b72e61adb42146e995 -address /run/containerd/containerd.sock -containerd-binary /usr/bin/containerd -runtime-root /var/run/docker/runtime-runc
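In the listing above, each containerd-shim command line carries both the container ID (the last component of the `-workdir` path) and the runtime (the suffix of the `-runtime-root` path). The sketch below uses that to list the containers of one runtime. It assumes the shim command-line layout shown in this article, which newer containerd versions may not use, and `containers_by_runtime` is a hypothetical helper.

```shell
# containers_by_runtime RUNTIME
# Reads `ps -ef` output on stdin and prints the short IDs of containers whose
# containerd-shim was started with -runtime-root .../runtime-RUNTIME.
containers_by_runtime() {
    awk -v rt="$1" '
        /containerd-shim/ {
            id = ""; root = ""
            for (i = 1; i < NF; i++) {
                # The container ID is the last path component of the -workdir argument.
                if ($i == "-workdir")      { n = split($(i + 1), parts, "/"); id = parts[n] }
                if ($i == "-runtime-root") { root = $(i + 1) }
            }
            # Anchor at the end so that "runc" does not also match "runsc".
            if (id != "" && root ~ ("/runtime-" rt "$")) print substr(id, 1, 12)
        }'
}

# Usage:
#   ps -ef | containers_by_runtime runsc
```

Applied to the listing above, the runsc query yields 6d6683fe1bab, the sandboxed container inspected earlier.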

When the container makes a system call, the call is handled by gVisor's virtual kernel. An attempt to compromise the kernel from inside the container therefore hits the virtual kernel and has no impact on the host kernel.