1, Getting started with Kubernetes

1. Evolution of application deployment models

#1. Traditional deployment

Advantages: simple to deploy.

Disadvantages: resource usage boundaries cannot be defined for applications.

#2. Virtualized deployment (multiple virtual machines run on one physical machine, and each virtual machine has its own independent operating system)

Advantages: program environments do not affect each other, which provides a degree of security.

Disadvantages: every virtual machine adds an operating system, which wastes resources.

#3. Container deployment (similar to virtualization, but the operating system is shared)

Advantages: every container has its own file system, and containers can run across platforms.

Disadvantages: containers need orchestration, for example:

1> When a container goes down, how can a replacement container start immediately and take over its work?

2> How can the number of containers be scaled out horizontally when concurrency grows?

#Container orchestration tools

Swarm: Docker's own container orchestration tool.

Mesos: an Apache tool for unified resource management and control, which must be combined with Marathon.

Kubernetes: Google's open source container orchestration tool.

2. Cluster type

Kubernetes clusters are generally divided into two types: one master with multiple nodes, and multiple masters with multiple nodes.

#1. One master, multiple nodes: one Master node and multiple Node nodes. Simple to build, but the Master is a single point of failure. Suitable for test environments.

#2. Multiple masters, multiple nodes: multiple Master nodes and multiple Node nodes. Harder to build, but highly reliable. Suitable for production environments.

3. Installation methods

Official address: https://kubernetes.io/docs/reference/setup-tools/kubeadm/kubeadm/

#Method 1: kubeadm

kubeadm is a K8s deployment tool. It provides kubeadm init and kubeadm join for quickly deploying a Kubernetes cluster.

#Method 2: binary packages

Download the release binary packages from GitHub and manually deploy each component to form a Kubernetes cluster.

kubeadm lowers the deployment threshold, but it hides many details, which makes problems hard to troubleshoot. If you want more control, deploying Kubernetes from binary packages is recommended. Deploying a cluster manually is more work, but you learn a lot about how the components work along the way, which also helps with later maintenance.

4. Difference between Docker Compose and k8s

1. Docker Compose is a single-host container orchestration tool

2. k8s is a container orchestration tool for clusters

5. Kubernetes

What is the relationship between k8s and Docker?

Docker is a container engine, and k8s is a container management platform.

Kubernetes is a portable and extensible open source platform for managing containerized workloads and services, which facilitates both declarative configuration and automation. Kubernetes has a large and rapidly growing ecosystem. Kubernetes services, support, and tools are widely available.

2, Introduction to Kubernetes

1. Kubernetes overview

- Kubernetes, abbreviated k8s, is a leading new distributed architecture solution based on container technology. It is Google's open source container cluster management system, and its design was inspired by Borg, a container management system used inside Google. Building on more than ten years of Google's experience in running container clusters, it provides containerized applications with a complete set of functions such as deployment and operation, resource scheduling, service discovery and dynamic scaling, which greatly simplifies the management of large-scale container clusters.

- Kubernetes is a complete distributed system support platform. It offers complete cluster management capabilities, multi-level security protection and admission mechanisms, multi-tenant application support, transparent service registration and discovery, a built-in intelligent load balancer, powerful fault detection and self-healing, rolling service upgrades and online scaling, an extensible automatic resource scheduling mechanism, and multi-granularity resource quota management.

- In terms of cluster management, Kubernetes divides the machines in a cluster into a Master node and a group of worker nodes (Nodes). The Master node runs a group of processes related to cluster management: kube-apiserver, kube-controller-manager and kube-scheduler. These processes implement management capabilities for the whole cluster, such as resource management, Pod scheduling, elastic scaling, security control, system monitoring and error correction, and they do so fully automatically. Nodes are the worker machines in the cluster and run the actual applications. The smallest running unit that Kubernetes manages on a Node is the Pod. Each Node runs the kubelet and kube-proxy service processes, which are responsible for creating, starting, monitoring, restarting and destroying Pods, and for implementing a software load balancer.

- Kubernetes solves two hard problems of traditional IT systems: service scaling and upgrading. When software is not particularly complex and the peak traffic is not particularly high, deploying a back-end project only requires installing a few simple dependencies on a virtual machine, then compiling and running the project. However, as software grows more complex, a complete back-end service is no longer a single service but consists of multiple services with different responsibilities and functions. The complex topology between services, together with performance requirements that a single machine cannot meet, makes software deployment and operations very complex, so deploying and operating large clusters has become an urgent need.

- The emergence of Kubernetes has not only come to dominate the container orchestration market but has also changed the way operations work. It blurs the boundary between development and operations and makes the DevOps role clearer. Every software engineer can use Kubernetes to define the topology between services, the number of nodes online and the resource usage, and can quickly carry out horizontal scaling, blue-green deployment and other operations that used to be complex.

2. k8s features

#(1) Automatic bin packing

Application containers are deployed automatically based on the resource requirements of the application's runtime environment.

#(2) Self-healing

When a container fails, it is restarted.

When a Node has problems, its containers are redeployed and rescheduled.

When a container fails its health check, it is shut down until it can serve normally again.

#(3) Horizontal scaling

The scale of the application containers can be expanded or reduced with a simple command, through the UI, or based on the usage of resources such as CPU.

#(4) Service discovery

Users do not need an additional service discovery mechanism; Kubernetes itself provides service discovery and load balancing.

#(5) Rolling update

Applications running in containers can be updated all at once or in batches as the application changes.

#(6) Version rollback

Based on the application's deployment history, the application running in the containers can be rolled back immediately to a previous version.

#(7) Secret and configuration management

Secrets and application configuration can be deployed and updated without rebuilding the image, similar to hot deployment.

#(8) Storage orchestration

Storage systems are mounted automatically for applications, which is especially important for data persistence in stateful applications. Storage can come from a local directory, network storage (NFS, Gluster, Ceph, etc.) or public cloud storage services.

#(9) Batch processing

Provides one-off tasks and scheduled tasks, for batch data processing and analysis scenarios.

#(10) Elastic scaling

The number of containers running in the cluster can be adjusted automatically as needed.

#(11) Load balancing

If a service starts multiple containers, requests are load balanced across them automatically.
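The scaling features above can be made concrete with the replica calculation that the Kubernetes documentation gives for the Horizontal Pod Autoscaler: desired replicas = ceil(current replicas * current metric / target metric). The following is a minimal Python sketch of that ratio only. The function name and the sample numbers are assumptions made for illustration, and the real autoscaler additionally applies tolerances, minimum and maximum bounds, and stabilization windows.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float) -> int:
    """Return the replica count suggested by the documented HPA ratio formula.

    Hypothetical helper for illustration; it is not part of any Kubernetes client.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    return math.ceil(current_replicas * current_metric / target_metric)


if __name__ == "__main__":
    # 4 replicas averaging 90% CPU against a 60% target: scale out.
    print(desired_replicas(4, 90.0, 60.0))
    # 4 replicas averaging 20% CPU against a 60% target: scale in.
    print(desired_replicas(4, 20.0, 60.0))
```

Rounding up with `ceil` means the sketch errs on the side of keeping slightly more capacity than the ratio strictly requires.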

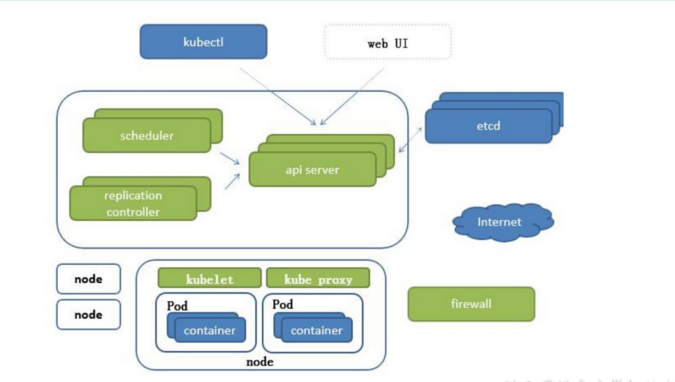

3, Structure

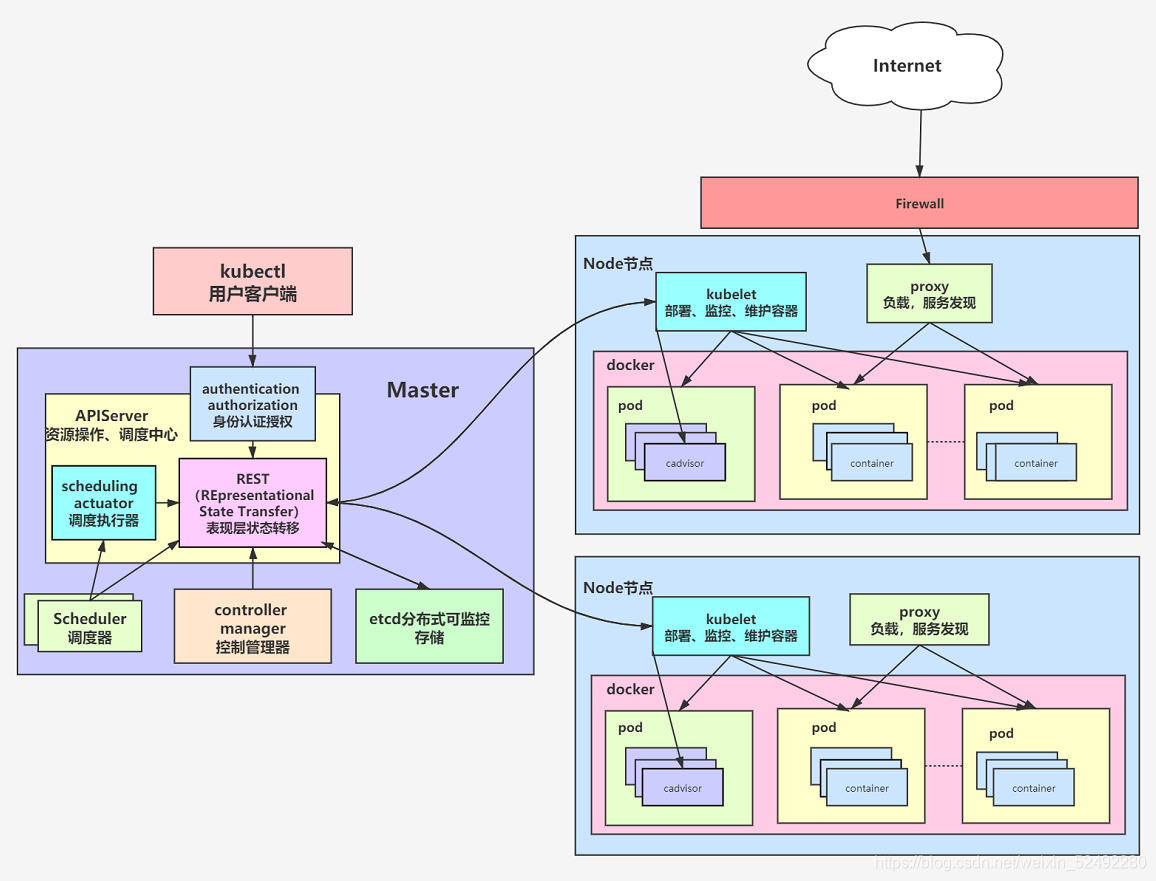

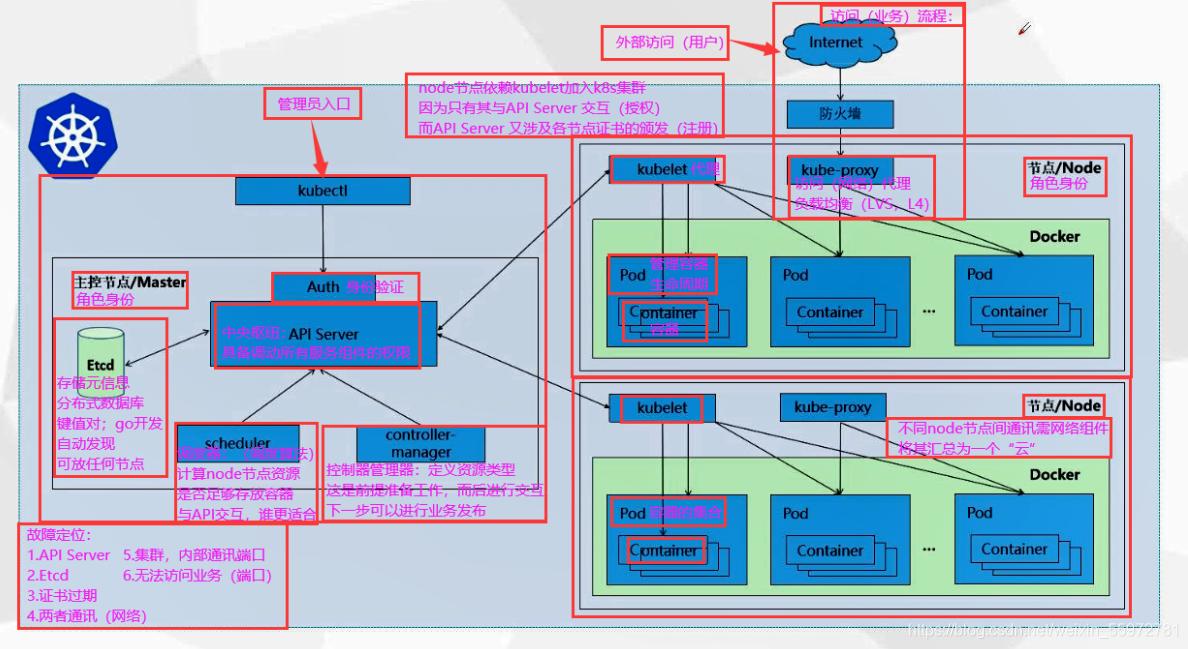

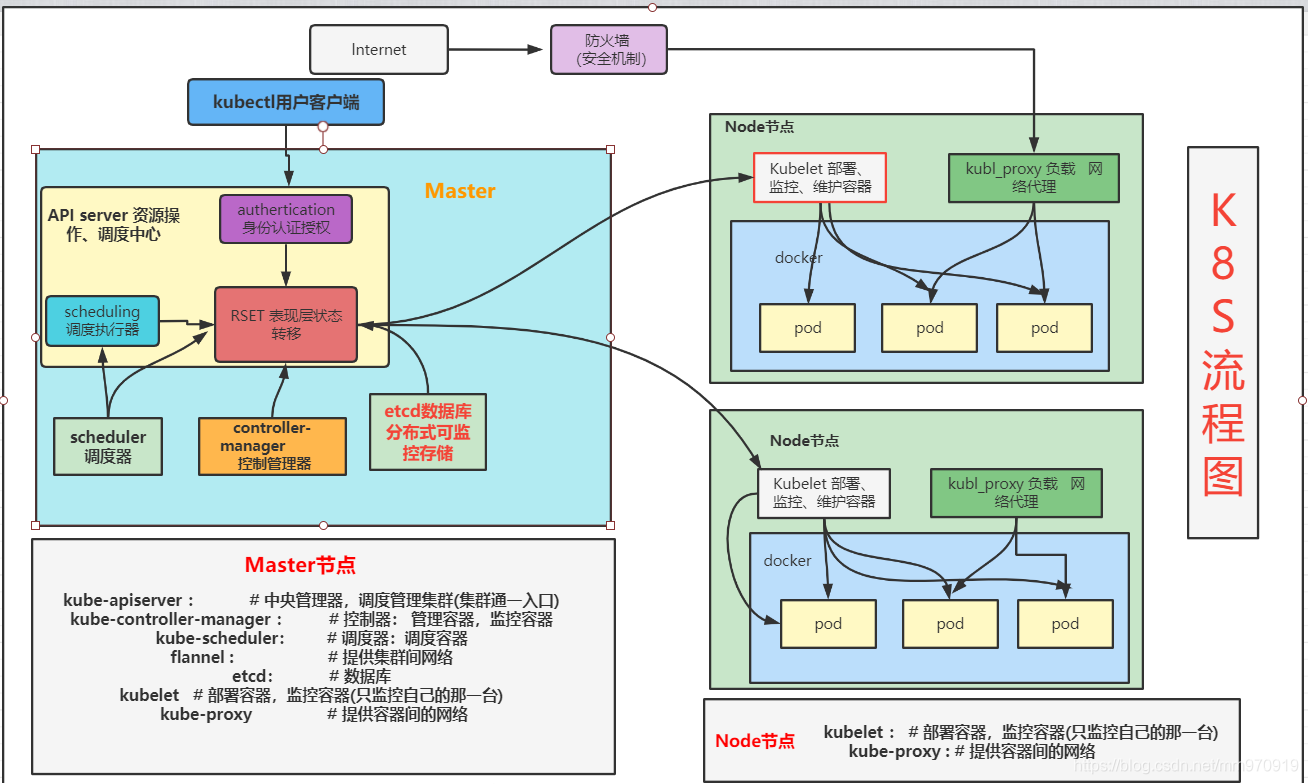

Kubernetes follows the very traditional client-server architecture. The client communicates with the Kubernetes cluster through the RESTful interface or directly with kubectl. In practice there is little difference between the two, because kubectl simply wraps the RESTful API that Kubernetes provides. Each Kubernetes cluster consists of a group of Master nodes and a series of Worker nodes. The Master nodes are mainly responsible for storing the cluster state and for allocating and scheduling resources for Kubernetes objects.

Kubernetes consists of Master (control) nodes and Node (worker) nodes. The components installed on each kind of node are different.

1. Master (mainly used to manage the cluster)

It is mainly responsible for receiving client requests, arranging the execution of containers and running the control loops that move the cluster state toward the target state. The Master node is composed of seven components:

#1. API Server (kube-apiserver: the central manager, which dispatches and manages the cluster)

It handles requests from users. Its main function is to expose a RESTful interface to the outside.

This includes read requests for viewing the cluster state and write requests for changing the cluster state. It is also the only component that communicates with the etcd cluster.
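To illustrate what the RESTful interface looks like, here is a small Python sketch that builds the URL paths used for namespaced resources. The path layout (core resources under `/api/v1`, named API groups under `/apis/<group>/<version>`) follows the Kubernetes API conventions; the helper name `resource_path` and the sample object names are assumptions for illustration, and the sketch only builds strings without contacting a cluster.

```python
from typing import Optional


def resource_path(resource: str, namespace: str, name: Optional[str] = None,
                  group: str = "", version: str = "v1") -> str:
    """Build the URL path of a namespaced resource (hypothetical helper).

    The core group lives under /api/<version>; named groups live under
    /apis/<group>/<version>.
    """
    prefix = f"/api/{version}" if group == "" else f"/apis/{group}/{version}"
    path = f"{prefix}/namespaces/{namespace}/{resource}"
    return path if name is None else f"{path}/{name}"


if __name__ == "__main__":
    # Read request: list the Pods in the "default" namespace.
    print("GET", resource_path("pods", "default"))
    # Write request: replace a Deployment named "web" (name is made up).
    print("PUT", resource_path("deployments", "default", "web", group="apps"))
```

A read request would use GET on such a path, while write requests use verbs such as POST, PUT, PATCH or DELETE.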

#2. Controller (kube-controller-manager: manages and monitors containers)

The controller manager runs a series of controller processes, which continuously adjust the objects in the whole cluster in the background according to the state the user expects.

When the state of a service changes, the controller detects the change and starts moving it toward the target state.

Each kind of resource has a corresponding controller. For example, if a container resource (Pod) goes down while its controller is still alive, the resource is recreated, so the self-healing capability depends on the controllers.

The controller manager is responsible for managing these controllers, including:

1> Node controller (Node Controller): responsible for noticing and responding when a node fails.

2> Replication controller (Replication Controller): responsible for maintaining the correct number of Pods for every replication controller object in the system.

3> Endpoints controller (Endpoints Controller): populates Endpoints objects (that is, joins Services and Pods).

4> Service account and token controllers (Service Account & Token Controllers): create default accounts and API access tokens for new namespaces.
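The control loop idea described above (compare the expected state with the observed state, then act to close the gap) can be sketched in a few lines of Python. This is a toy model under stated assumptions: `ReplicaState` and `reconcile` are hypothetical names, Pods are plain strings, and nothing here talks to a real cluster.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ReplicaState:
    desired: int                                       # expected number of Pods
    running: List[str] = field(default_factory=list)   # observed Pods
    next_id: int = 0                                   # counter for new Pod names


def reconcile(state: ReplicaState) -> List[str]:
    """Make one pass toward the desired replica count and return the actions taken."""
    actions = []
    while len(state.running) < state.desired:
        name = f"pod-{state.next_id}"
        state.next_id += 1
        state.running.append(name)
        actions.append(f"create {name}")
    while len(state.running) > state.desired:
        name = state.running.pop()
        actions.append(f"delete {name}")
    return actions


if __name__ == "__main__":
    state = ReplicaState(desired=3)
    print(reconcile(state))          # initial creation of three Pods
    state.running.remove("pod-1")    # simulate a Pod failure
    print(reconcile(state))          # the loop replaces the missing Pod
    print(reconcile(state))          # nothing to do once the states match
```

Calling `reconcile` repeatedly is harmless once the observed state matches the expected state, which is the property that lets a controller run continuously in the background.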

#3. Scheduler (kube-scheduler: schedules containers)

The scheduler selects the Worker node on which each Pod running in Kubernetes is deployed.

It chooses the node that best satisfies the Pod's requests according to the user's needs, and it runs every time a Pod needs to be scheduled.
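Selecting "the node that best satisfies the request" can be pictured as two steps: filter out nodes that cannot fit the Pod, then score the remaining ones. The Python sketch below illustrates this under simplifying assumptions: the `Node` fields, the `schedule` function and the scoring rule (prefer the node with the most CPU left, then the most memory) are made up for illustration and are not the real kube-scheduler policy.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cpu_millis: int   # unallocated CPU in millicores
    free_mem_mib: int      # unallocated memory in MiB


def schedule(cpu_millis: int, mem_mib: int, nodes: List[Node]) -> Optional[str]:
    """Return the name of the chosen node, or None if no node can fit the Pod."""
    # Filter step: keep only nodes with enough free CPU and memory.
    feasible = [n for n in nodes
                if n.free_cpu_millis >= cpu_millis and n.free_mem_mib >= mem_mib]
    if not feasible:
        return None
    # Score step (assumed toy rule): most CPU left after placement, then most memory.
    best = max(feasible,
               key=lambda n: (n.free_cpu_millis - cpu_millis, n.free_mem_mib - mem_mib))
    return best.name


if __name__ == "__main__":
    cluster = [
        Node("node-a", 500, 1024),
        Node("node-b", 2000, 4096),
        Node("node-c", 1500, 8192),
    ]
    print(schedule(1000, 2048, cluster))
```

A Pod that fits nowhere yields `None`, which corresponds to a Pod that stays unscheduled until resources free up.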

#4. Flannel (providing inter cluster network)

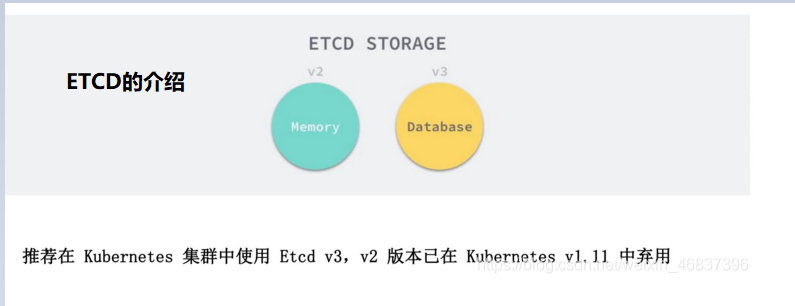

#5. Etcd (database)

A distributed key-value storage system.

It is used to save cluster state data,

such as information about Pods, Services and other objects.
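As a rough picture of how object information can be kept in a key-value store, the Python sketch below stores JSON documents under hierarchical keys and lists them by prefix. `TinyStore` is an in-memory stand-in written for this example, not an etcd client, and the key layout and object contents are illustrative assumptions.

```python
import json
from typing import Dict, List


class TinyStore:
    """In-memory stand-in for a key-value store; it is not an etcd client."""

    def __init__(self) -> None:
        self._data: Dict[str, str] = {}

    def put(self, key: str, value: dict) -> None:
        # Values are serialized, as they would be before being written to a store.
        self._data[key] = json.dumps(value, sort_keys=True)

    def get(self, key: str) -> dict:
        return json.loads(self._data[key])

    def list_prefix(self, prefix: str) -> List[str]:
        # Listing by prefix is how "all Pods in a namespace" style queries work.
        return sorted(k for k in self._data if k.startswith(prefix))


if __name__ == "__main__":
    store = TinyStore()
    # Key names below are illustrative assumptions.
    store.put("/registry/pods/default/web-1", {"phase": "Running"})
    store.put("/registry/pods/default/web-2", {"phase": "Pending"})
    store.put("/registry/services/default/web", {"port": 80})
    print(store.list_prefix("/registry/pods/default/"))
    print(store.get("/registry/pods/default/web-2"))
```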

#6. Kubelet (deployment container, monitoring container)

#7. Kube proxy (providing network between containers)

2. Node (mainly used to deploy applications)

#1. Kubelet (deploys and monitors containers)

kubelet is the main service on a node. It periodically receives new or modified Pod specifications from the API Server and ensures that the Pods on the node and their containers run normally. It also works to move the node toward the target state and reports the health status of the host to the Master node.

kubelet interacts with Docker and drives Docker to create the corresponding containers. kubelet maintains the life cycle of Pods.

#2. Kube proxy (provides networking between containers)

It is responsible for subnet management on the host and for exposing services to the outside. Its principle is to forward requests to the correct Pod or container across multiple isolated networks.

#3. Docker

Responsible for the various container operations on the node.
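The forwarding role of kube-proxy can be pictured as a mapping from a service address to one of several backend Pods. The following Python sketch is a toy model under stated assumptions: `ServiceProxy` is a hypothetical class, the addresses are made up, and round-robin is chosen only to keep the output deterministic. The real kube-proxy programs iptables or IPVS rules in the kernel rather than forwarding traffic itself.

```python
from itertools import cycle
from typing import Dict, Iterator, List


class ServiceProxy:
    """Toy model: map a service address to its backend Pods in round-robin order."""

    def __init__(self, endpoints: Dict[str, List[str]]) -> None:
        # One endless iterator per service, cycling over its Pod endpoints.
        self._backends: Dict[str, Iterator[str]] = {
            service: cycle(pods) for service, pods in endpoints.items()
        }

    def route(self, service_addr: str) -> str:
        """Return the Pod endpoint that should receive the next request."""
        return next(self._backends[service_addr])


if __name__ == "__main__":
    # Service address and Pod endpoints are made-up example values.
    proxy = ServiceProxy({"10.96.0.10:80": ["10.1.15.10:8080", "10.1.20.20:8080"]})
    for _ in range(3):
        print(proxy.route("10.96.0.10:80"))
```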

3.Kubernetes architecture diagram

In the architecture diagram, the services are divided into those that run on the worker nodes and those that make up the cluster-level control plane.

Kubernetes is mainly composed of the following core components:

1> etcd: saves the state of the whole cluster. It is a key-value database that stores all the important information of the K8S cluster (persistence).

2> apiserver: provides the single entry point for operating on resources, and provides mechanisms such as authentication, authorization, access control, and API registration and discovery.

3> controller manager: responsible for maintaining the cluster state, such as fault detection, automatic scaling and rolling updates.

4> scheduler: responsible for resource scheduling. It schedules Pods onto the appropriate machines according to the configured scheduling policy, that is, it accepts tasks and selects suitable nodes for them.

5> kubelet: responsible for maintaining the life cycle of containers, and also for managing volumes (CSI) and networking (CNI). It interacts directly with the container engine to manage the container life cycle.

6> Container runtime: responsible for image management and for actually running Pods and containers (CRI).

7> kube-proxy: responsible for providing service discovery and load balancing for Services inside the cluster. It writes rules to iptables or IPVS to implement service mapping and access.

In addition to the core components, there are some recommended components:

8> kube-dns: provides DNS services for the whole cluster.

9> Ingress Controller: provides external access to services. The built-in Service can only implement a layer-4 proxy; Ingress can implement a layer-7 proxy.

10> Heapster: provides resource monitoring.

11> Dashboard: provides a GUI, giving the K8S cluster a B/S (browser/server) access interface.

12> Fluentd-elasticsearch: provides cluster log collection, storage and query.

13> Federation: provides clusters across availability zones, with unified management of K8S clusters across multiple centers.

14> Prometheus: provides monitoring for the K8S cluster.

15> ELK: provides a unified log analysis platform for the K8S cluster.

4. k8s core

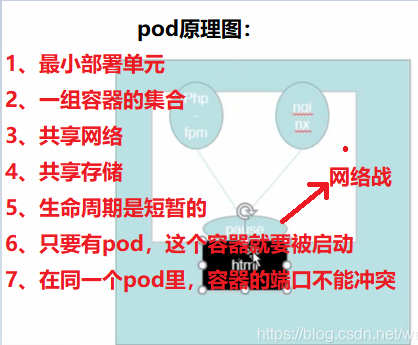

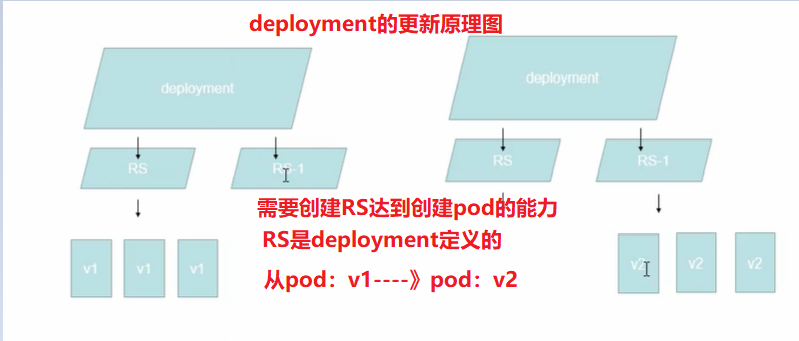

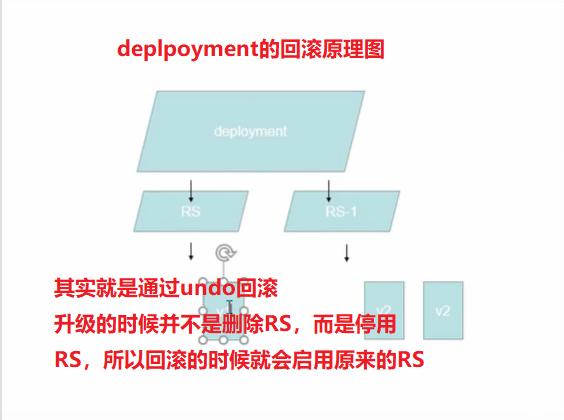

#1. Pod

1> The smallest deployment unit

2> A collection of containers

3> Containers in a Pod share the network

4> Containers in a Pod share storage

5> The life cycle is short

6> Whenever a Pod exists, its containers are started

7> Within the same Pod, the ports of the containers must not conflict

#2. Controller

1> Ensures the expected number of Pod replicas

1) Stateless application deployment: can be used as soon as it starts (for example apache)

2) Stateful application deployment: can only be used in a specific state (for example mysql)

2> Ensures that all Nodes run the same Pod

3> One-off tasks and scheduled tasks

In general, using a Deployment for automatic management is recommended, so there is no need to worry about incompatibility with other mechanisms.

Deployment provides a declarative way to define Pods and ReplicaSets, replacing the earlier ReplicationController and making applications easier to manage. Typical application scenarios include:

* Defining a Deployment to create Pods and a ReplicaSet
* Rolling upgrades and rollbacks of applications
* Scaling out and in
* Pausing and resuming a Deployment

#3. Service (load balancing)

1> Defines the access rules (IP, port) for a group of Pods. A Service collects its Pods through labels.

#4. Label

Used to classify Pods. Pods of the same class have the same label.

#5. Namespace

Used to isolate the runtime environments of Pods.
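How a Service collects its Pods through labels can be sketched as an equality match: a Pod belongs to the Service if its labels contain every key/value pair of the selector. The Python sketch below is illustrative only; `select_pods` is a hypothetical helper, and the Pod names and labels are made up.

```python
from typing import Dict, List


def select_pods(selector: Dict[str, str], pods: Dict[str, Dict[str, str]]) -> List[str]:
    """Return the names of Pods whose labels contain every selector key/value pair."""
    return sorted(
        name for name, labels in pods.items()
        if all(labels.get(key) == value for key, value in selector.items())
    )


if __name__ == "__main__":
    # Pod names and labels are made-up example data.
    pods = {
        "web-1": {"app": "web", "tier": "frontend"},
        "web-2": {"app": "web", "tier": "frontend"},
        "db-1": {"app": "mysql"},
    }
    print(select_pods({"app": "web"}, pods))
    print(select_pods({"app": "web", "tier": "backend"}, pods))
```

Extra labels on a Pod do not prevent a match; only the pairs named in the selector are compared.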

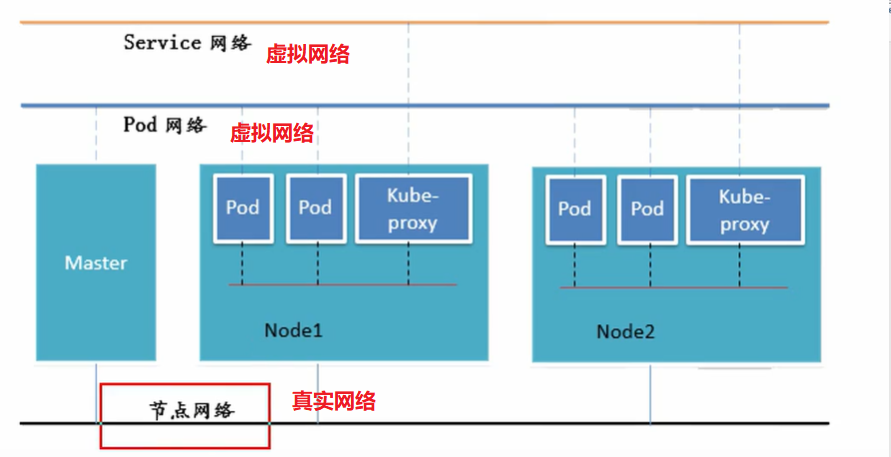

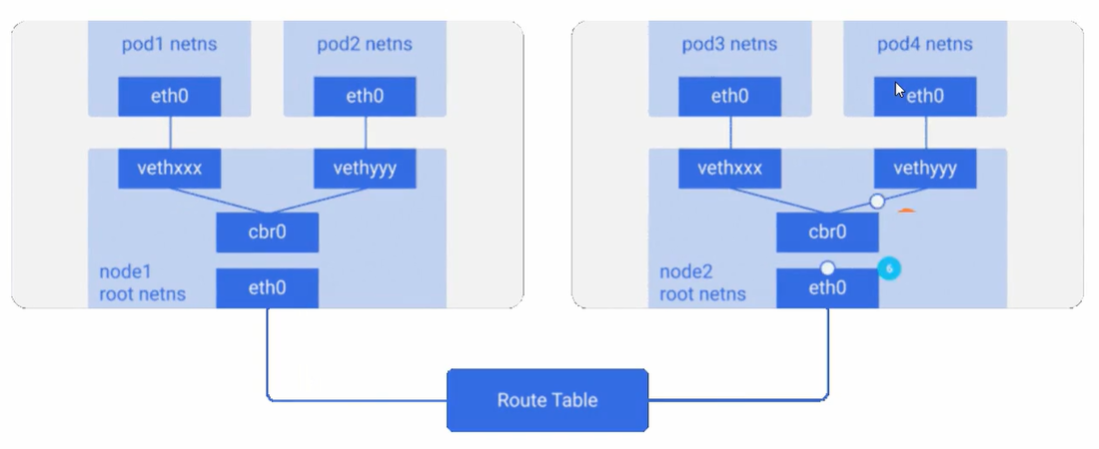

5. Network communication mode (flannel)

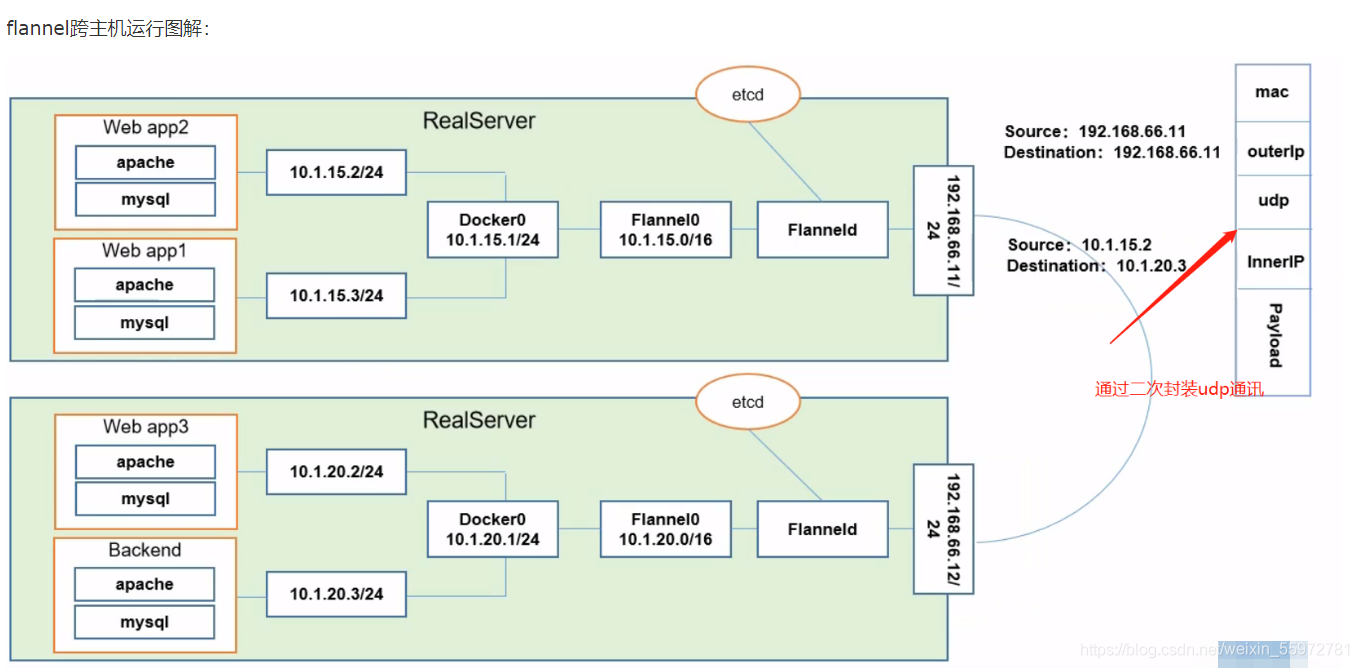

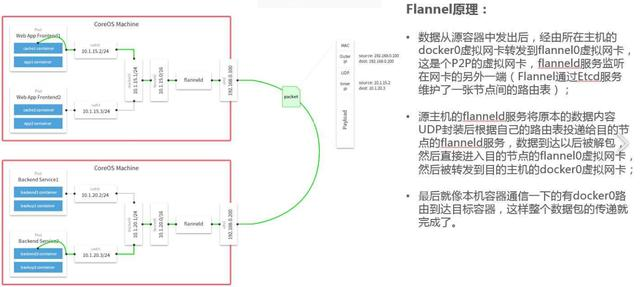

1> The Kubernetes network model assumes that `all Pods are in one flat network space`. This network model is available out of the box in GCE (Google Compute Engine), so k8s assumes that the network already exists.

2> In a k8s cluster built in a private cloud, this flat network does not exist, so you need to build it yourself: first connect the Docker containers on different nodes to each other, and then run k8s.

3> A network solution such as `Flannel` can be used to implement the flat network space.

4> Flannel is a network planning service designed by the CoreOS team for k8s. `It gives the Docker containers created on the different node hosts in the cluster virtual IP addresses that are unique across the whole cluster`, and it builds an overlay network (`Overlay Network`) between these IP addresses, through which data packets are delivered to the target container.
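One way to see how every container can end up with a cluster-wide unique IP is to carve the cluster network into one subnet per host, so that addresses handed out on different hosts can never collide. The Python sketch below shows this with the standard `ipaddress` module. The cluster range 10.1.0.0/16, the /24 size, the host names and the sequential assignment are assumptions for illustration; Flannel itself coordinates subnet leases through its own backing store.

```python
import ipaddress
from typing import Dict, List


def allocate_subnets(cluster_cidr: str, hosts: List[str],
                     new_prefix: int = 24) -> Dict[str, ipaddress.IPv4Network]:
    """Give each host its own non-overlapping subnet of the cluster network."""
    network = ipaddress.ip_network(cluster_cidr)
    subnets = network.subnets(new_prefix=new_prefix)   # generator of /24 blocks
    return {host: next(subnets) for host in hosts}


if __name__ == "__main__":
    allocation = allocate_subnets("10.1.0.0/16", ["host-a", "host-b"])
    for host, subnet in allocation.items():
        # Each host hands out container addresses only from its own subnet.
        print(host, subnet)
```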

[simulate the process of flannel transmitting data]

What etcd provides for Flannel:

It stores and manages the IP address segment resources that Flannel can allocate.

Flannel monitors the actual address of each Pod in etcd, and builds and maintains the Pod-to-node routing table in memory.

#Simulating data transfer over the Flannel network

(The process by which the Docker container 10.1.15.10 on host A sends data to the Docker container 10.1.20.20 on host B)

1> First, the Docker container 10.1.15.10 wants to send data to host B. The routing rules are matched against the IPs of the source and destination containers. The rule that matches both IPs is 10.1.0.0/16; for container access on the same host, the match would be 10.1.15.0 or 10.1.20.0.

2> After leaving the docker0 bridge, the data is delivered to the flannel0 network interface.

3> The Flanneld service, which listens on the flannel0 interface, receives the data, encapsulates it into a packet and sends it to host B.

4> The Flanneld service on host B receives the packet, unpacks it and restores the original data.

5> The Flanneld service sends the data to the flannel0 interface, which matches the routing rule 10.1.20.0/24 (docker0) according to the destination container address.

6> The data is delivered to the docker0 bridge and then enters the target container 10.1.20.20.
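The routing decisions in the steps above follow longest-prefix matching: among all rules that contain the destination, the most specific one wins. The Python sketch below reproduces the lookups with the standard `ipaddress` module. The two route tables are assumptions reconstructed from the addresses in the walkthrough (a /16 rule pointing at flannel0 and a per-host /24 rule pointing at docker0), not output captured from a real host.

```python
import ipaddress
from typing import List, Tuple

Route = Tuple[ipaddress.IPv4Network, str]   # (destination network, outgoing device)

# Assumed route tables, reconstructed from the walkthrough.
HOST_A_ROUTES: List[Route] = [
    (ipaddress.ip_network("10.1.0.0/16"), "flannel0"),
    (ipaddress.ip_network("10.1.15.0/24"), "docker0"),
]
HOST_B_ROUTES: List[Route] = [
    (ipaddress.ip_network("10.1.0.0/16"), "flannel0"),
    (ipaddress.ip_network("10.1.20.0/24"), "docker0"),
]


def lookup(routes: List[Route], destination: str) -> str:
    """Return the device of the most specific route that contains the destination."""
    address = ipaddress.ip_address(destination)
    matches = [(net, dev) for net, dev in routes if address in net]
    if not matches:
        raise LookupError(f"no route to {destination}")
    # Longest prefix wins: /24 beats /16 when both match.
    return max(matches, key=lambda match: match[0].prefixlen)[1]


if __name__ == "__main__":
    print(lookup(HOST_A_ROUTES, "10.1.20.20"))   # leaving host A
    print(lookup(HOST_B_ROUTES, "10.1.20.20"))   # arriving on host B
    print(lookup(HOST_A_ROUTES, "10.1.15.11"))   # same-host traffic on host A
```

On host A only the /16 rule contains 10.1.20.20, so the packet goes to flannel0; on host B the /24 rule is more specific, so the packet is handed to docker0.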

Communication inside the same Pod: containers in the same Pod share the same network namespace and the same Linux protocol stack.

Pod1 to Pod2:

1> Pod1 and Pod2 are not on the same host: a Pod's address is in the same network segment as docker0, but the docker0 segment and the host network interface are two completely different IP segments, and communication between different Nodes can only go through the physical network interface of the host. By associating the IP of a Pod with the IP of the Node it runs on, Pods can reach each other.

2> Pod1 and Pod2 are on the same machine: the docker0 bridge forwards the request directly to Pod2, without going through Flannel.

Pod to Service network: for performance reasons, this is maintained and forwarded entirely by iptables.

Pod to the Internet: the Pod sends a request to the external network and the routing table is looked up, which forwards the packet to the host network interface. After the host network interface completes routing, iptables/lvs performs Masquerade, changing the source IP to the IP of the host network interface, and then sends the request to the Internet server.

Internet to Pod: through a Service.
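The Masquerade step for Pod-to-Internet traffic can be sketched as source address rewriting with a small translation table, so that replies can be mapped back to the Pod. The Python model below is a simplification under stated assumptions: `Packet` and `Masquerader` are hypothetical names, the addresses and the starting port are made up, and real masquerading is done by the kernel's connection tracking rather than by application code.

```python
from dataclasses import dataclass, replace
from typing import Dict, Tuple

Endpoint = Tuple[str, int]   # (IP address, port)


@dataclass(frozen=True)
class Packet:
    src: Endpoint
    dst: Endpoint


class Masquerader:
    """Toy source NAT: rewrite the Pod source address to the host address."""

    def __init__(self, host_ip: str, first_port: int = 40000) -> None:
        self.host_ip = host_ip
        self._next_port = first_port
        self._table: Dict[int, Endpoint] = {}   # host port -> original Pod endpoint

    def outbound(self, packet: Packet) -> Packet:
        port = self._next_port
        self._next_port += 1
        self._table[port] = packet.src           # remember who sent it
        return replace(packet, src=(self.host_ip, port))

    def inbound(self, packet: Packet) -> Packet:
        # Replies arrive at the host port; restore the Pod as the destination.
        return replace(packet, dst=self._table[packet.dst[1]])


if __name__ == "__main__":
    nat = Masquerader("192.168.1.10")            # made-up host interface IP
    request = Packet(src=("10.1.15.10", 5000), dst=("203.0.113.5", 80))
    sent = nat.outbound(request)
    print(sent)
    reply = Packet(src=sent.dst, dst=sent.src)
    print(nat.inbound(reply))
```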