1 What is Apache Beam

Apache Beam is an open-source, unified programming model for big data processing. Beam itself is not an execution engine; instead, it supports various execution platforms, such as Google Cloud Dataflow, Apache Spark, and Apache Flink. You define a batch or streaming job once with Beam, and the same definition can then run on any of these engines.

SDKs are currently available for several languages, including Java, Python, and Go.

1.1 Some basic concepts

PCollection: can be understood as a data set. Data processing consists of transforming one PCollection into another.

PTransform: represents a data processing step. It defines how the data is processed and is applied to a PCollection.

Pipeline: a collection of PTransforms and PCollections. It defines the whole data processing flow from source to target.

Runner: the data processing engine that executes a Pipeline.

The simplest example of a Pipeline is as follows:

Read data from a database into a PCollection, transform it into another PCollection, and then write the result back to the database.

Multiple PTransforms can process the same PCollection:

A PTransform can also produce multiple PCollections:
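As a rough illustration of these shapes, here is a plain-Java analogy in which lists stand in for PCollections and static methods stand in for PTransforms. It deliberately uses only java.util and is not Beam API; the class and method names (PipelineShapes, toUpper, toLengths, splitByLength) are made up for this sketch.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

// Plain-Java analogy only: lists play the role of PCollections and
// static methods play the role of PTransforms. None of this is Beam API.
public class PipelineShapes {

    // Linear shape: one input collection, one transform, one output collection.
    public static List<String> toUpper(List<String> input) {
        return input.stream().map(String::toUpperCase).collect(Collectors.toList());
    }

    // A second, independent transform that can consume the same input collection.
    public static List<Integer> toLengths(List<String> input) {
        return input.stream().map(String::length).collect(Collectors.toList());
    }

    // One transform, two outputs: elements are routed by a predicate.
    // Key true holds strings of length <= 3, key false holds the rest.
    public static Map<Boolean, List<String>> splitByLength(List<String> input) {
        return input.stream().collect(Collectors.partitioningBy(s -> s.length() <= 3));
    }

    public static void main(String[] args) {
        List<String> source = Arrays.asList("beam", "is", "a", "model");

        // Two transforms applied to the same source collection.
        System.out.println(toUpper(source));
        System.out.println(toLengths(source));

        // One transform producing two output collections.
        Map<Boolean, List<String>> parts = splitByLength(source);
        System.out.println(parts.get(true));
        System.out.println(parts.get(false));
    }
}
```

The source list is never modified, which is why two transforms can safely read from it. Beam's PCollections are likewise immutable.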

2 A first look at Java development

Let's develop a WordCount program with the Java SDK.

First add the required dependencies. The version used is 2.32.0, referenced here through the beam.version property:

    <dependency>
        <groupId>org.apache.beam</groupId>
        <artifactId>beam-sdks-java-core</artifactId>
        <version>${beam.version}</version>
    </dependency>
    <dependency>
        <groupId>org.apache.beam</groupId>
        <artifactId>beam-runners-direct-java</artifactId>
        <version>${beam.version}</version>
    </dependency>

Write the Java main program as follows:

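    import java.util.ArrayList;
    import java.util.List;
    import org.apache.beam.sdk.Pipeline;
    import org.apache.beam.sdk.io.TextIO;
    import org.apache.beam.sdk.options.PipelineOptions;
    import org.apache.beam.sdk.options.PipelineOptionsFactory;
    import org.apache.beam.sdk.transforms.Count;
    import org.apache.beam.sdk.transforms.Flatten;
    import org.apache.beam.sdk.transforms.MapElements;
    import org.apache.beam.sdk.transforms.SimpleFunction;
    import org.apache.beam.sdk.values.KV;
    import org.apache.beam.sdk.values.PCollection;

    public class WordCountDirect {
        public static void main(String[] args) {
            // No runner is specified, so the default DirectRunner is used.
            PipelineOptions options = PipelineOptionsFactory.create();
            Pipeline pipeline = Pipeline.create(options);
            // Read the input file; each line becomes one element.
            PCollection<String> lines = pipeline.apply("read from file",
                    TextIO.read().from("pkslow.txt"));
            // Split each line into single characters (not words, despite the variable names).
            PCollection<List<String>> wordList = lines.apply(MapElements.via(new SimpleFunction<String, List<String>>() {
                @Override
                public List<String> apply(String input) {
                    List<String> result = new ArrayList<>();
                    char[] chars = input.toCharArray();
                    for (char c : chars) {
                        result.add(String.valueOf(c));
                    }
                    return result;
                }
            }));
            // Flatten the per-line lists into one collection, then count each distinct element.
            PCollection<String> words = wordList.apply(Flatten.iterables());
            PCollection<KV<String, Long>> wordCount = words.apply(Count.perElement());
            // Format each count as text and write it to the output files.
            wordCount.apply(MapElements.via(new SimpleFunction<KV<String, Long>, String>() {
                @Override
                public String apply(KV<String, Long> count) {
                    return String.format("%s : %s", count.getKey(), count.getValue());
                }
            })).apply(TextIO.write().to("word-count-result"));
            pipeline.run().waitUntilFinish();
        }
    }

Run the program directly. By default it executes on the DirectRunner, which runs locally without setting up a separate execution environment. This makes it very convenient for developing and testing a Pipeline.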

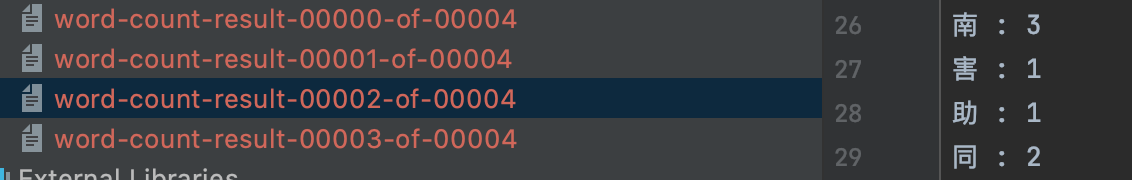

The overall flow of the program is as follows:

It reads all lines from the pkslow.txt file, splits each line into individual characters, counts the number of occurrences of each character, and writes the results to output files whose names start with word-count-result. Note that, despite the name WordCount, what is counted here is characters rather than words.
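To make this flow easier to check by hand, here is a minimal plain-Java sketch of the same logic without Beam. The class name CharCountSketch, its methods, and the sample input are assumptions made for illustration; the real contents of pkslow.txt are not assumed.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Plain-Java mirror of the pipeline's logic. This is not Beam code.
public class CharCountSketch {

    // Mirrors the split, Flatten and Count.perElement() steps:
    // every character of every line becomes one element, then elements are counted.
    public static Map<String, Long> countChars(List<String> lines) {
        Map<String, Long> counts = new TreeMap<>(); // sorted only to make the output readable
        for (String line : lines) {
            for (char c : line.toCharArray()) {
                counts.merge(String.valueOf(c), 1L, Long::sum);
            }
        }
        return counts;
    }

    // Mirrors the final MapElements step, producing "key : count" strings.
    public static List<String> format(Map<String, Long> counts) {
        List<String> out = new ArrayList<>();
        for (Map.Entry<String, Long> e : counts.entrySet()) {
            out.add(String.format("%s : %s", e.getKey(), e.getValue()));
        }
        return out;
    }

    public static void main(String[] args) {
        // Hypothetical input lines standing in for the file contents.
        List<String> lines = Arrays.asList("abca", "bb");
        format(countChars(lines)).forEach(System.out::println);
    }
}
```

For the two sample lines above, this prints "a : 2", "b : 3" and "c : 1". The sketch sorts the keys for readability, whereas a PCollection is unordered, so the order of lines in the Beam output files is not guaranteed. Spaces and punctuation are counted like any other character.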

The contents of the pkslow.txt file are as follows:

The result file after execution is as follows:

3 Summary

After this brief trial, developing with the Beam model turns out to be simple and easy to understand. However, how efficiently it runs on the various platforms still needs further exploration.

The code is available at: https://github.com/LarryDpk/pkslow-samples