Articles Catalogue

- I. Overview of the Basic Principles of Reptiles

- 1. Basic Reptilian Process

- 2. Request and Response

- 3. Request

- 4. Response

- 5. The data types captured

- 6. Analytical Approach

- 7. JavaScript rendering

- 8. Preservation of data

- 2. Reptilian login github

- 1. Send get request to Github login page to get csrf_token

- 2. Send a post request to login Github for cookies

- 3. Visit the page with cookies and get content

- 3. Detailed description of requests module

- Brief description of bs4 module

- V. Polling/Long Polling

- I.

- I.

- I.

- I.

- I.

- I.

- I.

- I.

- I.

- I.

- I.

- I.

- I.

- I.

- I.

- I.

- I.

- I.

- I.

- I.

- I.

- I.

- I.

- I.

- I.

- I.

- I.

- I.

- I.

- I.

- I.

- I.

- I.

- I.

- I.

- I.

- I.

- I.

- I.

- I.

- I.

- I.

- I.

- I.

- I.

- I.

I. Overview of the Basic Principles of Reptiles

1. Basic Reptilian Process

- Initiation of requests

Through the HTTP library, a Request is sent to the target site, which can contain additional headers and other information, waiting for the server to respond. - Getting response content

If the server responds properly, it will get a Response. The content of the Response is the content of the page to be retrieved. The type may be HTML, Json string, binary data (such as pictures and videos). - Analysis of Content

The content may be HTML, which can be parsed with regular expressions and web parsing libraries. It may be Json, which can be directly converted to Json object parsing, or binary data, which can be saved or further processed. - Save data

It can be saved in various forms, such as text, database, or file in a specific format.

2. Request and Response

The browser sends a message to the server where the address is located, a process called HTTP Request. After receiving the message sent by the browser, the server can process the message according to the content of the message sent by the browser, and then send the message back to the browser. This process is called HTTP Response. After the browser receives the server's Respense information, it will process the information accordingly, and then display it.

3. Request

- Request mode

There are two main types of GET and POST, and HEAD, PUT, DELETE, OPTIONS and so on. - Request URL

The full name of the URL is the Unified Resource Locator, such as a web document, a picture, a video, etc. can be uniquely determined by the URL. - Request header

Contains header information when requesting, such as User-Agent, Host, Cookies, etc. - Requestor

Additional data carried on request, such as form data at the time of form submission

4. Response

- Response state

There are many response states, such as 200 for success, 301 jump, 404 for page failure, 502 server error. - Response Head

Such as content type, content length, server information, Cookie settings, etc. - Response volume

The most important part, including the content of the requested resources, such as HTML, image binary data, etc.

5. The data types captured

- Page Text

For example, HTML document, Json format text, etc. - picture

Get the binary file and save it as a picture format. - video

Save the same binary file as video format. - Other data

As long as it can be requested, it can generally be obtained.

6. Analytical Approach

- Direct processing

- Json parsing

- regular expression

- BeautifulSoup

- PyQuery

- Path

7. JavaScript rendering

- Analysis of Ajax requests

- Selenium/WebDriver

- Splash

- PyV8

- Ghost

8. Preservation of data

- text

Plain text, Json, Xml, etc. - Relational database

For example, MySQL, Oracle, SQL Server have structured table structure storage - Non-relational database

Key-Value formal storage such as MongoDB, Redis, etc. - Binary file

Save the pictures, videos, audio and so on directly into a specific format.

2. Reptilian login github

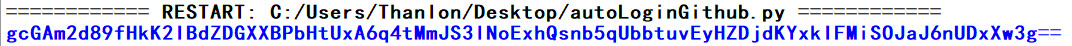

1. Send get request to Github login page to get csrf_token

import requests from bs4 import BeautifulSoup # request login page to get csrfequest resp = requests.get('https://github.com/login') login_bs = BeautifulSoup(resp.text, 'html.parser') token = login_bs.find(name='input', attrs={'name': 'authenticity_token'}).get('value') get_cookies_dict = resp.cookies.get_dict() # get cookies print(token)

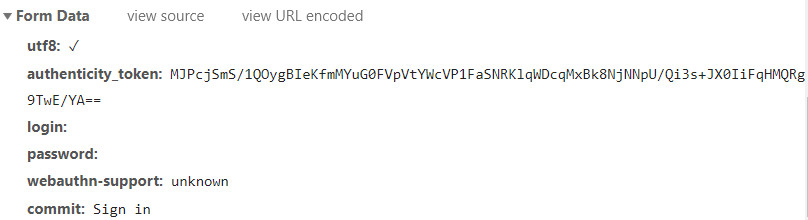

2. Send a post request to login Github for cookies

Get the parameters needed for login.

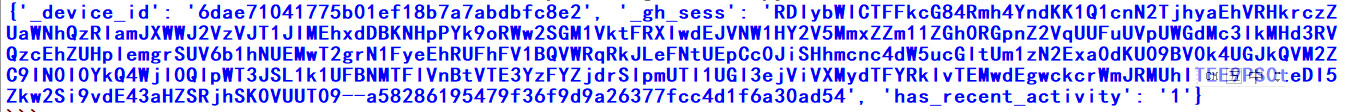

import requests from bs4 import BeautifulSoup # request login page to get csrfequest resp = requests.get('https://github.com/login') login_bs = BeautifulSoup(resp.text, 'html.parser') token = login_bs.find(name='input', attrs={'name': 'authenticity_token'}).get('value') get_cookies_dict = resp.cookies.get_dict() # get cookies # login github with cookies and other parameters,remember save cookies resp2 = requests.post( 'https://github.com/session', data={ 'utf8': '✓', 'authenticity_token': token, 'login': 'username',#your username 'password': 'password',#your password 'webauthn-support': 'unknown', 'commit': 'Sign in', }, cookies=get_cookies_dict ) post_cookie_dict = resp2.cookies.get_dict() # print(get_cookies_dict) # print(post_cookie_dict) cookies_dict = {} cookies_dict.update(get_cookies_dict) cookies_dict.update(post_cookie_dict) print(cookies_dict)

3. Visit the page with cookies and get content

import requests from bs4 import BeautifulSoup # request login page to get csrfequest resp = requests.get('https://github.com/login') login_bs = BeautifulSoup(resp.text, 'html.parser') token = login_bs.find(name='input', attrs={'name': 'authenticity_token'}).get('value') get_cookies_dict = resp.cookies.get_dict() # get cookies # login github with cookies and other parameters,remember save cookies resp2 = requests.post( 'https://github.com/session', data={ 'utf8': '✓', 'authenticity_token': token, 'login': 'username',#your username 'password': 'password',#your password 'webauthn-support': 'unknown', 'commit': 'Sign in', }, cookies=get_cookies_dict ) post_cookie_dict = resp2.cookies.get_dict() # print(get_cookies_dict) # print(post_cookie_dict) cookies_dict = {} cookies_dict.update(get_cookies_dict) cookies_dict.update(post_cookie_dict) # request primary page request_url = 'https://github.com/settings/profile' resp3 = requests.get(url=request_url, cookies=cookies_dict) print(resp3.text)

3. Detailed description of requests module

1. Method of request module

requests.get requests.post requests.put requests.delete ...... requests.request(method='get') requests.request(method='post') ......

2. Parameters of requests module

requests.get( url = 'https://www.blueflags.cn', headers = {}, cookies = {}, parames = {'k1':'v1','k2':'v2'} # https://www.blueflags.cn?k1=v1&k2=v2 ) requests.get( url = '', headers = {}, cookies = {}, parames = {'k1':'v1','k2':'v2'}, # https://www.blueflags.cn?k1=v1&k2=v2 data = {} )

- url

- headers

- cookies

- params

- session

Save all the cookies you visited inside the session, and you don't need to bring cookies with you when you send the request header later.

session = requests.Session() session.get() session.post()

- data: the body of the request

# coding:utf-8 import requests requests.post( # ......, data={'user': 'thanlon', 'pwd': '123456'} ) # Request header: # GET /index http1.1\r\nhost:c1.com\r\n\r\nuser=thanlon&pwd=123456

- json: Pass-on requester

# coding:utf-8 import requests requests.post( # ......, json={'user': 'thanlon', 'pwd': '123456'} ) # Request header: # GET /index http1.1\r\nhost:c1.com\r\nContent-Type:application/json\r\n\r\{'user': 'thanlon', 'pwd': '123456'}

In fact, we did json.domps().

- agent

If your own ip request data is blocked, you can use the proxy to send the data request to the proxy, so that the proxy can help us get the data.

# coding:utf-8 import requests proxies = { 'http': '123.206.74.1:80', 'https': 'http://123.206.74.24:3000' } # If http sends a request to 123.206.74.1:80, 123.206.74.1:80 helps us send a request to the target site. # If it's https, you'll find the agent http://123.206.74.24:3000. ret = requests.get('http://www.blueflags.cn', proxies=proxies)

The specified address uses the specified address:

# coding:utf-8 import requests proxies = { 'http://www.blueflags.cn': 'http://123.206.74.24:3000' } # The specified address uses the specified address ret = requests.get('http://www.blueflags.cn', proxies=proxies)

Authentication Agent:

# coding:utf-8 import requests from requests.auth import HTTPProxyAuth proxies = { 'http': '123.206.74.1:80', 'https': 'http://123.206.74.24:3000' } auth = HTTPProxyAuth('thanlon', 'kiku') # User name and password of the agent required for proxy authentication ret = requests.get('http://www.blueflags.cn', proxies=proxies, auth=auth) # If data is used for the user and password of the target site # ret = requests.get('http://www.blueflags.cn', data={'thanlon', 'kiku'}, proxies=proxies, auth=auth) print(ret.text)

In order to use proxy ip dynamically and randomly in a company, random function can be used.

- File upload (not very common)

# coding:utf-8 ''' //File upload ''' import requests file_dict = { 'f1': open('log.txt', 'rb') } requests.request( method='post',# post at the time of submission url='xxx', files=file_dict )

Custom upload file name (custom file name):

file_dict = { 'f1': ('test.txt',open('log.txt', 'rb')) }

- Authentication auth (not commonly used)

Inside the browser bullet window (router), users and passwords are encrypted and passed to the background in the request header. (1) Splice the username and password into a string "User: Password". 2) Encrypt the string. base64("User: Password") and base64 can be reversed. 3) Splice the string and construct a string "Basic 64" ("User | Password"). 4) Place the string in the request head, Authorization:"b" ASIC base 64 (`user | password')'). These are all browsers.

import requests from requests.auth import HTTPBasicAuth,HTTPDigestAuth#HTTP DigestAuth is digital authentication, and its algorithm is different from basic authentication. result = requests.get('https://api.github.com/user',auth=HTTPBasicAuth('thanlon','xxx')) print(result.text) ''' {"message":"Bad credentials","documentation_url":"https://developer.github.com/v3"} '''

The security mechanism is weak and generally not used. It is not possible to define a token or a request header by oneself. This is a fatal rule.

- timeout: timeout Error Reporting

# coding:utf-8 import requests # ret = requests.get('http://google.com/', timeout=1) # Write a connection timeout # print(ret) ret = requests.get('http://google.com/', timeout=(5, 1)) # One is connection timeout and the other is return timeout print(ret)

- Allow redirects allow_redirects

If the requested address is redirected, the default browser redirects to another address, and the result is available. But if you write allow_redirects = False to indicate no redirection, you get a response header (redirection), with no corresponding body.

# coding:utf-8 import requests ret = requests.get('http://google.com/', allow_redirects=False) # Allowing redirection is True, otherwise Fasle print(ret.text)

- Large file download stream

Using requests, you can download large files, such as video files. If there are 8G videos, send a request that all 8G videos be put into memory, and the video needs to be written from memory to file. If memory does not have 8G, it is likely to go down. If stream = True is used, it is downloaded bit by bit.

# coding:utf-8 import requests from contextlib import closing with closing(requests.get('http://google.com/', stream=True)) as r:#Download bit by bit # Processing response pass for i in r.iter_content():#Take it bit by bit print(i)

If stream = True is not allowed, it will be read into memory at one time.

- Certificate cert

import requests requests.get('http://www.blueflags.cn', cert='xxx/xxx/xxx.pem') # certificate requests.get('http://www.blueflags.cn', cert='xxx/xxx/xxx.pem') # Certificate Key

- Confirm verify

import requests requests.get('http://www.blueflags.cn', cert='xxx/xxx/xxx.pem', verify=False) # certificate requests.get('http://www.blueflags.cn', cert='xxx/xxx/xxx.pem', verify=False) # Certificate Key

You can make verify = False, go ahead without confirmation.

Brief description of bs4 module

Next, add.

V. Polling/Long Polling

1. Polling

Send requests every few seconds.