1, Introduction to Kubernetes

Kubernetes (k8s) is a leading distributed-architecture solution built on container technology. It is Google's open-source container cluster management system, descended from Borg, the system Google uses internally. Google has used container technology for many years, and Borg runs and manages thousands of containerized applications. Building on container engines such as Docker, which has developed rapidly, Kubernetes provides a complete set of features for containerized applications. These include deployment and operation, resource scheduling, service discovery and dynamic scaling, which make large container clusters much easier to manage.

Kubernetes is a complete platform for running distributed systems. It distills the design ideas of Borg and incorporates the lessons learned from operating it. Its capabilities include:

- complete cluster management;
- multi-level security protection and access control;
- multi-tenant application support;
- transparent service registration and discovery;
- a built-in load balancer;
- strong failure detection and self-healing;
- rolling upgrades and online scaling of services;
- an extensible, automatic resource scheduler;
- resource quota management at multiple levels of granularity.

Kubernetes also ships with comprehensive management tooling that covers every stage: development, deployment, testing, and operations monitoring.

• Kubernetes advantages:

It hides resource management and error handling, so users only need to focus on developing their applications. It is lightweight and open source.

The service is highly available, reliable and load balanced.

Workloads can run on clusters of thousands of machines.

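Basic objects:

Pod: the smallest deployable unit. A pod consists of one or more containers that share storage and network and always run together on the same node (Docker host)

Service: an application service abstraction that defines a logical set of pods and the policy for accessing them

Volume: a data volume that the containers in a pod can mount and share

Namespace: a virtual partition of the cluster that isolates groups of resources, for example per team or per environment

Label: a key/value pair attached to objects; selectors use labels to pick out a set of objects, which is how a Service finds its pods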

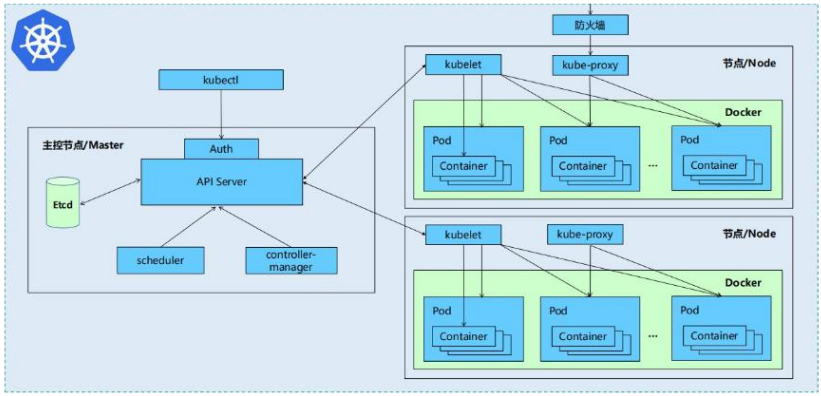
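The Pod, Service and Label objects above fit together as follows. This minimal sketch writes one Pod and one Service that selects it by label. The names `demo-nginx`, `demo-svc`, the label `app=demo` and the `nginx` image are hypothetical examples, not part of this walkthrough.

```shell
#!/bin/sh
# Sketch: write a minimal Pod and a Service that finds it through a label.
# All names (demo-nginx, demo-svc, app=demo) are hypothetical examples.
write_demo_manifests() {
  dir=$1
  mkdir -p "$dir"
  # Pod: one nginx container; the label app=demo is what the Service matches on.
  cat > "$dir/pod.yaml" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: demo-nginx
  labels:
    app: demo
spec:
  containers:
  - name: nginx
    image: nginx
    ports:
    - containerPort: 80
EOF
  # Service: a stable access point that load-balances to every pod labelled app=demo.
  cat > "$dir/service.yaml" <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: demo-svc
spec:
  selector:
    app: demo
  ports:
  - port: 80
    targetPort: 80
EOF
}
# Usage once the cluster below is running:
#   write_demo_manifests ./demo && kubectl apply -f ./demo/
```

The Service never names the Pod directly. Any pod carrying the `app=demo` label joins its back-end set, which is why labels matter so much in Kubernetes.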

2, Kubernetes architecture

Overall architecture:

Kubernetes core components:

• etcd: saves the status of the entire cluster

• apiserver: the single entry point for all resource operations; it also provides authentication, authorization, access control, API registration and discovery, and other mechanisms

• controller manager: maintains the state of the cluster, e.g. failure detection, auto-scaling, rolling updates, etc.

• scheduler: schedules resources, placing pods on suitable machines according to the configured scheduling policies

• kubelet: maintains the lifecycle of containers and manages volumes (CSI) and networking (CNI)

• Container runtime: handles image management and actually runs pods and containers (via the CRI)

• kube-proxy: provides in-cluster service discovery and load balancing for Services
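On a master built with kubeadm, as in the deployment below, four of these components run as static pods: etcd, apiserver, controller manager and scheduler. kubelet starts them from manifest files in `/etc/kubernetes/manifests`. kubelet itself runs as a systemd service and kube-proxy as a DaemonSet, so neither appears there. The sketch below lists the components found in that directory. The directory argument exists only so the function can be tried on a scratch directory.

```shell
#!/bin/sh
# Sketch: list control-plane components that kubelet runs as static pods.
# Assumes a kubeadm-built master; the directory argument is for testing only.
list_control_plane_components() {
  dir=${1:-/etc/kubernetes/manifests}
  for f in "$dir"/*.yaml; do
    [ -e "$f" ] || continue   # the glob matched nothing: no manifests found
    basename "$f" .yaml       # e.g. kube-apiserver.yaml -> kube-apiserver
  done
}
# Usage on the master: list_control_plane_components
```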

Kubernetes cluster:

- A Kubernetes cluster consists of the node agent (kubelet) and the Master components (API server, scheduler, etc.), all built on top of a distributed storage system (etcd).

Master node:

| Component | Function | Characteristics |
|---|---|---|
| APIServer | Exposes the Kubernetes RESTful API to external clients | The unified interface for system management commands. Every addition or deletion of a resource must go through the APIServer, which then persists it to etcd |
| scheduler | Schedules pods onto suitable nodes | Viewed as a black box, its input is a pod plus a list of nodes, and its output is a binding of that pod to one node |
| controller-manager | Runs the controllers that manage resources | If the APIServer does the front-office work, the controller manager handles the back office |
| etcd | A highly available key-value store | Kubernetes stores the state of all resources in it, and the RESTful API is built on that stored state |
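The scheduler row describes the scheduler as a function from (pod, nodes) to one binding. The toy sketch below makes that concrete. It is **not** kube-scheduler's algorithm: the real scheduler runs many filter and score plugins. Here the only filter is "enough free memory" and the only score is "most free memory". The input format, one `<node> <free-MiB>` pair per line, is a hypothetical one chosen for illustration.

```shell
#!/bin/sh
# Toy model of scheduling: (pod memory request, node list on stdin) -> one node.
# Not kube-scheduler's real logic; input "<node> <free-MiB>" lines are hypothetical.
pick_node() {
  awk -v req="$1" '
    BEGIN { best = -1 }
    # Filter: skip nodes that cannot fit the request; score: keep the largest free memory.
    $2 >= req && $2 > best { best = $2; node = $1 }
    # No feasible node: exit non-zero, much like a pod that stays Pending.
    END { if (node == "") exit 1; print node }
  '
}
# Usage: printf 'server3 1500\nserver4 1800\n' | pick_node 1024
```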

Node:

| Component | Function | Characteristics |
|---|---|---|
| kube-proxy | Implements service discovery and reverse proxying in Kubernetes | Forwards TCP and UDP connections to the group of back-end pods behind a Service. The legacy userspace mode forwards round robin, the default iptables mode picks a back-end pod at random, and IPVS mode defaults to round robin. kube-proxy watches the API server for changes to Service and Endpoints objects and maintains the mapping between them, so changes in back-end pod IPs do not affect clients |
| kubelet | Maintains and manages all containers on the node | The Master's agent on each node; it keeps the actual state of pods consistent with the desired state |
| kubeadm | The command used to initialize (bootstrap) the cluster | A setup tool rather than a long-running node service |
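Round-robin forwarding, as used by kube-proxy's IPVS default and legacy userspace mode, sends request *i* to endpoint *i mod N*. The toy sketch below shows only that selection rule. It is not kube-proxy code, and the pod IPs in the usage line are hypothetical.

```shell
#!/bin/sh
# Toy round-robin selector: request i goes to endpoint (i mod N).
# Endpoints are passed as arguments; the IPs in the usage line are hypothetical.
round_robin() {
  requests=$1; shift
  [ "$#" -gt 0 ] || return 1   # no endpoints: nothing to balance across
  i=0
  while [ "$i" -lt "$requests" ]; do
    n=$(( i % $# + 1 ))        # 1-based index of the next endpoint
    eval "ep=\${$n}"           # read positional parameter number n
    echo "request $i -> $ep"
    i=$((i + 1))
  done
}
# Usage: round_robin 4 10.244.1.2 10.244.2.3
```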

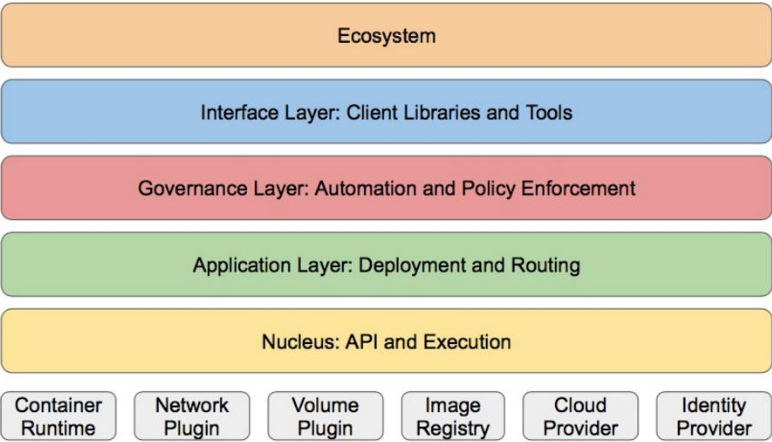

Layered architecture:

• Kubernetes' design and functionality follow a layered architecture, similar to Linux

• core layer: the core functionality of Kubernetes; externally it exposes APIs for building higher-level applications, and internally it provides a pluggable application execution environment

• application layer: deployment (stateless applications, stateful applications, batch jobs, clustered applications, etc.) and routing (service discovery, DNS resolution, etc.)

• management layer: system metrics (such as infrastructure, container, and network metrics), automation (such as auto-scaling and dynamic provisioning), and policy management (RBAC, Quota, PSP, NetworkPolicy, etc.)

• interface layer: the kubectl command-line tool, client SDKs, and cluster federation

• ecosystem: the large container cluster management and scheduling ecosystem built on top of the interface layer, which falls into two categories:

• Kubernetes external: logging, monitoring, configuration management, CI/CD, workflow, FaaS, OTS applications, ChatOps, etc.

• Kubernetes internal: CRI, CNI, CSI, image registries, Cloud Provider, cluster configuration and management, etc.

2, Kubernetes deployment

2.1. Environment preparation

| Host (IP) | Role | Minimum resources |
|---|---|---|
| server1 (172.25.6.1) | Image registry (Harbor, reg.westos.org) | 2 GB memory |
| server2 (172.25.6.2) | Master node | 2 CPU cores, 2 GB memory |
| server3 (172.25.6.3) | Worker node | 2 CPU cores, 2 GB memory |
| server4 (172.25.6.4) | Worker node | 2 CPU cores, 2 GB memory |

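2.2. Change the Docker cgroup driver

kubelet and the container runtime must use the same cgroup driver, and kubeadm recommends systemd. Switch Docker from its default (cgroupfs) to systemd:

[root@server2 docker]# vim daemon.json ##in /etc/docker; repeat on server3 and server4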

{

"registry-mirrors" : ["https://reg.westos.org"],

"exec-opts": ["native.cgroupdriver=systemd"]

}

[root@server2 docker]# systemctl daemon-reload

[root@server2 docker]# systemctl restart docker.service

[root@server2 docker]# docker info ##Cgroup Driver should now show systemd; check server3 and server4 the same way
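To apply the same change on all three Docker hosts without an interactive editor, you can script it. In this minimal sketch, `DOCKER_CONF_DIR` is a hypothetical override so the function can be tried on a scratch directory; on a real host it defaults to `/etc/docker`. The `{{.CgroupDriver}}` field used in `cgroup_driver` is part of `docker info --format`.

```shell
#!/bin/sh
# Sketch: write daemon.json with the systemd cgroup driver, keeping a backup.
# DOCKER_CONF_DIR is a hypothetical override for testing; default /etc/docker.
write_daemon_json() {
  conf_dir=${DOCKER_CONF_DIR:-/etc/docker}
  mkdir -p "$conf_dir"
  # Back up any existing file before overwriting it.
  [ -f "$conf_dir/daemon.json" ] && cp "$conf_dir/daemon.json" "$conf_dir/daemon.json.bak"
  cat > "$conf_dir/daemon.json" <<'EOF'
{
  "registry-mirrors": ["https://reg.westos.org"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
}

# After restarting Docker this should print "systemd".
cgroup_driver() {
  docker info --format '{{.CgroupDriver}}'
}
```

Usage on each host: `write_daemon_json && systemctl daemon-reload && systemctl restart docker.service && cgroup_driver`.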

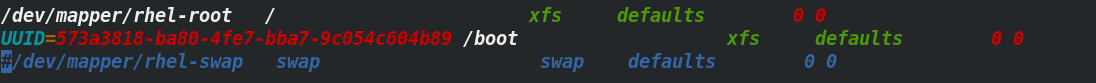

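2.3. Disable swap

By default kubelet refuses to start while swap is enabled, so turn swap off on every node:

[root@server2 ~]# swapoff -a

[root@server2 ~]# vim /etc/fstab ##comment out the swap entry so swap stays off after a reboot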

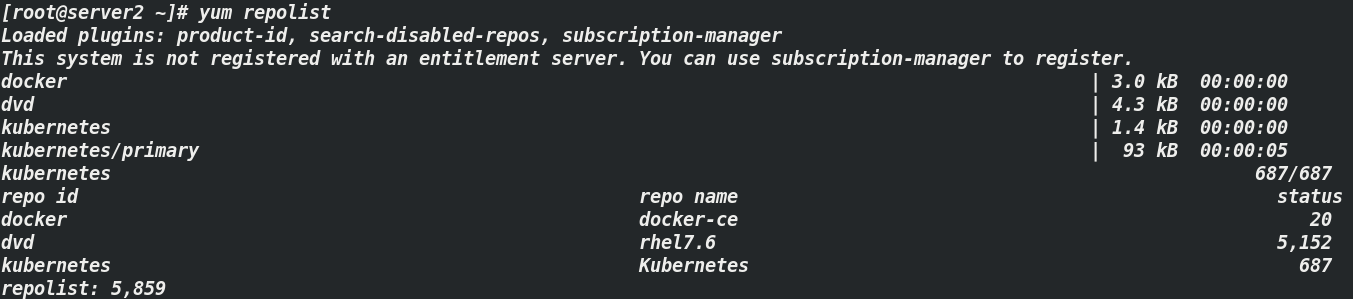
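The `/etc/fstab` edit can also be done non-interactively. This sketch comments out every active line whose third field (the filesystem type) is `swap`, after saving a `.bak` copy. The file argument exists only so the function can be tried on a copy first.

```shell
#!/bin/sh
# Sketch: comment out active swap entries in fstab, keeping a .bak copy.
# The file argument is for trying it on a copy; it defaults to /etc/fstab.
disable_swap_in_fstab() {
  fstab=${1:-/etc/fstab}
  cp "$fstab" "$fstab.bak"
  # Prefix "#" to uncommented lines whose filesystem-type field ($3) is "swap".
  awk '!/^[ \t]*#/ && $3 == "swap" { print "#" $0; next } { print }' "$fstab.bak" > "$fstab"
}
# Usage as root: swapoff -a && disable_swap_in_fstab
```

Matching on the third field rather than on the word "swap" anywhere in the line avoids touching unrelated entries, such as a root volume whose device name happens to contain "swap".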

2.4. Install the deployment tool kubeadm

2.4.1. Configure the Aliyun Kubernetes yum repository

[root@server2 yum.repos.d]# vim k8s.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

Note: create the same repository file on server3 and server4.

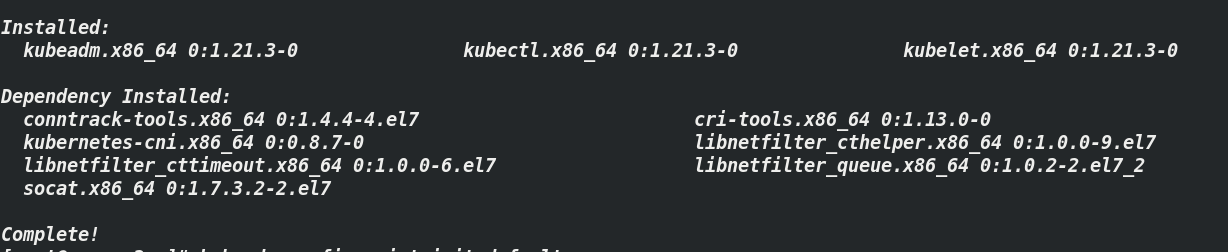
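Because the same repository file is needed on three hosts, writing it with a heredoc is easier to repeat than editing it in vim. In this sketch, `REPO_DIR` is a hypothetical override for testing; it defaults to `/etc/yum.repos.d`. The file contents are exactly those shown above.

```shell
#!/bin/sh
# Sketch: create the Aliyun Kubernetes repo file non-interactively.
# REPO_DIR is a hypothetical override for testing; default /etc/yum.repos.d.
write_k8s_repo() {
  repo_dir=${REPO_DIR:-/etc/yum.repos.d}
  cat > "$repo_dir/k8s.repo" <<'EOF'
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=0
EOF
}
# Then copy it to the workers, e.g.:
#   scp /etc/yum.repos.d/k8s.repo server3:/etc/yum.repos.d/
#   scp /etc/yum.repos.d/k8s.repo server4:/etc/yum.repos.d/
```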

2.4.2. Installation of related components

Note: kubeadm: the command used to initialize the cluster

kubelet: starts pods, containers, etc. on every node in the cluster

kubectl: the command-line tool for communicating with the cluster

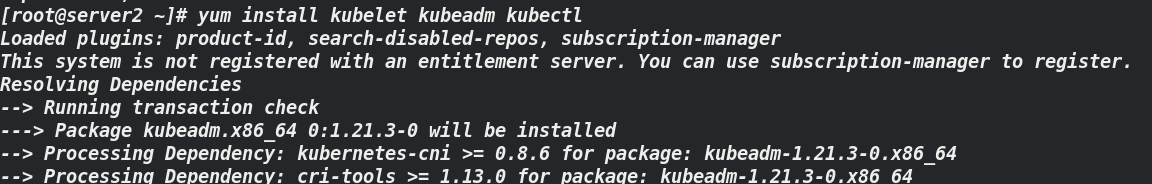

[root@server2 ~]# yum install kubelet kubeadm kubectl ##repeat on server3 and server4

[root@server2 ~]# kubeadm config print init-defaults ##View the default configuration

[root@server2 ~]# systemctl enable kubelet ##start kubelet at boot

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

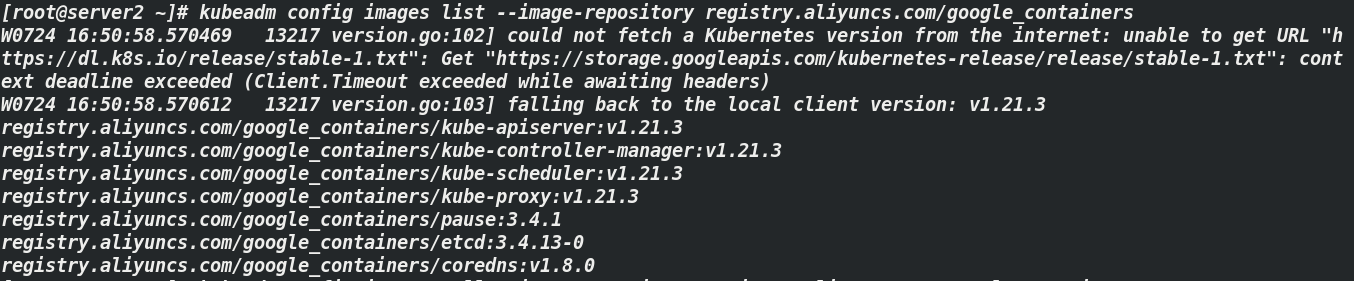

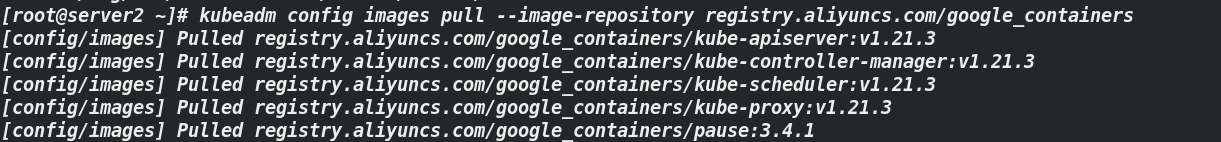

2.4.2. Mirror pull

Pull the image on the master side (server2). The default is from k8s gcr. The component image is downloaded from Io, so the image warehouse needs to be modified

[root@server2 ~]# kubeadm config images list --image-repository registry.aliyuncs.com/google_containers ##List mirrors

[root@server2 ~]# kubeadm config images pull --image-repository registry.aliyuncs.com/google_containers ##Pull image

: because the coredns image cannot be downloaded using the above command, the following:

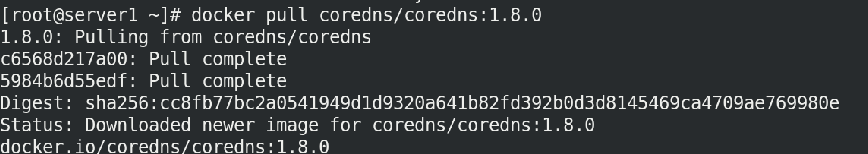

[root@server1 ~]# docker pull coredns/coredns:1.8.0

2.4.3. Image upload

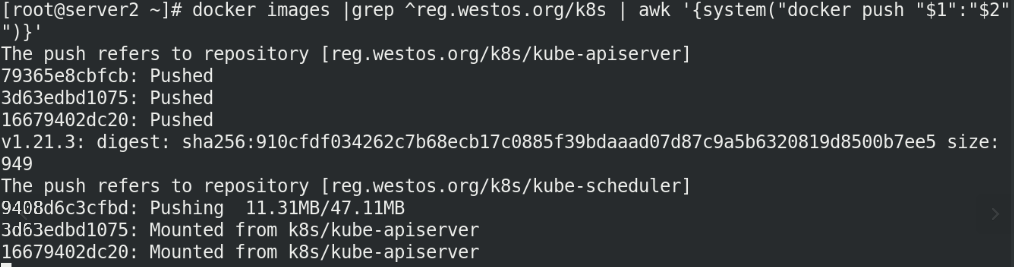

[root@server2 ~]# docker images | grep ^reg.westos.org/k8s | awk '{system("docker push "$1":"$2"")}'

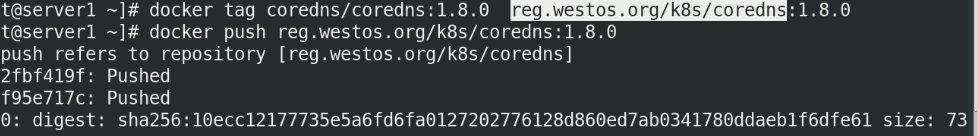

[root@server1 ~]# docker tag coredns/coredns:1.8.0 reg.westos.org/k8s/coredns:1.8.0 [root@server1 ~]# docker push reg.westos.org/k8s/coredns:1.8.0
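The retag-and-push step can be scripted from the list kubeadm prints. This minimal sketch rewrites the `registry.aliyuncs.com/google_containers` prefix to `reg.westos.org/k8s`, both taken from this walkthrough, and then tags and pushes each image. With the hypothetical `DRY_RUN=1` switch, it only prints the docker commands. coredns still needs the separate handling shown above.

```shell
#!/bin/sh
# Sketch: retag kubeadm's images for the private registry and push them.
# DRY_RUN=1 (a hypothetical switch) only prints the docker commands.
SRC_REPO=registry.aliyuncs.com/google_containers
DST_REPO=reg.westos.org/k8s

retarget_image() {
  # Replace the source repository prefix with the destination one.
  echo "$1" | sed "s#^$SRC_REPO/#$DST_REPO/#"
}

mirror_images() {
  for img in "$@"; do
    new=$(retarget_image "$img")
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "docker tag $img $new"
      echo "docker push $new"
    else
      docker tag "$img" "$new" && docker push "$new"
    fi
  done
}
# Usage on server2:
#   mirror_images $(kubeadm config images list --image-repository "$SRC_REPO")
```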

2.5. Initialize the cluster

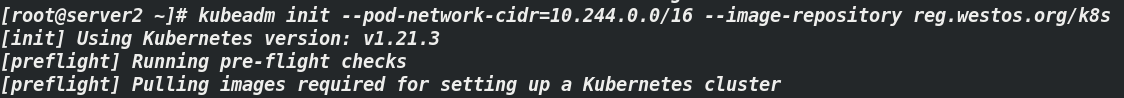

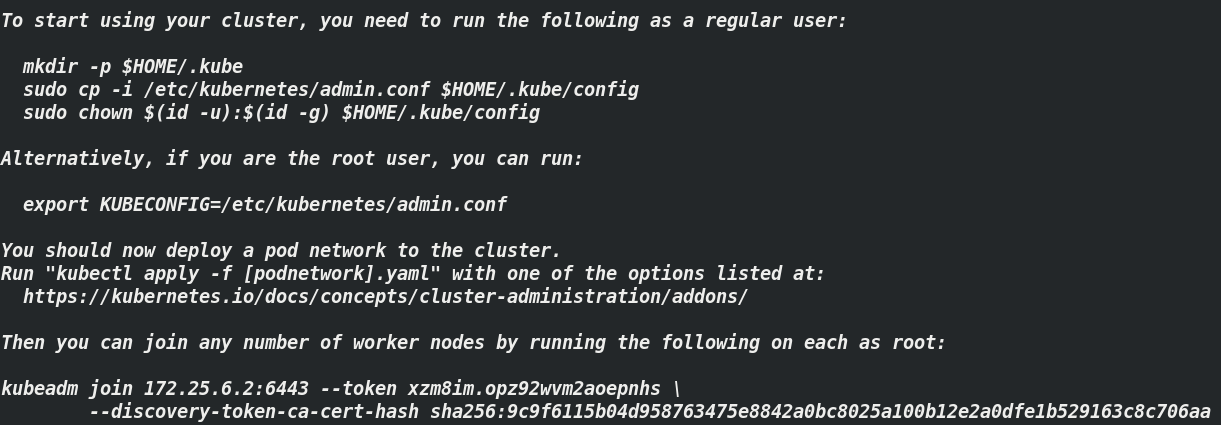

[root@server2 ~]# kubeadm init --pod-network-cidr=10.244.0.0/16 --image-repository reg.westos.org/k8s ## note: k8s is a new project in the reg.westos.org registry and must be set to public; 10.244.0.0/16 matches flannel's default network

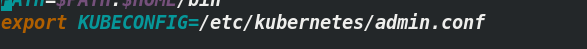
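The same two init settings can also live in a kubeadm configuration file, which is easier to keep under version control. This is a sketch under an assumption: a v1.21-era kubeadm that accepts `apiVersion: kubeadm.k8s.io/v1beta2`. Newer releases use v1beta3 or later, so compare with `kubeadm config print init-defaults` on your version first.

```shell
#!/bin/sh
# Sketch: the kubeadm init flags above expressed as a config file.
# Assumption: kubeadm accepts kubeadm.k8s.io/v1beta2 (v1.21-era); check your version.
write_kubeadm_config() {
  cat > "${1:-kubeadm-config.yaml}" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
imageRepository: reg.westos.org/k8s
networking:
  podSubnet: 10.244.0.0/16
EOF
}
# Usage on server2:
#   write_kubeadm_config && kubeadm init --config kubeadm-config.yaml
```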

2.5.1. Add environment variable

[root@server2 ~]# vim .bash_profile ##add the line below; the kubeadm init output in the previous screenshot shows the same step

export KUBECONFIG=/etc/kubernetes/admin.conf

[root@server2 ~]# source .bash_profile
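If this step is scripted, re-running it should not append the export twice. This sketch adds the line only when an identical line is not already present. The profile-path argument exists for illustration and testing; the walkthrough uses `~/.bash_profile` as root.

```shell
#!/bin/sh
# Sketch: idempotently add the KUBECONFIG export to a shell profile.
# The profile argument is for testing; default is $HOME/.bash_profile.
add_kubeconfig_export() {
  profile=${1:-$HOME/.bash_profile}
  line='export KUBECONFIG=/etc/kubernetes/admin.conf'
  # -x: whole-line match, -F: fixed string; append only if the line is missing.
  grep -qxF "$line" "$profile" 2>/dev/null || echo "$line" >> "$profile"
}
# Usage as root: add_kubeconfig_export && . ~/.bash_profile
```

For a non-root user, `kubeadm init` instead suggests copying the file: `mkdir -p $HOME/.kube`, `cp -i /etc/kubernetes/admin.conf $HOME/.kube/config`, `chown $(id -u):$(id -g) $HOME/.kube/config`.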

2.5.2. Install the flannel network add-on

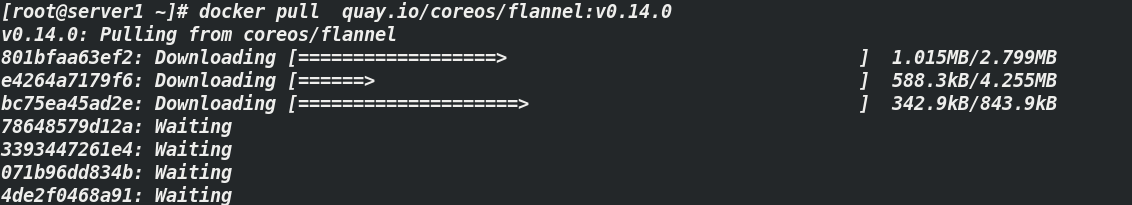

2.5.2.1. Image download and upload

Installing flannel requires the flannel image, so pull it on server1 and push it to the private registry:

[root@server1 ~]# docker pull quay.io/coreos/flannel:v0.14.0

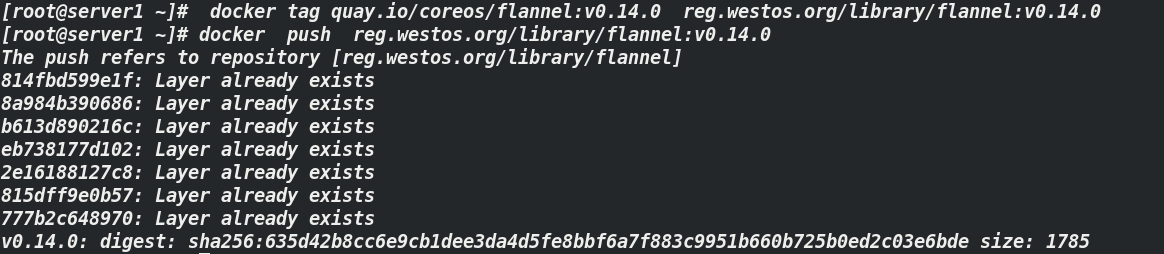

[root@server1 ~]# docker tag quay.io/coreos/flannel:v0.14.0 reg.westos.org/library/flannel:v0.14.0

[root@server1 ~]# docker push reg.westos.org/library/flannel:v0.14.0

2.5.2.2. Manifest download

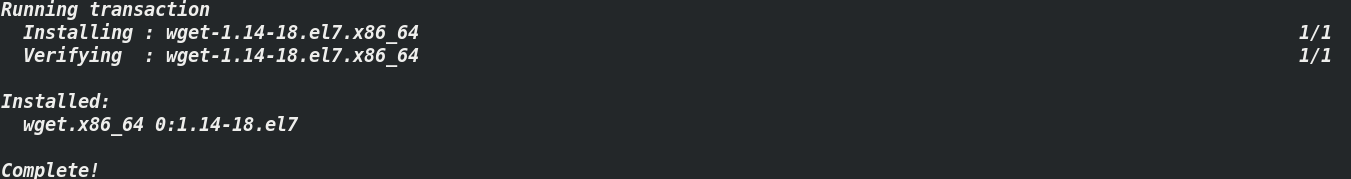

[root@server2 ~]# yum install wget -y ##install wget to download the manifest

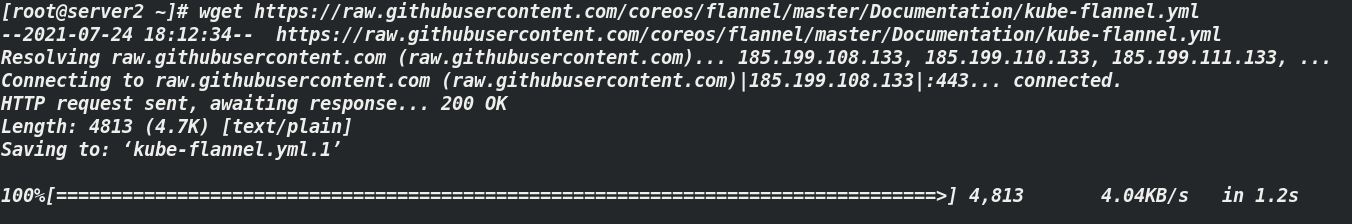

[root@server2 ~]# wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml ##download the flannel manifest kube-flannel.yml

[root@server2 ~]# vim kube-flannel.yml ##edit the image entries so their version matches the v0.14.0 image pushed above

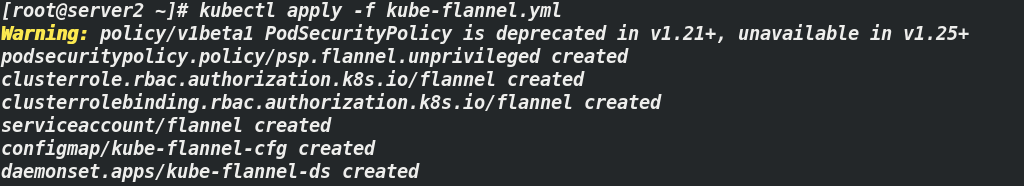
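The manual edit can also be done with `sed`. This sketch assumes the downloaded manifest references images as `quay.io/coreos/flannel:<tag>`. It rewrites each such reference to the copy pushed earlier, `reg.westos.org/library/flannel:v0.14.0`. It also checks that the manifest's `"Network"` setting matches the `--pod-network-cidr` passed to `kubeadm init`. Grep the file before and after editing to confirm the assumption holds for your copy.

```shell
#!/bin/sh
# Sketch: point flannel's image references at the private registry copy.
# Assumption: the manifest uses quay.io/coreos/flannel:<tag>; a .bak copy is kept.
retarget_flannel_images() {
  manifest=${1:-kube-flannel.yml}
  sed -i.bak 's#quay\.io/coreos/flannel:[^[:space:]]*#reg.westos.org/library/flannel:v0.14.0#g' "$manifest"
}

# Succeeds if the manifest's net-conf "Network" equals the given pod CIDR.
pod_cidr_matches() {
  grep -qF "\"Network\": \"$2\"" "$1"
}
# Usage: retarget_flannel_images && pod_cidr_matches kube-flannel.yml 10.244.0.0/16
```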

[root@server2 ~]# kubectl apply -f kube-flannel.yml ##apply kube-flannel.yml

2.6. Add nodes to the cluster

2.6.1. Join the worker nodes

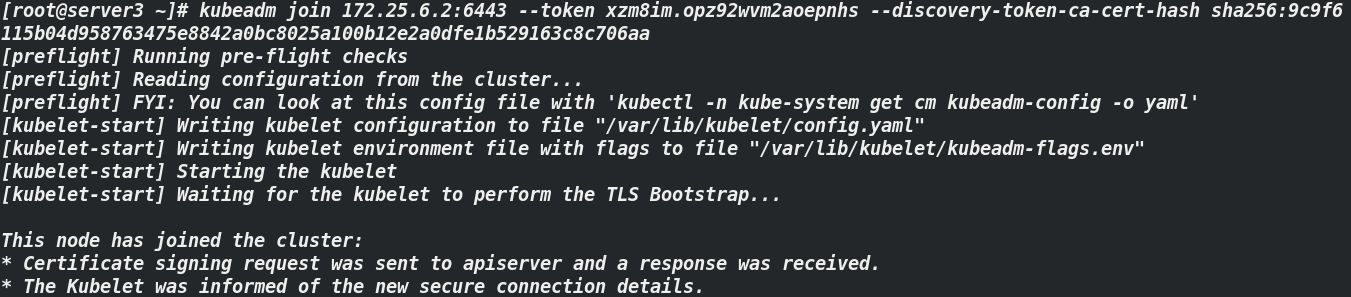

The join token and CA certificate hash used by the worker nodes are generated automatically when the initialization command runs. They are printed at the end of its output (see the initialization screenshot).

[root@server3 ~]# kubeadm join 172.25.6.2:6443 --token xzm8im.opz92wvm2aoepnhs --discovery-token-ca-cert-hash sha256:9c9f6115b04d958763475e8842a0bc8025a100b12e2a0dfe1b529163c8c706aa

[root@server4 ~]# kubeadm join 172.25.6.2:6443 --token xzm8im.opz92wvm2aoepnhs --discovery-token-ca-cert-hash sha256:9c9f6115b04d958763475e8842a0bc8025a100b12e2a0dfe1b529163c8c706aa
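By default, the token printed by `kubeadm init` expires after 24 hours. `kubeadm token create --print-join-command` prints a fresh, complete join command. This sketch runs that command on several workers over SSH. Root SSH access from the master to each node is an assumption, and `DRY_RUN=1` is a hypothetical switch that only prints the SSH commands.

```shell
#!/bin/sh
# Sketch: run one join command on several worker nodes over SSH.
# Assumes root SSH access to each node; DRY_RUN=1 only prints the commands.
join_workers() {
  join_cmd=$1; shift
  for node in "$@"; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "ssh root@$node $join_cmd"
    else
      ssh "root@$node" "$join_cmd"
    fi
  done
}
# Usage on server2:
#   join_workers "$(kubeadm token create --print-join-command)" server3 server4
```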

2.6.2. Testing

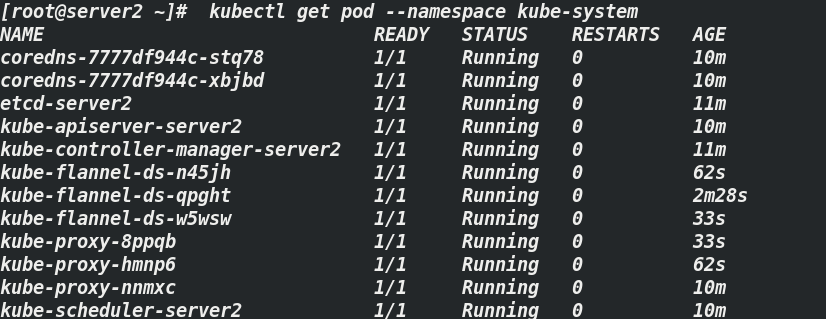

[root@server2 ~]# kubectl get pod --namespace kube-system #Check the status of the pods in the kube-system namespace

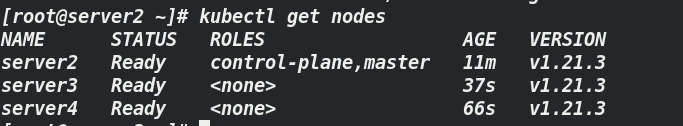

[root@server2 ~]# kubectl get nodes #View node status

Note: when the nodes report Ready and the kube-system pods report Running, as in the figures above, the deployment is complete.
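To check readiness in a script rather than by eye, this sketch reads the output of `kubectl get nodes --no-headers` from stdin. It succeeds only if at least one node is listed and every STATUS (column 2) is exactly `Ready`. Reading from stdin keeps the check testable without a cluster.

```shell
#!/bin/sh
# Sketch: succeed only if every node in "kubectl get nodes --no-headers" is Ready.
all_nodes_ready() {
  # Column 2 is STATUS; anything other than "Ready" (e.g. NotReady) fails,
  # and an empty listing fails too.
  awk 'NF { n++; if ($2 != "Ready") bad = 1 } END { exit (n == 0 || bad) }'
}
# Usage on server2:
#   kubectl get nodes --no-headers | all_nodes_ready && echo "all nodes Ready"
# Or let kubectl block until the condition holds:
#   kubectl wait --for=condition=Ready nodes --all --timeout=300s
```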