The author's official account is welcome to exchange.

1 background

MySQL Group Replication (MGR) is a highly available and highly scalable solution officially launched by MySQL on December 12, 2016. It provides highly available, highly scalable and highly reliable MySQL Cluster Services with the following characteristics:

- Strong consistency: Based on native replication and paxos protocol, ensure the consistency and atomicity of data transmission

- High fault tolerance: as long as most nodes do not fail, the cluster can provide external services

- High scalability: the addition and removal of nodes are automatic without excessive human intervention

- High flexibility: it has single master mode and multi master mode. The single master mode automatically selects the master after the Master goes down, and the multi master mode and multi node write

Unless otherwise specified, the MySQL database version is 8.0.27

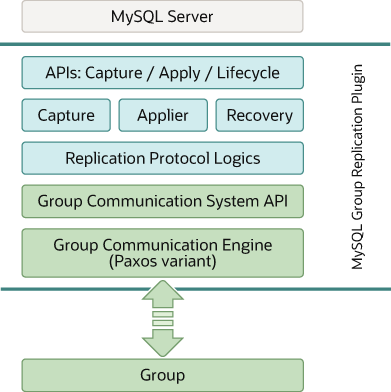

2 sets of replication plug-in architecture

MySQL MGR is a MySQL plug-in built on the existing MySQL replication infrastructure. The MGR plug-in includes a set of API s for Capture, Apply and Lifecycle to control how the plug-in interacts with the MySQL server. Through interfaces, information can flow between the server and plug-ins, and these interfaces isolate the MySQL server core from the group replication plug-ins. From the server to the plug-in, there will be notifications of events such as server startup, server recovery, server ready to accept connection and server about to commit transactions. In the other direction, that is, from the plug-in to the server, the plug-in instructs the server to commit or terminate ongoing transactions, or queue transactions in the Relaylog.

The next layer is a set of components, including Capture, Applier and Recovery. When notifications are routed to these components, corresponding responses will be made. Among them, the Capture component is responsible for tracking the context information of the executing transaction, the Applier component is responsible for executing remote transactions on the database, and the Recover component manages distributed Recovery.

Moving on, the replication protocol module contains the specific logic of the replication protocol. It handles conflict detection, receives transactions and propagates them to groups.

The last two layers are the group communication system (GCS) API and the implementation of Paxos based group communication engine.

3 configuration requirements and limitations

The use of group replication needs to meet the following requirements:

- InnoDB storage engine

- Each table must have an explicit primary key

- Network performance requires low latency and high bandwidth

- server_id must be unique

- Turn on GTID

- RC is recommended for transaction isolation level

- Foreign key cascading constraints are not recommended

- The maximum number of group members is 9

4 single master mode deployment

4.1 deployment environment

| Hostname | IP | Server ID | Port |

| node1 | 192.168.56.201 | 201 | 3306 |

| node2 | 192.168.56.202 | 202 | 3306 |

| node3 | 192.168.56.203 | 203 | 3306 |

4.2 installation and deployment

4.2.1 installing MySQL database software

1) Add the following configuration in the / etc/hosts files of nodes node1, node2 and node3 respectively:

192.168.56.201 node1 node1.com.cn 192.168.56.202 node2 node2.com.cn 192.168.56.203 node3 node3.com.cn

2) Turn off the firewall on nodes node1, node2 and node3 respectively:

View firewall status:

systemctl status firewalld

Turn off the firewall:

systemctl stop firewalld

Disable firewall startup:

systemctl disable firewalld

3) Install MySQL database, refer to[ MySQL 8.0 database installation and deployment ]Install MySQL database software on nodes node1, node2 and node3 respectively.

4.2.2 installing the master node node1

1) Copy the configuration group in the original configuration file / etc / my The following configuration information is added to CNF:

disabled_storage_engines = "MyISAM,BLACKHOLE,FEDERATED,ARCHIVE,MEMORY" gtid_mode = ON enforce_gtid_consistency = ON binlog_checksum = CRC32 plugin_load_add = 'group_replication.so' group_replication_group_name = "0b15d45f-6e29-11ec-99b3-08002790c7d5" group_replication_start_on_boot = off group_replication_local_address = "node1:33061" group_replication_group_seeds = "node1:33061,node2:33061,node3:33061" group_replication_bootstrap_group = off

2) Restart the MySQL server instance to make the configuration effective:

[root@node1 ~]# service mysql.server restart Shutting down MySQL.... SUCCESS! Starting MySQL....... SUCCESS!

3) Connect to the MySQL server, create a distributed recovery user and grant permissions. If you use Clone, you need to grant corresponding permissions:

mysql> set sql_log_bin=0; Query OK, 0 rows affected (0.00 sec) mysql> create user repl identified by 'repl'; Query OK, 0 rows affected (0.12 sec) mysql> grant replication slave on *.* to repl; Query OK, 0 rows affected (0.11 sec) mysql> grant backup_admin on *.* to repl; Query OK, 0 rows affected (0.10 sec) mysql> set sql_log_bin=1; Query OK, 0 rows affected (0.00 sec) mysql> change replication source to source_user='repl',source_password='repl' for channel 'group_replication_recovery'; Query OK, 0 rows affected, 2 warnings (0.17 sec)

4) Boot the group. You need to use group when starting the group for the first time_ replication_ bootstrap_ The group system variable guides the group. This operation can only be completed on a single server and can only be started once. After the guidance is completed, view the group member information:

mysql> set global group_replication_bootstrap_group=on; Query OK, 0 rows affected (0.00 sec) mysql> start group_replication; Query OK, 0 rows affected (1.29 sec) mysql> set global group_replication_bootstrap_group=off; Query OK, 0 rows affected (0.00 sec) mysql> select * from performance_schema.replication_group_members\G; *************************** 1. row *************************** CHANNEL_NAME: group_replication_applier MEMBER_ID: a6886158-6ef5-11ec-a75e-08002790c7d5 MEMBER_HOST: node1.com.cn MEMBER_PORT: 3306 MEMBER_STATE: ONLINE MEMBER_ROLE: PRIMARY MEMBER_VERSION: 8.0.27 MEMBER_COMMUNICATION_STACK: XCom 1 row in set (0.12 sec) ERROR: No query specified

5) For demonstration purposes, create a test table:

mysql> create database test; Query OK, 1 row affected (0.18 sec) mysql> use test; Database changed mysql> create table t1(id int primary key,name varchar(20)); Query OK, 0 rows affected (0.21 sec) mysql> insert into t1 values(1,'Alen'); Query OK, 1 row affected (0.03 sec) mysql> show binary logs; +------------------+-----------+-----------+ | Log_name | File_size | Encrypted | +------------------+-----------+-----------+ | mysql-bin.000001 | 179 | No | | mysql-bin.000002 | 500 | No | | mysql-bin.000003 | 1190 | No | +------------------+-----------+-----------+ 3 rows in set (0.00 sec) mysql> show binlog events in 'mysql-bin.000003'; +------------------+------+----------------+-----------+-------------+-------------------------------------------------------------------------------+ | Log_name | Pos | Event_type | Server_id | End_log_pos | Info | +------------------+------+----------------+-----------+-------------+-------------------------------------------------------------------------------+ | mysql-bin.000003 | 4 | Format_desc | 201 | 125 | Server ver: 8.0.27, Binlog ver: 4 | | mysql-bin.000003 | 125 | Previous_gtids | 201 | 156 | | | mysql-bin.000003 | 156 | Gtid | 201 | 242 | SET @@SESSION.GTID_NEXT= '0b15d45f-6e29-11ec-99b3-08002790c7d5:1' | | mysql-bin.000003 | 242 | Query | 201 | 308 | BEGIN | | mysql-bin.000003 | 308 | View_change | 201 | 411 | view_id=16414770057187861:1 | | mysql-bin.000003 | 411 | Query | 201 | 483 | COMMIT | | mysql-bin.000003 | 483 | Gtid | 201 | 567 | SET @@SESSION.GTID_NEXT= '0b15d45f-6e29-11ec-99b3-08002790c7d5:2' | | mysql-bin.000003 | 567 | Query | 201 | 675 | create database test /* xid=25 */ | | mysql-bin.000003 | 675 | Gtid | 201 | 759 | SET @@SESSION.GTID_NEXT= '0b15d45f-6e29-11ec-99b3-08002790c7d5:3' | | mysql-bin.000003 | 759 | Query | 201 | 899 | use `test`; create table t1(id int primary key,name varchar(20)) /* xid=30 */ | | mysql-bin.000003 | 899 | Gtid | 201 | 985 | SET @@SESSION.GTID_NEXT= '0b15d45f-6e29-11ec-99b3-08002790c7d5:4' | | mysql-bin.000003 | 985 | Query | 201 | 1060 | BEGIN | | mysql-bin.000003 | 1060 | Table_map | 201 | 1114 | table_id: 93 (test.t1) | | mysql-bin.000003 | 1114 | Write_rows | 201 | 1159 | table_id: 93 flags: STMT_END_F | | mysql-bin.000003 | 1159 | Xid | 201 | 1190 | COMMIT /* xid=31 */ | +------------------+------+----------------+-----------+-------------+-------------------------------------------------------------------------------+ 15 rows in set (0.00 sec)

It can be seen from the log that when the first member is started, there is a VIEW ID, and the transaction ID is represented by group_replication_group_name as part of the GTID.

4.2.3 adding a slave node node2

The operation of adding a slave node node2 is similar to that of node1. You can refer to the configuration process of node1, but the boot group is no longer required.

1) The configuration group is copied in the configuration file / etc / my The following configuration information is added to CNF, and the server needs to be modified_ Configuration options such as ID:

server_id = 202 disabled_storage_engines = "MyISAM,BLACKHOLE,FEDERATED,ARCHIVE,MEMORY" gtid_mode = ON enforce_gtid_consistency = ON binlog_checksum = CRC32 plugin_load_add = 'group_replication.so' group_replication_group_name = "0b15d45f-6e29-11ec-99b3-08002790c7d5" group_replication_start_on_boot = off group_replication_local_address = "node2:33061" group_replication_group_seeds = "node1:33061,node2:33061,node3:33061" group_replication_bootstrap_group = off

2) Restart the MySQL server instance to make the configuration effective:

[root@node2 ~]# service mysql.server restart Shutting down MySQL.. SUCCESS! Starting MySQL...... SUCCESS!

3) Connect to the MySQL server, create a distributed recovery user and grant permissions. If you use Clone, you need to grant corresponding permissions:

mysql> set sql_log_bin=0; Query OK, 0 rows affected (0.00 sec) mysql> create user repl identified by 'repl'; Query OK, 0 rows affected (0.06 sec) mysql> grant replication slave on *.* to repl; Query OK, 0 rows affected (0.01 sec) mysql> grant backup_admin on *.* to repl; Query OK, 0 rows affected (0.11 sec) mysql> set sql_log_bin=1; Query OK, 0 rows affected (0.00 sec) mysql> change replication source to source_user='repl',source_password='repl'\ -> for channel 'group_replication_recovery'; Query OK, 0 rows affected, 2 warnings (0.19 sec)

4) Start group replication and view member status:

mysql> start group_replication; Query OK, 0 rows affected (6.95 sec) mysql> select * from performance_schema.replication_group_members\G; *************************** 1. row *************************** CHANNEL_NAME: group_replication_applier MEMBER_ID: 4bfafef9-6efa-11ec-8296-080027e8893b MEMBER_HOST: node2.com.cn MEMBER_PORT: 3306 MEMBER_STATE: ONLINE MEMBER_ROLE: SECONDARY MEMBER_VERSION: 8.0.27 MEMBER_COMMUNICATION_STACK: XCom *************************** 2. row *************************** CHANNEL_NAME: group_replication_applier MEMBER_ID: a6886158-6ef5-11ec-a75e-08002790c7d5 MEMBER_HOST: node1.com.cn MEMBER_PORT: 3306 MEMBER_STATE: ONLINE MEMBER_ROLE: PRIMARY MEMBER_VERSION: 8.0.27 MEMBER_COMMUNICATION_STACK: XCom 2 rows in set (0.16 sec) ERROR: No query specified

5) Verify the data synchronized from node1 node:

mysql> select * from test.t1; +----+------+ | id | name | +----+------+ | 1 | Alen | +----+------+ 1 row in set (0.00 sec) mysql> show binlog events in 'mysql-bin.000003'; +------------------+------+----------------+-----------+-------------+-------------------------------------------------------------------------------+ | Log_name | Pos | Event_type | Server_id | End_log_pos | Info | +------------------+------+----------------+-----------+-------------+-------------------------------------------------------------------------------+ | mysql-bin.000003 | 4 | Format_desc | 202 | 125 | Server ver: 8.0.27, Binlog ver: 4 | | mysql-bin.000003 | 125 | Previous_gtids | 202 | 156 | | | mysql-bin.000003 | 156 | Gtid | 201 | 242 | SET @@SESSION.GTID_NEXT= '0b15d45f-6e29-11ec-99b3-08002790c7d5:1' | | mysql-bin.000003 | 242 | Query | 201 | 308 | BEGIN | | mysql-bin.000003 | 308 | View_change | 201 | 415 | view_id=16414770057187861:1 | | mysql-bin.000003 | 415 | Query | 201 | 487 | COMMIT | | mysql-bin.000003 | 487 | Gtid | 201 | 571 | SET @@SESSION.GTID_NEXT= '0b15d45f-6e29-11ec-99b3-08002790c7d5:2' | | mysql-bin.000003 | 571 | Query | 201 | 679 | create database test /* xid=31 */ | | mysql-bin.000003 | 679 | Gtid | 201 | 763 | SET @@SESSION.GTID_NEXT= '0b15d45f-6e29-11ec-99b3-08002790c7d5:3' | | mysql-bin.000003 | 763 | Query | 201 | 903 | use `test`; create table t1(id int primary key,name varchar(20)) /* xid=32 */ | | mysql-bin.000003 | 903 | Gtid | 201 | 989 | SET @@SESSION.GTID_NEXT= '0b15d45f-6e29-11ec-99b3-08002790c7d5:4' | | mysql-bin.000003 | 989 | Query | 201 | 1059 | BEGIN | | mysql-bin.000003 | 1059 | Table_map | 201 | 1113 | table_id: 90 (test.t1) | | mysql-bin.000003 | 1113 | Write_rows | 201 | 1158 | table_id: 90 flags: STMT_END_F | | mysql-bin.000003 | 1158 | Xid | 201 | 1189 | COMMIT /* xid=34 */ | | mysql-bin.000003 | 1189 | Gtid | 201 | 1275 | SET @@SESSION.GTID_NEXT= '0b15d45f-6e29-11ec-99b3-08002790c7d5:5' | | mysql-bin.000003 | 1275 | Query | 201 | 1341 | BEGIN | | mysql-bin.000003 | 1341 | View_change | 201 | 1554 | view_id=16414770057187861:2 | | mysql-bin.000003 | 1554 | Query | 201 | 1626 | COMMIT | | mysql-bin.000003 | 1626 | Gtid | 201 | 1712 | SET @@SESSION.GTID_NEXT= '0b15d45f-6e29-11ec-99b3-08002790c7d5:6' | | mysql-bin.000003 | 1712 | Query | 201 | 1778 | BEGIN | | mysql-bin.000003 | 1778 | View_change | 201 | 1925 | view_id=16414770057187861:4 | | mysql-bin.000003 | 1925 | Query | 201 | 1997 | COMMIT | +------------------+------+----------------+-----------+-------------+-------------------------------------------------------------------------------+ 23 rows in set (0.00 sec)

4.2.4 adding a slave node node3

1) For the operation of adding slave node node3, please refer to the adding process of slave node node2. Only the configuration options are different. Other operation processes are the same. Node3 configuration file / etc / my CNF configuration options are as follows:

server_id = 203 disabled_storage_engines = "MyISAM,BLACKHOLE,FEDERATED,ARCHIVE,MEMORY" gtid_mode = ON enforce_gtid_consistency = ON binlog_checksum = CRC32 plugin_load_add = 'group_replication.so' group_replication_group_name = "0b15d45f-6e29-11ec-99b3-08002790c7d5" group_replication_start_on_boot = off group_replication_local_address = "node3:33061" group_replication_group_seeds = "node1:33061,node2:33061,node3:33061" group_replication_bootstrap_group = off

2) After adding the slave node node3, view the group member information, and they are all online:

mysql> select * from performance_schema.replication_group_members\G; *************************** 1. row *************************** CHANNEL_NAME: group_replication_applier MEMBER_ID: 07cad00b-6efd-11ec-87cc-08002715e2e8 MEMBER_HOST: node3.com.cn MEMBER_PORT: 3306 MEMBER_STATE: ONLINE MEMBER_ROLE: SECONDARY MEMBER_VERSION: 8.0.27 MEMBER_COMMUNICATION_STACK: XCom *************************** 2. row *************************** CHANNEL_NAME: group_replication_applier MEMBER_ID: 4bfafef9-6efa-11ec-8296-080027e8893b MEMBER_HOST: node2.com.cn MEMBER_PORT: 3306 MEMBER_STATE: ONLINE MEMBER_ROLE: SECONDARY MEMBER_VERSION: 8.0.27 MEMBER_COMMUNICATION_STACK: XCom *************************** 3. row *************************** CHANNEL_NAME: group_replication_applier MEMBER_ID: a6886158-6ef5-11ec-a75e-08002790c7d5 MEMBER_HOST: node1.com.cn MEMBER_PORT: 3306 MEMBER_STATE: ONLINE MEMBER_ROLE: PRIMARY MEMBER_VERSION: 8.0.27 MEMBER_COMMUNICATION_STACK: XCom 3 rows in set (0.01 sec) ERROR: No query specified

5 single master / multi master mode switching

MySQL MGR runs in single master mode by default, i.e. parameter group_ replication_ single_ primary_ The default value of mode is ON. Since MySQL 8.0.13, you can use group when MySQL MGR is running_ replication_ switch_ to_ multi_ primary_ Mode () and group_ replication_ switch_ to_ single_ primary_ The mode() function performs single master / multi master mode switching.

5.1 switching from single master to multi master

Using the function group_replication_switch_to_multi_primary_mode() can switch from single master mode to multi master mode:

mysql> select group_replication_switch_to_multi_primary_mode(); +--------------------------------------------------+ | group_replication_switch_to_multi_primary_mode() | +--------------------------------------------------+ | Mode switched to multi-primary successfully. | +--------------------------------------------------+ 1 row in set (1.00 sec) mysql> select * from performance_schema.replication_group_members\G; *************************** 1. row *************************** CHANNEL_NAME: group_replication_applier MEMBER_ID: 07cad00b-6efd-11ec-87cc-08002715e2e8 MEMBER_HOST: node3.com.cn MEMBER_PORT: 3306 MEMBER_STATE: ONLINE MEMBER_ROLE: PRIMARY MEMBER_VERSION: 8.0.27 MEMBER_COMMUNICATION_STACK: XCom *************************** 2. row *************************** CHANNEL_NAME: group_replication_applier MEMBER_ID: 4bfafef9-6efa-11ec-8296-080027e8893b MEMBER_HOST: node2.com.cn MEMBER_PORT: 3306 MEMBER_STATE: ONLINE MEMBER_ROLE: PRIMARY MEMBER_VERSION: 8.0.27 MEMBER_COMMUNICATION_STACK: XCom *************************** 3. row *************************** CHANNEL_NAME: group_replication_applier MEMBER_ID: a6886158-6ef5-11ec-a75e-08002790c7d5 MEMBER_HOST: node1.com.cn MEMBER_PORT: 3306 MEMBER_STATE: ONLINE MEMBER_ROLE: PRIMARY MEMBER_VERSION: 8.0.27 MEMBER_COMMUNICATION_STACK: XCom 3 rows in set (0.00 sec) ERROR: No query specified

5.2 switching from multi master to single master

Using the function group_replication_switch_to_single_primary_mode() can switch multi master mode to single master mode:

mysql> select group_replication_switch_to_single_primary_mode(); +---------------------------------------------------+ | group_replication_switch_to_single_primary_mode() | +---------------------------------------------------+ | Mode switched to single-primary successfully. | +---------------------------------------------------+ 1 row in set (0.03 sec) mysql> select * from performance_schema.replication_group_members\G; *************************** 1. row *************************** CHANNEL_NAME: group_replication_applier MEMBER_ID: 07cad00b-6efd-11ec-87cc-08002715e2e8 MEMBER_HOST: node3.com.cn MEMBER_PORT: 3306 MEMBER_STATE: ONLINE MEMBER_ROLE: PRIMARY MEMBER_VERSION: 8.0.27 MEMBER_COMMUNICATION_STACK: XCom *************************** 2. row *************************** CHANNEL_NAME: group_replication_applier MEMBER_ID: 4bfafef9-6efa-11ec-8296-080027e8893b MEMBER_HOST: node2.com.cn MEMBER_PORT: 3306 MEMBER_STATE: ONLINE MEMBER_ROLE: SECONDARY MEMBER_VERSION: 8.0.27 MEMBER_COMMUNICATION_STACK: XCom *************************** 3. row *************************** CHANNEL_NAME: group_replication_applier MEMBER_ID: a6886158-6ef5-11ec-a75e-08002790c7d5 MEMBER_HOST: node1.com.cn MEMBER_PORT: 3306 MEMBER_STATE: ONLINE MEMBER_ROLE: SECONDARY MEMBER_VERSION: 8.0.27 MEMBER_COMMUNICATION_STACK: XCom 3 rows in set (0.00 sec) ERROR: No query specified

6 monitoring group replication environment

MySQL MGR is monitored mainly through performance_schema. The main tables are as follows:

mysql> show tables from performance_schema like '%replication%'; +------------------------------------------------------+ | Tables_in_performance_schema (%replication%) | +------------------------------------------------------+ | replication_applier_configuration | | replication_applier_filters | | replication_applier_global_filters | | replication_applier_status | | replication_applier_status_by_coordinator | | replication_applier_status_by_worker | | replication_asynchronous_connection_failover | | replication_asynchronous_connection_failover_managed | | replication_connection_configuration | | replication_connection_status | | replication_group_communication_information | | replication_group_configuration_version | | replication_group_member_actions | | replication_group_member_stats | | replication_group_members | +------------------------------------------------------+ 15 rows in set (0.01 sec)