Author: Alexey Milovidov

Original link: https://clickhouse.com/blog/en/2022/clickhouse-v22.1-released/

22.1 is the first version of our new year. It includes 2599 new submissions from 133 contributors, including 44 new contributors:

13DaGGeR, Adri Fernandez, Alexey Gusev, Anselmo D. Adams, Antonio Andelic, Ben, Boris Kuschel, Christoph Wurm, Chun-Sheng, Li, Dao, DimaAmega, Dmitrii Mokhnatkin, Harry-Lee, Justin Hilliard, MaxTheHuman, Meena-Renganathan, Mojtaba Yaghoobzadeh, N. Kolotov, Niek, Orkhan Zeynalli, Rajkumar, Ryad ZENINE, Sergei Trifonov, Suzy Wang, TABLUM. IO, Vitaly artemiyev, Xin Wang, Yatian Xu, youenn lebras, dalei2019, Fanzhou, gulige, lgbo USTC, minhthucdao, mreddy017, msirm, olevino, peter279k, save my heart, tekeri, usurai, zhoubintao, Li Yang.

Don't forget to run select * from system on your server Contributors view contributors!

Let's describe the most important new features in 22.1.

Automatic schema derivation

Take the following query as an example:

SELECT * FROM url('https://datasets.clickhouse.com/github_events_v2.native.xz', Native,

$$

file_time DateTime,

event_type Enum('CommitCommentEvent' = 1, 'CreateEvent' = 2, 'DeleteEvent' = 3, 'ForkEvent' = 4,

'GollumEvent' = 5, 'IssueCommentEvent' = 6, 'IssuesEvent' = 7, 'MemberEvent' = 8,

'PublicEvent' = 9, 'PullRequestEvent' = 10, 'PullRequestReviewCommentEvent' = 11,

'PushEvent' = 12, 'ReleaseEvent' = 13, 'SponsorshipEvent' = 14, 'WatchEvent' = 15,

'GistEvent' = 16, 'FollowEvent' = 17, 'DownloadEvent' = 18, 'PullRequestReviewEvent' = 19,

'ForkApplyEvent' = 20, 'Event' = 21, 'TeamAddEvent' = 22),

actor_login LowCardinality(String),

repo_name LowCardinality(String),

created_at DateTime,

updated_at DateTime,

action Enum('none' = 0, 'created' = 1, 'added' = 2, 'edited' = 3, 'deleted' = 4, 'opened' = 5, 'closed' = 6, 'reopened' = 7, 'assigned' = 8, 'unassigned' = 9,

'labeled' = 10, 'unlabeled' = 11, 'review_requested' = 12, 'review_request_removed' = 13, 'synchronize' = 14, 'started' = 15, 'published' = 16, 'update' = 17, 'create' = 18, 'fork' = 19, 'merged' = 20),

comment_id UInt64,

body String,

path String,

position Int32,

line Int32,

ref LowCardinality(String),

ref_type Enum('none' = 0, 'branch' = 1, 'tag' = 2, 'repository' = 3, 'unknown' = 4),

creator_user_login LowCardinality(String),

number UInt32,

title String,

labels Array(LowCardinality(String)),

state Enum('none' = 0, 'open' = 1, 'closed' = 2),

locked UInt8,

assignee LowCardinality(String),

assignees Array(LowCardinality(String)),

comments UInt32,

author_association Enum('NONE' = 0, 'CONTRIBUTOR' = 1, 'OWNER' = 2, 'COLLABORATOR' = 3, 'MEMBER' = 4, 'MANNEQUIN' = 5),

closed_at DateTime,

merged_at DateTime,

merge_commit_sha String,

requested_reviewers Array(LowCardinality(String)),

requested_teams Array(LowCardinality(String)),

head_ref LowCardinality(String),

head_sha String,

base_ref LowCardinality(String),

base_sha String,

merged UInt8,

mergeable UInt8,

rebaseable UInt8,

mergeable_state Enum('unknown' = 0, 'dirty' = 1, 'clean' = 2, 'unstable' = 3, 'draft' = 4),

merged_by LowCardinality(String),

review_comments UInt32,

maintainer_can_modify UInt8,

commits UInt32,

additions UInt32,

deletions UInt32,

changed_files UInt32,

diff_hunk String,

original_position UInt32,

commit_id String,

original_commit_id String,

push_size UInt32,

push_distinct_size UInt32,

member_login LowCardinality(String),

release_tag_name String,

release_name String,

review_state Enum('none' = 0, 'approved' = 1, 'changes_requested' = 2, 'commented' = 3, 'dismissed' = 4, 'pending' = 5)

$$)

In this query, we use table function URL to import data. The data is in the file extension native.xz is published on the HTTP server as a file. The most annoying thing about this query is that we have to specify the data structure and format of this file.

In the new version 22.1, it is not so complex:

SELECT * FROM url('https://datasets.clickhouse.com/github_events_v2.native.xz')

It's much simpler than before! How did this happen?

First, we automatically detect the data format from the file extension. Here is. native.xz, so we know that the data is compressed by xz (LZMA2) and expressed in Native format. The Native format already contains all the information about the type and name of the column. We only need to read and use this information.

This feature applies to the following formats: Native, Avro, Parquet, ORC,Arrow, and CSVWithNamesAndTypes, TSVWithNamesAndTypes.

This feature also applies to the following table functions: s3, file, hdfs, url, s3Cluster, hdfsCluster

This feature contains many tricks: it does not need to read the whole file into memory. For example, Parquet format has metadata at the end of the file. Therefore, we first read the header to find the location of the metadata, then make a range request to read the metadata about the column and its type, and then continue to read the requested column. If the file is small, it will be read through a single request.

If you only extract the data structure from the file without data processing, you can use DESCRIBE query:

DESCRIBE url('https://datasets.clickhouse.com/github_events_v2.native.xz')

Data structures can also be automatically inferred from JSONEachRow, CSV, TSV, CSVWithNames, TSVWithNames, MsgPack, Values and Regexp. CSV supports Float64 or String type inference. JSONEachRow supports the inference of array types, including multidimensional arrays. When the element types in the array are inconsistent, it will be automatically inferred into a meta group, and Objects will be mapped to the Map data type.

If the column name is not specified in the format (such as CSV without Header), c1, c2,... Is used to represent each column.

The file format can be inferred from the extension: csv, tsv, native, parquet, pb, ndjson, orc... For example Ndjson file is recognized as JSONEachRow format in ClickHouse, while CSV is recognized as a CSV format without Header. If you want to use CSVWithNames format, you can explicitly specify the format in query.

We support "on demand" queries. For example, the TSV format data type automatically detected is a string, but you can use the:: operator to optimize the type in the query:

SELECT c1 AS domain, uniq(c2::UInt64), count() AS cnt

FROM file('hits.tsv')

GROUP BY domain ORDER BY cnt DESC LIMIT 10

More conveniently, the LineAsString format RawBLOB can also obtain type inference. Try this query to see how I prefer to read my favorite website:

SELECT extractTextFromHTML(*)

FROM url('https://news.ycombinator.com/', LineAsString);

schema automatic detection is also applicable to the creation of Merge,Distributed and replicated megretree tables. When you create the first copy, you must specify a table structure. However, when creating all subsequent replicas, you only need to CREATE TABLE hits ENGINE = ReplicatedMegreTree(...), Instead of listing each column, the information will be replicated from another replica.

This function was realized by Pavel Kruglov inspired by Igor Baliuk's initial work and added by ZhongYuanKai.

Clickhouse client real-time display of query resource occupation

ClickHouse client is my favorite ClickHouse client. It is a model of all command-line tools.

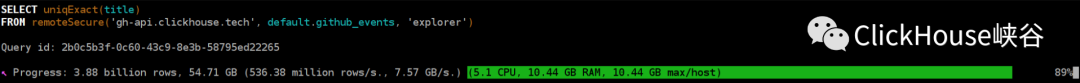

Now it can directly display the current query CPU and memory usage in real time in the progress bar:

Resource use

For distributed queries, it shows the total and maximum memory usage of each host.

This feature is implemented through distributed metrics forwarding implemented by Dmitry Novik. I have integrated these distributed metrics into the ClickHouse client. Now I can use the Native ClickHouse protocol to add similar information to each ClickHouse client.

Replica granularity parallel query

ClickHouse is a distributed MPP DBMS. It can scale up to use all CPU cores on a server and out to use computing resources from multiple slices in the cluster.

However, each slice usually contains multiple copies. By default, ClickHouse uses only one copy of the resource on each slice. For example, if you have a cluster of six servers with three shards and two replicas on each server, the query will use only three servers instead of all six.

There is an enable option max_parallel_replicas, but this option needs to specify the "sampling key", which is inconvenient to use and cannot be well extended.

Now we have an option to enable a new parallel processing algorithm: allow_experimental_parallel_reading_from_replicas. If enabled, the replica will_ Dynamic_ Select and assign work between them.

Even if the replica has less or more computing resources, it works perfectly. Even if some copies are old, it will give complete results.

This feature is implemented by Nikita Mikhaylov

Service discovery

When you add or remove nodes in a cluster, you do not now have to update the configuration on each server. Just in remote_ If the < discovery > configuration is used in the server, the replica will register itself:

<allow_experimental_cluster_discovery>1 </allow_experimental_cluster_discovery> <remote_servers> <auto_cluster> <discovery> <path>/clickhouse/discovery/auto_cluster</path> <shard>1</shard> </discovery> </auto_cluster> </remote_servers>

There is no need to modify the configuration when adding a new copy!

This function is realized by Vladimir Cherkasov.

Sparse coding of columns

If a column mainly contains zero, we can encode it into sparse format and automatically optimize the calculation!

It is a special column encoding, similar to LowCardinality, but it is completely transparent and works automatically.

CREATE TABLE test.hits ... ENGINE = MergeTree ORDER BY ... SETTINGS ratio_of_defaults_for_sparse_serialization = 0.9

It can better compress the data and optimize the calculation, because the data in the sparse column will be processed directly in the sparse format in memory.

ClickHouse will decide whether to use sparse coding according to column statistics during insertion, and update it again during background merge.

Developed by Anton Popov.

In the future, we also want to make LowCardinality coding automatic. Please look forward to it!

ClickHouse diagnostic tool

This is a gift from the Yandex Cloud team. They have a tool to collect reports on ClickHouse instances to provide all the required support information. They decided to contribute this tool to open source!

You can find the tool here: utils / Clickhouse diagnostics

Developed by Alexander Burmak

Third party integration

Many new integrations have been added to 22.1:

Integrated with Hive as an external table engine for SELECT query, provided by Taiyang Li and reviewed by Ksenia sumarokova.

Integrated with Azure Blob storage similar to S3, contributed by Jakub Kuklis and reviewed by Ksenia sumarokova.

hdfsCluster table function similar to s3Cluster is supported, contributed by Yuzhichang Yu and reviewed by Nikita Mikhailov.

Statistical function

I hope you have always dreamed of calculating Cramer's V and Theil's U coefficients in ClickHouse, because now we have prepared these functions for you.

:) SELECT cramersV(URL, URLDomain) FROM test.hits 0.98 :) SELECT cramersV(URLDomain, ResolutionWidth) FROM test.hits 0.27

It can calculate some dependency between classification (discrete) values. You can think like this: there is a correlation function corr, but it only applies to linear dependencies; There is a rank correlation function rankcrr, but it only applies to ordered values. Now there are some functions that can be calculated_ Discrete_ Value.

Developers: Artem Tsyganov, Ivan Belyaev, Alexey Milovidov.

... there are many more

Read the complete change log of version 22.1 and follow the roadmap.