There are quite a few articles about iterators and generators in Python. Some are very thorough, but most cover only one piece of the picture. The concepts of iterables, iterators and generators are intertwined, and when the material is scattered it is easy to get confused. This article tries to introduce the three concepts and how they relate to each other systematically, in the hope of helping readers who need it.

Iterables and iterators

Basic concepts

Iteration: let's start with the literal meaning. Iteration is an action that is executed repeatedly. In Python, it can be understood as repeatedly taking values one after another.

Iterable: as the name suggests, an object whose values can be taken out one by one. In Python, the built-in container types are iterables, such as lists, dictionaries, sets and tuples.

Iterator: a tool for taking values out of an iterable. More precisely, an object that produces the values of an iterable one at a time.

Iterables

In Python, the built-in container types are iterables. They include:

- list

- dict (dictionary)

- tuple

- set

- str (string)

A list of the pilgrim team from Journey to the West:

```python
>>> arr = ['accomplished eminent monk','Great sage','canopy ','Roller shutter']
>>> for i in arr:
...     print(i)
...
accomplished eminent monk
Great sage
canopy 
Roller shutter
```

Python's built-in data structures are not the only iterables. Objects provided by modules and instances of custom classes can be iterables too. So how can you tell whether an object is iterable? The usual criterion is that iterables have an `__iter__` method, and any object with this method is iterable. Strictly speaking, iter() also accepts objects that implement only the older `__getitem__` sequence protocol.

```python
>>> dir(arr)
['__add__', '__class__', '__contains__', '__delattr__', '__delitem__', '__dir__', '__doc__', '__eq__', '__format__', '__ge__', '__getattribute__', '__getitem__', '__gt__', '__hash__', '__iadd__', '__imul__', '__init__', '__init_subclass__', '__iter__', '__le__', '__len__', '__lt__', '__mul__', '__ne__', '__new__', '__reduce__', '__reduce_ex__', '__repr__', '__reversed__', '__rmul__', '__setattr__', '__setitem__', '__sizeof__', '__str__', '__subclasshook__', 'append', 'clear', 'copy', 'count', 'extend', 'index', 'insert', 'pop', 'remove', 'reverse', 'sort']
```
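Having `__iter__` is the usual rule of thumb, but it is not the whole story. The sketch below compares three checks on a few objects. It is meant as an illustration, not a definitive test. `is_iterable` and `OnlyGetitem` are hypothetical names made up for this example. `OnlyGetitem` defines only `__getitem__`, which iter() still accepts through the older sequence protocol. Calling iter() and catching TypeError is therefore the most reliable check.

```python
from collections.abc import Iterable


def is_iterable(obj):
    """Return True if the built-in iter() accepts obj."""
    try:
        iter(obj)
    except TypeError:
        return False
    return True


class OnlyGetitem:
    """Hypothetical example class: no __iter__, only __getitem__."""

    def __getitem__(self, index):
        if index >= 3:
            raise IndexError(index)  # tells iter() that the sequence has ended
        return index * 10


# Columns: type name, has __iter__, isinstance(Iterable), accepted by iter()
for obj in ([1, 2], {'a': 1}, 'abc', 42, OnlyGetitem()):
    print(type(obj).__name__,
          hasattr(obj, '__iter__'),
          isinstance(obj, Iterable),
          is_iterable(obj))
```

For `OnlyGetitem`, the first two checks report False while iter() succeeds, and `list(OnlyGetitem())` gives `[0, 10, 20]`.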

Iterators

Iterators are usually created from iterables. Calling an iterable's `__iter__` method creates an iterator for it. You can also create an iterator with the built-in iter() function, which essentially calls the iterable's `__iter__` method.

```python
>>> arr = ['accomplished eminent monk','Great sage','canopy ','Roller shutter']
>>> arr_iter = iter(arr)
>>> for i in arr_iter:
...     print(i)
...
accomplished eminent monk
Great sage
canopy 
Roller shutter
>>>
>>> arr_iter = iter(arr)
>>> next(arr_iter)
'accomplished eminent monk'
>>> next(arr_iter)
'Great sage'
>>> next(arr_iter)
'canopy '
>>> next(arr_iter)
'Roller shutter'
>>> next(arr_iter)
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
StopIteration
```

An iterable such as a list can be traversed with a for loop, but it cannot be passed to next() directly. An iterator can be traversed with a for loop and can also produce its elements one at a time through next(). Each call yields one element. Once all elements have been produced, the next call raises StopIteration. The process is like a soldier in Chinese chess that has not yet crossed the river: it can only move forward, never backward. And once the iteration is finished, the elements cannot be traversed again.

In short, iterators have these characteristics:

- Values are taken one at a time with the next() function

- Iteration only moves forward, never backward

- An iterator can be used only once. After it is exhausted, it cannot traverse the elements again; to traverse them again you need a new iterator
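A small sketch of these characteristics, using only built-ins: a second pass over the same iterator finds nothing, `iter()` on an iterator returns the iterator itself, and `next()` accepts an optional default that is returned instead of raising StopIteration.

```python
arr = ['a', 'b', 'c']
arr_iter = iter(arr)

first_pass = list(arr_iter)    # consumes every element
second_pass = list(arr_iter)   # the iterator is already exhausted
print(first_pass, second_pass)  # ['a', 'b', 'c'] []

# iter() on an iterator returns the very same object
print(iter(arr_iter) is arr_iter)  # True

# next() with a default returns the default instead of raising StopIteration
print(next(arr_iter, 'done'))  # done

# To traverse again, create a new iterator from the iterable
fresh = iter(arr)
print(next(fresh))  # a
```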

Iterators are very common in Python. For example, an open file object is an iterator, and the built-in higher-order functions map and filter (as well as zip) return iterators. functools.reduce, by contrast, returns a single value rather than an iterator. An iterator has two methods: `__iter__` and `__next__`. The next() function works by calling the iterator's `__next__` method.

```python
>>> dir(arr_iter)
['__class__', '__delattr__', '__dir__', '__doc__', '__eq__', '__format__', '__ge__', '__getattribute__', '__gt__', '__hash__', '__init__', '__init_subclass__', '__iter__', '__le__', '__length_hint__', '__lt__', '__ne__', '__new__', '__next__', '__reduce__', '__reduce_ex__', '__repr__', '__setattr__', '__setstate__', '__sizeof__', '__str__', '__subclasshook__']
```
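A quick check of the claim above. The sketch uses `io.StringIO` as a stand-in for an open text file; both yield one line per next() call. The point is that the objects returned by map, filter and zip are all iterators, while reduce collapses its input into one value.

```python
import io
from collections.abc import Iterator
from functools import reduce

squares = map(lambda x: x * x, [1, 2, 3])
evens = filter(lambda x: x % 2 == 0, range(6))
pairs = zip('ab', [1, 2])
# io.StringIO stands in for an open text file here
fake_file = io.StringIO('first line\nsecond line\n')

for obj in (squares, evens, pairs, fake_file):
    print(type(obj).__name__, isinstance(obj, Iterator))

print(next(squares), list(squares))  # 1 [4, 9]
print(repr(next(fake_file)))         # 'first line\n'

# reduce() collapses everything into a single value instead of an iterator
print(reduce(lambda a, b: a + b, [1, 2, 3]))  # 6
```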

Telling iterables and iterators apart

How can we distinguish iterables from iterators? The collections.abc module defines the abstract base classes Iterable and Iterator, and isinstance can check against them. Older code imports these names directly from collections; those aliases were deprecated and removed in Python 3.10.

```python
>>> from collections.abc import Iterable, Iterator
>>> arr = [1,2,3,4]
>>> isinstance(arr, Iterable)
True
>>> isinstance(arr, Iterator)
False
>>>
>>> arr_iter = iter(arr)
>>> isinstance(arr_iter, Iterable)
True
>>> isinstance(arr_iter, Iterator)
True
```

arr is an iterable: it is an instance of Iterable but not of Iterator.

arr_iter is an iterator: it is an instance of both Iterable and Iterator.

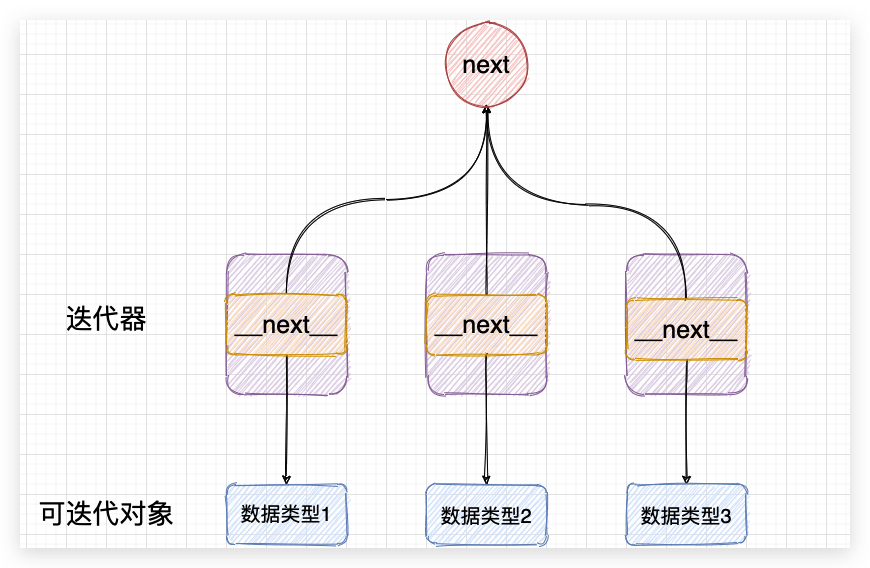

Relationship between iterables and iterators

The way iterators are created already hints at the relationship. An iterable is a collection, and an iterator is a traversal tool created for that collection. When an iterator produces values, it takes them directly from the iterable's collection. The following experiment illustrates this:

```python
>>> arr = [1,2,3,4]
>>> iter_arr = iter(arr)
>>>
>>> arr.append(100)
>>> arr.append(200)
>>> arr.append(300)
>>>
>>> for i in iter_arr:
...     print(i)
...
1
2
3
4
100
200
300
```

Here is what happens:

- First, create the iterable arr

- Then create the iterator iter_arr from arr

- Then append elements to the list arr

- Finally, the iterated elements include the ones appended later

This shows that the iterator does not copy the iterable's elements. It holds a reference to the iterable and reads the elements directly from it while iterating.
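The sketch below illustrates the reference relationship further, assuming CPython. An iterator over a copy does not see later changes, while an iterator over the original list does. Dictionary iterators also read the live dict, and CPython raises RuntimeError when the dict changes size during iteration.

```python
arr = [1, 2, 3]
live = iter(arr)          # refers to arr itself
snapshot = iter(arr[:])   # refers to a shallow copy of arr

next(live)
next(snapshot)
arr.append(4)

live_rest = list(live)          # [2, 3, 4]: sees the appended element
snapshot_rest = list(snapshot)  # [2, 3]: the copy was taken before the append
print(live_rest, snapshot_rest)

# Dict iterators also read the live dict; a size change is detected
d = {'a': 1}
d_iter = iter(d)
d['b'] = 2
raised = False
try:
    next(d_iter)
except RuntimeError as exc:
    raised = True
    print('RuntimeError:', exc)
```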

How iterables and iterators work

First, let's list the methods each of them has:

Iterable: an object with an `__iter__` method

Iterator: an object with both `__iter__` and `__next__` methods

As we saw when creating iterators, `__iter__` returns an iterator, and `__next__` takes the next value from the elements. So the two methods do the following:

Iterable:

`__iter__` returns an iterator

Iterator:

`__iter__` returns an iterator, namely the iterator itself

`__next__` returns the next element of the collection

An iterable is a collection of elements, but it has no way of taking values out by itself. As the old Chinese saying goes, it is like dumplings cooked in a teapot: the goods are inside, but you cannot pour them out.

```python
>>> arr = [1,2,3,4]
>>> next(arr)
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
TypeError: 'list' object is not an iterator
```

If the dumplings can't be poured out, how do you eat them? You need a tool like chopsticks to pick them out. Iterators are exactly such a tool for taking values out of an iterable.

The iterator arr_iter created for the iterable arr can produce all the values of arr through next(), until no elements remain and StopIteration is raised:

```python
>>> arr_iter = iter(arr)
>>> next(arr_iter)
1
>>> next(arr_iter)
2
>>> next(arr_iter)
3
>>> next(arr_iter)
4
>>> next(arr_iter)
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
StopIteration
```

What a for loop really does

```python
>>> arr = [1,2,3]
>>> for i in arr:
...     print(i)
...
1
2
3
```

The for loop above traverses all values in arr. We know the list arr is an iterable and cannot produce values by itself, so how does the for loop get all the elements?

In essence, the for loop creates an iterator for arr and then keeps calling next() on it, assigning each element to the variable i. When no elements remain, next() raises StopIteration. The loop catches it and exits. Simulating the for loop makes this more concrete:

```python
arr = [1, 2, 3]

# Create an iterator for arr
arr_iter = iter(arr)

while True:
    try:
        # Keep calling next() on the iterator; exit once StopIteration is raised
        print(next(arr_iter))
    except StopIteration:
        break
```

Output:

```
1
2
3
```
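The simulation above can be generalized into a small helper. `simulated_for` is a hypothetical name, and the helper is only a sketch: it ignores `break`/`else` handling. It calls iter() first, which is why a for loop also works when its target is already an iterator. In that case iter() simply returns the same object.

```python
def simulated_for(iterable, body):
    """Roughly what `for item in iterable: body(item)` does (sketch, no break/else)."""
    iterator = iter(iterable)  # for an iterator, iter() returns the same object
    while True:
        try:
            item = next(iterator)
        except StopIteration:
            break  # normal end of the loop
        body(item)  # exceptions raised by the body are not swallowed


seen = []
simulated_for([1, 2, 3], seen.append)
simulated_for(iter('ab'), seen.append)  # works on an iterator too
print(seen)  # [1, 2, 3, 'a', 'b']
```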

That covers how iterables and iterators work. A brief summary:

Iterable: stores the elements but cannot produce values by itself. Calling its `__iter__` method creates a dedicated iterator for taking the values.

Iterator: has a `__next__` method that takes values from the iterable it refers to. It can traverse only once, and only forward, never backward.

Building your own iterables and iterators

Durian is something you only understand by tasting it, and iterators are hard to grasp until you implement one yourself. Below we define a custom iterable and a custom iterator.

To define a custom iterable, implement the `__iter__` method.

To define a custom iterator, implement both `__iter__` and `__next__`.

Iterable: `__iter__` returns an iterator.

Iterator: `__iter__` returns the iterator itself; `__next__` returns the next value and raises StopIteration when none are left.

```python
from collections.abc import Iterable, Iterator

# Iterable
class MyArr:
    def __init__(self):
        self.elements = [1, 2, 3]
    # Return an iterator, passing it a reference to our own elements
    def __iter__(self):
        return MyArrIterator(self.elements)

# Iterator
class MyArrIterator:
    def __init__(self, elements):
        self.index = 0
        self.elements = elements
    # Return self, i.e. the iterator object itself
    def __iter__(self):
        return self
    # Produce the next value
    def __next__(self):
        # Raise StopIteration once every element has been produced
        if self.index >= len(self.elements):
            raise StopIteration
        value = self.elements[self.index]
        self.index += 1
        return value

arr = MyArr()
print(f'arr is an iterable: {isinstance(arr, Iterable)}')
print(f'arr is an iterator: {isinstance(arr, Iterator)}')

# The iterator returned by __iter__
arr_iter = arr.__iter__()
print(f'arr_iter is an iterable: {isinstance(arr_iter, Iterable)}')
print(f'arr_iter is an iterator: {isinstance(arr_iter, Iterator)}')

print(next(arr_iter))
print(next(arr_iter))
print(next(arr_iter))
print(next(arr_iter))
```

Result:

```
arr is an iterable: True
arr is an iterator: False
arr_iter is an iterable: True
arr_iter is an iterator: True
1
2
3
Traceback (most recent call last):
  File "myarr.py", line 40, in <module>
    print(next(arr_iter))
  File "myarr.py", line 23, in __next__
    raise StopIteration
StopIteration
```

This example shows clearly how an iterator is implemented for an iterable. The iterable's `__iter__` method returns an instance of the iterator class. That iterator object keeps a reference to the iterable's elements and also implements the value-taking method, so values can be taken with next(). The code is worth studying carefully. Here are a few questions for readers to think about:

- Why can next() only move forward, not backward?

- Why can an iterator be used only once?

- If the target of a for loop is already an iterator, how does the loop work?
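One way to explore these questions is to run the two classes again and watch what happens to `index`. The sketch below repeats the classes from above so that it runs on its own. Only the docstrings and comments are new.

```python
class MyArr:
    """Iterable: hands out a brand-new iterator on every __iter__ call."""

    def __init__(self):
        self.elements = [1, 2, 3]

    def __iter__(self):
        return MyArrIterator(self.elements)


class MyArrIterator:
    """Iterator: keeps a position that only ever increases."""

    def __init__(self, elements):
        self.index = 0
        self.elements = elements

    def __iter__(self):
        return self

    def __next__(self):
        if self.index >= len(self.elements):
            raise StopIteration
        value = self.elements[self.index]
        self.index += 1  # never decremented and never reset
        return value


arr = MyArr()
print(list(arr), list(arr))  # [1, 2, 3] [1, 2, 3]: each list() call gets a fresh iterator

arr_iter = iter(arr)
print(list(arr_iter), list(arr_iter))  # [1, 2, 3] []: the index stays at the end

for value in arr_iter:  # the loop calls iter(arr_iter), which returns arr_iter itself
    print(value)        # never runs: the iterator is already exhausted
```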

Advantages of iterators

The Iterator design pattern

The advantage of iterators is that they provide a general way of iterating that does not depend on indexes.

Python's iterators come from the Iterator pattern among the software design patterns. The idea of the Iterator pattern is to provide a way to access the elements of a container sequentially without exposing the container's internal details.

Applied to Python, the pattern separates the act of traversing a sequence from the sequence's underlying implementation, and provides one general way to traverse elements.

Take lists, dictionaries, sets, tuples and strings. Their underlying data models differ, yet all of them can be traversed with a for loop. This works because each of them can produce an iterator whose elements are taken with next(). The caller does not need to know how the elements are stored or any other internal details.

Likewise, a custom data type only needs to implement an iterator, however complex its internal implementation is. Callers can then traverse its elements with the general next() function without dealing with the complex structure.
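A sketch of this uniformity. `first_n` is a hypothetical helper that takes up to `n` items from any iterable. It relies only on iter() and next(), so it never needs to know how a list, dict, tuple or string stores its data. The standard library offers the same behavior as `list(itertools.islice(iterable, n))`.

```python
def first_n(iterable, n):
    """Take up to n items from any iterable using only iter() and next()."""
    iterator = iter(iterable)
    items = []
    for _ in range(n):
        try:
            items.append(next(iterator))
        except StopIteration:
            break  # fewer than n items were available
    return items


print(first_n([1, 2, 3], 2))         # [1, 2]
print(first_n({'x': 1, 'y': 2}, 2))  # ['x', 'y']: dicts yield their keys in insertion order
print(first_n((7, 8), 5))            # [7, 8]
print(first_n('abc', 2))             # ['a', 'b']
```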

A general way to take values from a complex data structure

For example, consider a more complex data structure: a dictionary of the form {(x, x): value}, where each key is a tuple and each value is a number. Following the Iterator pattern, we implement a general way of taking its values.

Example implementation:

```python
class MyArrIterator:
    def __init__(self):
        self.index = 1
        self.elements = {(1, 1): 100, (2, 2): 200, (3, 3): 300}

    def __iter__(self):
        return self

    def __next__(self):
        # Assumes the keys are (1, 1), (2, 2), (3, 3), ...
        if self.index > len(self.elements):
            raise StopIteration
        value = self.elements[(self.index, self.index)]
        self.index += 1
        return value


arr_iter = MyArrIterator()
print(next(arr_iter))
print(next(arr_iter))
print(next(arr_iter))
print(next(arr_iter))
```

Output:

```
100
200
300
Traceback (most recent call last):
  File "iter_two.py", line 22, in <module>
    print(next(arr_iter))
  File "iter_two.py", line 12, in __next__
    raise StopIteration
StopIteration
```

Once `__next__` is implemented, values can be accessed through next(). However complex the data structure is, `__next__` hides the underlying details. This is a widely used design idea. Driver design and third-party platform integration, for example, likewise hide differences behind one unified calling interface.
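The example above works only because its keys happen to be (1, 1), (2, 2), (3, 3). A key such as (1, 2) would never be returned, and the lookup would eventually fail with a KeyError. The following is a sketch of a more general variant. `GridValues` is a hypothetical class, and the sketch assumes that sorted key order is the desired traversal order. Its `__iter__` returns a map() iterator, so callers still see only values and never the tuple keys.

```python
class GridValues:
    """Hypothetical container: numbers stored under (row, col) tuple keys."""

    def __init__(self, cells):
        self._cells = dict(cells)

    def __iter__(self):
        # map() returns an iterator that yields values in sorted key order,
        # so callers never see the tuple keys or the dict behind them.
        return map(self._cells.__getitem__, sorted(self._cells))


grid = GridValues({(2, 2): 200, (1, 1): 100, (3, 3): 300, (1, 2): 150})
print(list(grid))  # [100, 150, 200, 300]
values = iter(grid)
print(next(values), next(values))  # 100 150
```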

Disadvantages and misconceptions of iterators

Disadvantages

The disadvantages of iterators were touched on above. The main ones are:

- Value access is inflexible. next() can only move forward, not backward, and unlike indexing you cannot fetch the element at a given position

- The length of an iterator generally cannot be known in advance. Values come out one at a time through next(), so the number of remaining iterations is not known beforehand, and len() does not work on iterators

- Once exhausted, an iterator cannot be reused
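The standard library softens these limits a little without removing them, as the sketch below shows. `operator.length_hint` returns an estimate of the remaining items when an object provides one, and `itertools.islice` can skip ahead, but only by consuming the skipped elements.

```python
import itertools
import operator

it = iter([10, 20, 30, 40])
print(operator.length_hint(it))  # 4: list iterators report how many items remain

# islice can skip ahead, but only by consuming (and discarding) elements
print(next(itertools.islice(it, 2, None)))  # 30
print(list(it))                              # [40]: 10, 20 and 30 are gone for good

gen = (x for x in range(5))
print(operator.length_hint(gen))  # 0: the default when an object offers no hint
```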

Misconception

With the pros and cons covered, let's look at a common misconception: the belief that iterators save memory. Plain iterators do not.

One precondition: "iterator" here means an ordinary iterator, not a generator. A generator is also a special kind of iterator, and it behaves differently.

The misconception goes like this. If you create an iterable and an iterator holding the same data, the iterator appears to use less memory than the iterable. For example:

```python
>>> arr = [1,2,3,4]
>>> arr_iter = iter([1,2,3,4])
>>>
>>> arr.__sizeof__()
72
>>> arr_iter.__sizeof__()
32
```

At first glance, the iterator really does take less memory than the iterable. But think about how the iterator is implemented: it refers to the iterable's elements. Creating the iterator arr_iter also creates the list [1, 2, 3, 4], and the iterator only holds a reference to that list. So the memory actually attributable to arr_iter is the list plus the iterator object: 72 + 32 = 104 bytes.

The 32 bytes is only the size of the iterator object itself. Essentially it stores a reference to the list and the current position, not the elements. (These `__sizeof__` values come from a 64-bit CPython build and may differ on other builds.)

A dedicated measurement of the memory used by iterators and generators appears later, with numbers to back this up.
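The sketch below assumes CPython and shows the reference relationship directly. `sys.getsizeof` is `__sizeof__` plus garbage-collector overhead, and it counts only the object itself. Deleting the name `arr` does not free the list, because the iterator still refers to it.

```python
import sys

arr = list(range(1000))
arr_iter = iter(arr)

# getsizeof counts only the object itself, not the objects it refers to
print(sys.getsizeof(arr), sys.getsizeof(arr_iter))  # exact numbers vary by build

# Delete our name for the list: the iterator still holds a reference to it,
# so the list and all its elements stay in memory while the iterator lives.
del arr
remaining = list(arr_iter)
print(len(remaining))  # 1000
```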

itertools: Python's built-in iterator toolkit

Iterators play an important role in Python, so Python ships with a module of iterator building blocks, itertools. The functions in itertools return iterators, so their values can be taken with next(). They fall into three categories: infinite iterators, finite iterators (which stop when their input runs out) and combinatoric iterators.

Infinite iterators

count(): creates an infinite iterator, like an endless list of numbers from which values can be taken

Finite iterators

chain(): chains several iterables together into one longer iterator

Combinatoric iterators

product(): produces the Cartesian product of the input iterables
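A short sketch of the three functions named above. count() never stops on its own, so the example takes only its first three values.

```python
import itertools

counter = itertools.count(start=10, step=5)  # infinite: 10, 15, 20, ...
print([next(counter) for _ in range(3)])     # [10, 15, 20]

print(list(itertools.chain([1, 2], 'ab', (3,))))  # [1, 2, 'a', 'b', 3]

print(list(itertools.product('ab', [0, 1])))
# [('a', 0), ('a', 1), ('b', 0), ('b', 1)]
```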

For more information on the use of itertools, please refer to: https://zhuanlan.zhihu.com/p/51003123

Generators

A generator is a special kind of iterator. It has all the abilities of an iterator, since it produces elements through next(). It also has a distinctive property of its own: it saves memory.

How to create a generator

There are two ways to create a generator:

- The () syntax: replace the [] of a list comprehension with () to get a generator expression

- The yield keyword: using yield turns an ordinary function into a generator function

() syntax

```python
>>> gen = (i for i in range(3))
>>> type(gen)
<class 'generator'>
>>> from collections.abc import Iterable, Iterator
>>>
>>> isinstance(gen, Iterable)
True
>>> isinstance(gen, Iterator)
True
```

```python
>>> next(gen)
0
>>> next(gen)
1
>>> next(gen)
2
>>> next(gen)
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
StopIteration
```

You can see that the generator conforms to the characteristics of the iterator.
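A sketch comparing a list comprehension with the equivalent generator expression. The list builds all of its results immediately. The generator object stays small because it computes each value only when asked, and like any iterator it can be consumed only once.

```python
import sys

squares_list = [i * i for i in range(10_000)]  # builds all 10,000 results now
squares_gen = (i * i for i in range(10_000))   # builds nothing until asked

print(sys.getsizeof(squares_list) > sys.getsizeof(squares_gen))  # True
print(sum(squares_gen) == sum(squares_list))  # True: same values
print(sum(squares_gen))  # 0: the generator was exhausted by the previous sum
```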

The yield keyword

yield is a Python keyword. Using yield inside a function turns an ordinary function into a generator function. In an ordinary function, return marks the exit point: when return runs, the function ends. When execution reaches yield, the value after yield is also handed back to the caller, but the function is only paused at that position. When the generator is resumed, execution continues from the statement right after the yield.

```python
from collections.abc import Iterable, Iterator


def fun():
    a = 1
    yield a
    b = 100
    yield b


gen_fun = fun()
print(f'gen_fun is an iterable: {isinstance(gen_fun, Iterable)}')
print(f'gen_fun is an iterator: {isinstance(gen_fun, Iterator)}')

print(next(gen_fun))
print(next(gen_fun))
print(next(gen_fun))
```

Output:

```
gen_fun is an iterable: True
gen_fun is an iterator: True
1
100
Traceback (most recent call last):
  File "gen_fun.py", line 17, in <module>
    print(next(gen_fun))
StopIteration
```

On the first next(), the function returns 1 via yield a and pauses there.

On the second next(), the function resumes from where it paused, returns 100 via yield b and pauses again. On the third next(), it resumes after yield b and reaches the end of the function body, so the generator raises StopIteration.

The magic of yield is that it remembers where execution paused and resumes from there.
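The sketch below makes this pause-and-resume behavior visible. `traced` is a hypothetical generator that records each step in a `log` list. Calling the function does not run its body at all; each next() runs the body only up to the next yield.

```python
log = []


def traced():
    log.append('start')
    yield 1
    log.append('resumed after yield 1')
    yield 2
    log.append('body finished')


gen = traced()  # calling the function only creates the generator
print(log)      # []: the body has not started yet

print(next(gen), log)  # 1 ['start']
print(next(gen), log)  # 2 ['start', 'resumed after yield 1']
print(next(gen, 'exhausted'), log)
# exhausted ['start', 'resumed after yield 1', 'body finished']
```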

Generator methods

Since a generator is a special iterator, does it have the two iterator methods? Let's look at the methods of the two generators created above.

gen

```python
>>> dir(gen)
['__class__', '__del__', '__delattr__', '__dir__', '__doc__', '__eq__', '__format__', '__ge__', '__getattribute__', '__gt__', '__hash__', '__init__', '__init_subclass__', '__iter__', '__le__', '__lt__', '__name__', '__ne__', '__new__', '__next__', '__qualname__', '__reduce__', '__reduce_ex__', '__repr__', '__setattr__', '__sizeof__', '__str__', '__subclasshook__', 'close', 'gi_code', 'gi_frame', 'gi_running', 'gi_yieldfrom', 'send', 'throw']
```

gen_fun

```python
>>> dir(gen_fun)
['__class__', '__del__', '__delattr__', '__dir__', '__doc__', '__eq__', '__format__', '__ge__', '__getattribute__', '__gt__', '__hash__', '__init__', '__init_subclass__', '__iter__', '__le__', '__lt__', '__name__', '__ne__', '__new__', '__next__', '__qualname__', '__reduce__', '__reduce_ex__', '__repr__', '__setattr__', '__sizeof__', '__str__', '__subclasshook__', 'close', 'gi_code', 'gi_frame', 'gi_running', 'gi_yieldfrom', 'send', 'throw']
```

Both generators have the iterator methods `__iter__` and `__next__`, plus generator-specific methods such as send, throw and close.

Because generators are special iterators, the Iterator type from collections.abc cannot tell a generator apart from an ordinary iterator. Use inspect.isgenerator instead:

```python
>>> from inspect import isgenerator
>>> arr_gen = (i for i in range(10))
>>> isgenerator(arr_gen)
True
>>>
>>> arr = [i for i in range(10)]
>>> isgenerator(arr)
False
```
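A few related checks from the standard library. `inspect.isgeneratorfunction` tests the function rather than the generator object. `types.GeneratorType` and the abstract base class `collections.abc.Generator` also separate a generator from a plain iterator such as a list iterator, because a list iterator lacks send, throw and close.

```python
import inspect
import types
from collections.abc import Generator, Iterator


def fun():
    yield 1


gen = fun()
list_iter = iter([1])

print(inspect.isgeneratorfunction(fun), inspect.isgenerator(gen))          # True True
print(isinstance(gen, types.GeneratorType), isinstance(gen, Generator))    # True True
print(isinstance(list_iter, Iterator), isinstance(list_iter, Generator))   # True False
print(inspect.isgenerator(list_iter))                                      # False
```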

Advantages of generators

A generator is a special iterator, and what makes it special is also its advantage: it saves memory. As the name suggests, a generator generates each value when it is requested instead of storing all of them.

How it saves memory:

Take traversing a list as an example. If the list elements can be computed by some algorithm, each subsequent element can be computed during the loop itself. There is then no need to build the complete list, which saves a lot of space.

Consider the same task done both ways: iterate over the elements of the collection [1, 2, 3, 4].

Iterator approach:

- First, create the iterable list [1, 2, 3, 4]

- Then create an iterator and take values from the iterable with next()

```python
arr = [1, 2, 3, 4]
arr_iter = iter(arr)
next(arr_iter)
next(arr_iter)
next(arr_iter)
next(arr_iter)
```

Generator approach:

- Create a generator function

- Take values with next()

```python
def fun():
    n = 1
    while n <= 4:
        yield n
        n += 1


gen_fun = fun()
print(next(gen_fun))
print(next(gen_fun))
print(next(gen_fun))
print(next(gen_fun))
```

Comparing the two approaches: the iterator needs a complete list in order to iterate, while the generator needs only a single number. With a small amount of data the advantage is invisible, but with a huge amount of data it becomes very obvious. For example, to produce 100,000 numbers, the iterator approach needs a list of 100,000 elements, while the generator keeps only one value at a time. That naturally saves memory.

A generator trades time for space. An iterator takes values from a collection that already exists in memory, so the collection occupies memory space. A generator keeps only its current state and computes each value when it is requested. It spends CPU time to save memory.

When to use generators

- The data set is huge and would consume a lot of memory

- The sequence follows a rule that cannot be expressed as a list comprehension

- Coroutines: generators and coroutines are closely related
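The Fibonacci sequence is a classic example of the second scenario. Each term depends on the two before it, and the sequence is infinite, so a list comprehension cannot express it. The sketch below keeps only the last two numbers in memory, and `itertools.islice` takes a finite slice.

```python
import itertools


def fibonacci():
    """Infinite Fibonacci sequence; only the last two numbers are kept in memory."""
    a, b = 0, 1
    while True:
        yield a
        a, b = b, a + b


print(list(itertools.islice(fibonacci(), 10)))
# [0, 1, 1, 2, 3, 5, 8, 13, 21, 34]
```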

Generators save memory, iterators don't

Practice is the sole criterion of truth. By recording changes in memory usage, we can check whether iterators or generators save memory.

Environment:

System: Linux deepin 20.2.1

Memory: 8 GB

Python version: 3.7.3

Memory monitoring tool: free -b (shows memory in bytes)

Method: build a sequence of 1,000,000 numbers (0 to 999,999) and compare memory usage before and after creating it

Iterable

```python
>>> arr = [i for i in range(1000000)]
>>> arr.__sizeof__()
8697440
```

The first free -b output below was taken before the list was created and the second one after. The same applies to the measurements that follow.

```
ljk@work:~$ free -b
              total        used        free      shared  buff/cache   available
Mem:     7978999808  1424216064  2386350080   362094592  4168433664  5884121088
Swap:             0           0           0
ljk@work:~$ free -b
              total        used        free      shared  buff/cache   available
Mem:     7978999808  1464410112  2352287744   355803136  4162301952  5850210304
Swap:             0           0           0
```

Result: memory in use increased from 1424216064 bytes to 1464410112 bytes, an increase of 38.33 MB.

Iterator

```python
>>> a = iter([i for i in range(1000000)])
>>> a.__sizeof__()
32
```

```
ljk@work:~$ free -b
              total        used        free      shared  buff/cache   available
Mem:     7978999808  1430233088  2385924096   355160064  4162842624  5885038592
Swap:             0           0           0
ljk@work:~$ free -b
              total        used        free      shared  buff/cache   available
Mem:     7978999808  1469304832  2346835968   355160064  4162859008  5845966848
Swap:             0           0           0
```

Result: memory in use increased from 1430233088 bytes to 1469304832 bytes, an increase of 37.26 MB.

Generator

```python
>>> arr = (i for i in range(1000000))
>>> arr.__sizeof__()
96
```

```
ljk@work:~$ free -b
              total        used        free      shared  buff/cache   available
Mem:     7978999808  1433968640  2373222400   362868736  4171808768  5873594368
Swap:             0           0           0
ljk@work:~$ free -b
              total        used        free      shared  buff/cache   available
Mem:     7978999808  1434963968  2378940416   356118528  4165095424  5879349248
Swap:             0           0           0
```

Result: memory in use increased from 1433968640 bytes to 1434963968 bytes, an increase of 0.9492 MB.

Summary:

| Object | System memory increase | Object size (`__sizeof__`) |
|---|---|---|
| Iterable (list) | 38.33 MB | 8.29 MB |
| Iterator | 37.26 MB | 32 B |
| Generator | 0.9492 MB | 96 B |

The experiment was repeated several times with essentially the same results. The data show that an iterator does not save memory, while a generator does. When producing 1,000,000 values, the iterator used roughly 40 times as much system memory as the generator, allowing for some measurement error.
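free -b measures the whole system, so background processes add noise. A more targeted measurement is possible, assuming CPython and the standard tracemalloc module: it counts only the Python allocations still alive after each object is built. `traced_bytes` is a hypothetical helper. The same approach also hints at why system memory grew by about 38 MB while the list object itself is only 8.29 MB. On CPython every int above 256 is a separate object, and those int objects are allocated in addition to the list's array of pointers. Exact numbers vary by Python version and platform.

```python
import tracemalloc

N = 1_000_000


def traced_bytes(build):
    """Return the bytes allocated by build() that are still alive afterwards."""
    tracemalloc.start()
    obj = build()  # keep a reference so the memory is still in use when measured
    current, _peak = tracemalloc.get_traced_memory()
    tracemalloc.stop()
    return current


list_bytes = traced_bytes(lambda: [i for i in range(N)])
iter_bytes = traced_bytes(lambda: iter([i for i in range(N)]))
gen_bytes = traced_bytes(lambda: (i for i in range(N)))

for name, size in (('list', list_bytes), ('iterator', iter_bytes), ('generator', gen_bytes)):
    print(f'{name:>9}: {size / 2**20:.4f} MB')
```

The list and the iterator should come out close to each other, because the iterator keeps the whole list alive. The generator should be several orders of magnitude smaller.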

Summary

Iterable:

Nature: a container object

Characteristics: stores a collection of elements but cannot produce values by itself; it relies on an external tool (an iterator) to iterate over them

Methods: has `__iter__`

Iterator:

Nature: a tool object

Characteristics: produces values one at a time; the values come from the collection stored in the iterable

Methods: has `__iter__` and `__next__`

Advantage: provides a general way of iterating

Generator:

Nature: a special iterator, created by a generator function or a generator expression

Characteristics: produces values one at a time, keeping only its current state and computing the next value on demand; it trades computation for memory

Methods: has `__iter__` and `__next__`

Advantage: has all the characteristics of an iterator and also saves memory

That was a lot about iterables, iterators and generators. Are you confused yet? Here is a question to test yourself: from whose point of view are the names in the Journey to the West character list at the start of this article given?

References:

https://zhuanlan.zhihu.com/p/71703028

https://blog.csdn.net/mpu_nice/article/details/107299963