Locks in Java

1 Lock interface

A lock controls how multiple threads access a shared resource. Generally, a lock prevents multiple threads from accessing the resource at the same time, although some locks, such as read-write locks, allow several threads to access it concurrently.

Before the Lock interface existed, Java programs relied on the synchronized keyword for locking. Java SE 5 added the Lock interface (and related implementation classes) to the java.util.concurrent.locks package. Lock provides synchronization similar to synchronized, but the lock must be acquired and released explicitly. It gives up the convenience of implicit acquisition and release that synchronized blocks and methods provide. In exchange, it offers capabilities that synchronized lacks:

- control over when the lock is acquired and released;
- interruptible lock acquisition;
- lock acquisition with a timeout.

The synchronized keyword acquires the lock implicitly, but it fixes the order of acquisition and release: the lock is acquired first and released afterwards, and each nested block releases its lock when it exits. This simplifies synchronization management but is less flexible than acquiring and releasing locks explicitly.

Consider a scenario in which locks are acquired and released hand over hand:

1. Acquire lock A, then lock B.
2. Once B is held, release A and acquire C.
3. Once C is held, release B and acquire D, and so on.

Such a scenario is hard to implement with synchronized but easy with Lock.
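Here is a minimal sketch of the hand-over-hand scenario. It assumes a hypothetical linked list in which every node carries its own ReentrantLock; the class names `LockedNode` and `HandOverHand` are illustrative and not from any library. The traversal locks the next node before unlocking the current one, an overlapping order that block-structured synchronized cannot express:

```java
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical list node that carries its own lock.
class LockedNode {
    final int value;
    final ReentrantLock lock = new ReentrantLock();
    LockedNode next;

    LockedNode(int value, LockedNode next) {
        this.value = value;
        this.next = next;
    }
}

class HandOverHand {
    // Sums the list while holding at most two node locks at any moment:
    // the next node is locked before the current one is released.
    static int sum(LockedNode head) {
        if (head == null) return 0;
        LockedNode current = head;
        current.lock.lock();
        int total = 0;
        try {
            while (true) {
                total += current.value;
                LockedNode next = current.next;
                if (next == null) break;
                next.lock.lock();       // acquire B while still holding A
                current.lock.unlock();  // then release A
                current = next;
            }
        } finally {
            current.lock.unlock();      // release the last lock still held
        }
        return total;
    }
}
```

With synchronized, the monitor of the current node could only be released when the block that acquired it exits. The traversal would therefore have to nest, and earlier monitors would stay held.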

Lock is also simple to use:

```java
Lock lock = new ReentrantLock();
lock.lock();
try {
    // access the shared resource
} finally {
    lock.unlock(); // release in finally so the lock is always released once it has been acquired
}
```

In ReentrantLock, lock() acquires the lock and unlock() releases it. Do not put the lock acquisition inside the try block. If acquiring the lock throws an exception (possible with a custom lock implementation), the finally block still runs, so the lock would be released for no reason even though it was never acquired.
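The sketch below makes the idiom concrete. It assumes a hypothetical `LockedCounter` class in which several threads increment a shared counter. Each call invokes lock() before the try block and unlock() in finally:

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;

class LockedCounter {
    private final Lock lock = new ReentrantLock();
    private int count;

    void increment() {
        lock.lock();           // outside try: if this throws, there is nothing to release
        try {
            count++;           // critical section
        } finally {
            lock.unlock();     // always runs once the lock is held
        }
    }

    int get() {
        lock.lock();
        try {
            return count;
        } finally {
            lock.unlock();
        }
    }

    // Starts `threads` workers that each call increment() `perThread` times.
    static int run(int threads, int perThread) {
        LockedCounter counter = new LockedCounter();
        List<Thread> workers = new ArrayList<>();
        for (int t = 0; t < threads; t++) {
            Thread w = new Thread(() -> {
                for (int i = 0; i < perThread; i++) counter.increment();
            });
            workers.add(w);
            w.start();
        }
        try {
            for (Thread w : workers) w.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while joining workers", e);
        }
        return counter.get();
    }
}
```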

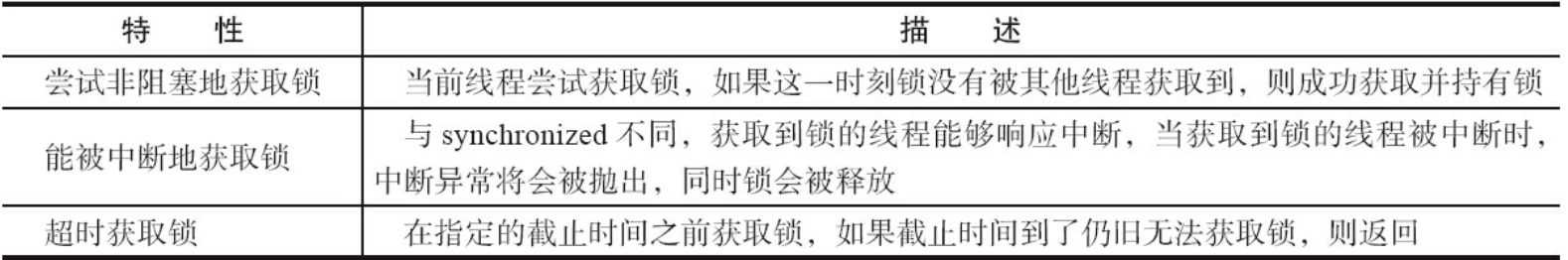

The main features that the Lock interface provides but the synchronized keyword does not are as follows:

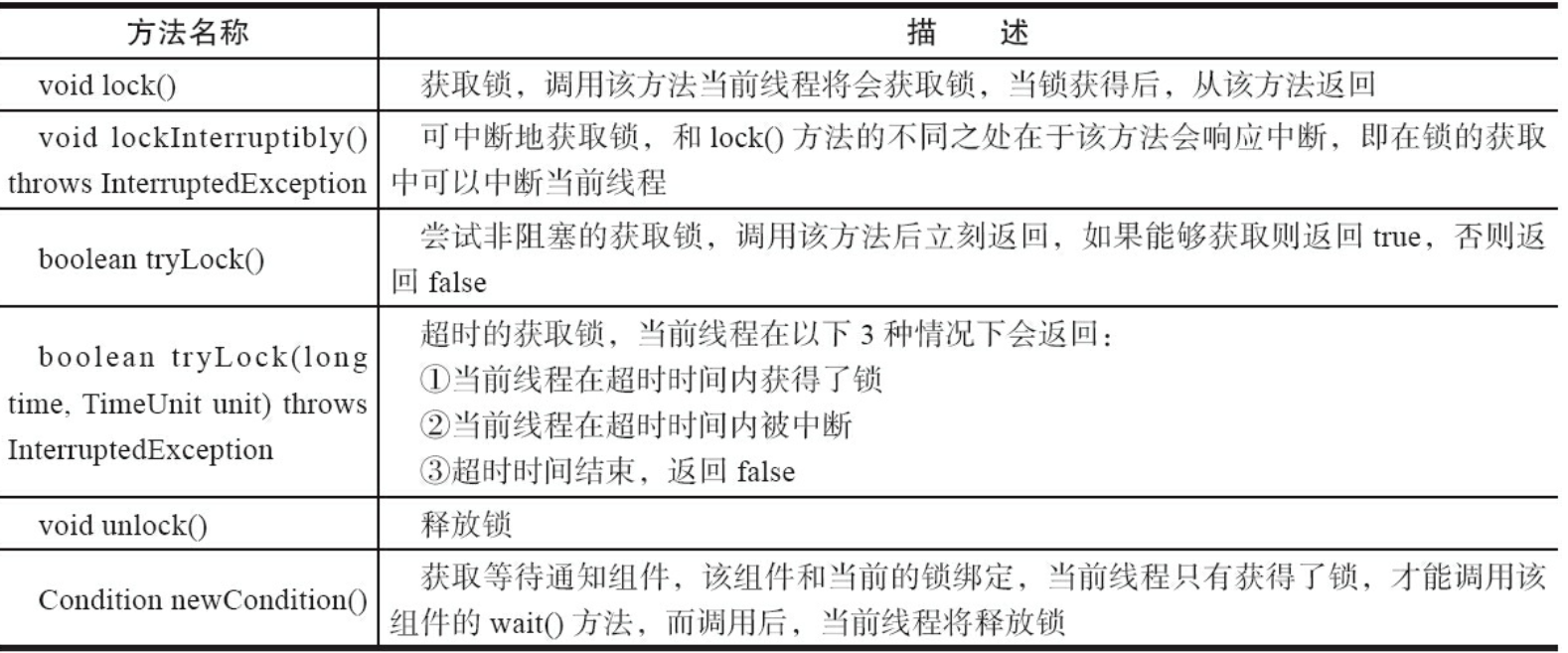
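Lock adds three acquisition modes that synchronized lacks: non-blocking, timed, and interruptible. java.util.concurrent.locks.Lock exposes them as tryLock(), tryLock(long, TimeUnit) and lockInterruptibly(). The sketch below uses a hypothetical class, `TryLockDemo`, to exercise the first two against a ReentrantLock that another thread is holding:

```java
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;

class TryLockDemo {
    // While the calling thread holds the lock, a second thread tries the
    // non-blocking and the timed variants. Returns {tryLock(), tryLock(timeout)}.
    static boolean[] contend() {
        Lock lock = new ReentrantLock();
        boolean[] results = new boolean[2];
        lock.lock();
        try {
            Thread other = new Thread(() -> {
                results[0] = lock.tryLock();              // returns false at once: lock is busy
                try {
                    // waits up to 50 ms, then gives up and returns false
                    results[1] = lock.tryLock(50, TimeUnit.MILLISECONDS);
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();   // the timed variant is also interruptible
                }
            });
            other.start();
            other.join();   // keep holding the lock until the other thread has given up
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } finally {
            lock.unlock();
        }
        return results;
    }

    // When nobody holds the lock, tryLock() succeeds immediately.
    static boolean freeLockSucceeds() {
        Lock lock = new ReentrantLock();
        if (!lock.tryLock()) return false;
        try {
            return true;
        } finally {
            lock.unlock();
        }
    }
}
```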

Lock is an interface that defines the basic operations for acquiring and releasing locks: lock(), lockInterruptibly(), tryLock(), tryLock(long time, TimeUnit unit), unlock(), and newCondition().

Implementations of the Lock interface generally control thread access by aggregating a subclass of AbstractQueuedSynchronizer.

2 Queue Synchronizer

The queued synchronizer, AbstractQueuedSynchronizer (hereafter "the synchronizer"), is the basic framework for building locks and other synchronization components. It represents the synchronization state with an int member variable and uses a built-in FIFO queue to line up the threads waiting to acquire the resource.

The synchronizer is designed to be used through inheritance: a subclass extends it and implements the methods it designates in order to manage the synchronization state. Implementing those methods inevitably involves changing the state. That should be done with the three methods the synchronizer provides: getState(), setState(int newState), and compareAndSetState(int expect, int update). These methods ensure that state changes are safe.

```java
public abstract class AbstractQueuedSynchronizer extends AbstractOwnableSynchronizer
        implements java.io.Serializable {
    // U is an internal Unsafe instance and STATE the offset of the state field;
    // their declarations are omitted from this excerpt.
    private volatile int state;

    protected final int getState() {
        return state;
    }

    protected final void setState(int newState) {
        state = newState;
    }

    protected final boolean compareAndSetState(int expect, int update) {
        return U.compareAndSwapInt(this, STATE, expect, update);
    }
}
```

Subclasses are recommended to be defined as static inner classes of the custom synchronization component. The synchronizer itself implements no synchronization interface. It only defines methods for acquiring and releasing the synchronization state, which custom synchronization components use. It supports both exclusive and shared acquisition of the synchronization state. This makes it easy to implement different kinds of synchronization components, such as ReentrantLock, ReentrantReadWriteLock, and CountDownLatch.

```java
public class ReentrantLock implements Lock, java.io.Serializable {
    private final Sync sync;

    abstract static class Sync extends AbstractQueuedSynchronizer {
        // ...
    }
    // ...
}
```

The synchronizer is the key to implementing a lock (or any synchronization component). A lock aggregates a synchronizer and uses it to implement the lock's semantics.

The relationship between the two can be understood as follows:

- The lock is user-facing. It defines the interface through which users interact with it (for example, allowing two threads to access in parallel) and hides the implementation details.
- The synchronizer is for lock implementers. It simplifies implementing a lock and hides low-level operations such as synchronization state management, thread queuing, waiting, and waking.

Locks and synchronizers thus cleanly separate the concerns of users and implementers.

2.1 Queue Synchronizer Interface and Example

When overriding the methods designated by the synchronizer, access or modify the synchronization state with these three methods it provides:

```java
public abstract class AbstractQueuedSynchronizer extends AbstractOwnableSynchronizer
        implements java.io.Serializable {
    private volatile int state;

    // 1. Get the current synchronization state
    protected final int getState() {
        return state;
    }

    // 2. Set the current synchronization state
    protected final void setState(int newState) {
        state = newState;
    }

    // 3. Set the state with CAS, which guarantees that the update is atomic
    protected final boolean compareAndSetState(int expect, int update) {
        return U.compareAndSwapInt(this, STATE, expect, update);
    }
}
```

Methods that a subclass can override:

```java
public abstract class AbstractQueuedSynchronizer extends AbstractOwnableSynchronizer
        implements java.io.Serializable {
    // 1. Acquire the synchronization state exclusively. An implementation queries the
    //    current state, checks whether it is as expected, and then sets it with CAS.
    protected boolean tryAcquire(int arg) {
        throw new UnsupportedOperationException();
    }

    // 2. Release the synchronization state exclusively; threads waiting for it
    //    then get a chance to acquire it.
    protected boolean tryRelease(int arg) {
        throw new UnsupportedOperationException();
    }

    // 3. Acquire the synchronization state in shared mode. A return value >= 0
    //    means success; a negative value means failure.
    protected int tryAcquireShared(int arg) {
        throw new UnsupportedOperationException();
    }

    // 4. Release the synchronization state in shared mode.
    protected boolean tryReleaseShared(int arg) {
        throw new UnsupportedOperationException();
    }

    // 5. Whether the synchronizer is held in exclusive mode; usually indicates
    //    whether it is held exclusively by the current thread.
    protected boolean isHeldExclusively() {
        throw new UnsupportedOperationException();
    }
}
```

When implementing a custom synchronization component, you call the template methods provided by the synchronizer:

```java
public abstract class AbstractQueuedSynchronizer extends AbstractOwnableSynchronizer
        implements java.io.Serializable {
    // 1. Acquire exclusively. Returns once the current thread obtains the state;
    //    otherwise the thread enters the synchronization queue and waits.
    //    Calls the overridden tryAcquire(int arg).
    public final void acquire(int arg) { /* ... */ }

    // 2. Like acquire(int arg), but responds to interruption: if the current thread is
    //    interrupted while waiting in the synchronization queue, it throws
    //    InterruptedException and returns.
    public final void acquireInterruptibly(int arg) throws InterruptedException { /* ... */ }

    // 3. acquireInterruptibly(int arg) plus a timeout: returns false if the state is
    //    not obtained within the timeout, true if it is.
    public final boolean tryAcquireNanos(int arg, long nanosTimeout)
            throws InterruptedException { /* ... */ }

    // 4. Acquire in shared mode, waiting in the synchronization queue on failure. Unlike
    //    exclusive acquisition, several threads can hold the state at the same time.
    public final void acquireShared(int arg) { /* ... */ }

    // 5. Like acquireShared(int arg), but responds to interruption.
    public final void acquireSharedInterruptibly(int arg) throws InterruptedException { /* ... */ }

    // 6. acquireSharedInterruptibly(int arg) plus a timeout.
    public final boolean tryAcquireSharedNanos(int arg, long nanosTimeout)
            throws InterruptedException { /* ... */ }

    // 7. Release exclusively; after releasing, wakes the thread of the first waiting
    //    node in the synchronization queue.
    public final boolean release(int arg) { /* ... */ }

    // 8. Release in shared mode.
    public final boolean releaseShared(int arg) { /* ... */ }

    // 9. Return the collection of threads waiting in the synchronization queue.
    public final Collection<Thread> getQueuedThreads() { /* ... */ }
}
```

The synchronizer provides three basic kinds of template methods:

- exclusive acquisition and release of the synchronization state;
- shared acquisition and release of the synchronization state;
- querying the threads waiting in the synchronization queue.

Custom synchronization components implement their own synchronization semantics with these template methods.

With an exclusive lock, only one thread can hold the lock at a time. Other threads trying to acquire it can only wait in the synchronization queue, and only after the holder releases the lock can a subsequent thread acquire it:

```java
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.AbstractQueuedSynchronizer;
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.Lock;

class Mutex implements Lock {
    // Mutex simply delegates its operations to Sync
    private final Sync sync = new Sync();

    // Static inner class: the custom synchronizer
    private static class Sync extends AbstractQueuedSynchronizer {
        // Whether the lock is currently held
        @Override
        protected boolean isHeldExclusively() {
            return getState() == 1;
        }

        // Acquire the lock when state is 0 (CAS 0 -> 1)
        @Override
        protected boolean tryAcquire(int arg) {
            if (compareAndSetState(0, 1)) {
                setExclusiveOwnerThread(Thread.currentThread());
                return true;
            }
            return false;
        }

        // Release the lock by setting state back to 0
        @Override
        protected boolean tryRelease(int arg) {
            if (getState() == 0)
                throw new IllegalMonitorStateException();
            setExclusiveOwnerThread(null);
            setState(0);
            return true;
        }

        // Return a Condition; each Condition has its own condition queue
        Condition newCondition() {
            return new ConditionObject();
        }
    }

    @Override
    public void lock() {
        sync.acquire(1);
    }

    @Override
    public boolean tryLock() {
        return sync.tryAcquire(1);
    }

    @Override
    public void unlock() {
        sync.release(1);
    }

    @Override
    public Condition newCondition() {
        return sync.newCondition();
    }

    public boolean isLocked() {
        return sync.isHeldExclusively();
    }

    public boolean hasQueuedThreads() {
        return sync.hasQueuedThreads();
    }

    @Override
    public void lockInterruptibly() throws InterruptedException {
        sync.acquireInterruptibly(1);
    }

    @Override
    public boolean tryLock(long time, TimeUnit unit) throws InterruptedException {
        return sync.tryAcquireNanos(1, unit.toNanos(time));
    }
}
```

In the example above, Mutex is an exclusive lock: a custom synchronization component that allows only one thread to hold the lock at a time. Mutex defines a static inner class, Sync, that extends the synchronizer and implements exclusive acquisition and release of the synchronization state. In tryAcquire(int arg), the thread obtains the synchronization state if it succeeds in setting the state from 0 to 1 with CAS. In tryRelease(int arg), the state is simply reset to 0.

Users of Mutex never deal with the synchronizer directly; they call the methods Mutex provides. For example, Mutex's lock() method simply calls the synchronizer's template method acquire(int arg). If the calling thread fails to obtain the synchronization state, it is added to the synchronization queue and waits. This greatly lowers the barrier to implementing a reliable custom synchronization component.

2.2 Queue Synchronizer Implementation Analysis

Next, we analyze how the synchronizer implements thread synchronization, covering:

- the synchronization queue;
- exclusive acquisition and release of the synchronization state;
- shared acquisition and release of the synchronization state;
- acquisition of the synchronization state with a timeout.

2.2.1 Synchronization Queue

The synchronizer relies on an internal synchronization queue (a FIFO doubly linked queue) to manage the synchronization state. When the current thread fails to acquire the synchronization state, the synchronizer wraps the thread and its wait status into a node (Node), adds the node to the synchronization queue, and blocks the thread. When the synchronization state is released, the thread in the first node is woken up and tries again to acquire the synchronization state.

A node in the synchronization queue holds a reference to a thread that failed to acquire the synchronization state, along with that thread's wait status and its predecessor and successor nodes. The node's fields and their descriptions are as follows:

```java
public abstract class AbstractQueuedSynchronizer extends AbstractOwnableSynchronizer
        implements java.io.Serializable {
    static final class Node {
        // 1. Wait status, one of the following values:
        // 1.1 CANCELLED (1): the thread waiting in the synchronization queue timed out or
        //     was interrupted, so its wait is cancelled; a node in this state never changes again.
        // 1.2 SIGNAL (-1): the successor's thread is waiting; when the current node releases
        //     the synchronization state or is cancelled, it notifies the successor so that
        //     the successor's thread can run.
        // 1.3 CONDITION (-2): the node is in the wait queue and its thread is waiting on a
        //     Condition; when another thread calls signal() on that Condition, the node is
        //     moved from the wait queue to the synchronization queue and joins the
        //     competition for the synchronization state.
        // 1.4 PROPAGATE (-3): the next shared acquisition of the synchronization state
        //     will be propagated unconditionally.
        // 1.5 INITIAL (0): the initial state.
        volatile int waitStatus;

        // 2. Predecessor node, set when the node is appended to the tail of the queue
        volatile Node prev;

        // 3. Successor node
        volatile Node next;

        // 4. Successor in the wait (condition) queue. For a shared node this field holds
        //    the SHARED constant: the node mode (exclusive or shared) and the wait-queue
        //    successor share this one field.
        Node nextWaiter;

        // 5. The thread trying to acquire the synchronization state
        volatile Thread thread;
    }
}
```

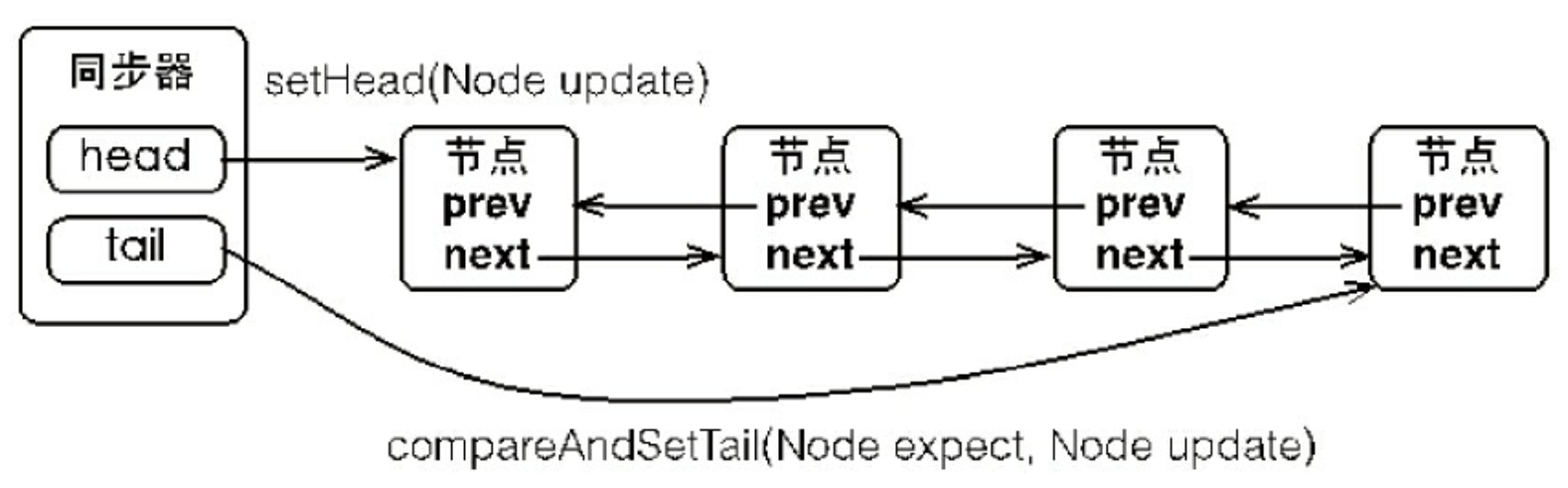

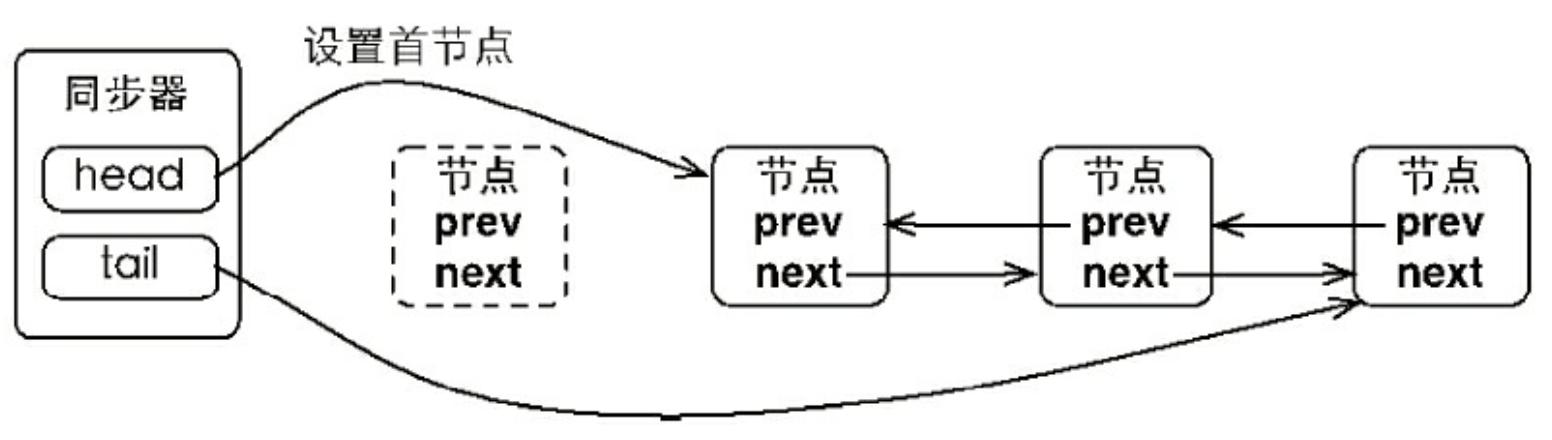

Nodes are the building blocks of the synchronization queue (wait queue). The synchronizer holds a head node and a tail node. A thread that fails to acquire the synchronization state is wrapped in a node and appended to the tail of the queue. The basic structure of the synchronization queue is as follows:

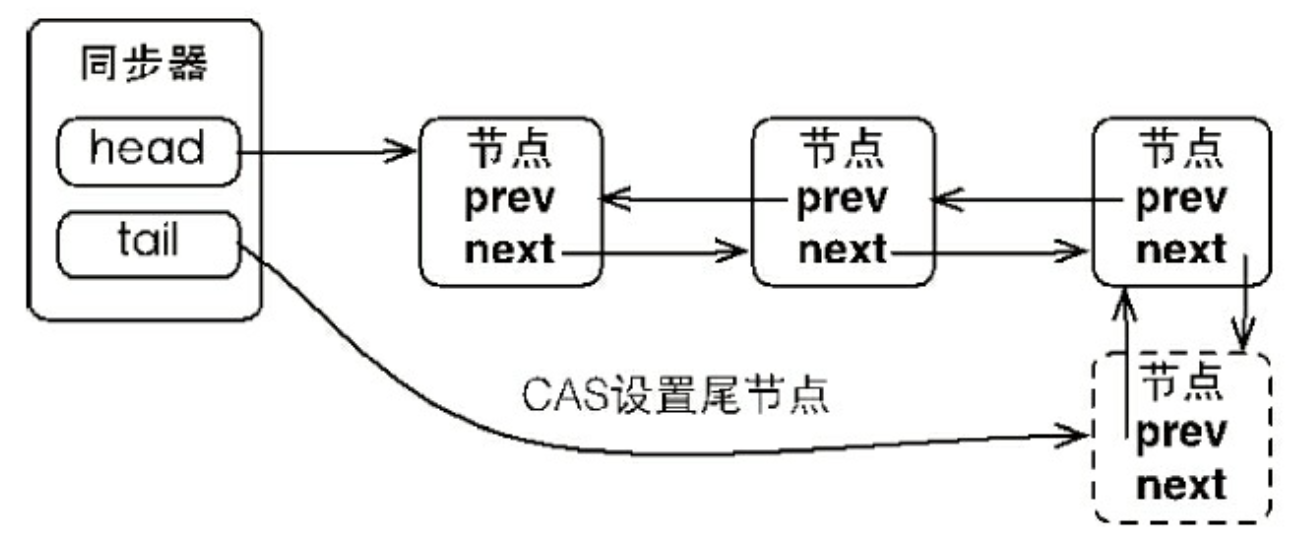

The synchronizer holds two node references, one to the head node and one to the tail node. While one thread holds the synchronization state (or lock), other threads cannot acquire it. They are instead wrapped in nodes and appended to the synchronization queue.

Enqueueing must be thread-safe, so the synchronizer provides a CAS-based method for setting the tail node: compareAndSetTail(Node expect, Node update). It takes the node the current thread "thinks" is the tail, plus the current node. Only after the CAS succeeds is the current node formally linked to the previous tail.

The process by which the synchronizer appends a node to the synchronization queue is shown in the diagram:

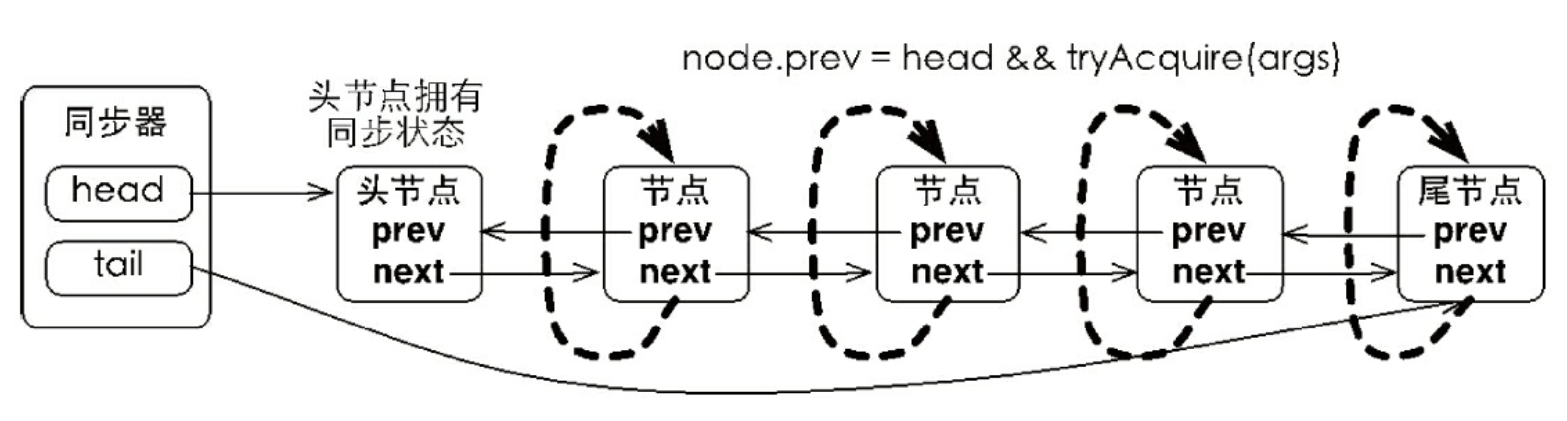

The synchronization queue follows FIFO order. The head node is the node that has successfully acquired the synchronization state. When the head node's thread releases the synchronization state, it wakes its successor. The successor sets itself as the new head once it acquires the synchronization state, as shown in the diagram:

In the figure above, the head is set by the thread that has successfully acquired the synchronization state. Since only one thread can succeed, setting the head needs no CAS. The thread simply makes the original head's successor the new head and clears the original head's next reference.

2.2.2 Exclusive Synchronization State Acquisition and Release

The synchronization state is acquired by calling the synchronizer's acquire(int arg) method. This method does not respond to interruption: once a thread has failed to acquire the synchronization state and entered the synchronization queue, interrupting the thread does not remove it from the queue. The code is as follows:

```java
public abstract class AbstractQueuedSynchronizer extends AbstractOwnableSynchronizer
        implements java.io.Serializable {
    public final void acquire(int arg) {
        if (!tryAcquire(arg) && acquireQueued(addWaiter(Node.EXCLUSIVE), arg))
            selfInterrupt();
    }
}
```

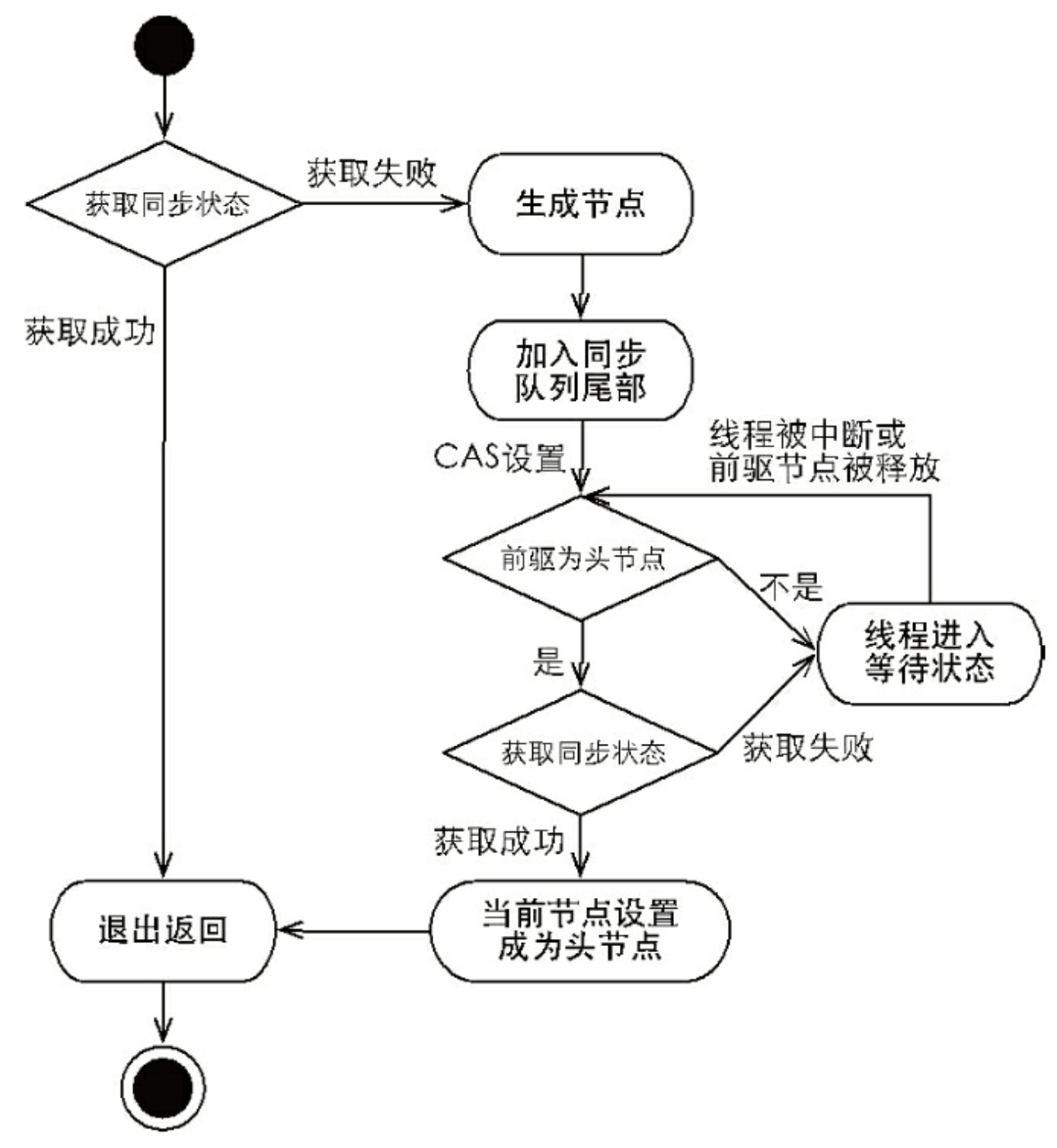

The code above handles acquisition of the synchronization state, node construction, enqueueing, and spin-waiting in the synchronization queue. Its main logic is:

1. Call the custom synchronizer's tryAcquire(int arg), which guarantees thread-safe acquisition of the synchronization state.
2. If that fails, construct a node in exclusive mode (Node.EXCLUSIVE: only one thread can hold the synchronization state at a time) and append it to the tail of the queue with addWaiter(Node mode).
3. Call acquireQueued(Node node, int arg), which makes the node try to acquire the synchronization state in an endless ("dead") loop. If it cannot, the node's thread is blocked.

A blocked thread is woken mainly when its predecessor node dequeues or when the thread itself is interrupted. If acquireQueued reports that the thread was interrupted while waiting, acquire calls selfInterrupt() to restore the interrupt status.

Let's look at these steps in turn, starting with node construction and enqueueing in the synchronizer's addWaiter method:

```java
public abstract class AbstractQueuedSynchronizer extends AbstractOwnableSynchronizer
        implements java.io.Serializable {
    private Node addWaiter(Node mode) {
        Node node = new Node(mode);
        for (;;) {
            Node oldTail = tail;
            if (oldTail != null) {
                U.putObject(node, Node.PREV, oldTail);   // node.prev = oldTail
                if (compareAndSetTail(oldTail, node)) {
                    oldTail.next = node;
                    return node;
                }
            } else {
                initializeSyncQueue();                    // lazily create the initial head/tail
            }
        }
    }

    private final boolean compareAndSetTail(Node expect, Node update) {
        return U.compareAndSwapObject(this, TAIL, expect, update);
    }
}
```

The code above uses compareAndSetTail(Node expect, Node update) to ensure that nodes are appended thread-safely. Imagine instead using an ordinary LinkedList to maintain the links between nodes. While one thread holds the synchronization state, several other threads whose calls to tryAcquire(int arg) failed would append to the LinkedList concurrently, and the list could not guarantee that the nodes are added correctly. The result could be a wrong node count and a scrambled order.

After entering the synchronization queue, a node enters a spin process in which each node (or thread) keeps checking its own condition. Once the condition is met and the node acquires the synchronization state, it exits the spin; otherwise it stays in the spin, blocking the node's thread. The code is shown below:
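The following sketch illustrates the CAS-based append outside AQS. It is a simplified, hypothetical queue (`CasQueue`, `QNode`) that uses java.util.concurrent.atomic.AtomicReference in place of the internal compareAndSetTail. Like addWaiter, it sets the new node's prev link, then CASes the tail, then fills in the old tail's next link. It is a teaching sketch, not AQS's actual code:

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicReference;

class CasQueue {
    // Hypothetical node type, loosely modeled on AQS's Node (prev/next links).
    static final class QNode {
        final int id;
        volatile QNode prev;
        volatile QNode next;
        QNode(int id) { this.id = id; }
    }

    private final QNode head = new QNode(-1);                  // dummy head node
    private final AtomicReference<QNode> tail = new AtomicReference<>(head);

    // Append like addWaiter: link prev first, CAS the tail, then set next.
    void enqueue(int id) {
        QNode node = new QNode(id);
        for (;;) {
            QNode oldTail = tail.get();
            node.prev = oldTail;
            if (tail.compareAndSet(oldTail, node)) {           // one winner per tail value
                oldTail.next = node;
                return;
            }                                                  // lost the race: retry
        }
    }

    // Count nodes by walking prev links back from the tail.
    int size() {
        int n = 0;
        for (QNode p = tail.get(); p != head; p = p.prev) n++;
        return n;
    }

    // Test driver: `threads` threads each append `perThread` nodes concurrently.
    static int concurrentAppends(int threads, int perThread) {
        CasQueue q = new CasQueue();
        List<Thread> workers = new ArrayList<>();
        for (int t = 0; t < threads; t++) {
            final int base = t * perThread;
            Thread w = new Thread(() -> {
                for (int i = 0; i < perThread; i++) q.enqueue(base + i);
            });
            workers.add(w);
            w.start();
        }
        try {
            for (Thread w : workers) w.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return q.size();
    }
}
```

Because prev is written before the CAS publishes the node, walking backwards from the tail always sees a complete chain. A next link, by contrast, may briefly be missing. This is why size() follows prev links.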

```java
public abstract class AbstractQueuedSynchronizer extends AbstractOwnableSynchronizer
        implements java.io.Serializable {
    final boolean acquireQueued(final Node node, int arg) {
        try {
            boolean interrupted = false;
            for (;;) {
                final Node p = node.predecessor();
                // Only the successor of the head may try to acquire
                if (p == head && tryAcquire(arg)) {
                    setHead(node);
                    p.next = null; // help GC
                    return interrupted;
                }
                // Otherwise decide whether to park; remember any interrupt seen while parked
                if (shouldParkAfterFailedAcquire(p, node) &&
                        parkAndCheckInterrupt())
                    interrupted = true;
            }
        } catch (Throwable t) {
            cancelAcquire(node);
            throw t;
        }
    }
}
```

In acquireQueued(final Node node, int arg), the current thread tries to acquire the synchronization state in an endless loop. However, only a node whose predecessor is the head node may try, for two reasons:

- The head node is the node that has successfully acquired the synchronization state. When the head node's thread releases the synchronization state, it wakes its successor. Once awake, the successor's thread must check whether its predecessor is the head node.

- It preserves the FIFO order of the synchronization queue.

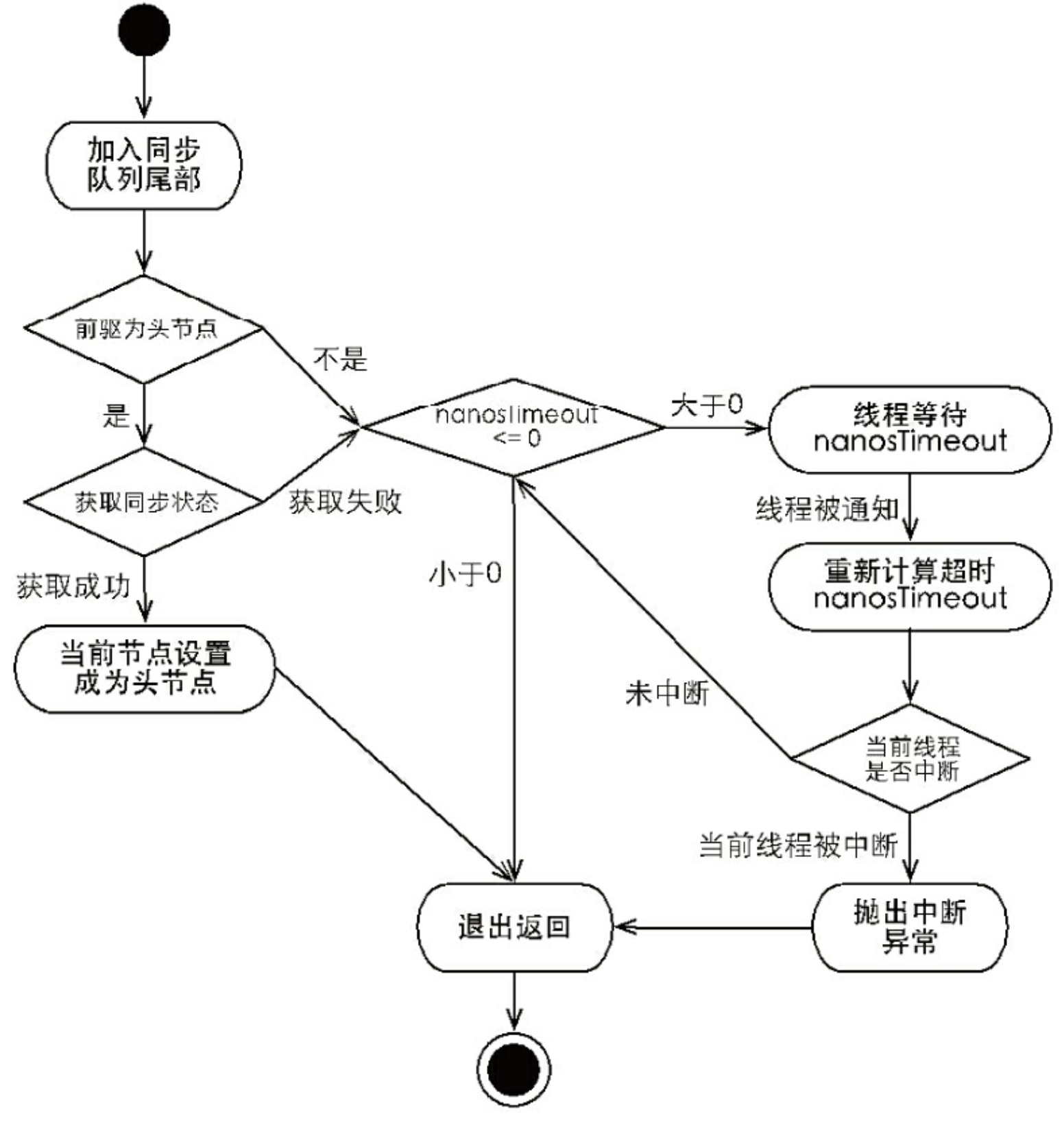

In this method, nodes spin to acquire the synchronization state as follows:

A thread whose node is not the first node returns from waiting for one of two reasons: its predecessor dequeued, or the thread was interrupted. It then checks whether its predecessor is the head node and, if so, tries to acquire the synchronization state. Nodes do not communicate with each other during this loop; each simply checks whether its own predecessor is the head. This keeps node release FIFO-compliant. It also makes premature notifications easy to handle: a premature notification occurs when a thread whose predecessor is not the head node is woken, for example by an interrupt.
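The FIFOMutex sample in the java.util.concurrent.locks.LockSupport Javadoc shows the "only the first waiter may try, otherwise park" rule in miniature, and the sketch below closely follows it. It uses a ConcurrentLinkedQueue of threads instead of AQS nodes. Like acquire(int arg), it ignores interrupts while waiting and restores the interrupt status afterwards. `FIFOMutexDemo` is a hypothetical test driver:

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.locks.LockSupport;

class FIFOMutex {
    private final AtomicBoolean locked = new AtomicBoolean(false);
    private final Queue<Thread> waiters = new ConcurrentLinkedQueue<>();

    public void lock() {
        boolean wasInterrupted = false;
        waiters.add(Thread.currentThread());         // publish this thread at the tail
        // Block while not first in the queue, or while the lock cannot be taken
        while (waiters.peek() != Thread.currentThread()
                || !locked.compareAndSet(false, true)) {
            LockSupport.park(this);
            if (Thread.interrupted())                // ignore interrupts while waiting...
                wasInterrupted = true;
        }
        waiters.remove();                            // we are first: dequeue ourselves
        if (wasInterrupted)                          // ...but restore the status on return
            Thread.currentThread().interrupt();
    }

    public void unlock() {
        locked.set(false);
        LockSupport.unpark(waiters.peek());          // wake the front waiter (null is a no-op)
    }

    static {
        // Reduce the risk of a "lost unpark" caused by class loading (as in the Javadoc sample)
        Class<?> ensureLoaded = LockSupport.class;
    }
}

class FIFOMutexDemo {
    // Workers increment a shared counter under the mutex; returns the final count.
    static int run(int threads, int perThread) {
        FIFOMutex mutex = new FIFOMutex();
        int[] counter = new int[1];
        List<Thread> workers = new ArrayList<>();
        for (int t = 0; t < threads; t++) {
            Thread w = new Thread(() -> {
                for (int i = 0; i < perThread; i++) {
                    mutex.lock();
                    try {
                        counter[0]++;
                    } finally {
                        mutex.unlock();
                    }
                }
            });
            workers.add(w);
            w.start();
        }
        try {
            for (Thread w : workers) w.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return counter[0];
    }
}
```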

The exclusive acquisition process, that is, the call flow of acquire(int arg), is shown below:

Two steps make up the spin process of acquiring the synchronization state:

- checking whether the predecessor is the head node and the synchronization state can be acquired;
- the thread entering the waiting state.

Once the synchronization state is acquired, the current thread returns from acquire(int arg). For a lock-like component, this means the current thread has acquired the lock.

After acquiring the synchronization state and executing its logic, the current thread must release the state so that subsequent nodes can acquire it. Releasing is done with the synchronizer's release(int arg) method. After releasing the state, this method wakes the successor node so that it retries acquisition. The code is as follows:

```java
public abstract class AbstractQueuedSynchronizer extends AbstractOwnableSynchronizer
        implements java.io.Serializable {
    public final boolean release(int arg) {
        if (tryRelease(arg)) {
            Node h = head;
            if (h != null && h.waitStatus != 0)
                unparkSuccessor(h);   // wake the head's successor
            return true;
        }
        return false;
    }
}
```

When this method runs, it wakes the thread of the head node's successor. unparkSuccessor(Node node) uses LockSupport to wake the waiting thread.

To summarize exclusive acquisition and release:

- During acquisition, the synchronizer maintains a synchronization queue. Threads that fail to acquire the state are appended to the queue and spin there.
- A node leaves the queue (stops spinning) when its predecessor is the head node and it successfully acquires the synchronization state.
- On release, the synchronizer calls tryRelease(int arg) to release the state and then wakes the head node's successor.
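LockSupport's permit semantics make this wake-up reliable: an unpark that happens before the target parks is not lost. The hypothetical `ParkDemo` class below shows that property. It also shows the park-in-a-loop pattern that acquireQueued uses, which is needed because park() can also return spuriously:

```java
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.locks.LockSupport;

class ParkDemo {
    // An unpark issued before park() leaves a permit behind, so the later
    // park() returns immediately instead of blocking.
    static boolean unparkBeforePark() {
        LockSupport.unpark(Thread.currentThread());
        long start = System.nanoTime();
        LockSupport.park();                       // consumes the stored permit
        return System.nanoTime() - start < 1_000_000_000L;
    }

    // A worker parks until a flag is set; this thread sets the flag and then
    // unparks it, much as release() updates state and then wakes a successor.
    static boolean wakeWorker() {
        AtomicBoolean released = new AtomicBoolean(false);
        Thread worker = new Thread(() -> {
            while (!released.get()) {
                LockSupport.park();               // may return spuriously, so re-check in a loop
            }
        });
        worker.start();
        released.set(true);                       // publish the state change first...
        LockSupport.unpark(worker);               // ...then wake the waiter
        try {
            worker.join(5_000);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return !worker.isAlive();
    }
}
```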

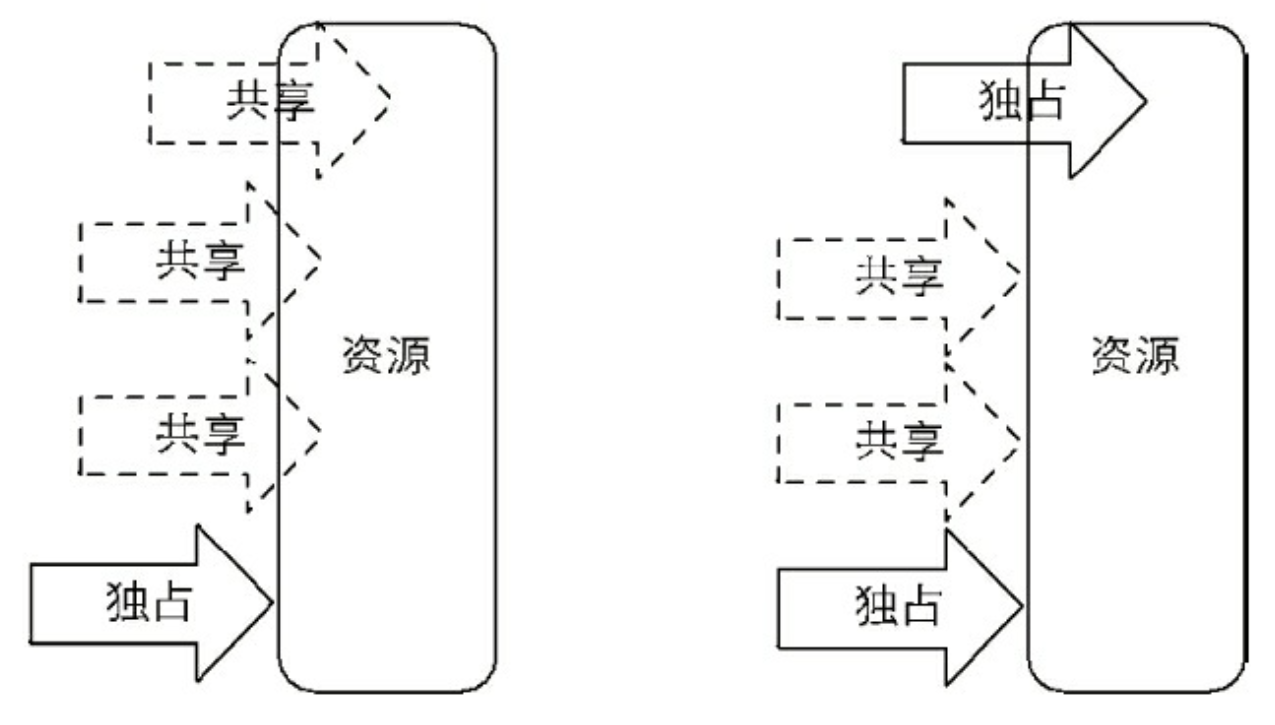

2.2.3 Shared Synchronization State Acquisition and Release

The main difference between shared and exclusive acquisition is whether multiple threads can hold the synchronization state at the same time. Take file access as an example: while a program is reading a file, writes to the file are blocked, but other reads can proceed concurrently. Writes require exclusive access to the resource, while reads can share it. The two access modes are shown in the diagram:

In the left half, shared access is allowed while exclusive access is blocked. In the right half, exclusive access blocks all other access. Shared acquisition of the synchronization state is done by calling the synchronizer's acquireShared(int arg) method:

```java
public abstract class AbstractQueuedSynchronizer extends AbstractOwnableSynchronizer
        implements java.io.Serializable {
    public final void acquireShared(int arg) {
        if (tryAcquireShared(arg) < 0)
            doAcquireShared(arg);
    }

    private void doAcquireShared(int arg) {
        final Node node = addWaiter(Node.SHARED);
        try {
            boolean interrupted = false;
            for (;;) {
                final Node p = node.predecessor();
                if (p == head) {
                    int r = tryAcquireShared(arg);
                    if (r >= 0) {
                        setHeadAndPropagate(node, r);
                        p.next = null; // help GC
                        if (interrupted)
                            selfInterrupt();
                        return;
                    }
                }
                if (shouldParkAfterFailedAcquire(p, node) && parkAndCheckInterrupt())
                    interrupted = true;
            }
        } catch (Throwable t) {
            cancelAcquire(node);
            throw t;
        }
    }
}
```

In acquireShared(int arg), the synchronizer calls tryAcquireShared(int arg) to try to acquire the synchronization state. tryAcquireShared(int arg) returns an int, and a return value greater than or equal to 0 means the synchronization state was acquired. So in shared mode, a non-negative return value from tryAcquireShared(int arg) is the condition both for success and for leaving the spin. During the spin in doAcquireShared(int arg), if the current node's predecessor is the head node, the thread tries to acquire the synchronization state. A return value greater than or equal to 0 means the acquisition succeeded and the thread exits the spin.

Like exclusive acquisition, shared acquisition also requires releasing the synchronization state, which is done by calling releaseShared(int arg):
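A tiny shared-mode synchronizer shows the tryAcquireShared contract (non-negative means acquired) most clearly. The sketch below follows the BooleanLatch sample in the AbstractQueuedSynchronizer Javadoc. Acquisition fails (returns -1) until the latch is signalled and succeeds (returns 1) afterwards, so a single releaseShared call lets every waiting thread through. `BooleanLatchDemo` is a hypothetical driver:

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.locks.AbstractQueuedSynchronizer;

class BooleanLatch {
    private static class Sync extends AbstractQueuedSynchronizer {
        boolean isSignalled() { return getState() != 0; }

        // >= 0 (success) once signalled, negative (fail and queue) before that
        @Override
        protected int tryAcquireShared(int ignore) {
            return isSignalled() ? 1 : -1;
        }

        // Flip the state to 1; returning true makes releaseShared wake queued waiters
        @Override
        protected boolean tryReleaseShared(int ignore) {
            setState(1);
            return true;
        }
    }

    private final Sync sync = new Sync();
    public boolean isSignalled() { return sync.isSignalled(); }
    public void signal() { sync.releaseShared(1); }
    public void await() throws InterruptedException { sync.acquireSharedInterruptibly(1); }
}

class BooleanLatchDemo {
    // Starts n waiters, signals once, and returns how many got through.
    static int releaseWaiters(int n) {
        BooleanLatch latch = new BooleanLatch();
        AtomicInteger passed = new AtomicInteger();
        List<Thread> waiters = new ArrayList<>();
        for (int i = 0; i < n; i++) {
            Thread t = new Thread(() -> {
                try {
                    latch.await();
                    passed.incrementAndGet();
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            waiters.add(t);
            t.start();
        }
        latch.signal();   // one shared release; the wake-up propagates to every waiter
        try {
            for (Thread t : waiters) t.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return passed.get();
    }
}
```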

```java
public abstract class AbstractQueuedSynchronizer extends AbstractOwnableSynchronizer
        implements java.io.Serializable {
    public final boolean releaseShared(int arg) {
        if (tryReleaseShared(arg)) {
            doReleaseShared();   // wake subsequent waiting nodes
            return true;
        }
        return false;
    }
}
```

This method wakes subsequent waiting nodes after releasing the synchronization state. Some components allow several threads to access a resource at once, such as Semaphore. For these, the main difference from exclusive mode is that tryReleaseShared(int arg) must release the synchronization state (or resource count) thread-safely. This is usually done with a loop plus CAS, because releases can come from several threads at the same time.
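The loop-plus-CAS release can be sketched with a hypothetical counting gate, `PermitGate`, whose state holds the number of free permits. It follows the style of Semaphore's non-fair synchronizer but is not its actual source. Both tryAcquireShared and tryReleaseShared retry their CAS until it succeeds, because several threads may change the count at once:

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.locks.AbstractQueuedSynchronizer;

// Hypothetical gate admitting at most `permits` threads at once.
class PermitGate {
    private static final class Sync extends AbstractQueuedSynchronizer {
        Sync(int permits) { setState(permits); }

        @Override
        protected int tryAcquireShared(int arg) {
            for (;;) {
                int available = getState();
                int remaining = available - arg;
                // Negative: fail and let AQS queue the thread. Otherwise CAS the new
                // count in; if another thread changed the state first, reread and retry.
                if (remaining < 0 || compareAndSetState(available, remaining))
                    return remaining;
            }
        }

        @Override
        protected boolean tryReleaseShared(int arg) {
            for (;;) {                       // concurrent releases may race with each other
                int current = getState();
                if (compareAndSetState(current, current + arg))
                    return true;             // true lets AQS wake queued waiters
            }
        }

        int available() { return getState(); }
    }

    private final Sync sync;

    PermitGate(int permits) { sync = new Sync(permits); }
    void acquire() { sync.acquireShared(1); }
    void release() { sync.releaseShared(1); }
    int available() { return sync.available(); }

    // Test driver: returns the largest number of threads observed inside the gate at once.
    static int maxConcurrent(int permits, int threads, int rounds) {
        PermitGate gate = new PermitGate(permits);
        AtomicInteger inside = new AtomicInteger();
        AtomicInteger peak = new AtomicInteger();
        List<Thread> workers = new ArrayList<>();
        for (int t = 0; t < threads; t++) {
            Thread w = new Thread(() -> {
                for (int i = 0; i < rounds; i++) {
                    gate.acquire();
                    try {
                        peak.accumulateAndGet(inside.incrementAndGet(), Math::max);
                        Thread.yield();      // widen the window for overlap
                        inside.decrementAndGet();
                    } finally {
                        gate.release();
                    }
                }
            });
            workers.add(w);
            w.start();
        }
        try {
            for (Thread w : workers) w.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return peak.get();
    }
}
```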

2.2.4 Exclusive Timed Acquisition of Synchronization State

The synchronizer's tryAcquireNanos(int arg, long nanosTimeout) method acquires the synchronization state with a timeout. When its initial tryAcquire fails, it falls back to doAcquireNanos(int arg, long nanosTimeout). It returns true if the synchronization state is acquired within the specified period, and false otherwise. Traditional Java synchronization, such as the synchronized keyword, offers no such feature.

Before analyzing this method, consider acquisition that responds to interruption. Before Java 5, a thread could be blocked on synchronized because it could not acquire the lock. Interrupting such a thread set its interrupt flag, but the thread stayed blocked on synchronized, waiting for the lock. Since Java 5, the synchronizer provides acquireInterruptibly(int arg). If the current thread is interrupted while waiting for the synchronization state, this method returns immediately by throwing InterruptedException.
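In OpenJDK, ReentrantLock.lockInterruptibly() is built on the synchronizer's acquireInterruptibly(int). The hypothetical `InterruptibleDemo` below shows the behavior just described: a thread blocked in lockInterruptibly() abandons the wait with InterruptedException when interrupted. A thread blocked on synchronized would not do this:

```java
import java.util.concurrent.locks.ReentrantLock;

class InterruptibleDemo {
    // Returns true if a thread blocked in lockInterruptibly() gave up with
    // InterruptedException after being interrupted.
    static boolean interruptWaiter() {
        ReentrantLock lock = new ReentrantLock();
        boolean[] gaveUp = new boolean[1];
        lock.lock();                                    // this thread holds the lock throughout
        try {
            Thread waiter = new Thread(() -> {
                try {
                    lock.lockInterruptibly();           // blocks: the lock is already held
                    lock.unlock();                      // not reached in this scenario
                } catch (InterruptedException e) {
                    gaveUp[0] = true;                   // acquisition abandoned
                }
            });
            waiter.start();
            // Wait until the waiter is actually queued on the lock (or has died).
            while (!lock.hasQueuedThread(waiter) && waiter.isAlive()) {
                Thread.yield();
            }
            waiter.interrupt();
            waiter.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } finally {
            lock.unlock();
        }
        return gaveUp[0];
    }
}
```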

Timed acquisition can be viewed as an "enhanced version" of interruptible acquisition. doAcquireNanos(int arg, long nanosTimeout) adds timeout support on top of responding to interruption. The key quantity is nanosTimeout, the time left to sleep. To guard against premature wake-ups, nanosTimeout is recomputed after every wake-up:

- Older JDKs used nanosTimeout -= now - lastTime, where now is the current wake-up time and lastTime is the previous one.
- The code below computes nanosTimeout = deadline - System.nanoTime(), which amounts to the same thing.

If nanosTimeout is still greater than 0, the timeout has not yet elapsed and the thread sleeps for another nanosTimeout nanoseconds; otherwise, the acquisition has timed out. The code is as follows:

```java
public abstract class AbstractQueuedSynchronizer extends AbstractOwnableSynchronizer
        implements java.io.Serializable {
    private boolean doAcquireNanos(int arg, long nanosTimeout) throws InterruptedException {
        if (nanosTimeout <= 0L) return false;
        final long deadline = System.nanoTime() + nanosTimeout;
        final Node node = addWaiter(Node.EXCLUSIVE);
        try {
            for (;;) {
                final Node p = node.predecessor();
                if (p == head && tryAcquire(arg)) {
                    setHead(node);
                    p.next = null; // help GC
                    return true;
                }
                nanosTimeout = deadline - System.nanoTime();   // remaining time
                if (nanosTimeout <= 0L) {
                    cancelAcquire(node);
                    return false;
                }
                if (shouldParkAfterFailedAcquire(p, node) &&
                        nanosTimeout > SPIN_FOR_TIMEOUT_THRESHOLD)
                    LockSupport.parkNanos(this, nanosTimeout);
                if (Thread.interrupted())
                    throw new InterruptedException();
            }
        } catch (Throwable t) {
            cancelAcquire(node);
            throw t;
        }
    }
}
```

During the spin, when the node's predecessor is the head node, the method tries to acquire the synchronization state and returns if it succeeds. This is similar to exclusive acquisition; the difference lies in what happens on failure. If the current thread fails to acquire the state, it recomputes the remaining interval nanosTimeout and checks whether it has timed out (nanosTimeout less than or equal to 0 means it has). If not, the thread waits up to nanosTimeout nanoseconds. When that time has elapsed, the thread returns from LockSupport.parkNanos(Object blocker, long nanos).

If nanosTimeout is less than or equal to SPIN_FOR_TIMEOUT_THRESHOLD (1000 nanoseconds; named spinForTimeoutThreshold in JDK 8), the thread does not park with a timeout but enters a fast spin instead. Very short timed waits cannot be made precise, and parking for such a short time would make the overall timeout behave inaccurately. So in very short timeout scenarios, the synchronizer spins unconditionally.
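The sketch below shows the same remaining-time bookkeeping outside AQS. `DeadlineWait.await` is a hypothetical helper that waits for an AtomicBoolean flag, and its threshold constant is an assumption set to the 1000 ns figure above. It follows the shape of doAcquireNanos:

- it fixes a deadline once;
- it recomputes the remaining time after every wake-up;
- it spins instead of parking once the remaining time drops to the threshold.

It models only the timing logic, not the queueing:

```java
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.locks.LockSupport;

class DeadlineWait {
    // Assumed threshold for this sketch, mirroring the 1000 ns value described above.
    static final long SPIN_THRESHOLD_NANOS = 1_000L;

    // Waits until `flag` becomes true or `timeoutNanos` elapses.
    // Returns true if the flag was observed set, false on timeout.
    static boolean await(AtomicBoolean flag, long timeoutNanos) {
        if (flag.get()) return true;
        if (timeoutNanos <= 0L) return false;
        final long deadline = System.nanoTime() + timeoutNanos;
        for (;;) {
            if (flag.get()) return true;
            long remaining = deadline - System.nanoTime();  // recomputed after every wake-up
            if (remaining <= 0L) return false;               // timed out
            if (remaining > SPIN_THRESHOLD_NANOS)
                LockSupport.parkNanos(flag, remaining);      // sleep at most the remaining time
            // otherwise loop again without parking: a very short park would be imprecise
        }
    }
}
```

A thread that sets the flag would normally also call LockSupport.unpark on the waiter so it wakes promptly. Without that, the waiter notices the flag only when its timed park expires.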

The process of obtaining synchronization with an exclusive timeout is shown in the diagram:

As the diagram shows, exclusive timed acquisition of the synchronization state, doAcquireNanos(int arg, long nanosTimeout), follows almost the same flow as exclusive acquisition, acquire(int arg). The main difference is what happens when the synchronization state is not obtained: acquire(int arg) keeps the current thread waiting until it obtains the synchronization state, whereas doAcquireNanos(int arg, long nanosTimeout) makes the current thread wait for at most nanosTimeout nanoseconds and returns automatically if the state has not been obtained within that time.
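The deadline arithmetic and the spin-versus-park decision can be illustrated outside AQS. The sketch below waits for an AtomicBoolean flag instead of a synchronization state; TimedWait, awaitFlag and SPIN_THRESHOLD_NANOS are hypothetical names, and only the 1000 ns threshold comes from the text above. Unlike doAcquireNanos, it does not queue nodes and ignores interrupts.

```java
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.locks.LockSupport;

// Hypothetical helper illustrating the deadline + spin/park pattern used by doAcquireNanos.
final class TimedWait {
    // Same value as the 1000 ns spin threshold described above (the name is our own).
    static final long SPIN_THRESHOLD_NANOS = 1000L;

    /** Returns true if the flag became true before the timeout elapsed (interrupts are ignored in this sketch). */
    static boolean awaitFlag(AtomicBoolean flag, long timeoutNanos) {
        if (timeoutNanos <= 0L) return flag.get();
        final long deadline = System.nanoTime() + timeoutNanos; // absolute deadline, computed once
        for (;;) {
            if (flag.get()) return true;                        // the "acquisition" succeeded
            long remaining = deadline - System.nanoTime();      // recompute the remaining time each round
            if (remaining <= 0L) return false;                  // timed out
            if (remaining > SPIN_THRESHOLD_NANOS) {
                // Long enough to be worth parking; may return early (unpark or spurious wakeup).
                LockSupport.parkNanos(TimedWait.class, remaining);
            }
            // Otherwise loop again immediately: the remaining wait is too short to park accurately.
        }
    }
}
```

A second thread would set the flag and call LockSupport.unpark on the waiting thread to end the wait early.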

2.2.5 Custom Synchronization Component - TwinsLock

To understand synchronizers better, let's write a custom synchronization component. The goal is a synchronization tool that allows at most two threads to access it at the same time; any additional threads are blocked. We call this tool TwinsLock.

First, determine the access mode. TwinsLock lets multiple threads access it at the same time, so it clearly uses shared access. It must therefore use the synchronizer's acquireShared(int arg) method and the other shared-mode methods, which requires TwinsLock to override tryAcquireShared(int arg) and tryReleaseShared(int arg). This ensures that the synchronizer's shared synchronization state is acquired and released correctly.

Second, define the number of resources. TwinsLock allows at most two threads to access it at the same time, so the number of synchronization resources is 2 and the initial state is set to 2. When a thread acquires, the state is decremented by 1; when a thread releases, the state is incremented by 1. The legal values of the state are therefore 0, 1 and 2, where 0 means that two threads currently hold the synchronization resource; any other thread that tries to acquire the synchronization state at that point can only be blocked. State changes must use compareAndSetState(int expect, int update) to guarantee atomicity.
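The state arithmetic just described (start at 2, subtract 1 on acquire, add 1 on release, never go below 0) can be tried without AQS. In this sketch an AtomicInteger stands in for the synchronizer's state and compareAndSet for compareAndSetState; TwoPermitState is a hypothetical name, and it models only the non-blocking tryAcquireShared/tryReleaseShared logic, not the queuing of blocked threads.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical model of TwinsLock's state: legal values are 0, 1 and 2.
final class TwoPermitState {
    private final AtomicInteger state = new AtomicInteger(2);

    /** Mirrors tryAcquireShared: returns the new count; a negative value means failure. */
    int tryAcquireShared(int reduceCount) {
        for (;;) {
            int current = state.get();
            int newCount = current - reduceCount;
            // Either fail without touching the state, or commit the decrement atomically.
            if (newCount < 0 || state.compareAndSet(current, newCount)) {
                return newCount;
            }
            // The CAS lost a race with another thread: re-read the state and retry.
        }
    }

    /** Mirrors tryReleaseShared: give the permits back with a CAS loop. */
    boolean tryReleaseShared(int returnCount) {
        for (;;) {
            int current = state.get();
            if (state.compareAndSet(current, current + returnCount)) {
                return true;
            }
        }
    }

    int state() {
        return state.get();
    }
}
```

A third acquisition returns -1 without modifying the state; in the real component that negative value is what makes acquireShared enqueue and block the caller.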

Finally, compose a custom synchronizer. A custom synchronization component achieves synchronization by composing a custom synchronizer, which is typically defined as a static nested class of the component.

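import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.AbstractQueuedSynchronizer;
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.Lock;

class TwinsLock implements Lock {

    // At most two threads may hold the lock at the same time
    private final Sync sync = new Sync(2);

    private static final class Sync extends AbstractQueuedSynchronizer {

        Sync(int count) {
            if (count <= 0) {
                throw new IllegalArgumentException("count must be larger than zero");
            }
            setState(count);
        }

        // Returns the remaining count; a negative value means the acquisition failed
        @Override
        protected int tryAcquireShared(int reduceCount) {
            for (; ; ) {
                int current = getState();
                int newCount = current - reduceCount;
                if (newCount < 0 || compareAndSetState(current, newCount)) {
                    return newCount;
                }
            }
        }

        // Returns the permits with a CAS loop; always succeeds
        @Override
        protected boolean tryReleaseShared(int returnCount) {
            for (; ; ) {
                int current = getState();
                int newCount = current + returnCount;
                if (compareAndSetState(current, newCount)) {
                    return true;
                }
            }
        }
    }

    @Override
    public void lock() {
        sync.acquireShared(1);
    }

    @Override
    public void lockInterruptibly() throws InterruptedException {
        sync.acquireSharedInterruptibly(1);
    }

    // Non-blocking attempt: succeeds only if a permit is available right now
    @Override
    public boolean tryLock() {
        return sync.tryAcquireShared(1) >= 0;
    }

    @Override
    public boolean tryLock(long time, TimeUnit unit) throws InterruptedException {
        return sync.tryAcquireSharedNanos(1, unit.toNanos(time));
    }

    @Override
    public void unlock() {
        sync.releaseShared(1);
    }

    // Conditions require exclusive ownership, which a shared lock does not have
    @Override
    public Condition newCondition() {
        throw new UnsupportedOperationException();
    }
}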

In the example above, TwinsLock implements the Lock interface and thus provides a user-facing API: the user calls lock() to acquire the lock and unlock() to release it, and at most two threads can hold the lock at the same time. TwinsLock also contains a custom synchronizer, Sync, which handles thread access and synchronization-state control. Taking shared acquisition as an example, the synchronizer first computes the new synchronization state and then uses CAS to make sure the state is set correctly. When tryAcquireShared(int reduceCount) returns a value greater than or equal to 0, the current thread has acquired the synchronization state, which at the TwinsLock level means it has acquired the lock.

The synchronizer acts as a bridge between the low-level mechanics (thread queuing and synchronization-state control) and the semantics that different concurrent components, such as Lock and CountDownLatch, expose through their interfaces.

Next, write a test to verify that TwinsLock works as expected. The test defines a worker thread, Worker, which acquires the lock, sleeps for one second while still holding it, prints the current thread's name, sleeps for another second, and then releases the lock:

class TwinsLockTest {

public static void main(String[] args) {

final Lock lock = new TwinsLock();

class Worker extends Thread {

@Override

public void run() {

while (true) {

lock.lock();

try {

sleep(1000);

System.out.println(Thread.currentThread().getName());

sleep(1000);

} catch (InterruptedException e) {

e.printStackTrace();

} finally {

lock.unlock();

}

}

}

}

for (int i = 0; i < 10; i++) {

Worker w = new Worker();

w.setDaemon(true);

w.start();

}

for (int i = 0; i < 10; i++) {

try {

Thread.sleep(1000);

System.out.println();

} catch (InterruptedException e) {

e.printStackTrace();

}

}

}

}

Running the test, you can see thread names printed in pairs, which means that only two threads hold the lock at any given time, so TwinsLock works as expected.
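Reading paired names off the console is informal. A more direct check is to record how many threads are inside the guarded section at once. The sketch below repeats a minimal shared-mode synchronizer with the same CAS logic as TwinsLock.Sync (so the block compiles on its own) and tracks the peak occupancy with an AtomicInteger; PeakCheck, TwoSlotSync and measurePeak are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.locks.AbstractQueuedSynchronizer;

final class PeakCheck {
    // Same shared-mode logic as TwinsLock.Sync, repeated so this block is self-contained.
    static final class TwoSlotSync extends AbstractQueuedSynchronizer {
        TwoSlotSync() { setState(2); }

        @Override
        protected int tryAcquireShared(int n) {
            for (;;) {
                int current = getState();
                int next = current - n;
                if (next < 0 || compareAndSetState(current, next)) return next;
            }
        }

        @Override
        protected boolean tryReleaseShared(int n) {
            for (;;) {
                int current = getState();
                if (compareAndSetState(current, current + n)) return true;
            }
        }
    }

    /** Runs the workers and returns the highest number of threads seen inside the section at once. */
    static int measurePeak(int threads, int rounds) throws InterruptedException {
        TwoSlotSync sync = new TwoSlotSync();
        AtomicInteger inside = new AtomicInteger();
        AtomicInteger peak = new AtomicInteger();
        List<Thread> workers = new ArrayList<>();
        for (int i = 0; i < threads; i++) {
            Thread t = new Thread(() -> {
                for (int r = 0; r < rounds; r++) {
                    sync.acquireShared(1);
                    try {
                        int now = inside.incrementAndGet();
                        peak.accumulateAndGet(now, Math::max); // remember the highest occupancy
                        Thread.yield();                        // give other threads a chance to overlap
                    } finally {
                        inside.decrementAndGet();
                        sync.releaseShared(1);
                    }
                }
            });
            workers.add(t);
            t.start();
        }
        for (Thread t : workers) t.join();
        return peak.get();
    }
}
```

With eight workers the peak should never exceed 2; it will usually reach 2, but that depends on scheduling.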

3 Reentrant Lock

ReentrantLock, as its name implies, is a reentrant lock: a thread that already holds it can lock the resource again. In addition, it lets you choose between fair and unfair lock acquisition.

Recall the Mutex example from the synchronizer section and consider this scenario: a thread calls Mutex's lock() method to acquire the lock and then calls lock() again. The thread blocks itself, because Mutex's tryAcquire(int acquires) does not handle the case where the thread that already holds the lock acquires it again; it simply returns false, so the thread is blocked. In short, Mutex is not reentrant. The **synchronized keyword implicitly supports reentry**: in a recursive method marked synchronized, the executing thread can acquire the lock again and again after acquiring it once, whereas with Mutex the second acquisition blocks.

ReentrantLock does not reenter implicitly the way synchronized does, but a thread that already holds it can call lock() again and acquire it without being blocked.

This raises the question of fairness in lock acquisition. If the request that was made first (in absolute time) is always satisfied first, the lock is fair; otherwise it is unfair. Fair acquisition means that the thread that has waited longest gets the lock first, that is, locks are granted in order. ReentrantLock provides a constructor, ReentrantLock(boolean fair), that controls whether the lock is fair.

In practice, fair locks are usually less efficient than unfair ones, but throughput (TPS) is not the only metric in every scenario. Fair locks reduce the likelihood of starvation: the longer a request has waited, the sooner it is satisfied.

The following will focus on how ReentrantLock implements reentrance and fair acquisition of locks, and test the impact of fair acquisition of locks on performance.

3.1 Implementing Reentry

Reentry means that a thread that holds a lock can acquire it again without being blocked by it. Implementing this requires solving two problems:

- Reacquisition by the owner. The lock must check whether the requesting thread is the thread that currently holds it; if so, the acquisition succeeds again.

- Final release. If a thread acquires the lock n times, other threads can acquire it only after the thread has released it n times. This requires the lock to count acquisitions: the count is incremented on each acquisition and decremented on each release, and the lock is fully released when the count reaches 0.
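Both requirements can be met with a small exclusive synchronizer: record the owner thread on the first acquisition and use the state as the hold count. The sketch below is a simplified, unfair reentrant mutex built on AbstractQueuedSynchronizer, not ReentrantLock's actual source; SimpleReentrantMutex and its methods are hypothetical, and the overflow check is omitted.

```java
import java.util.concurrent.locks.AbstractQueuedSynchronizer;

// Hypothetical simplified reentrant mutex; state = number of times the owner has acquired it.
final class SimpleReentrantMutex {
    private static final class Sync extends AbstractQueuedSynchronizer {
        @Override
        protected boolean tryAcquire(int acquires) {
            Thread current = Thread.currentThread();
            int c = getState();
            if (c == 0) {
                // Free: claim it with a CAS and remember the owner.
                if (compareAndSetState(0, acquires)) {
                    setExclusiveOwnerThread(current);
                    return true;
                }
            } else if (current == getExclusiveOwnerThread()) {
                // Requirement 1: the owner re-enters by bumping the count (only the owner writes here).
                setState(c + acquires);
                return true;
            }
            return false;
        }

        @Override
        protected boolean tryRelease(int releases) {
            if (Thread.currentThread() != getExclusiveOwnerThread()) {
                throw new IllegalMonitorStateException();
            }
            int c = getState() - releases;
            boolean free = (c == 0);            // Requirement 2: only the last release frees the lock
            if (free) setExclusiveOwnerThread(null);
            setState(c);
            return free;
        }

        @Override
        protected boolean isHeldExclusively() {
            return getExclusiveOwnerThread() == Thread.currentThread();
        }

        int holds() {
            return isHeldExclusively() ? getState() : 0;
        }
    }

    private final Sync sync = new Sync();

    void lock()       { sync.acquire(1); }
    boolean tryLock() { return sync.tryAcquire(1); }
    void unlock()     { sync.release(1); }
    int holdCount()   { return sync.holds(); }
}
```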

ReentrantLock implements acquisition and release by composing a custom synchronizer. Taking the unfair (default) implementation as an example, the code that acquires the synchronization state is as follows:

public class ReentrantLock implements Lock, java.io.Serializable {

final boolean nonfairTryAcquire(int acquires) {

final Thread current = Thread.currentThread();

int c = getState();

if (c == 0) {

if (compareAndSetState(0, acquires)) {

setExclusiveOwnerThread(current);

return true;

}

}

else if (current == getExclusiveOwnerThread()) {

int nextc = c + acquires;

if (nextc < 0) // overflow

throw new Error("Maximum lock count exceeded");

setState(nextc);

return true;

}

return false;

}

}

Compared with a non-reentrant lock, this method adds one branch: it checks whether the current thread is the thread that holds the lock. If the owner requests the lock again, the method increments the synchronization state and returns true, meaning the acquisition succeeded.

Because a reacquisition by the owner only increases the synchronization state, ReentrantLock must decrease the state correspondingly when releasing it, as shown in the code below:

public class ReentrantLock implements Lock, java.io.Serializable {

abstract static class Sync extends AbstractQueuedSynchronizer {

protected final boolean tryRelease(int releases) {

int c = getState() - releases;

if (Thread.currentThread() != getExclusiveOwnerThread())

throw new IllegalMonitorStateException();

boolean free = false;

if (c == 0) {

free = true;

setExclusiveOwnerThread(null);

}

setState(c);

return free;

}

}

}

If the lock has been acquired n times, the first (n-1) calls to tryRelease(int releases) must return false; only the call that fully releases the synchronization state may return true. The method uses a synchronization state of 0 as the condition for the final release: when the state reaches 0, it sets the owner thread to null and returns true to indicate that the lock has been released.
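The effect is visible through ReentrantLock's public monitoring method isLocked(): the lock stays held until the number of unlock() calls matches the number of lock() calls. A minimal sketch; HoldCountDemo and lockedAfterEachUnlock are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.locks.ReentrantLock;

final class HoldCountDemo {
    /** Locks n times, then records isLocked() after each unlock. */
    static List<Boolean> lockedAfterEachUnlock(int n) {
        ReentrantLock lock = new ReentrantLock();
        for (int i = 0; i < n; i++) lock.lock();   // hold count (the synchronization state) becomes n
        List<Boolean> states = new ArrayList<>();
        for (int i = 0; i < n; i++) {
            lock.unlock();                         // tryRelease returns false until the count reaches 0
            states.add(lock.isLocked());
        }
        return states;
    }
}
```

For n = 3 the result is [true, true, false]: only the third unlock() frees the lock. ReentrantLock.getHoldCount() would report 2, 1 and 0 at the same points.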

3.2 Differences between fair and unfair lock acquisition

Fairness concerns lock acquisition. If a lock is fair, the order in which it is granted should match the absolute time order of the requests, that is, FIFO.

In nonfairTryAcquire(int acquires), as long as the CAS sets the synchronization state successfully, the current thread acquires the lock. A fair lock is different, as the following code shows:

public class ReentrantLock implements Lock, java.io.Serializable {

static final class FairSync extends Sync {

protected final boolean tryAcquire(int acquires) {

final Thread current = Thread.currentThread();

int c = getState();

if (c == 0) {

if (!hasQueuedPredecessors() &&

compareAndSetState(0, acquires)) {

setExclusiveOwnerThread(current);

return true;

}

}

else if (current == getExclusiveOwnerThread()) {

int nextc = c + acquires;

if (nextc < 0)

throw new Error("Maximum lock count exceeded");

setState(nextc);

return true;

}

return false;

}

}

}

Compared with nonfairTryAcquire(int acquires), the only difference is the additional call to hasQueuedPredecessors(), which checks whether the current thread's node has a predecessor in the synchronization queue. If it returns true, another thread requested the lock earlier than the current thread, so the current thread must wait for that thread to acquire and release the lock before it can acquire it.
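With a fair ReentrantLock, threads that are already queued receive the lock in arrival order. The sketch below makes the arrival order deterministic by holding the lock while starting the workers one at a time, waiting (via getQueueLength()) until each has queued; FairOrderDemo and acquisitionOrder are hypothetical names, and the approach assumes no other code touches the lock.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.locks.ReentrantLock;

final class FairOrderDemo {
    static List<Integer> acquisitionOrder(int workers) throws InterruptedException {
        ReentrantLock lock = new ReentrantLock(true);   // fair mode
        List<Integer> order = new ArrayList<>();        // only modified while holding the lock
        List<Thread> threads = new ArrayList<>();
        lock.lock();                                     // hold the lock so every worker has to queue
        try {
            for (int i = 0; i < workers; i++) {
                final int id = i;
                Thread t = new Thread(() -> {
                    lock.lock();
                    try {
                        order.add(id);
                    } finally {
                        lock.unlock();
                    }
                });
                threads.add(t);
                t.start();
                // Wait until worker i is actually enqueued before starting worker i + 1.
                while (lock.getQueueLength() < i + 1) {
                    Thread.sleep(1);
                }
            }
        } finally {
            lock.unlock();                               // queued workers now acquire in FIFO order
        }
        for (Thread t : threads) t.join();
        return order;                                    // join() makes the list contents visible here
    }
}
```

Because no running thread competes with the queued workers in this setup, an unfair lock would likely produce the same order; the difference appears when a thread that has just released the lock immediately requests it again.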

Next, write a test to observe how fair and unfair locks differ when acquiring the lock. The test defines a nested class, ReentrantLock2, whose main purpose is to make getQueuedThreads() public; that method returns the threads waiting to acquire the lock. ReentrantLock builds this list starting from the tail of the queue (the JDK documents no particular order), so ReentrantLock2 reverses it to make the output easier to read. The code is as follows:

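import java.util.ArrayList;
import java.util.Collection;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;
import java.util.stream.Collectors;

class FairAndUnfairTest {

    private static final Lock fairLock = new ReentrantLock2(true);
    private static final Lock unfairLock = new ReentrantLock2(false);

    public static void main(String[] args) throws InterruptedException {
        System.out.println("fair:");
        testLock(fairLock);
        System.out.println("unfair:");
        testLock(unfairLock);
    }

    // Start five jobs named 0..4 and wait for all of them before returning
    private static void testLock(Lock lock) throws InterruptedException {
        List<Thread> jobs = new ArrayList<>();
        for (int i = 0; i < 5; i++) {
            Job job = new Job(lock);
            job.setName(String.valueOf(i));
            jobs.add(job);
            job.start();
        }
        for (Thread job : jobs) {
            job.join();
        }
    }

    private static class Job extends Thread {
        private final Lock lock;

        public Job(Lock lock) {
            this.lock = lock;
        }

        @Override
        public void run() {
            // Print the current thread and the threads in the waiting queue, twice in a row
            for (int i = 0; i < 2; i++) {
                lock.lock();
                try {
                    String waiting = ((ReentrantLock2) lock).getQueuedThreads().stream()
                            .map(Thread::getName)
                            .collect(Collectors.joining(", ", "[", "]"));
                    System.out.println("Locked by " + getName() + ", waiting by " + waiting);
                } finally {
                    lock.unlock();
                }
            }
        }
    }

    private static class ReentrantLock2 extends ReentrantLock {
        public ReentrantLock2(boolean fair) {
            super(fair);
        }

        // Make the protected getQueuedThreads() public, reversed so the longest waiter comes first
        @Override
        public Collection<Thread> getQueuedThreads() {
            List<Thread> arrayList = new ArrayList<>(super.getQueuedThreads());
            Collections.reverse(arrayList);
            return arrayList;
        }
    }
}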

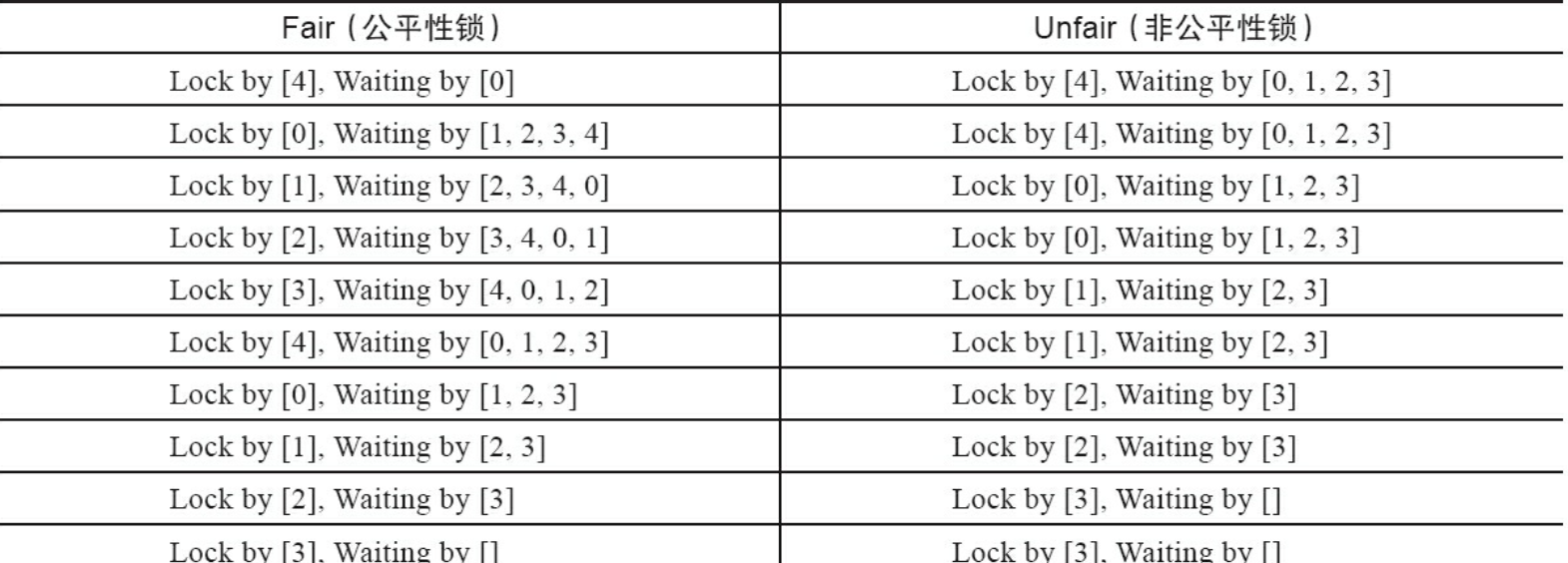

Running the test with the fair lock and with the unfair lock produces the following output:

As the results show (each number represents a thread), the fair lock is granted each time to the first node in the synchronization queue, whereas with the unfair lock the same thread often acquires the lock several times in a row.

Why does the same thread acquire the lock repeatedly? Recall nonfairTryAcquire(int acquires): when a thread requests the lock, it succeeds as soon as it manages to set the synchronization state. Under this rule, the thread that has just released the lock has a very good chance of obtaining the synchronization state again, leaving the other threads waiting in the synchronization queue.

Unfair locks can starve threads, so why are they the default? Look at the results again: if each change of the lock to a different thread counts as one switch, the fair lock switched 10 times in the test while the unfair lock switched only 5 times, which means the unfair lock has lower overhead.
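The "switch" counted above (the lock passing to a different thread than the previous holder) can be measured directly. The sketch below has several threads each acquire the lock a fixed number of times and counts how often the holder changes; HandoffCounter and run are hypothetical names. Only the totals are deterministic: the switch counts vary with the JVM, the OS scheduler and the hardware.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.locks.ReentrantLock;

final class HandoffCounter {
    /** Returns {totalAcquisitions, switches} for one run. */
    static long[] run(boolean fair, int threads, int acquisitionsPerThread) throws InterruptedException {
        ReentrantLock lock = new ReentrantLock(fair);
        long[] stats = new long[2];      // guarded by lock: [0] acquisitions, [1] holder switches
        Thread[] last = new Thread[1];   // guarded by lock: previous holder
        List<Thread> workers = new ArrayList<>();
        for (int i = 0; i < threads; i++) {
            workers.add(new Thread(() -> {
                for (int k = 0; k < acquisitionsPerThread; k++) {
                    lock.lock();
                    try {
                        Thread me = Thread.currentThread();
                        if (last[0] != null && last[0] != me) stats[1]++; // the holder changed
                        last[0] = me;
                        stats[0]++;
                    } finally {
                        lock.unlock();
                    }
                }
            }));
        }
        for (Thread t : workers) t.start();
        for (Thread t : workers) t.join();
        return stats;                    // safe to read: join() follows each worker's last unlock
    }
}
```

On a multi-core machine one would expect the fair run to report far more switches than the unfair run, in line with the context-switch measurement below.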

The following test case was run (test environment: Ubuntu Server 14.04, i5-3470, 8 GB RAM; scenario: 10 threads, each acquiring the lock 100,000 times), and vmstat was used to count system context switches during the run. The results are as follows:

In the test, the fair lock took 94.3 times as long in total as the unfair lock and caused 133 times as many context switches. Fair locks guarantee FIFO acquisition at the cost of a large number of thread switches, whereas unfair locks may starve threads but incur very few switches and therefore deliver higher throughput.

4 Read-write lock

As mentioned earlier, locks such as Mutex and ReentrantLock are exclusive locks: only one thread can hold them at a time. A read-write lock allows multiple reader threads to access at the same time, but when a writer thread accesses, all reader threads and other writer threads are blocked. A read-write lock maintains a pair of locks, a read lock and a write lock; separating the two greatly improves concurrency compared with ordinary exclusive locks.
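These rules can be observed with ReentrantReadWriteLock and tryLock(), which fails instead of blocking. In the sketch, the calling thread holds the read lock while a second thread probes both locks; RwProbe and probeWhileReading are hypothetical names.

```java
import java.util.concurrent.locks.ReentrantReadWriteLock;

final class RwProbe {
    /** Returns {otherCanRead, otherCanWrite} while the caller holds the read lock. */
    static boolean[] probeWhileReading() throws InterruptedException {
        ReentrantReadWriteLock rw = new ReentrantReadWriteLock();
        boolean[] result = new boolean[2];
        rw.readLock().lock();                              // this thread is a reader
        try {
            Thread other = new Thread(() -> {
                boolean read = rw.readLock().tryLock();    // a second reader: allowed
                if (read) rw.readLock().unlock();
                boolean write = rw.writeLock().tryLock();  // a writer: refused while a reader is active
                if (write) rw.writeLock().unlock();
                result[0] = read;
                result[1] = write;
            });
            other.start();
            other.join();
        } finally {
            rw.readLock().unlock();
        }
        return result;
    }
}
```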

Besides guaranteeing that write operations are visible to read operations and improving read concurrency, read-write locks simplify programming for scenarios where reads and writes interact. Suppose a program defines a shared cache that serves reads (such as queries and searches) most of the time, while writes take up very little time, yet the result of each write must be visible to subsequent reads.

Without read-write locks (before Java 5), this had to be done with Java's wait/notify mechanism: when a write starts, every read that arrives after it enters a wait state, and only after the write completes and sends a notification can the waiting reads proceed (writes are synchronized with one another using synchronized). This ensures that reads see correct data and never perform dirty reads. With a read-write lock, a read operation acquires only the read lock and a write operation acquires the write lock. Once the write lock is acquired, subsequent read and write operations by other threads are blocked, and when the write lock is released they all continue. This is much simpler to program than the wait/notify approach.

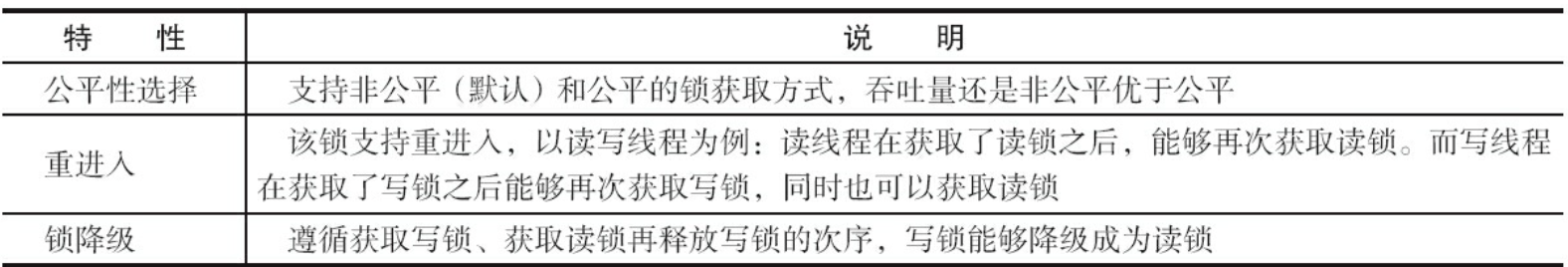

In general, read-write locks perform better than exclusive locks because most workloads read far more than they write; in read-heavy scenarios they provide better concurrency and throughput. The Java concurrency package provides a read-write lock implementation, ReentrantReadWriteLock, with the following features:

4.1 Interfaces and examples of read-write locks

ReadWriteLock defines only two methods, readLock() and writeLock(), which return the read lock and the write lock. Its implementation, ReentrantReadWriteLock, also provides methods for monitoring its internal state from outside; they are shown below:

public class ReentrantReadWriteLock implements ReadWriteLock, java.io.Serializable {

// Returns the number of times the current read lock has been acquired. This number is not equal to the number of threads acquiring read locks. For example, if only one thread continuously acquires (re-enters) n read locks, then the number of threads occupying the read lock is 1, but the method returns n

public int getReadLockCount() {

return sync.getReadLockCount();

}

// Returns the number of times the current thread has acquired the read lock. This method was added to ReentrantReadWriteLock in Java 6; it uses a ThreadLocal to store each thread's acquisition count, which makes the implementation since Java 6 more complex

public int getReadHoldCount() {

return sync.getReadHoldCount();

}

// Returns whether the write lock is held by any thread

public boolean isWriteLocked() {

return sync.isWriteLocked();

}

// Returns the number of times the current write lock was acquired

public int getWriteHoldCount() {

return sync.getWriteHoldCount();

}

}
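The difference between getReadLockCount() and getReadHoldCount() shows up when one thread re-enters the read lock and another thread takes it once. A minimal sketch; ReadCountDemo and counts are hypothetical names.

```java
import java.util.concurrent.locks.ReentrantReadWriteLock;

final class ReadCountDemo {
    /** Returns {readLockCount, callerHoldCount, otherHoldCount} measured while both threads hold read locks. */
    static int[] counts() throws InterruptedException {
        ReentrantReadWriteLock rw = new ReentrantReadWriteLock();
        int[] result = new int[3];
        for (int i = 0; i < 3; i++) rw.readLock().lock();  // the caller re-enters the read lock 3 times
        try {
            Thread other = new Thread(() -> {
                rw.readLock().lock();                       // a second thread acquires it once
                try {
                    result[0] = rw.getReadLockCount();      // all acquisitions by all threads: 3 + 1
                    result[2] = rw.getReadHoldCount();      // this thread's own acquisitions: 1
                } finally {
                    rw.readLock().unlock();
                }
            });
            other.start();
            other.join();
            result[1] = rw.getReadHoldCount();              // the caller's own acquisitions: 3
        } finally {
            for (int i = 0; i < 3; i++) rw.readLock().unlock();
        }
        return result;
    }
}
```

The result is {4, 3, 1}: getReadLockCount() counts acquisitions across all threads, while getReadHoldCount() is per thread.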

Next, an example cache illustrates how read-write locks are used. The code is as follows:

class Cache {

static Map<String, Object> map = new HashMap<>();

static ReentrantReadWriteLock rw1 = new ReentrantReadWriteLock();

static Lock r = rw1.readLock();

static Lock w = rw1.writeLock();

public static final Object get(String key) {

r.lock();

try {

return map.get(key);

} finally {

r.unlock();

}

}

public static final Object put(String key, Object value) {

w.lock();

try {

return map.put(key, value);

} finally {

w.unlock();

}

}

public static final void clear() {

w.lock();

try {

map.clear();

} finally {

w.unlock();

}

}

}

In the example above, Cache wraps a non-thread-safe HashMap as the cache's storage and uses a read lock and a write lock to make Cache thread-safe. The read operation get(String key) acquires the read lock, so concurrent calls to it do not block one another. The write operations put(String key, Object value) and clear() must acquire the write lock before updating the HashMap. While the write lock is held, other threads' attempts to acquire either the read lock or the write lock are blocked, and other read and write operations can continue only after the write lock is released. Cache thus uses a read-write lock to improve the concurrency of reads, to make every write visible to all subsequent reads and writes, and to simplify programming.

4.2 Analysis of the Read-Write Lock Implementation

Next, we analyze the implementation of ReentrantReadWriteLock: the design of the read-write state, acquisition and release of the write lock, acquisition and release of the read lock, and lock degradation.

4.2.1 Design of the Read-Write State

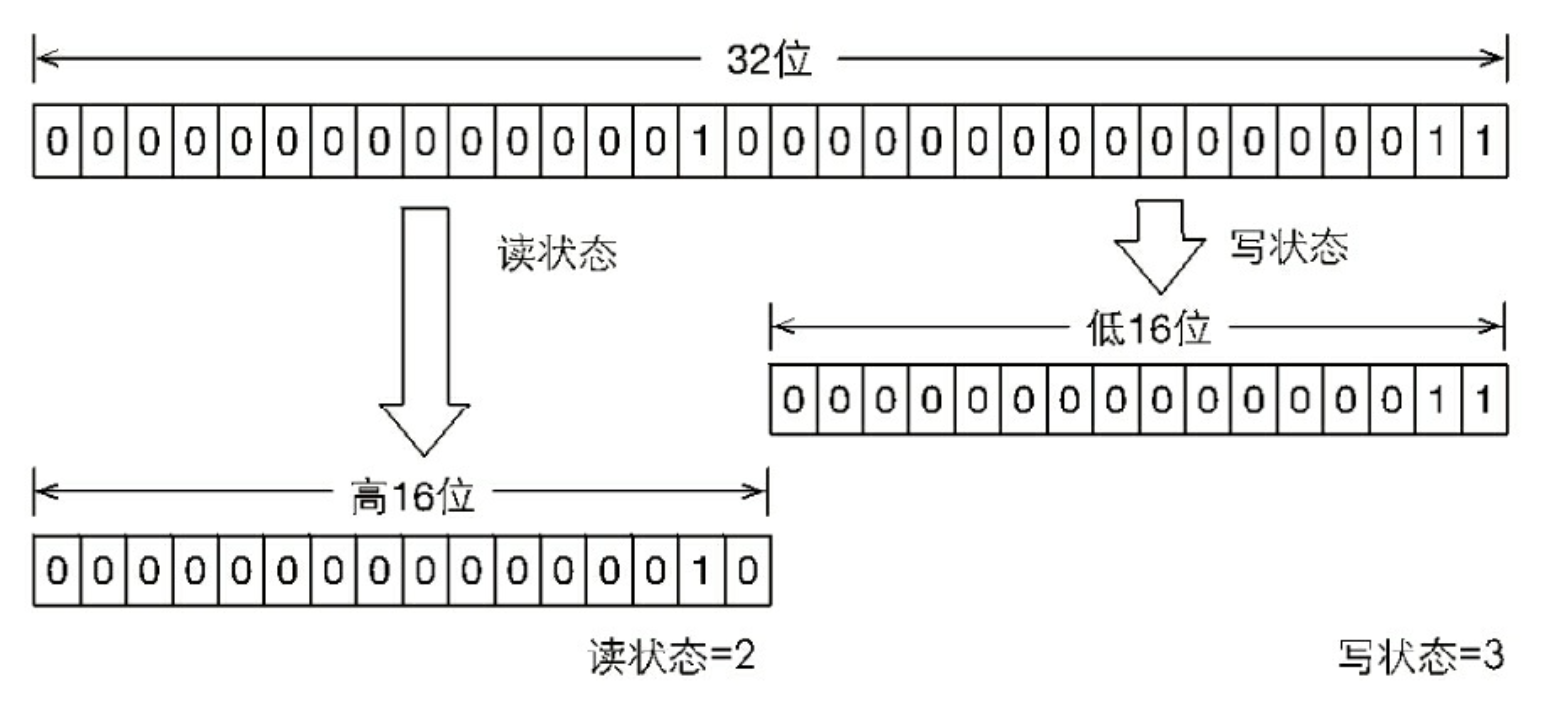

A read-write lock also relies on a custom synchronizer, and the read-write state is that synchronizer's synchronization state. Recall that in ReentrantLock's custom synchronizer the state counts how many times a thread has reacquired the lock. The read-write lock's synchronizer must instead keep the states of multiple readers and one writer in a single integer variable, which makes the design of this state the key to the implementation.

To maintain multiple states in one integer variable, the variable must be split into bit fields. The read-write lock splits it into two parts: the high 16 bits represent the read state and the low 16 bits represent the write state, as shown in the figure:

The state shown in the figure indicates that a thread has acquired the write lock and re-entered it twice, and has also acquired the read lock twice in a row. How does the lock determine the read and write states quickly? Through bitwise operations. Let the current synchronization state be S. The write state is S & 0x0000FFFF (clearing the high 16 bits), and the read state is S >>> 16 (an unsigned right shift by 16 bits, filling with zeros). Incrementing the write state by 1 gives S + 1; incrementing the read state by 1 gives S + (1 << 16), that is, S + 0x00010000.

A corollary follows from this division of the state: when S is not 0 and the write state (S & 0x0000FFFF) is 0, the read state (S >>> 16) must be greater than 0, that is, the read lock has been acquired.
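The bit arithmetic can be checked on a plain int. The constants below follow the layout just described (read count in the high 16 bits, write count in the low 16 bits); RwState and its methods are hypothetical names, although ReentrantReadWriteLock's synchronizer uses helpers of the same shape (sharedCount and exclusiveCount appear in the code later in this section).

```java
// Hypothetical helper mirroring the high/low 16-bit split of the read-write state.
final class RwState {
    static final int SHARED_SHIFT = 16;
    static final int SHARED_UNIT = 1 << SHARED_SHIFT;           // 0x00010000: one read acquisition
    static final int EXCLUSIVE_MASK = (1 << SHARED_SHIFT) - 1;  // 0x0000FFFF: the low 16 bits

    // Unsigned shift: with >> a read count of 32768 or more would come out negative.
    static int readCount(int s)  { return s >>> SHARED_SHIFT; }
    static int writeCount(int s) { return s & EXCLUSIVE_MASK; } // clear the high 16 bits

    static int addWrite(int s) { return s + 1; }
    static int addRead(int s)  { return s + SHARED_UNIT; }
}
```

Starting from 0, three write acquisitions (one acquisition plus two re-entries) and two read acquisitions give 0x00020003, from which readCount returns 2 and writeCount returns 3, matching the state in the figure.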

4.2.2 Acquisition and Release of Write Lock

The write lock is a reentrant exclusive lock. If the current thread already holds the write lock, it simply increases the write state. If the read lock has already been acquired (the read state is not 0), or the write lock is held by another thread, the current thread enters a wait state. The code that acquires the write lock is as follows:

public class ReentrantReadWriteLock implements ReadWriteLock, java.io.Serializable {

protected final boolean tryAcquire(int acquires) {

Thread current = Thread.currentThread();

int c = getState();

int w = exclusiveCount(c);

if (c != 0) {

if (w == 0 || current != getExclusiveOwnerThread())

return false;

if (w + exclusiveCount(acquires) > MAX_COUNT)

throw new Error("Maximum lock count exceeded");

setState(c + acquires);

return true;

}

if (writerShouldBlock() || !compareAndSetState(c, c + acquires))

return false;

setExclusiveOwnerThread(current);

return true;

}

}

Besides the reentry check (whether the current thread is the one holding the write lock), this method adds a check for an existing read lock. If a read lock is held, the write lock cannot be acquired. A read-write lock must make the write lock's operations visible to readers; if a thread could acquire the write lock while other threads held the read lock, those running reader threads would not perceive the writer's updates. Therefore, the current thread can acquire the write lock only after all other readers have released the read lock, and once the write lock is acquired, subsequent access by other readers and writers is blocked.
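The reentry branch of tryAcquire can be observed through the public monitoring methods: each re-entry by the owner increases the write state, and the write lock is released only when it returns to 0. A minimal sketch; WriteReentryDemo and run are hypothetical names.

```java
import java.util.concurrent.locks.ReentrantReadWriteLock;

final class WriteReentryDemo {
    /** Returns {holdsAfterTwoLocks, holdsAfterOneUnlock, writeLockedAtEnd ? 1 : 0}. */
    static int[] run() {
        ReentrantReadWriteLock rw = new ReentrantReadWriteLock();
        int[] r = new int[3];
        rw.writeLock().lock();
        rw.writeLock().lock();              // re-entry by the owner: write state 1 -> 2
        r[0] = rw.getWriteHoldCount();
        rw.writeLock().unlock();            // write state 2 -> 1, still held
        r[1] = rw.getWriteHoldCount();
        rw.writeLock().unlock();            // write state 1 -> 0, released
        r[2] = rw.isWriteLocked() ? 1 : 0;
        return r;
    }
}
```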

Releasing the write lock works like releasing ReentrantLock: each release decrements the write state, and when the write state reaches 0 the write lock is free. Waiting reader and writer threads can then proceed, and the previous writer's modifications are visible to them.

4.2.3 Acquisition and Release of Read Lock

The read lock is a reentrant shared lock that can be held by multiple threads at the same time. As long as no other thread holds the write lock (the write state is 0), acquiring the read lock always succeeds; all it does is increase the read state in a thread-safe way. If the current thread already holds the read lock, it increases the read state. If the write lock is held by another thread, the current thread enters a wait state. The read-lock acquisition code became much more complex from Java 5 to Java 6, mainly because of new features such as getReadHoldCount(), which returns the number of times the current thread has acquired the read lock. The read state is the sum of the read-lock acquisitions of all threads, while each thread's own count can only be kept in a ThreadLocal maintained per thread, which complicates the implementation. The code below is therefore abridged to the essential parts:

public class ReentrantReadWriteLock implements ReadWriteLock, java.io.Serializable {

protected final int tryAcquireShared(int unused) {

Thread current = Thread.currentThread();

int c = getState();

if (exclusiveCount(c) != 0 && getExclusiveOwnerThread() != current)

return -1;

int r = sharedCount(c);

if (!readerShouldBlock() && r < MAX_COUNT && compareAndSetState(c, c + SHARED_UNIT)) {

if (r == 0) {

firstReader = current;

firstReaderHoldCount = 1;

} else if (firstReader == current) {

firstReaderHoldCount++;

} else {

HoldCounter rh = cachedHoldCounter;

if (rh == null || rh.tid != getThreadId(current))

cachedHoldCounter = rh = readHolds.get();

else if (rh.count == 0)

readHolds.set(rh);

rh.count++;

}

return 1;

}

return fullTryAcquireShared(current);

}

}

In tryAcquireShared(int unused), if another thread holds the write lock, the current thread fails to acquire the read lock and enters a wait state. If the current thread holds the write lock, or no thread holds it, the current thread increases the read state (thread-safely, via CAS) and acquires the read lock.
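Both outcomes can be seen with tryLock() on the read lock. ReadLock.tryLock() does not go through tryAcquireShared itself, but it applies the same first check (fail if the write lock is held by another thread). A minimal sketch; ReadWhileWritingDemo and probe are hypothetical names.

```java
import java.util.concurrent.locks.ReentrantReadWriteLock;

final class ReadWhileWritingDemo {
    /** Returns {ownerCanRead, otherCanRead} while the caller holds the write lock. */
    static boolean[] probe() throws InterruptedException {
        ReentrantReadWriteLock rw = new ReentrantReadWriteLock();
        boolean[] r = new boolean[2];
        rw.writeLock().lock();
        try {
            // The write lock is held by the current thread, so the read-lock check passes.
            r[0] = rw.readLock().tryLock();
            if (r[0]) rw.readLock().unlock();
            Thread other = new Thread(() -> {
                // The write lock is held by another thread, so acquiring the read lock fails.
                boolean ok = rw.readLock().tryLock();
                if (ok) rw.readLock().unlock();
                r[1] = ok;
            });
            other.start();
            other.join();
        } finally {
            rw.writeLock().unlock();
        }
        return r;
    }
}
```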

Each release of the read lock (which must be thread-safe, since multiple readers may release at the same time) decreases the read state by 1 << 16.

4.2.4 Lock Degradation

Lock demotion means turning a write lock into a read lock. If the current thread holds the write lock, releases it, and then acquires the read lock, this two-step sequence is not lock demotion. Lock demotion is the process of holding the write lock, acquiring the read lock, and then releasing the write lock that was held.

Next, let's look at an example of lock demotion. Because the data does not change often, multiple threads can process it concurrently. When the data changes, the current thread, upon noticing the change, prepares the data, while the other processing threads are blocked until the preparation is finished. The code is as follows:

public void processData() {

readLock.lock();

if (!update) {

// Read lock must be released first

readLock.unlock();

// Lock demotion starts with write lock acquisition

writeLock.lock();

try {

if (!update) {

// Process for preparing data (omitted)

update = true;

}

// Acquire the read lock while still holding the write lock (the demotion step)

readLock.lock();

} finally {

writeLock.unlock();

}

// Lock demotion complete, write lock demoted to read lock

}

try {

// Process for using data (omitted)

} finally {

readLock.unlock();

}

}

In the example above, when the data changes, the update variable (a volatile boolean) is set to false. All threads calling processData() notice the change, but only one thread can acquire the write lock; the others block in the lock() method of the read lock or the write lock. The current thread acquires the write lock, prepares the data, acquires the read lock, and then releases the write lock, completing the lock demotion.

Is acquiring the read lock necessary for lock demotion? Yes, mainly to guarantee data visibility. If the current thread released the write lock directly without acquiring the read lock, another thread (call it thread T) could then acquire the write lock and modify the data, and the current thread would not be aware of T's update. If the current thread acquires the read lock, following the steps of lock demotion, thread T is blocked until the current thread has finished using the data and released the read lock; only then can T acquire the write lock and update the data.

ReentrantReadWriteLock does not support lock upgrading (holding the read lock, acquiring the write lock, and then releasing the read lock). The goal is again to ensure data visibility: if the read lock is held by multiple threads and any one of them were able to acquire the write lock and update the data, the update would not be visible to the other threads holding the read lock.
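Demotion and the refused upgrade can both be checked in a single thread. The upgrade attempt uses tryLock() because lock() would block forever: the thread would wait for a read lock that it holds itself. A minimal sketch; DowngradeDemo and its methods are hypothetical names.

```java
import java.util.concurrent.locks.ReentrantReadWriteLock;

final class DowngradeDemo {
    /** Demotion: write -> write + read -> read. Returns true if the thread ends up holding only the read lock. */
    static boolean downgrade() {
        ReentrantReadWriteLock rw = new ReentrantReadWriteLock();
        rw.writeLock().lock();
        rw.readLock().lock();      // allowed: the write lock is held by this same thread
        rw.writeLock().unlock();   // now only the read lock is held
        boolean onlyReading = !rw.isWriteLocked() && rw.getReadHoldCount() == 1;
        rw.readLock().unlock();
        return onlyReading;
    }

    /** Upgrade attempt: read -> write. Returns whether the write lock was obtained. */
    static boolean upgradeSucceeds() {
        ReentrantReadWriteLock rw = new ReentrantReadWriteLock();
        rw.readLock().lock();
        try {
            boolean gotWrite = rw.writeLock().tryLock(); // refused: a read lock is held (by this thread)
            if (gotWrite) rw.writeLock().unlock();
            return gotWrite;
        } finally {
            rw.readLock().unlock();
        }
    }
}
```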

5 LockSupport Tool

When a thread needs to be blocked or woken up, the LockSupport utility class does the work. LockSupport defines a set of public static methods that provide the most basic thread blocking and waking capabilities, and it is also a basic building block for synchronization components.

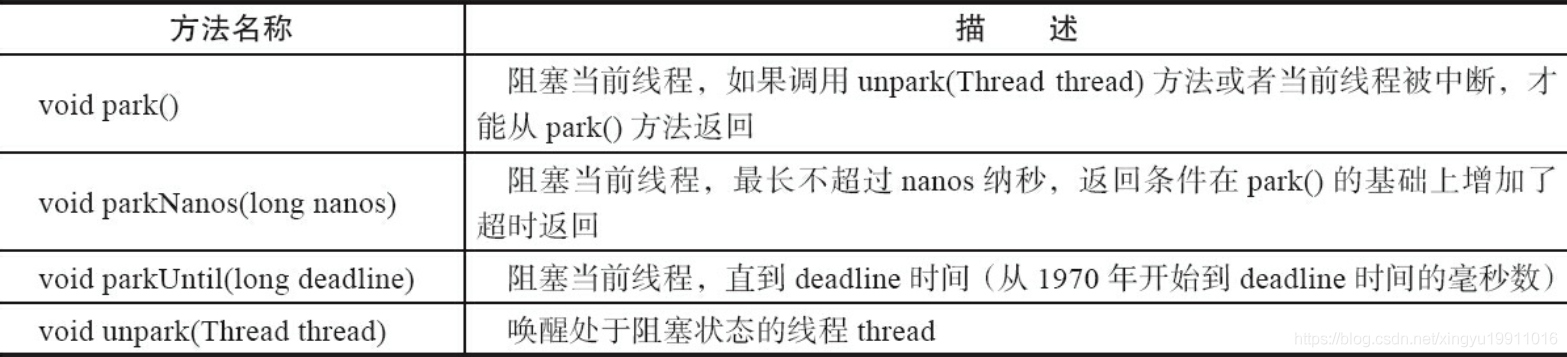

LockSupport defines a set of methods whose names start with park, which block the current thread, and an unpark(Thread thread) method, which wakes up a blocked thread. Think of threads as vehicles: park means parking the vehicle, and unpark means starting it and driving away. These methods and their descriptions are as follows:

public class LockSupport {

// Blocks the current thread and returns from the park() method only if the unpark(Thread thread) method is called or if the current thread is interrupted

public static void park(Object blocker) {

Thread t = Thread.currentThread();

setBlocker(t, blocker);

U.park(false, 0L);

setBlocker(t, null);

}

// Blocks the current thread, up to nanos nanoseconds, with a return condition that adds a timeout return based on park()

public static void parkNanos(Object blocker, long nanos) {

if (nanos > 0) {

Thread t = Thread.currentThread();

setBlocker(t, blocker);

U.park(false, nanos);

setBlocker(t, null);

}

}

// Block the current thread until deadline time (milliseconds from 1970 to deadline time)

public static void parkUntil(long deadline) {

U.park(true, deadline);

}

// Wake up blocked thread

public static void unpark(Thread thread) {

if (thread != null)

U.unpark(thread);

}

}

In Java 6, LockSupport added park(Object blocker), parkNanos(Object blocker, long nanos) and parkUntil(Object blocker, long deadline) to block the current thread. The blocker parameter identifies the object the current thread is waiting on (referred to below as the blocking object); it is mainly used for troubleshooting and system monitoring.
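Two properties are worth seeing in code: a permit granted by unpark() before park() makes park() return immediately, and the blocker passed to park(Object) can be read back with LockSupport.getBlocker(Thread) while the thread is parked. A minimal sketch; ParkDemo and its methods are hypothetical names.

```java
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.locks.LockSupport;

final class ParkDemo {
    /** unpark() before park(): the stored permit lets park() return immediately. */
    static void permitFirst() {
        LockSupport.unpark(Thread.currentThread()); // make the single, non-accumulating permit available
        LockSupport.park();                          // consumes the permit and returns without blocking
    }

    /** Returns true if getBlocker() reports the object a parked thread is waiting on. */
    static boolean blockerIsVisible() throws InterruptedException {
        Object blocker = new Object();
        AtomicBoolean released = new AtomicBoolean(false);
        Thread waiter = new Thread(() -> {
            while (!released.get()) {
                LockSupport.park(blocker);   // re-check the flag: park may return spuriously
            }
        });
        waiter.start();
        Object seen;
        while ((seen = LockSupport.getBlocker(waiter)) == null) {
            Thread.sleep(1);                 // poll until the waiter has recorded its blocker
        }
        released.set(true);
        LockSupport.unpark(waiter);
        waiter.join();
        return seen == blocker;
    }
}
```

Thread dumps (for example from jstack) use the same blocker information to show what a parked thread is waiting for.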

6 Condition Interface

Every Java object has a set of monitor methods (defined on java.lang.Object), namely wait(), wait(long timeout), notify(), and notifyAll(), which work together with the synchronized keyword to implement the wait/notify pattern. The Condition interface also provides Object-like monitor methods that work together with Lock to implement the wait/notify pattern, but the two differ in usage and functionality.

Comparing Object's monitor methods with the Condition interface gives a more detailed understanding of Condition's characteristics, as shown below:

6.1 Condition interface and examples

Condition defines two kinds of methods, waiting and notification. Before calling either kind, the current thread must already hold the lock associated with the Condition object. A Condition object is created by a Lock object (by calling the Lock's newCondition() method); in other words, a Condition depends on a Lock.

Using a Condition is straightforward. The key point is to acquire the lock before calling its methods, as follows:

Lock lock = new ReentrantLock();

Condition condition = lock.newCondition();

public void conditionWait() throws InterruptedException {

lock.lock();

try {

condition.await();

} finally {

lock.unlock();

}

}

public void conditionSignal() {

lock.lock();

try {

condition.signal();

} finally {

lock.unlock();

}

}

As the example shows, Condition objects are generally used as member variables. When await() is called, the current thread releases the lock and waits. When another thread calls the Condition's signal() method, the waiting thread is notified and returns from await(), having re-acquired the lock before returning.

Some of the methods defined by Condition, with their descriptions, are as follows:
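In practice, await() is normally called inside a loop that re-checks a condition guarded by the same lock. The Condition contract allows spurious wake-ups, and a signal may arrive before the waiter has started waiting. The following is a sketch of a one-shot gate built this way (ConditionLatch, awaitOpen and open are hypothetical names):

```java
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;

public class ConditionLatch {
    private final Lock lock = new ReentrantLock();
    private final Condition opened = lock.newCondition();
    private boolean isOpen = false; // guarded by lock

    // Blocks until open() has been called (returns at once if it already was).
    public void awaitOpen() throws InterruptedException {
        lock.lock();
        try {
            while (!isOpen) {   // re-check: a wake-up does not by itself mean the gate is open
                opened.await(); // releases the lock while waiting, re-acquires it before returning
            }
        } finally {
            lock.unlock();
        }
    }

    public void open() {
        lock.lock(); // possible even while a thread is inside await(), because await() released the lock
        try {
            isOpen = true;
            opened.signalAll();
        } finally {
            lock.unlock();
        }
    }
}
```

Because the flag is checked under the lock, the gate works whether open() runs before or after the waiting thread reaches await().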

public interface Condition {

// Causes the current thread to wait until it is signalled or interrupted. The thread leaves await() when either:

// 1. another thread calls this Condition's signal() or signalAll() method and the current thread is the one chosen to wake, or

// 2. another thread interrupts the current thread (by calling interrupt()), in which case await() throws InterruptedException.

// Whenever the thread leaves await(), it has already re-acquired the lock associated with this Condition object.

void await() throws InterruptedException;

// The current thread waits until it is signalled; as the method name suggests, it does not respond to interrupts

void awaitUninterruptibly();

// The current thread waits until it is signalled, interrupted, or the timeout elapses. The return value is the remaining time: if woken before nanosTimeout nanoseconds have passed, it returns (nanosTimeout - time actually spent). A return value of 0 or less means the wait timed out

long awaitNanos(long nanosTimeout) throws InterruptedException;

// The current thread waits until it is signalled, interrupted, or the given deadline passes. Returns true if it was woken before the deadline, or false if the deadline elapsed first

boolean awaitUntil(Date deadline) throws InterruptedException;

// Wakes up one thread waiting on this Condition; that thread must re-acquire the lock associated with the Condition before it can return from its wait method

void signal();

// Wakes up all threads waiting on this Condition; each must re-acquire the lock associated with the Condition before returning from its wait method

void signalAll();

}
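The remaining-time return value of awaitNanos() makes timed waits composable. The caller feeds the value back into the next call, so spurious wake-ups do not extend the total wait. The following is a sketch with hypothetical names (TimedGate, awaitOpen, open):

```java
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;

public class TimedGate {
    private final Lock lock = new ReentrantLock();
    private final Condition opened = lock.newCondition();
    private boolean isOpen = false; // guarded by lock

    // Waits up to `timeout` for the gate to open; returns false if the time runs out.
    public boolean awaitOpen(long timeout, TimeUnit unit) throws InterruptedException {
        long nanos = unit.toNanos(timeout);
        lock.lock();
        try {
            while (!isOpen) {
                if (nanos <= 0L) {
                    return false;                 // no time left: timed out
                }
                nanos = opened.awaitNanos(nanos); // returns the time still remaining
            }
            return true;
        } finally {
            lock.unlock();
        }
    }

    public void open() {
        lock.lock();
        try {
            isOpen = true;
            opened.signalAll();
        } finally {
            lock.unlock();
        }
    }
}
```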

A Condition can only be obtained through the Lock's newCondition() method. Below is an example of a bounded queue that shows in more detail how Conditions are used. A bounded queue is a special kind of queue. When the queue is empty, a take operation blocks the calling thread until a new element is added. When the queue is full, an insert operation blocks the inserting thread until a slot becomes free.
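The following is a minimal sketch of such a bounded queue. It assumes an array-backed ring buffer and uses hypothetical names (BoundedQueue, add, remove). One lock guards the buffer, and two Conditions on that lock give producers and consumers separate wait queues: notFull for inserters and notEmpty for takers.

```java
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;

public class BoundedQueue<T> {
    private final Object[] items;
    private int addIndex, removeIndex, count; // all guarded by lock
    private final Lock lock = new ReentrantLock();
    private final Condition notEmpty = lock.newCondition(); // takers wait here
    private final Condition notFull = lock.newCondition();  // inserters wait here

    public BoundedQueue(int capacity) {
        items = new Object[capacity];
    }

    // Inserts an element, blocking while the queue is full.
    public void add(T t) throws InterruptedException {
        lock.lock();
        try {
            while (count == items.length) {
                notFull.await();
            }
            items[addIndex] = t;
            if (++addIndex == items.length) {
                addIndex = 0; // wrap around the ring buffer
            }
            ++count;
            notEmpty.signal(); // an element is now available
        } finally {
            lock.unlock();
        }
    }

    // Removes the oldest element, blocking while the queue is empty.
    @SuppressWarnings("unchecked")
    public T remove() throws InterruptedException {
        lock.lock();
        try {
            while (count == 0) {
                notEmpty.await();
            }
            Object x = items[removeIndex];
            items[removeIndex] = null; // drop the reference for the garbage collector
            if (++removeIndex == items.length) {
                removeIndex = 0;
            }
            --count;
            notFull.signal(); // a slot is now free
            return (T) x;
        } finally {
            lock.unlock();
        }
    }
}
```

Both methods re-check their condition in a while loop, because a thread returning from await() must confirm that the state it was waiting for still holds.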

6.2 Implementation Analysis of Condition

ConditionObject is an inner class of the synchronizer AbstractQueuedSynchronizer. Since Condition operations require holding the associated lock, making it an inner class of the synchronizer is a reasonable design. Each Condition object contains a queue (hereinafter the wait queue), which is the key to the Condition object's wait/notify functionality.

The following analyzes the implementation of Condition, covering the wait queue, waiting, and notification. Unless stated otherwise, "Condition" below refers to ConditionObject.

public abstract class AbstractQueuedSynchronizer extends AbstractOwnableSynchronizer

implements java.io.Serializable {

public class ConditionObject implements Condition, java.io.Serializable { }

}
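Which Condition implementation a lock hands out can be checked at runtime. The following is a small sketch (the class name is hypothetical) that assumes ReentrantLock, whose newCondition() creates an AbstractQueuedSynchronizer.ConditionObject in OpenJDK:

```java
import java.util.concurrent.locks.AbstractQueuedSynchronizer;
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.ReentrantLock;

public class ConditionTypeDemo {

    // Runtime class name of a Condition created by ReentrantLock.
    static String conditionClassName() {
        Condition c = new ReentrantLock().newCondition();
        return c.getClass().getName();
    }

    // True if the Condition is the AQS inner class ConditionObject.
    static boolean isAqsConditionObject() {
        Condition c = new ReentrantLock().newCondition();
        return c instanceof AbstractQueuedSynchronizer.ConditionObject;
    }

    public static void main(String[] args) {
        System.out.println(conditionClassName());
        // java.util.concurrent.locks.AbstractQueuedSynchronizer$ConditionObject
    }
}
```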

6.2.1 Waiting Queue

The wait queue is a FIFO queue in which each node holds a thread reference, namely the thread waiting on the Condition object. When a thread calls Condition.await(), it is wrapped in a node that joins the wait queue, releases the lock, and enters the waiting state. In fact, the wait queue reuses the synchronizer's node definition: nodes in both the synchronization queue and the wait queue are instances of the static nested class AbstractQueuedSynchronizer.Node:

public abstract class AbstractQueuedSynchronizer extends AbstractOwnableSynchronizer

implements java.io.Serializable {

static final class Node { }

}

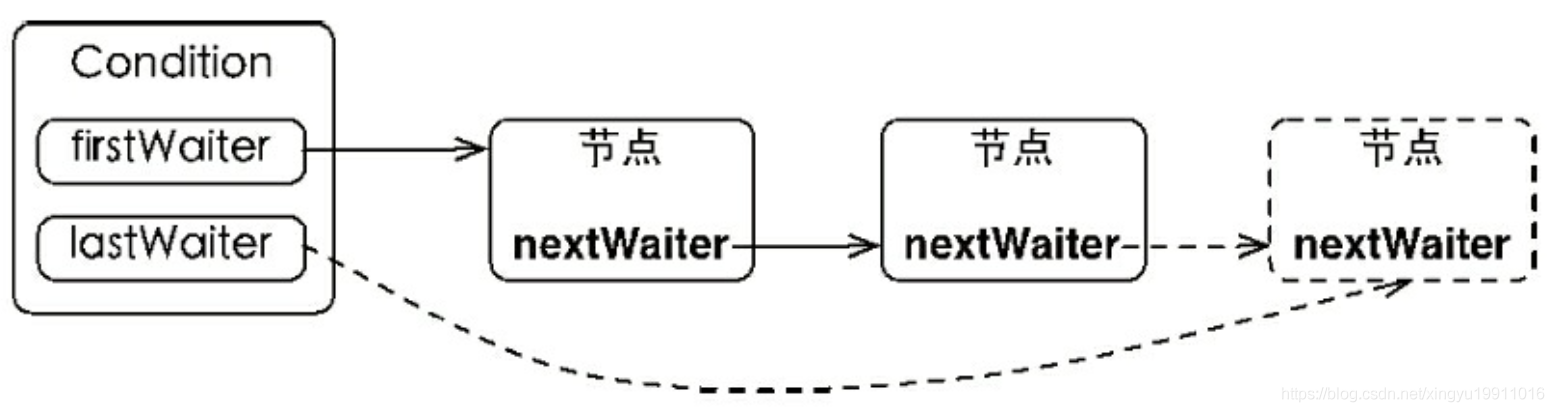

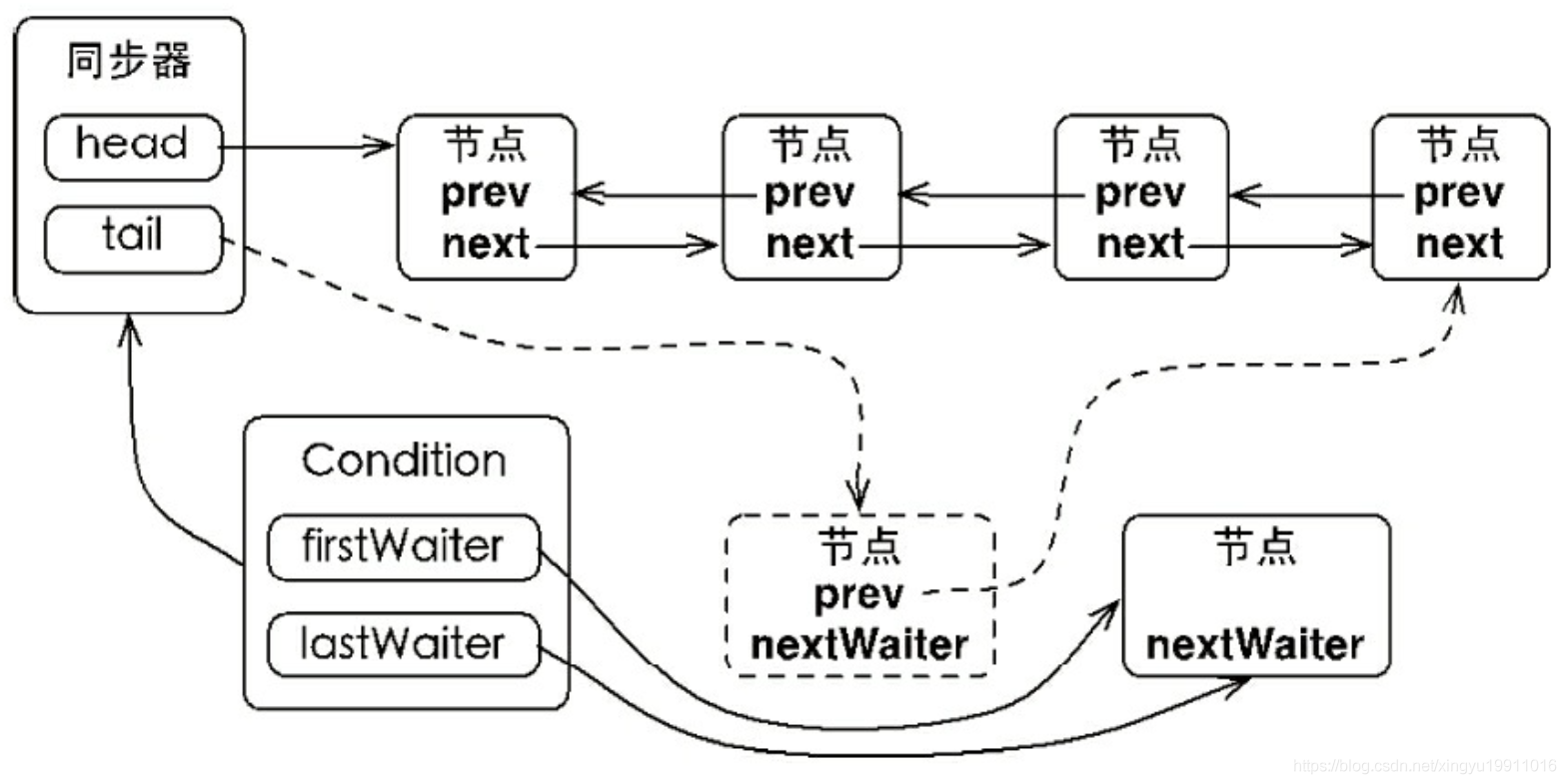

A Condition contains one wait queue and holds references to its first node (firstWaiter) and its last node (lastWaiter). When the current thread calls Condition.await(), a node is constructed for the current thread and appended to the tail of the wait queue. The basic structure of the wait queue is shown in the following figure:

public abstract class AbstractQueuedSynchronizer extends AbstractOwnableSynchronizer

implements java.io.Serializable {

public class ConditionObject implements Condition, java.io.Serializable {

private transient Node firstWaiter;

private transient Node lastWaiter;

}

}

As shown in the diagram, a Condition holds references to the first and last nodes, so adding a new node only requires pointing the current tail node's nextWaiter at it and then updating the tail reference. This update does not use CAS, because the thread calling await() must already hold the lock; the lock itself makes the update thread-safe.
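To make the tail append concrete, here is a hypothetical and heavily simplified model of the wait queue: a singly linked list with firstWaiter/lastWaiter references and a nextWaiter link, as described above. It is not the JDK source, which also tracks node status and removes cancelled waiters. Like the real queue, it relies on the caller holding the lock rather than on CAS.

```java
// Hypothetical, simplified model of a Condition's wait queue (not the JDK implementation).
public class WaitQueueModel {

    static final class Node {
        final Thread thread;
        Node nextWaiter; // singly linked, like the wait queue's nextWaiter field

        Node(Thread thread) {
            this.thread = thread;
        }
    }

    private Node firstWaiter;
    private Node lastWaiter;

    // Appends a node at the tail. Callers are assumed to hold the owning lock,
    // so plain field writes are enough: no CAS is needed.
    Node addWaiter(Thread t) {
        Node node = new Node(t);
        if (lastWaiter == null) {
            firstWaiter = node; // empty queue: the new node is also the head
        } else {
            lastWaiter.nextWaiter = node;
        }
        lastWaiter = node;
        return node;
    }

    // Detaches and returns the head (longest-waiting) node, or null if the queue is empty.
    Node removeFirst() {
        Node first = firstWaiter;
        if (first != null) {
            firstWaiter = first.nextWaiter;
            if (firstWaiter == null) {
                lastWaiter = null;
            }
            first.nextWaiter = null;
        }
        return first;
    }

    int size() {
        int n = 0;
        for (Node p = firstWaiter; p != null; p = p.nextWaiter) {
            n++;
        }
        return n;
    }
}
```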

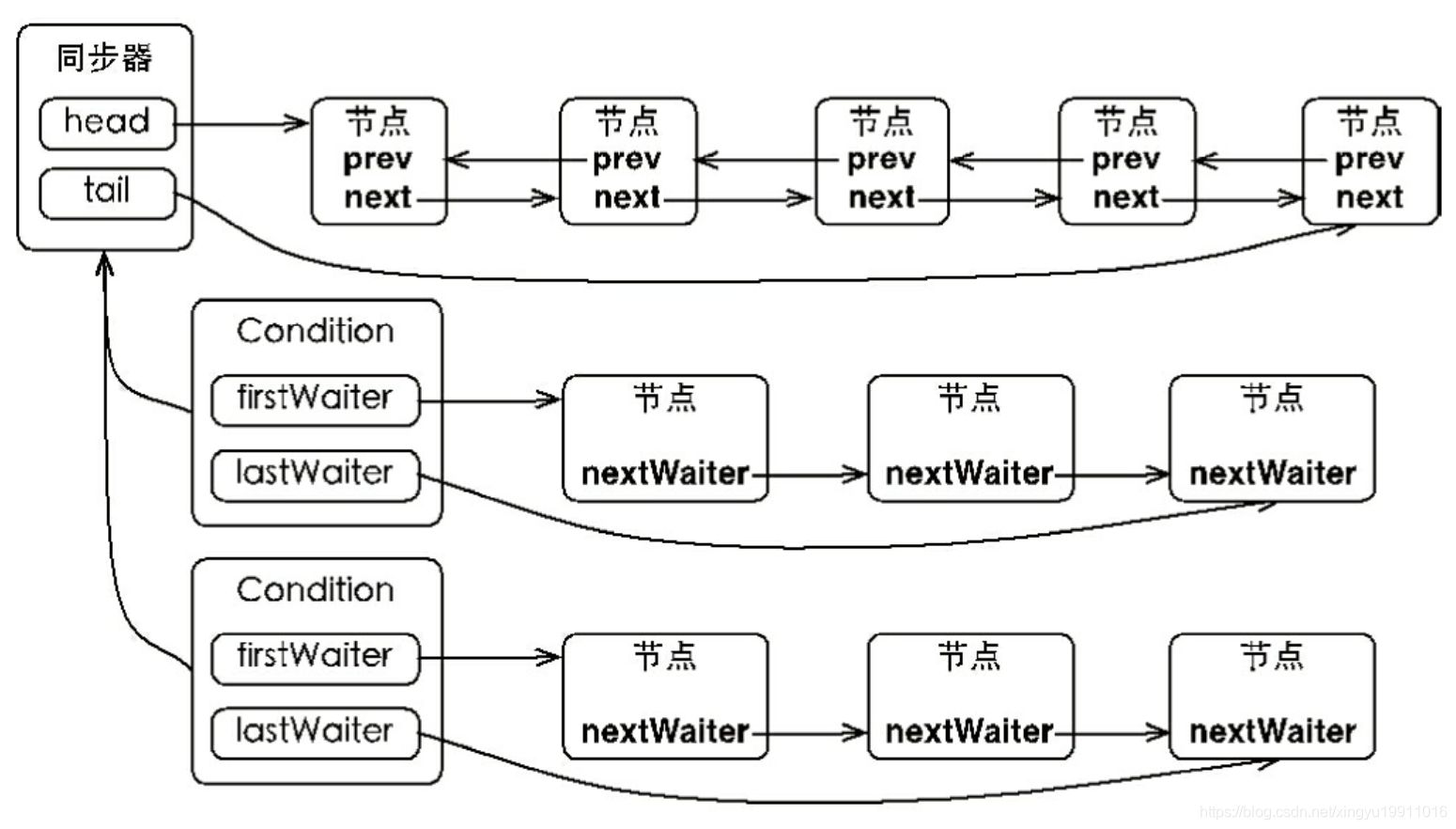

In the Object monitor model, an object has one synchronization queue and one wait queue, whereas a Lock in the concurrency package (more precisely, its synchronizer) has one synchronization queue and can have multiple wait queues. Their relationship is shown in the diagram:

Because the Condition implementation is an inner class of the synchronizer, each Condition instance can access the methods the synchronizer provides, which is equivalent to holding a reference to the synchronizer it belongs to.
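One lock with several Conditions therefore has several independent wait queues. The following sketch (hypothetical names) observes this with ReentrantLock.getWaitQueueLength(Condition). That method must be called while holding the lock and is documented as an estimate intended for monitoring; it is used here only to see which queue a thread is in.

```java
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.ReentrantLock;

public class MultiConditionDemo {
    private final ReentrantLock lock = new ReentrantLock();
    private final Condition a = lock.newCondition(); // first wait queue
    private final Condition b = lock.newCondition(); // second, independent wait queue
    private boolean releasedA = false;               // guarded by lock

    // Waits on condition `a` until releaseA() is called.
    void waitOnA() throws InterruptedException {
        lock.lock();
        try {
            while (!releasedA) {
                a.await();
            }
        } finally {
            lock.unlock();
        }
    }

    // Signals `b`; threads waiting on `a` sit in a different queue and are unaffected.
    void signalB() {
        lock.lock();
        try {
            b.signalAll();
        } finally {
            lock.unlock();
        }
    }

    void releaseA() {
        lock.lock();
        try {
            releasedA = true;
            a.signalAll();
        } finally {
            lock.unlock();
        }
    }

    // Returns {threads waiting on a, threads waiting on b}.
    int[] queueLengths() {
        lock.lock();
        try {
            return new int[] { lock.getWaitQueueLength(a), lock.getWaitQueueLength(b) };
        } finally {
            lock.unlock();
        }
    }
}
```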

6.2.2 Waiting

Calling Condition.await() (or any method whose name starts with await) puts the current thread in the wait queue, releases the lock, and changes the thread's state to waiting. When the thread returns from await(), it must have re-acquired the lock associated with the Condition.

Viewed from the perspective of the queues (the synchronization queue and the wait queue), calling await() is equivalent to moving the head node of the synchronization queue (the node that holds the lock) into the Condition's wait queue. The Condition.await() method is as follows:

public abstract class AbstractQueuedSynchronizer extends AbstractOwnableSynchronizer

implements java.io.Serializable {

public class ConditionObject implements Condition, java.io.Serializable {

public final void await() throws InterruptedException {

if (Thread.interrupted())

throw new InterruptedException();

Node node = addConditionWaiter();

int savedState = fullyRelease(node);

int interruptMode = 0;

while (!isOnSyncQueue(node)) {

LockSupport.park(this);

if ((interruptMode = checkInterruptWhileWaiting(node)) != 0)

break;

}

if (acquireQueued(node, savedState) && interruptMode != THROW_IE)

interruptMode = REINTERRUPT;

if (node.nextWaiter != null) // clean up if cancelled

unlinkCancelledWaiters();

if (interruptMode != 0)

reportInterruptAfterWait(interruptMode);

}

}

}

The thread calling this method has already acquired the lock, that is, it corresponds to the head node of the synchronization queue. The method wraps the current thread in a node and adds it to the wait queue, releases the synchronization state (waking the successor node in the synchronization queue), and then puts the current thread into the waiting state.

When a node in the wait queue is woken up, its thread starts trying to acquire the synchronization state. If the waiting thread is woken by an interrupt rather than by another thread calling Condition.signal(), an InterruptedException is thrown.

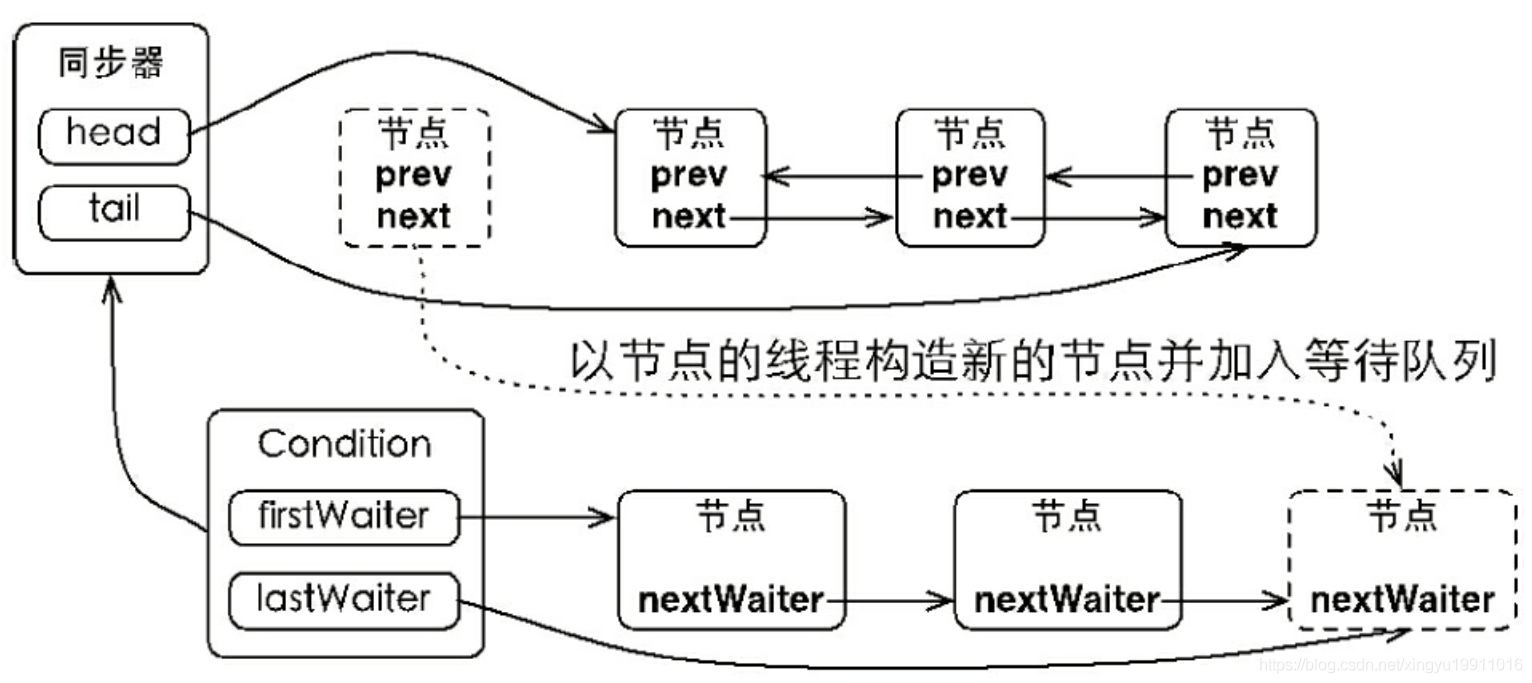

Viewed from the queues' perspective, the current thread joining the Condition's wait queue is illustrated below:

The head node of the synchronization queue does not join the wait queue directly. Instead, the addConditionWaiter() method wraps the current thread in a new node and appends that node to the wait queue.
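Two consequences of this design can be observed. An interrupt ends the wait with InterruptedException, and even then the thread has re-acquired the lock before the exception reaches its catch block. The following is a sketch with hypothetical names. It uses ReentrantLock.hasWaiters(Condition), which must be called while holding the lock, to wait until the waiter is actually in the wait queue before interrupting it.

```java
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.ReentrantLock;

public class InterruptedAwaitDemo {

    // Returns {caughtInterruptedException, heldLockWhenCaught}.
    static boolean[] interruptWaiter() throws InterruptedException {
        ReentrantLock lock = new ReentrantLock();
        Condition cond = lock.newCondition();
        boolean[] result = new boolean[2];

        Thread waiter = new Thread(() -> {
            lock.lock();
            try {
                while (true) {   // nobody signals, so only an interrupt ends this wait
                    cond.await();
                }
            } catch (InterruptedException e) {
                result[0] = true;
                result[1] = lock.isHeldByCurrentThread(); // await() re-acquired the lock first
            } finally {
                lock.unlock();
            }
        });
        waiter.start();

        // Wait until the waiter's node is in the wait queue (queried under the lock).
        while (true) {
            lock.lock();
            try {
                if (lock.hasWaiters(cond)) {
                    break;
                }
            } finally {
                lock.unlock();
            }
            Thread.yield();
        }

        waiter.interrupt();
        waiter.join(); // join() makes the waiter's writes to `result` visible here
        return result;
    }
}
```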

6.2.3 Notification

Calling Condition.signal() wakes up the node that has waited longest in the wait queue (the first node), moving it to the synchronization queue before waking it. The code is as follows:

public abstract class AbstractQueuedSynchronizer extends AbstractOwnableSynchronizer

implements java.io.Serializable {

public class ConditionObject implements Condition, java.io.Serializable {

public final void signal() {

if (!isHeldExclusively())

throw new IllegalMonitorStateException();

Node first = firstWaiter;

if (first != null)

doSignal(first);

}

}

}

The precondition for calling this method is that the current thread holds the lock. signal() first calls isHeldExclusively() to check that the current thread is the one that acquired the lock, and throws IllegalMonitorStateException otherwise. It then takes the first node of the wait queue, moves it to the synchronization queue, and uses LockSupport to wake the thread in that node. The node's move from the wait queue to the synchronization queue is shown in the diagram:

The synchronizer's enq(Node node) method safely moves the head node of the wait queue onto the synchronization queue. Once the node is in the synchronization queue, the current thread uses LockSupport to wake up the node's thread.

The awakened thread exits the while loop in await() (isOnSyncQueue(Node node) now returns true because the node is in the synchronization queue) and then calls the synchronizer's acquireQueued() method to compete for the synchronization state.

After the synchronization state (or lock) is successfully acquired, the awakened thread returns from the previously called await() method, at which point it has successfully acquired the lock.

Condition.signalAll() is equivalent to calling signal() once for each node in the wait queue: it moves every node in the wait queue to the synchronization queue and wakes up the thread of each node.
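The ownership check at the top of signal() and the different effects of signal() and signalAll() on the wait queue can both be observed with a small sketch (hypothetical names). Waiters consume "permits" guarded by the lock, so a woken thread leaves only when a permit is available. ReentrantLock.getWaitQueueLength(Condition) reports how many threads are still in the wait queue.

```java
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.ReentrantLock;

public class SignalDemo {
    private final ReentrantLock lock = new ReentrantLock();
    private final Condition cond = lock.newCondition();
    private int permits = 0; // guarded by lock

    // Waiter: loops on the predicate and leaves only after taking a permit.
    void waitForPermit() throws InterruptedException {
        lock.lock();
        try {
            while (permits == 0) {
                cond.await();
            }
            permits--;
        } finally {
            lock.unlock();
        }
    }

    // Adds n permits, then wakes one waiter (signal) or every waiter (signalAll).
    void release(int n, boolean all) {
        lock.lock();
        try {
            permits += n;
            if (all) {
                cond.signalAll();
            } else {
                cond.signal();
            }
        } finally {
            lock.unlock();
        }
    }

    // Threads currently in this Condition's wait queue; the query requires the lock.
    int waiting() {
        lock.lock();
        try {
            return lock.getWaitQueueLength(cond);
        } finally {
            lock.unlock();
        }
    }

    // Spins until the wait queue holds exactly n threads.
    void awaitWaiting(int n) {
        while (waiting() != n) {
            Thread.yield();
        }
    }

    // signal() without holding the lock fails the isHeldExclusively() check.
    static boolean signalWithoutLockThrows() {
        ReentrantLock lock = new ReentrantLock();
        Condition cond = lock.newCondition();
        try {
            cond.signal();
            return false;
        } catch (IllegalMonitorStateException expected) {
            return true;
        }
    }
}
```

With three waiters queued, release(1, false) leaves two threads in the wait queue, and a following release(2, true) empties it.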