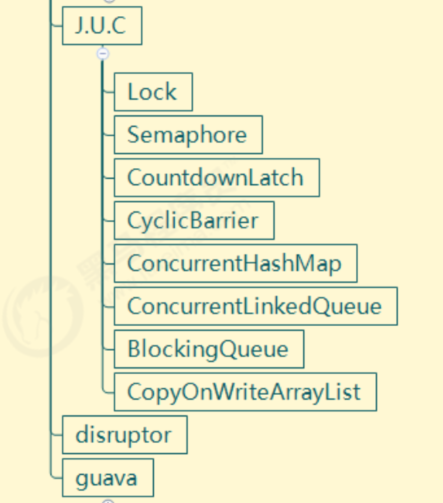

2, J.U.C

1. * AQS principle

1. General

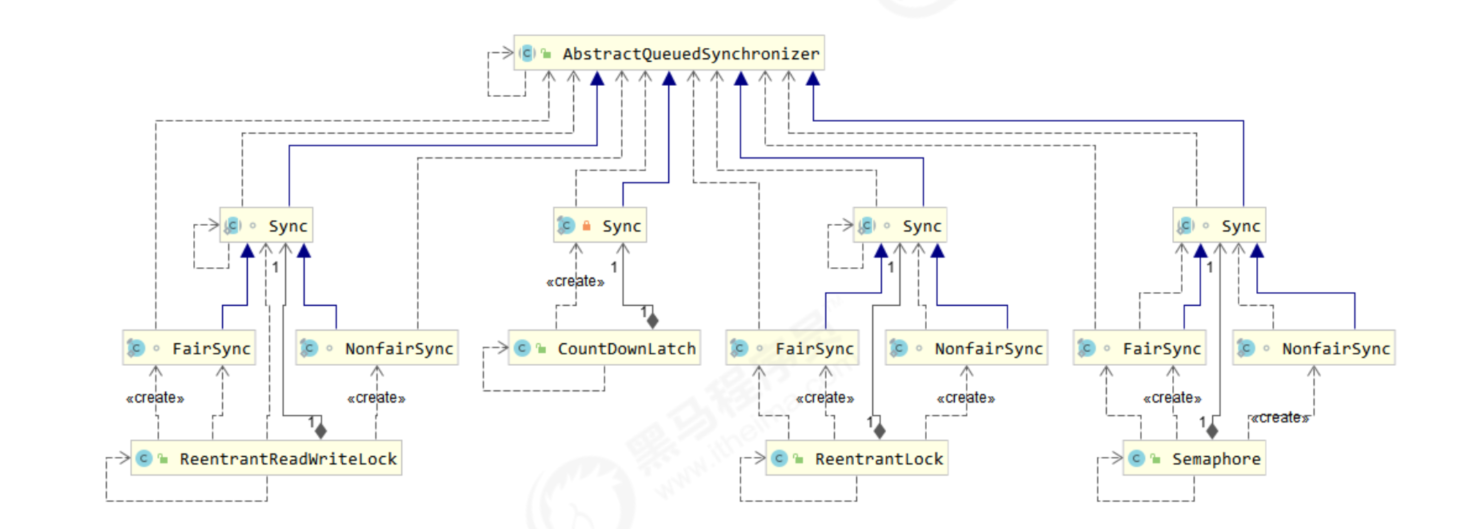

Its full name is AbstractQueuedSynchronizer, which is the framework of blocking locks and related synchronizer tools

characteristic:

- The state attribute is used to represent the state of resources (exclusive mode and shared mode). Subclasses need to define how to maintain this state and control how to obtain and release locks

- getState - get state

- setState - set state

- compareAndSetState - cas mechanism sets state state

- Exclusive mode allows only one thread to access resources, while shared mode allows multiple threads to access resources

- A FIFO based wait queue is provided, which is similar to the EntryList of Monitor

- Condition variables are used to implement the wait and wake-up mechanism. Multiple condition variables are supported, similar to the WaitSet of Monitor

Subclasses mainly implement such methods (UnsupportedOperationException is thrown by default)

- tryAcquire

- tryRelease

- tryAcquireShared

- tryReleaseShared

- isHeldExclusively

Get lock pose

// If lock acquisition fails

if (!tryAcquire(arg)) {

// After joining the queue, you can choose to block the current thread park unpark

}

Release lock posture

// If the lock is released successfully

if (tryRelease(arg)) {

// Let the blocked thread resume operation

}

2. Implement non reentrant lock

Custom synchronizer, custom lock

With a custom synchronizer, it is easy to reuse AQS and realize a fully functional custom lock

// Custom lock (non reentrant lock is implemented here)

class MyLock implements Lock {

// Synchronizer class - an exclusive lock is implemented here

class MySync extends AbstractQueuedSynchronizer {

// Attempt to acquire lock

@Override

protected boolean tryAcquire(int arg) {

if (compareAndSetState(0, 1)) {

// true, locking succeeded

// Set owner as the current thread

setExclusiveOwnerThread(Thread.currentThread());

return true;

}

return false;

}

// Attempt to release the lock

@Override

protected boolean tryRelease(int arg) {

// Note the order here to prevent reordering of JVM instructions

setExclusiveOwnerThread(null);

setState(0); // Write barrier

return true;

}

// Do you hold an exclusive lock

@Override

protected boolean isHeldExclusively() {

return getState() == 1;

}

public Condition newCondition() {

return new ConditionObject();

}

}

private final MySync sync = new MySync();

// Lock - if unsuccessful, it will enter the waiting queue

@Override

public void lock() {

sync.acquire(1);

}

// Lock, interruptible

@Override

public void lockInterruptibly() throws InterruptedException {

sync.acquireInterruptibly(1);

}

// Try locking - try once

@Override

public boolean tryLock() {

return sync.tryAcquire(1);

}

// Attempt to lock - with timeout

@Override

public boolean tryLock(long time, TimeUnit unit) throws InterruptedException {

return sync.tryAcquireNanos(1, unit.toNanos(time));

}

// Unlock

@Override

public void unlock() {

sync.release(1);

}

// Create condition variable

@Override

public Condition newCondition() {

return sync.newCondition();

}

}

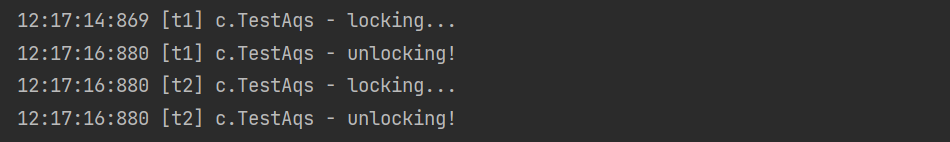

Test it

package top.onefine.test.c8;

import lombok.extern.slf4j.Slf4j;

import java.util.concurrent.TimeUnit;

import java.util.concurrent.locks.AbstractQueuedSynchronizer;

import java.util.concurrent.locks.Condition;

import java.util.concurrent.locks.Lock;

@Slf4j(topic = "c.TestAqs")

public class TestAqs {

public static void main(String[] args) throws InterruptedException {

MyLock lock = new MyLock();

new Thread(() -> {

lock.lock();

// lock.lock(); // Cannot re-enter the lock. Locking failed here

try {

log.debug("locking...");

// TimeUnit.SECONDS.sleep(2);

Thread.sleep(2000);

} catch (InterruptedException e) {

e.printStackTrace();

} finally {

log.debug("unlocking!");

lock.unlock();

}

}, "t1").start();

TimeUnit.MILLISECONDS.sleep(100);

new Thread(() -> {

lock.lock();

try {

log.debug("locking...");

} finally {

log.debug("unlocking!");

lock.unlock();

}

}, "t2").start();

}

}

output

Non reentrant test

If you change to the following code, you will find that you will also be blocked (locking will only be printed once)

lock.lock();

log.debug("locking...");

lock.lock();

log.debug("locking...");

3. Experience

origin

Early programmers would use one synchronizer to implement another similar synchronizer, such as using reentrant locks to implement semaphores, or vice versa. This is obviously not elegant enough, so AQS was created in JSR166 (java specification proposal) to provide this common synchronizer mechanism.

target

Functional objectives to be achieved by AQS

- The blocked version acquires the lock acquire and the non blocked version attempts to acquire the lock tryAcquire

- Get lock timeout mechanism

- By interrupting the cancellation mechanism

- Exclusive mechanism and sharing mechanism

- Waiting mechanism when conditions are not met

Performance goals to achieve

Instead, the primary performance goal here is scalability: to predictably maintain efficiency even, or especially, when synchronizers are contended.

Design

The basic idea of AQS is actually very simple

Logic for obtaining locks

while(state Status not allowed to get) {

if(This thread is not in the queue yet) {

Join the team and block

}

}

The current thread is dequeued

Logic for releasing locks

if(state Status allowed) {

Recover blocked threads(s)

}

main points

- Atomic maintenance state

- Blocking and resuming threads

- Maintenance queue

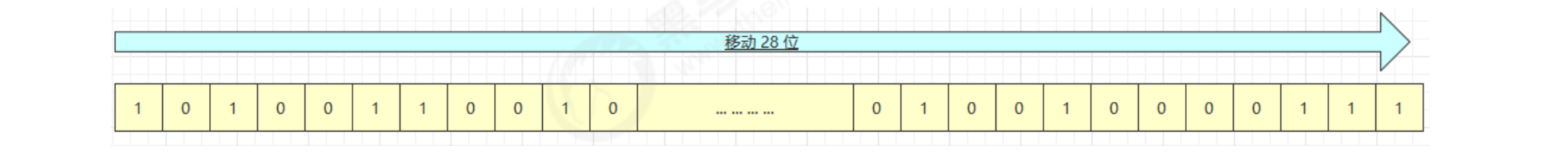

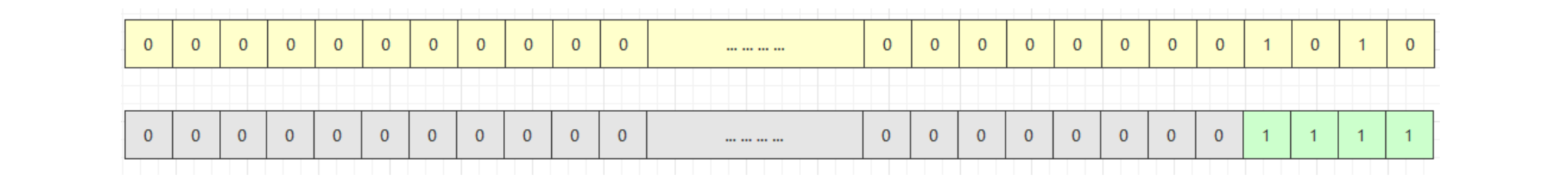

- state design

- state uses volatile with cas to ensure its atomicity during modification

- State uses 32bit int to maintain the synchronization state, because the test results using long on many platforms are not ideal

- Blocking recovery design

- The early APIs that control thread pause and resume include suspend and resume, but they are not available because suspend will not be aware if resume is called first

- The solution is to use Park & unpark to pause and resume threads. The specific principle has been mentioned before. There is no problem with unpark and then park

- Park & unpark is for the thread, not for the synchronizer, so the control granularity is more fine

- park threads can also be interrupted by interrupt

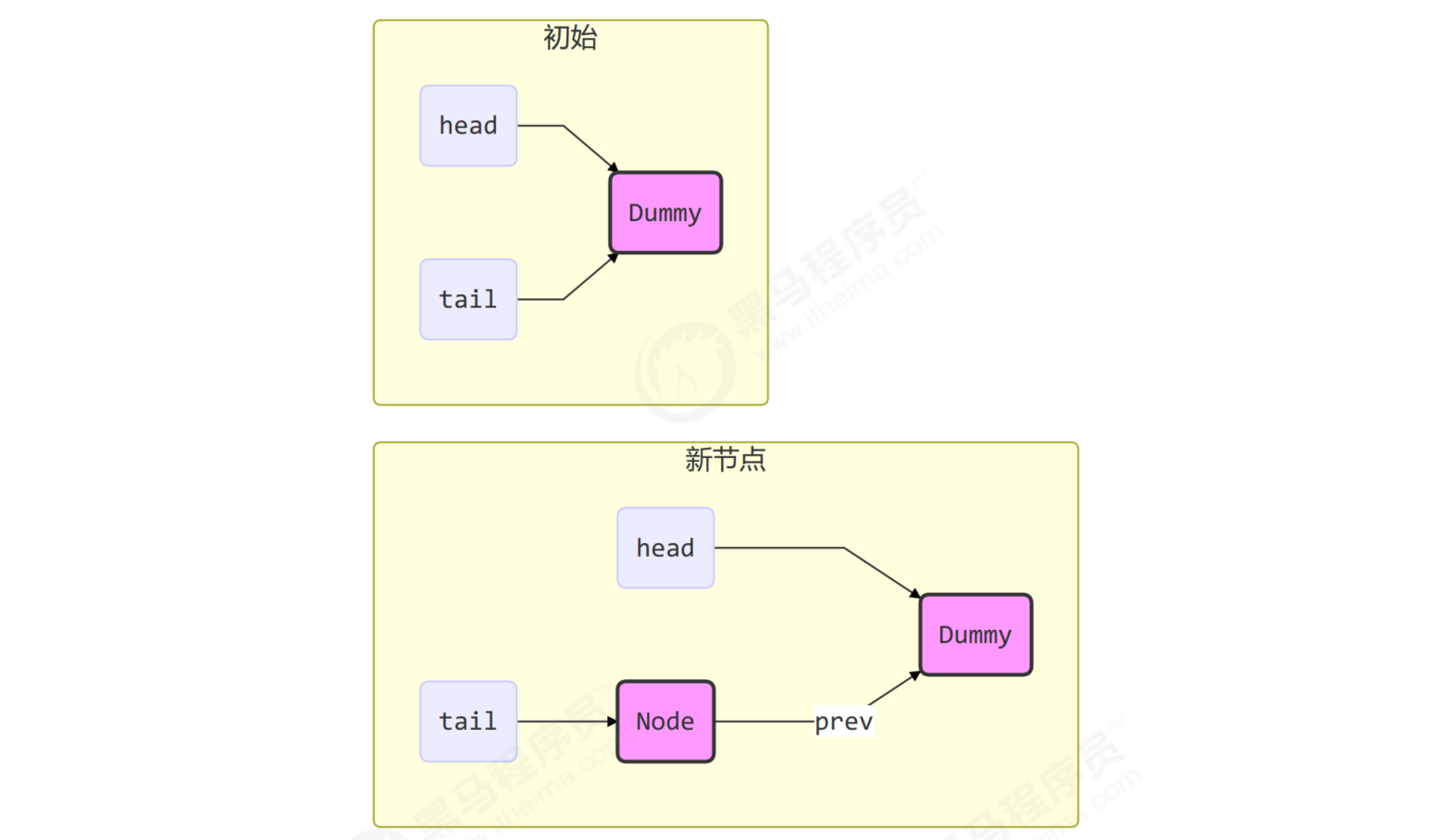

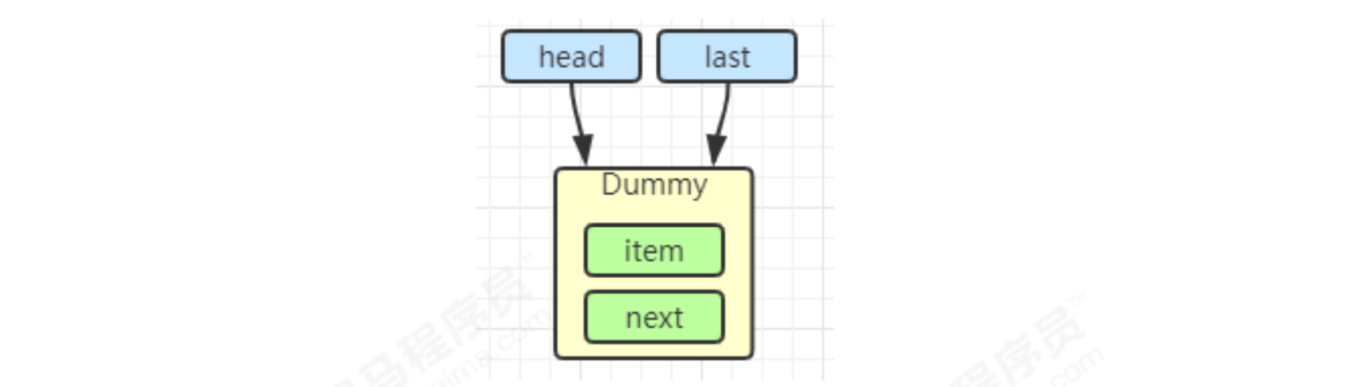

- Queue design

- FIFO first in first out queue is used, and priority queue is not supported

- CLH queue is a one-way lockless queue

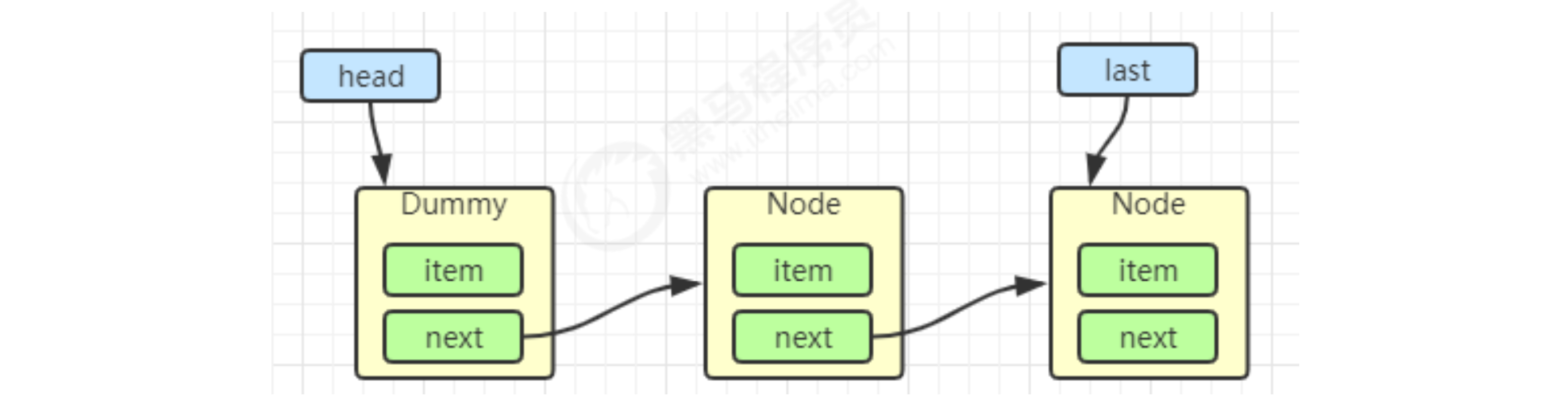

There are two pointer nodes in the queue, head and tail, which are decorated with volatile and used with cas. Each node has a state to maintain the node state

For the queued pseudo code, only the atomicity of tail assignment needs to be considered

do {

// Original tail

Node prev = tail;

// Use cas to change the original tail to node

} while(tail.compareAndSet(prev, node))

Outgoing pseudo code

// prev is the previous node

while((Node prev=node.prev).state != Wake up status) {

}

// Set header node

head = node;

CLH benefits:

- No lock, use spin

- Fast, non blocking

AQS improves CLH in some ways

private Node enq(final Node node) {

for (;;) {

Node t = tail;

// There is no element in the queue. tail is null

if (t == null) {

// Change head from null - > dummy

if (compareAndSetHead(new Node()))

tail = head;

} else {

// Set the prev of node to the original tail

node.prev = t;

// Set the tail from the original tail to node

if (compareAndSetTail(t, node)) {

// The next of the original tail is set to node

t.next = node;

return t;

}

}

}

}

It mainly uses the concurrency tool class of AQS

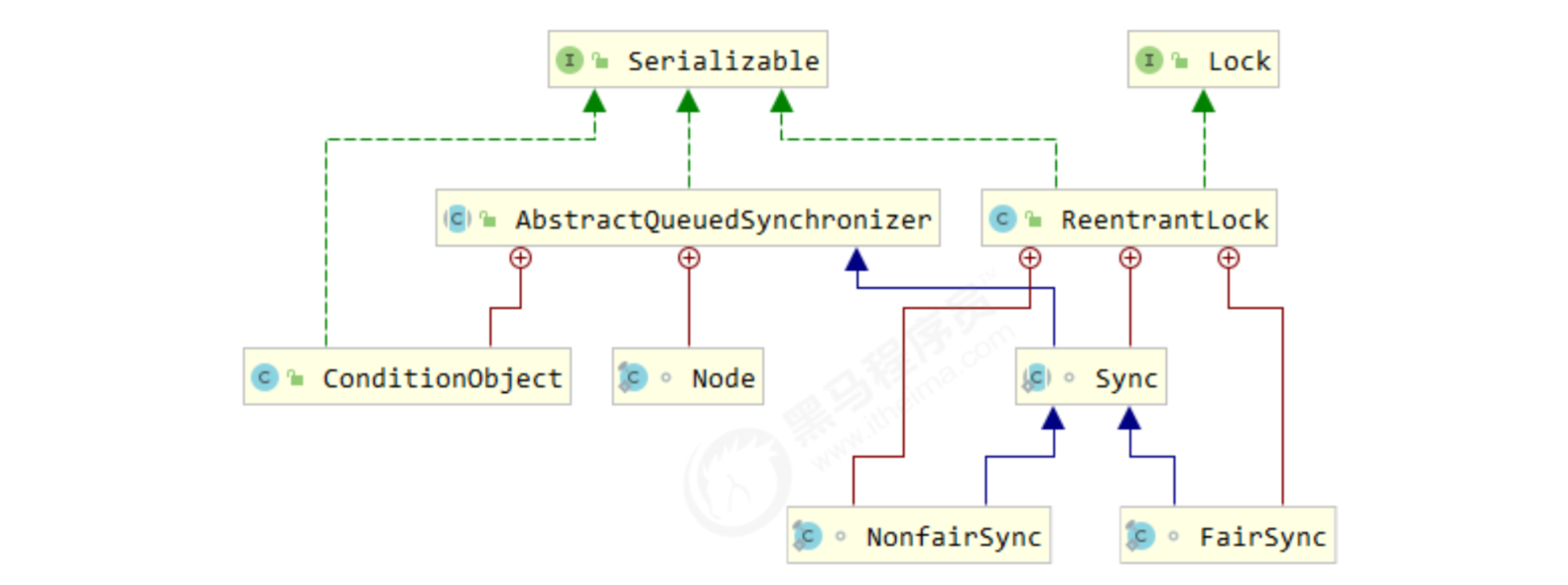

2. * ReentrantLock principle

1. Implementation principle of unfair lock

Lock and unlock process

Starting from the constructor, the default is the implementation of unfair lock

public ReentrantLock() {

sync = new NonfairSync();

}

NonfairSync inherits from AQS

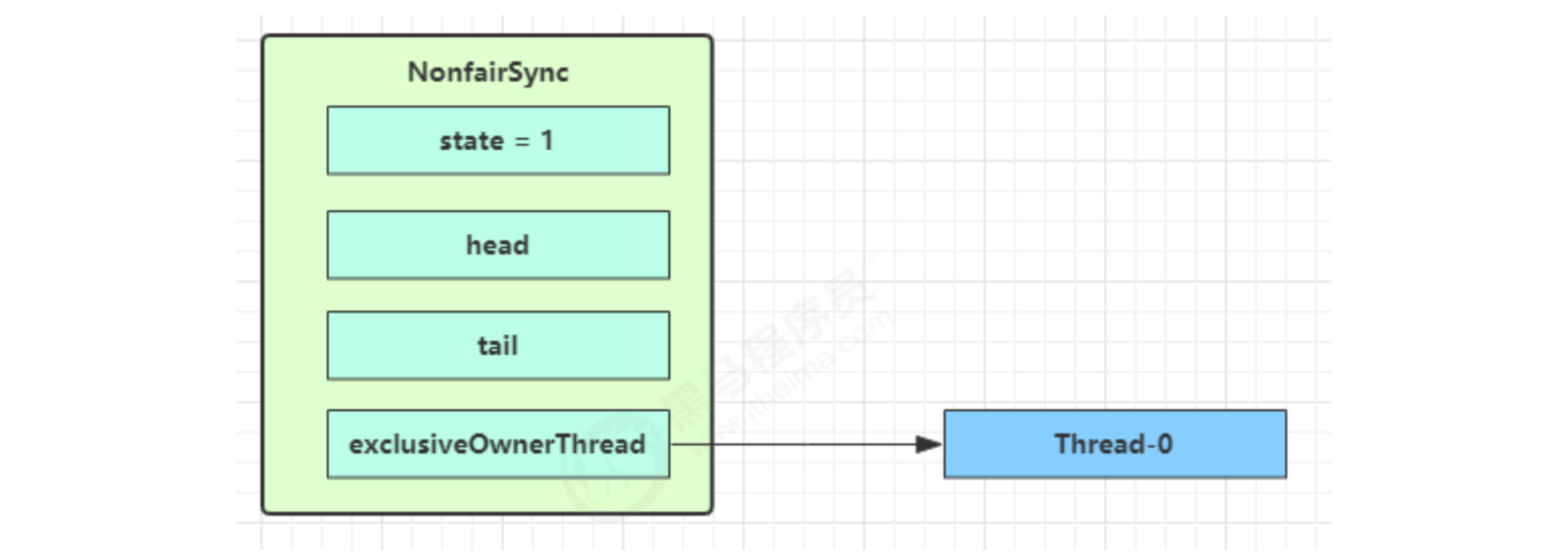

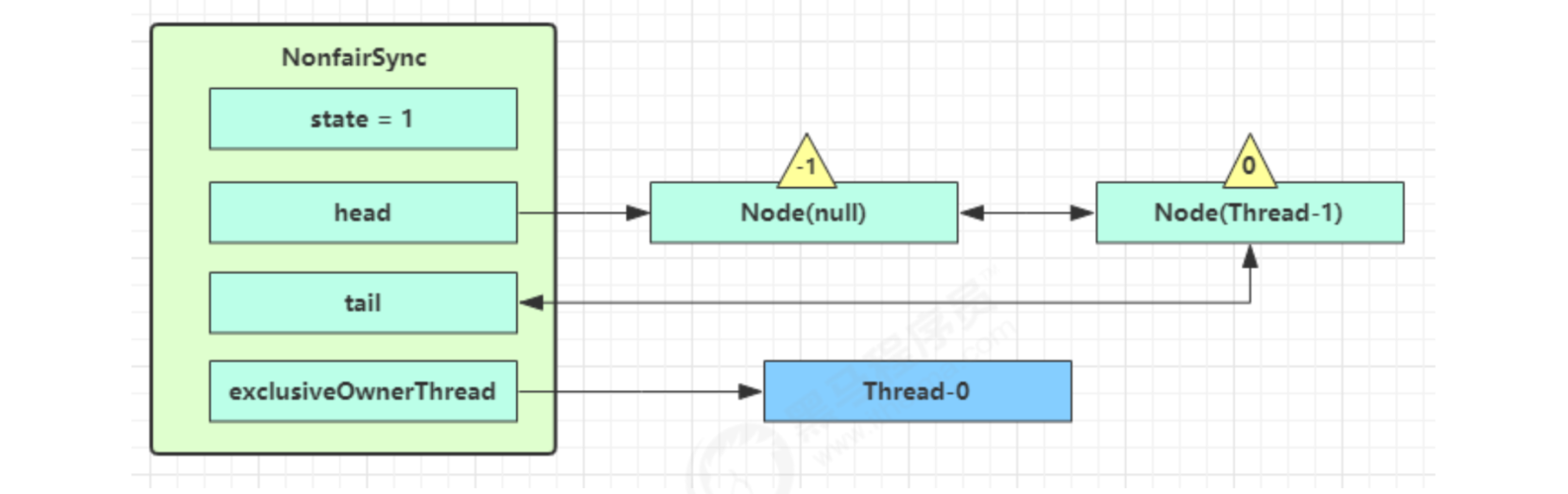

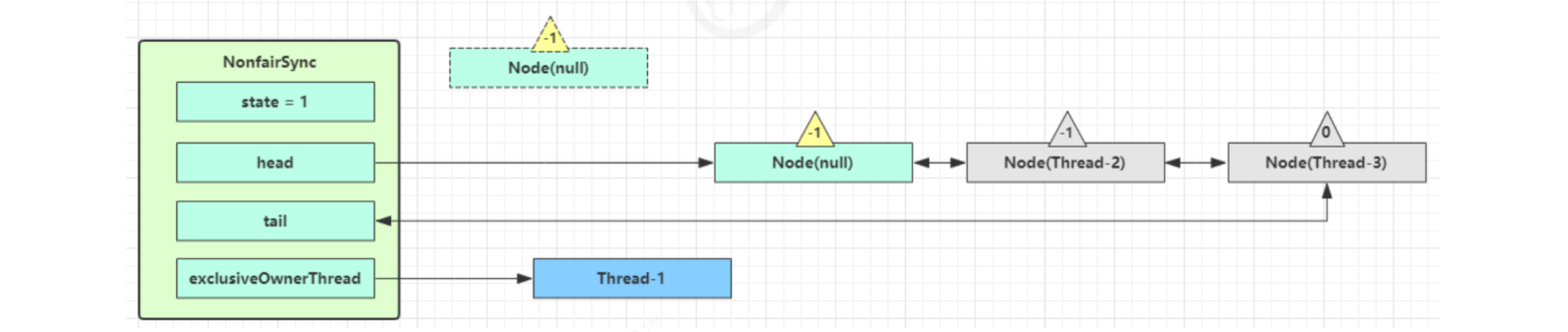

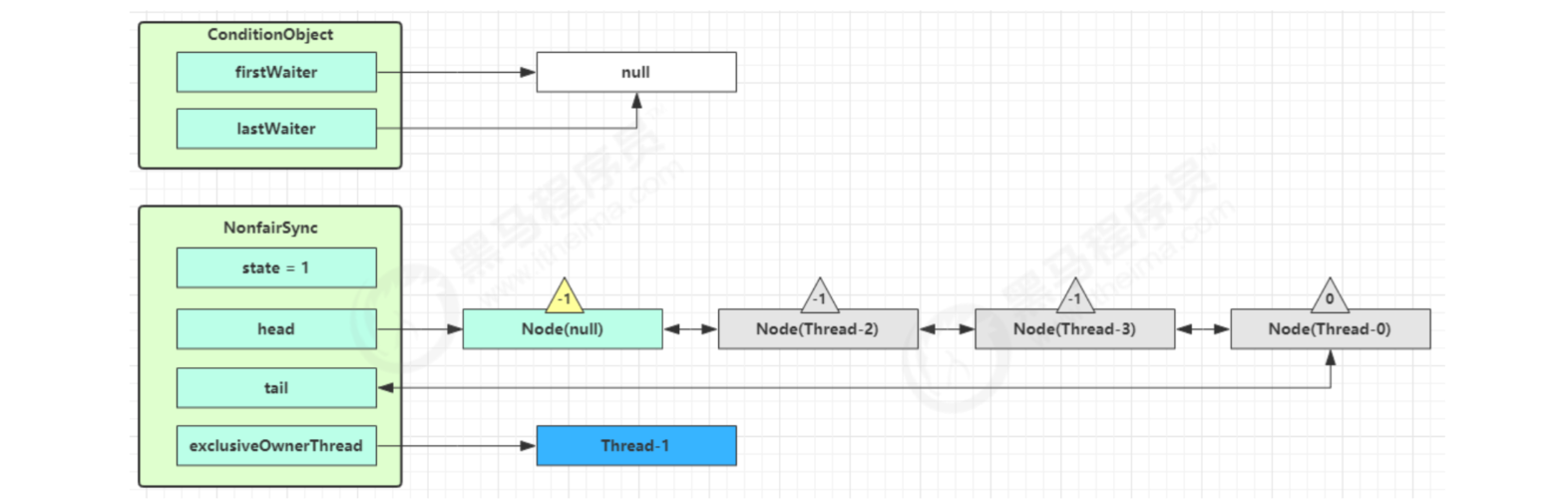

When there is no competition

When the first competition appears

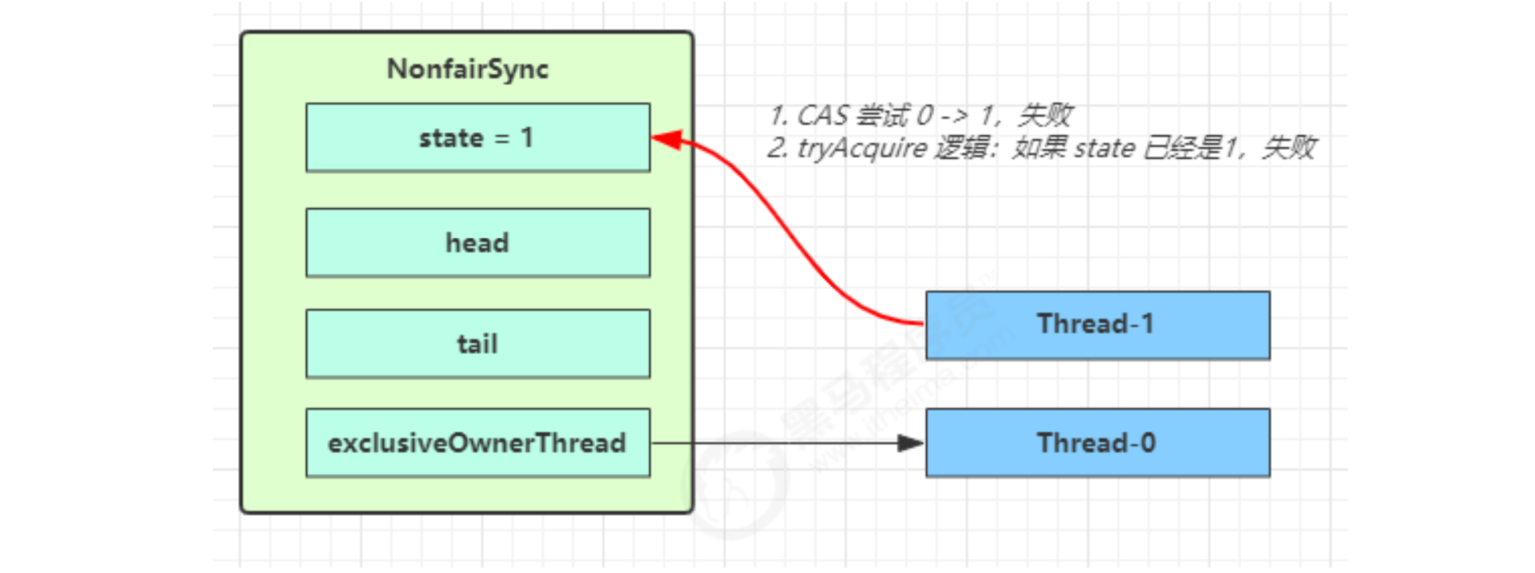

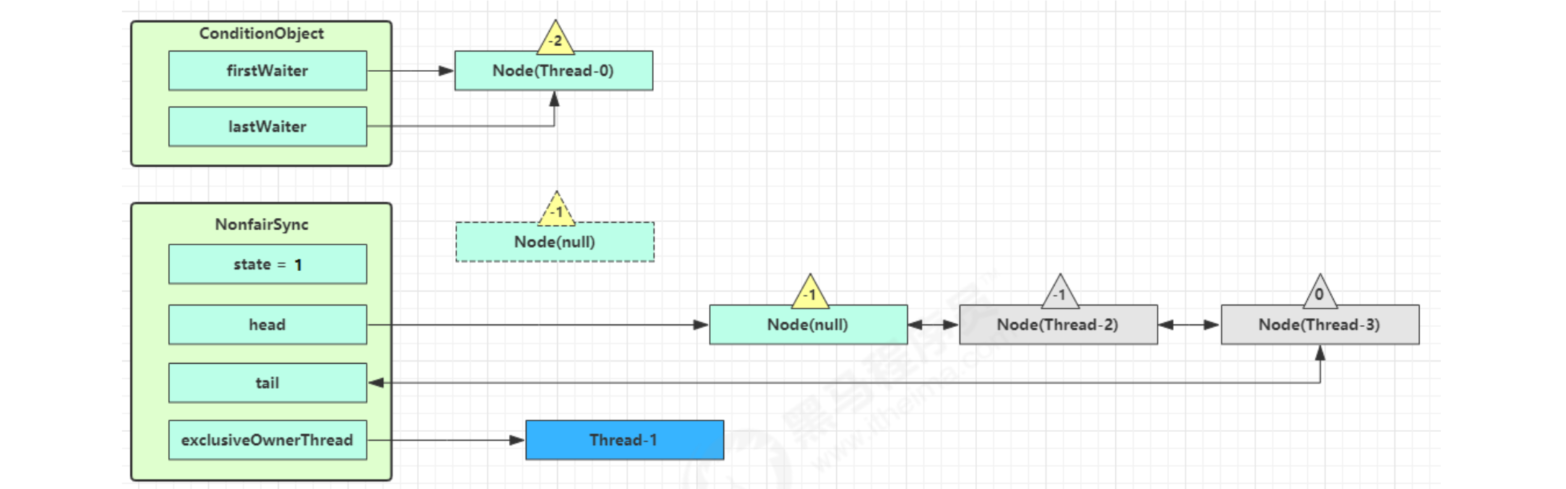

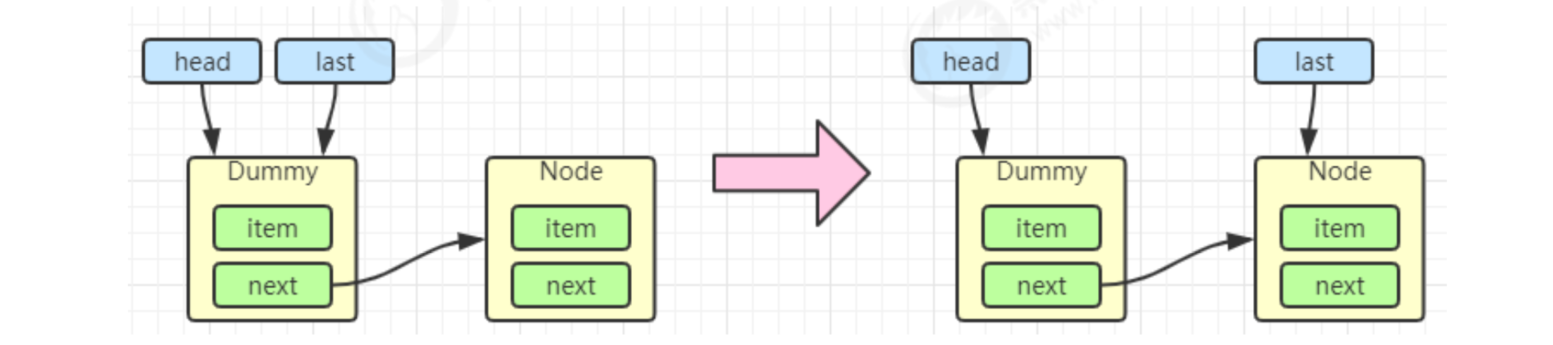

Thread-1 executed

- CAS tried to change state from 0 to 1, but failed

- Enter the tryAcquire logic. At this time, the state is already 1, and the result still fails

- Next, enter the addWaiter logic to construct the Node queue

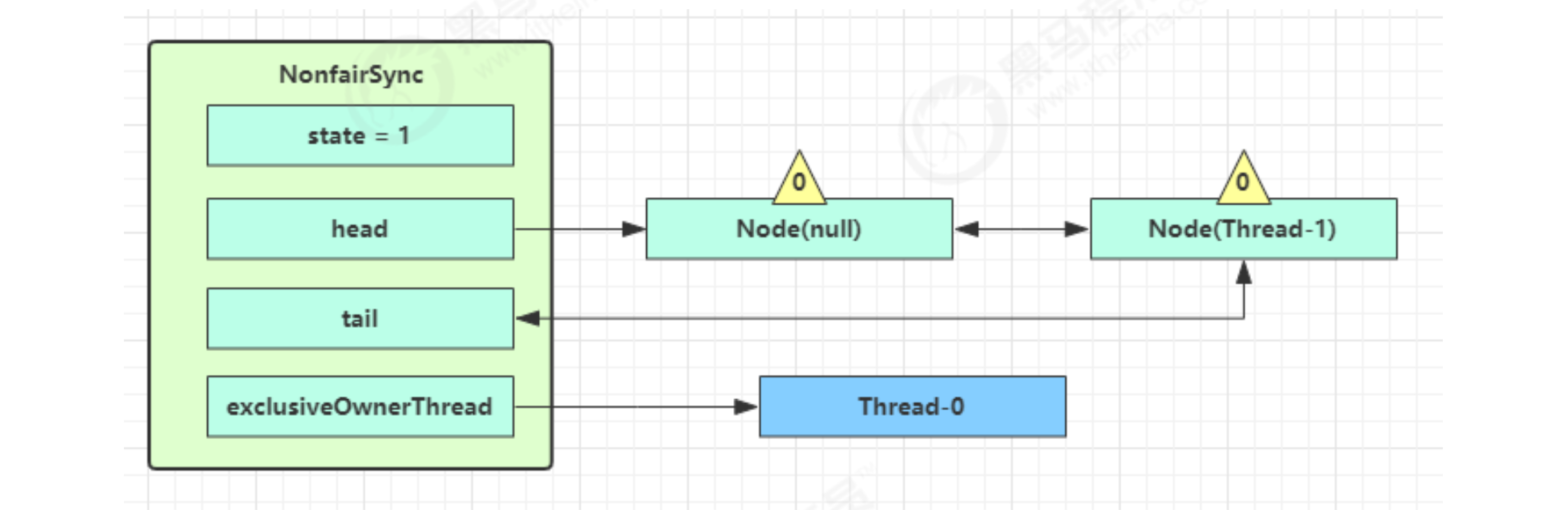

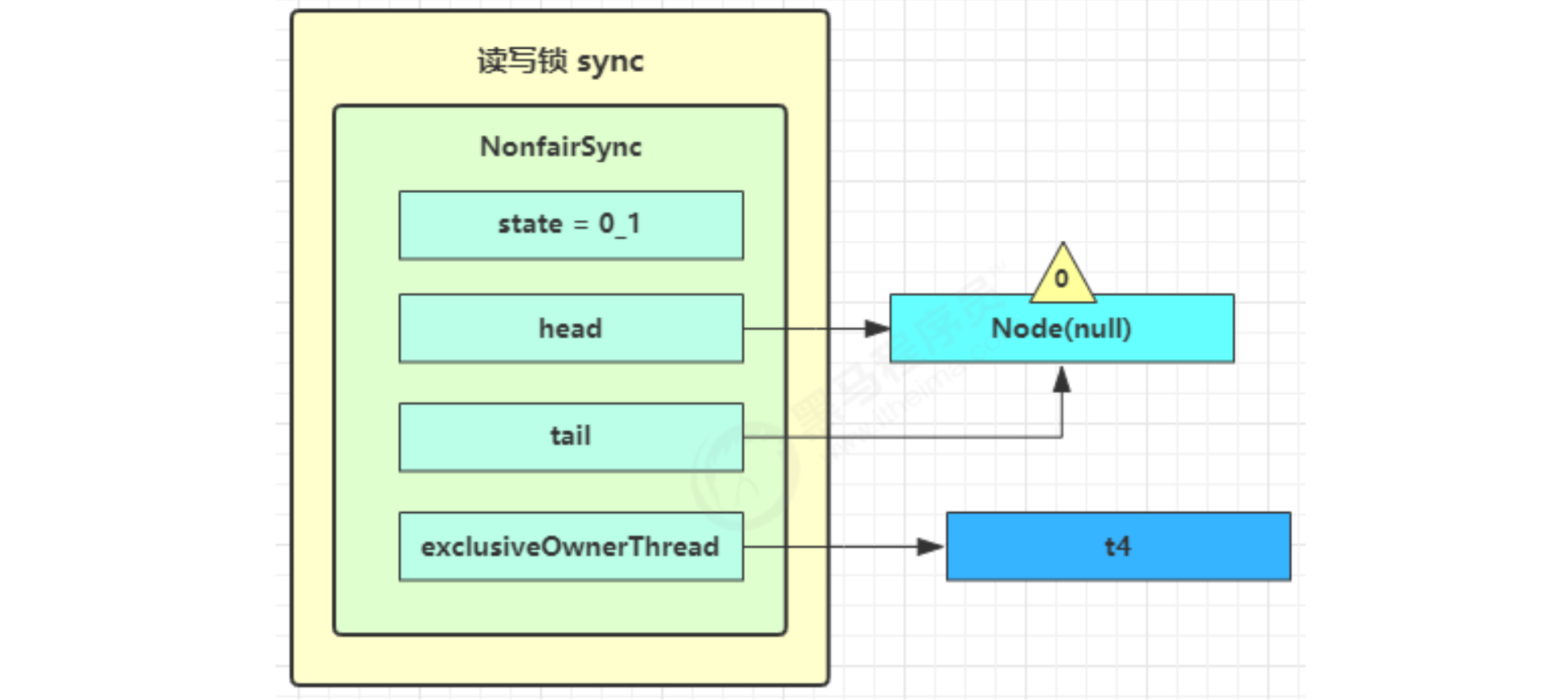

- The yellow triangle in the figure indicates the waitStatus status of the Node, where 0 is the default normal status

- Node creation is lazy

- The first Node is called Dummy or sentinel. It is used to occupy bits and is not associated with threads

The current thread enters the acquirequeueueueueueueueued logic

- Acquirequeueueued will keep trying to obtain locks in an endless loop, and enter park blocking after failure

- If you are next to the head (second), try to acquire the lock again. Of course, the state is still 1 and fails

- Enter the shouldParkAfterFailedAcquire logic, change the waitStatus of the precursor node, that is, head, to - 1, and return false this time

- shouldParkAfterFailedAcquire returns to acquirequeueueueued after execution. Try to acquire the lock again. Of course, the state is still 1 and fails

- When you enter shouldParkAfterFailedAcquire again, because the waitStatus of its precursor node is - 1, true is returned this time

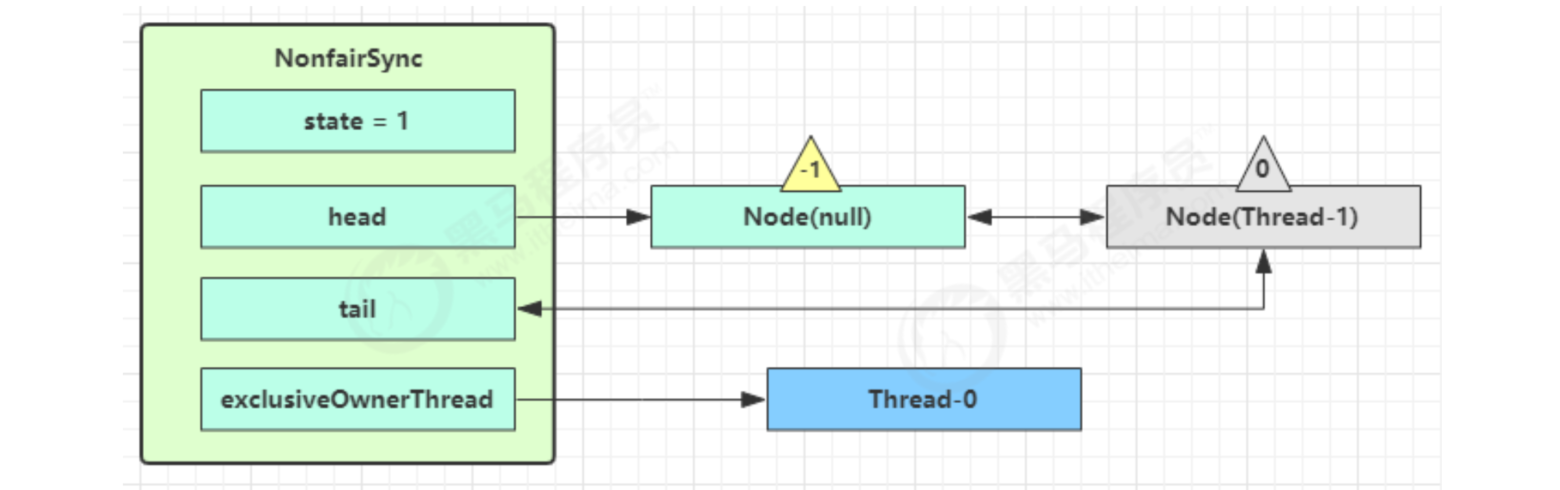

- Enter parkAndCheckInterrupt, Thread-1 park (gray)

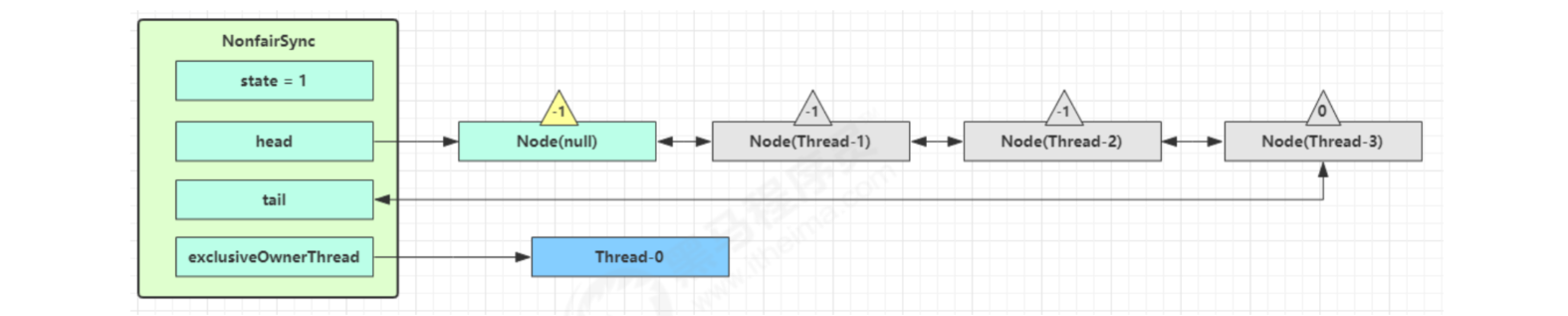

Again, multiple threads go through the above process, and the competition fails, which becomes like this

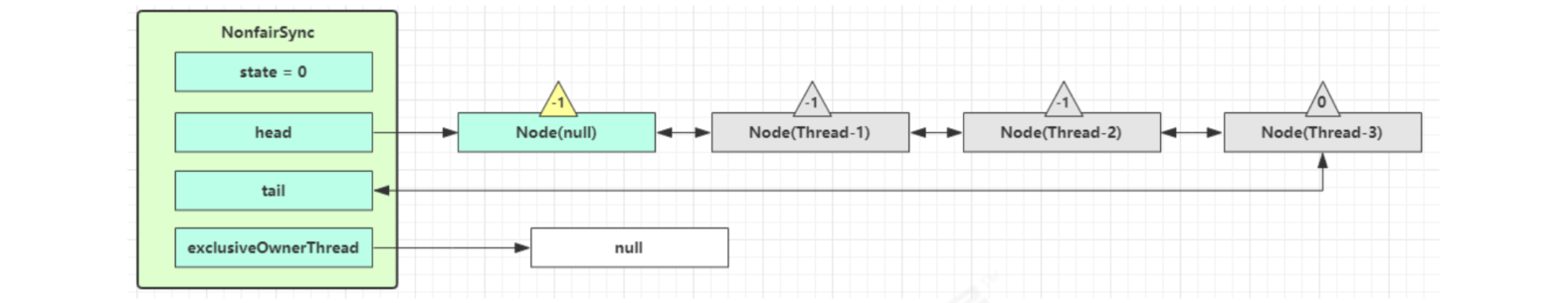

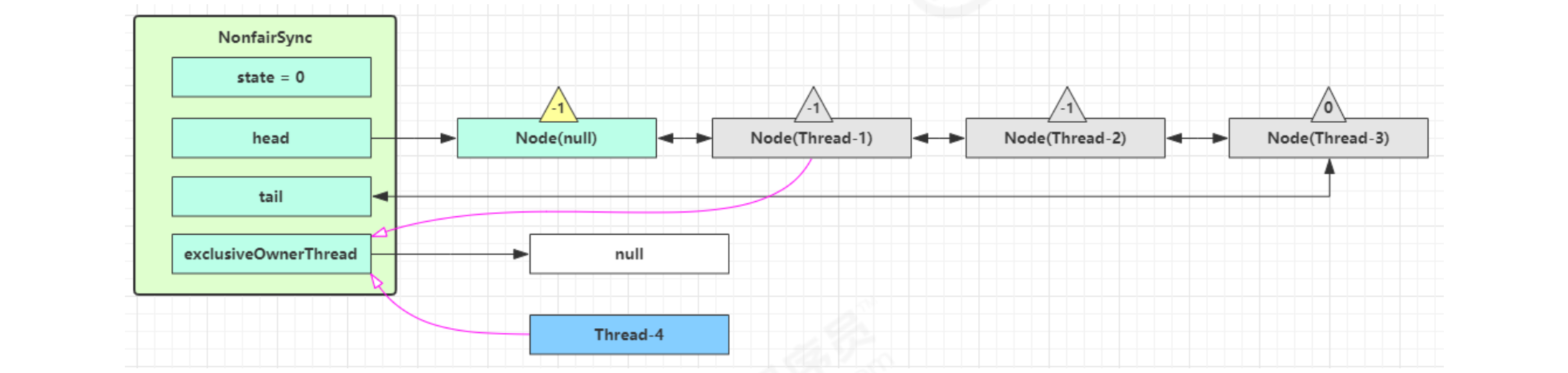

Thread-0 releases the lock and enters the tryRelease process. If successful

- Set exclusiveOwnerThread to null

- state = 0

The current queue is not null, and the waitstatus of head = - 1. Enter the unparksuccess process

Find the Node closest to the head in the queue (not cancelled), and unpark will resume its operation. In this case, it is Thread-1

Return to the acquirequeueueueueued process of Thread-1

If the lock is successful (no competition), it will be set

- exclusiveOwnerThread is Thread-1, state = 1

- The head points to the Node where Thread-1 is just located, and the Node clears the Thread

- The original head can be garbage collected because it is disconnected from the linked list

- If there are other threads competing at this time (unfair embodiment), for example, Thread-4 comes at this time

If Thread-4 takes the lead again

- Thread-4 is set to exclusiveOwnerThread, state = 1

- Thread-1 enters the acquirequeueueueueueueueued process again, fails to obtain the lock, and re enters the park block

Lock source code

// Sync inherited from AQS

static final class NonfairSync extends Sync {

private static final long serialVersionUID = 7316153563782823691L;

// Lock implementation

final void lock() {

// First, use cas to try (only once) to change the state from 0 to 1. If successful, it means that an exclusive lock has been obtained

if (compareAndSetState(0, 1))

setExclusiveOwnerThread(Thread.currentThread());

else

// If the attempt fails, enter a

acquire(1);

}

// A method inherited from AQS, which is easy to read, is placed here

public final void acquire(int arg) {

// ㈡ tryAcquire

if (

!tryAcquire(arg) &&

// When tryAcquire returns false, addWaiter four is called first, followed by acquirequeueueueued five

acquireQueued(addWaiter(Node.EXCLUSIVE), arg)

) {

selfInterrupt();

}

}

// Two into three

protected final boolean tryAcquire(int acquires) {

return nonfairTryAcquire(acquires);

}

// Three inherited methods are easy to read and placed here

final boolean nonfairTryAcquire(int acquires) {

final Thread current = Thread.currentThread();

int c = getState();

// If the lock has not been obtained

if (c == 0) {

// Try to obtain with cas, which reflects the unfairness: do not check the AQS queue

if (compareAndSetState(0, acquires)) {

setExclusiveOwnerThread(current);

return true;

}

}

// If the lock has been obtained and the thread is still the current thread, it indicates that lock reentry has occurred

else if (current == getExclusiveOwnerThread()) {

// state++

int nextc = c + acquires;

if (nextc < 0) // overflow

throw new Error("Maximum lock count exceeded");

setState(nextc);

return true;

}

// Failed to get. Go back to calling

return false;

}

// IV. The method inherited from AQS is easy to read and placed here

private Node addWaiter(Node mode) {

// Associate the current thread to a Node object in exclusive mode

Node node = new Node(Thread.currentThread(), mode);

// If tail is not null, cas tries to add the Node object to the end of AQS queue

Node pred = tail;

if (pred != null) {

node.prev = pred;

if (compareAndSetTail(pred, node)) {

// Bidirectional linked list

pred.next = node;

return node;

}

}

// Try to add Node to AQS and enter six steps

enq(node);

return node;

}

// Six AQS inherited methods, easy to read, put here

private Node enq(final Node node) {

for (; ; ) {

Node t = tail;

if (t == null) {

// Not yet. Set the head as the sentinel node (no corresponding thread, status 0)

if (compareAndSetHead(new Node())) {

tail = head;

}

} else {

// cas tries to add the Node object to the end of AQS queue

node.prev = t;

if (compareAndSetTail(t, node)) {

t.next = node;

return t;

}

}

}

}

// Five AQS inherited methods, easy to read, put here

final boolean acquireQueued(final Node node, int arg) {

boolean failed = true;

try {

boolean interrupted = false;

for (; ; ) {

final Node p = node.predecessor();

// The previous node is head, which means it's your turn (the node corresponding to the current thread). Try to get it

if (p == head && tryAcquire(arg)) {

// Get success, set yourself (the node corresponding to the current thread) as head

setHead(node);

// Previous node

p.next = null;

failed = false;

// Return interrupt flag false

return interrupted;

}

if (

// Judge whether to park and enter seven

shouldParkAfterFailedAcquire(p, node) &&

// park and wait. At this time, the status of the Node is set to Node.SIGNAL

parkAndCheckInterrupt()

) {

interrupted = true;

}

}

} finally {

if (failed)

cancelAcquire(node);

}

}

// VII. The method inherited from AQS is easy to read and placed here

private static boolean shouldParkAfterFailedAcquire(Node pred, Node node) {

// Gets the status of the previous node

int ws = pred.waitStatus;

if (ws == Node.SIGNAL) {

// If the previous node is blocking, you can also block it yourself

return true;

}

// >0 indicates cancellation status

if (ws > 0) {

// If the previous node is cancelled, the refactoring deletes all previous cancelled nodes and returns to the outer loop for retry

do {

node.prev = pred = pred.prev;

} while (pred.waitStatus > 0);

pred.next = node;

} else {

// It's not blocked this time

// However, if the next retry is unsuccessful, blocking is required. At this time, the status of the previous node needs to be set to Node.SIGNAL

compareAndSetWaitStatus(pred, ws, Node.SIGNAL);

}

return false;

}

// Eight blocks the current thread

private final boolean parkAndCheckInterrupt() {

LockSupport.park(this);

return Thread.interrupted();

}

}

be careful

Whether unpark is required is determined by the waitStatus == Node.SIGNAL of the predecessor node of the current node, not the waitStatus of the current node

Unlock source code

// Sync inherited from AQS

static final class NonfairSync extends Sync {

// Unlock implementation

public void unlock() {

sync.release(1);

}

// The method inherited from AQS is easy to read and placed here

public final boolean release(int arg) {

// Try to release the lock and enter a

if (tryRelease(arg)) {

// Queue header node unpark

Node h = head;

if (

// Queue is not null

h != null &&

// Only when waitStatus == Node.SIGNAL, unpark is required

h.waitStatus != 0

) {

// Unpark the thread waiting in AQS and enters the second stage

unparkSuccessor(h);

}

return true;

}

return false;

}

// An inherited method, easy to read, is placed here

protected final boolean tryRelease(int releases) {

// state--

int c = getState() - releases;

if (Thread.currentThread() != getExclusiveOwnerThread())

throw new IllegalMonitorStateException();

boolean free = false;

// Lock reentry is supported. It can be released successfully only when the state is reduced to 0

if (c == 0) {

free = true;

setExclusiveOwnerThread(null);

}

setState(c);

return free;

}

// II. The method inherited from AQS is easy to read and placed here

private void unparkSuccessor(Node node) {

// If the status is Node.SIGNAL, try to reset the status to 0

// It's ok if you don't succeed

int ws = node.waitStatus;

if (ws < 0) {

compareAndSetWaitStatus(node, ws, 0);

}

// Find the node that needs unpark, but this node is separated from the AQS queue and is completed by the wake-up node

Node s = node.next;

// Regardless of the cancelled nodes, find the top node of the AQS queue that needs unpark from back to front

if (s == null || s.waitStatus > 0) {

s = null;

for (Node t = tail; t != null && t != node; t = t.prev)

if (t.waitStatus <= 0)

s = t;

}

if (s != null)

LockSupport.unpark(s.thread);

}

}

2. Reentrant principle

static final class NonfairSync extends Sync {

// ...

// Sync inherited method, easy to read, put here

final boolean nonfairTryAcquire(int acquires) {

final Thread current = Thread.currentThread();

int c = getState();

if (c == 0) {

if (compareAndSetState(0, acquires)) {

setExclusiveOwnerThread(current);

return true;

}

}

// If the lock has been obtained and the thread is still the current thread, it indicates that lock reentry has occurred

else if (current == getExclusiveOwnerThread()) {

// state++

int nextc = c + acquires;

if (nextc < 0) // overflow

throw new Error("Maximum lock count exceeded");

setState(nextc);

return true;

}

return false;

}

// Sync inherited method, easy to read, put here

protected final boolean tryRelease(int releases) {

// state--

int c = getState() - releases;

if (Thread.currentThread() != getExclusiveOwnerThread())

throw new IllegalMonitorStateException();

boolean free = false;

// Lock reentry is supported. It can be released successfully only when the state is reduced to 0

if (c == 0) {

free = true;

setExclusiveOwnerThread(null);

}

setState(c);

return free;

}

}

3. Interruptible principle

Non interruptible mode

In this mode, even if it is interrupted, it will still reside in the AQS queue. You can't know that you are interrupted until you get the lock

// Sync inherited from AQS

static final class NonfairSync extends Sync {

// ...

private final boolean parkAndCheckInterrupt() {

// If the break flag is already true, the park will be invalidated

LockSupport.park(this);

// interrupted clears the break flag

return Thread.interrupted();

}

final boolean acquireQueued(final Node node, int arg) {

boolean failed = true;

try {

boolean interrupted = false;

for (; ; ) {

final Node p = node.predecessor();

if (p == head && tryAcquire(arg)) {

setHead(node);

p.next = null;

failed = false;

// You still need to obtain the lock before you can return to the broken state

return interrupted;

}

if (

shouldParkAfterFailedAcquire(p, node) &&

parkAndCheckInterrupt()

) {

// If the interrupt is awakened, the interrupt status returned is true

interrupted = true;

}

}

} finally {

if (failed)

cancelAcquire(node);

}

}

public final void acquire(int arg) {

if (

!tryAcquire(arg) &&

acquireQueued(addWaiter(Node.EXCLUSIVE), arg)

) {

// If the interrupt status is true

selfInterrupt();

}

}

static void selfInterrupt() {

// Regenerate an interrupt

Thread.currentThread().interrupt();

}

}

Interruptible mode

static final class NonfairSync extends Sync {

public final void acquireInterruptibly(int arg) throws InterruptedException {

if (Thread.interrupted())

throw new InterruptedException();

// If the lock is not obtained, enter one

if (!tryAcquire(arg))

doAcquireInterruptibly(arg);

}

// A interruptible lock acquisition process

private void doAcquireInterruptibly(int arg) throws InterruptedException {

final Node node = addWaiter(Node.EXCLUSIVE);

boolean failed = true;

try {

for (; ; ) {

final Node p = node.predecessor();

if (p == head && tryAcquire(arg)) {

setHead(node);

p.next = null; // help GC

failed = false;

return;

}

if (shouldParkAfterFailedAcquire(p, node) &&

parkAndCheckInterrupt()) {

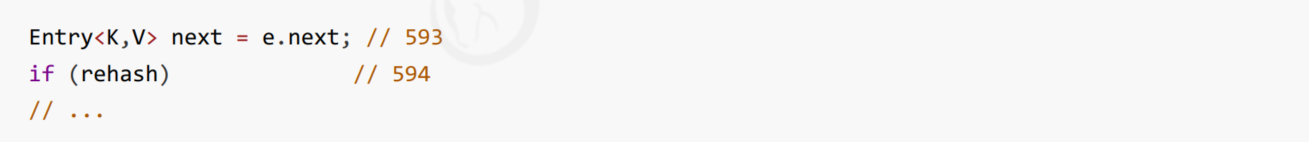

// In the process of park, if it is interrupt ed, it will enter this field

// At this time, an exception is thrown without entering for (;) again

throw new InterruptedException();

}

}

} finally {

if (failed)

cancelAcquire(node);

}

}

}

4. Implementation principle of fair lock

static final class FairSync extends Sync {

private static final long serialVersionUID = -3000897897090466540L;

final void lock() {

acquire(1);

}

// The method inherited from AQS is easy to read and placed here

public final void acquire(int arg) {

if (

!tryAcquire(arg) &&

acquireQueued(addWaiter(Node.EXCLUSIVE), arg)

) {

selfInterrupt();

}

}

// The main difference from non fair lock is the implementation of tryAcquire method

protected final boolean tryAcquire(int acquires) {

final Thread current = Thread.currentThread();

int c = getState();

if (c == 0) {

// First check whether there are precursor nodes in the AQS queue. If not, compete

if (!hasQueuedPredecessors() &&

compareAndSetState(0, acquires)) {

setExclusiveOwnerThread(current);

return true;

}

} else if (current == getExclusiveOwnerThread()) {

int nextc = c + acquires;

if (nextc < 0)

throw new Error("Maximum lock count exceeded");

setState(nextc);

return true;

}

return false;

}

// A method inherited from AQS, which is easy to read, is placed here

public final boolean hasQueuedPredecessors() {

Node t = tail;

Node h = head;

Node s;

// h != t indicates that there are nodes in the queue

return h != t &&

(

// (s = h.next) == null indicates whether there is a dick in the queue

(s = h.next) == null ||

// Or the second thread in the queue is not this thread

s.thread != Thread.currentThread()

);

}

}

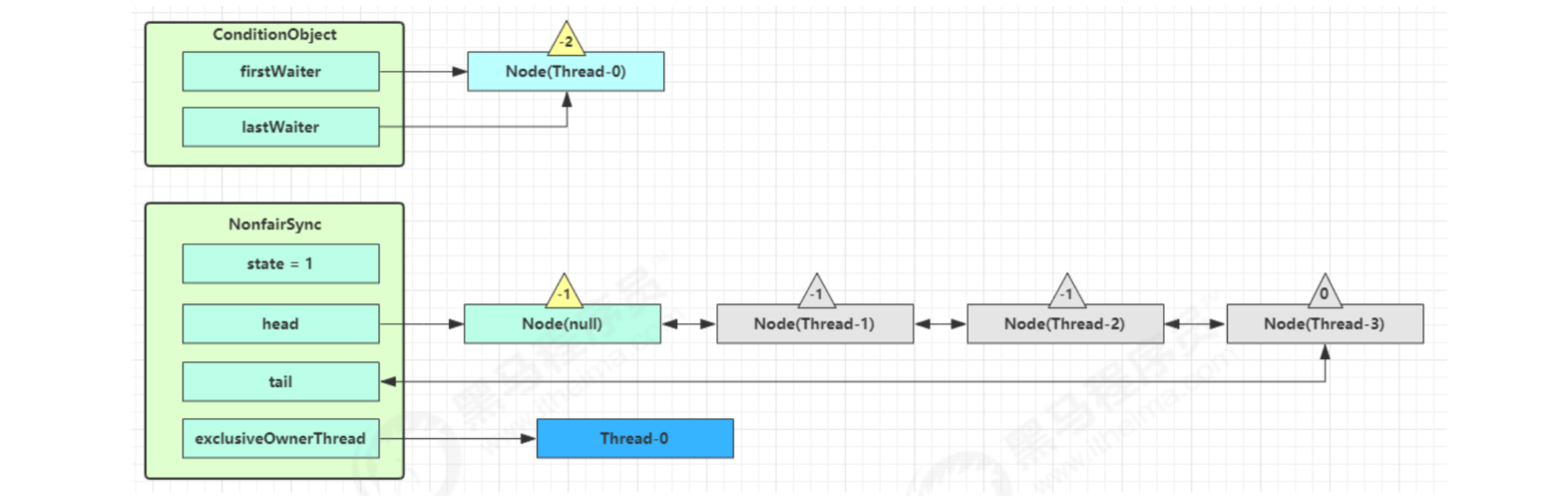

5. Realization principle of conditional variable

Each condition variable actually corresponds to a waiting queue, and its implementation class is ConditionObject

await process

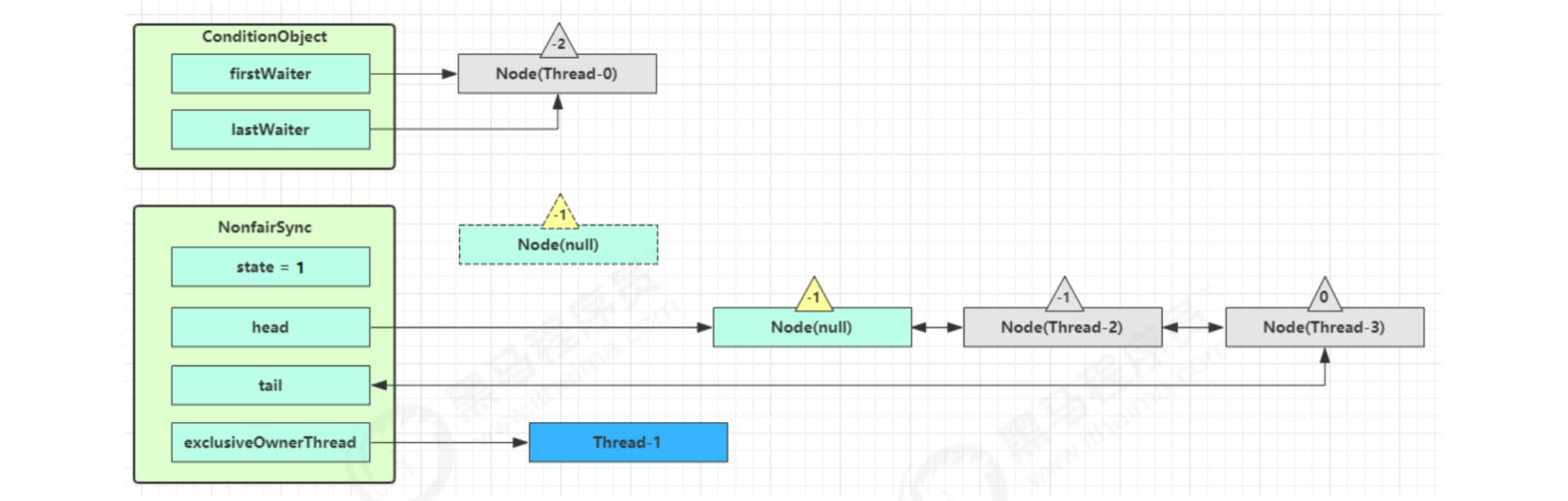

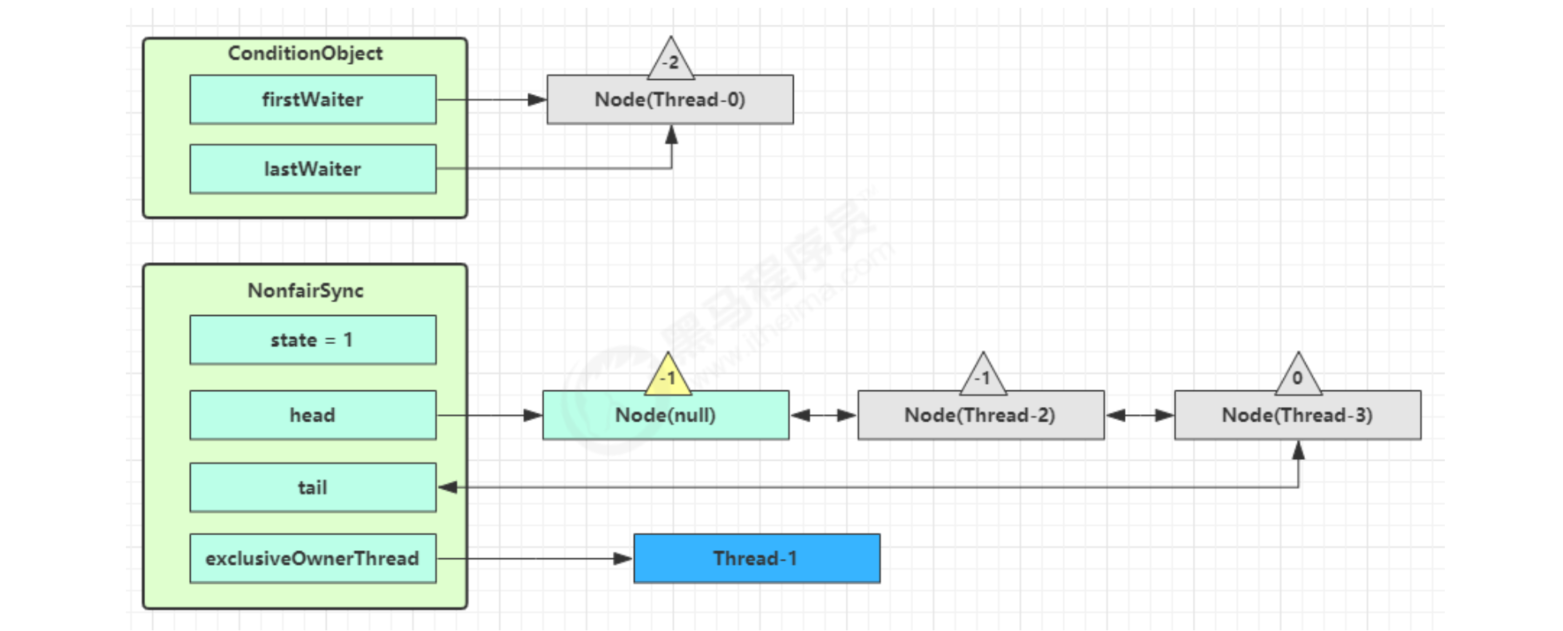

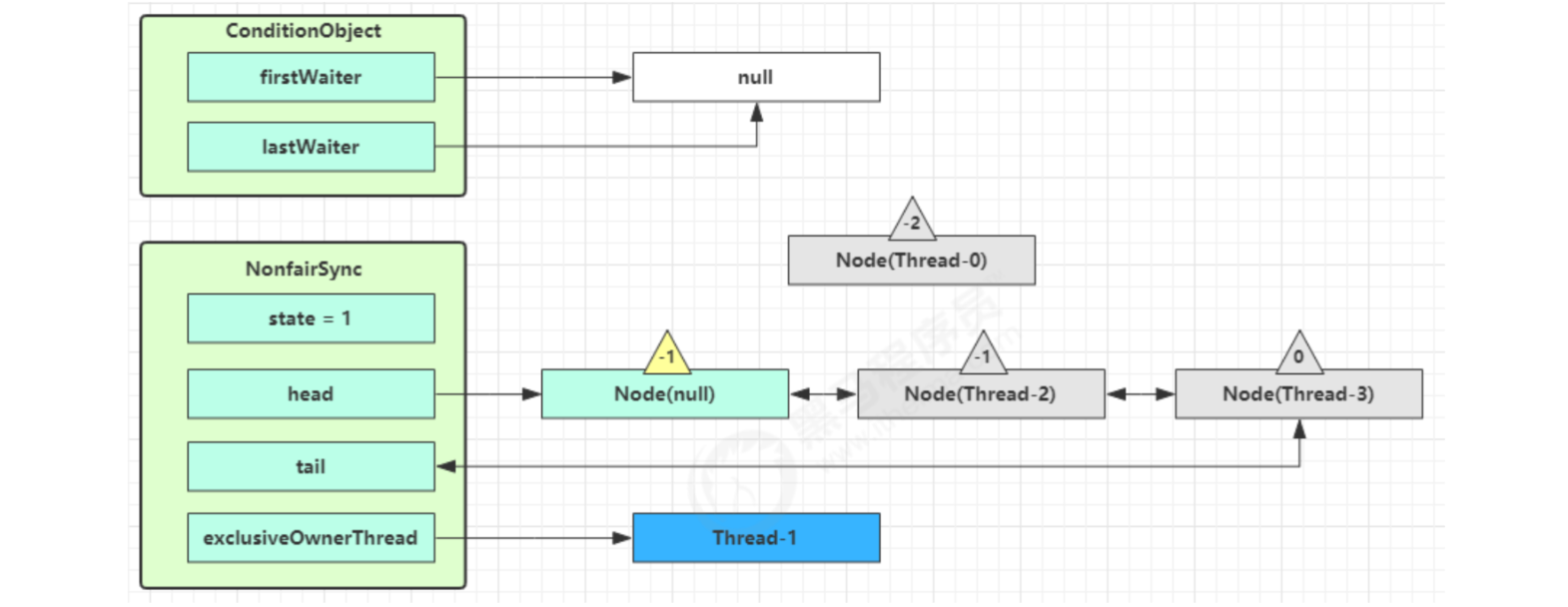

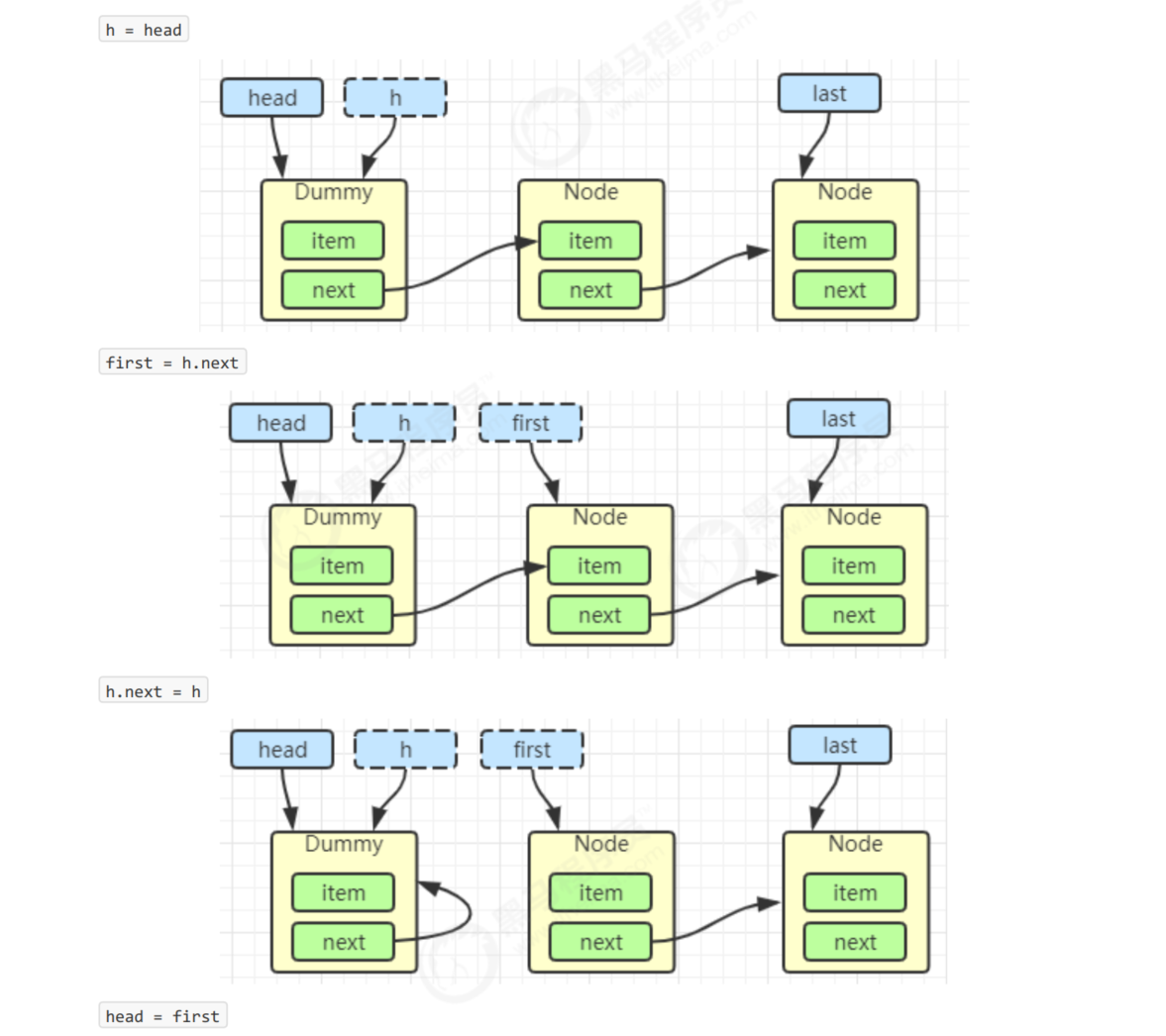

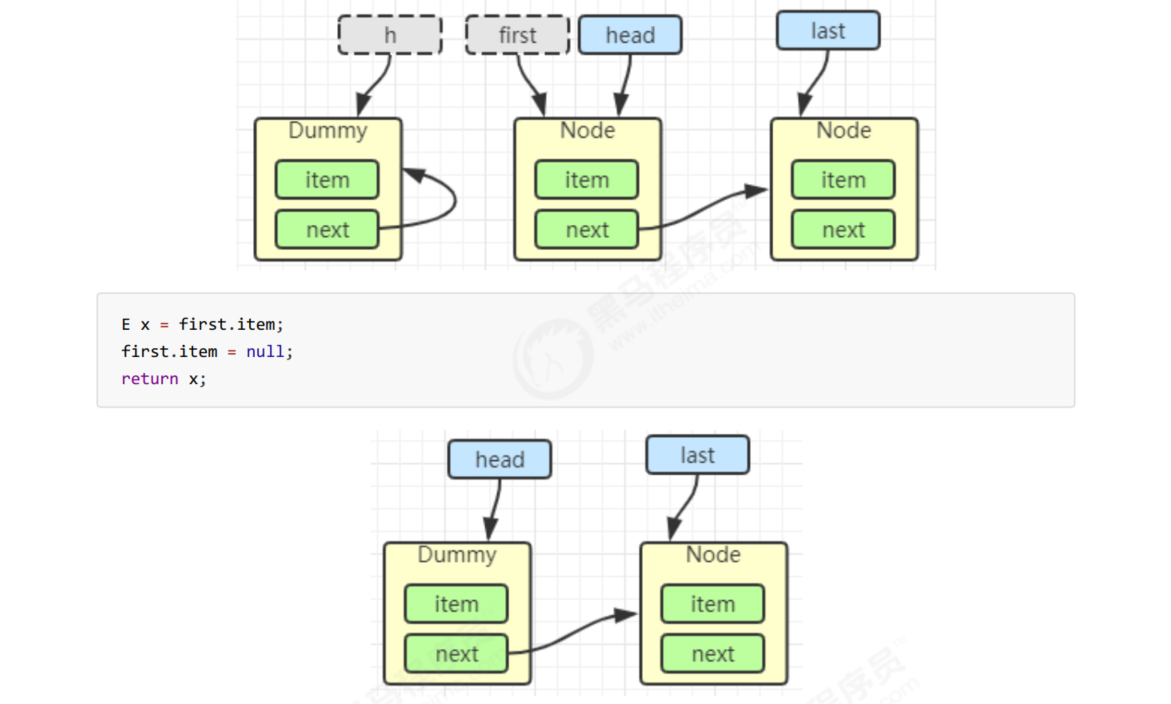

Start Thread-0 to hold the lock, call await, and enter the addConditionWaiter process of ConditionObject

Create a new Node whose status is - 2 (Node.CONDITION), associate Thread-0, and add it to the tail of the waiting queue

Next, enter the fully release process of AQS to release the lock on the synchronizer

The next node in the unpark AQS queue competes for the lock. Assuming that there are no other competing threads, the Thread-1 competition succeeds

park blocking Thread-0

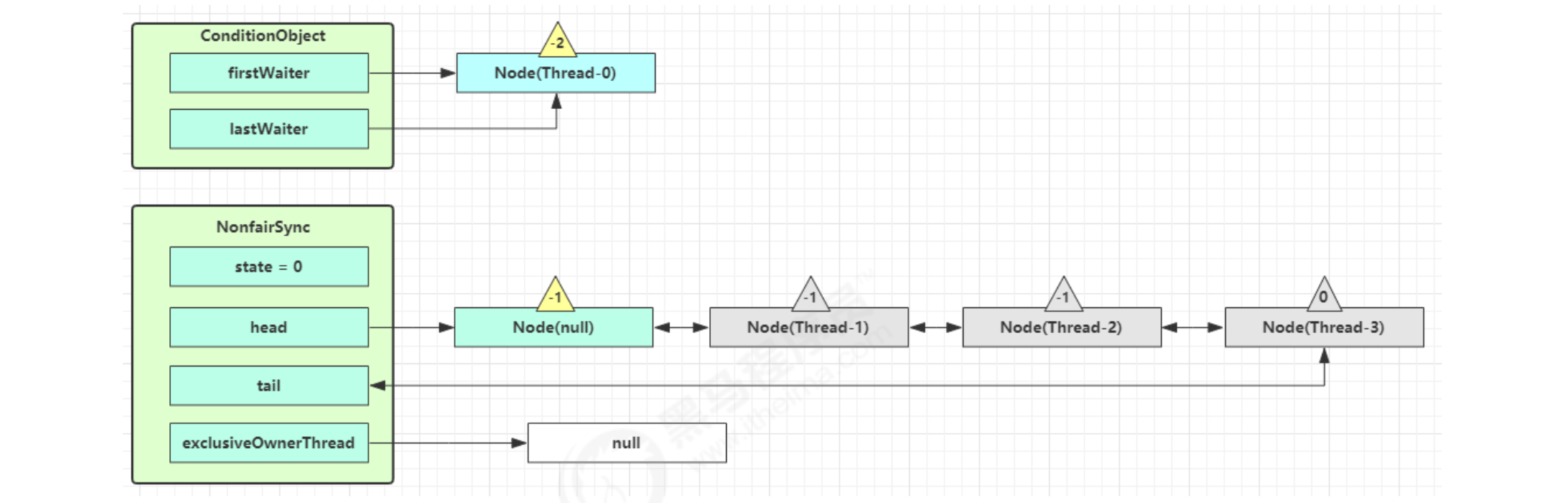

signal process

Suppose Thread-1 wants to wake up Thread-0

Enter the doSignal process of ConditionObject and obtain the first Node in the waiting queue, that is, the Node where Thread-0 is located

Execute the transferForSignal process, add the Node to the end of the AQS queue, change the waitStatus of Thread-0 to 0, and the waitStatus of Thread-3 to - 1

Thread-1 releases the lock and enters the unlock process

Source code

public class ConditionObject implements Condition, java.io.Serializable {

private static final long serialVersionUID = 1173984872572414699L;

// First waiting node

private transient Node firstWaiter;

// Last waiting node

private transient Node lastWaiter;

public ConditionObject() {

}

// Add a Node to the waiting queue

private Node addConditionWaiter() {

Node t = lastWaiter;

// All cancelled nodes are deleted from the queue linked list, as shown in Figure 2

if (t != null && t.waitStatus != Node.CONDITION) {

unlinkCancelledWaiters();

t = lastWaiter;

}

// Create a new Node associated with the current thread and add it to the end of the queue

Node node = new Node(Thread.currentThread(), Node.CONDITION);

if (t == null)

firstWaiter = node;

else

t.nextWaiter = node;

lastWaiter = node;

return node;

}

// Wake up - transfer the first node not cancelled to the AQS queue

private void doSignal(Node first) {

do {

// It's already the tail node

if ((firstWaiter = first.nextWaiter) == null) {

lastWaiter = null;

}

first.nextWaiter = null;

} while (

// Transfer the nodes in the waiting queue to the AQS queue. If it is unsuccessful and there are still nodes, continue to cycle for three times

!transferForSignal(first) &&

// Queue and node

(first = firstWaiter) != null

);

}

// External class methods are easy to read and placed here

// 3. If the node status is cancel, return false to indicate that the transfer failed, otherwise the transfer succeeded

final boolean transferForSignal(Node node) {

// If the status is no longer Node.CONDITION, it indicates that it has been cancelled

if (!compareAndSetWaitStatus(node, Node.CONDITION, 0))

return false;

// Join the end of AQS queue

Node p = enq(node);

int ws = p.waitStatus;

if (

// The previous node was cancelled

ws > 0 ||

// The status of the previous node cannot be set to Node.SIGNAL

!compareAndSetWaitStatus(p, ws, Node.SIGNAL)

) {

// unpark unblocks the thread to resynchronize the state

LockSupport.unpark(node.thread);

}

return true;

}

// Wake up all - wait for all nodes in the queue to transfer to the AQS queue

private void doSignalAll(Node first) {

lastWaiter = firstWaiter = null;

do {

Node next = first.nextWaiter;

first.nextWaiter = null;

transferForSignal(first);

first = next;

} while (first != null);

}

// ㈡

private void unlinkCancelledWaiters() {

// ...

}

// Wake up - you must hold a lock to wake up, so there is no need to consider locking in doSignal

public final void signal() {

if (!isHeldExclusively())

throw new IllegalMonitorStateException();

Node first = firstWaiter;

if (first != null)

doSignal(first);

}

// Wake up all - you must hold a lock to wake up, so there is no need to consider locking in dosignallall

public final void signalAll() {

if (!isHeldExclusively())

throw new IllegalMonitorStateException();

Node first = firstWaiter;

if (first != null)

doSignalAll(first);

}

// Non interruptible wait - until awakened

public final void awaitUninterruptibly() {

// Add a Node to the waiting queue, as shown in Figure 1

Node node = addConditionWaiter();

// Release the lock held by the node, see Figure 4

int savedState = fullyRelease(node);

boolean interrupted = false;

// If the node has not been transferred to the AQS queue, it will be blocked

while (!isOnSyncQueue(node)) {

// park blocking

LockSupport.park(this);

// If it is interrupted, only the interruption status is set

if (Thread.interrupted())

interrupted = true;

}

// After waking up, try to compete for the lock. If it fails, enter the AQS queue

if (acquireQueued(node, savedState) || interrupted)

selfInterrupt();

}

// External class methods are easy to read and placed here

// Fourth, because a thread may re-enter, it is necessary to release all States

final int fullyRelease(Node node) {

boolean failed = true;

try {

int savedState = getState();

if (release(savedState)) {

failed = false;

return savedState;

} else {

throw new IllegalMonitorStateException();

}

} finally {

if (failed)

node.waitStatus = Node.CANCELLED;

}

}

// Interrupt mode - resets the interrupt state when exiting the wait

private static final int REINTERRUPT = 1;

// Break mode - throw an exception when exiting the wait

private static final int THROW_IE = -1;

// Judge interrupt mode

private int checkInterruptWhileWaiting(Node node) {

return Thread.interrupted() ?

(transferAfterCancelledWait(node) ? THROW_IE : REINTERRUPT) :

0;

}

// V. application interrupt mode

private void reportInterruptAfterWait(int interruptMode)

throws InterruptedException {

if (interruptMode == THROW_IE)

throw new InterruptedException();

else if (interruptMode == REINTERRUPT)

selfInterrupt();

}

// Wait until awakened or interrupted

public final void await() throws InterruptedException {

if (Thread.interrupted()) {

throw new InterruptedException();

}

// Add a Node to the waiting queue, as shown in Figure 1

Node node = addConditionWaiter();

// Release the lock held by the node

int savedState = fullyRelease(node);

int interruptMode = 0;

// If the node has not been transferred to the AQS queue, it will be blocked

while (!isOnSyncQueue(node)) {

// park blocking

LockSupport.park(this);

// If interrupted, exit the waiting queue

if ((interruptMode = checkInterruptWhileWaiting(node)) != 0)

break;

}

// After exiting the waiting queue, you also need to obtain the lock of the AQS queue

if (acquireQueued(node, savedState) && interruptMode != THROW_IE)

interruptMode = REINTERRUPT;

// All cancelled nodes are deleted from the queue linked list, as shown in Figure 2

if (node.nextWaiter != null)

unlinkCancelledWaiters();

// Apply interrupt mode, see v

if (interruptMode != 0)

reportInterruptAfterWait(interruptMode);

}

// Wait - until awakened or interrupted or timed out

public final long awaitNanos(long nanosTimeout) throws InterruptedException {

if (Thread.interrupted()) {

throw new InterruptedException();

}

// Add a Node to the waiting queue, as shown in Figure 1

Node node = addConditionWaiter();

// Release the lock held by the node

int savedState = fullyRelease(node);

// Get deadline

final long deadline = System.nanoTime() + nanosTimeout;

int interruptMode = 0;

// If the node has not been transferred to the AQS queue, it will be blocked

while (!isOnSyncQueue(node)) {

// Timed out, exiting the waiting queue

if (nanosTimeout <= 0L) {

transferAfterCancelledWait(node);

break;

}

// park blocks for a certain time, and spinForTimeoutThreshold is 1000 ns

if (nanosTimeout >= spinForTimeoutThreshold)

LockSupport.parkNanos(this, nanosTimeout);

// If interrupted, exit the waiting queue

if ((interruptMode = checkInterruptWhileWaiting(node)) != 0)

break;

nanosTimeout = deadline - System.nanoTime();

}

// After exiting the waiting queue, you also need to obtain the lock of the AQS queue

if (acquireQueued(node, savedState) && interruptMode != THROW_IE)

interruptMode = REINTERRUPT;

// All cancelled nodes are deleted from the queue linked list, as shown in Figure 2

if (node.nextWaiter != null)

unlinkCancelledWaiters();

// Apply interrupt mode, see v

if (interruptMode != 0)

reportInterruptAfterWait(interruptMode);

return deadline - System.nanoTime();

}

// Wait - until awakened or interrupted or timed out, the logic is similar to awaitNanos

public final boolean awaitUntil(Date deadline) throws InterruptedException {

// ...

}

// Wait - until awakened or interrupted or timed out, the logic is similar to awaitNanos

public final boolean await(long time, TimeUnit unit) throws InterruptedException {

// ...

}

// Tool method omitted

}

3. Read write lock

3.1 ReentrantReadWriteLock

When the read operation is much higher than the write operation, the read-write lock is used to make the read-read concurrent and improve the performance. Similar to select... From... Lock in share mode in the database

A data container class is provided, which uses the read() method for reading lock protection data and the write() method for writing lock protection data

@Slf4j(topic = "c.DataContainer")

class DataContainer {

private Object data;

private final ReentrantReadWriteLock rw = new ReentrantReadWriteLock();

private final ReentrantReadWriteLock.ReadLock r = rw.readLock();

private final ReentrantReadWriteLock.WriteLock w = rw.writeLock();

public Object read() {

log.debug("Acquire read lock...");

r.lock();

try {

log.debug("read");

try {

TimeUnit.SECONDS.sleep(1);

} catch (InterruptedException e) {

e.printStackTrace();

}

return data;

} finally {

log.debug("Release read lock...");

r.unlock();

}

}

public void write() {

log.debug("Get write lock...");

w.lock();

try {

log.debug("write in");

try {

TimeUnit.SECONDS.sleep(1);

} catch (InterruptedException e) {

e.printStackTrace();

}

} finally {

log.debug("Release write lock...");

w.unlock();

}

}

}

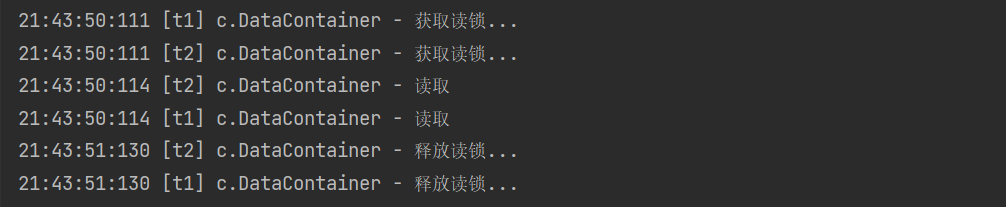

Test read locks - read locks can be concurrent

@Slf4j(topic = "c.TestReadWriteLock")

public class TestReadWriteLock {

public static void main(String[] args) {

DataContainer dataContainer = new DataContainer();

new Thread(() -> {

dataContainer.read();

}, "t1").start();

new Thread(() -> {

dataContainer.read();

}, "t2").start();

}

}

The output result shows that during Thread-0 locking, the read operation of Thread-1 is not affected

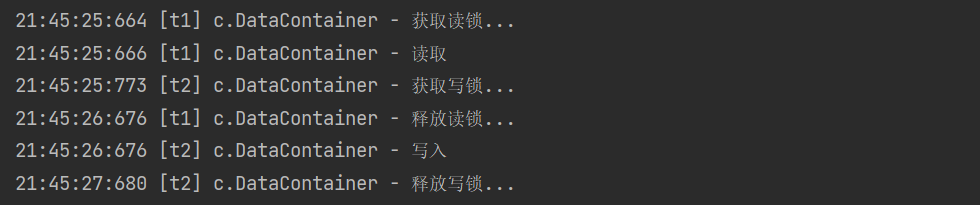

Test read lock write lock mutual blocking

@Slf4j(topic = "c.TestReadWriteLock")

public class TestReadWriteLock {

public static void main(String[] args) throws InterruptedException {

DataContainer dataContainer = new DataContainer();

new Thread(() -> {

dataContainer.read();

}, "t1").start();

Thread.sleep(100);

new Thread(() -> {

dataContainer.write();

}, "t2").start();

}

}

Output results

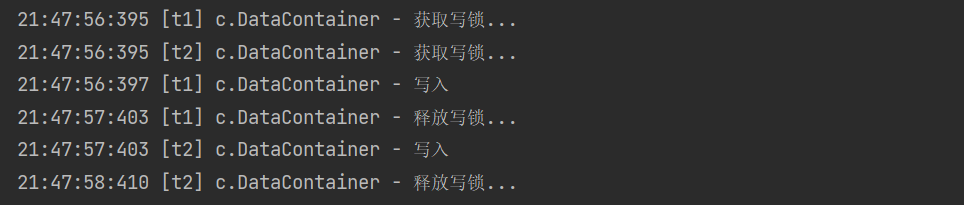

Write locks - write locks are also mutually blocking

@Slf4j(topic = "c.TestReadWriteLock")

public class TestReadWriteLock {

public static void main(String[] args) throws InterruptedException {

DataContainer dataContainer = new DataContainer();

new Thread(() -> {

dataContainer.write();

}, "t1").start();

new Thread(() -> {

dataContainer.write();

}, "t2").start();

}

}

matters needing attention

- Read lock does not support conditional variables

- Upgrade during reentry is not supported: that is, obtaining a write lock while holding a read lock will cause permanent waiting for obtaining a write lock

r.lock();

try {

// ...

w.lock();

try {

// ...

} finally{

w.unlock();

}

} finally{

r.unlock();

}

- Downgrade support on reentry: to obtain a read lock while holding a write lock

class CachedData {

Object data;

// Is it valid? If it fails, recalculate the data

volatile boolean cacheValid;

final ReentrantReadWriteLock rwl = new ReentrantReadWriteLock();

void processCachedData() {

rwl.readLock().lock();

if (!cacheValid) {

// The read lock must be released before acquiring the write lock

rwl.readLock().unlock();

rwl.writeLock().lock();

try {

// Judge whether other threads have obtained the write lock and updated the cache to avoid repeated updates

if (!cacheValid) {

data = ...

cacheValid = true;

}

// Demote to read lock and release the write lock, so that other threads can read the cache

rwl.readLock().lock();

} finally {

rwl.writeLock().unlock();

}

}

// When you run out of data, release the read lock

try {

use(data);

} finally {

rwl.readLock().unlock();

}

}

}

*Application cache

1. Cache update strategy

When updating, clear the cache first or update the database first

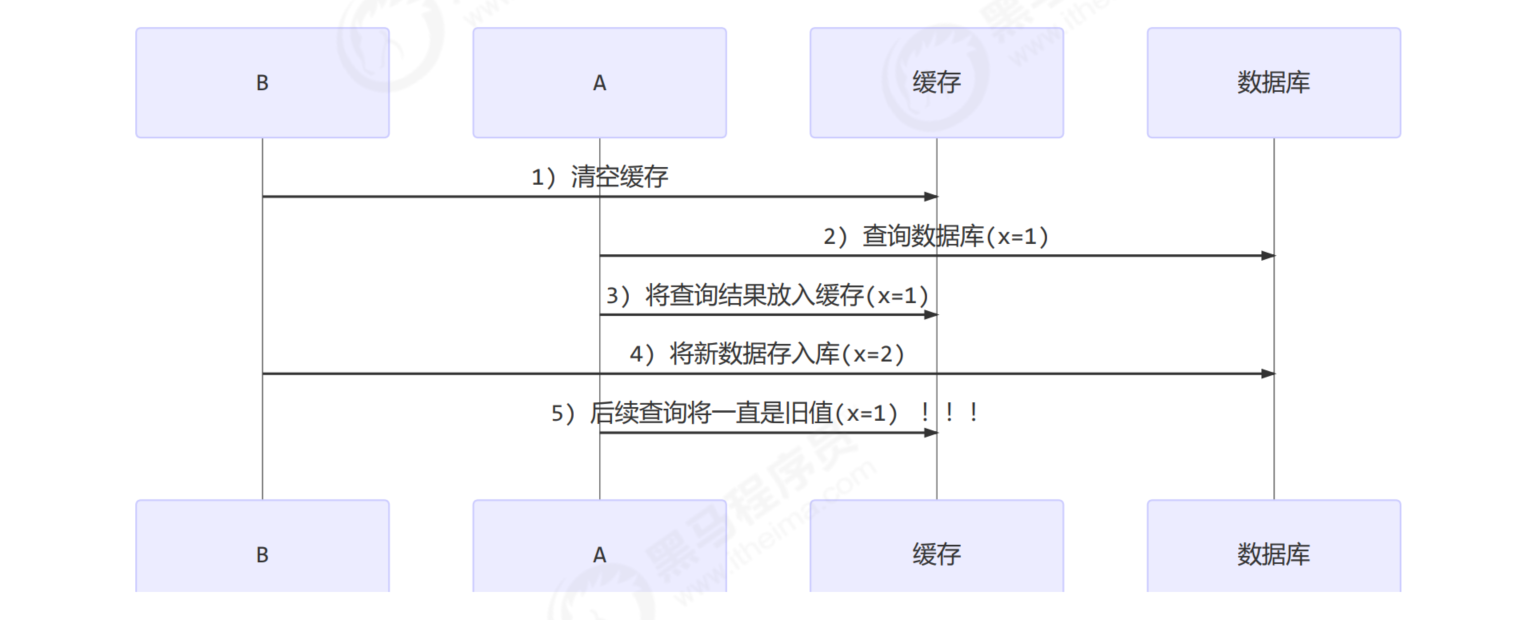

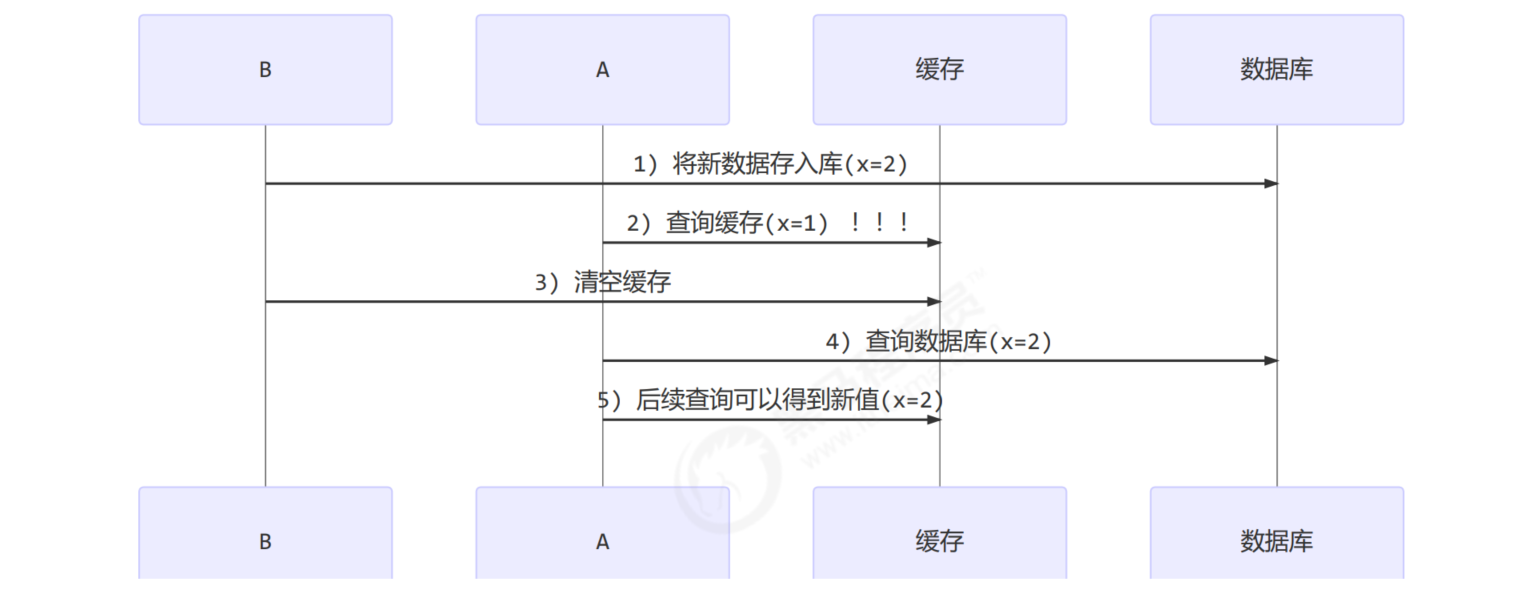

First clear cache

Update database first

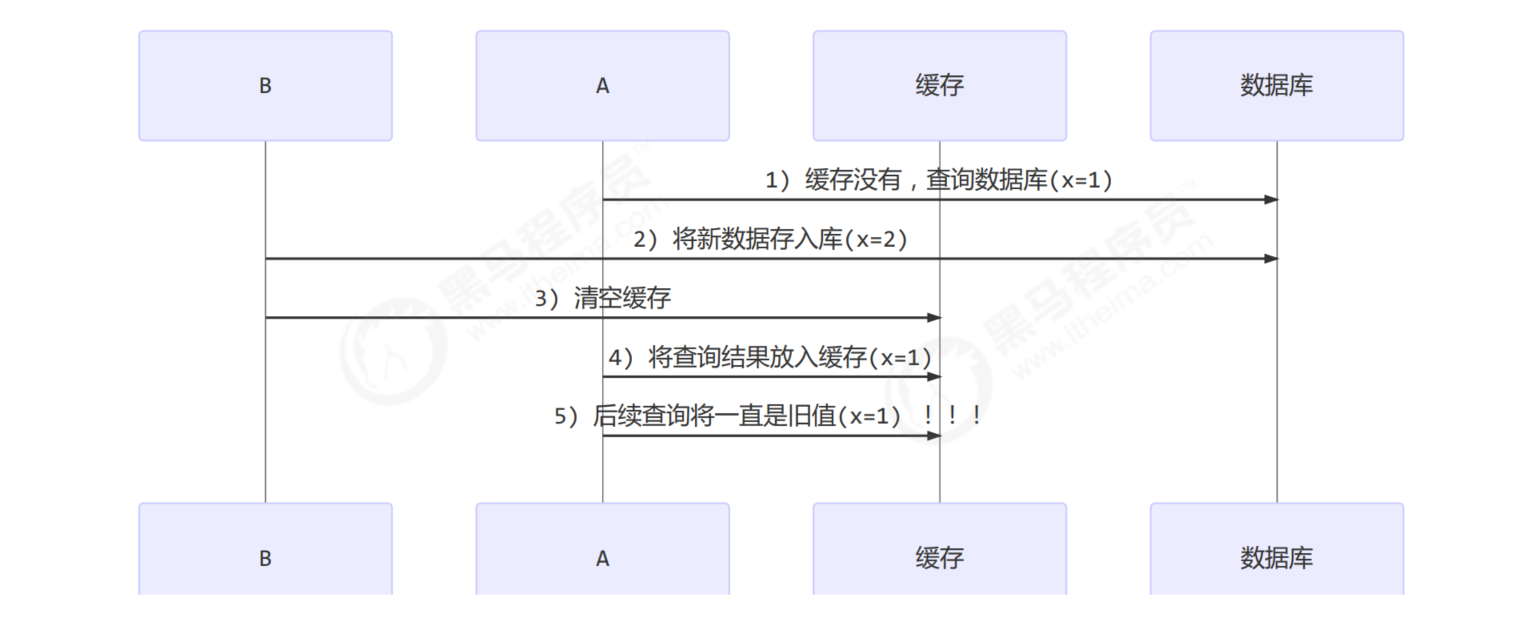

In addition, it is assumed that when query thread A queries the data, the cached data fails due to the expiration of time, or it is the first query

The chances of this happening are very small, see the facebook paper

2. Read / write locks implement consistent caching

Use read-write locks to implement a simple on-demand cache

package top.onefine.test.c8;

import lombok.AllArgsConstructor;

import lombok.Data;

import lombok.EqualsAndHashCode;

import java.math.BigDecimal;

import java.util.*;

import java.util.concurrent.locks.ReentrantReadWriteLock;

public class TestGenericDao {

public static void main(String[] args) {

GenericDao dao = new GenericDaoCached();

System.out.println("============> query");

String sql = "select * from emp where empno = ?";

int empno = 7369;

Emp emp = dao.queryOne(Emp.class, sql, empno);

System.out.println(emp);

emp = dao.queryOne(Emp.class, sql, empno);

System.out.println(emp);

emp = dao.queryOne(Emp.class, sql, empno);

System.out.println(emp);

System.out.println("============> to update");

dao.update("update emp set sal = ? where empno = ?", 800, empno);

emp = dao.queryOne(Emp.class, sql, empno);

System.out.println(emp);

}

}

// Decorator mode

// GenericDao implementation class is omitted to understand the idea

class GenericDaoCached extends GenericDao {

private final GenericDao dao = new GenericDao();

// Cache, key: sql statement, value: result

// As a cache, HashMap is not thread safe and needs to be protected

private final Map<SqlPair, Object> map = new HashMap<>();

private final ReentrantReadWriteLock rw = new ReentrantReadWriteLock();

@Override

public <T> T queryOne(Class<T> beanClass, String sql, Object... args) {

// Find it in the cache first and return it directly

SqlPair key = new SqlPair(sql, args);

// Add a read lock to prevent other threads from changing the cache

rw.readLock().lock(); // Read lock

try {

T value = (T) map.get(key);

if (value != null) {

return value;

}

} finally {

rw.readLock().unlock();

}

// Add a write lock to prevent other threads from reading and changing the cache

rw.writeLock().lock(); // Write lock

try {

// The upper part of the get method may be accessed by multiple threads, and the cache may have been filled with data

// To prevent repeated query of the database, verify again

T value = (T) map.get(key);

if (value == null) { // duplication check

// No in cache, query database

value = dao.queryOne(beanClass, sql, args);

map.put(key, value);

}

return value;

} finally {

rw.writeLock().unlock();

}

}

// Note the order: update db first and then cache

@Override

public int update(String sql, Object... args) {

// Add a write lock to prevent other threads from reading and changing the cache

rw.writeLock().lock(); // Write lock

try {

// Update library first

int update = dao.update(sql, args);

// wipe cache

map.clear();

return update;

} finally {

rw.writeLock().unlock();

}

}

}

// As a key, it is immutable

@AllArgsConstructor

@EqualsAndHashCode

class SqlPair {

private final String sql;

private final Object[] args;

}

@Data

class Emp {

private int empno;

private String ename;

private String job;

private BigDecimal sal;

}

be careful

The above implementation reflects the application of read-write lock to ensure the consistency between cache and database, but the following problems are not considered

- It is suitable for more reading and less writing. If writing operations are frequent, the performance of the above implementation is low

- Cache capacity is not considered

- Cache expiration is not considered

- Only for single machine

- The concurrency is still low. At present, only one lock is used (for example, different locks are used for different database tables)

- The update method is too simple and crude, clearing all keys (consider partitioning by type or redesigning keys)

Optimistic lock implementation: update with CAS

*Read write lock principle

1. Graphic process

The read-write lock uses the same Sycn synchronizer, so the waiting queue and state are also the same

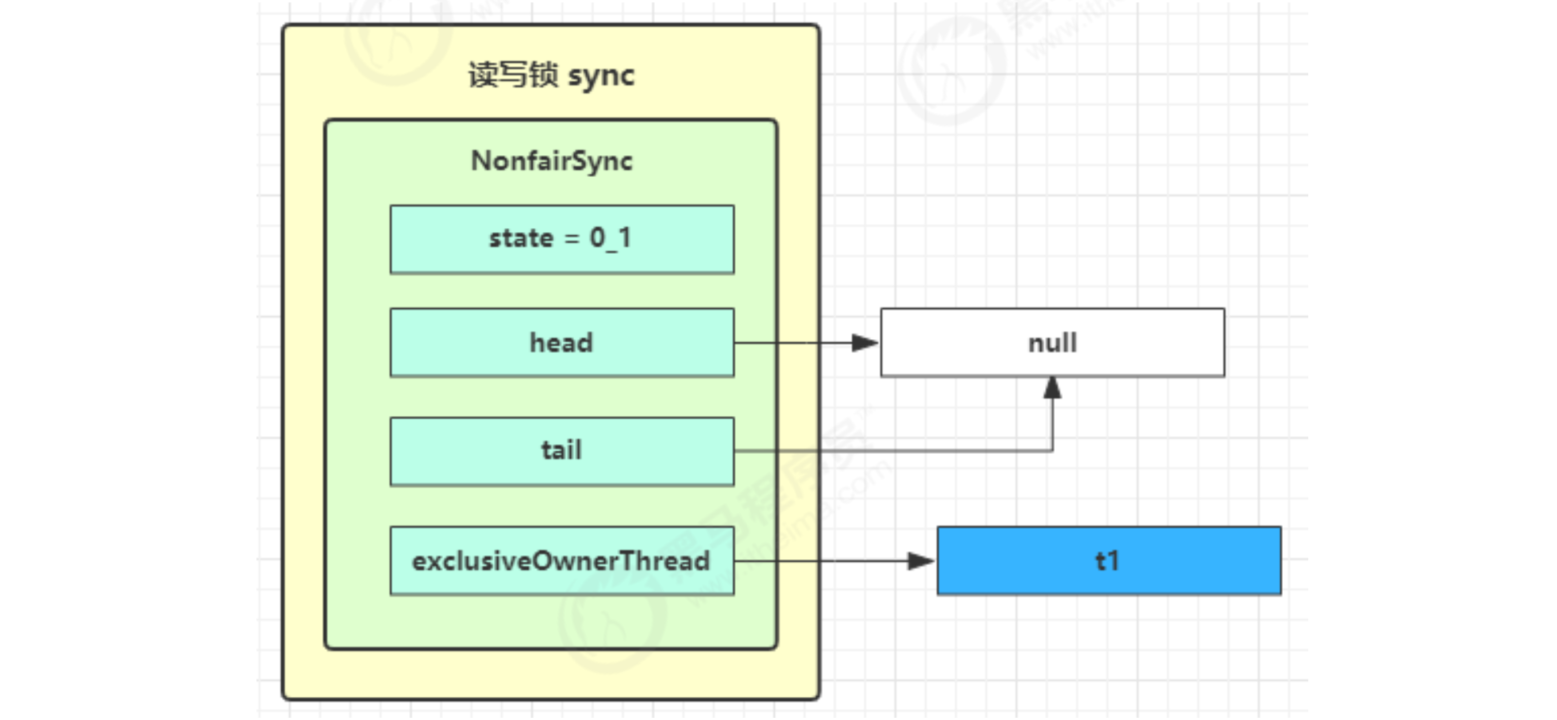

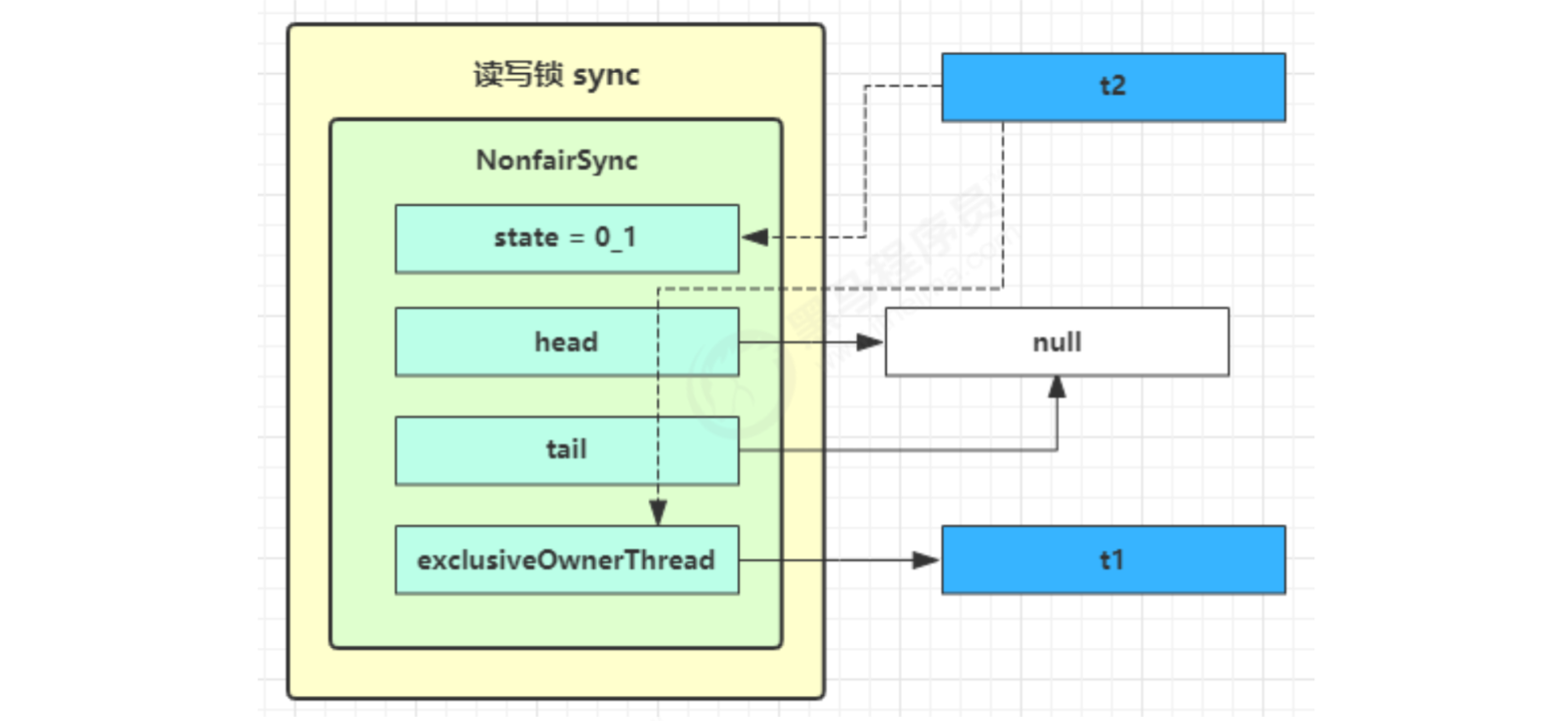

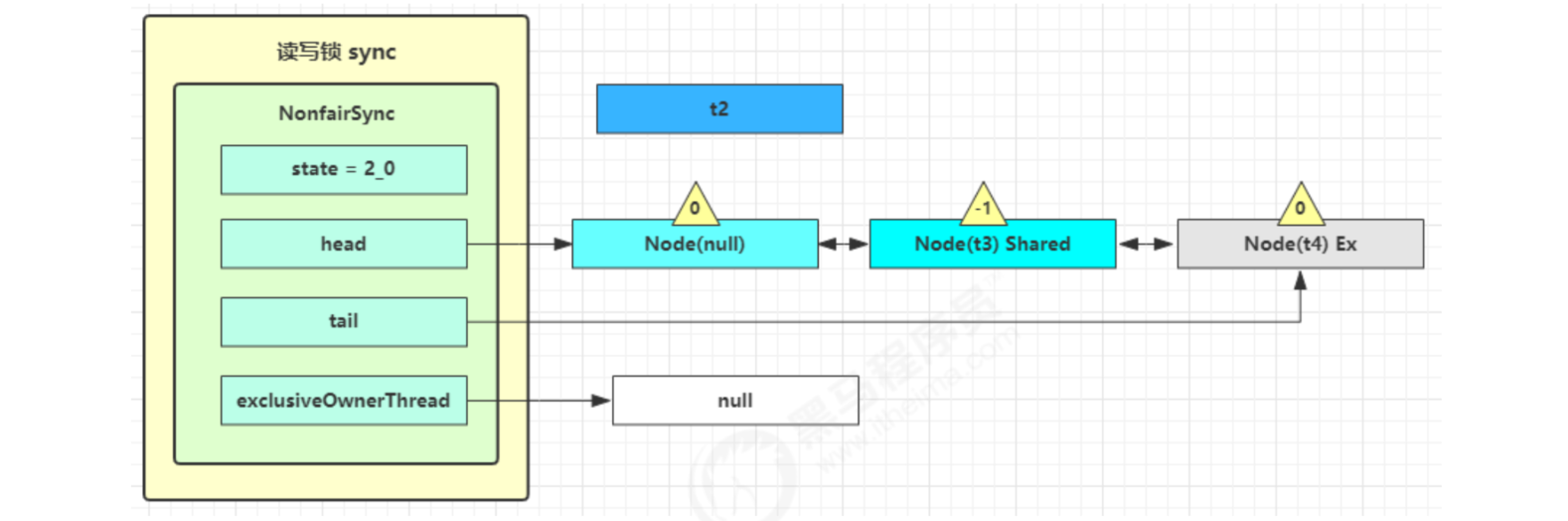

t1 w.lock,t2 r.lock

1) t1 locks successfully. The process is no different from ReentrantLock locking. The difference is that the write lock status accounts for the lower 16 bits of state, while the read lock uses the upper 16 bits of state

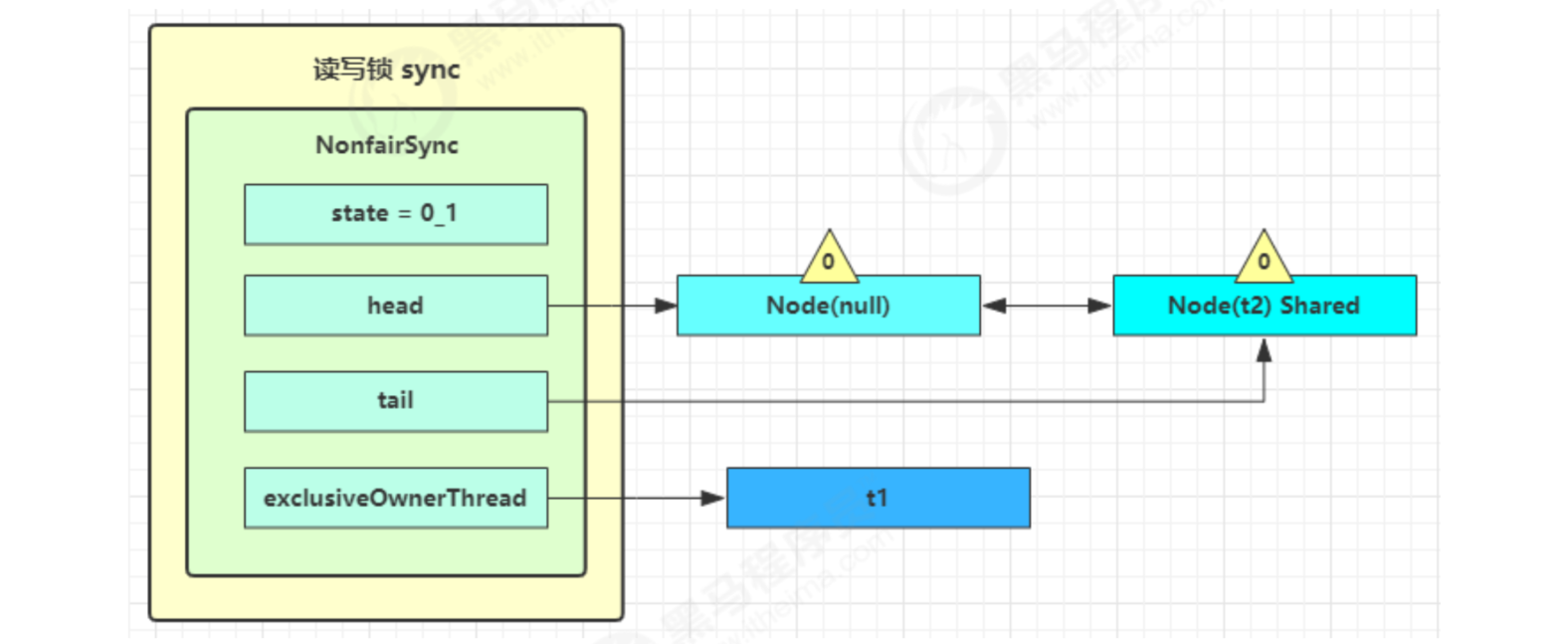

2) t2 execute r.lock. At this time, enter the sync.acquiresshared (1) process of lock reading. First, enter the tryacquisresshared process. If a write lock is occupied, tryAcquireShared returns - 1, indicating failure

The return value of tryAcquireShared indicates

- -1 means failure

- 0 indicates success, but subsequent nodes will not continue to wake up

- A positive number indicates success, and the value is that several subsequent nodes need to wake up, and the read-write lock returns 1

3) At this time, the sync.doAcquireShared(1) process will be entered. First, addWaiter is called to add the node. The difference is that the node is set to Node.SHARED mode instead of Node.EXCLUSIVE mode. Note that t2 is still active at this time

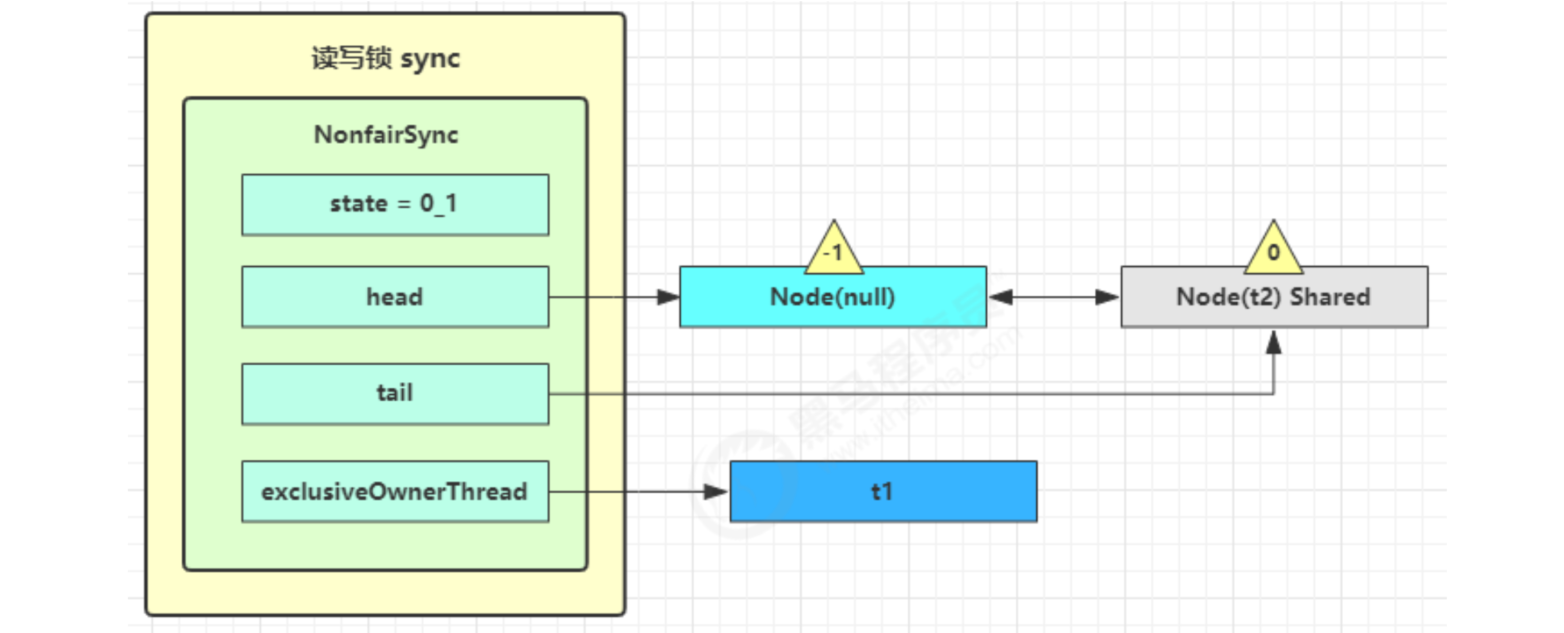

4) t2 will check whether its node is the second. If it is, it will call tryAcquireShared(1) again to try to obtain the lock

5) If it is not successful, cycle for (;;) in doAcquireShared, change the waitStatus of the precursor node to - 1, and then cycle for (;) to try tryAcquireShared(1). If it is not successful, park at parkAndCheckInterrupt()

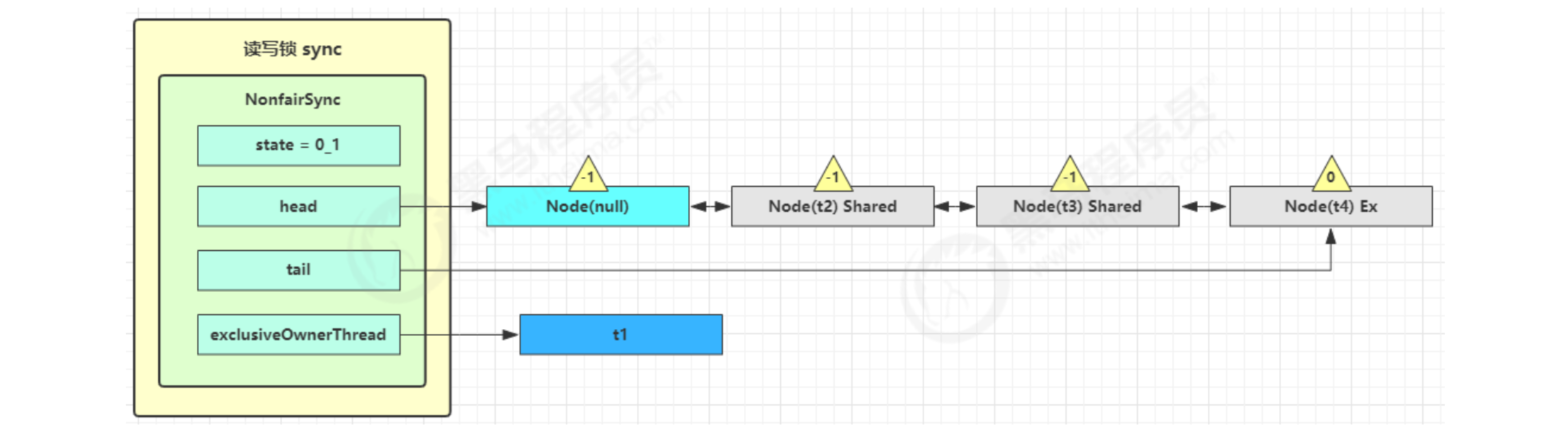

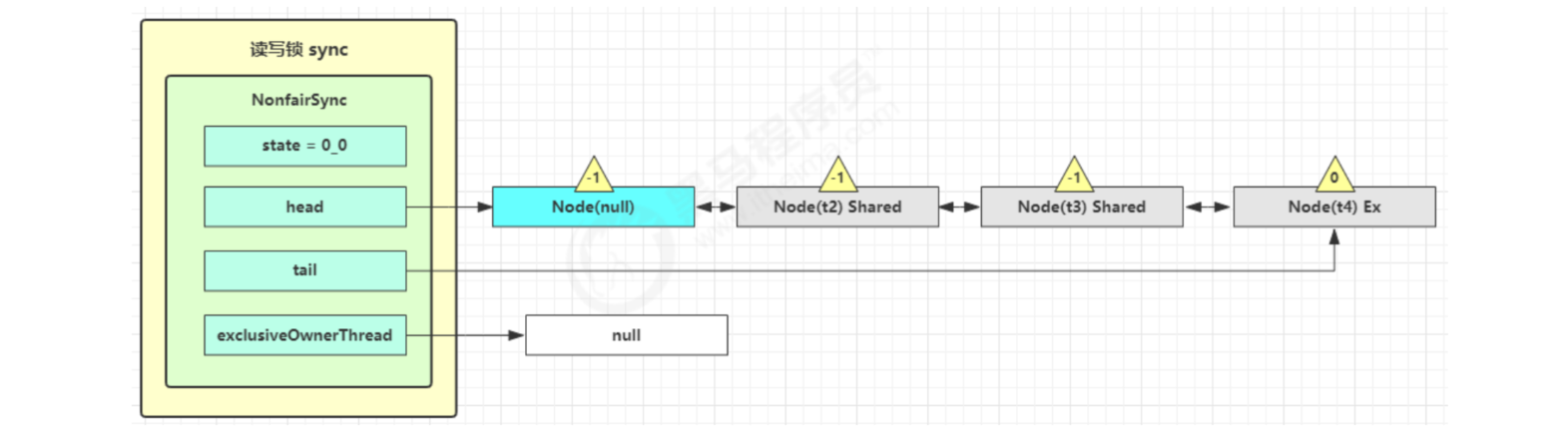

t3 r.lock,t4 w.lock

In this state, suppose t3 adds a read lock and t4 adds a write lock. During this period, t1 still holds the lock, which becomes the following

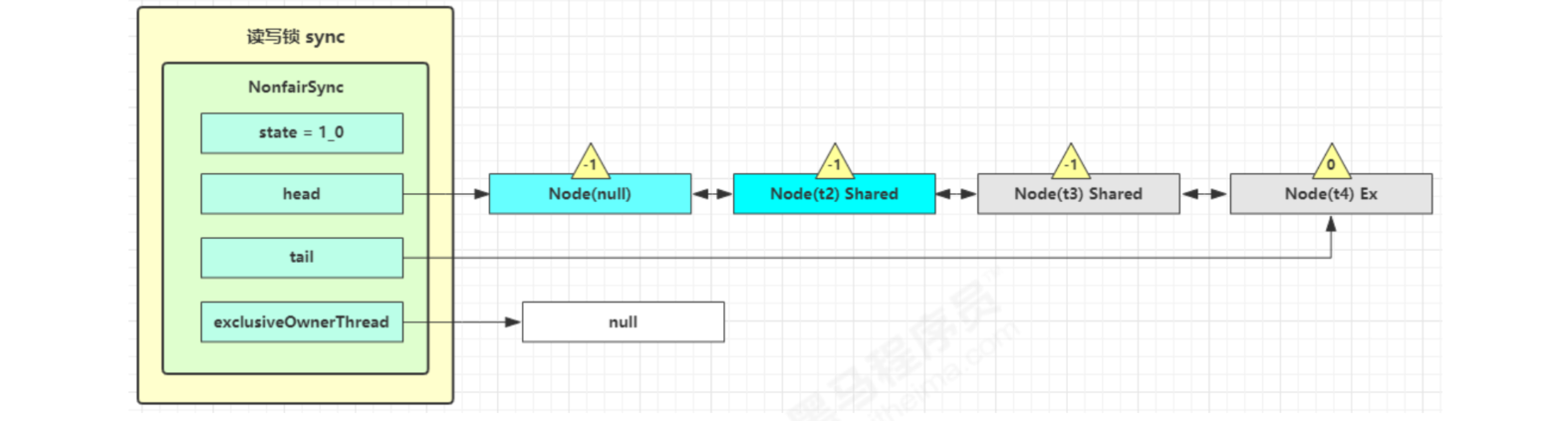

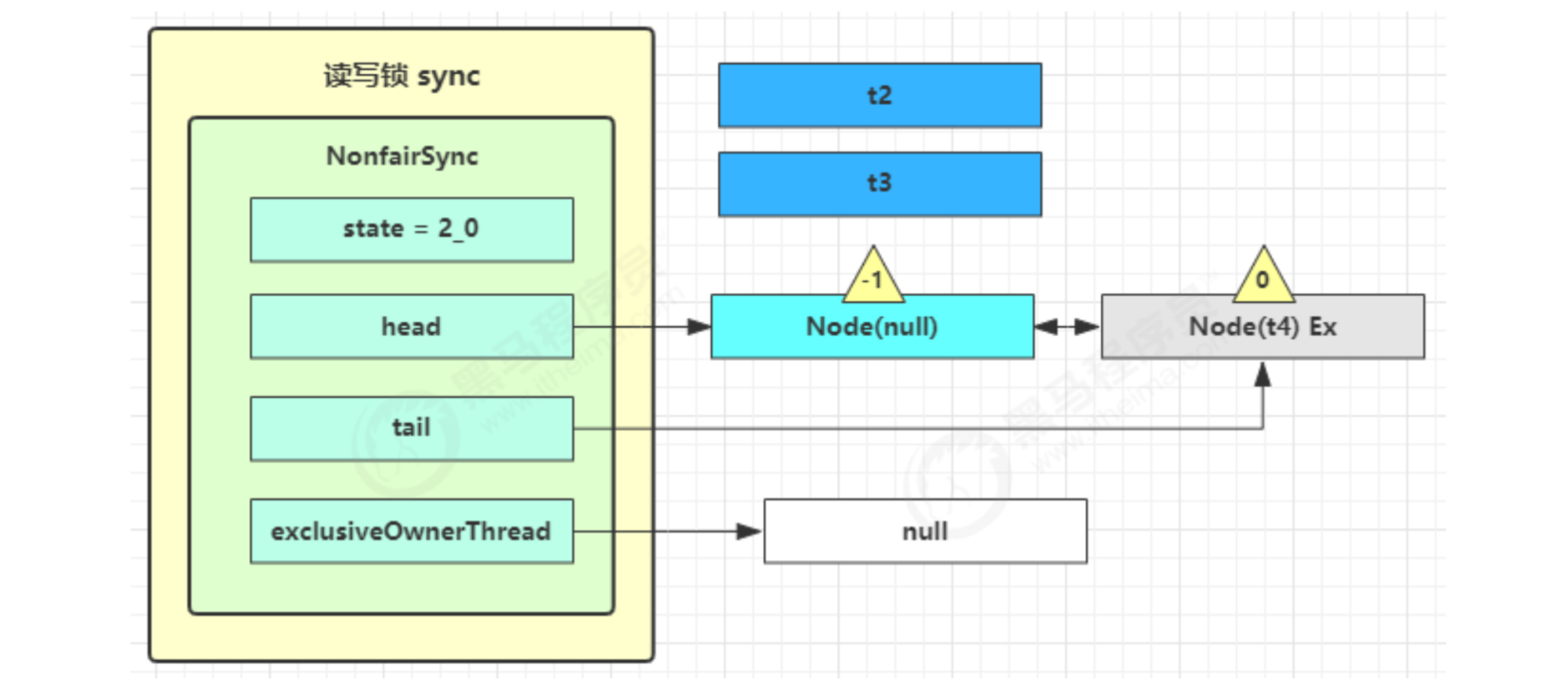

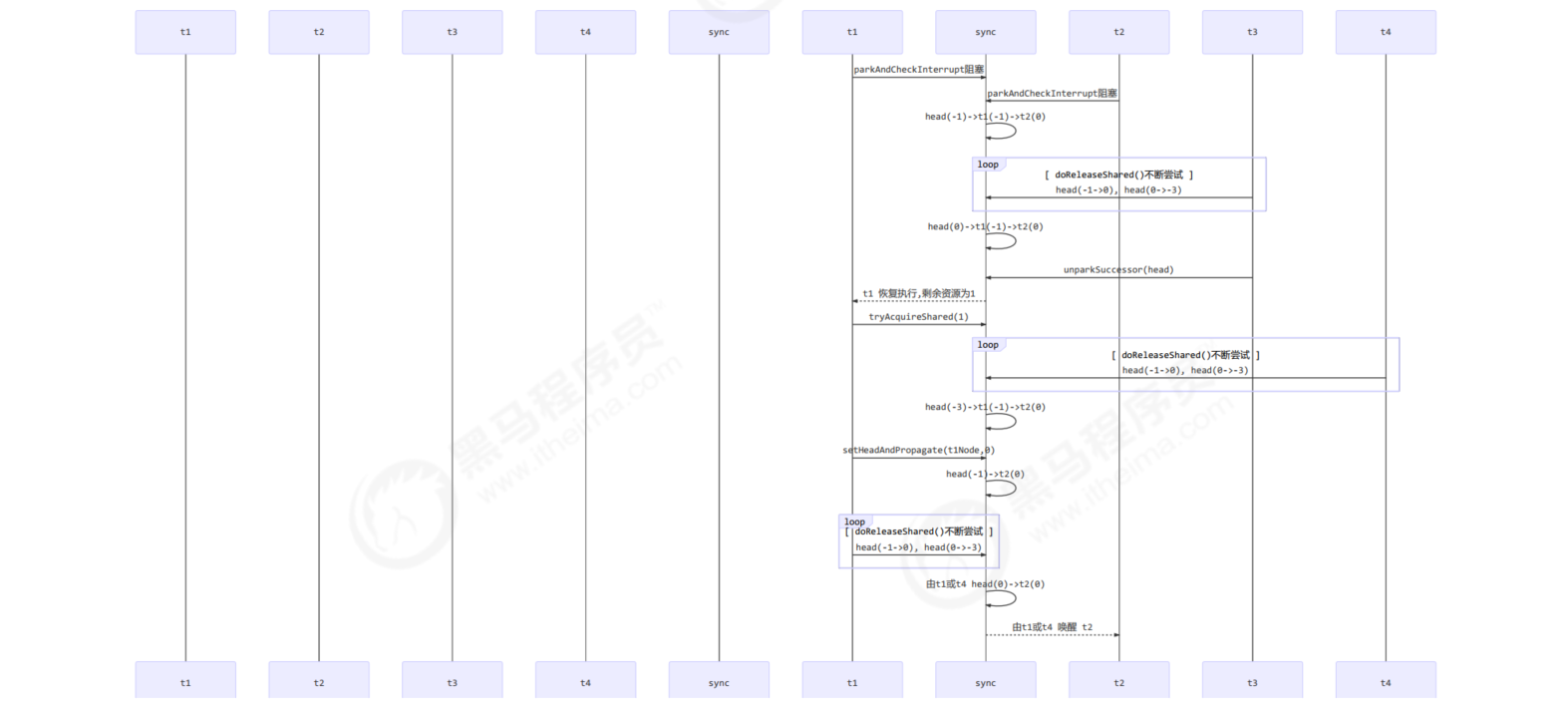

t1 w.unlock

At this time, you will go to the sync.release(1) process of writing locks, and call sync.tryRelease(1) successfully, as shown below

Next, execute the wake-up process sync.unparksuccess, that is, let the dick resume operation. At this time, t2 resumes operation at parkAndCheckInterrupt() in doAcquireShared

This time again, for (; 😉 If tryAcquireShared is executed successfully, the read lock count is incremented by one

At this time, t2 has resumed operation. Next, t2 calls setHeadAndPropagate(node, 1), and its original node is set as the head node

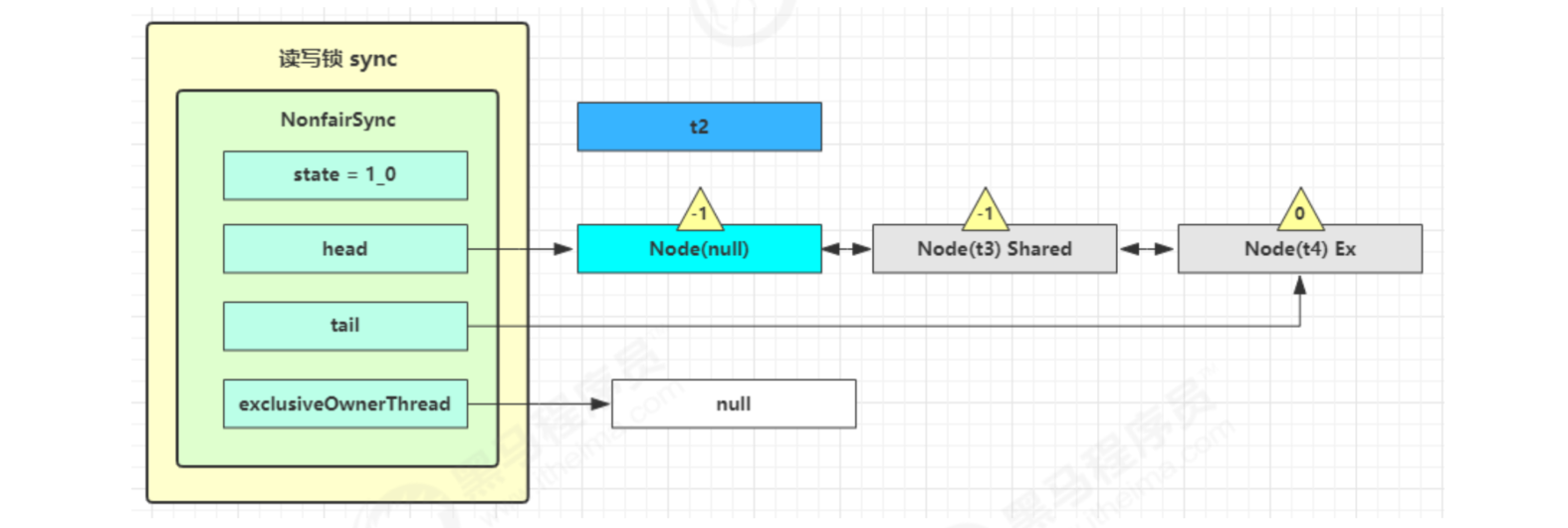

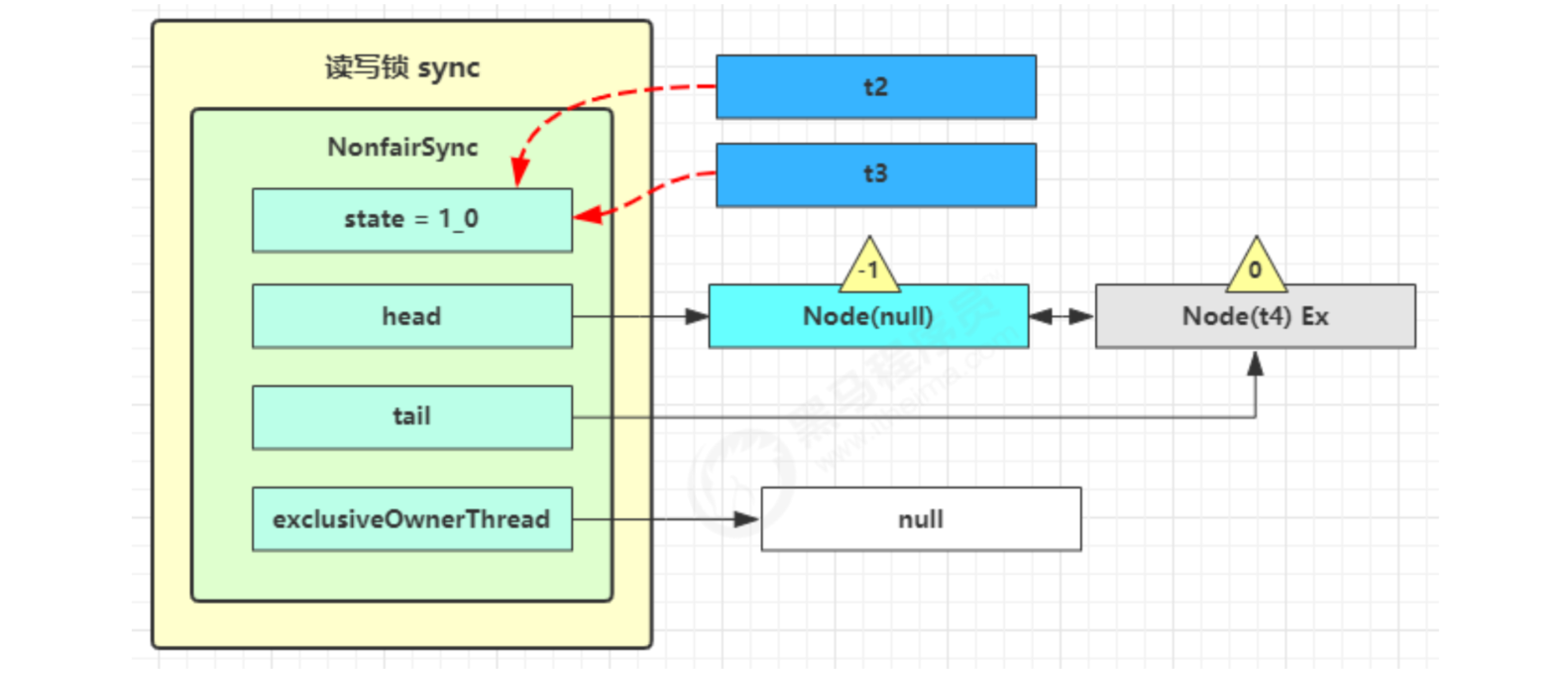

Before it's over, check whether the next node is shared in setHeadAndPropagate method. If so, call doReleaseShared() to change the state of head from - 1 to 0 and wake up the second. At this time, t3 resumes running at parkAndCheckInterrupt() in doacquishared

This time, for (;;) executes tryAcquireShared again. If it succeeds, the read lock count is incremented by one

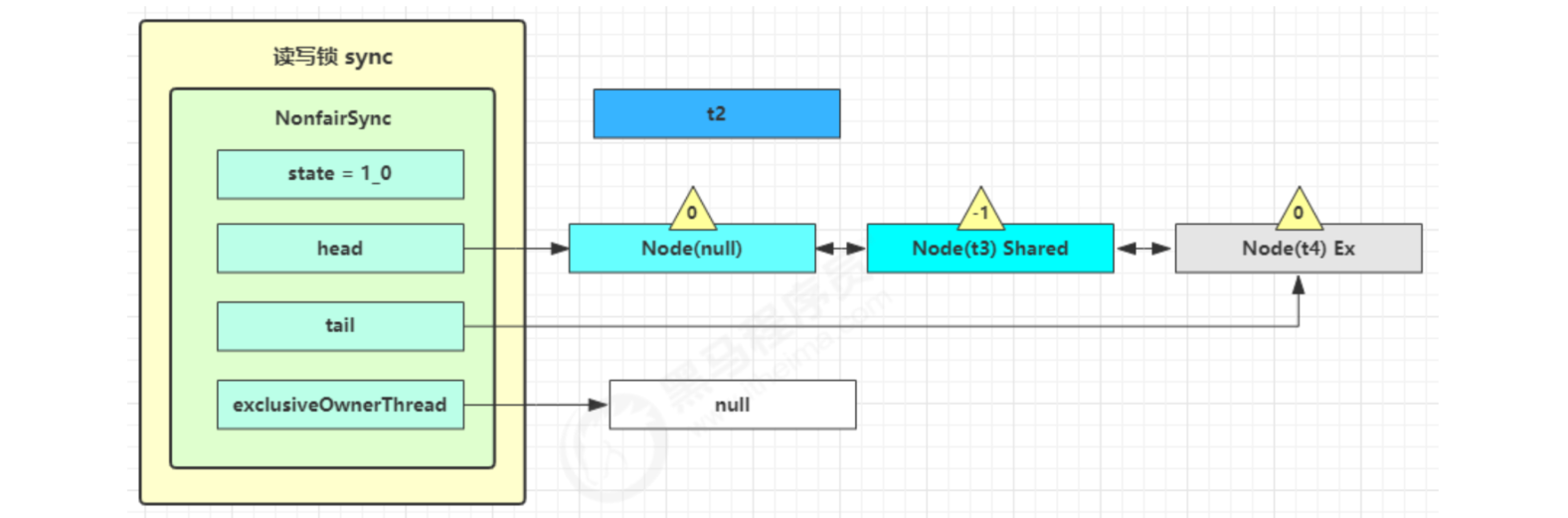

At this time, t3 has resumed operation. Next, t3 calls setHeadAndPropagate(node, 1), and its original node is set as the head node

The next node is not shared, so it will not continue to wake up t4

t2 r.unlock,t3 r.unlock

t2 enters sync.releaseShared(1) and calls tryReleaseShared(1) to reduce the count by one, but the count is not zero.

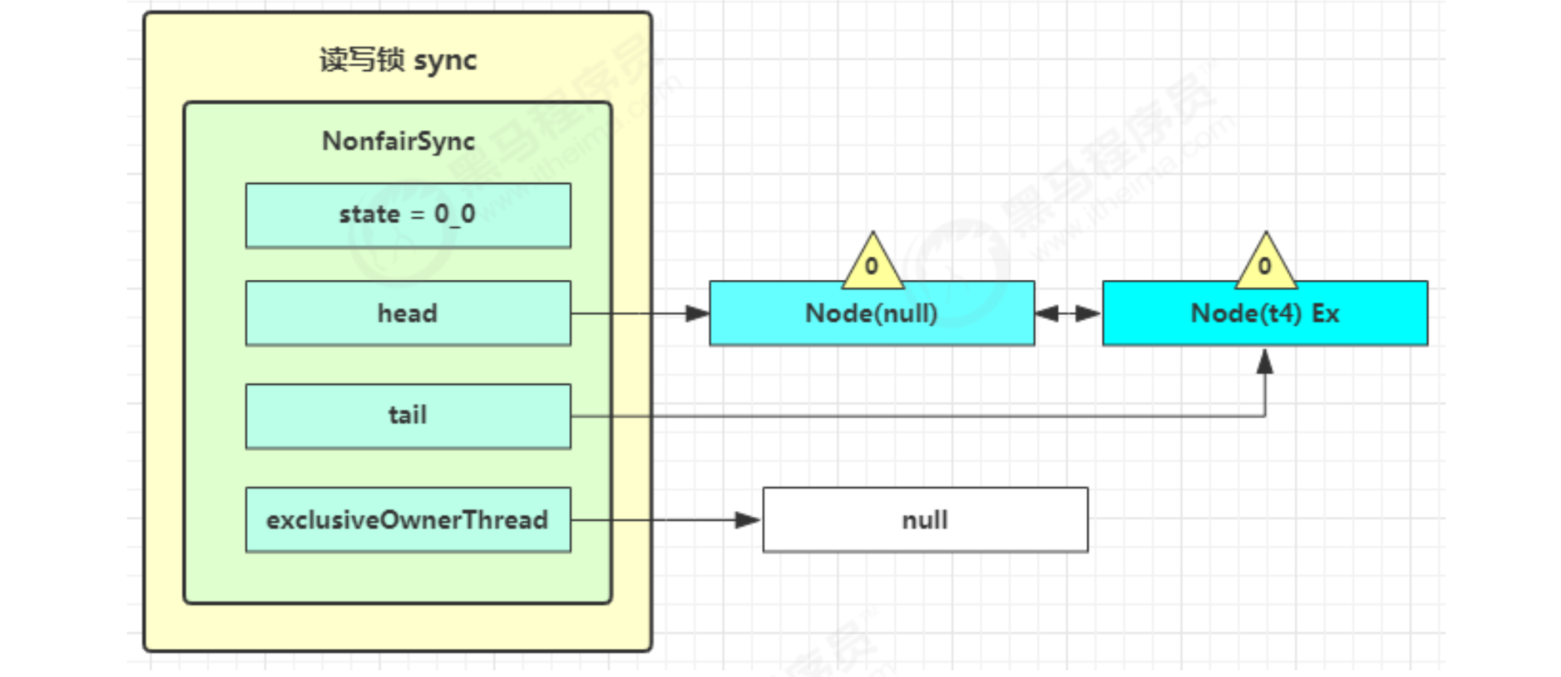

t3 enters sync.releaseShared(1), calls tryReleaseShared(1) to reduce count, this time count is zero, enters doReleaseShared(), changes the header node from -1 to 0, and wakes up the second.

Then t4 resume running at parkAndCheckInterrupt in acquirequeueueued, and for() again; 😉 This time, you are the second and there is no other competition. tryAcquire(1) succeeds. Modify the header node and the process ends

2. Source code analysis

Write lock locking process

static final class NonfairSync extends Sync {

// ... omit irrelevant code

// The external class WriteLock method is easy to read and placed here

public void lock() {

sync.acquire(1);

}

// The method inherited from AQS is easy to read and placed here

public final void acquire(int arg) {

if (

// The attempt to obtain a write lock failed

!tryAcquire(arg) &&

// Associate the current thread to a Node object in exclusive mode

// Access to AQS queue blocked

acquireQueued(addWaiter(Node.EXCLUSIVE), arg)

) {

selfInterrupt();

}

}

// Sync inherited method, easy to read, put here

protected final boolean tryAcquire(int acquires) {

// Get the low 16 bits, representing the state count of the write lock

Thread current = Thread.currentThread();

int c = getState();

int w = exclusiveCount(c);

if (c != 0) {

if (

// C! = 0 and w = = 0 indicates that there is a read lock, or

w == 0 ||

// If exclusiveOwnerThread is not your own

current != getExclusiveOwnerThread()

) {

// Failed to acquire lock

return false;

}

// If the write lock count exceeds the lower 16 bits, an exception is reported

if (w + exclusiveCount(acquires) > MAX_COUNT)

throw new Error("Maximum lock count exceeded");

// Write lock reentry to obtain lock successfully

setState(c + acquires);

return true;

}

if (

// Determine whether the write lock should be blocked, or

writerShouldBlock() ||

// The attempt to change the count failed

!compareAndSetState(c, c + acquires)

) {

// Failed to acquire lock

return false;

}

// Lock obtained successfully

setExclusiveOwnerThread(current);

return true;

}

// Non fair lock writerShouldBlock always returns false without blocking

final boolean writerShouldBlock() {

return false;

}

}

Write lock release process

static final class NonfairSync extends Sync {

// ... omit irrelevant code

// WriteLock method, easy to read, put here

public void unlock() {

sync.release(1);

}

// The method inherited from AQS is easy to read and placed here

public final boolean release(int arg) {

// The attempt to release the write lock succeeded

if (tryRelease(arg)) {

// Unpark thread waiting in AQS

Node h = head;

if (h != null && h.waitStatus != 0)

unparkSuccessor(h);

return true;

}

return false;

}

// Sync inherited method, easy to read, put here

protected final boolean tryRelease(int releases) {

if (!isHeldExclusively())

throw new IllegalMonitorStateException();

int nextc = getState() - releases;

// For reentrant reasons, the release is successful only when the write lock count is 0

boolean free = exclusiveCount(nextc) == 0;

if (free) {

setExclusiveOwnerThread(null);

}

setState(nextc);

return free;

}

}

Lock reading and locking process

static final class NonfairSync extends Sync {

// ReadLock method, easy to read, put here

public void lock() {

sync.acquireShared(1);

}

// The method inherited from AQS is easy to read and placed here

public final void acquireShared(int arg) {

// tryAcquireShared returns a negative number, indicating that the acquisition of read lock failed

if (tryAcquireShared(arg) < 0) {

doAcquireShared(arg);

}

}

// Sync inherited method, easy to read, put here

protected final int tryAcquireShared(int unused) {

Thread current = Thread.currentThread();

int c = getState();

// If another thread holds a write lock, obtaining a read lock fails

if (

exclusiveCount(c) != 0 &&

getExclusiveOwnerThread() != current

) {

return -1;

}

int r = sharedCount(c);

if (

// The read lock should not be blocked (if the second is a write lock, the read lock should be blocked), and

!readerShouldBlock() &&

// Less than the read lock count, and

r < MAX_COUNT &&

// Attempt to increase count succeeded

compareAndSetState(c, c + SHARED_UNIT)

) {

// ... omit unimportant code

return 1;

}

return fullTryAcquireShared(current);

}

// The unfair lock readerShouldBlock determines whether the first node in the AQS queue is a write lock

// true to block, false not to block

final boolean readerShouldBlock() {

return apparentlyFirstQueuedIsExclusive();

}

// The method inherited from AQS is easy to read and placed here

// The function is similar to tryAcquireShared, but it will keep trying for (;;) to obtain the read lock without blocking during execution

final int fullTryAcquireShared(Thread current) {

HoldCounter rh = null;

for (; ; ) {

int c = getState();

if (exclusiveCount(c) != 0) {

if (getExclusiveOwnerThread() != current)

return -1;

} else if (readerShouldBlock()) {

// ... omit unimportant code

}

if (sharedCount(c) == MAX_COUNT)

throw new Error("Maximum lock count exceeded");

if (compareAndSetState(c, c + SHARED_UNIT)) {

// ... omit unimportant code

return 1;

}

}

}

// The method inherited from AQS is easy to read and placed here

private void doAcquireShared(int arg) {

// Associate the current thread to a Node object. The mode is shared mode

final Node node = addWaiter(Node.SHARED);

boolean failed = true;

try {

boolean interrupted = false;

for (; ; ) {

final Node p = node.predecessor();

if (p == head) {

// Try to acquire the read lock again

int r = tryAcquireShared(arg);

// success

if (r >= 0) {

// ㈠

// r represents the number of available resources, where 1 is always allowed to propagate

//(wake up the next Share node in AQS)

setHeadAndPropagate(node, r);

p.next = null; // help GC

if (interrupted)

selfInterrupt();

failed = false;

return;

}

}

if (

// Whether to block when acquiring read lock fails (waitStatus == Node.SIGNAL in the previous stage)

shouldParkAfterFailedAcquire(p, node) &&

// park current thread

parkAndCheckInterrupt()

) {

interrupted = true;

}

}

} finally {

if (failed)

cancelAcquire(node);

}

}

// A method inherited from AQS, which is easy to read, is placed here

private void setHeadAndPropagate(Node node, int propagate) {

Node h = head; // Record old head for check below

// Set yourself to head

setHead(node);

// propagate indicates that there are shared resources (such as shared read locks or semaphores)

// Original head waitStatus == Node.SIGNAL or Node.PROPAGATE

// Now head waitStatus == Node.SIGNAL or Node.PROPAGATE

if (propagate > 0 || h == null || h.waitStatus < 0 ||

(h = head) == null || h.waitStatus < 0) {

Node s = node.next;

// If it is the last node or the node waiting to share the read lock

if (s == null || s.isShared()) {

// Enter two

doReleaseShared();

}

}

}

// II. The method inherited from AQS is easy to read and placed here

private void doReleaseShared() {

// If head.waitstatus = = node. Signal = = > 0 succeeds, the next node is unpark

// If head.waitstatus = = 0 = = > node.propagate, to solve the bug, see the following analysis

for (; ; ) {

Node h = head;

// Queue and node

if (h != null && h != tail) {

int ws = h.waitStatus;

if (ws == Node.SIGNAL) {

if (!compareAndSetWaitStatus(h, Node.SIGNAL, 0))

continue; // loop to recheck cases

// If the next node unpark successfully obtains the read lock

// And the next node is still shared. Continue to do releaseshared

unparkSuccessor(h);

} else if (ws == 0 &&

!compareAndSetWaitStatus(h, 0, Node.PROPAGATE))

continue; // loop on failed CAS

}

if (h == head) // loop if head changed

break;

}

}

}

Read lock release process

static final class NonfairSync extends Sync {

// ReadLock method, easy to read, put here

public void unlock() {

sync.releaseShared(1);

}

// The method inherited from AQS is easy to read and placed here

public final boolean releaseShared(int arg) {

if (tryReleaseShared(arg)) {

doReleaseShared();

return true;

}

return false;

}

// Sync inherited method, easy to read, put here

protected final boolean tryReleaseShared(int unused) {

// ... omit unimportant code

for (; ; ) {

int c = getState();

int nextc = c - SHARED_UNIT;

if (compareAndSetState(c, nextc)) {

// The count of read locks will not affect other threads that acquire read locks, but will affect other threads that acquire write locks

// A count of 0 is the true release

return nextc == 0;

}

}

}

// The method inherited from AQS is easy to read and placed here

private void doReleaseShared() {

// If head.waitstatus = = node. Signal = = > 0 succeeds, the next node is unpark

// If head.waitstatus = = 0 = = > node.propagate

for (; ; ) {

Node h = head;

if (h != null && h != tail) {

int ws = h.waitStatus;

// If other threads are also releasing the read lock, you need to change the waitStatus to 0 first

// Prevent unparksuccess from being executed multiple times

if (ws == Node.SIGNAL) {

if (!compareAndSetWaitStatus(h, Node.SIGNAL, 0))

continue; // loop to recheck cases

unparkSuccessor(h);

}

// If it is already 0, change it to - 3 to solve the propagation. See semaphore bug analysis later

else if (ws == 0 &&

!compareAndSetWaitStatus(h, 0, Node.PROPAGATE))

continue; // loop on failed CAS

}

if (h == head) // loop if head changed

break;

}

}

}

3.2 StampedLock

This class is added from JDK 8 to further optimize the read performance. Its feature is that it must be used with the [stamp] when using the read lock and write lock

Add interpretation lock

long stamp = lock.readLock(); lock.unlockRead(stamp);

Add / remove write lock

long stamp = lock.writeLock(); lock.unlockWrite(stamp);

Optimistic reading. StampedLock supports the tryOptimisticRead() method (happy reading). After reading, a stamp verification needs to be done. If the verification passes, it means that there is no write operation during this period and the data can be used safely. If the verification fails, the read lock needs to be obtained again to ensure data security.

long stamp = lock.tryOptimisticRead();

// Check stamp

if(!lock.validate(stamp)){

// Lock upgrade

}

A data container class is provided, which uses the read() method for reading lock protection data and the write() method for writing lock protection data

@Slf4j(topic = "c.DataContainerStamped")

class DataContainerStamped {

private int data;

private final StampedLock lock = new StampedLock();

public DataContainerStamped(int data) {

this.data = data;

}

public int read(int readTime) {

long stamp = lock.tryOptimisticRead(); // Optimistic reading

log.debug("optimistic read locking...{}", stamp);

try {

TimeUnit.SECONDS.sleep(readTime);

} catch (InterruptedException e) {

e.printStackTrace();

}

if (lock.validate(stamp)) {

log.debug("read finish...{}, data:{}", stamp, data);

return data;

}

// Lock upgrade - read lock

log.debug("updating to read lock... {}", stamp);

try {

stamp = lock.readLock(); // Read lock

log.debug("read lock {}", stamp);

try {

TimeUnit.SECONDS.sleep(readTime);

} catch (InterruptedException e) {

e.printStackTrace();

}

log.debug("read finish...{}, data:{}", stamp, data);

return data;

} finally {

log.debug("read unlock {}", stamp);

lock.unlockRead(stamp);

}

}

public void write(int newData) {

long stamp = lock.writeLock(); // Write lock

log.debug("write lock {}", stamp);

try {

try {

TimeUnit.SECONDS.sleep(2);

} catch (InterruptedException e) {

e.printStackTrace();

}

this.data = newData;

} finally {

log.debug("write unlock {}", stamp);

lock.unlockWrite(stamp);

}

}

}

Test read can be optimized

@Slf4j(topic = "c.TestStampedLock")

public class TestStampedLock {

public static void main(String[] args) {

DataContainerStamped dataContainer = new DataContainerStamped(1);

new Thread(() -> {

dataContainer.read(1);

}, "t1").start();

try {

TimeUnit.MILLISECONDS.sleep(500);

} catch (InterruptedException e) {

e.printStackTrace();

}

new Thread(() -> {

dataContainer.read(0);

}, "t2").start();

}

}

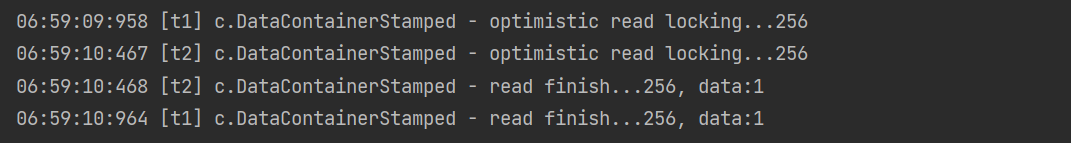

The output result shows that there is no read lock

Optimize read complement and add read lock during test read-write

@Slf4j(topic = "c.TestStampedLock")

public class TestStampedLock {

public static void main(String[] args) {

DataContainerStamped dataContainer = new DataContainerStamped(1);

new Thread(() -> {

dataContainer.read(1);

}, "t1").start();

try {

TimeUnit.MILLISECONDS.sleep(500);

} catch (InterruptedException e) {

e.printStackTrace();

}

new Thread(() -> {

// dataContainer.read(0);

dataContainer.write(100);

}, "t2").start();

}

}

Output results

be careful

- StampedLock does not support conditional variables

- StampedLock does not support reentry

4. Semaphore

Basic use

[ ˈ s ɛ m əˌ f ɔ r] Semaphore used to limit the maximum number of threads that can access shared resources at the same time.

package top.onefine.test.c8;

import lombok.extern.slf4j.Slf4j;

import java.util.concurrent.Semaphore;

import java.util.concurrent.TimeUnit;

@Slf4j(topic = "c.TestSemaphore")

public class TestSemaphore {

public static void main(String[] args) {

// 1. Create a semaphore object

Semaphore semaphore = new Semaphore(3); // The upper limit is 3

// 2. 10 threads running at the same time

for (int i = 0; i < 10; i++) {

new Thread(() -> {

// 3. Obtaining permits

try {

semaphore.acquire(); // Get this semaphore

} catch (InterruptedException e) {

e.printStackTrace();

}

try {

log.debug("running...");

try {

TimeUnit.SECONDS.sleep(1);

} catch (InterruptedException e) {

e.printStackTrace();

}

log.debug("end...");

} finally {

// 4. Release permit

semaphore.release(); // Release semaphore

}

}).start();

}

}

}

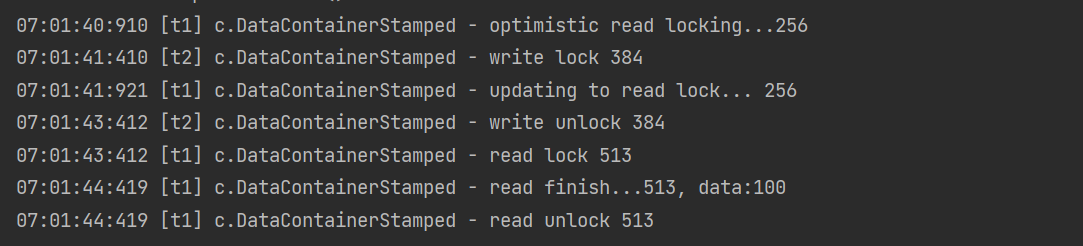

output

*Semaphore application – restrict the use of shared resources

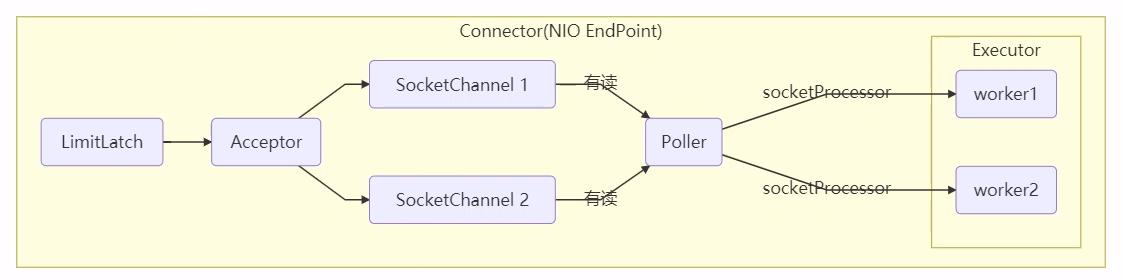

- Using Semaphore flow restriction, the requesting thread is blocked during the peak period, and the license is released after the peak period. Of course, it is only suitable for limiting the number of stand-alone threads, and only the number of threads, not the number of resources (for example, the number of connections, please compare the implementation of Tomcat LimitLatch)

- The implementation of simple connection pool with Semaphore has better performance and readability than the implementation under "meta sharing mode" (using wait notify). Note that the number of threads and database connections in the following implementation are equal

package top.onefine.test.c8;

import lombok.AllArgsConstructor;

import lombok.ToString;

import lombok.extern.slf4j.Slf4j;

import java.sql.*;

import java.util.Map;

import java.util.Properties;

import java.util.concurrent.Executor;

import java.util.concurrent.Semaphore;

import java.util.concurrent.atomic.AtomicIntegerArray;

@Slf4j(topic = "c.TestPoolSemaphore")

public class TestPoolSemaphore {

// 1. Connection pool size

private final int poolSize;

// 2. Connection object array

private Connection[] connections;

// 3. The connection status array 0 indicates idle and 1 indicates busy

private AtomicIntegerArray states;

private Semaphore semaphore;

// 4. Initialization of construction method

public TestPoolSemaphore(int poolSize) {

this.poolSize = poolSize;

// Make the number of licenses consistent with the number of resources

this.semaphore = new Semaphore(poolSize);

this.connections = new Connection[poolSize];

this.states = new AtomicIntegerArray(new int[poolSize]);

for (int i = 0; i < poolSize; i++) {

connections[i] = new MockConnection2("connect" + (i + 1));

}

}

// 5. Borrow connection

public Connection borrow() {// t1, t2, t3

// Get permission

try {

semaphore.acquire(); // Thread without permission, wait here

} catch (InterruptedException e) {

e.printStackTrace();

}

for (int i = 0; i < poolSize; i++) {

// Get idle connection

if (states.get(i) == 0) {

if (states.compareAndSet(i, 0, 1)) {

log.debug("borrow {}", connections[i]);

return connections[i];

}

}

}

// Will not be executed here

return null;

}

// 6. Return the connection

public void free(Connection conn) {

for (int i = 0; i < poolSize; i++) {

if (connections[i] == conn) {

states.set(i, 0);

log.debug("free {}", conn);

semaphore.release(); // Release semaphore

break;

}

}

}

}

@AllArgsConstructor

@ToString

class MockConnection2 implements Connection {

private final String name;

// The following code ignores

// ...

}

*Semaphore principle

1. Lock and unlock process

Semaphore is a bit like a parking lot. Permissions is like the number of parking spaces. When the thread obtains permissions, it is like obtaining parking spaces, and then the parking lot displays the empty parking spaces minus one.

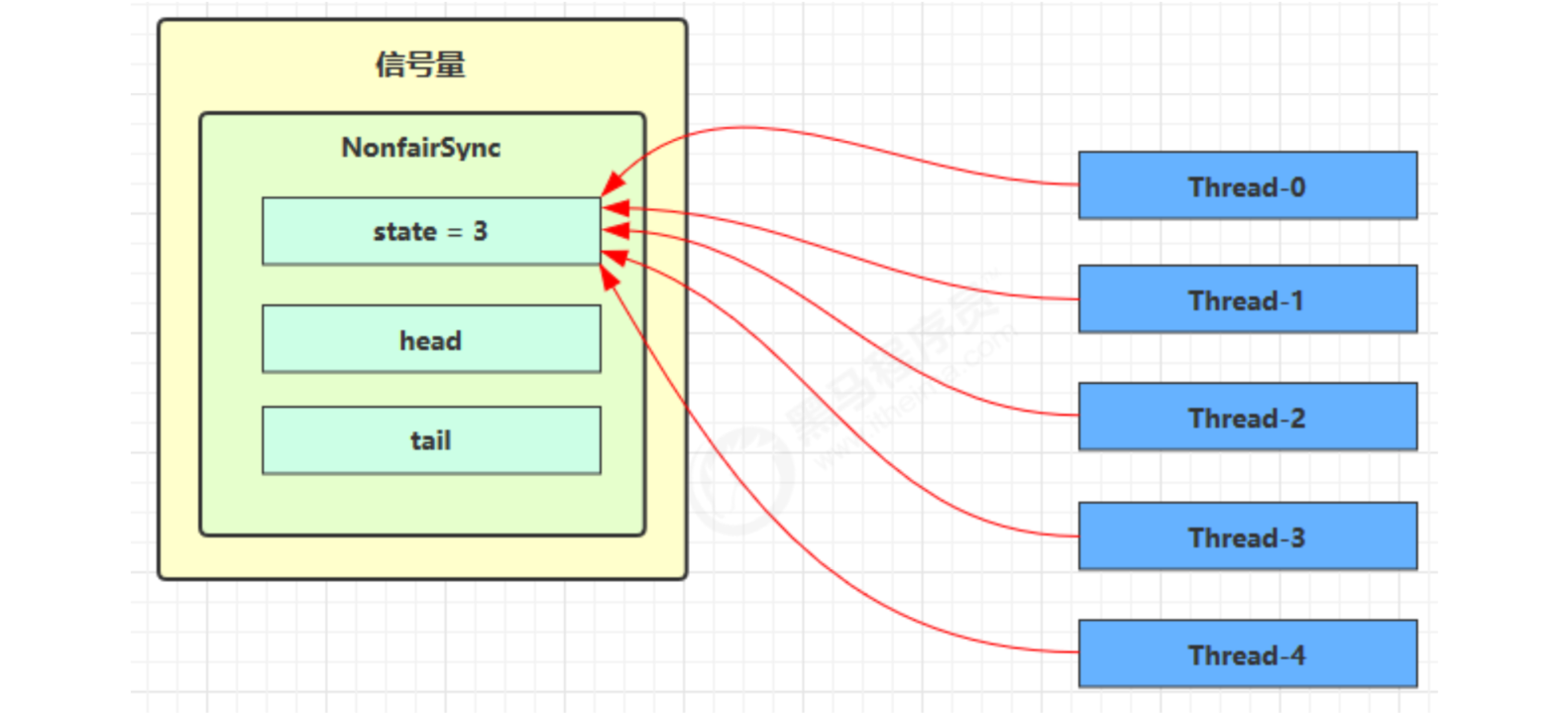

At first, the permissions (state) is 3. At this time, there are five threads to obtain resources

Suppose that Thread-1, Thread-2 and Thread-4 cas compete successfully, while Thread-0 and Thread-3 compete failed, and enter the AQS queue park to block

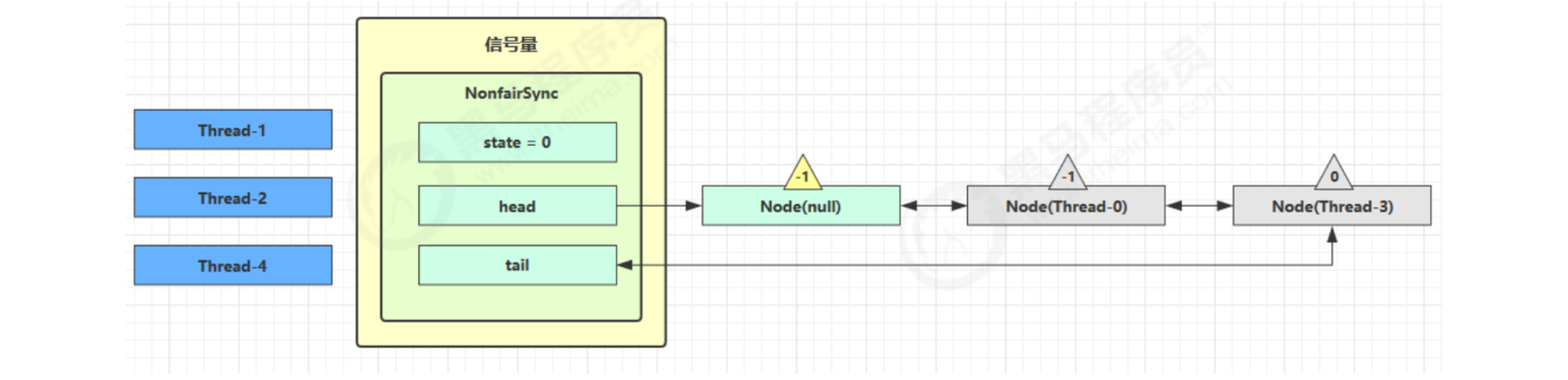

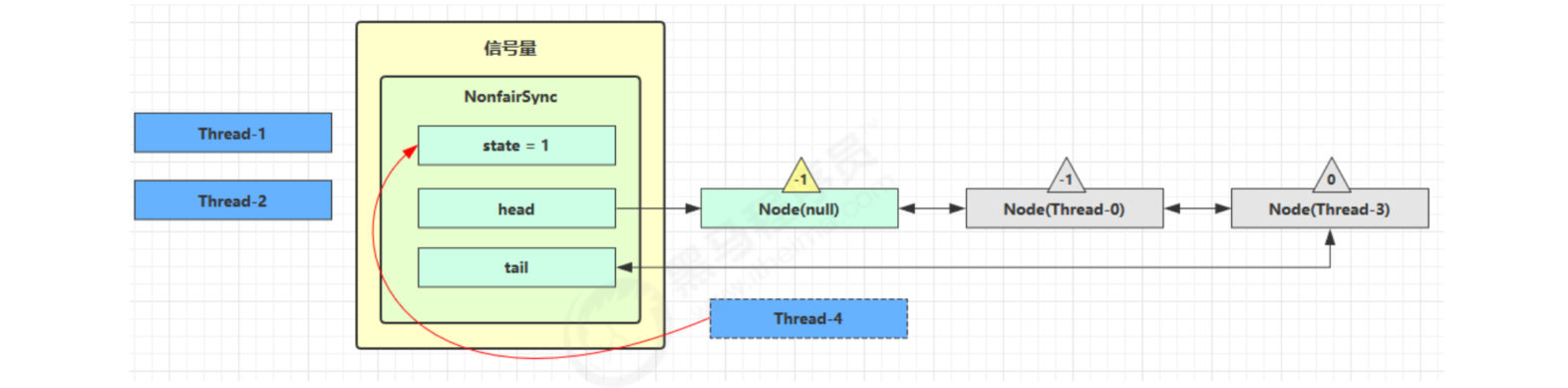

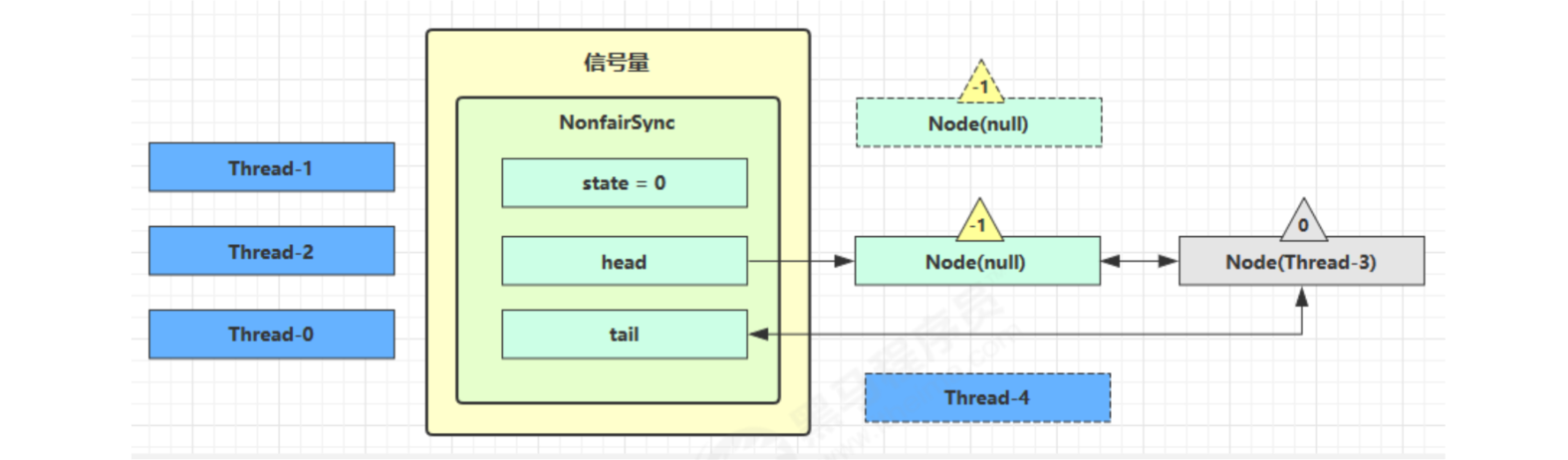

At this time, Thread-4 releases permissions, and the status is as follows

Next, the Thread-0 competition succeeds. The permissions are set to 0 again. Set yourself as the head node. Disconnect the original head node and unpark the next Thread-3 node. However, since the permissions are 0, Thread-3 enters the park state again after unsuccessful attempts

2. Source code analysis

static final class NonfairSync extends Sync {

private static final long serialVersionUID = -2694183684443567898L;

NonfairSync(int permits) {

// permits is state

super(permits);

}

// Semaphore method, easy to read, put here

public void acquire() throws InterruptedException {

sync.acquireSharedInterruptibly(1);

}

// The method inherited from AQS is easy to read and placed here

public final void acquireSharedInterruptibly(int arg)

throws InterruptedException {

if (Thread.interrupted())

throw new InterruptedException();

if (tryAcquireShared(arg) < 0)

doAcquireSharedInterruptibly(arg);

}

// Trying to get a shared lock

protected int tryAcquireShared(int acquires) {

return nonfairTryAcquireShared(acquires);

}

// Sync inherited method, easy to read, put here

final int nonfairTryAcquireShared(int acquires) {

for (; ; ) {

int available = getState();

int remaining = available - acquires;

if (

// If the license has been used up, a negative number is returned, indicating that the acquisition failed. Enter doacquisuresharedinterruptible

remaining < 0 ||

// If cas retries successfully, a positive number is returned, indicating success

compareAndSetState(available, remaining)

) {

return remaining;

}

}

}

// The method inherited from AQS is easy to read and placed here

private void doAcquireSharedInterruptibly(int arg) throws InterruptedException {

final Node node = addWaiter(Node.SHARED);

boolean failed = true;

try {

for (; ; ) {

final Node p = node.predecessor();

if (p == head) {

// Try obtaining the license again

int r = tryAcquireShared(arg);

if (r >= 0) {

// After success, the thread goes out of the queue (AQS) and the Node is set to head

// If head.waitstatus = = node. Signal = = > 0 succeeds, the next node is unpark

// If head.waitstatus = = 0 = = > node.propagate

// r indicates the number of available resources. If it is 0, it will not continue to propagate

setHeadAndPropagate(node, r);

p.next = null; // help GC

failed = false;

return;

}

}

// Unsuccessful, set the previous node waitStatus = Node.SIGNAL, and the next round enters park blocking

if (shouldParkAfterFailedAcquire(p, node) &&

parkAndCheckInterrupt())

throw new InterruptedException();

}

} finally {

if (failed)

cancelAcquire(node);

}

}

// Semaphore method, easy to read, put here

public void release() {

sync.releaseShared(1);

}

// The method inherited from AQS is easy to read and placed here

public final boolean releaseShared(int arg) {

if (tryReleaseShared(arg)) {

doReleaseShared();

return true;

}

return false;

}

// Sync inherited method, easy to read, put here

protected final boolean tryReleaseShared(int releases) {

for (; ; ) {

int current = getState();

int next = current + releases;

if (next < current) // overflow

throw new Error("Maximum permit count exceeded");

if (compareAndSetState(current, next))

return true;

}

}

}

3. Why is there PROPAGATE

Early bug

- releaseShared method

public final boolean releaseShared(int arg) {

if (tryReleaseShared(arg)) {

Node h = head;

if (h != null && h.waitStatus != 0)

unparkSuccessor(h);

return true;

}

return false;

}

- doAcquireShared method

private void doAcquireShared(int arg) {

final Node node = addWaiter(Node.SHARED);

boolean failed = true;

try {

boolean interrupted = false;

for (; ; ) {

final Node p = node.predecessor();

if (p == head) {

int r = tryAcquireShared(arg);

if (r >= 0) {

// There will be a gap here

setHeadAndPropagate(node, r);

p.next = null; // help GC

if (interrupted)

selfInterrupt();

failed = false;

return;

}

}

if (shouldParkAfterFailedAcquire(p, node) &&

parkAndCheckInterrupt()) interrupted = true;

}

} finally {

if (failed)

cancelAcquire(node);

}

}

- setHeadAndPropagate method

private void setHeadAndPropagate(Node node, int propagate) {

setHead(node);

// There are free resources

if (propagate > 0 && node.waitStatus != 0) {

Node s = node.next;

// next

if (s == null || s.isShared())

unparkSuccessor(node);

}

}

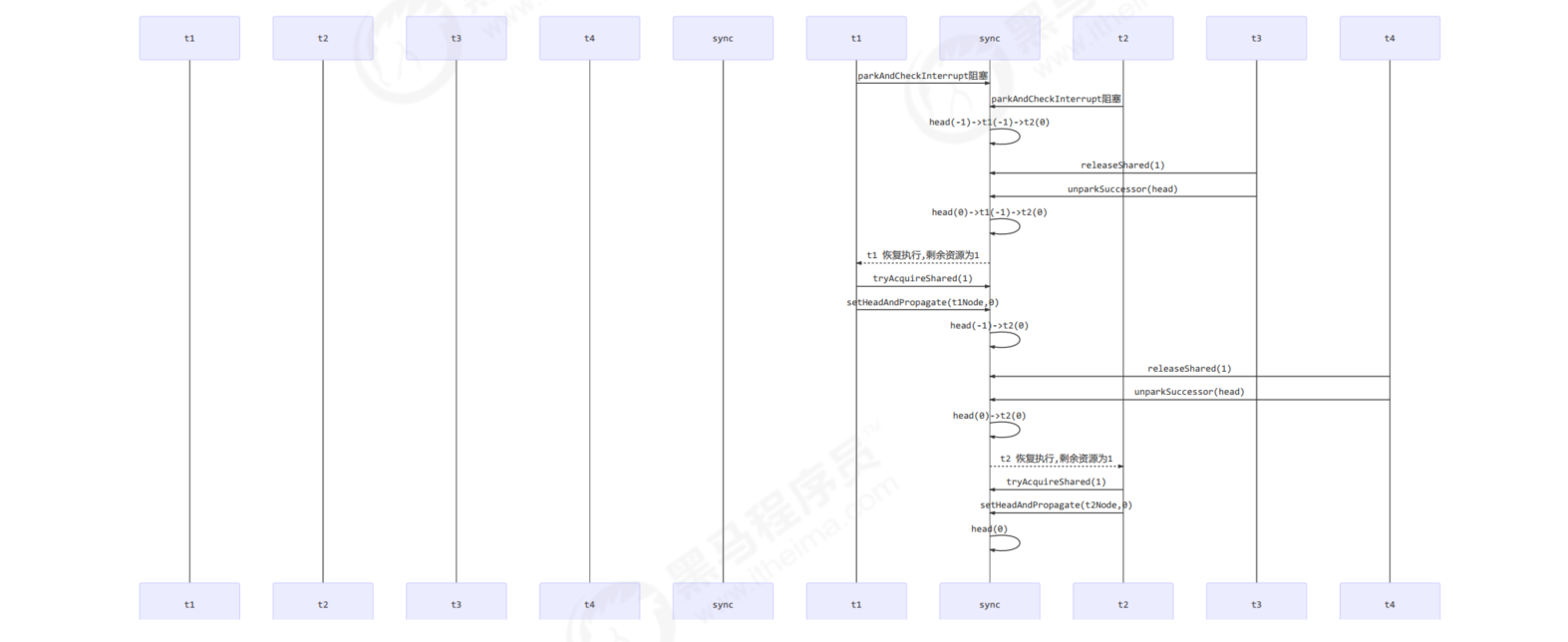

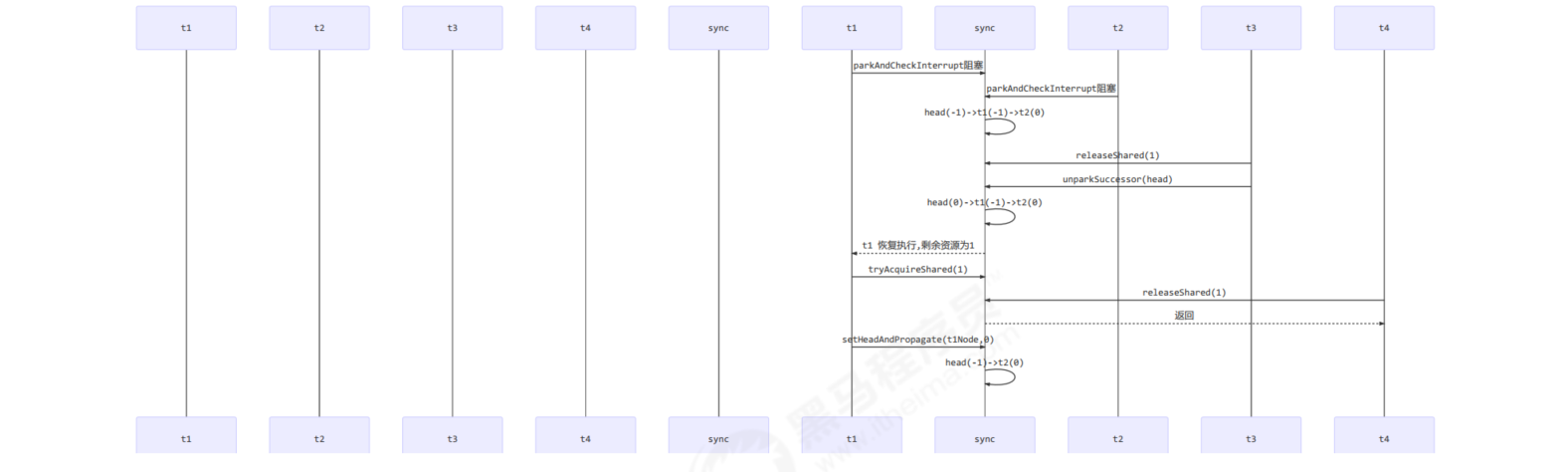

- Suppose there are nodes queued in the queue in a cycle, which is head (- 1) - > T1 (- 1) - > T2 (- 1)

- It is assumed that there are T3 and T4 semaphores to be released, and the release order is T3 first and then T4

Normal process

bug generation

Implementation process of version before repair

- T3 calls releaseShared(1) and directly calls unparksuccess (head). The waiting state of head changes from - 1 to 0

- T1 is awakened due to the T3 release semaphore, and calls tryAcquireShared. It is assumed that the return value is 0 (lock acquisition is successful, but there is no remaining resource)

- T4 calls releaseShared(1). At this time, the head.waitStatus is 0 (the read head and 1 are the same head), which does not meet the conditions. Therefore, unparksuccess (head) is not called

- T1 gets the semaphore successfully. When calling setHeadAndPropagate, because the return value of propagate > 0 (that is, propagate (remaining resources) = = 0) is not satisfied, the subsequent node will not wake up, and T2 thread cannot wake up

After bug repair

private void setHeadAndPropagate(Node node, int propagate) {

Node h = head; // Record old head for check below

// Set yourself to head

setHead(node);

// propagate indicates that there are shared resources (such as shared read locks or semaphores)

// Original head waitStatus == Node.SIGNAL or Node.PROPAGATE

// Now head waitStatus == Node.SIGNAL or Node.PROPAGATE

if (propagate > 0 || h == null || h.waitStatus < 0 ||

(h = head) == null || h.waitStatus < 0) {

Node s = node.next;

// If it is the last node or the node waiting to share the read lock

if (s == null || s.isShared()) {

doReleaseShared();

}

}

}

private void doReleaseShared() {

// If head.waitstatus = = node. Signal = = > 0 succeeds, the next node is unpark

// If head.waitstatus = = 0 = = > node.propagate

for (; ; ) {

Node h = head;

if (h != null && h != tail) {

int ws = h.waitStatus;

if (ws == Node.SIGNAL) {

if (!compareAndSetWaitStatus(h, Node.SIGNAL, 0))

continue; // loop to recheck cases

unparkSuccessor(h);

} else if (ws == 0 &&

!compareAndSetWaitStatus(h, 0, Node.PROPAGATE))

continue; // loop on failed CAS

}

if (h == head) // loop if head changed

break;

}

}

- T3 called releaseShared(), directly called unparksuccess (head), and the waiting state of head changed from - 1 to 0

- T1 is awakened due to the T3 release semaphore, and calls tryAcquireShared. It is assumed that the return value is 0 (lock acquisition is successful, but there is no remaining resource)

- T4 calls releaseShared(), and the head.waitStatus is 0 (the read head and 1 are the same head at this time), and calls doReleaseShared() to set the waiting state to PROPAGATE (- 3)

- T1 acquires semaphore successfully. When calling setHeadAndPropagate, it reads that h.waitstatus < 0, so it calls doReleaseShared() to wake up T2

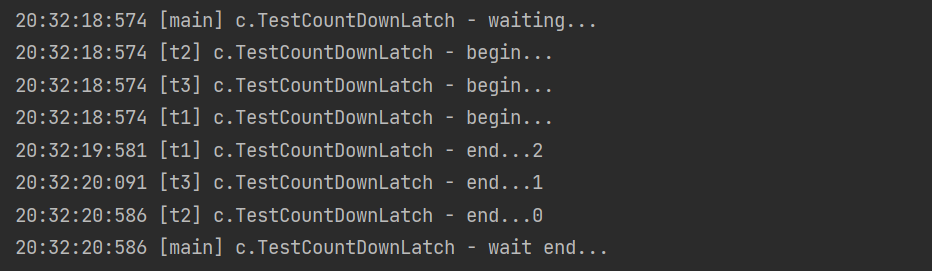

5. CountdownLatch

Used for thread synchronization and cooperation, waiting for all threads to complete the countdown.

The construction parameter is used to initialize the waiting count value, await() is used to wait for the count to return to zero, and countDown() is used to reduce the count by one

package top.onefine.test.c8;

import lombok.extern.slf4j.Slf4j;

import java.util.concurrent.CountDownLatch;

import java.util.concurrent.TimeUnit;

@Slf4j(topic = "c.TestCountDownLatch")

public class TestCountDownLatch {

public static void main(String[] args) throws InterruptedException {

CountDownLatch latch = new CountDownLatch(3);

new Thread(() -> {

log.debug("begin...");

try {

TimeUnit.SECONDS.sleep(1);

} catch (InterruptedException e) {

e.printStackTrace();

}

latch.countDown();

log.debug("end...{}", latch.getCount());

}).start();

new Thread(() -> {

log.debug("begin...");

try {

TimeUnit.SECONDS.sleep(2);

} catch (InterruptedException e) {

e.printStackTrace();

}

latch.countDown();

log.debug("end...{}", latch.getCount());

}).start();

new Thread(() -> {

log.debug("begin...");

try {

TimeUnit.MILLISECONDS.sleep(1500);

} catch (InterruptedException e) {

e.printStackTrace();

}

latch.countDown();

log.debug("end...{}", latch.getCount());

}).start();

log.debug("waiting...");

latch.await();

log.debug("wait end...");

}

}

output

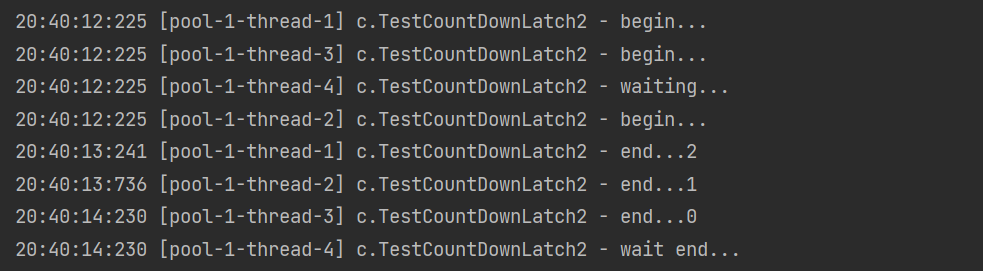

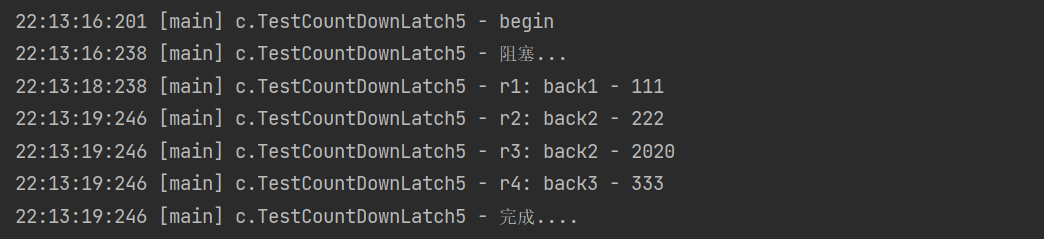

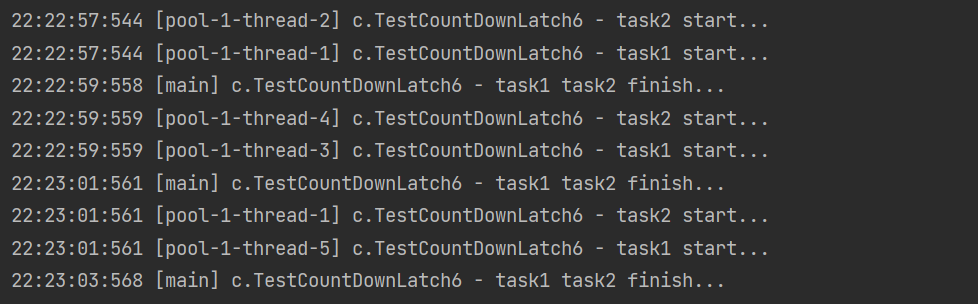

It can be used with thread pool. The improvements are as follows

package top.onefine.test.c8;

import lombok.extern.slf4j.Slf4j;

import java.util.concurrent.CountDownLatch;

import java.util.concurrent.ExecutorService;

import java.util.concurrent.Executors;

import java.util.concurrent.TimeUnit;

@Slf4j(topic = "c.TestCountDownLatch2")

public class TestCountDownLatch2 {

public static void main(String[] args) throws InterruptedException {

CountDownLatch latch = new CountDownLatch(3);

ExecutorService service = Executors.newFixedThreadPool(4);

service.submit(() -> {

log.debug("begin...");

try {

TimeUnit.SECONDS.sleep(1);

} catch (InterruptedException e) {

e.printStackTrace();

}

latch.countDown();

log.debug("end...{}", latch.getCount());

});

service.submit(() -> {

log.debug("begin...");

try {

TimeUnit.MILLISECONDS.sleep(1500);

} catch (InterruptedException e) {

e.printStackTrace();

}

latch.countDown();

log.debug("end...{}", latch.getCount());

});

service.submit(() -> {

log.debug("begin...");

try {

TimeUnit.SECONDS.sleep(2);

} catch (InterruptedException e) {

e.printStackTrace();

}

latch.countDown();

log.debug("end...{}", latch.getCount());

});

service.submit(() -> {

try {

log.debug("waiting...");

latch.await();

log.debug("wait end...");

} catch (InterruptedException e) {

e.printStackTrace();

}

});

}

}

output

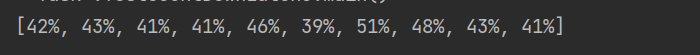

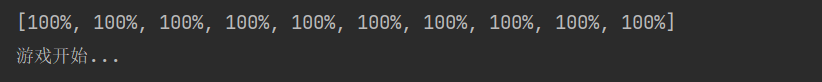

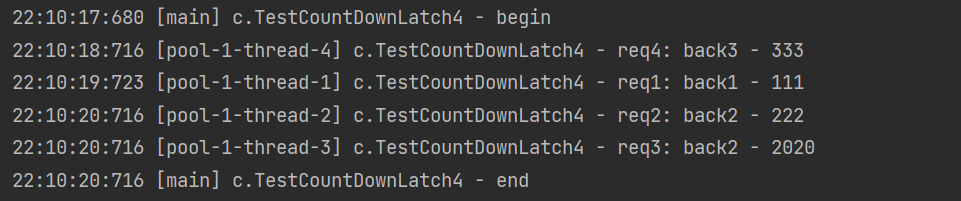

*Application synchronization waits for multithreading to be ready

package top.onefine.test.c8;

import lombok.extern.slf4j.Slf4j;

import java.util.Arrays;

import java.util.Random;

import java.util.concurrent.CountDownLatch;

import java.util.concurrent.ExecutorService;

import java.util.concurrent.Executors;

import java.util.concurrent.atomic.AtomicInteger;

@Slf4j(topic = "c.TestCountDownLatch3")

public class TestCountDownLatch3 {

public static void main(String[] args) throws InterruptedException {

// AtomicInteger num = new AtomicInteger(0);

// ExecutorService service = Executors.newFixedThreadPool(10

// , r -> new Thread(r, "t" + num.getAndIncrement()));

ExecutorService service = Executors.newFixedThreadPool(10);

CountDownLatch latch = new CountDownLatch(10);

String[] all = new String[10]; // Loading results

Random r = new Random();