Threads and processes

Process

A process is a running instance of a program operating on a particular data set. It is the basic unit of resource allocation and scheduling in the system, and a fundamental building block of the operating system's structure.

(In other words: a process is an isolated region managed by the operating system. Each process runs independently, and processes do not share resources with each other by default.)

Thread

A thread is the smallest unit of execution that the operating system can schedule. A process can contain multiple threads, and those threads share the process's resources. Multiple threads can run concurrently within one process, each performing a different task.

CPU time slicing

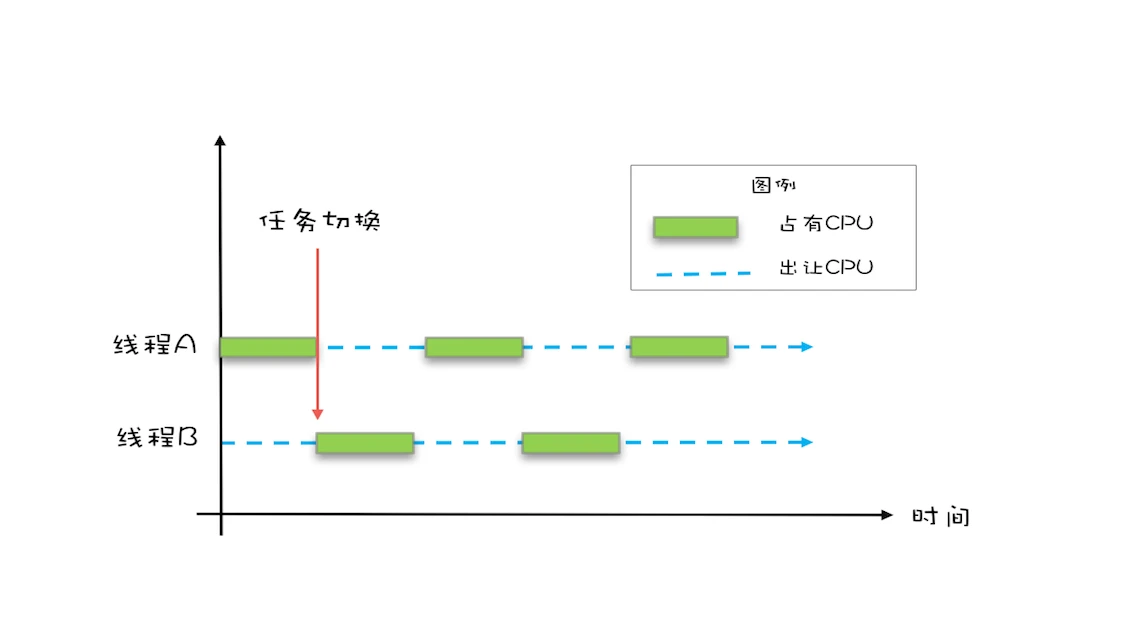

A process is the unit of resource allocation, and a thread is the unit of CPU scheduling. If the number of runnable threads does not exceed the number of CPU cores, each thread can in principle run on its own core and no time slicing is needed. When there are more threads than cores, CPU time has to be sliced among them.

When multiple threads run on a system with only one CPU, the CPU's running time is divided into time slices that are allocated to the threads in turn. While one thread's code is running in its time slice, the other threads are suspended.

Concurrency means making progress on multiple things during the same period of time. Through time-slice switching, even a single core can give the effect of "doing multiple things at the same time".
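To make the threads-versus-cores comparison concrete, here is a minimal sketch. The class name `CoreCount` and the helper `needsTimeSlicing` are made up for illustration. Note the assumption: the check only compares counts. In practice the operating system scheduler decides where threads run, and other processes compete for the same cores, so "one thread per core" is not guaranteed even when the counts allow it.

```java
class CoreCount {
    /** Returns true when there are more runnable threads than cores (hypothetical helper). */
    static boolean needsTimeSlicing(int runnableThreads, int cores) {
        return runnableThreads > cores;
    }

    public static void main(String[] args) {
        // Number of logical processors the JVM is allowed to use.
        int cores = Runtime.getRuntime().availableProcessors();
        System.out.println("Logical cores visible to the JVM: " + cores);
        System.out.println("8 threads need time slicing: " + needsTimeSlicing(8, cores));
    }
}
```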

What is concurrency

Sequential: the current task cannot start until the previous task has finished

Concurrent: the current task can start whether or not the previous task has finished

Serial: there is only one toilet, so people have to queue up and use it one by one

Parallel: there are multiple toilets, so multiple people can use them at the same time

Example: 5 threads, each able to handle 1 million tasks. (The 5 describes the degree of parallelism, and the 1 million describes the degree of concurrency.)
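The "5 threads, many tasks" picture can be sketched with a fixed-size thread pool. This is only an illustration: the class name `PoolDemo`, the method `runTasks`, and the task counts are assumptions, and the tasks do nothing but bump a counter. At most `poolSize` tasks run at any instant, while all submitted tasks are in progress over the same period.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class PoolDemo {
    /** Runs taskCount tiny tasks on poolSize threads and returns how many completed. */
    static int runTasks(int poolSize, int taskCount) {
        AtomicInteger completed = new AtomicInteger();
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);
        for (int t = 0; t < taskCount; t++) {
            pool.execute(() -> completed.incrementAndGet());
        }
        pool.shutdown(); // no new tasks are accepted; queued tasks still run
        try {
            if (!pool.awaitTermination(30, TimeUnit.SECONDS)) {
                throw new IllegalStateException("tasks did not finish in time");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return completed.get();
    }

    public static void main(String[] args) {
        System.out.println("completed = " + runTasks(5, 100_000));
    }
}
```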

What is resource sharing

Thinking in reverse: which resources are not shared?

Layout of a process address space: stack area, heap area, code area (holds the machine instructions produced by compiling the source code), and data area (holds global variables and static variables)

Running a thread essentially means executing functions. Execution always starts somewhere, and that starting point is the so-called entry function. The CPU starts executing from the entry function, forming a flow of execution, and we give this flow of execution a name: a thread.

The stack area, program counter, stack pointer, and registers that a thread uses while running its functions are private to that thread. Together they are called the thread context.

Therefore, shared resources include heap area, code area and data area
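The split between private and shared areas can be observed from Java. In the sketch below (the class name `SharedVsPrivate` and its members are hypothetical), each worker accumulates into a local variable that lives on its own stack, then publishes the result into a heap array that all threads can reach. Each worker writes a different slot, so the workers do not race with each other.

```java
class SharedVsPrivate {
    // The array object lives on the heap and is reachable from every thread.
    private static final int[] shared = new int[2];

    static int[] run() {
        Thread[] workers = new Thread[2];
        for (int id = 0; id < workers.length; id++) {
            final int slot = id;
            workers[id] = new Thread(() -> {
                int local = 0;                   // on this thread's private stack
                for (int k = 1; k <= 100; k++) {
                    local += k;
                }
                shared[slot] = local + slot;     // published to the shared heap
            });
            workers[id].start();
        }
        for (Thread w : workers) {
            try {
                w.join();                        // wait, and make the writes visible
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return shared.clone();
    }

    public static void main(String[] args) {
        int[] result = run();
        System.out.println(result[0] + ", " + result[1]);
    }
}
```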

Resource sharing related articles: What process resources are shared between threads? You will understand after reading this article~

Concurrent programming

Concurrent programming: in order to make the program run faster, let multiple threads complete different tasks respectively.

However, multithreading also brings a new problem: concurrency bugs caused by competition for shared resources.

The source of concurrency problems

- Atomicity problems caused by thread switching

- Visibility problems caused by caching

- Ordering problems caused by compilation optimization

Atomicity problem

Atomicity: an operation performed by one thread cannot be interrupted by other threads. While it is in progress, only that thread operates on the variable.

The cause of atomicity problems: Java is a high-level language, and a single statement often takes several CPU instructions to complete. A task switch can happen after any CPU instruction, not only at the boundary of a high-level language statement.

Why are thread operations interrupted

A thread is the unit of CPU scheduling. When there are more threads than CPU cores, each thread is given CPU time through time slicing, for example 50 milliseconds per slice. When the slice ends, the CPU switches to another thread. The thread that holds the time slice has the right to use the CPU, and the other threads are suspended. This switching is called task switching (or thread switching).

Example of an atomicity problem

Take i++ as an example:

//G.java

public class G {

private int i;

public void test(){

i++;

}

}

// Bytecode printed by javap -c

Compiled from "G.java"

public class com.llk.kt.G {

public com.llk.kt.G();

Code:

0: aload_0

1: invokespecial #1 // Method java/lang/Object."<init>":()V

4: return

public void test();

Code:

0: aload_0

1: dup

2: getfield #2 // get the value of the object field

5: iconst_1 // push the int constant 1 onto the stack

6: iadd // add the two int values on top of the stack and push the result

7: putfield #2 // assign the result to the object field

10: return

}

/*

i++ takes three steps:

step 1: load the value of i from memory into a CPU register

step 2: perform the +1 operation in the register

step 3: write the result back to memory (because of caching, the result may be written to the CPU cache rather than to main memory)

The CPU may switch tasks after any of the steps above.

For example:

Thread A and thread B call test() at the same time, with i initially 0. Thread A gets the CPU first. After it executes step 1, the CPU switches to thread B.

Thread B then runs. It happens to execute the whole of test() without interruption and writes the incremented value back to memory. At this point i is 1.

The CPU switches back to thread A. Thread A already loaded i into a register in step 1, so it continues with step 2, increments the stale value 0, and writes the result 1 back to memory.

The expected value of i is 2, but because of the task switch one increment is lost and i ends up as 1.

*/
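One common way to avoid the lost update described above is `java.util.concurrent.atomic.AtomicInteger`, whose `incrementAndGet()` performs the read-modify-write as a single atomic operation. The sketch below is illustrative only: `CounterDemo` and `run` are made-up names, and the plain field is kept next to the atomic one purely for comparison. The plain counter may come out lower than expected, and how often that happens depends on the machine and the run.

```java
import java.util.concurrent.atomic.AtomicInteger;

class CounterDemo {
    private int plain;                                        // plain++ is load, add, store
    private final AtomicInteger atomic = new AtomicInteger();

    void increment() {
        plain++;                    // not atomic: concurrent updates can be lost
        atomic.incrementAndGet();   // atomic read-modify-write
    }

    /** Returns {plain counter, atomic counter} after all threads have finished. */
    static int[] run(int threads, int perThread) {
        CounterDemo c = new CounterDemo();
        Thread[] ts = new Thread[threads];
        for (int n = 0; n < threads; n++) {
            ts[n] = new Thread(() -> {
                for (int k = 0; k < perThread; k++) {
                    c.increment();
                }
            });
            ts[n].start();
        }
        for (Thread t : ts) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return new int[] { c.plain, c.atomic.get() };
    }

    public static void main(String[] args) {
        int[] r = run(4, 100_000);
        System.out.println("plain = " + r[0] + ", atomic = " + r[1]);
    }
}
```

Wrapping `i++` in a `synchronized` block is an alternative that achieves the same atomicity with a lock.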

Visibility issues

Visibility: whether, after one thread modifies the value of a shared variable, other threads can see the modified value.

Cause of visibility problems: to compensate for slow memory I/O, CPUs have caches. In a multi-core CPU, each core has its own cache. Because threads are scheduled onto cores, each thread effectively works against the cache of the core it runs on. When a thread operates on a variable in shared memory, it first copies the variable into that cache. In a concurrent environment, if the cached copies are not kept coherent, visibility problems occur.

CPU cache coherence

Cache coherence

This refers to the mechanism that ensures that copies of shared data held in multiple caches stay the same.

Cache incoherence

This means that the same data has different values in different caches. It can easily occur in multi-core CPU systems.

How CPU cache coherence is guaranteed

There are many cache coherence protocols. The mainstream family is the "snooping" protocols. The basic idea is that all memory transfers take place on a shared bus that every CPU can see.

The caches themselves are independent, but memory is a shared resource, and all memory accesses must be arbitrated (only one CPU cache can read or write memory in the same instruction cycle).

A CPU cache does not talk to the bus only when it performs a memory transfer. It also keeps snooping on the traffic on the bus to track what the other caches are doing. So when one cache reads or writes memory on behalf of its CPU, the other processors are notified and can keep their caches in sync. As soon as one CPU writes to memory, the other CPUs know that the corresponding cache line in their own caches is out of date.

MESI is the most widely known cache coherence protocol. (Readers who are interested can look into it on their own.)

Examples of visibility issues

public class V {

private static boolean bool = false;

public static void b_test(){

new Thread(new Runnable() {//Thread 1

@Override

public void run() {

System.out.println("11111");

while (!bool){ }

System.out.println("22222");

}

}).start();

try {

Thread.sleep(10);

} catch (InterruptedException e) {

e.printStackTrace();

}

new Thread(new Runnable() { //Thread 2

@Override

public void run() {

System.out.println("33333");

bool = true;

System.out.println("44444");

}

}).start();

}

public static void main(String[] args) {

b_test();

}

/* Output:

11111

33333

44444

Thread 2 has set bool to true. Why doesn't thread 1 leave the loop?

Because bool is not volatile, thread 1 is not guaranteed to see the update. A thread may work on its own cached copy of a shared variable (and the JIT compiler may even hoist the read of bool out of the loop). So thread 2 updates bool in shared memory, but thread 1 keeps using its stale value and never exits.

*/

}
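A minimal sketch of one way to fix the example above is to declare the flag `volatile`, so that a write by one thread is guaranteed to become visible to later reads by another. The class name `VolatileFlag` and the method `workerStops` are assumptions made for this illustration, and the timeout only exists so that the demo cannot hang.

```java
class VolatileFlag {
    // volatile: the write in one thread is visible to subsequent reads in others
    private static volatile boolean stop = false;

    /** Starts a spinning worker, sets the flag, and reports whether the worker exited in time. */
    static boolean workerStops(long timeoutMillis) {
        stop = false;
        Thread worker = new Thread(() -> {
            while (!stop) { }       // re-reads the volatile field on every iteration
        });
        worker.start();
        stop = true;                // volatile write
        try {
            worker.join(timeoutMillis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return !worker.isAlive();
    }

    public static void main(String[] args) {
        System.out.println("worker stopped: " + workerStops(5_000));
    }
}
```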

Ordering problem

Ordering: reading the code, we intuitively expect statements to execute in the order they are written, from top to bottom.

The cause of ordering problems: to improve performance, the compiler and the CPU may reorder instructions as they see fit. Within a single thread the reordering is not observable: the final result is the same as if the statements had run in program order.

In a multi-threaded environment, however, another thread can observe the intermediate state of a reordered sequence before all of its steps have completed, so the result may differ from what was expected.

Examples of ordering problems

public class V {

private static V instance;

private V(){}

public static V getInstance() {

if (instance==null){ // first check

synchronized (V.class){ // synchronized provides visibility, atomicity and ordering between blocks guarded by the same lock

if (instance == null){ // second check

instance = new V();

}

}

}

return instance;

}

}

The above is a classic singleton implementation, the double-checked locking (DCL) singleton. However, it is not correct as written. In multi-threaded use, getInstance() can still go wrong because of an ordering problem caused by instruction reordering.

Doesn't synchronized guarantee ordering? Why is there still an ordering problem? synchronized only guarantees ordering between the protected block and other code that synchronizes on the same lock. It does not prevent reordering inside the block, and the first check of instance is performed outside the lock.

// The reason: instance = new V();

// This line is not an atomic operation. It consists of three operations:

// 1. Allocate memory for the object

// 2. Initialize the object

// 3. Point instance at the memory just allocated

// After reordering by the compiler or CPU, the sequence may become:

// 1. Allocate memory for the object

// 3. Point instance at the memory just allocated

// 2. Initialize the object

// Suppose thread A has just executed step 3, so instance is no longer null.

// At this moment thread B performs the first check in getInstance(), finds that instance is not null, and returns it directly.

// But the object may not have been initialized yet. If thread B uses it now, it may see fields that still hold their default values, which can lead to a NullPointerException or other incorrect behavior.

To solve the ordering problem in the above example, just add the volatile keyword to the instance variable.
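Here is a minimal sketch of that fix. The class is renamed `SafeSingleton` and given a hypothetical `value` field so the effect of initialization can be checked. The only change relative to the example above is the `volatile` modifier on the static field.

```java
class SafeSingleton {
    // volatile forbids publishing the reference before the constructor has finished
    private static volatile SafeSingleton instance;

    private final int value;    // hypothetical state, used only to show initialization

    private SafeSingleton() {
        value = 42;
    }

    static SafeSingleton getInstance() {
        if (instance == null) {                       // first check, without the lock
            synchronized (SafeSingleton.class) {
                if (instance == null) {               // second check, under the lock
                    instance = new SafeSingleton();
                }
            }
        }
        return instance;
    }

    int value() {
        return value;
    }

    public static void main(String[] args) {
        System.out.println(getInstance() == getInstance());
    }
}
```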

Java Memory Model

JMM stands for Java Memory Model.

The JMM defines a consistent, cross-platform memory model. It is a specification of the rules for reading and writing shared data across threads, not a hardware cache coherence protocol.

The core concept of the JMM is happens-before.

Happens-before rules

Happens-before: if operation A happens-before operation B, the result of A is visible to B

1. Program order rule

Within a single thread, each operation happens-before any operation that comes later in program order

int a = 1; // 1

int b = 2; // 2

int c = a + b; // 3

2. Volatile variable rule

A write to a volatile variable happens-before every subsequent read of that same variable

volatile int i = 0;

// Executed by thread A

i = 10; // write

// Executed by thread B

int b = i; // read

/*

Thread A runs first, then thread B.

Because i is declared volatile, visibility is guaranteed: thread B reads the latest value of i, 10.

*/

3. Transitivity rule

If A happens-before B and B happens-before C, then A happens-before C

int i = 0;

volatile boolean b;

void set(){

i = 10;

b = true;

}

void get(){

if(b){

int g = i;

}

}

/*

Thread A calls set(), and afterwards thread B calls get() and reads b as true.

i = 10 happens-before b = true ---> program order rule

the write to b happens-before the read of b ---> volatile variable rule

So by the transitivity rule, i = 10 happens-before int g = i, and the value of i that g receives is the latest value, 10

*/
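The reasoning above can be turned into a small runnable sketch. The names `Piggyback`, `awaitAndGet`, and `run` are made up for this illustration. The reader spins until it observes the volatile flag, so the precondition "thread B reads b as true" is enforced rather than assumed.

```java
class Piggyback {
    private int i = 0;              // plain field, deliberately not volatile
    private volatile boolean b;     // volatile guard

    void set() {
        i = 10;                     // ordered before the volatile write (program order)
        b = true;                   // volatile write
    }

    /** Spins until b is observed as true, then returns the value of i. */
    int awaitAndGet() {
        while (!b) { }              // volatile read
        return i;                   // guaranteed to see 10 by transitivity
    }

    static int run() {
        Piggyback p = new Piggyback();
        int[] seen = new int[1];
        Thread reader = new Thread(() -> seen[0] = p.awaitAndGet());
        Thread writer = new Thread(p::set);
        reader.start();
        writer.start();
        try {
            writer.join();
            reader.join();          // makes seen[0] visible to the calling thread
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return seen[0];
    }

    public static void main(String[] args) {
        System.out.println("g = " + run());
    }
}
```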

4. Monitor lock rule

Unlocking a lock happens-before every subsequent locking of that same lock

A monitor is a general-purpose synchronization primitive. synchronized is Java's implementation of the monitor.

int i = 5;

void set(){

synchronized (this) { //Lock

if (i < 10) {

i = 99;

}

} //Unlock

}

/*

Thread A and thread B call set() at the same time. Thread A enters the synchronized block first, and thread B waits.

When thread A leaves the block the lock is released automatically and thread B enters. The value of i that thread B reads is already the latest value, 99.

*/
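As a rough check of this rule, the sketch below runs the same `set()` logic from two threads and counts how many of them actually performed the assignment. `MonitorRule`, the `writes` counter, and `run` are hypothetical names added for the demonstration. Whichever thread enters second sees i == 99 and skips the assignment, so exactly one write is recorded.

```java
class MonitorRule {
    private int i = 5;
    private int writes = 0;         // guarded by "this"; counts threads that assigned i

    void set() {
        synchronized (this) {       // lock
            if (i < 10) {
                i = 99;
                writes++;
            }
        }                           // unlock
    }

    /** Returns {final value of i, number of threads that performed the assignment}. */
    static int[] run() {
        MonitorRule m = new MonitorRule();
        Thread a = new Thread(m::set);
        Thread b = new Thread(m::set);
        a.start();
        b.start();
        try {
            a.join();
            b.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return new int[] { m.i, m.writes };
    }

    public static void main(String[] args) {
        int[] r = run();
        System.out.println("i = " + r[0] + ", writes = " + r[1]);
    }
}
```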

5. Thread start() rule

After main thread A starts child thread B, thread B can see everything the main thread did before starting it

In other words: any operation before B.start() happens-before any operation in thread B

int i = 0; //Shared variable

Thread B = new Thread(()->{

System.out.println("i=" + i); // Output: i=10

});

i = 10;

B.start();

/*

i = 10 is executed before thread B is started, so thread B reads the latest value of i

*/

6. Thread join() rule

Main thread A waits for child thread B to finish by calling B.join(). Once thread B has finished (that is, once join() returns in thread A), the main thread can see everything thread B did to shared variables.

In other words: any operation in thread B happens-before the operations that follow B.join()

int i = 0; //Shared variable

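Thread B = new Thread(()->{

i = 10;

});

B.start(); // start() returns void, so it cannot be chained into the assignment above

System.out.println("i=" + i); // Output: i=0 or i=10, not guaranteed either way

B.join(); // throws InterruptedException

System.out.println("i=" + i); // Output: i=10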

/*

The i = 10 operation in thread B is visible after B.join()

*/
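The start() and join() rules can be exercised together in one self-contained sketch. The names `StartJoinDemo` and `run` are assumptions, and the shared variable is a static field because a lambda cannot capture a local variable that is reassigned. The field is deliberately not volatile: visibility comes only from start() and join().

```java
class StartJoinDemo {
    private static int i = 0;       // shared variable, deliberately not volatile

    /** Returns {value of i seen inside thread B, value of i seen after B.join()}. */
    static int[] run() {
        int[] seenByB = new int[1];
        i = 10;                     // happens-before everything in B (start rule)
        Thread b = new Thread(() -> {
            seenByB[0] = i;         // reads 10
            i = 20;                 // visible to the caller after join() (join rule)
        });
        b.start();
        try {
            b.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return new int[] { seenByB[0], i };
    }

    public static void main(String[] args) {
        int[] r = run();
        System.out.println("seen in B = " + r[0] + ", seen after join = " + r[1]);
    }
}
```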