The architecture design of WebMagic refers to scripy, and the implementation applies mature Java tools such as HttpClient and jsup.

WebMagic consists of four components (Downloader, PageProcessor, Scheduler and Pipeline):

- Downloader: Downloader

- PageProcessor: page parser

- Scheduler: task allocation, url de duplication

- Pipeline: data storage and processing

WebMagic data flow object:

- Request: a request corresponds to a URL address. It is the only way that PageProcessor controls Downloader.

- Page: represents the content downloaded from the Downloader

- ResultItems: equivalent to a Map, which saves the results processed by the PageProcessor for Pipeline use.

Crawler engine – Spider:

- Spider is the core of WebMagic's internal process. The above four components are equivalent to a property of spider. Different functions can be realized by setting this property.

- Spider is also the entrance of WebMagic operation. It encapsulates the functions of creating, starting, stopping, multithreading and so on

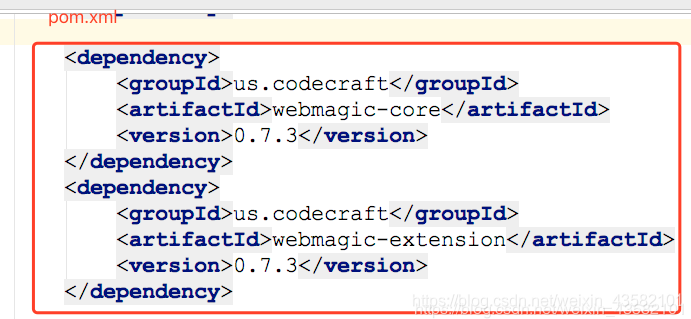

Using Maven to install WebMagic

<dependency>

<groupId>us.codecraft</groupId>

<artifactId>webmagic-core</artifactId>

<version>0.7.3</version>

</dependency>

<dependency>

<groupId>us.codecraft</groupId>

<artifactId>webmagic-extension</artifactId>

<version>0.7.3</version>

</dependency>

WebMagic uses slf4j-log4j12 as the implementation of slf4j. If you customize the implementation of slf4j, you need to remove this dependency from the project.

<dependency>

<groupId>us.codecraft</groupId>

<artifactId>webmagic-extension</artifactId>

<version>0.7.3</version>

<exclusions>

<exclusion>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-log4j12</artifactId>

</exclusion>

</exclusions>

</dependency>If you don't use Maven, you can go http://webmagic.io Download the latest jar package from, unzip it after downloading, and then import it in the project.

Start developing the first crawler

After WebMagic dependencies are added to the project, you can start the development of the first crawler! The following is a test. Click the main method and select "run" to check whether it runs normally.

package com.example.demo;

import us.codecraft.webmagic.Page;

import us.codecraft.webmagic.Site;

import us.codecraft.webmagic.Spider;

import us.codecraft.webmagic.processor.PageProcessor;

public class DemoPageGet implements PageProcessor {

private Site site = Site.me();

@Override

public void process(Page page) {

System.out.println(page.getHtml());

}

@Override

public Site getSite() {

return site;

}

public static void main(String[] args) {

Spider.create(new DemoPageGet()).addUrl("http://httpbin.org/get").run();

}

}Write basic crawler

In WebMagic, to implement a basic crawler, you only need to write a class to implement the PageProcessor interface.

In this part, we directly introduce the writing method of PageProcessor through the example of GithubRepoPageProcessor.

The customization of PageProcessor is divided into three parts: crawler configuration, page element extraction and link discovery.

public class GithubRepoPageProcessor implements PageProcessor {

// Part I: relevant configuration of the crawl website, including code, crawl interval, retry times, etc

private Site site = Site.me().setRetryTimes(3).setSleepTime(1000);

@Override

// process is the core interface of custom crawler logic, and extraction logic is written here

public void process(Page page) {

// Part 2: define how to extract page information and save it

page.putField("author", page.getUrl().regex("https://github\\.com/(\\w+)/.*").toString());

page.putField("name", page.getHtml().xpath("//h1[@class='entry-title public']/strong/a/text()").toString());

if (page.getResultItems().get("name") == null) {

//skip this page

page.setSkip(true);

}

page.putField("readme", page.getHtml().xpath("//div[@id='readme']/tidyText()"));

// Part 3: find the subsequent url address from the page to grab

page.addTargetRequests(page.getHtml().links().regex("(https://github\\.com/[\\w\\-]+/[\\w\\-]+)").all());

}

@Override

public Site getSite() {

return site;

}

public static void main(String[] args) {

Spider.create(new GithubRepoPageProcessor())

//From“ https://github.com/code4craft "Start catching

.addUrl("https://github.com/code4craft")

//Start 5 thread grabs

.thread(5)

//Start crawler

.run();

}

}Append requested link

First, match or splice the links through regular, such as page. Gethtml(). Links(). Regex() ". All() Then add these links to the queue to be fetched through the addTargetRequests method page.addTargetRequests(url).

Configuration of crawler

Spider: the entrance of the crawler. Other components of the spider (Downloader, Scheduler, Pipeline) can be set through the set method.

Site: some configuration information of the site itself, such as code, HTTP header, timeout, retry policy, proxy, etc., can be configured by setting the site object.

Configure the http proxy. Since version 0.7.1, WebMagic began to use the new proxy APIProxyProvider. Compared with the "configuration" of the Site, the ProxyProvider is more a "component", so the proxy is no longer set from the Site, but by the HttpClientDownloader.

For more information, see Official documents.

Extraction of page elements

WebMagic mainly uses three data extraction technologies:

- XPath

- regular expression

- CSS selector

- In addition, JsonPath can be used to parse the content in JSON format

Save results using Pipeline

The component WebMagic uses to save the results is called Pipeline.

For example, we use a built-in Pipeline to output results through the console, which is called ConsolePipeline.

So, now I want to save the results in Json format. What should I do?

I just need to change the implementation of Pipeline to "JsonFilePipeline".

public static void main(String[] args) {

Spider.create(new GithubRepoPageProcessor())

//From“ https://github.com/code4craft "Start catching

.addUrl("https://github.com/code4craft")

.addPipeline(new JsonFilePipeline("./webmagic"))

//Start 5 thread grabs

.thread(5)

//Start crawler

.run();

}Simulated POST request method

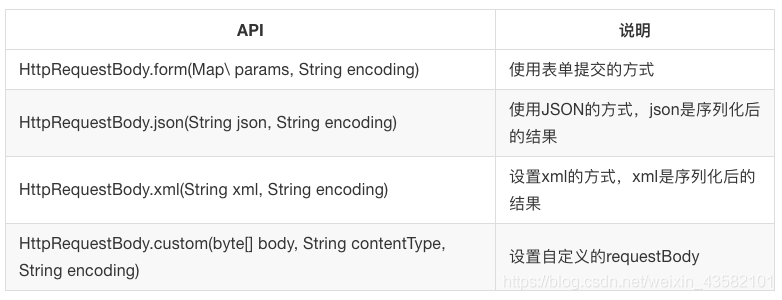

After version 0.7.1, the old writing Method of nameValuePair is abandoned and implemented by adding Method and requestBody to the Request object.

Request request = new Request("http://xxx/path");

request.setMethod(HttpConstant.Method.POST);

request.setRequestBody(HttpRequestBody.json("{'id':1}","utf-8"));HttpRequestBody has several built-in initialization methods, supporting the most common forms submission, json submission, etc.