1. Java Memory Model

JMM, the Java Memory Model, defines the abstract concepts of main memory and working memory at the Java level. Underneath, these correspond to CPU registers, caches, physical memory, CPU instruction optimizations, and so on. The JMM is reflected in the following aspects:

- Atomicity - ensures that a sequence of instructions is not disrupted by thread context switches (it cannot interleave with other threads' instructions)

- Visibility - ensures that reads are not served stale values from CPU caches or from JIT optimizations such as caching a variable that is read in hot code

- Ordering - ensures that instructions are not affected by reordering done by the compiler or by the CPU's parallel execution optimizations

2. Visibility

2.1. A loop that never exits

The main thread's change to the run variable can be invisible to thread t1, so t1 never stops:

```java
public class Test1 {
    // Not volatile: t1 may never see the main thread's write (declaring it volatile fixes this)
    static boolean run = true;

    public static void main(String[] args) throws InterruptedException {
        Thread t1 = new Thread(() -> {
            while (run) {
                // If you print something here, the loop can end,
                // because println uses synchronized internally, and
                // synchronized guarantees atomicity, visibility, and ordering
                // System.out.println("123");
            }
        });
        t1.start();
        Thread.sleep(1000);
        run = false;
        System.out.println(run);
    }
}
```

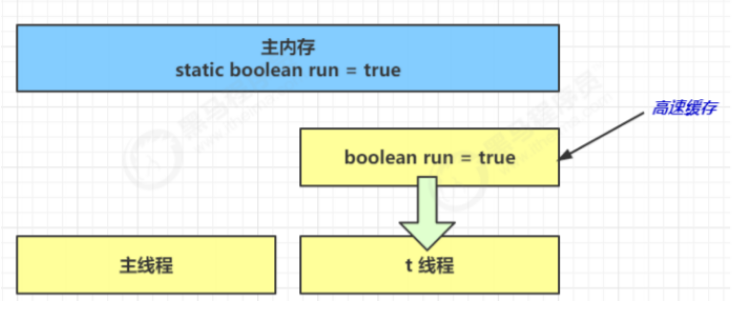

- The loop does not end because t1 spins in an infinite loop while run is true. The JIT (just-in-time) compiler caches the value of run in t1's local working memory instead of reading run from main memory on every iteration, which improves performance.

- Put another way: once the loop passes a certain threshold, the JVM treats while (run) as hot code, and from then on only the copy of run cached in the thread's working memory is read.

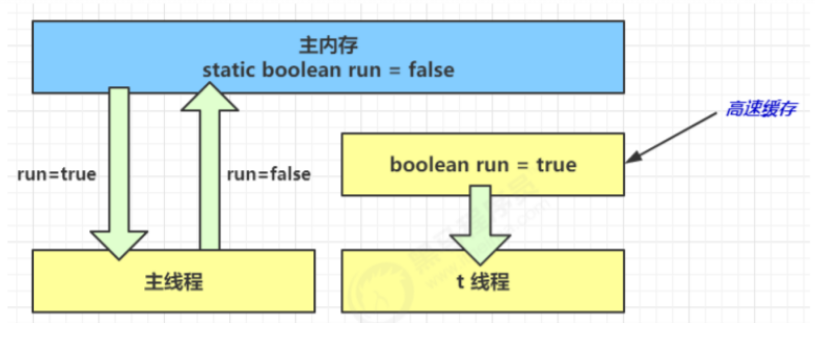

- When the main thread modifies run in main memory, t1 keeps reading its own cached copy, so it never notices that run has changed to false, and it runs forever.

- Declaring the shared member variable run as volatile makes changes to it visible across threads: when the main thread sets run to false, t1 sees the new value and exits the loop.
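A minimal sketch of the volatile fix, assuming the same busy loop as above. The class `VolatileStop` and the helper `stopsInTime` (with its delay and timeout parameters) are hypothetical names, added only so the outcome can be checked with a timeout instead of hanging; the daemon flag is just a safety net.

```java
public class VolatileStop {
    // The fix described above: declare the shared flag volatile
    static volatile boolean run = true;

    // Hypothetical helper: starts a busy-looping thread, clears the flag after
    // delayMillis, and reports whether the thread stopped within timeoutMillis
    static boolean stopsInTime(long delayMillis, long timeoutMillis) throws InterruptedException {
        run = true;
        Thread t1 = new Thread(() -> {
            while (run) {
                // busy loop: no println, no locks, only the volatile read of run
            }
        }, "t1");
        t1.setDaemon(true); // safety net: never keeps the JVM alive
        t1.start();
        Thread.sleep(delayMillis);
        run = false;            // volatile write, seen by t1's next read of run
        t1.join(timeoutMillis); // wait at most timeoutMillis for t1 to exit
        return !t1.isAlive();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("t1 stopped: " + stopsInTime(1000, 5000));
    }
}
```

Removing `volatile` from `run` turns this back into the original problem, where `stopsInTime` may return false.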

Solution using synchronized

```java
public class Test1 {
    static boolean run = true;
    final static Object obj = new Object();

    public static void main(String[] args) throws InterruptedException {
        Thread t1 = new Thread(() -> {
            // For the first second, t1 keeps acquiring and releasing the lock in the loop.
            // After 1 s the main thread takes the lock and sets run to false;
            // the next time t1 acquires the lock it sees the new value and the loop exits
            while (run) {
                synchronized (obj) {
                }
            }
        });
        t1.start();
        Thread.sleep(1000); // sleep for 1 s
        // The main thread sets run to false while holding the lock
        synchronized (obj) {
            run = false;
            System.out.println("false");
        }
    }
}
```

Why is the change to run invisible?

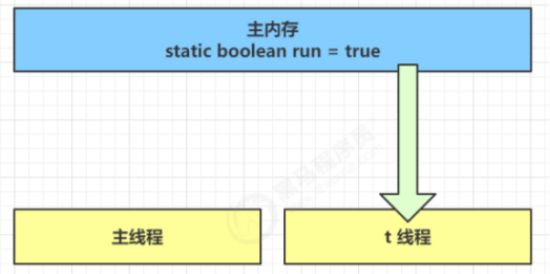

- Initially, thread t1 reads run from main memory. Because the main thread sleeps for 1 second, t1 reads run many times in its loop. Once the number of reads exceeds a certain threshold, the JIT copies the value of run from main memory into t1's working memory (effectively caching a copy) and stops reading run from main memory.

- In other words, because t1 reads run so frequently, the JIT compiler caches run in t1's working memory to reduce accesses to main memory and improve efficiency.

- After one second, the main thread modifies run and synchronizes the new value to main memory, but t1 keeps reading the value from the cache in its working memory, so it always sees the old value.

2.2. How to achieve visibility

- volatile can be applied to member variables and static member variables. It stops a thread from looking up the variable's value in its own working memory: the thread must get the value from main memory, so every read and write of a volatile variable operates directly on main memory.

- volatile is often described as a lightweight synchronization mechanism, although unlike a lock it provides no mutual exclusion. On x86, the machine code for a write to a volatile variable includes an instruction with a `lock` prefix. Through the CPU's cache coherence protocol, the written value is synchronized to main memory, so other threads obtain the latest value.

- The synchronized keyword has the same effect. Under the Java memory model, when a thread acquires a lock it clears its working memory → copies the latest values of shared variables from main memory into working memory → executes the code → flushes changed shared variables back to main memory → releases the mutex.
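The synchronized approach can be sketched for the stop flag from section 2.1, assuming a hypothetical `SyncFlag` class. The field is deliberately not volatile; visibility comes only from both accessors locking the same monitor.

```java
public class SyncFlag {
    // Deliberately not volatile: visibility comes from the lock alone
    private boolean run = true;

    // Both methods lock the same monitor (this), so the write in stop()
    // is visible to any isRunning() call that acquires the lock afterwards
    public synchronized boolean isRunning() {
        return run;
    }

    public synchronized void stop() {
        run = false;
    }

    public static void main(String[] args) throws InterruptedException {
        SyncFlag flag = new SyncFlag();
        Thread t1 = new Thread(() -> {
            long iterations = 0;
            while (flag.isRunning()) {
                iterations++;
            }
            System.out.println("t1 stopped after " + iterations + " iterations");
        }, "t1");
        t1.start();
        Thread.sleep(1000);
        flag.stop(); // write under the lock, then release it
        t1.join();
    }
}
```

Locking on every read is heavier than a volatile read, which is why volatile is usually preferred for a simple flag.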

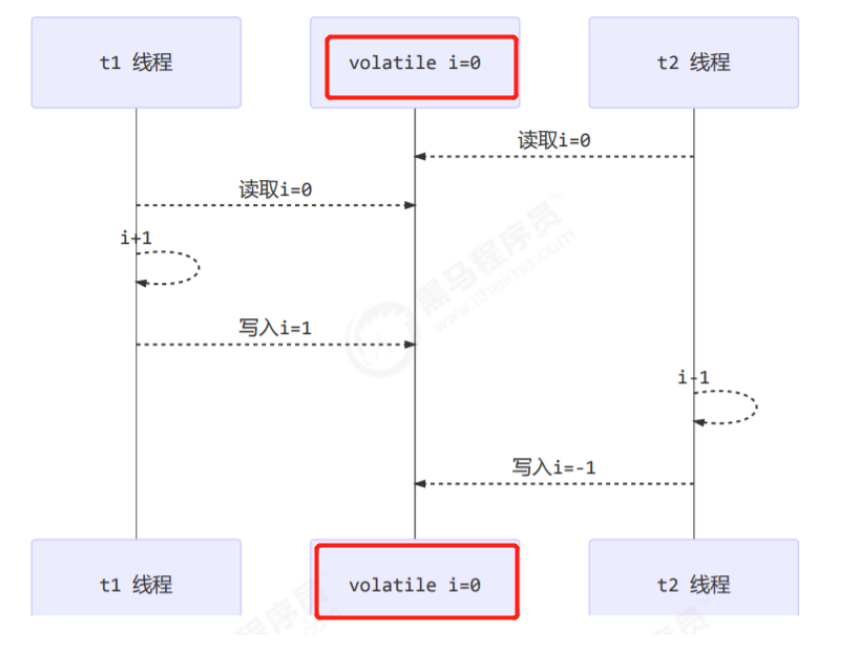

2.3 Visibility vs. atomicity

With two threads, one executing i++ and the other i--, volatile only guarantees that each thread sees the latest value (visibility); it cannot prevent their instructions from interleaving (atomicity). One possible interleaving:

```
// Assume the initial value of i is 0
getstatic i   // Thread 2 - reads static variable i; i = 0 in thread 2
getstatic i   // Thread 1 - reads static variable i; i = 0 in thread 1
iconst_1      // Thread 1 - pushes constant 1
iadd          // Thread 1 - increments; i = 1 in thread 1
putstatic i   // Thread 1 - stores the result; static variable i = 1
iconst_1      // Thread 2 - pushes constant 1
isub          // Thread 2 - decrements; i = -1 in thread 2
putstatic i   // Thread 2 - stores the result; static variable i = -1 (expected 0)
```
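The interleaving above can be reproduced in Java. This is a sketch, assuming a hypothetical `VolatileCounter` class with a `run` helper: `i` is volatile, yet the final value is frequently not 0, because volatile gives visibility but not atomicity. The exact result varies from run to run.

```java
public class VolatileCounter {
    // volatile: every read sees the latest write, but i++ and i-- are still
    // separate read / add-or-subtract / write steps that can interleave
    static volatile int i = 0;

    // Hypothetical helper: one thread increments, one decrements, each `iterations` times
    static int run(int iterations) throws InterruptedException {
        i = 0;
        Thread inc = new Thread(() -> {
            for (int k = 0; k < iterations; k++) {
                i++; // getstatic, iconst_1, iadd, putstatic
            }
        });
        Thread dec = new Thread(() -> {
            for (int k = 0; k < iterations; k++) {
                i--; // getstatic, iconst_1, isub, putstatic
            }
        });
        inc.start();
        dec.start();
        inc.join();
        dec.join();
        return i;
    }

    public static void main(String[] args) throws InterruptedException {
        // Often nonzero: updates are lost when the two threads interleave
        System.out.println("i = " + run(1_000_000));
    }
}
```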

- A synchronized block guarantees both the atomicity of the code inside it and the visibility of the variables it accesses.

- The drawback is that synchronized is a heavyweight operation with comparatively lower performance.

- If you add System.out.println() inside the infinite loop of the earlier example, you will find that thread t1 sees the change to run correctly even without volatile. Why?

- Because println uses synchronized internally. The same reasoning explains why the wait/notify "waiting for cigarettes" example has no visibility problem: it also relies on synchronized.

3. Ordering

- The JIT (just-in-time) compiler may reorder instructions as an optimization

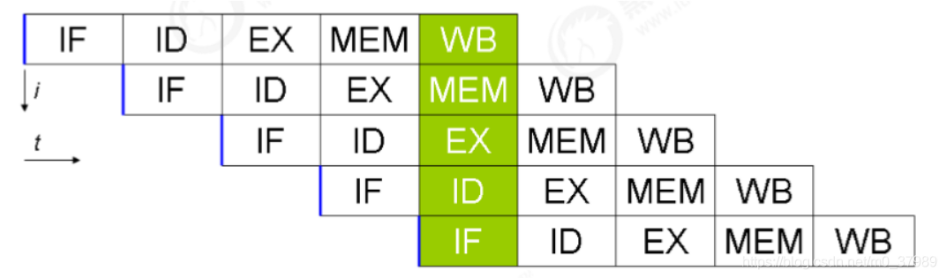

- Why optimize? Because modern CPUs have multi-stage instruction pipelines.

- For example, a processor that can overlap instruction fetch, instruction decode, execution, memory access, and write-back for different instructions executes code much more efficiently.

- The JVM may change the execution order of statements as long as this does not affect correctness; this is an optimization.

3.1 Pipelined processors

- Modern CPUs support multi-stage instruction pipelines. A processor that can perform instruction fetch, instruction decode, execution, memory access, and write-back at the same time (each stage working on a different instruction) is said to have a five-stage pipeline.

- Pipelining does not mean that every instruction can proceed independently: an instruction may have to wait for an earlier one to finish, which stalls the pipeline. During such a stall, the processor can execute other instructions that do not depend on the waiting one; this is instruction reordering.

3.2 Constraints on reordering

- Instruction reordering does not reorder operations that have data dependencies

- For example, in a = 1; b = a; the second operation depends on the first, so the two are not reordered either by the compiler or by the processor at run time.

- Reordering exists to improve performance, but however instructions are reordered, the result of the program in a single thread must not change (as-if-serial semantics).

- For example, in a = 1; b = 2; c = a + b; the first two steps (a = 1 and b = 2) may be swapped because they have no data dependency, but c = a + b is never moved before them, because the final result must be c = a + b = 3.

- In a single thread, reordering never breaks the correctness of the final result; in a multithreaded environment, however, it can cause problems.

- Declaring a variable volatile restricts instruction reordering around reads and writes of that variable.
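The classic store-buffer litmus test illustrates the multithreaded problem. A sketch, with the class `ReorderLitmus` and the helper `countZeroZero` as hypothetical names: under sequential execution (x, y) == (0, 0) is impossible, so any occurrence means a write was reordered with the following read. Whether it appears at all depends on the JIT, the CPU, and timing, and starting two new threads per round makes overlap rare, so a count of 0 proves nothing. Declaring a and b volatile rules (0, 0) out entirely.

```java
public class ReorderLitmus {
    // Plain (non-volatile) fields shared by two threads
    static int a, b, x, y;

    // Hypothetical helper: runs the litmus test `rounds` times and counts
    // how often both threads read 0
    static int countZeroZero(int rounds) throws InterruptedException {
        int zeroZero = 0;
        for (int r = 0; r < rounds; r++) {
            a = 0;
            b = 0;
            x = 0;
            y = 0;
            Thread t1 = new Thread(() -> { a = 1; x = b; });
            Thread t2 = new Thread(() -> { b = 1; y = a; });
            t1.start();
            t2.start();
            t1.join(); // join makes x and y visible to this thread
            t2.join();
            if (x == 0 && y == 0) {
                zeroZero++;
            }
        }
        return zeroZero;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("(0, 0) observed " + countZeroZero(20_000) + " times");
    }
}
```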

4. How volatile works

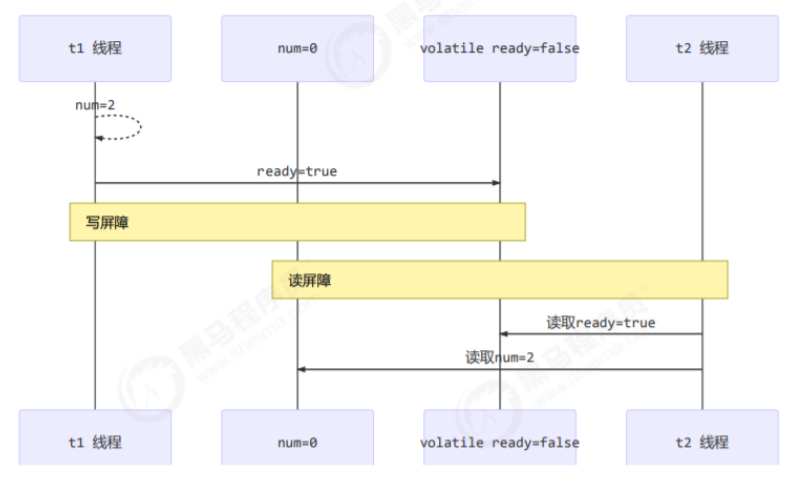

- volatile is implemented with memory barriers (memory fences).

- A write barrier is added after each write to a volatile variable, ensuring that all writes before the barrier are synchronized to main memory.

- A read barrier is added before each read of a volatile variable, ensuring that reads after the barrier load the latest data from main memory.

4.1 How volatile ensures visibility

- The write barrier (sfence) ensures that changes to shared variables before the barrier are synchronized to main memory

```java
// num and ready are fields of the enclosing test class; ready is volatile
public void actor2(I_Result r) {
    num = 2;
    ready = true; // ready is volatile, so this assignment is followed by a write barrier
    // Write barrier (added after the ready = true write instruction):
    // changes to shared variables before the barrier, including num, are synchronized to main memory
}
```

- The read barrier (lfence) ensures that reads of shared variables after the barrier load the latest data from main memory

```java
public void actor1(I_Result r) {
    // Read barrier
    // ready is volatile, so reading it is preceded by a read barrier
    if (ready) { // reads the latest value of ready from main memory
        r.r1 = num + num; // num is also read from main memory, so it sees the new value
    } else {
        r.r1 = 1;
    }
}
```
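The actor methods appear to come from a JCStress test (`I_Result` is a JCStress result type). Without JCStress, a rough plain-Java harness can exercise the same logic. The class `ReadyFlagDemo` and its `runOnce` helper are hypothetical, and actor1 here returns its result instead of writing `r.r1`. Because ready is volatile, the only possible results are 1 (ready not yet seen) and 4; any other value, such as 0 from a stale num, is forbidden.

```java
public class ReadyFlagDemo {
    int num = 0;
    volatile boolean ready = false; // the volatile write/read pair publishes num

    // Same logic as actor2
    void actor2() {
        num = 2;
        ready = true; // volatile write: num = 2 is published with it
    }

    // Same logic as actor1, but returns the result instead of using I_Result
    int actor1() {
        if (ready) {          // volatile read
            return num + num; // must see num == 2, so the result is 4
        } else {
            return 1;
        }
    }

    // Hypothetical helper: runs both actors concurrently on a fresh object
    static int runOnce() throws InterruptedException {
        ReadyFlagDemo demo = new ReadyFlagDemo();
        int[] result = new int[1];
        Thread reader = new Thread(() -> result[0] = demo.actor1());
        Thread writer = new Thread(demo::actor2);
        reader.start();
        writer.start();
        reader.join(); // join makes result[0] visible to this thread
        writer.join();
        return result[0];
    }

    public static void main(String[] args) throws InterruptedException {
        int ones = 0, fours = 0, others = 0;
        for (int i = 0; i < 10_000; i++) {
            int r = runOnce();
            if (r == 1) {
                ones++;
            } else if (r == 4) {
                fours++;
            } else {
                others++;
            }
        }
        System.out.println("1: " + ones + ", 4: " + fours + ", other: " + others);
    }
}
```

A plain harness like this is far less effective at provoking races than JCStress, so it only checks that forbidden results do not show up.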

4.2 How volatile ensures ordering

- The write barrier ensures that, when instructions are reordered, code before the write barrier is never moved after it

```java
public void actor2(I_Result r) {
    num = 2;
    ready = true; // ready is volatile, so this assignment is followed by a write barrier
    // Write barrier
}
```

- The read barrier ensures that, when instructions are reordered, code after the read barrier is never moved before it

```java
public void actor1(I_Result r) {
    // Read barrier
    // ready is volatile, so reading it is preceded by a read barrier
    if (ready) {
        r.r1 = num + num;
    } else {
        r.r1 = 1;
    }
}
```

4.3 volatile cannot prevent instruction interleaving (it does not provide atomicity)

- The write barrier only guarantees that subsequent reads see the latest result; it cannot stop another thread's read from running before the write.

- The ordering guarantee only prevents the relevant code within the current thread from being reordered.

- In the figure below, thread t2 reads i = 0 first, so instruction interleaving still occurs. Use synchronized to guarantee atomicity.
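Following the last bullet, here is a sketch of the synchronized fix for the i++ / i-- race, assuming a hypothetical `SyncCounter` class: both threads lock the same object around each update, so every read-modify-write runs as a unit and the result is always 0.

```java
public class SyncCounter {
    static int i = 0;
    static final Object lock = new Object();

    // Hypothetical helper: one thread increments, one decrements, each `iterations` times
    static int run(int iterations) throws InterruptedException {
        i = 0;
        Thread inc = new Thread(() -> {
            for (int k = 0; k < iterations; k++) {
                synchronized (lock) {
                    i++; // read-modify-write cannot interleave with i--
                }
            }
        });
        Thread dec = new Thread(() -> {
            for (int k = 0; k < iterations; k++) {
                synchronized (lock) {
                    i--;
                }
            }
        });
        inc.start();
        dec.start();
        inc.join();
        dec.join();
        return i; // join() makes both threads' writes visible here
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("i = " + run(1_000_000)); // always 0
    }
}
```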

5. The double-checked locking problem

Take the well-known double-checked locking singleton pattern as an example; it is the most common use case for volatile.

```java
// The initial singleton looks like this
public final class Singleton {
    private Singleton() { }

    private static Singleton INSTANCE = null;

    public static Singleton getInstance() {
        /*
         * If several threads call getInstance() at the same time without the
         * synchronized lock, two of them can both find INSTANCE == null and each
         * call new Singleton(), breaking the singleton. The lock prevents this
         * race on the shared resource.
         */
        synchronized (Singleton.class) {
            if (INSTANCE == null) { // t1
                INSTANCE = new Singleton();
            }
        }
        return INSTANCE;
    }
}
```

The code above is inefficient: even after the singleton has been created, every call still acquires the lock, checks INSTANCE == null again (it can no longer be null), and only then returns the instance. This adds a lot of unnecessary locking and checking.

Double-checked locking fixes this. When a thread calls getInstance(), it first checks, outside the synchronized block, whether the instance exists. If it does not, the thread acquires the lock, creates the singleton, and returns it. When later threads call getInstance(), the first if (INSTANCE == null) check finds the existing object, so they never acquire the lock, which improves efficiency.

```java
public final class Singleton {
    private Singleton() { }

    private static Singleton INSTANCE = null;

    public static Singleton getInstance() {
        if (INSTANCE == null) { // t2
            // Only the first access synchronizes; later calls skip the lock
            synchronized (Singleton.class) {
                if (INSTANCE == null) { // t1
                    INSTANCE = new Singleton();
                }
            }
        }
        return INSTANCE;
    }
}
```

However, the outer if (INSTANCE == null) check is outside the synchronized block, so it does not benefit from the atomicity, visibility, and ordering guarantees of synchronized, and instruction reordering can cause problems.

Note: in a multithreaded environment the code above is flawed. The bytecode of the getInstance method is:

```
 0: getstatic     #2  // Field INSTANCE:Lcn/itcast/n5/Singleton;
 3: ifnonnull     37  // if INSTANCE is not null, jump to 37
    // ldc pushes the class object (Singleton.class) onto the operand stack
 6: ldc           #3  // class cn/itcast/n5/Singleton
    // dup copies the top of the operand stack: the reference to the class object
 8: dup
    // astore_0 pops that reference into local variable slot 0,
    // keeping the lock object for the later monitorexit
 9: astore_0
10: monitorenter
11: getstatic     #2  // Field INSTANCE:Lcn/itcast/n5/Singleton;
14: ifnonnull     27
    // create a new instance
17: new           #3  // class cn/itcast/n5/Singleton
    // duplicate the reference to the instance
20: dup
    // call the constructor through the duplicated reference
21: invokespecial #4  // Method "<init>":()V
    // assign the original reference to INSTANCE
24: putstatic     #2  // Field INSTANCE:Lcn/itcast/n5/Singleton;
27: aload_0
28: monitorexit
29: goto          37
32: astore_1
33: aload_0
34: monitorexit
35: aload_1
36: athrow
37: getstatic     #2  // Field INSTANCE:Lcn/itcast/n5/Singleton;
40: areturn
```

- 17 creates the object and pushes its reference onto the stack (new Singleton)

- 20 duplicates the object reference

- 21 uses one copy of the reference to call the constructor

- 24 uses the other copy of the reference to assign the static INSTANCE field

The JVM may reorder these steps and execute 24 (assignment) before 21 (constructor call).

- Suppose t1 has assigned INSTANCE but has not finished running the constructor. If the constructor performs a lot of initialization, t2, at the outer if (INSTANCE == null) check, sees a non-null INSTANCE and returns a singleton that is not yet fully initialized.

- Declaring INSTANCE volatile forbids this reordering.
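A sketch of the corrected double-checked locking singleton, with INSTANCE declared volatile as the last bullet suggests. It keeps the class name `Singleton` from the versions above and relies on the volatile semantics defined since Java 5 (JSR-133).

```java
public final class Singleton {
    private Singleton() { }

    // volatile forbids moving the INSTANCE assignment (24) before the
    // constructor call (21), so no thread sees a half-initialized object
    private static volatile Singleton INSTANCE = null;

    public static Singleton getInstance() {
        if (INSTANCE == null) {             // first check, without the lock
            synchronized (Singleton.class) {
                if (INSTANCE == null) {     // second check, under the lock
                    INSTANCE = new Singleton();
                }
            }
        }
        return INSTANCE;
    }
}
```

The outer check now performs a volatile read, so a non-null value it sees is guaranteed to refer to a fully constructed object.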

6. Happens-before (when writes to shared variables are visible to reads by other threads)

Happens-before specifies when a write to a shared variable is visible to reads of that variable by other threads; it summarizes the rules for visibility and ordering. Outside of the happens-before rules below, the JMM does not guarantee that one thread's write to a shared variable is visible to another thread's reads of it.

In the rules below, "variable" means a member variable or a static member variable.

Rule 1:

- Writes to a variable made by a thread before it unlocks m are visible to reads of that variable by any other thread that subsequently locks m

- This is how a synchronized lock provides visibility

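```java
public class Rule1 {
    static int x;
    static Object m = new Object();

    public static void main(String[] args) {
        new Thread(() -> {
            synchronized (m) {
                x = 10;
            }
        }, "t1").start();

        new Thread(() -> {
            synchronized (m) {
                // 10 if t1 released m before t2 acquired it (otherwise 0)
                System.out.println(x);
            }
        }, "t2").start();
    }
}
```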

Rule 2:

- A thread's write to a volatile variable is visible to subsequent reads of that variable by other threads

- The write barrier publishes the volatile variable to main memory, and the read barrier makes other threads read it from main memory

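```java
public class Rule2 {
    volatile static int x;

    public static void main(String[] args) {
        new Thread(() -> {
            x = 10; // volatile write
        }, "t1").start();

        new Thread(() -> {
            System.out.println(x); // 10 if this read comes after t1's write, otherwise 0
        }, "t2").start();
    }
}
```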

Rule 3:

- Writes to a variable made before a thread's start() is called are visible to reads of that variable by the started thread

- If a variable is modified before the thread is started, the started thread is guaranteed to see the modified value

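```java
public class Rule3 {
    static int x;

    public static void main(String[] args) {
        x = 10; // written before start()
        new Thread(() -> {
            System.out.println(x); // always 10
        }, "t2").start();
    }
}
```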

Rule 4:

- Writes made by a thread before it terminates are visible to reads by other threads after they learn that it has terminated (for example, by calling t1.isAlive() or by waiting for it with t1.join())

- The main thread reads x only after t1 has finished writing it

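```java
public class Rule4 {
    static int x;

    public static void main(String[] args) throws InterruptedException {
        Thread t1 = new Thread(() -> {
            x = 10;
        }, "t1");
        t1.start();
        t1.join(); // wait for t1 to terminate
        System.out.println(x); // always 10
    }
}
```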

Rule 5:

- Writes to a variable made by thread t1 before it interrupts t2 (t2.interrupt()) are visible to reads of that variable by other threads, including t2, after they learn that t2 was interrupted (via Thread.interrupted() inside t2, or t2.isInterrupted())

```java
public class Rule5 {
    static int x;

    public static void main(String[] args) {
        Thread t2 = new Thread(() -> {
            while (true) {
                if (Thread.currentThread().isInterrupted()) {
                    // 10: t2 was interrupted, so it sees the value written before the interrupt
                    System.out.println(x);
                    break;
                }
            }
        }, "t2");
        t2.start();

        new Thread(() -> {
            try {
                Thread.sleep(1000);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return;
            }
            x = 10;
            t2.interrupt();
        }, "t1").start();

        while (!t2.isInterrupted()) {
            Thread.yield();
        }
        System.out.println(x); // 10
    }
}
```

Rule 6:

- The write of a variable's default value (0, false, null) is visible to reads of the variable by other threads (the most basic rule)

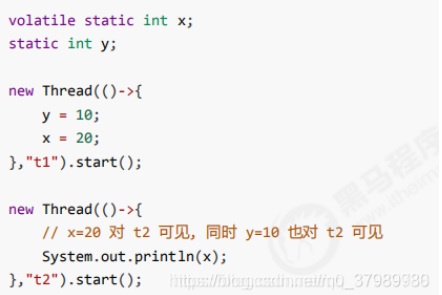

- Happens-before is transitive: if x happens-before y and y happens-before z, then x happens-before z. Combined with volatile's prevention of instruction reordering, this gives the following guarantee:

- Because x is volatile, a read barrier precedes the read of x, so the reading thread sees the latest values of both x and y (y is covered as long as it is read after the barrier). By transitivity, the writing thread's writes to y and x are both visible to the reading thread.
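The transitivity guarantee can be checked with a sketch modeled on the description above, assuming a hypothetical `TransitivityDemo` class: t1 writes plain y and then volatile x; t2 reads x and, only if it sees the new value, reads y. The chain "y = 10 before x = 20 (program order), x = 20 before the read of x (volatile), read of x before the read of y (program order)" means t2 must see y == 10.

```java
public class TransitivityDemo {
    static volatile int x = 0; // volatile
    static int y = 0;          // plain field, no volatile

    // Hypothetical helper: returns true if t2 ever saw x == 20 together with a stale y
    static boolean staleYObserved(int rounds) throws InterruptedException {
        for (int r = 0; r < rounds; r++) {
            x = 0;
            y = 0;
            int[] seenY = {-1}; // -1 means t2 did not see x == 20 in this round
            Thread t1 = new Thread(() -> {
                y = 10; // plain write, before the volatile write in program order
                x = 20; // volatile write
            }, "t1");
            Thread t2 = new Thread(() -> {
                if (x == 20) {    // volatile read that observed t1's write
                    seenY[0] = y; // by transitivity, y = 10 happens-before this read
                }
            }, "t2");
            t1.start();
            t2.start();
            t1.join();
            t2.join();
            if (seenY[0] != -1 && seenY[0] != 10) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("stale y observed: " + staleYObserved(10_000));
    }
}
```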