Contents

Zero. java thread understanding

0.1.2 kernel level thread KLT -- thread model (KLT) used by JAVA virtual machine

0.2 java thread and system kernel thread

0.3 Significance of thread pool

0.4.2 how does the thread pool ensure thread safety under high concurrency

0.4.3 usage scenario of multithreading

0.4.4 creation method of multithreading

0.4.5 control the running sequence of multithreads -- join method

1, Basic concepts and usage examples of thread pool

2, java's own thread pool tool

2.1 newCachedThreadPool - not recommended

2.2 newFixedThreadPool - not recommended

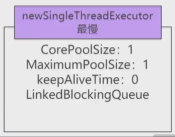

2.3 newSingleThreadExecutor - not recommended

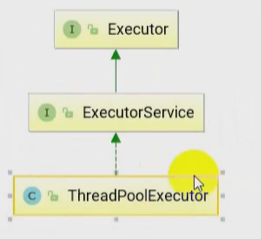

III. core method and architecture of thread pool

3.1 the most basic framework of the thread pool

3.2.1 ThreadPoolExecutor parameter description

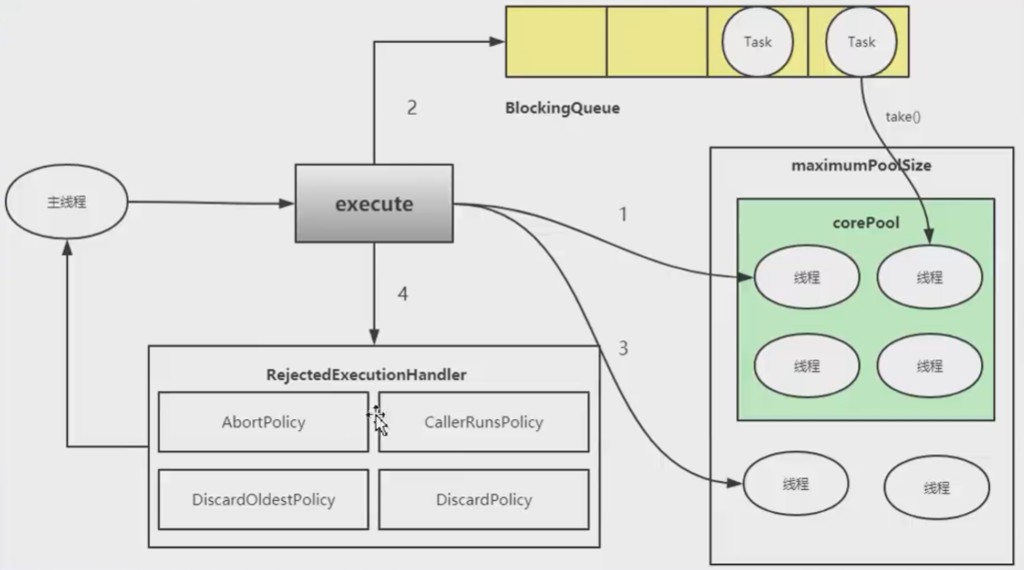

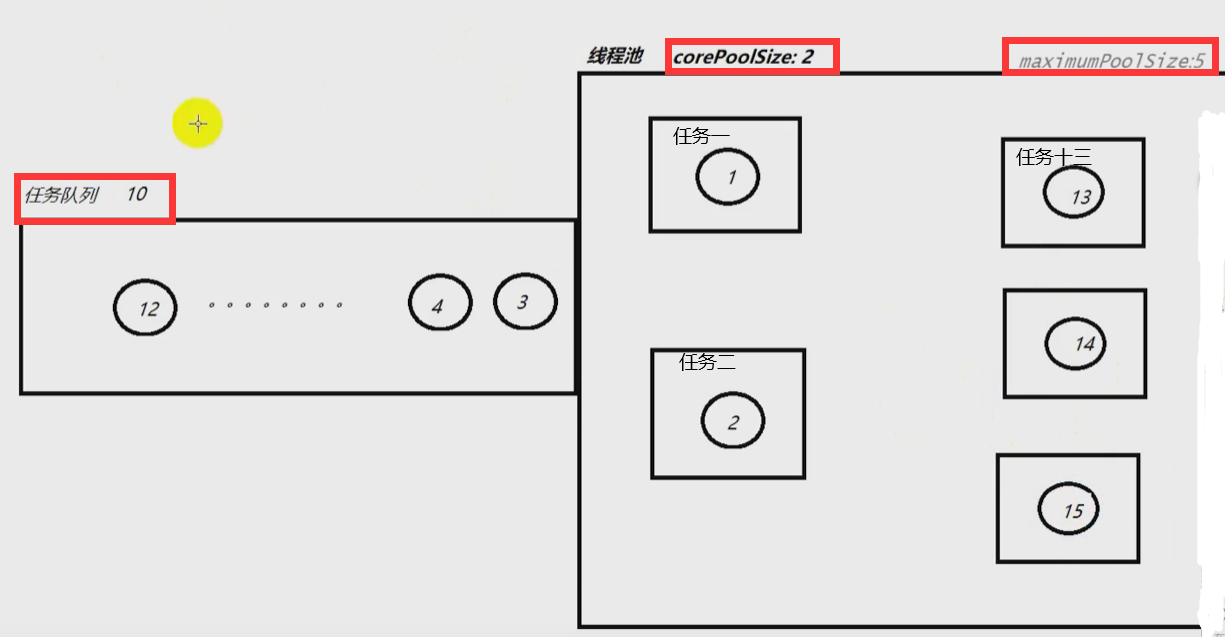

3.2.2 creation sequence of thread pool tasks and threads

3.3 three queues of thread pool

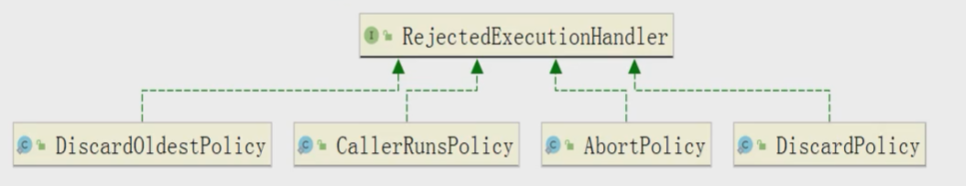

3.4 four rejection strategies of thread pool

4.2 submission priority and execution priority

4.2.2 submission priority and execution priority of thread pool

4.2.3 source code verification

4.3 thread pool processing flow

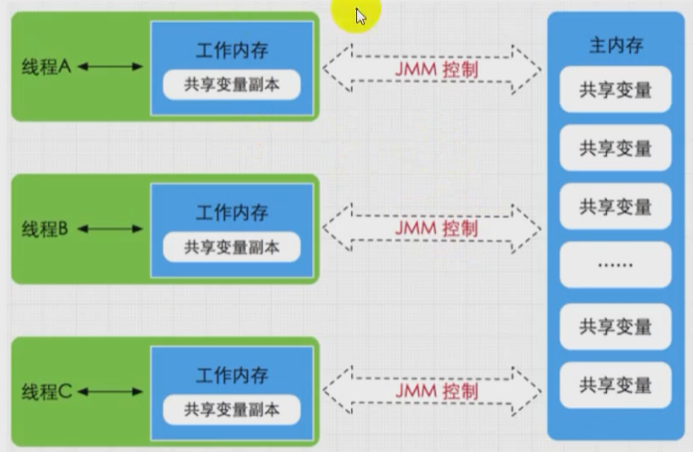

5, JVM memory model -- why thread safety issues occur

VI. three features of java Concurrent Programming

6.2.2 example code of visibility problem

6.4.1 volatile keyword - ensure the visibility of variables

6.4.2 volatile keyword - mask instruction reordering

6.5.2 basic principle of synchronized:

6.6.2 advantages of lock over synchronized

6.6.3 difference between lock and synchronized

7, Inter thread communication in concurrent programming

7.1.2 difference between wait and sleep

7.2 practical interview questions

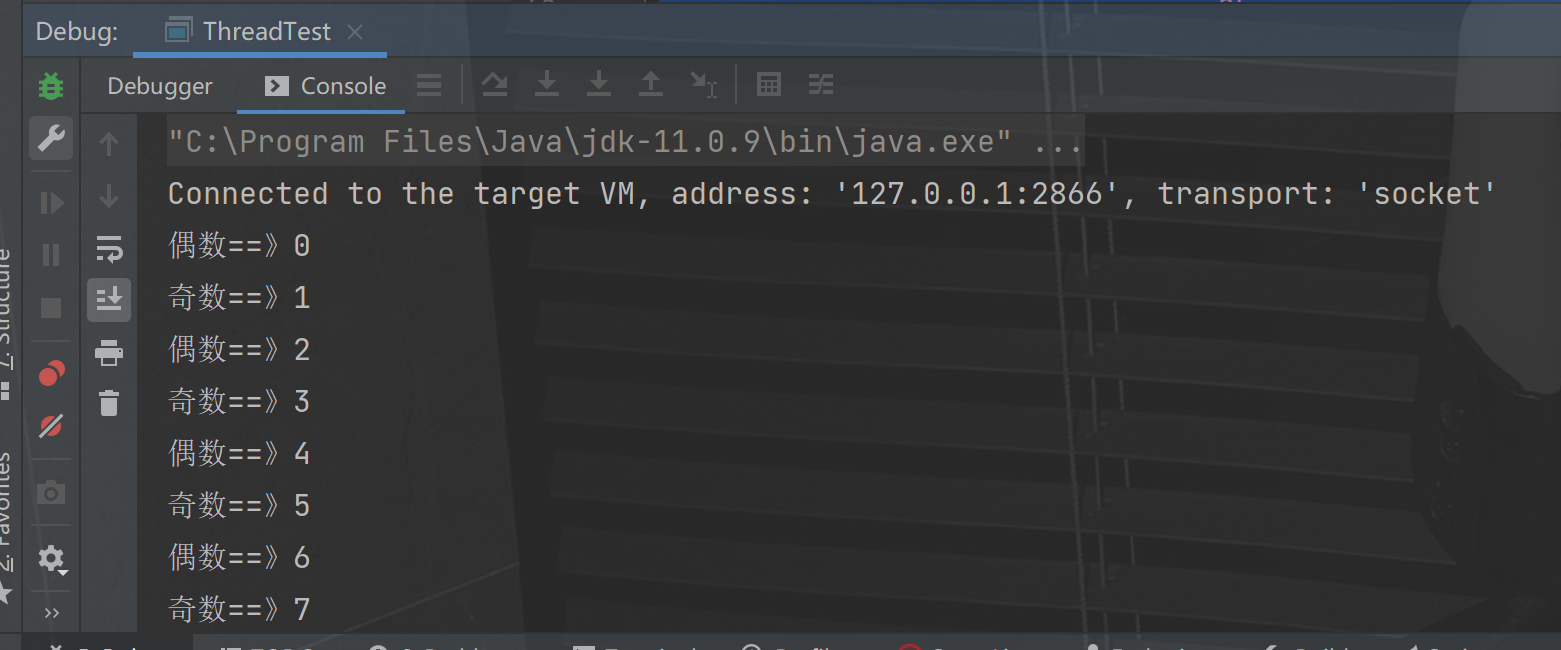

7.2.1 two threads, printing alternately 1 ~ 100

Zero. java thread understanding

A thread is the smallest unit of CPU scheduling, also known as a lightweight process (LWP, Light Weight Process).

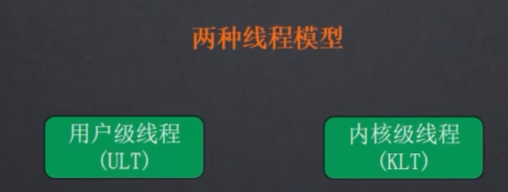

0.1 two thread models

0.1.1 user level thread ULT

- User-level threads are implemented by the application itself and do not depend on the operating system kernel. The application provides the functions that create, synchronize, schedule and manage these threads.

- No user mode / kernel mode switching is required, and the speed is fast.

- The kernel is not aware of ULT. If a thread is blocked, the process (including all its threads) is blocked.

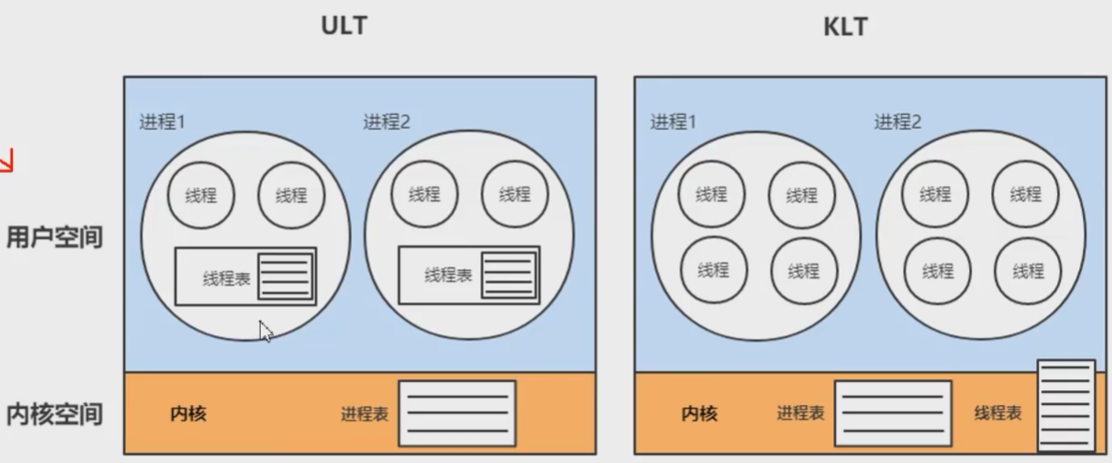

0.1.2 kernel level thread KLT -- thread model (KLT) used by JAVA virtual machine

- The system kernel manages threads (KLT). The kernel saves thread status and context information. Thread blocking will not cause process blocking.

- On multiprocessor systems, multithreading runs in parallel on multiprocessors.

- The creation, scheduling and management of threads are completed by the kernel, which is slower than ULT and faster than process operation.

0.2 java thread and system kernel thread

Creating a Java thread depends on the operating system kernel: the JVM calls a system library to create a kernel thread. Kernel threads and Java threads are mapped 1:1.

0.3 Significance of thread pool

Threads are a scarce resource. Creating and destroying one is a relatively heavy, resource-consuming operation, and because a Java thread is backed by a kernel thread, creating one requires a switch between user mode and kernel mode. To avoid excessive resource consumption, threads should be reused to run multiple tasks wherever possible.

A thread pool is a cache of threads that handles their allocation, tuning and monitoring in one place.

When do I use thread pools?

- The processing time of a single task is relatively short

- The number of tasks to be processed is large

Advantages of thread pools

- Reuse existing threads, reduce the overhead of thread creation and destruction, and improve performance

- Improve response speed. When the task arrives, the task can be executed immediately without waiting for the thread to be created.

- Improve thread manageability, unified allocation, tuning and monitoring.

0.4 threads

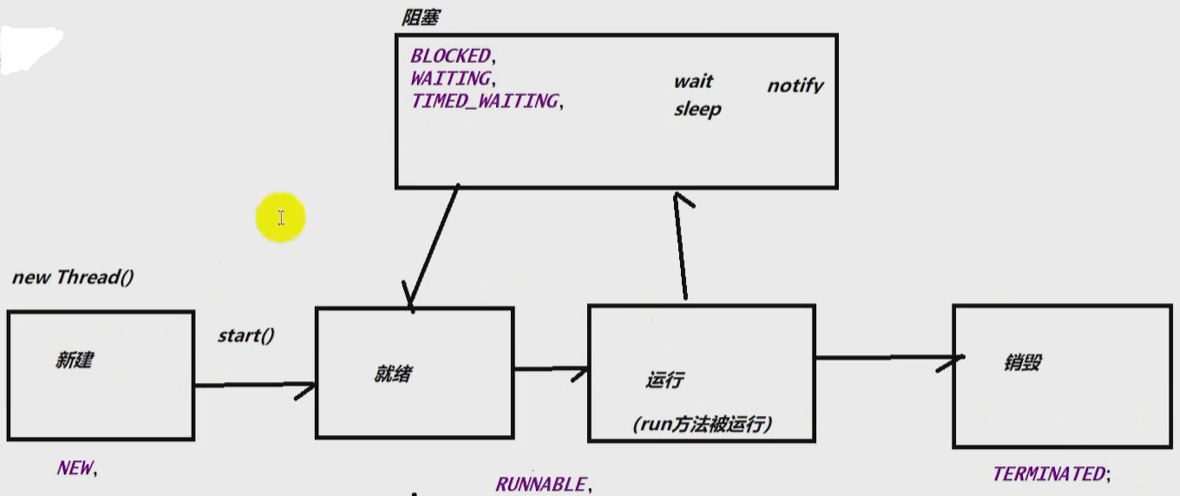

0.4.1 five thread states

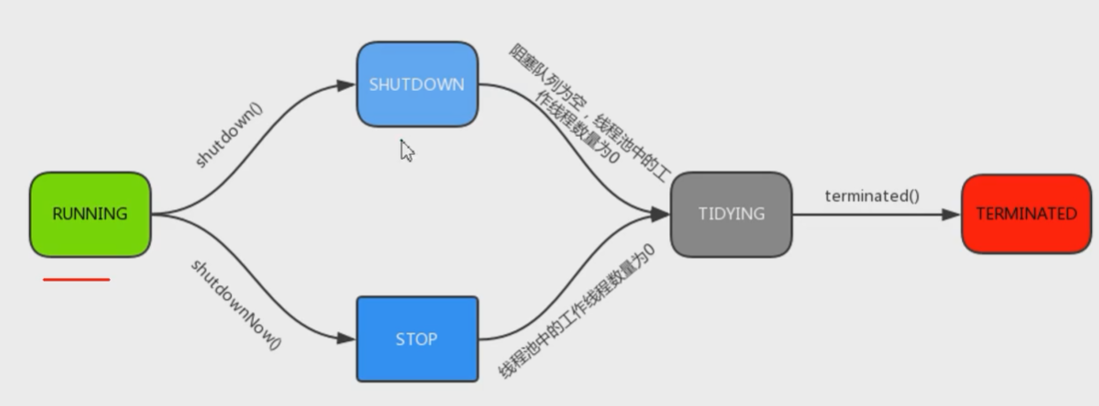

- RUNNING: accepts new tasks and processes queued tasks

- SHUTDOWN: does not accept new tasks, but still processes queued tasks

- STOP: does not accept new tasks, does not process queued tasks, and interrupts the tasks being processed

- TIDYING: all tasks have terminated and the worker count recorded by ctl is 0. ctl records both the run state of the thread pool and the number of worker threads

- TERMINATED: the thread pool has completely terminated

The five states above are the states of the thread pool. For an individual thread, you can call getState() to get the state of that thread

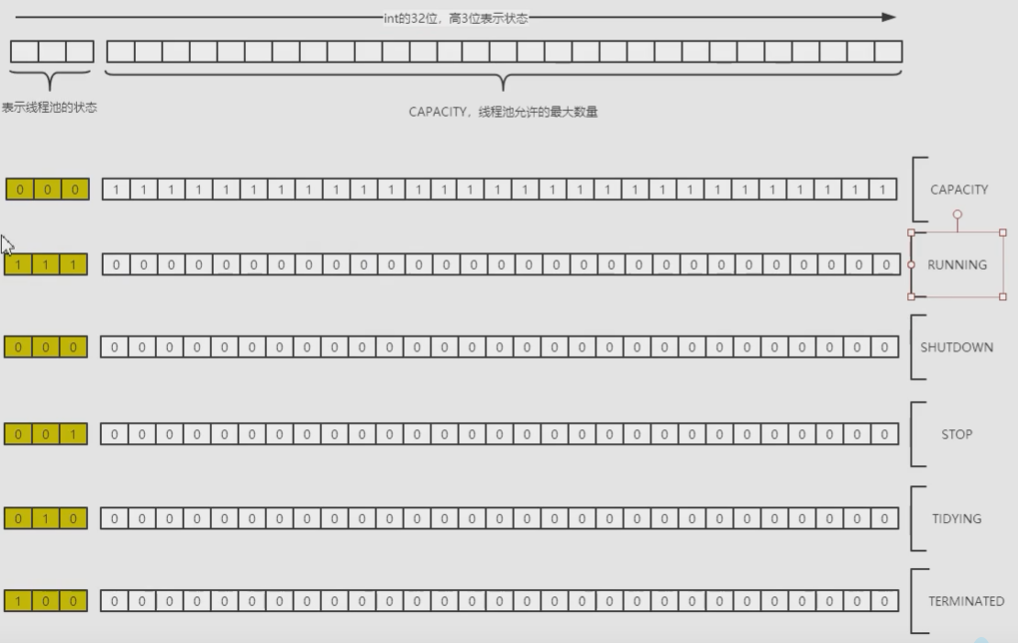

0.4.2 how does the thread pool ensure thread safety under high parallel delivery

The thread pool records its lifecycle state and the number of worker threads in a single integer variable. Packing both into one AtomicInteger lets them be read and updated in one atomic operation, which avoids the extra synchronization that two separate variables would need.

private final AtomicInteger ctl = new AtomicInteger(ctlOf(RUNNING, 0));

private static final int COUNT_BITS = Integer.SIZE - 3;

// The upper 3 bits record the thread pool life state

// The lower 29 bits record the current number of worker threads

private static final int CAPACITY = (1 << COUNT_BITS) - 1;

// runState is stored in the high-order bits

// -1 = 1111 1111 1111 1111 1111 1111 1111 1111

// -1 << COUNT_BITS (i.e. 29) = 1110 0000 0000 0000 0000 0000 0000 0000

private static final int RUNNING = -1 << COUNT_BITS;

private static final int SHUTDOWN = 0 << COUNT_BITS;

private static final int STOP = 1 << COUNT_BITS;

private static final int TIDYING = 2 << COUNT_BITS;

private static final int TERMINATED = 3 << COUNT_BITS;

// Packing and unpacking ctl

private static int runStateOf(int c) { return c & ~CAPACITY; }

private static int workerCountOf(int c) { return c & CAPACITY; }

private static int ctlOf(int rs, int wc) { return rs | wc; }
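The packing scheme can be tried out in isolation. The following is a minimal sketch that copies the constants and helper methods from the listing above into a hypothetical class `CtlPackingDemo` (the class name and the `main` method are illustrative, not part of the JDK).

```java
// Hypothetical stand-alone class; constants mirror the ThreadPoolExecutor fields quoted above.
class CtlPackingDemo {
    static final int COUNT_BITS = Integer.SIZE - 3;      // 29 low bits hold the worker count
    static final int CAPACITY = (1 << COUNT_BITS) - 1;   // mask with the low 29 bits set
    static final int RUNNING = -1 << COUNT_BITS;         // high 3 bits = 111
    static final int SHUTDOWN = 0 << COUNT_BITS;         // high 3 bits = 000
    static final int STOP = 1 << COUNT_BITS;             // high 3 bits = 001

    // Keep only the high 3 bits (the run state).
    static int runStateOf(int c) { return c & ~CAPACITY; }

    // Keep only the low 29 bits (the worker count).
    static int workerCountOf(int c) { return c & CAPACITY; }

    // Combine a run state and a worker count into one int.
    static int ctlOf(int rs, int wc) { return rs | wc; }

    public static void main(String[] args) {
        int ctl = ctlOf(RUNNING, 5);
        System.out.println(Integer.toBinaryString(ctl));
        System.out.println(runStateOf(ctl) == RUNNING); // true
        System.out.println(workerCountOf(ctl));         // 5
    }
}
```

Because both values live in one int, a single compare-and-set on the AtomicInteger can change the state and the count together.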

0.4.3 usage scenario of multithreading

- 1. Background tasks, such as regularly sending mail to a large number of users (more than 1,000,000)

- 2. Asynchronous processing, such as computing statistics, logging, sending SMS, etc.

- 3. Distributed computing, chunked downloads and resumable transfers

Summary:

- When the number of tasks is large and multithreading can improve throughput

- When asynchronous processing is required

- When long-running work occupies system resources and would otherwise block other work

In these cases multithreading can be used to improve efficiency.

0.4.4 creation method of multithreading

Extend Thread

public static void main(String[] args) {

MyThread myThread = new MyThread();

myThread.start();

}

//Inherit the Thread class to implement the run method

static class MyThread extends Thread {

@Override

public void run() {

for (int i = 0; i < 1000; i++) {

System.out.println("Output printing" + i);

}

}

}

Implement Runnable

public static void main(String[] args) {

Thread thread = new Thread(new MyRunnable());

thread.start();

}

// Implement the run method of the Runnable interface

static class MyRunnable implements Runnable {

@Override

public void run() {

for (int i = 0; i < 1000; i++) {

System.out.println("output:" + i);

}

}

}

If you want the thread to return a value after executing its task, implement the Callable interface and wrap it in a FutureTask

public static void main(String[] args) {

FutureTask<Integer> ft = new FutureTask<>(new MyCallable());

Thread thread = new Thread(ft);

thread.start();

try {

Integer num = ft.get();

System.out.println("Results obtained:" + num);

} catch (InterruptedException | ExecutionException e) {

e.printStackTrace();

}

}

static class MyCallable implements Callable<Integer> {

@Override

public Integer call() throws Exception {

int num = 0;

for (int i = 0; i < 1000; i++) {

System.out.println("output" + i);

num += i;

}

return num;

}

}

0.4.4 multithreading stop

Use interrupt() and isInterrupted()

public static void main(String[] args) {

Thread t1 = new Thread(()->{

while (true){

try {

boolean interrupted = Thread.currentThread().isInterrupted();

if(interrupted){

System.out.println("Thread stopped");

break;

}else{

System.out.println("Thread executing");

}

} catch (Exception e) {

e.printStackTrace();

}

}

});

t1.start();

try {

Thread.sleep(2000);

t1.interrupt();

}catch (InterruptedException e){

e.printStackTrace();

}

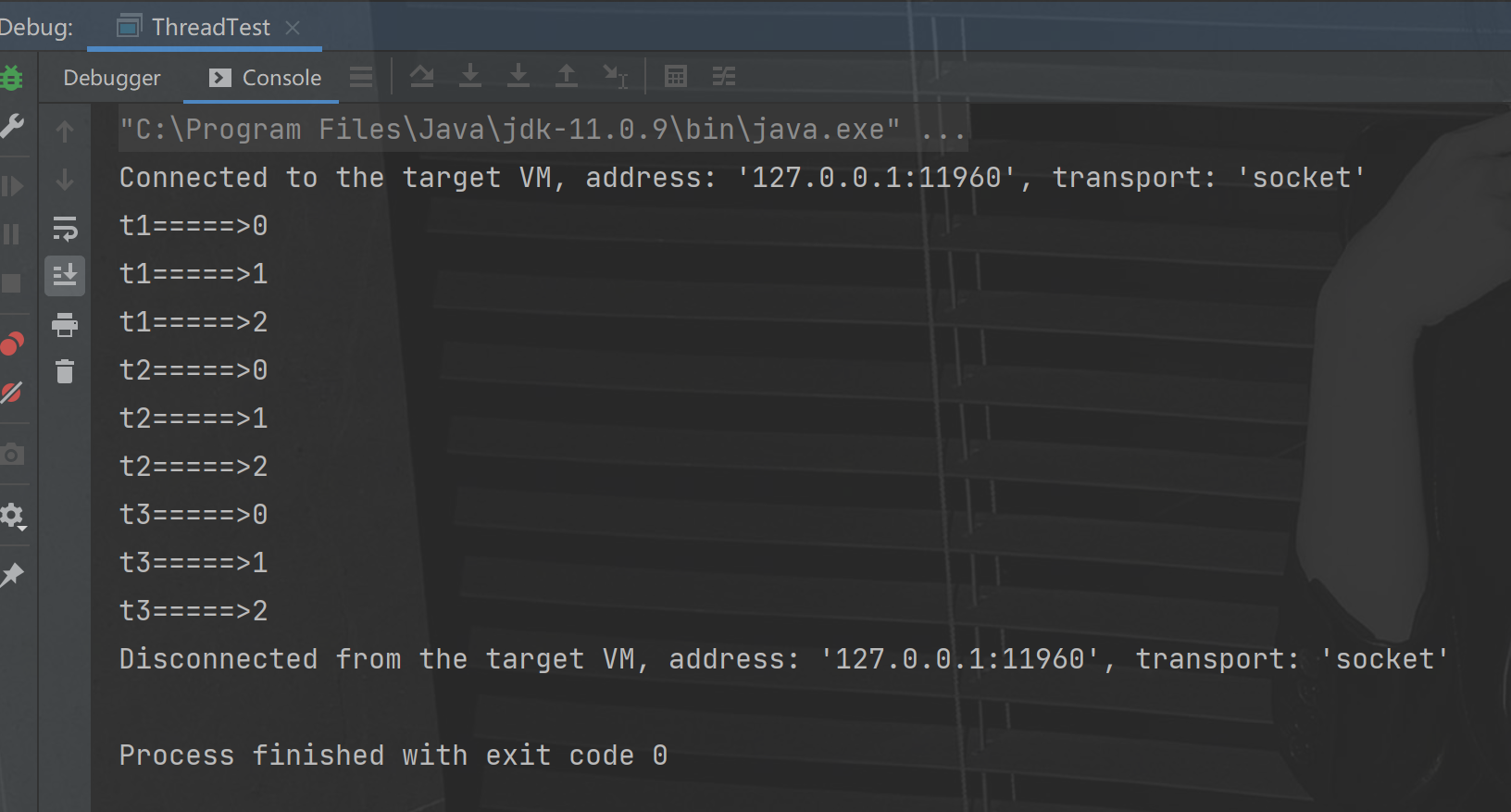

}0.4.5} control the running sequence of multithreads -- join method

Interview questions:

There are three threads: T1, T2 and T3. How do you ensure that T2 executes after T1 and T3 executes after T2

Thread method join

When one thread calls join() on another thread, the caller blocks until that other thread finishes. This can be used to control the execution order of threads.

Calling join is therefore like letting the joined thread jump the queue: the caller steps aside until it is done.

public static void main(String[] args) {

Thread t1 = new Thread(() -> {

for (int i = 0; i < 3; i++) {

System.out.println("t1=====>" + i);

}

});

Thread t2 = new Thread(() -> {

try {

t1.join();

} catch (InterruptedException e) {

e.printStackTrace();

}

for (int i = 0; i < 3; i++) {

System.out.println("t2=====>" + i);

}

});

Thread t3 = new Thread(() -> {

try {

t2.join();

} catch (InterruptedException e) {

e.printStackTrace();

}

for (int i = 0; i < 3; i++) {

System.out.println("t3=====>" + i);

}

});

t1.start();

t2.start();

t3.start();

}

1, Basic concepts and usage examples of thread pool

1.1 basic concepts

Thread pool in Java is the most used concurrent framework. Almost all programs that need to execute tasks asynchronously or concurrently can use thread pool.

In the development process, rational use of thread pool can bring three benefits.

First: reduce resource consumption

Reduce the consumption caused by thread creation and destruction by reusing the created threads.

Second: improve response speed

When the task arrives, the task can be executed immediately without waiting for the thread to be created.

Third: improve thread manageability

Threads are scarce resources. If they are created without limit, they not only consume system resources but also reduce the stability of the system. A thread pool allows them to be allocated, tuned and monitored uniformly. To use a thread pool well, however, you need to understand how it is implemented.

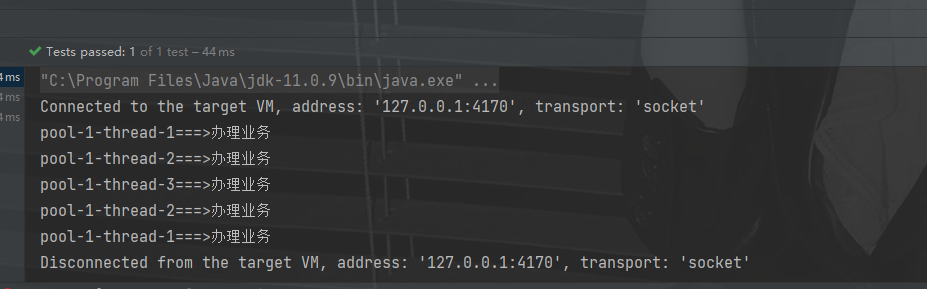

1.2 basic usage examples

public void testThread() {

//Where the thread pool factory parameter is used to create threads

ExecutorService executorService = new ThreadPoolExecutor(3, 5, 1L,

TimeUnit.SECONDS,

new ArrayBlockingQueue<>(3), Executors.defaultThreadFactory(),

new ThreadPoolExecutor.AbortPolicy());

for (int i = 0; i < 5; i++) {

executorService.execute(() -> {

System.out.println(Thread.currentThread().getName() + "===>Handle the business");

});

}

}

2, java's own thread pool tool

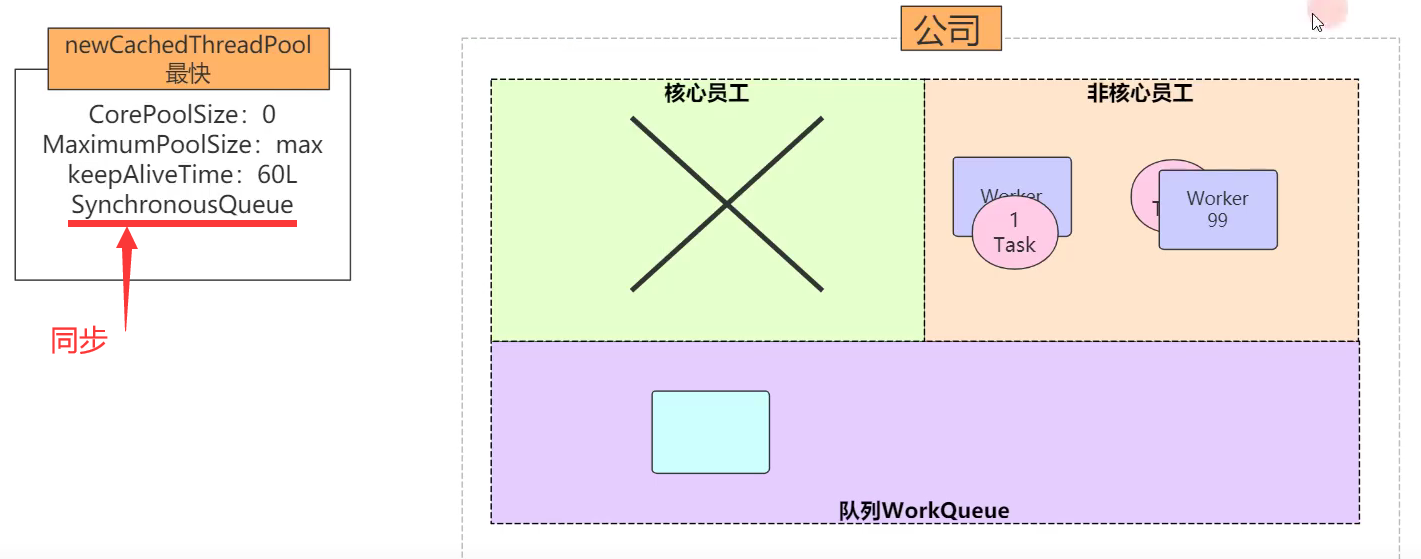

2.1 newCachedThreadPool - not recommended

2.1.1 source code

The underlying implementation uses ThreadPoolExecutor

public static ExecutorService newCachedThreadPool() {

return new ThreadPoolExecutor(0, Integer.MAX_VALUE,

60L, TimeUnit.SECONDS,

new SynchronousQueue<Runnable>());

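}

2.1.2 features

There are no core threads, and the waiting queue is a SynchronousQueue. When a task arrives and no idle thread is available, a temporary (non-core) thread is created to execute it; idle threads are reclaimed after 60 seconds.

2.1.3 problems

Tasks never pile up in a queue, so the queue itself cannot exhaust memory. However, the maximum number of threads is Integer.MAX_VALUE, so a burst of tasks can create a very large number of threads, wasting CPU (and the memory needed for each thread's stack) and potentially causing the machine to hang.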

2.2 newFixedThreadPool - not recommended

public static ExecutorService newFixedThreadPool(int nThreads) {

return new ThreadPoolExecutor(nThreads, nThreads,

0L, TimeUnit.MILLISECONDS,

new LinkedBlockingQueue<Runnable>());

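}

2.2.1 features

A fixed number of core threads and no temporary threads. The waiting queue is a LinkedBlockingQueue created without a capacity, so it is effectively unbounded (capacity Integer.MAX_VALUE).

2.2.2 problems

Because the waiting queue is effectively unbounded, tasks that arrive faster than they are processed accumulate without limit, which can cause an OutOfMemoryError.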

2.3 newSingleThreadExecutor - not recommended

public static ExecutorService newSingleThreadExecutor() {

return new FinalizableDelegatedExecutorService

(new ThreadPoolExecutor(1, 1,

0L, TimeUnit.MILLISECONDS,

new LinkedBlockingQueue<Runnable>()));

}

2.3.1 features

Creates a single-threaded pool. It uses exactly one worker thread, which guarantees that tasks are executed one at a time in submission order (FIFO, since the queue is a LinkedBlockingQueue).

There is only one core thread, and it executes the tasks in turn.

2.4 newScheduledThreadPool

Creates a thread pool with a fixed number of core threads that supports delayed and periodic task execution.

2.4.1 delayed execution

The following example is to execute the run method after 4s

public static void pool4() {

ScheduledExecutorService newScheduledThreadPool =

Executors.newScheduledThreadPool(5);

// Thread pool for delayed execution

// Parameters: task, delay, time unit

newScheduledThreadPool.schedule(new Runnable() {

public void run() {

System.out.println("i:" + 1);

}

}, 4, TimeUnit.SECONDS);

}

2.4.2 periodic tasks

In the following example a periodic task is scheduled: it first runs 3s after being scheduled and then repeats every 4s

public static void pool4() {

ScheduledExecutorService newScheduledThreadPool =

Executors.newScheduledThreadPool(5);

// Thread pool for periodic execution

// Parameters: task, initial delay, period, time unit

newScheduledThreadPool.scheduleAtFixedRate(new Runnable() {

public void run() {

System.out.println("i:" + 1);

}

}, 3, 4, TimeUnit.SECONDS);

}

III. core method and architecture of thread pool

3.1 the most basic framework of the thread pool

public interface Executor {

/**

* Executes the given command at some time in the future. The command

* may execute in a new thread, in a pooled thread, or in the calling

* thread, at the discretion of the {@code Executor} implementation.

*

* @param command the runnable task

* @throws RejectedExecutionException if this task cannot be

* accepted for execution

* @throws NullPointerException if command is null

*/

void execute(Runnable command);

}

3.2 ThreadPoolExecutor

3.2.1 ThreadPoolExecutor parameter description

- int corePoolSize: number of core threads

- int maximumPoolSize: maximum number of threads

- long keepAliveTime: keep-alive time, i.e. how long a non-core (extra) thread may stay idle waiting for a new task before it is terminated

- TimeUnit unit: time unit of keepAliveTime

- BlockingQueue<Runnable> workQueue: task queue

- ThreadFactory threadFactory: factory used to create new threads

- RejectedExecutionHandler handler: saturation (rejection) policy

3.2.2 creation sequence of thread pool tasks and threads

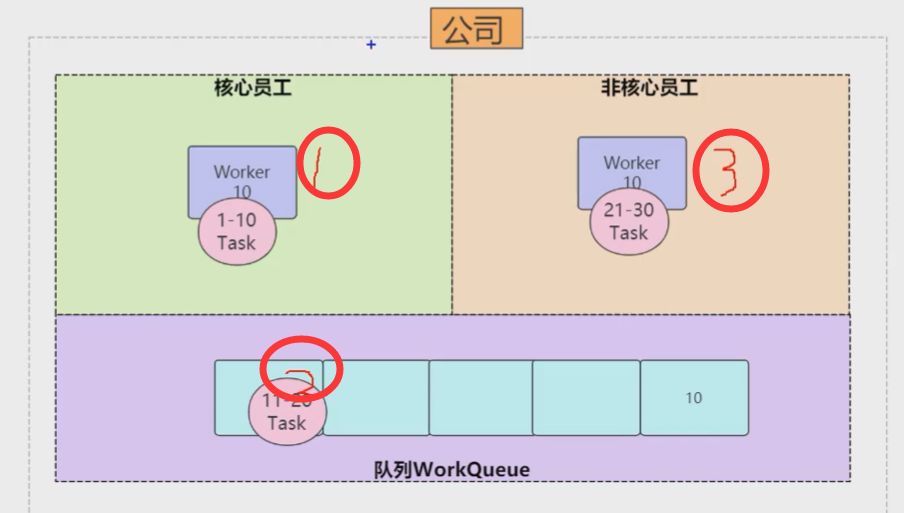

Suppose a ThreadPoolExecutor is created with 2 core threads, a waiting queue of length 10 and a maximum of 5 threads, and that no task finishes while the others arrive. As each task comes in, threads are created in the following sequence:

- When tasks 1 and 2 arrive, a core thread is created for each and executes it

- When tasks 3 to 12 arrive, the core threads are all busy, so the tasks enter the waiting queue

- When tasks 13 to 15 arrive, the core threads are busy and the waiting queue is full, so additional (non-core) threads are created to execute them

- When task 16 arrives, the whole thread pool is full, so it is handled according to the saturation policy
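The sequence can be observed with a small experiment. The sketch below assumes the same configuration (2 core threads, queue of 10, maximum of 5) and uses a hypothetical class `CreationSequenceDemo`; every task blocks on a latch so that none finishes while the others are being submitted.

```java
import java.util.Arrays;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical demo class, not part of the original article.
class CreationSequenceDemo {
    /** Submits 16 blocking tasks and returns {poolSize, queueSize, rejectedCount}. */
    static int[] run() {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                2, 5, 1L, TimeUnit.SECONDS,
                new ArrayBlockingQueue<>(10),
                new ThreadPoolExecutor.AbortPolicy());
        CountDownLatch release = new CountDownLatch(1);
        int rejected = 0;
        for (int i = 1; i <= 16; i++) {
            try {
                pool.execute(() -> {
                    try {
                        release.await(); // keep the worker busy until all tasks are submitted
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                });
            } catch (RejectedExecutionException e) {
                rejected++; // AbortPolicy throws once threads and queue are both full
            }
        }
        int[] snapshot = {pool.getPoolSize(), pool.getQueue().size(), rejected};
        release.countDown(); // let the blocked tasks finish
        pool.shutdown();
        return snapshot;
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(run())); // [5, 10, 1]
    }
}
```

The snapshot shows 5 threads, 10 queued tasks and 1 rejection, matching tasks 1 to 2, 3 to 12, 13 to 15 and 16 in the list above.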

3.3 three queues of thread pool

3.3.1 SynchronousQueue

SynchronousQueue has no capacity. It is an unbuffered blocking queue that does not store elements: each task is handed directly to a consumer, and a new element can be added only once the previous one has been taken.

Using SynchronousQueue as the work queue generally requires an unbounded maximumPoolSize, so that new tasks are not rejected.

- When no producer is offering a task, an attempt to take one blocks;

- When no consumer is waiting to take a task, an attempt to put one blocks
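The hand-off behaviour can be checked directly on the queue, outside any thread pool. This is a minimal sketch with a hypothetical class `SynchronousQueueDemo`; the method names are illustrative.

```java
import java.util.concurrent.SynchronousQueue;
import java.util.concurrent.TimeUnit;

// Hypothetical demo class, not part of the original article.
class SynchronousQueueDemo {
    /** offer() does not block; with no consumer waiting it fails immediately. */
    static boolean offerWithoutConsumer() {
        SynchronousQueue<String> queue = new SynchronousQueue<>();
        return queue.offer("task");
    }

    /** A blocked put() completes as soon as another thread takes the element. */
    static String handOff() {
        SynchronousQueue<String> queue = new SynchronousQueue<>();
        Thread producer = new Thread(() -> {
            try {
                queue.put("task"); // blocks until a consumer arrives
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        try {
            String value = queue.poll(5, TimeUnit.SECONDS); // wait for the producer's element
            producer.join();
            return value;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return null;
        }
    }

    public static void main(String[] args) {
        System.out.println(offerWithoutConsumer()); // false
        System.out.println(handOff());              // task
    }
}
```

This is why ThreadPoolExecutor, which submits with offer(), creates a new thread (or rejects the task) whenever no idle worker is waiting on a SynchronousQueue.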

3.3.2 LinkedBlockingQueue

LinkedBlockingQueue is a buffered waiting queue that is unbounded unless a capacity is specified.

When the number of running threads reaches corePoolSize, further tasks wait in the blocking queue (so with an unbounded queue maximumPoolSize has no effect). This suits tasks that are completely independent of one another.

Producers and consumers use separate locks to control data synchronization, so under high concurrency they can operate on the queue in parallel.

3.3.3 ArrayBlockingQueue

ArrayBlockingQueue is a bounded buffered waiting queue. You specify the capacity when creating it.

When the number of running threads equals corePoolSize, further tasks are buffered in the ArrayBlockingQueue and are executed when a thread becomes idle.

When the ArrayBlockingQueue is full, the task cannot be queued, so a new thread is created to execute it.

When the number of threads has already reached maximumPoolSize and the queue is full, a new task is rejected and handled by the rejection policy (by default a RejectedExecutionException is thrown).

3.4 four rejection strategies of thread pool

/* Predefined RejectedExecutionHandlers */

/**

* A handler for rejected tasks that runs the rejected task

* directly in the calling thread of the {@code execute} method,

* unless the executor has been shut down, in which case the task

* is discarded.

*/

// Does not discard the task: the thread that called execute (for example main) runs the task itself

public static class CallerRunsPolicy implements RejectedExecutionHandler {

/**

* Creates a {@code CallerRunsPolicy}.

*/

public CallerRunsPolicy() { }

/**

* Executes task r in the caller's thread, unless the executor

* has been shut down, in which case the task is discarded.

*

* @param r the runnable task requested to be executed

* @param e the executor attempting to execute this task

*/

public void rejectedExecution(Runnable r, ThreadPoolExecutor e) {

if (!e.isShutdown()) {

r.run();

}

}

}

/**

* A handler for rejected tasks that throws a

* {@link RejectedExecutionException}.

*

* This is the default handler for {@link ThreadPoolExecutor} and

* {@link ScheduledThreadPoolExecutor}.

*/

// Throw an exception and discard the task

public static class AbortPolicy implements RejectedExecutionHandler {

/**

* Creates an {@code AbortPolicy}.

*/

public AbortPolicy() { }

/**

* Always throws RejectedExecutionException.

*

* @param r the runnable task requested to be executed

* @param e the executor attempting to execute this task

* @throws RejectedExecutionException always

*/

public void rejectedExecution(Runnable r, ThreadPoolExecutor e) {

throw new RejectedExecutionException("Task " + r.toString() +

" rejected from " +

e.toString());

}

}

/**

* A handler for rejected tasks that silently discards the

* rejected task.

*/

// Silently discard the rejected task, i.e. the one that was just submitted

public static class DiscardPolicy implements RejectedExecutionHandler {

/**

* Creates a {@code DiscardPolicy}.

*/

public DiscardPolicy() { }

/**

* Does nothing, which has the effect of discarding task r.

*

* @param r the runnable task requested to be executed

* @param e the executor attempting to execute this task

*/

public void rejectedExecution(Runnable r, ThreadPoolExecutor e) {

}

}

/**

* A handler for rejected tasks that discards the oldest unhandled

* request and then retries {@code execute}, unless the executor

* is shut down, in which case the task is discarded.

*/

// Discard the task that has waited longest in the queue, then retry submitting the new task

public static class DiscardOldestPolicy implements RejectedExecutionHandler {

/**

* Creates a {@code DiscardOldestPolicy} for the given executor.

*/

public DiscardOldestPolicy() { }

/**

* Obtains and ignores the next task that the executor

* would otherwise execute, if one is immediately available,

* and then retries execution of task r, unless the executor

* is shut down, in which case task r is instead discarded.

*

* @param r the runnable task requested to be executed

* @param e the executor attempting to execute this task

*/

public void rejectedExecution(Runnable r, ThreadPoolExecutor e) {

if (!e.isShutdown()) {

e.getQueue().poll();

e.execute(r);

}

}

}

3.5 close thread pool

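// Stop accepting new tasks; tasks already submitted (including queued ones) still run to completion

executor.shutdown();

// Try to stop immediately: interrupt running tasks and return the queued tasks that were never started

executor.shutdownNow();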
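Neither call waits for the pool to finish. A common pattern, sketched here as one possible approach rather than the only one, combines the two with awaitTermination. The class `ShutdownDemo` and the helper `shutdownGracefully` are hypothetical names.

```java
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

// Hypothetical demo class, not part of the original article.
class ShutdownDemo {
    /** Returns true if the executor terminated within the given timeouts. */
    static boolean shutdownGracefully(ExecutorService executor, long timeoutSeconds) {
        executor.shutdown(); // stop accepting new tasks, let submitted ones finish
        try {
            if (executor.awaitTermination(timeoutSeconds, TimeUnit.SECONDS)) {
                return true;
            }
            // Timed out: interrupt running tasks and drop the ones still queued.
            List<Runnable> neverStarted = executor.shutdownNow();
            System.out.println("Tasks never started: " + neverStarted.size());
            return executor.awaitTermination(timeoutSeconds, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            executor.shutdownNow();
            Thread.currentThread().interrupt(); // preserve the interrupt status
            return false;
        }
    }

    public static void main(String[] args) {
        ExecutorService executor = Executors.newFixedThreadPool(2);
        for (int i = 0; i < 4; i++) {
            executor.execute(() -> System.out.println(Thread.currentThread().getName() + " working"));
        }
        System.out.println("terminated: " + shutdownGracefully(executor, 5));
    }
}
```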

4, Thread pool workflow

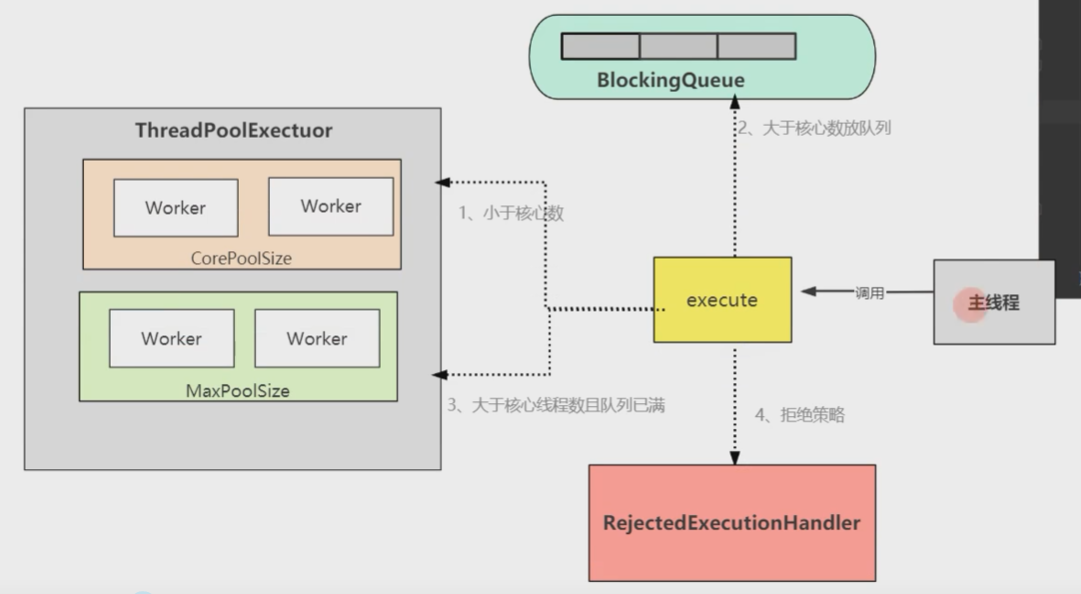

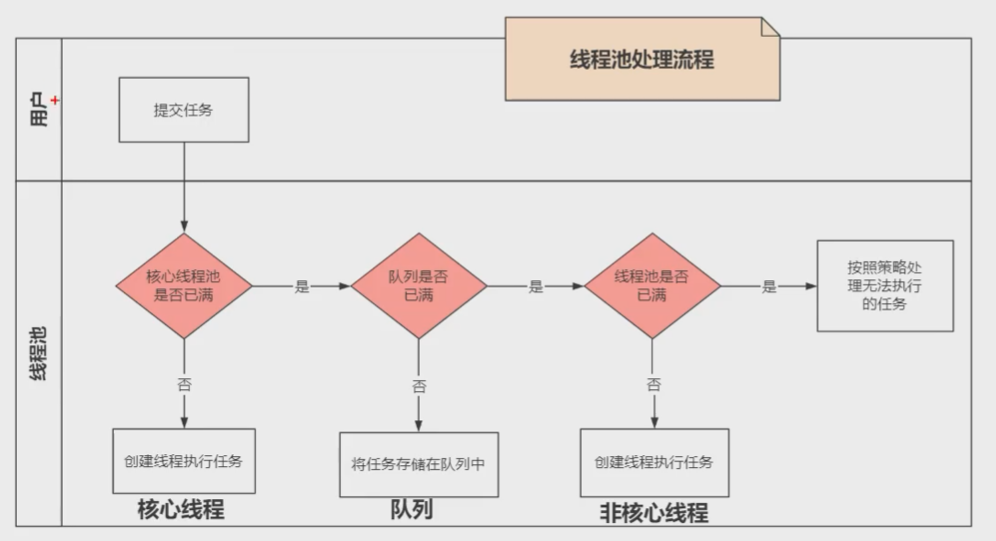

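4.1 workflow of thread pool

- Check whether the number of worker threads is below the number of core threads; if so, create a core thread to run the task

- Otherwise, check whether the task can be added to the task queue

- If the queue is full, check whether the number of threads is below the maximum; if so, create an extra thread to run the task

- Otherwise, handle the task according to the rejection policy of the thread pool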

4.2 submission priority and execution priority

4.2.1 raising questions

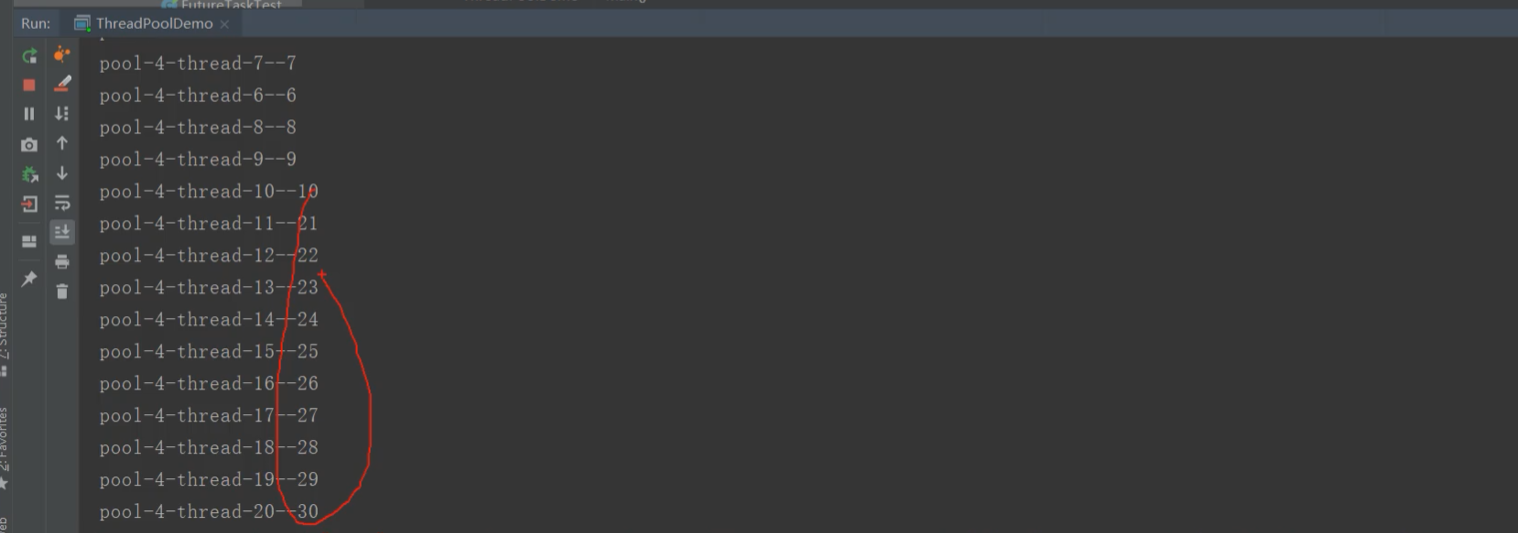

Using the thread pool, set the number of core threads to 10 and the maximum number of threads to 20. When the tasks run, the results are not printed in submission order but as shown in the figure: after 10, the output jumps directly to 21

4.2.2 submission priority and execution priority of thread pool

The priority order of thread pool submission is: core thread > waiting queue > additional thread

The execution priority is: core thread > extra thread > wait queue

Therefore, the order of output data is 1-10, 21-30 and then 11-20
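The same effect can be reproduced deterministically on a smaller scale. The sketch below is an assumption-laden miniature (1 core thread, a queue of 1, a maximum of 2 threads, hypothetical class `PriorityOrderDemo`): task 1 goes to the core thread, task 2 is queued, and task 3 gets an extra thread, so task 2 is always the last to start.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical demo class, not part of the original article.
class PriorityOrderDemo {
    /** Returns the task ids in the order in which they started running. */
    static List<Integer> run() {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 2, 1L, TimeUnit.SECONDS, new ArrayBlockingQueue<>(1));
        List<Integer> startOrder = Collections.synchronizedList(new ArrayList<>());
        CountDownLatch twoStarted = new CountDownLatch(2);
        CountDownLatch release = new CountDownLatch(1);
        for (int i = 1; i <= 3; i++) {
            final int id = i;
            pool.execute(() -> {
                startOrder.add(id);
                twoStarted.countDown();
                try {
                    release.await(); // hold the thread so the queued task cannot start yet
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
        }
        try {
            twoStarted.await();   // tasks 1 and 3 are running, task 2 is still queued
            release.countDown();  // free the threads so task 2 can run
            pool.shutdown();
            pool.awaitTermination(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return new ArrayList<>(startOrder);
    }

    public static void main(String[] args) {
        System.out.println(run()); // task 2 is always last
    }
}
```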

4.2.3 source code verification

Source code of execute method in ThreadPoolExecutor class

public void execute(Runnable command) {

if (command == null)

throw new NullPointerException();

/*

* Proceed in 3 steps:

*

* 1. If fewer than corePoolSize threads are running, try to

* start a new thread with the given command as its first

* task. The call to addWorker atomically checks runState and

* workerCount, and so prevents false alarms that would add

* threads when it shouldn't, by returning false.

*

* 2. If a task can be successfully queued, then we still need

* to double-check whether we should have added a thread

* (because existing ones died since last checking) or that

* the pool shut down since entry into this method. So we

* recheck state and if necessary roll back the enqueuing if

* stopped, or start a new thread if there are none.

*

* 3. If we cannot queue task, then we try to add a new

* thread. If it fails, we know we are shut down or saturated

* and so reject the task.

*/

int c = ctl.get();

if (workerCountOf(c) < corePoolSize) {

if (addWorker(command, true))

return;

c = ctl.get();

}

if (isRunning(c) && workQueue.offer(command)) {

int recheck = ctl.get();

if (! isRunning(recheck) && remove(command))

reject(command);

else if (workerCountOf(recheck) == 0)

addWorker(null, false);

}

else if (!addWorker(command, false))

reject(command);

}

Code analysis

4.3 thread pool processing flow

5, JVM memory model -- why thread safety issues occur

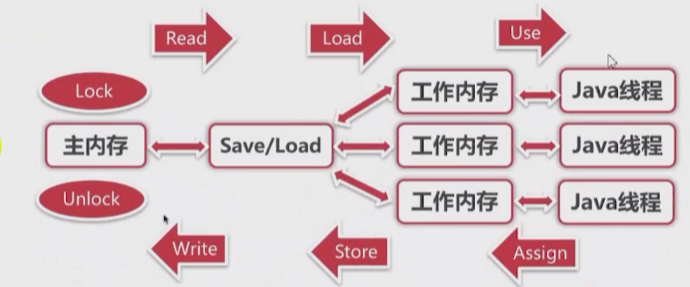

Java Memory Model (JMM for short).

JMM itself is an abstract concept rather than a physical structure. It is a set of rules or specifications that define how the variables in a program (instance fields, static fields and the elements of array objects) are accessed.

Since the running entity of the JVM is a thread, the JVM creates a working memory (called stack space in some places) for each thread when it is created, to store thread-private data.

The Java memory model specifies:

All variables are stored in main memory, which is a shared memory area that can be accessed by all threads.

A thread's operations on a variable (reading, assignment, etc.) must be carried out in its working memory: the thread first copies the variable from main memory into its own working memory, operates on the copy, and then writes the variable back to main memory. It cannot operate on the variable in main memory directly; the working memory holds copies of the variables in main memory.

As mentioned earlier, the working memory is the private data area of each thread, so threads cannot access each other's working memory. Communication (value transfer) between threads must go through main memory. The brief access process is as follows:

VI. three features of java Concurrent Programming

Because of the Java memory model and the design of the Java language, concurrent programs often run into the following problems. They are usually called the three characteristics of concurrent programming:

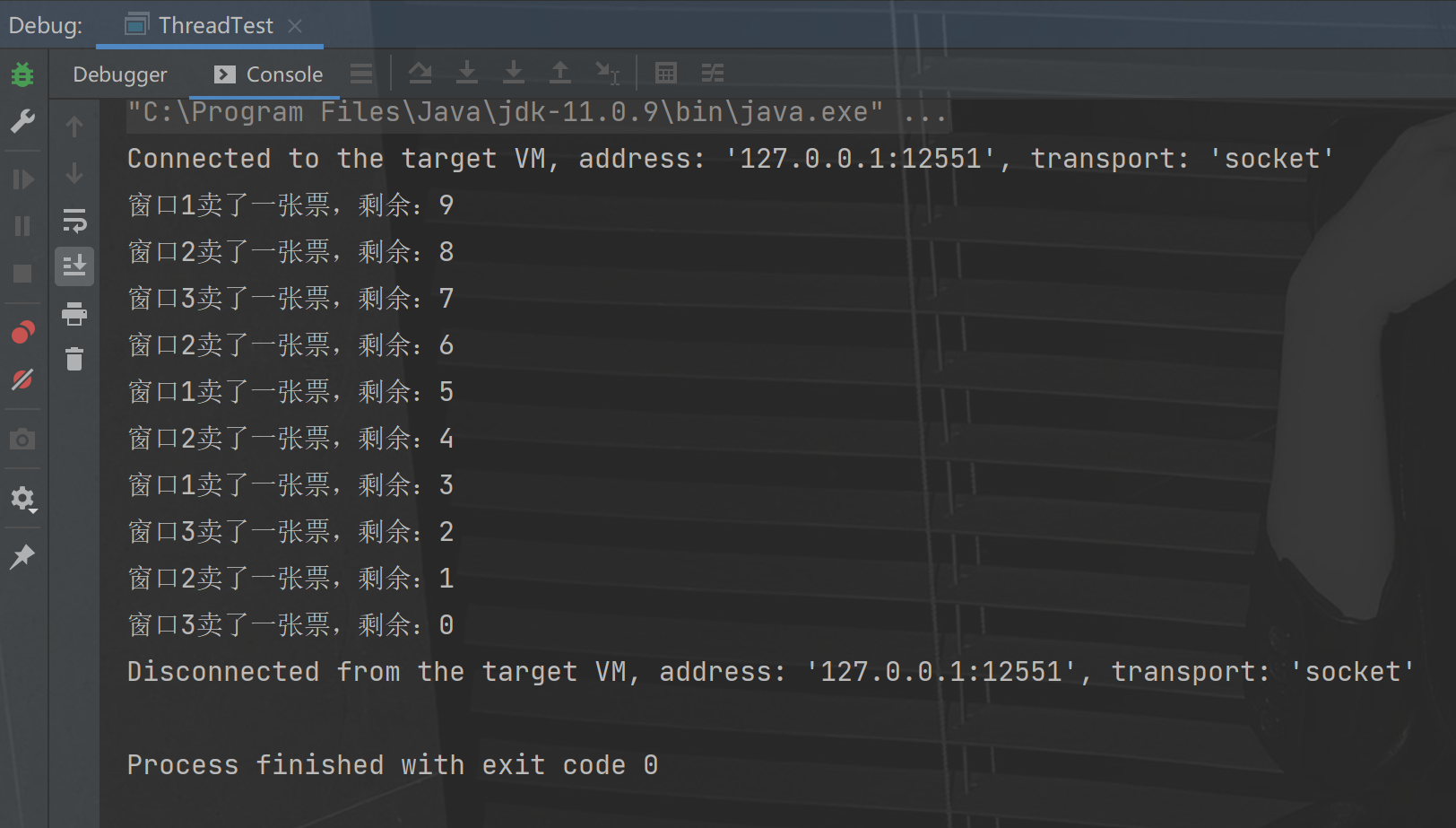

6.1 atomicity

6.1.1 basic concepts

Atomicity means that one or more operations either all execute without being interrupted part-way, or do not execute at all. (Access is mutually exclusive: only one thread can perform the operation at a time.)

It can be ensured by locking.

6.1.2 code example

package rudy.study.language.thread;

/**

* @author rudy

* @date 2021/7/18 18:33

*/

public class ThreadTest {

static int ticket = 10;

public static void main(String[] args) {

Object o = new Object();

Runnable runnable = () -> {

while (true) {

try {

Thread.sleep(1);

} catch (InterruptedException e) {

e.printStackTrace();

}

//When using synchronized, you need to use an object as a lock

synchronized (o) {

if (ticket > 0) {

ticket--;

System.out.println(Thread.currentThread().getName() +

"Sold a ticket, the rest:" + ticket);

} else {

break;

}

}

}

};

Thread t1 = new Thread(runnable,"Window 1");

Thread t2 = new Thread(runnable,"Window 2");

Thread t3 = new Thread(runnable,"Window 3");

t1.start();

t2.start();

t3.start();

}

}

6.2 visibility

6.2.1 basic concepts

Visibility means that when multiple threads access the same variable and one thread modifies its value, the other threads can immediately see the modified value.

If two threads run on different CPUs and thread 1 has changed the value of i but not yet flushed it to main memory, then thread 2, on reading i, may still get the old value: thread 2 does not see thread 1's modification.

This is the problem of visibility.

6.2.2 example code of visibility problem

package rudy.study.language.thread;

/**

* @author rudy

* @date 2021/7/18 18:33

*/

public class ThreadTest {

private static boolean flag = true;

public static void main(String[] args) throws InterruptedException {

new Thread(() -> {

System.out.println("1 Thread 1 starts and executes while loop");

long num = 0;

while (flag) {

num++;

}

System.out.println("1 Thread 1 executes, num=" + num);

}).start();

Thread.sleep(1);

new Thread(() -> {

System.out.println("Thread 2 starts and changes the variable flag to false");

setStop();

}).start();

}

public static void setStop() {

flag = false;

}

}

6.3 ordering

When compiling and executing code, the compiler and processor may reorder instructions as an optimization, so the actual execution order may differ from the order written in the source.

6.4 volatile keyword

volatile makes writes to a variable visible to other threads, and it also prevents reordering around accesses to that variable.

6.4.1 volatile keyword - ensure the visibility of variables

When one thread modifies a volatile variable, other threads can immediately see the new value.

When a thread modifies a volatile variable, the virtual machine forces the new value to be written back to main memory.

When the value of a volatile variable in main memory is updated, the virtual machine forces every thread that uses the variable to read the new value.
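Applied to the kind of spinning loop that shows the visibility problem, declaring the flag volatile is enough for the loop to observe the change. The following is a minimal sketch with a hypothetical class `VolatileFlagDemo`; the timeout values are arbitrary.

```java
// Hypothetical demo class, not part of the original article.
class VolatileFlagDemo {
    // volatile: a write by one thread becomes visible to the spinning thread
    private static volatile boolean flag = true;

    /** Returns true if the spinning thread stopped within the given time. */
    static boolean stopsWithin(long millis) {
        flag = true;
        Thread spinner = new Thread(() -> {
            long num = 0;
            while (flag) { // re-reads the volatile field on every iteration
                num++;
            }
            System.out.println("Spinner finished, num=" + num);
        });
        spinner.setDaemon(true); // do not keep the JVM alive if something goes wrong
        spinner.start();
        try {
            Thread.sleep(10);
            flag = false;         // visible to the spinner because flag is volatile
            spinner.join(millis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return !spinner.isAlive();
    }

    public static void main(String[] args) {
        System.out.println("stopped: " + stopsWithin(5000));
    }
}
```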

6.4.2 volatile keyword - mask instruction reordering

Instruction reordering is an optimization performed by the compiler and the processor. It guarantees that the result of the program is correct within a single thread, but not that operations happen in the order the code is written.

This is not a problem in a single thread, but it can be a problem with multiple threads.

A classic example is the double-checked locking singleton, where the instance field is declared volatile to prevent instruction reordering.

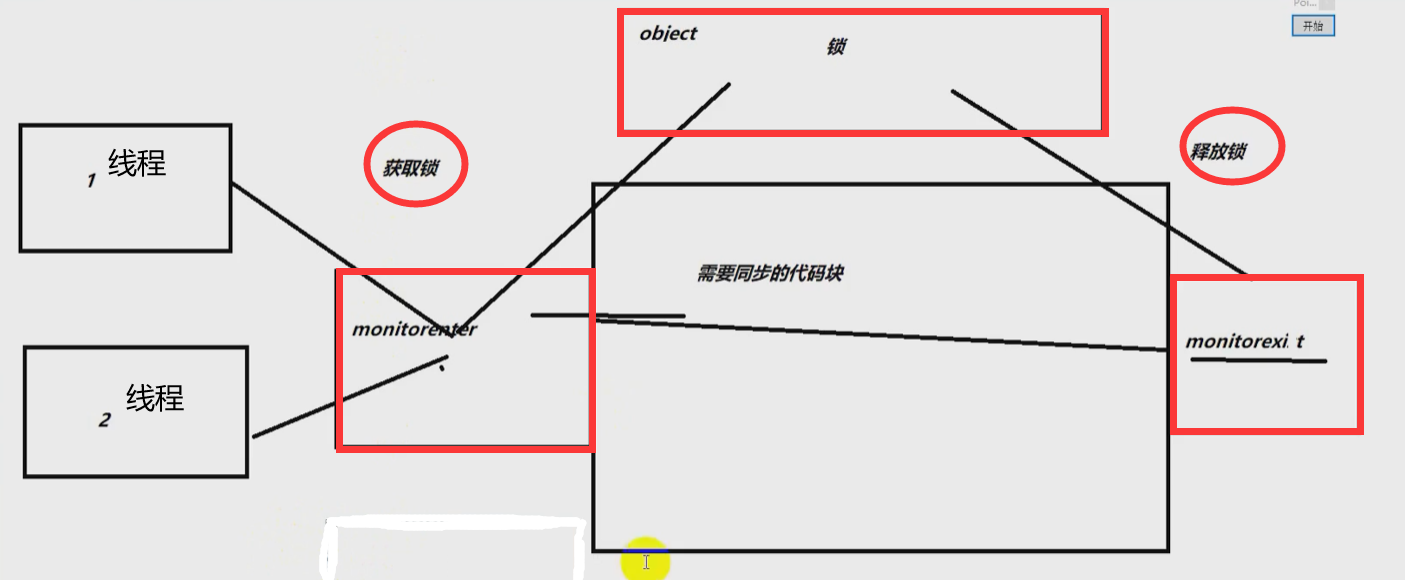
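In text form, a common shape of the double-checked locking singleton looks like the sketch below. The class name `LazySingleton` is hypothetical, and this is one widely used form of the pattern, not necessarily the exact code in the figure.

```java
// Hypothetical singleton class illustrating double-checked locking.
class LazySingleton {
    // volatile prevents another thread from seeing a reference to a
    // partially constructed object if construction and assignment are reordered
    private static volatile LazySingleton instance;

    private LazySingleton() { }

    static LazySingleton getInstance() {
        LazySingleton local = instance;      // first check, without locking
        if (local == null) {
            synchronized (LazySingleton.class) {
                local = instance;            // second check, under the lock
                if (local == null) {
                    local = new LazySingleton();
                    instance = local;
                }
            }
        }
        return local;
    }

    public static void main(String[] args) {
        System.out.println(getInstance() == getInstance()); // true
    }
}
```

Without volatile, the write of the reference could become visible before the constructor's writes, which is the reordering hazard described above.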

6.5 keyword synchronized

6.5.1 basic concepts

- It ensures that only one thread at a time can execute a given method or code block

- synchronized also makes a thread's changes visible to other threads, so it provides the visibility guarantee that volatile gives

synchronized always uses an object as the lock

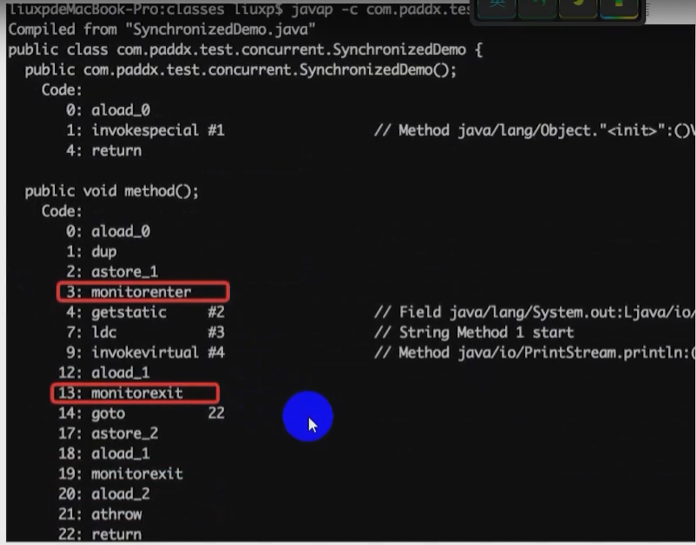

6.5.2 basic principle of synchronized:

The lock is implemented by the JVM.

The JVM synchronizes methods and blocks by entering and exiting the object's monitor.

Specifically, after compilation a monitorenter instruction is inserted at the start of a synchronized block, and monitorexit instructions are inserted at its normal exit and at its exception exit. (A synchronized method is marked with the ACC_SYNCHRONIZED flag instead, and the JVM enters and exits the monitor implicitly.)

In essence, a thread acquires the object's monitor, and this acquisition is exclusive, so only one thread can hold it at a time. A thread that fails to acquire the lock blocks at the entry until the owning thread executes monitorexit, and then tries again to acquire the lock.

6.6 Lock

Since JDK 1.5, the Lock interface (and related implementation classes) has been part of the concurrency package (java.util.concurrent.locks). It provides synchronization similar to the synchronized keyword, but the lock must be acquired and released manually.

6.6.1 Lock usage

Lock lock = new ReentrantLock();

lock.lock();

try {

// Operations that need to be thread safe go here

} finally {

// Release the lock in finally so it is released even if an exception is thrown

// Acquire the lock before the try block, not inside it: if lock() threw inside the try, finally would call unlock() on a lock that was never acquired

lock.unlock();

}

6.6.2 advantages of lock over synchronized

1. tryLock is supported to try to obtain the lock

Lock has a tryLock method. When tryLock fails, the thread can go on to do other things instead of blocking

With synchronized, the thread must wait until the lock is released

2. Support read / write lock
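The first advantage can be illustrated with ReentrantLock.tryLock(). In this minimal sketch (hypothetical class `TryLockDemo`), a second thread tries to take a lock and reports whether it succeeded, instead of blocking.

```java
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical demo class, not part of the original article.
class TryLockDemo {
    /** Starts another thread and reports whether its tryLock() succeeded. */
    static boolean otherThreadCanLock(ReentrantLock lock) {
        boolean[] acquired = new boolean[1];
        Thread other = new Thread(() -> {
            if (lock.tryLock()) {          // returns immediately, never blocks
                try {
                    acquired[0] = true;
                } finally {
                    lock.unlock();
                }
            } else {
                // Lock is busy: the thread is free to do other work here.
                acquired[0] = false;
            }
        });
        other.start();
        try {
            other.join(); // join makes the write to acquired[0] visible here
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return acquired[0];
    }

    public static void main(String[] args) {
        ReentrantLock lock = new ReentrantLock();
        lock.lock();
        try {
            System.out.println(otherThreadCanLock(lock)); // false: main holds the lock
        } finally {
            lock.unlock();
        }
        System.out.println(otherThreadCanLock(lock));     // true: the lock is free
    }
}
```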

6.6.3 difference between lock and synchronized

1) Lock is an interface, while synchronized is a keyword in Java

synchronized is built into the language. The synchronized keyword can directly modify methods or code blocks, while a Lock can only guard a region of code through explicit lock() and unlock() calls

2) synchronized automatically releases the lock held by the thread when an exception occurs

so an exception cannot leave the lock held forever. With Lock, if an exception occurs and the lock is not actively released through unlock(), other threads may wait forever. Therefore, when using Lock, release it in a finally block;

3) Lock allows threads waiting for a lock to respond to interrupts (lockInterruptibly), but synchronized does not

With synchronized, a waiting thread keeps waiting and cannot respond to interrupts;

4) With Lock you can find out whether the lock was acquired successfully (tryLock), but with synchronized you cannot.

5) Lock can improve the efficiency of concurrent reads by multiple threads (ReadWriteLock).

In terms of performance, when contention is low the two perform similarly. Under very heavy contention (a large number of threads competing at the same time), Lock has historically performed better than synchronized, although JVM optimizations of synchronized since JDK 6 have narrowed the gap

Therefore, choose between them according to the actual situation.

7, Inter thread communication in concurrent programming

7.1 basic concepts

7.1.1 wait,notify

When multiple threads work on the same resource but perform different tasks, they need to communicate in order to coordinate their use of the shared variable.

So we introduce the wait/wake-up mechanism: wait() and notify()

wait(), notify() and notifyAll() are three methods defined in the Object class, which can be used to control the state of threads.

All three methods eventually call JVM-level native methods, so there may be some differences depending on the platform the JVM runs on.

- When a thread calls wait() on an object, it releases that object's lock and enters the waiting state.

- When notify() is called on an object, one thread waiting on that object is woken up to continue running.

- When notifyAll() is called on an object, all threads waiting on that object are woken up to continue running.

Note: a call to wait() must be placed in a synchronized method or synchronized block. wait() releases the lock, so the calling thread must hold the lock first; otherwise an IllegalMonitorStateException is thrown. The same applies to notify() and notifyAll().

7.1.2 difference between wait and sleep

The sleep() method belongs to the Thread class, and the wait() method belongs to the Object class

During a call to sleep(), the thread does not release the object lock.

When wait() is called, the thread gives up the object lock and enters the wait set of the object. Only after notify() (or notifyAll()) is called on the object does the thread compete for the object lock again, and it resumes running once it has reacquired the lock.

The sleep() method suspends the thread for the specified time and yields the CPU to other threads, but the thread keeps any locks it holds. When the time expires, it becomes runnable again automatically.

7.2 practical interview questions

7.2.1 two threads, printing alternately 1 ~ 100

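Thread A prints odd numbers and thread B prints even numbers. Note that the counter in the code below starts at 0 and the loops stop below 100, so the output actually runs from 0 to 99.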

package rudy.study.language.thread;

import lombok.AllArgsConstructor;

/**

* @author rudy

* @date 2021/7/18 18:33

*/

public class ThreadTest {

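public static void main(String[] args) {

NumObj numObj = new NumObj();

numObj.num = 0;

new Thread(new JiNum(numObj)).start();

new Thread(new OuNum(numObj)).start();

}

// NumObj is not shown in the original listing; this minimal definition is an assumption

// It holds the shared counter and also serves as the lock object for wait/notify

static class NumObj {

int num;

}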

@AllArgsConstructor

static class JiNum implements Runnable {

private NumObj numObj;

@Override

public void run() {

while (true) {

synchronized (numObj) {

if (numObj.num < 100) {

if (numObj.num % 2 != 0) {

System.out.println("Odd number==>" + numObj.num);

numObj.num++;

numObj.notify();

} else {

try {

numObj.wait();

} catch (InterruptedException e) {

e.printStackTrace();

}

}

} else {

break;

}

}

}

}

}

@AllArgsConstructor

static class OuNum implements Runnable {

private NumObj numObj;

@Override

public void run() {

while (true) {

synchronized (numObj) {

if (numObj.num < 100) {

if (numObj.num % 2 == 0) {

System.out.println("even numbers==>" + numObj.num);

numObj.num++;

numObj.notify();

} else {

try {

numObj.wait();

} catch (InterruptedException e) {

e.printStackTrace();

}

}

} else {

break;

}

}

}

}

}

}
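For comparison, here is a compact variant that prints exactly 1 to 100 and needs no Lombok. It is a sketch under stated assumptions: the class `AlternatePrintDemo`, the helper `worker` and the output format `A:1`, `B:2` are all illustrative choices, and the two near-identical Runnable classes are folded into one method parameterized by parity.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical demo class, not part of the original article.
class AlternatePrintDemo {
    /** Creates a thread that emits the numbers whose remainder mod 2 equals parity. */
    static Thread worker(String name, int parity, int max, int[] next, Object lock, List<String> out) {
        return new Thread(() -> {
            synchronized (lock) {
                while (next[0] <= max) {
                    if (next[0] % 2 == parity) {
                        out.add(name + ":" + next[0]); // our turn: emit and advance
                        next[0]++;
                        lock.notify();                 // wake the other thread
                    } else {
                        try {
                            lock.wait();               // not our turn: release the lock and wait
                        } catch (InterruptedException e) {
                            Thread.currentThread().interrupt();
                            return;
                        }
                    }
                }
            }
        }, name);
    }

    /** Runs threads A (odd) and B (even) and returns the lines in emission order. */
    static List<String> run(int max) {
        Object lock = new Object();
        int[] next = {1};                       // shared counter, guarded by lock
        List<String> out = new ArrayList<>();   // guarded by lock while the threads run
        Thread a = worker("A", 1, max, next, lock, out);
        Thread b = worker("B", 0, max, next, lock, out);
        a.start();
        b.start();
        try {
            a.join();
            b.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return out;
    }

    public static void main(String[] args) {
        run(100).forEach(System.out::println);
    }
}
```

The condition is re-checked in a loop after every wait(), so the code stays correct even if a thread wakes up spuriously.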