Contents

1.2 introduction to Java Memory Model

2.2 optimistic lock and pessimistic lock

2.4 exclusive lock and shared lock

2.5 reentrant lock and non reentrant lock

3, Synchronization mechanisms in Java

4, Waiting and notification mechanism between threads

4.1 waiting / notification mechanism of implicit lock

4.1.1 wait and notify/notifyAll methods

4.1.2 application of wait / notify

4.1.3 recommended usage of waiting / notification mechanism of implicit lock

4.2 waiting / notification mechanism of explicit lock

1, Synchronization in Java

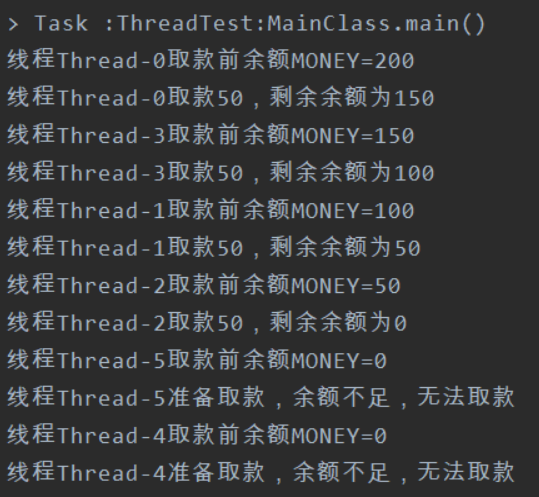

What happens if multiple threads modify, delete, or read the same data at the same time? The data seen by each thread may not be up to date, which causes many problems. In the following example we simulate withdrawing money: we define a MONEY variable with a value of 200 and then start 6 threads, each of which tries to withdraw 50. The code is as follows:

package com.example.threadtest;

import static com.example.threadtest.MainClass.MONEY;

public class MyThread extends Thread {

@Override

public void run() {

super.run();

getMoney();

}

private void getMoney() {

String threadName = getName();

System.out.println("thread "+threadName+"Balance before withdrawal MONEY="+MONEY);

if (MONEY>0) {

MONEY=MONEY-50;

System.out.println("thread "+threadName+"Withdraw 50 and the remaining balance is"+MONEY);

}else {

System.out.println("thread "+threadName+"Ready to withdraw, insufficient balance, unable to withdraw");

}

}

}

package com.example.threadtest;

import java.util.concurrent.locks.ReentrantLock;

public class MainClass {

public static int MONEY=200;

public static void main(String[] args) throws Exception {

//Open six threads to withdraw money

MyThread myThread=new MyThread();

MyThread myThread2=new MyThread();

MyThread myThread3=new MyThread();

MyThread myThread4=new MyThread();

MyThread myThread5=new MyThread();

MyThread myThread6=new MyThread();

myThread.start();

myThread2.start();

myThread3.start();

myThread4.start();

myThread5.start();

myThread6.start();

}

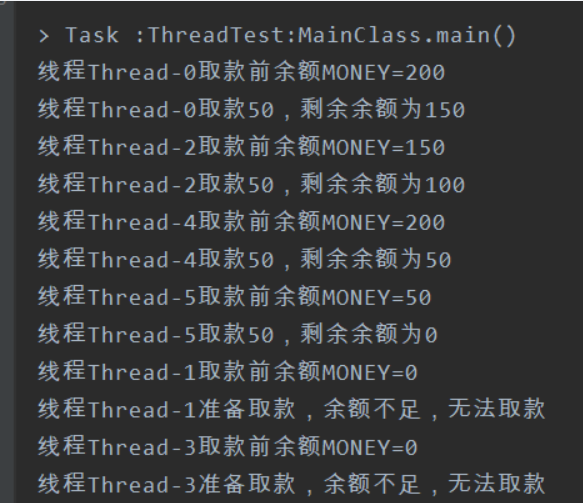

}After the code is executed, the printed results are as follows:

We can see that multiple threads operate on the MONEY field at the same time. Thread 0 and thread 2 have each withdrawn 50, yet when thread 4 reads the balance it still sees 200. This is a synchronization problem.

To solve such problems, Java provides a variety of mechanisms for synchronizing multiple threads.

1.1 several concepts

Atomicity: similar to the atomicity of database transactions. An operation (which may consist of several sub-operations) is either executed completely or not executed at all.

Visibility: when multiple threads access a shared variable concurrently, a modification made by one thread can be seen immediately by the other threads.

Reading data from main memory is relatively slow for a CPU, so mainstream computers have several levels of cache. When a thread reads a shared variable, the variable is loaded into the cache of the CPU the thread runs on. After the thread modifies the variable, the CPU updates its cache immediately but does not necessarily write the value back to main memory right away (the time of the write-back is unpredictable). If other threads (especially threads running on a different CPU) access the variable in the meantime, they read the old value from main memory rather than the value written by the first thread.

Ordering: the program is executed in the order in which the code is written.

Take the following code as an example:

boolean started = false; // Statement 1

In terms of code order, the four statements should be executed one after another, but when the JVM actually runs this code there is no guarantee that they execute in that order. To improve overall efficiency, the compiler and the processor may optimize the code, and one such optimization is reordering instructions into a more efficient sequence. Although the CPU does not guarantee that the program runs exactly in code order, it does guarantee that, within a single thread, the final result is the same as if the code had run in order. Other threads, however, may observe the writes in a different order.
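Reordering can be illustrated with a small sketch. The class name ReorderSketch and the field value are hypothetical names chosen for this illustration; they are not the article's own listing. On a single thread the result always matches code order, and the comments note what another thread could observe without synchronization.

```java
// Hypothetical sketch: names are illustrative, not taken from the article's listing.
class ReorderSketch {
    static int value = 0;            // plain (non-volatile) field
    static boolean started = false;  // plain (non-volatile) flag

    // Two independent writes. Neither depends on the other, so the compiler
    // or CPU is allowed to perform them in either order.
    static void writer() {
        value = 42;
        started = true;
    }

    // On the same thread, after writer(), this returns 42 (as-if-serial semantics).
    // On another thread without synchronization, it could observe
    // started == true while value is still 0.
    static int reader() {
        return started ? value : -1;
    }

    public static void main(String[] args) {
        writer();
        System.out.println(reader()); // single-threaded: prints 42
    }
}
```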

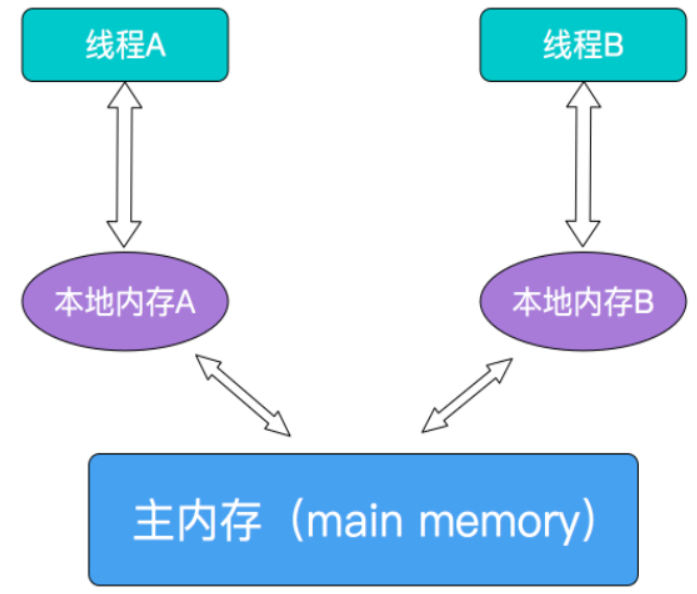

1.2 introduction to Java Memory Model

In Java, we can think of there being a memory area shared by all threads, usually called main memory. In addition, each thread has its own private local memory. When a thread needs data from main memory, it first copies the data into its local memory. All operations a thread performs on variables take place in its private local memory rather than directly on the variables in main memory. The following figure is a schematic diagram of the Java memory model. Note that main memory and local memory here are abstract concepts and do not necessarily correspond to the real CPU cache and physical memory.

If the tasks of multiple threads operate on the same shared data, then because each thread first copies the data into its own local memory, the copy held by one thread may be the value from before another thread's modification, which can lead to serious problems. To prevent this, Java provides a number of locking and synchronization mechanisms.

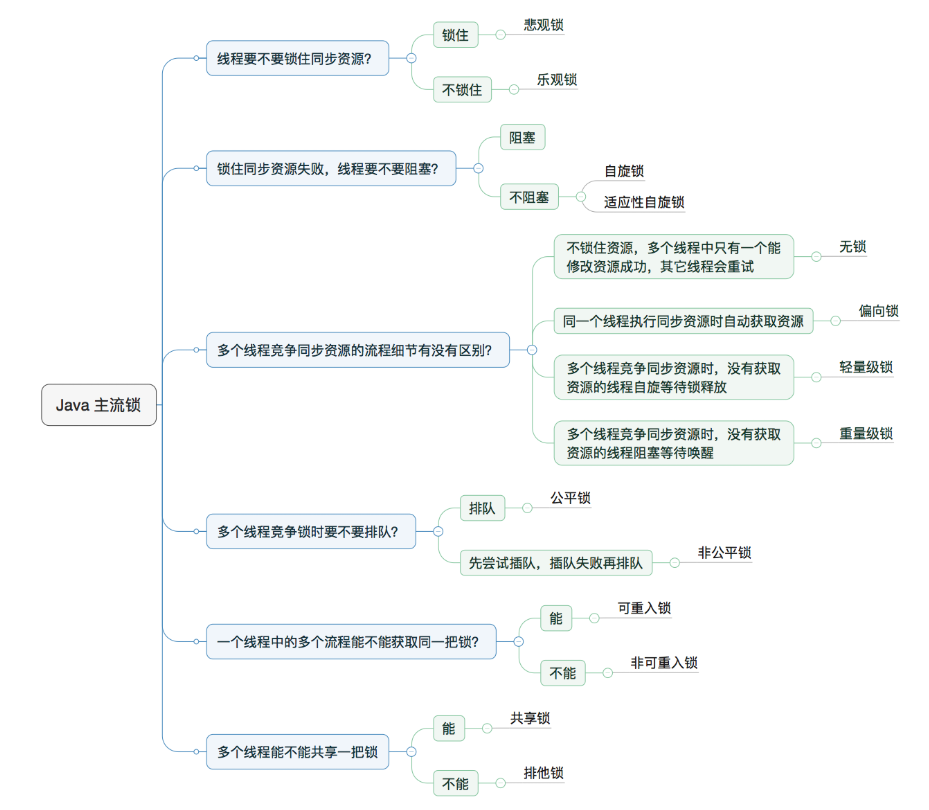

2, Lock

2.1 concept of lock

Locks are everywhere in daily life. A bathroom, for example, holds only one person. When someone goes in, they lock the door so that nobody else can enter. When they are done, they unlock it, and the next person can go in. The same is true in Java: when multiple threads need to operate on a shared resource, a thread can acquire a lock before the operation and release it afterwards, so the synchronization problem described above does not occur. In a multi-threaded environment, locks are an essential component. There are many kinds of locks with different functions and use cases, and they can be classified from different angles. The common classifications are introduced below.

2.2 optimistic lock and pessimistic lock

In real life, some people are optimistic and expect the best of everything, while others are pessimistic and expect the worst. Locks are the same: they are divided into optimistic locks and pessimistic locks.

Optimistic lock: always assume the best case. Each time a thread reads the data, it assumes that no one else will modify it, so it does not lock. When the thread wants to update the data, it checks whether another thread has updated the data in the meantime. If so, it fetches the latest data and tries again. If there are too many write operations, it may keep retrying, which hurts efficiency. Because reads never take a lock, an optimistic lock suits scenarios with many reads and few writes, where it can improve throughput.

Pessimistic lock: always assume the worst case. When thread A reads the data, it assumes that other threads will modify it, so it locks first. If thread B then wants the data, B is blocked until A releases the lock and B acquires it (the shared resource is used by only one thread at a time, other threads are blocked, and the resource is handed over after use). In other words, to ensure safety, even read operations may be blocked, so a pessimistic lock suits scenarios with few reads and many writes.
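The check-then-retry behaviour of an optimistic lock can be sketched with a compare-and-set (CAS) loop on AtomicInteger. This is only an illustration under assumptions: the class OptimisticCounter and its methods are hypothetical names, and CAS is one common way to realize optimistic locking in Java, not the only one.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical sketch of optimistic updating with a CAS retry loop.
class OptimisticCounter {
    private static final AtomicInteger counter = new AtomicInteger(0);

    // Read without locking, then update only if nobody changed the value
    // in the meantime; otherwise re-read and retry.
    static void increment() {
        while (true) {
            int current = counter.get();
            int next = current + 1;
            if (counter.compareAndSet(current, next)) {
                return; // no conflicting update happened
            }
            // another thread updated the value first: loop and retry
        }
    }

    // Starts the given number of threads, waits for them, returns the final count.
    static int runThreads(int threads, int perThread) {
        counter.set(0);
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    increment();
                }
            });
            workers[i].start();
        }
        for (Thread t : workers) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return counter.get();
    }

    public static void main(String[] args) {
        System.out.println(runThreads(4, 1000)); // prints 4000
    }
}
```

No update is lost even though no thread ever blocks. Under heavy write contention, however, the retry loop spins more often, which is the efficiency cost mentioned above.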

2.3 fair lock and unfair lock

Life offers many examples of fairness and unfairness. Suppose we go to the bank. If everyone believes in absolute fairness, each customer takes a number in order of arrival, first come, first served, and waits to be called. When our number comes up, a clerk serves us. In reality there is rarely absolute fairness, because some people always try to cut in line: as soon as they enter the bank, they go straight to the counter. If nobody objects, the queue jumper is served without taking a number or waiting. If people do object, the queue jumper has to go to the back, take a number, and wait to be called. Locks work the same way and are divided into fair locks and unfair locks.

Fair lock: threads obtain the lock in the order in which they requested it. A thread goes straight into the queue, and only the thread at the head of the queue can obtain the lock. In other words, everyone follows the rules, queues honestly, and never cuts in line.

Advantages: because fairness is strictly enforced, every thread that queues will reach the head of the queue sooner or later. All threads eventually get the resource, and none starves in the queue.

Disadvantages: every thread except the one at the head of the queue is blocked. Each time the lock is released, the next thread must be woken up before it can use the resource, and waking a blocked thread is expensive.

Unfair lock: when a thread wants the lock, it first tries to grab it directly. If that succeeds, it holds the lock immediately. If it fails, it joins the waiting queue. In other words, whoever needs the lock first tries to cut in at the front. If nobody objects, the queue jump succeeds. If someone refuses to give way, the thread goes obediently to the back of the queue.

Advantages: each thread first tries to "jump the queue". If that succeeds, it can use the resource directly, so not every thread has to wait passively to be woken up. This reduces the overhead of waking threads and gives higher overall throughput.

Disadvantages: if some threads keep jumping the queue successfully, this is very unfair to the threads waiting in the queue. They may not get the lock for a long time, or ever, which leads to starvation.
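In Java, the choice between the two policies is made when the lock is constructed. The following minimal sketch uses ReentrantLock, whose boolean constructor argument selects fairness. The class FairnessSketch and the helper newLock are hypothetical names used only for illustration.

```java
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical sketch: choosing a fair or unfair ReentrantLock.
class FairnessSketch {
    // fair == true  -> waiting threads acquire the lock in request order
    // fair == false -> a newly arriving thread may "jump the queue"
    static ReentrantLock newLock(boolean fair) {
        return new ReentrantLock(fair);
    }

    public static void main(String[] args) {
        ReentrantLock defaultLock = new ReentrantLock(); // no argument: unfair
        System.out.println(defaultLock.isFair());    // prints false
        System.out.println(newLock(true).isFair());  // prints true
    }
}
```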

2.4 exclusive lock and shared lock

Exclusive lock: also known as a mutual-exclusion lock, it can be held by only one thread at a time. If thread T places an exclusive lock on data A, other threads cannot place any type of lock on A. The thread that holds the exclusive lock can both read and modify the data.

ReentrantLock and synchronized are exclusive locks.

Shared lock: a lock that can be held by multiple threads. If thread T places a shared lock on data A, other threads can only place shared locks on A, not an exclusive lock. A thread that holds a shared lock can only read the data and cannot modify it. In java.util.concurrent, both exclusive and shared locks are built on AQS (AbstractQueuedSynchronizer), which supports an exclusive mode and a shared mode.

ReentrantReadWriteLock: its read lock is a shared lock and its write lock is an exclusive lock. Sharing the read lock keeps concurrent reads efficient, while read/write, write/read, and write/write access are mutually exclusive.

2.5 reentrant lock and non reentrant lock

Reentrant lock: also known as a recursive lock. When a thread has acquired the lock in an outer method, an inner method called by the same thread acquires the lock automatically (provided the lock object is the same object or class) and is not blocked, even though the lock was acquired earlier and has not yet been released. ReentrantLock and synchronized are both reentrant.

Non-reentrant lock: within the same thread, even if the outer method has acquired the lock, the inner method cannot acquire it automatically and must wait for the lock to be released. But the lock is held by the current thread itself, which cannot release it while it waits, so a deadlock occurs.
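Reentrancy can be observed directly through the hold count of a ReentrantLock. The sketch below is illustrative only: the class ReentrancySketch and the methods outer and inner are hypothetical names.

```java
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical sketch: the same thread acquires the same lock twice without blocking.
class ReentrancySketch {
    private static final ReentrantLock LOCK = new ReentrantLock();

    static int outer() {
        LOCK.lock();              // first acquisition by this thread
        try {
            return inner();       // inner() locks again while the lock is still held
        } finally {
            LOCK.unlock();
        }
    }

    static int inner() {
        LOCK.lock();              // re-entry: succeeds immediately, hold count becomes 2
        try {
            return LOCK.getHoldCount();
        } finally {
            LOCK.unlock();        // hold count drops back to 1
        }
    }

    public static void main(String[] args) {
        System.out.println(outer()); // prints 2
    }
}
```

With a non-reentrant lock, the second lock() call in inner() would wait forever for a lock that its own thread holds.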

Besides the classifications described above, there are many other ways to classify locks. Here is a classification map found through Baidu (I forgot the original source; I will attach a link when I find it):

3, Synchronization mechanisms in Java

There are many lock implementations in Java and many ways to ensure synchronization. Some typical ones are introduced below.

3.1 ReentrantLock

ReentrantLock, as its name suggests, is a reentrant lock, and it is also an exclusive lock. As its constructor shows, it also lets you choose between fair and unfair acquisition. The default is an unfair lock.

public ReentrantLock(boolean var1) {

this.sync = (ReentrantLock.Sync)(var1 ? new ReentrantLock.FairSync() : new ReentrantLock.NonfairSync());

}

We can use ReentrantLock to protect a block of code together with try/finally: acquire the lock before try, put the code to be protected inside try, and always release the lock in finally. The basic usage is as follows:

Lock lock = new ReentrantLock();

lock.lock();

try {

// update object state

}

finally {

lock.unlock();

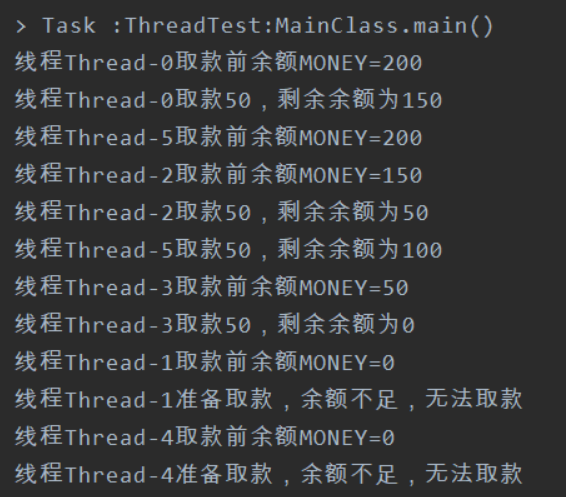

}Let's use ReentrantLock to protect the money withdrawal method mentioned above, that is, lock the money withdrawal operation to ensure that only one thread is withdrawing money at the same time to see if there will be any exceptions in the result.

package com.example.threadtest;

import java.util.concurrent.locks.ReentrantLock;

public class MainClass {

public static int MONEY=200;

public static ReentrantLock MYLOCK=new ReentrantLock();

public static void main(String[] args) throws Exception {

MyThread myThread=new MyThread();

MyThread myThread2=new MyThread();

MyThread myThread3=new MyThread();

MyThread myThread4=new MyThread();

MyThread myThread5=new MyThread();

MyThread myThread6=new MyThread();

myThread.start();

myThread2.start();

myThread3.start();

myThread4.start();

myThread5.start();

myThread6.start();

}

}

package com.example.threadtest;

import static com.example.threadtest.MainClass.MONEY;

import static com.example.threadtest.MainClass.MYLOCK;

public class MyThread extends Thread {

@Override

public void run() {

super.run();

MYLOCK.lock();

try {

getMoney();

} finally {

MYLOCK.unlock();

}

}

private void getMoney() {

String threadName = getName();

System.out.println("thread " + threadName + "Balance before withdrawal MONEY=" + MONEY);

if (MONEY >= 50) {

MONEY = MONEY - 50;

System.out.println("thread " + threadName + "Withdraw 50 and the remaining balance is" + MONEY);

} else {

System.out.println("thread " + threadName + "Ready to withdraw, insufficient balance, unable to withdraw");

}

}

}

It can be seen from the log that after the money withdrawal operation is protected by ReentrantLock, there is no abnormal data, and only one thread can "withdraw money" at the same time.

3.2 synchronized

synchronized is a Java keyword that provides an implicit lock. It can be used in the following ways:

1. Modify a code block. The modified code block is called a synchronized block. Its scope is the code enclosed in braces {}, and the lock is the object named in the parentheses (here, the object that executes the block);

synchronized (this){

getMoney();

}

2. Modify a method. The modified method is called a synchronized method. Its scope is the whole method, and the lock is the object on which the method is called;

private synchronized void getMoney() {

String threadName = getName();

System.out.println("thread " + threadName + "Balance before withdrawal MONEY=" + MONEY);

if (MONEY >= 50) {

MONEY = MONEY - 50;

System.out.println("thread " + threadName + "Withdraw 50 and the remaining balance is" + MONEY);

} else {

System.out.println("thread " + threadName + "Ready to withdraw, insufficient balance, unable to withdraw");

}

}

3. Modify a static method. Its scope is the whole static method, and the lock is the Class object, so it applies to all objects of this class;

private static synchronized void getMoney() {

String threadName = Thread.currentThread().getName();

System.out.println("thread " + threadName + "Balance before withdrawal MONEY=" + MONEY);

if (MONEY >= 50) {

MONEY = MONEY - 50;

System.out.println("thread " + threadName + "Withdraw 50 and the remaining balance is" + MONEY);

} else {

System.out.println("thread " + threadName + "Ready to withdraw, insufficient balance, unable to withdraw");

}

}

4. Modify a class. Its scope is the code enclosed in the braces after synchronized(...), and the lock is the Class object, so it applies to all objects of this class.

private void getMoney() {

synchronized (MyThread.class){

String threadName = getName();

System.out.println("thread " + threadName + "Balance before withdrawal MONEY=" + MONEY);

if (MONEY >= 50) {

MONEY = MONEY - 50;

System.out.println("thread " + threadName + "Withdraw 50 and the remaining balance is" + MONEY);

} else {

System.out.println("thread " + threadName + "Ready to withdraw, insufficient balance, unable to withdraw");

}

}

}

Next, we apply synchronized to the money withdrawal example to see the effect. First, we add synchronized directly in front of the method. The main class is the same as in the ReentrantLock example above:

package com.example.threadtest;

import static com.example.threadtest.MainClass.MONEY;

public class MyThread extends Thread {

@Override

public void run() {

super.run();

getMoney();

}

private synchronized void getMoney() {

String threadName = getName();

System.out.println("thread " + threadName + "Balance before withdrawal MONEY=" + MONEY);

if (MONEY >= 50) {

MONEY = MONEY - 50;

System.out.println("thread " + threadName + "Withdraw 50 and the remaining balance is" + MONEY);

} else {

System.out.println("thread " + threadName + "Ready to withdraw, insufficient balance, unable to withdraw");

}

}

}

The log shows that multiple threads still read the data at the same time. This is because getMoney is an instance method: six MyThread objects are created in the main class, and each call to getMoney locks a different MyThread object, so the calls are not mutually exclusive. We should make getMoney a static synchronized method so that all thread objects compete for the same class-level lock. Alternatively, we can declare a static object, use it as the lock, and put the logic to be protected inside a synchronized block:

package com.example.threadtest;

import static com.example.threadtest.MainClass.MONEY;

public class MyThread extends Thread {

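// A dedicated lock object; a String literal is interned and could be shared with unrelated code
public static final Object LOCK = new Object();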

@Override

public void run() {

super.run();

synchronized (LOCK){

getMoney();

}

}

private void getMoney() {

String threadName = getName();

System.out.println("thread " + threadName + "Balance before withdrawal MONEY=" + MONEY);

if (MONEY >= 50) {

MONEY = MONEY - 50;

System.out.println("thread " + threadName + "Withdraw 50 and the remaining balance is" + MONEY);

} else {

System.out.println("thread " + threadName + "Ready to withdraw, insufficient balance, unable to withdraw");

}

}

}
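The effect of a single shared lock can be checked with a self-contained variant of the withdrawal example that waits for all threads and then inspects the result. This is a sketch under assumptions: the class SharedLockWithdrawal, its fields, and the run helper are hypothetical names, and the balance is kept in the sketch itself rather than in MainClass.

```java
// Hypothetical sketch: six threads withdraw 50 each from a balance of 200,
// all synchronizing on the same static lock object.
class SharedLockWithdrawal {
    private static final Object LOCK = new Object();
    private static int money = 200;
    private static int withdrawals = 0;

    static void withdraw() {
        synchronized (LOCK) {         // the same lock for every thread
            if (money >= 50) {
                money -= 50;
                withdrawals++;
            }
        }
    }

    // Returns {final balance, number of successful withdrawals}.
    static int[] run(int threads) {
        synchronized (LOCK) {
            money = 200;
            withdrawals = 0;
        }
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(SharedLockWithdrawal::withdraw);
            workers[i].start();
        }
        for (Thread t : workers) {
            try {
                t.join();             // wait until every withdrawal attempt is done
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        synchronized (LOCK) {
            return new int[] {money, withdrawals};
        }
    }

    public static void main(String[] args) {
        int[] result = run(6);
        // prints balance=0, withdrawals=4
        System.out.println("balance=" + result[0] + ", withdrawals=" + result[1]);
    }
}
```

Because the check and the update happen under one lock, exactly four of the six attempts succeed and the balance never goes negative.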

3.3 ReentrantReadWriteLock

The ReentrantLock described above is an exclusive lock that allows only one thread to access the resource at a time. The read-write lock introduced next allows multiple reader threads to access it at the same time, but while a writer thread holds the lock, all reader threads and other writer threads are blocked. A read-write lock maintains a pair of locks, a read lock and a write lock. Separating the two greatly improves concurrency compared with an ordinary exclusive lock.

Read-write locks generally perform better than exclusive locks because most scenarios involve many reads and few writes, and in that case a read-write lock offers better concurrency and throughput. ReentrantReadWriteLock in the java.util.concurrent.locks package is Java's implementation of a read-write lock. The following example shows how to use it. The code creates 10 threads, 5 that acquire the read lock and 5 that acquire the write lock. After acquiring the lock, each thread sleeps for 5 seconds.

package com.example.threadtest;

import java.time.LocalDateTime;

import java.util.concurrent.locks.Lock;

import java.util.concurrent.locks.ReentrantReadWriteLock;

public class ReadAndWriteLockDemo {

public static ReentrantReadWriteLock readWriteLock = new ReentrantReadWriteLock();

public static Lock readLock = readWriteLock.readLock();

public static Lock writeLock = readWriteLock.writeLock();

public static void main(String[] args) {

for(int i = 0;i < 10;i++){

if(i%2 == 0){

Thread readThread = new Thread(new Runnable() {

@Override

public void run() {

System.out.println(LocalDateTime.now() + ":"+Thread.currentThread().getName() + "***Request for read lock***");

// Acquire the lock before try, so finally only unlocks a lock that is actually held
readLock.lock();

try {

System.out.println(LocalDateTime.now() + ":"+Thread.currentThread().getName() + "***Got the read lock***");

Thread.sleep(1000*5);

} catch (InterruptedException e) {

e.printStackTrace();

} finally {

System.out.println(LocalDateTime.now() + ":"+Thread.currentThread().getName() + "***The read lock was released***");

readLock.unlock();

}

}

});

readThread.start();

}else{

Thread writeThread = new Thread(new Runnable() {

@Override

public void run() {

System.out.println(LocalDateTime.now() + ":"+Thread.currentThread().getName() + "---Request for write lock---");

// Acquire the lock before try, so finally only unlocks a lock that is actually held
writeLock.lock();

try {

System.out.println(LocalDateTime.now() + ":"+Thread.currentThread().getName() + "---Got a write lock---");

Thread.sleep(1000*5);

} catch (InterruptedException e) {

e.printStackTrace();

} finally {

System.out.println(LocalDateTime.now() + ":"+Thread.currentThread().getName() + "---The write lock was released---");

writeLock.unlock();

}

}

});

writeThread.start();

}

}

}

}

The log printed after running is as follows:

> Task :ThreadTest:ReadAndWriteLockDemo.main()

2021-07-13T16:03:29.687:Thread-1---Request for write lock---

2021-07-13T16:03:29.687:Thread-6***Request for read lock***

2021-07-13T16:03:29.687:Thread-8***Request for read lock***

2021-07-13T16:03:29.687:Thread-3---Request for write lock---

2021-07-13T16:03:29.687:Thread-7---Request for write lock---

2021-07-13T16:03:29.687:Thread-2***Request for read lock***

2021-07-13T16:03:29.687:Thread-5---Request for write lock---

2021-07-13T16:03:29.687:Thread-4***Request for read lock***

2021-07-13T16:03:29.687:Thread-0***Request for read lock***

2021-07-13T16:03:29.687:Thread-9---Request for write lock---

2021-07-13T16:03:29.687:Thread-1---Got a write lock---

2021-07-13T16:03:34.700:Thread-1---The write lock was released---

2021-07-13T16:03:34.700:Thread-6***Got the read lock***

2021-07-13T16:03:34.701:Thread-8***Got the read lock***

2021-07-13T16:03:39.708:Thread-6***The read lock was released***

2021-07-13T16:03:39.708:Thread-8***The read lock was released***

2021-07-13T16:03:39.708:Thread-3---Got a write lock---

2021-07-13T16:03:44.721:Thread-3---The write lock was released---

2021-07-13T16:03:44.721:Thread-7---Got a write lock---

2021-07-13T16:03:49.721:Thread-7---The write lock was released---

2021-07-13T16:03:49.721:Thread-2***Got the read lock***

2021-07-13T16:03:54.735:Thread-2***The read lock was released***

2021-07-13T16:03:54.735:Thread-5---Got a write lock---

2021-07-13T16:03:59.738:Thread-5---The write lock was released---

2021-07-13T16:03:59.738:Thread-4***Got the read lock***

2021-07-13T16:03:59.738:Thread-0***Got the read lock***

2021-07-13T16:04:04.746:Thread-0***The read lock was released***

2021-07-13T16:04:04.746:Thread-4***The read lock was released***

2021-07-13T16:04:04.746:Thread-9---Got a write lock---

2021-07-13T16:04:09.761:Thread-9---The write lock was released---

The output log shows that multiple threads can hold the read lock at the same time, but only one thread can hold the write lock at a time.
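The same sharing rules can be probed without sleeping by using tryLock, which returns immediately. In this sketch the main thread holds the read lock while a second thread tries both locks. The class ReadWriteTryLockSketch and the method probe are hypothetical names used only for illustration.

```java
import java.util.concurrent.locks.ReentrantReadWriteLock;

// Hypothetical sketch: while one thread holds the read lock, another thread
// can also take the read lock but cannot take the write lock.
class ReadWriteTryLockSketch {
    // Returns {read lock acquired by second thread, write lock acquired by second thread}.
    static boolean[] probe() {
        ReentrantReadWriteLock rw = new ReentrantReadWriteLock();
        boolean[] result = new boolean[2];
        rw.readLock().lock();                       // calling thread holds the read lock
        try {
            Thread other = new Thread(() -> {
                result[0] = rw.readLock().tryLock();   // shared: succeeds
                if (result[0]) {
                    rw.readLock().unlock();
                }
                result[1] = rw.writeLock().tryLock();  // exclusive: fails while a reader exists
                if (result[1]) {
                    rw.writeLock().unlock();
                }
            });
            other.start();
            try {
                other.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        } finally {
            rw.readLock().unlock();
        }
        return result;
    }

    public static void main(String[] args) {
        boolean[] r = probe();
        System.out.println("read=" + r[0] + ", write=" + r[1]); // prints read=true, write=false
    }
}
```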

3.4 volatile

Suppose there is a shared variable. Thread A modifies it and the new value is stored in thread A's local memory, but it has not yet been synchronized to main memory. Thread B cached the old value earlier, so the two threads see different values for the same variable. The locks described above can certainly avoid this problem. However, if thread B only wants to read the latest value of the shared variable at any time, using a lock is too "heavy" and wastes performance. In that case the volatile keyword is a more reasonable choice. When a thread writes to a volatile variable, the value in that thread's local memory is immediately flushed to main memory, and the copies of the variable cached by other threads are invalidated. The effect is that a volatile variable is always read from main memory and always written back to main memory when modified. Note that volatile guarantees visibility and ordering but not atomicity, so compound operations such as count++ still need a lock or an atomic class.
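A typical lightweight use of volatile is a stop flag that one thread writes and another thread polls. The following sketch assumes hypothetical names (VolatileFlagSketch, stop, workerStopsWithin) and is meant only to illustrate the visibility guarantee.

```java
// Hypothetical sketch: a volatile flag written by one thread and read by another.
class VolatileFlagSketch {
    // volatile: the worker is guaranteed to see the write made by the caller
    private static volatile boolean stop = false;

    // Starts a worker that spins until stop becomes true, sets the flag,
    // and reports whether the worker terminated within the timeout.
    static boolean workerStopsWithin(long timeoutMillis) {
        stop = false;
        Thread worker = new Thread(() -> {
            while (!stop) {
                // busy-wait; each iteration re-reads the volatile field
            }
        });
        worker.setDaemon(true); // do not keep the JVM alive if something goes wrong
        worker.start();
        stop = true;            // volatile write: becomes visible to the worker
        try {
            worker.join(timeoutMillis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return !worker.isAlive();
    }

    public static void main(String[] args) {
        System.out.println(workerStopsWithin(2000)); // prints true
    }
}
```

If stop were a plain field, the JIT compiler would be allowed to hoist the read out of the loop, and the worker might never notice the change.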

A classic use of the volatile keyword is to combine it with a lock to implement a double-checked locking singleton.

When implementing the singleton pattern without considering multithreading, beginners often write the following code:

package com.example.threadtest;

public class SingleClass {

private static SingleClass singleInstance;

// private, so that instances can only be created through getInstance
private SingleClass(String threadName) {

System.out.println(threadName + "---------------------A singleton is created------------------------");

}

public static SingleClass getInstance(String threadName) {

if (singleInstance == null) {

singleInstance = new SingleClass(threadName);

}

return singleInstance;

}

}

With this code, if multiple threads call getInstance at the same time, several of them may evaluate if (singleInstance == null) before any thread has finished creating the instance, so each of them executes singleInstance = new SingleClass(threadName);. As a result, multiple instances are created and the singleton pattern loses its meaning.

To avoid this, it is natural to use the synchronized keyword described above to lock the code that creates the instance, which gives the following code:

package com.example.threadtest;

public class SingleClass {

private static SingleClass singleInstance;

// private, so that instances can only be created through getInstance
private SingleClass(String threadName) {

System.out.println(threadName + "---------------------A singleton is created------------------------");

}

public static SingleClass getInstance(String threadName) {

synchronized (SingleClass.class){

if (singleInstance==null){

singleInstance = new SingleClass(threadName);

}

}

return singleInstance;

}

}

With this version, the lock is requested every time before reaching

if (singleInstance==null){

singleInstance = new SingleClass(threadName);

}

This does avoid creating multiple instances. However, as mentioned above, a synchronized block is a "heavy" synchronization mechanism that costs performance. A more elegant approach is shown below.

package com.example.threadtest;

public class SingleClass {

private volatile static SingleClass singleInstance;

// private, so that instances can only be created through getInstance
private SingleClass(String threadName) {

System.out.println(threadName + "---------------------A singleton is created------------------------");

}

public static SingleClass getInstance(String threadName) {

if (singleInstance==null){

synchronized (SingleClass.class){

if (singleInstance==null) {

singleInstance = new SingleClass(threadName);

}

}

}

return singleInstance;

}

}

In this version the null check is written twice. The outer check reduces how often the synchronized block is executed (synchronized costs performance, as mentioned above): once the instance exists, the block is skipped entirely. However, several threads may pass the outer check at the same time before any instance has been created, so several threads can reach the synchronized block. That is why a second null check is needed inside the block: only one thread at a time can execute it, and once one thread has created the instance, the next thread to enter sees that the instance is not null and does not create it again. Why, then, is the field declared as private volatile static SingleClass singleInstance;? As described earlier, a volatile variable makes its latest value visible to all threads, which avoids the case where the instance has already been created but another thread still reads null. volatile also forbids reordering the assignment of the reference ahead of the constructor's writes, so no thread can observe a non-null reference to a partially constructed object.

3.5 ThreadLocal

ThreadLocal is a thread-local storage class: it stores data per thread. Once a value has been stored, it can only be read from the thread that stored it, and other threads cannot access it. Its usage is simple and mainly involves get(), set(), and initialValue(), which supplies the initial value. The names speak for themselves, so let's go straight to a simple example:

package com.example.threadtest;

import java.util.Random;

public class MyThread extends Thread {

private static ThreadLocal<Integer> threadLocalNum = new ThreadLocal<Integer>(){

@Override

protected Integer initialValue() {

return 1;

}

};

public String getThreadName() {

return getName();

}

@Override

public void run() {

super.run();

threadLocalNumAdd();

}

private void threadLocalNumAdd() {

double random = Math.random();

random = random * 10;

threadLocalNum.set(threadLocalNum.get() + (int) random);

System.out.println(getName()+" increases threadLocal by "+(int)random+", and the value is "+threadLocalNum.get());

}

public MyThread() {

System.out.println("Created thread "+getName());

}

}

We create a ThreadLocal variable in the thread class and then add a random integer to it in the run method. The output is as follows:

> Task :ThreadTest:MainClass.main()

Created thread Thread-0

Created thread Thread-1

Created thread Thread-2

Created thread Thread-3

Created thread Thread-4

Created thread Thread-5

Created thread Thread-6

Created thread Thread-7

Created thread Thread-8

Created thread Thread-9

Created thread Thread-10

Thread-4 increases threadLocal by 5, and the value is 6

Thread-9 increases threadLocal by 0, and the value is 1

Thread-3 increases threadLocal by 2, and the value is 3

Thread-1 increases threadLocal by 4, and the value is 5

Thread-2 increases threadLocal by 1, and the value is 2

Thread-0 increases threadLocal by 5, and the value is 6

Thread-8 increases threadLocal by 0, and the value is 1

Thread-7 increases threadLocal by 2, and the value is 3

Thread-5 increases threadLocal by 6, and the value is 7

Thread-6 increases threadLocal by 2, and the value is 3

Thread-10 increases threadLocal by 6, and the value is 7

Created thread Thread-11

Created thread Thread-12

Thread-11 increases threadLocal by 9, and the value is 10

Thread-13 was created

Thread-12 increases threadLocal by 3, and the value is 4

Thread-14 was created

Thread-13 increases threadLocal by 2 and the value is 3

Thread-15 was created

Thread-14 increases threadLocal by 6, and the value is 7

Thread-16 was created

Created thread Thread-17

Thread-15 increases threadLocal by 3, and the value is 4

Thread-16 increases threadLocal by 6, and the value is 7

Created thread Thread-18

Thread-17 increases threadLocal by 3, and the value is 4

Created thread Thread-19

Thread-18 increases threadLocal by 7, and the value is 8

Time spent: 4

Thread-19 increases threadLocal by 9, and the value is 10

BUILD SUCCESSFUL in 24s

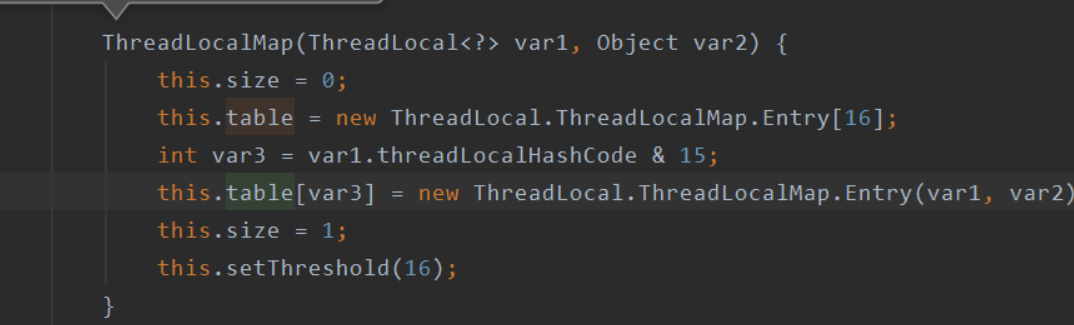

The log shows that each thread has its own independent copy of the ThreadLocal variable, and the copies do not affect one another. The underlying principle is that each Thread maintains its own ThreadLocal.ThreadLocalMap, which keeps the data isolated per thread rather than shared, so no thread-safety problem arises.
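The isolation described above can be checked with a much smaller program. The following is a minimal sketch, not the article's original code: the class name ThreadLocalIsolationDemo and the helper addInWorker are made up for illustration. Each worker thread starts from the initial value 1, no matter what earlier threads did to their own copies.

```java
class ThreadLocalIsolationDemo {
    // Every thread that touches COUNTER gets its own copy, starting at 1.
    private static final ThreadLocal<Integer> COUNTER = ThreadLocal.withInitial(() -> 1);

    // Runs a fresh worker thread that adds delta to ITS copy and reports the result.
    static int addInWorker(int delta) {
        int[] result = new int[1];
        Thread worker = new Thread(() -> {
            COUNTER.set(COUNTER.get() + delta);
            result[0] = COUNTER.get();
        });
        worker.start();
        try {
            worker.join(); // join() also makes the worker's write to result visible
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return result[0];
    }

    // Value seen by the calling thread itself.
    static int valueInCallingThread() {
        return COUNTER.get();
    }

    public static void main(String[] args) {
        System.out.println(addInWorker(5));          // 6
        System.out.println(addInWorker(7));          // 8, not 13: a new thread, a new copy
        System.out.println(valueInCallingThread());  // 1: the main thread's copy is untouched
    }
}
```

ThreadLocal.withInitial is used here instead of overriding initialValue(); both supply the per-thread starting value.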

The ThreadLocalMap source code shows that instantiating a ThreadLocalMap creates an Entry array (table) with an initial length of 16 and then computes an index (named var3 in the decompiled source) by masking the ThreadLocal's hash code with the table length minus one. That index is the slot in the table array where the value is stored. In other words, each Thread holds its own Entry array, and different threads operate on different tables.
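To make the index calculation concrete, here is a small sketch of the arithmetic only. It is not the JDK code: the class name ThreadLocalSlotSketch and the method slotFor are hypothetical, and the two constants are written from memory of the OpenJDK source (a hash increment of 0x61c88647 and an initial capacity of 16), so treat them as assumptions and check them against your JDK version.

```java
class ThreadLocalSlotSketch {
    // Assumed to mirror the constants in OpenJDK's ThreadLocal / ThreadLocalMap.
    static final int HASH_INCREMENT = 0x61c88647;
    static final int INITIAL_CAPACITY = 16;

    // Slot for a ThreadLocal whose hash code is n * HASH_INCREMENT.
    static int slotFor(int n) {
        int threadLocalHashCode = n * HASH_INCREMENT; // int overflow is intended
        // Masking with (capacity - 1) works because the capacity is a power of two.
        return threadLocalHashCode & (INITIAL_CAPACITY - 1);
    }

    public static void main(String[] args) {
        for (int n = 0; n < 4; n++) {
            System.out.println("hash #" + n + " -> slot " + slotFor(n));
        }
        // prints slots 0, 7, 14, 5
    }
}
```

The point of the odd-looking increment is that successive hash codes spread across the slots of a power-of-two table instead of piling up in the same one.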

4, Waiting and notification mechanism between threads

4.1 waiting / notification mechanism of implicit lock

Suppose a resource may be modified by other threads at any time, and we want to watch its state and trigger some logic as soon as it meets a certain condition. A naive implementation is to start a thread that keeps polling the state of the resource, as in the following example:

package com.example.threadtest.waitnotifytest;

import java.util.LinkedList;

import java.util.List;

public class YSList {

public volatile static List<Integer> list = new LinkedList<>();

public static void addList() {

list.add(1);

}

}

package com.example.threadtest.waitnotifytest;

public class AddListThread extends Thread {

@Override

public void run() {

super.run();

while (true) {

YSList.addList();

try {

sleep(1000L);

} catch (InterruptedException e) {

e.printStackTrace();

}

}

}

}

package com.example.threadtest.waitnotifytest;

public class MonitorListThread extends Thread {

@Override

public void run() {

super.run();

while (true) {

int size = YSList.list.size();

System.out.println("Monitored list already has " + size + " elements");

if (size > 20) {

System.out.println("Stop monitoring");

break;

}

try {

sleep(1000L);

} catch (InterruptedException e) {

e.printStackTrace();

}

}

}

}

In the example above, AddListThread keeps modifying the list while MonitorListThread monitors it in an endless loop. This does monitor the resource, but it wastes resources: the monitoring thread keeps running and polling even when the list has not been modified for a long time. It is like a mobile phone that neither rings nor vibrates, so the user has to keep staring at the screen to see whether anyone is calling. The calls still get answered, but most of the time is wasted. Using wait/notify and the wait / wake-up mechanism between threads gives a much more elegant solution.

4.1.1 wait and notify/notifyAll methods

wait, notify, and notifyAll are methods defined on Object, so every object has them and any object can serve as a "lock". Note that these methods must be called inside a synchronized block (or synchronized method) on that object: the calling thread has to hold the object's lock before calling wait() or notify(), otherwise an IllegalMonitorStateException is thrown. Section 4.1 covers the wait/notify mechanism combined with synchronized, that is, the waiting and notification mechanism of the implicit lock.
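The requirement to hold the lock first is easy to verify. The following is a minimal sketch with made-up names (WaitNeedsLockDemo and its two helper methods): calling notify() without owning the object's monitor fails with IllegalMonitorStateException, while the same call inside a synchronized block is accepted.

```java
class WaitNeedsLockDemo {
    private static final Object LOCK = new Object();

    // Returns true if notify() outside a synchronized block is rejected.
    static boolean notifyWithoutLockFails() {
        try {
            LOCK.notify(); // the current thread does not own LOCK's monitor
            return false;
        } catch (IllegalMonitorStateException expected) {
            return true;
        }
    }

    // Returns true if notify() inside a synchronized block is accepted.
    static boolean notifyWithLockWorks() {
        synchronized (LOCK) {
            LOCK.notify(); // allowed; nobody is waiting, so nothing else happens
            return true;
        }
    }

    public static void main(String[] args) {
        System.out.println(notifyWithoutLockFails()); // true
        System.out.println(notifyWithLockWorks());    // true
    }
}
```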

The wait method works as follows: when a thread calls wait() on an object, the thread enters the waiting state, is placed in that object's wait queue (wait set), and releases the lock on the object. It stays there until it is notified or interrupted, and it must reacquire the lock before it returns from wait().

The notify and notifyAll methods wake up threads in an object's wait queue. If several threads are waiting, notify wakes exactly one of them; which one is chosen is arbitrary and up to the JVM implementation, so no fairness is guaranteed. To wake up all threads in the wait queue, use notifyAll.
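As a small illustration of notifyAll, the sketch below starts several waiting threads and wakes them all with one call. The class NotifyAllDemo and its members are hypothetical names chosen for this example. Because every waiter re-checks the ready flag in a loop, the program gives the same result whether a waiter starts waiting before or after the notification.

```java
class NotifyAllDemo {
    private final Object lock = new Object();
    private boolean ready = false; // the condition the waiters wait for
    private int finished = 0;      // guarded by lock

    private void awaitReady() {
        synchronized (lock) {
            while (!ready) {       // re-check the condition after every wake-up
                try {
                    lock.wait();   // releases lock while waiting
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    return;
                }
            }
            finished++;
        }
    }

    // Starts count waiters, wakes them all, and returns how many finished.
    static int run(int count) {
        NotifyAllDemo demo = new NotifyAllDemo();
        Thread[] waiters = new Thread[count];
        for (int i = 0; i < count; i++) {
            waiters[i] = new Thread(demo::awaitReady);
            waiters[i].start();
        }
        synchronized (demo.lock) {
            demo.ready = true;
            demo.lock.notifyAll(); // notify() would wake at most one waiting thread
        }
        try {
            for (Thread waiter : waiters) {
                waiter.join();
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        synchronized (demo.lock) {
            return demo.finished;
        }
    }

    public static void main(String[] args) {
        System.out.println(run(3)); // 3
    }
}
```

If notifyAll() were replaced by notify() here and several threads were already waiting, only one of them would be woken and the others would keep waiting, so join() could block forever.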

4.1.2 application of wait / notify

At the beginning of Section 4.1 we monitored a list in a naive way. Next we use wait and notify to achieve the same effect more gracefully. The basic idea is that MonitorListThread does not have to run all the time: it checks the state of the list only after AddListThread has modified the list and called notifyAll to wake it up, which saves a lot of resources. The code is as follows:

package com.example.threadtest.waitnotifytest;

public class MainTest {

public static final Object LOCK = new Object();

public static final int threshold=20;

public static void main(String[] args) {

AddListThread addListThread = new AddListThread();

MonitorListThread monitorListThread = new MonitorListThread();

addListThread.start();

monitorListThread.start();

}

}

package com.example.threadtest.waitnotifytest;

import java.util.LinkedList;

import java.util.List;

public class YSList {

public volatile static List<Integer> list = new LinkedList<>();

public static void addList() {

list.add(1);

}

public static int size() {

return list.size();

}

}

package com.example.threadtest.waitnotifytest;

import static com.example.threadtest.waitnotifytest.MainTest.LOCK;

import static com.example.threadtest.waitnotifytest.MainTest.threshold;

public class AddListThread extends Thread {

@Override

public void run() {

super.run();

synchronized (LOCK){

while (YSList.size()<threshold) {

YSList.addList();

System.out.println("AddList has increased the list, the current size is " + YSList.size());

}

LOCK.notifyAll();

System.out.println("The size of list has reached " + threshold + ". Wake up MonitorListThread");

}

}

}

package com.example.threadtest.waitnotifytest;

import static com.example.threadtest.waitnotifytest.MainTest.LOCK;

import static com.example.threadtest.waitnotifytest.MainTest.threshold;

public class MonitorListThread extends Thread {

@Override

public void run() {

super.run();

synchronized (LOCK){

while (YSList.list.size()<threshold){

// Log before waiting: wait() releases LOCK and blocks until notified

System.out.println("size has not reached " + threshold + " yet, MonitorListThread gives up the CPU and enters the waiting state");

waitThread();

}

System.out.println("MonitorListThread has detected that there are " + YSList.list.size() + " elements in the list");

}

}

private void waitThread() {

try {

LOCK.wait();

} catch (InterruptedException e) {

e.printStackTrace();

}

}

}

The code above shows wait/notify in use. MonitorListThread no longer runs all the time and burns CPU; it stays in the waiting state while the condition is not met, and handles the business logic only after AddListThread notifies it. The output is as follows:

> Task :ThreadTest:MainTest.main()

AddList has increased the list, the current size is 1

AddList has increased the list, the current size is 2

AddList has increased the list, the current size is 3

AddList has increased the list, the current size is 4

AddList has increased the list, the current size is 5

AddList has increased the list, the current size is 6

AddList has increased the list, the current size is 7

AddList has increased the list, the current size is 8

AddList has increased the list, the current size is 9

AddList has increased the list, the current size is 10

AddList has increased the list, the current size is 11

AddList has increased the list, the current size is 12

AddList has increased the list, the current size is 13

AddList has increased the list, the current size is 14

AddList has increased the list, the current size is 15

AddList has increased the list, the current size is 16

AddList has increased the list, the current size is 17

AddList has increased the list, the current size is 18

AddList has increased the list, the current size is 19

AddList has increased the list, the current size is 20

The size of list has reached 20. Wake up MonitorListThread

MonitorListThread has detected that there are 20 elements in the list

BUILD SUCCESSFUL in 1s

3 actionable tasks: 3 executed

4.1.3 recommended usage of waiting / notification mechanism of implicit lock

To monitor changes to a static resource, we compared two implementations: polling in Section 4.1 and wait/notify in Section 4.1.2. The comparison shows that the waiting / notification mechanism is the more efficient one. This section summarizes its recommended usage.

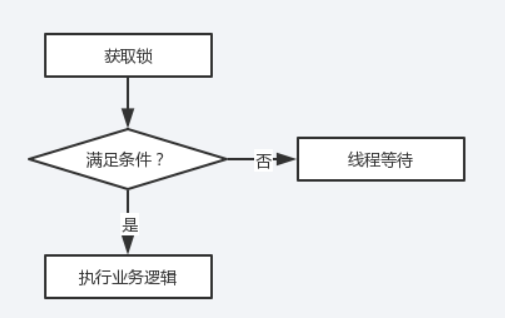

As mentioned in 4.1.1, a thread must hold the lock before calling either wait or notify. The waiting party should normally stay in the waiting state and be awakened to execute its business logic only when it receives a notification. The notifying party should notify the waiting party promptly once the condition is met, so that the two cooperate well. The implementation steps of the waiting party and the notifying party are summarized as follows:

Waiting party steps:

The pseudo code is represented as follows:

synchronized(LOCK){

while(Conditions not met){

LOCK.wait();

}

//The conditions are met

Execute business code

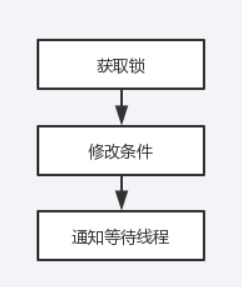

}Steps for notifying party:

The pseudo code is represented as follows:

synchronized(LOCK){

// execute the business code that changes the condition

LOCK.notifyAll(); // or LOCK.notify()

}

The above is the recommended usage of the inter-thread wait / notification mechanism. One more point deserves explanation. Both the notifying party and the waiting party must acquire the lock first. If the waiting party's condition is not met, will it hold the lock forever, so that the notifying party can never get the lock and the two deadlock? The answer is no: wait releases the lock, so there is no deadlock. sleep, by contrast, does not release the lock, which is one of the differences between wait and sleep.
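The recommended pattern for both parties can be put together in one small runnable sketch. The names below (GuardedValueDemo, take, put, run) are hypothetical and not taken from the article's project. The waiting party loops on the condition and calls wait(); the notifying party changes the condition and calls notifyAll(). The program finishes precisely because wait() gives up the lock, which lets put() enter its synchronized block.

```java
class GuardedValueDemo {
    private final Object lock = new Object();
    private String value; // null means "condition not met"

    // Waiting party: block until a value is available, then consume it.
    String take() throws InterruptedException {
        synchronized (lock) {
            while (value == null) {
                lock.wait(); // releases lock, so put() can acquire it
            }
            String result = value;
            value = null;
            return result;
        }
    }

    // Notifying party: change the condition, then notify the waiters.
    void put(String newValue) {
        synchronized (lock) {
            value = newValue;
            lock.notifyAll();
        }
    }

    // Runs one consumer thread and one producer; returns what the consumer got.
    static String run(String message) {
        GuardedValueDemo box = new GuardedValueDemo();
        String[] received = new String[1];
        Thread consumer = new Thread(() -> {
            try {
                received[0] = box.take();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        consumer.start();
        box.put(message);
        try {
            consumer.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return received[0];
    }

    public static void main(String[] args) {
        System.out.println(run("hello")); // hello
    }
}
```

The while loop matters: the result is the same whether the consumer reaches wait() before or after put() runs, because the consumer always checks the condition before deciding to wait.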

4.2 waiting / notification mechanism of explicit lock

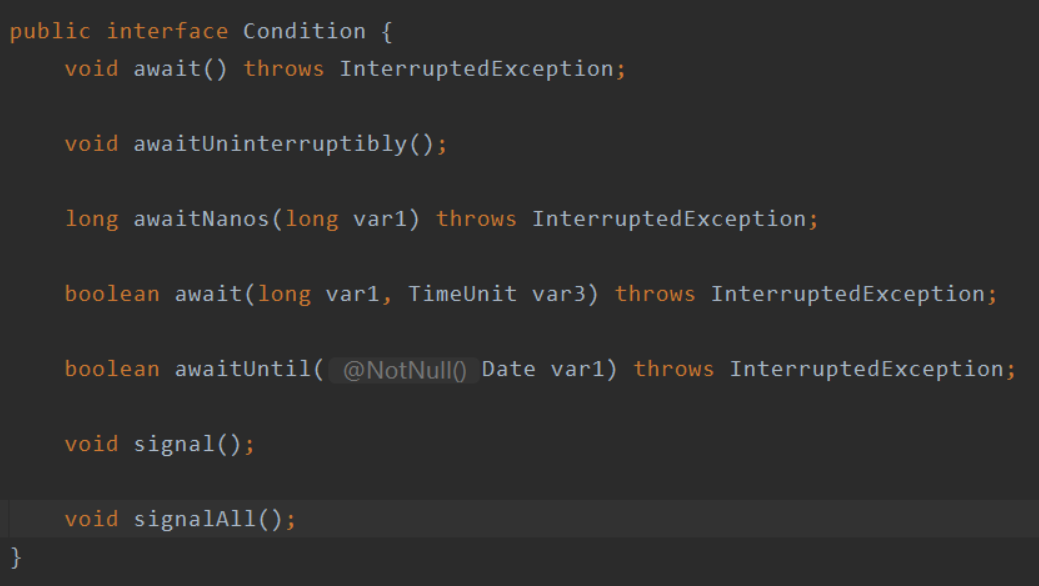

Section 4.1 introduced the wait/notify mechanism of the implicit lock, built on synchronized. How can the same mechanism be implemented with an explicit lock such as ReentrantLock? The answer is the Condition interface provided by Java.

The Condition interface provides await(), signal(), signalAll(), and other methods, which play the same roles as the wait(), notify(), and notifyAll() methods introduced in Section 4.1. Depending on business requirements, a Lock object can create multiple Condition objects through its newCondition() method. In Section 4.1.2 we checked only one condition, whether the size of the list has reached the threshold, so a single Condition object is enough; with several conditions, we can create several Condition objects. The steps for using the wait / notify mechanism of an explicit lock are basically the same as those for the implicit lock. The example below is the same case as above, reimplemented with an explicit lock.
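For the case of several conditions on one lock, a bounded buffer is a common illustration. The following is a minimal sketch under that assumption; the class BoundedBufferDemo and its members are invented for this example and are not part of the article's code. One Lock creates two Condition objects, notFull for producers and notEmpty for consumers, so each side signals only the kind of thread that can actually make progress.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;

class BoundedBufferDemo {
    private final Lock lock = new ReentrantLock();
    private final Condition notFull = lock.newCondition();  // producers wait here
    private final Condition notEmpty = lock.newCondition(); // consumers wait here
    private final Deque<Integer> items = new ArrayDeque<>();
    private final int capacity;

    BoundedBufferDemo(int capacity) {
        this.capacity = capacity;
    }

    void put(int item) throws InterruptedException {
        lock.lock();
        try {
            while (items.size() == capacity) {
                notFull.await();   // releases the lock while waiting
            }
            items.addLast(item);
            notEmpty.signal();     // a consumer may proceed now
        } finally {
            lock.unlock();
        }
    }

    int take() throws InterruptedException {
        lock.lock();
        try {
            while (items.isEmpty()) {
                notEmpty.await();
            }
            int item = items.removeFirst();
            notFull.signal();      // a producer may proceed now
            return item;
        } finally {
            lock.unlock();
        }
    }

    // A producer thread puts 1..n; the calling thread takes n items and sums them.
    static int sumThroughBuffer(int n) {
        BoundedBufferDemo buffer = new BoundedBufferDemo(2);
        Thread producer = new Thread(() -> {
            try {
                for (int i = 1; i <= n; i++) {
                    buffer.put(i);
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        int sum = 0;
        try {
            for (int i = 0; i < n; i++) {
                sum += buffer.take();
            }
            producer.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return sum;
    }

    public static void main(String[] args) {
        System.out.println(sumThroughBuffer(10)); // 55
    }
}
```

With an implicit lock there is only one wait queue per object, so producers and consumers would share it and notifyAll would be needed; separate Condition objects avoid waking threads that cannot proceed.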

package com.example.threadtest.waitnotifytest;

public class MainTest {

public static final int threshold=20;

public static void main(String[] args) {

AddListThread addListThread = new AddListThread();

MonitorListThread monitorListThread = new MonitorListThread();

addListThread.start();

monitorListThread.start();

}

}

package com.example.threadtest.waitnotifytest;

import java.util.LinkedList;

import java.util.List;

public class YSList {

public volatile static List<Integer> list = new LinkedList<>();

public static void addList() {

list.add(1);

}

public static int size() {

return list.size();

}

}

package com.example.threadtest.waitnotifytest;

import java.util.concurrent.locks.Condition;

import java.util.concurrent.locks.Lock;

import java.util.concurrent.locks.ReentrantLock;

import static com.example.threadtest.waitnotifytest.MainTest.threshold;

public class AddListThread extends Thread {

public static Lock LOCK = new ReentrantLock();

public static Condition conditionSize = LOCK.newCondition();

@Override

public void run() {

super.run();

LOCK.lock();

try {

while (YSList.size() < threshold) {

YSList.addList();

System.out.println("AddList has increased the list, the current size is " + YSList.size());

}

// Counterpart of LOCK.notifyAll() for an implicit lock

conditionSize.signalAll();

System.out.println("The size of list has reached " + threshold + ". Wake up MonitorListThread");

} catch (Exception e) {

e.printStackTrace();

}finally {

LOCK.unlock();

}

}

}

package com.example.threadtest.waitnotifytest;

import static com.example.threadtest.waitnotifytest.AddListThread.LOCK;

import static com.example.threadtest.waitnotifytest.AddListThread.conditionSize;

import static com.example.threadtest.waitnotifytest.MainTest.threshold;

public class MonitorListThread extends Thread {

@Override

public void run() {

super.run();

LOCK.lock();

try {

while (YSList.list.size()<threshold){

// Log before waiting: await() releases LOCK and blocks until signalled

System.out.println("size has not reached " + threshold + " yet, MonitorListThread gives up the CPU and enters the waiting state");

waitThread();

}

System.out.println("MonitorListThread has detected that there are " + YSList.list.size() + " elements in the list");

} catch (Exception e) {

e.printStackTrace();

}finally {

LOCK.unlock();

}

}

private void waitThread() {

try {

// Counterpart of LOCK.wait() for an implicit lock

conditionSize.await();

} catch (InterruptedException e) {

e.printStackTrace();

}

}

}

The output results are as follows:

AddList has increased the list, the current size is 1

AddList has increased the list, the current size is 2

AddList has increased the list, the current size is 3

AddList has increased the list, the current size is 4

AddList has increased the list, the current size is 5

AddList has increased the list, the current size is 6

AddList has increased the list, the current size is 7

AddList has increased the list, the current size is 8

AddList has increased the list, the current size is 9

AddList has increased the list, the current size is 10

AddList has increased the list, the current size is 11

AddList has increased the list, the current size is 12

AddList has increased the list, the current size is 13

AddList has increased the list, the current size is 14

AddList has increased the list, the current size is 15

AddList has increased the list, the current size is 16

AddList has increased the list, the current size is 17

AddList has increased the list, the current size is 18

AddList has increased the list, the current size is 19

AddList has increased the list, the current size is 20

The size of list has reached 20. Wake up MonitorListThread

MonitorListThread has detected that there are 20 elements in the list

BUILD SUCCESSFUL in 1s

summary

This article introduces synchronization, locks, and the waiting / notification mechanism in Java multithreading. Later articles will cover more topics in Java multithreaded programming. If you find errors, please point them out. You are also welcome to discuss and exchange ideas. My email is hbutys@vip.qq.com