
Thread safe:

A class is called thread safe if it behaves correctly when multiple threads access it, regardless of how the runtime environment schedules those threads or how their execution interleaves, and without any additional synchronization or coordination in the calling code.

Thread safety features:

| Property | Meaning |
| --- | --- |
| Atomicity | Access is mutually exclusive: only one thread can operate on the data at a time. |
| Visibility | A modification that one thread makes to main memory can be observed by other threads in a timely manner. |
| Ordering | When one thread observes the instruction execution order of another thread, the order generally appears shuffled because of instruction reordering. |

Atomicity:

Atomic class:

The atomic package uses the CAS algorithm:

    public final int getAndAddInt(Object var1, long var2, int var4) {
        int var5;
        do {
            // Read the current value of the field from main memory
            var5 = this.getIntVolatile(var1, var2);
            // Retry until no other thread has changed the value in between
        } while (!this.compareAndSwapInt(var1, var2, var5, var5 + var4));
        return var5;
    }

var1 is the object being operated on, var2 is the memory offset of the field within that object, var4 is the value to add, and var5 is the current value read from main memory.

| Topic | Description |
| --- | --- |
| Core principle of CAS (Compare And Swap) | Compare the value the thread currently holds (working memory) with the underlying value (main memory). If they are equal, perform the update. If they are not equal, re-read the value and try again, looping until the update succeeds. |
| Operation process of CAS | CAS can be written as CAS(V, O, N) with three values: V, the value actually stored at the memory address; O, the expected (old) value; and N, the new value to write. If V equals O, the value has not been modified by another thread since it was read, so O is still the latest value and N can be assigned to V. If V and O differ, another thread has modified the value, O is stale, N is not assigned, and the current V is returned. When several threads use CAS on the same variable at the same time, only one succeeds; the others fail and retry, or they may choose to suspend instead. |
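The CAS(V, O, N) process described above can be sketched with the public `AtomicInteger.compareAndSet` method. This is a minimal illustration, not the JDK's internal code; the class name `CasRetryDemo` and the method `multiplyAndGet` are hypothetical names chosen for this example.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical demo class: a hand-written CAS retry loop.
final class CasRetryDemo {

    // Atomically multiplies the stored value by `factor` and returns the result.
    static int multiplyAndGet(AtomicInteger value, int factor) {
        while (true) {
            int expected = value.get();       // O: the value we believe is current
            int updated = expected * factor;  // N: the value we want to store
            // Succeeds only if the stored value V still equals `expected`.
            if (value.compareAndSet(expected, updated)) {
                return updated;
            }
            // Another thread changed the value in between: re-read and retry.
        }
    }

    public static void main(String[] args) {
        AtomicInteger v = new AtomicInteger(3);
        System.out.println(multiplyAndGet(v, 5));    // prints 15
        System.out.println(v.compareAndSet(3, 99));  // prints false: V is 15, not 3
    }
}
```

The second call in `main` shows the failure branch: the expected value 3 is stale, so nothing is written.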

AtomicLong and LongAdder:

AtomicLong relies on the underlying CAS to update data atomically: it keeps trying to modify the target value in a loop until the modification succeeds. For an increment or decrement, it spins on CAS until it manages to write the new value. Under fierce thread contention, however, this spinning often wastes a lot of computing resources before it succeeds.

For ordinary (non-volatile) long and double variables, the JVM is allowed to split a 64-bit read or write into two 32-bit operations.

LongAdder separates hot data. The single internal value that AtomicLong holds is split into an array of cells. Each thread that accesses the counter is mapped to one of the cells by an algorithm such as hashing and counts there, and the final count is the sum of the array. Each cell maintains its own value, and the actual value of the object is accumulated from all cells. This separates the hot spot and improves parallelism: the update pressure on a single point in AtomicLong is spread across several cells. Under low concurrency, LongAdder updates its base value directly, so its performance is basically the same as AtomicLong; under high concurrency, the dispersion improves performance.

Disadvantage of LongAdder: if updates happen concurrently while the sum is being computed, the result may be inaccurate.
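The cell-splitting idea behind LongAdder can be sketched with a much simpler structure. The following is an assumption-laden illustration and not the JDK implementation: `StripedCounter` is a hypothetical class, it uses a fixed number of cells, and it picks a cell from the thread's identity hash, whereas the real LongAdder also has a base value and grows its cell array under contention.

```java
import java.util.concurrent.atomic.AtomicLongArray;

// Hypothetical simplified counter that spreads updates over several cells.
final class StripedCounter {
    private final AtomicLongArray cells;

    StripedCounter(int stripes) {
        this.cells = new AtomicLongArray(stripes);
    }

    void increment() {
        // Assumed mapping: choose a cell from the current thread's identity hash.
        int index = Math.floorMod(System.identityHashCode(Thread.currentThread()), cells.length());
        cells.incrementAndGet(index);
    }

    // The total is the sum of all cells; it is exact only when no update is in flight.
    long sum() {
        long total = 0;
        for (int i = 0; i < cells.length(); i++) {
            total += cells.get(i);
        }
        return total;
    }

    // Runs `threads` workers, each incrementing `perThread` times, then sums.
    static long countWithThreads(int threads, int perThread) {
        StripedCounter counter = new StripedCounter(8);
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < perThread; i++) {
                    counter.increment();
                }
            });
            workers[t].start();
        }
        for (Thread w : workers) {
            try {
                w.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return counter.sum(); // all workers have finished, so the sum is exact
    }

    public static void main(String[] args) {
        System.out.println(countWithThreads(4, 1000)); // prints 4000
    }
}
```

Calling `sum()` while workers are still running would show the disadvantage mentioned above: the loop may read some cells before and others after a concurrent update.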

Atomic type categories:

To make these classes easier to learn step by step, I divide the atomic types into the following categories:

| Category | Classes |
| --- | --- |
| Basic atomic types: atomic operations on boolean, int, long, and object references | AtomicBoolean, AtomicInteger, AtomicLong, AtomicReference |
| Atomic array types: atomic operations on array elements | AtomicLongArray, AtomicIntegerArray, AtomicReferenceArray |
| Atomic field updaters: atomic operations on a specified field of a specified object | AtomicLongFieldUpdater, AtomicIntegerFieldUpdater, AtomicReferenceFieldUpdater |
| Atomic references with a version number: address the ABA problem with a version stamp (AtomicStampedReference) or a boolean mark (AtomicMarkableReference) | AtomicStampedReference, AtomicMarkableReference |
| Atomic accumulators (JDK 1.8): higher-performance alternatives to AtomicLong for long and double values, intended for collecting statistics | DoubleAccumulator, DoubleAdder, LongAccumulator, LongAdder |
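Two of the categories above are not demonstrated elsewhere in this article, so here is a short sketch of an atomic array and an accumulator. The class `AtomicCategoriesDemo` and its methods are hypothetical names for illustration; the JDK calls used are `AtomicIntegerArray.incrementAndGet` and `LongAccumulator.accumulate`.

```java
import java.util.concurrent.atomic.AtomicIntegerArray;
import java.util.concurrent.atomic.LongAccumulator;

// Hypothetical demo of an atomic array and an atomic accumulator.
final class AtomicCategoriesDemo {

    // Tracks the maximum of the samples with a LongAccumulator.
    static long maxOf(long... samples) {
        LongAccumulator max = new LongAccumulator(Long::max, Long.MIN_VALUE);
        for (long s : samples) {
            max.accumulate(s);
        }
        return max.get();
    }

    // Atomically increments one element of the array and returns the new value.
    static int bumpSlot(AtomicIntegerArray slots, int index) {
        return slots.incrementAndGet(index);
    }

    public static void main(String[] args) {
        System.out.println(maxOf(3, 9, 4));    // prints 9
        AtomicIntegerArray slots = new AtomicIntegerArray(4);
        bumpSlot(slots, 2);
        System.out.println(bumpSlot(slots, 2)); // prints 2
    }
}
```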

AtomicInteger:

    // Thread-safe
    import java.util.concurrent.CountDownLatch;
    import java.util.concurrent.ExecutorService;
    import java.util.concurrent.Executors;
    import java.util.concurrent.Semaphore;
    import java.util.concurrent.atomic.AtomicInteger;

    public class AtomicIntegerTest {

        public static int clientTotal = 5000; // Total number of requests
        public static int threadTotal = 200;  // Number of requests allowed to run concurrently
        public static AtomicInteger count = new AtomicInteger(0);

        public static void main(String[] args) throws Exception {
            ExecutorService executorService = Executors.newCachedThreadPool();
            final Semaphore semaphore = new Semaphore(threadTotal);
            final CountDownLatch countDownLatch = new CountDownLatch(clientTotal);
            for (int i = 0; i < clientTotal; i++) {
                executorService.execute(() -> {
                    try {
                        semaphore.acquire();
                        add();
                        semaphore.release();
                    } catch (Exception e) {
                        e.printStackTrace();
                    }
                    countDownLatch.countDown();
                });
            }
            countDownLatch.await(); // Wait until every request has finished
            executorService.shutdown();
            System.out.println("count:" + count.get());
        }

        private static void add() {
            count.incrementAndGet();
            // count.getAndIncrement();
        }
    }

Execution results:

count:5000

AtomicLong:

    // Thread-safe
    import java.util.concurrent.CountDownLatch;
    import java.util.concurrent.ExecutorService;
    import java.util.concurrent.Executors;
    import java.util.concurrent.Semaphore;
    import java.util.concurrent.atomic.AtomicLong;

    public class AtomicLongTest {

        public static int clientTotal = 5000; // Total number of requests
        public static int threadTotal = 200;  // Number of requests allowed to run concurrently
        public static AtomicLong count = new AtomicLong(0);

        public static void main(String[] args) throws Exception {
            ExecutorService executorService = Executors.newCachedThreadPool();
            final Semaphore semaphore = new Semaphore(threadTotal);
            final CountDownLatch countDownLatch = new CountDownLatch(clientTotal);
            for (int i = 0; i < clientTotal; i++) {
                executorService.execute(() -> {
                    try {
                        semaphore.acquire();
                        add();
                        semaphore.release();
                    } catch (Exception e) {
                        e.printStackTrace();
                    }
                    countDownLatch.countDown();
                });
            }
            countDownLatch.await(); // Wait until every request has finished
            executorService.shutdown();
            System.out.println("count:" + count.get());
        }

        private static void add() {
            count.incrementAndGet();
            // count.getAndIncrement();
        }
    }

Execution results:

count:5000

LongAdder:

    // Thread-safe
    import java.util.concurrent.CountDownLatch;
    import java.util.concurrent.ExecutorService;
    import java.util.concurrent.Executors;
    import java.util.concurrent.Semaphore;
    import java.util.concurrent.atomic.LongAdder;

    public class LongAdderTest {

        public static int clientTotal = 5000; // Total number of requests
        public static int threadTotal = 200;  // Number of requests allowed to run concurrently
        public static LongAdder count = new LongAdder();

        public static void main(String[] args) throws Exception {
            ExecutorService executorService = Executors.newCachedThreadPool();
            final Semaphore semaphore = new Semaphore(threadTotal);
            final CountDownLatch countDownLatch = new CountDownLatch(clientTotal);
            for (int i = 0; i < clientTotal; i++) {
                executorService.execute(() -> {
                    try {
                        semaphore.acquire();
                        add();
                        semaphore.release();
                    } catch (Exception e) {
                        e.printStackTrace();
                    }
                    countDownLatch.countDown();
                });
            }
            countDownLatch.await(); // Wait until every request has finished
            executorService.shutdown();
            System.out.println("count:" + count); // LongAdder.toString() returns the current sum
        }

        private static void add() {
            count.increment();
        }
    }

Execution results:

count:5000

AtomicReference:

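    // Thread-safe
    import java.util.concurrent.atomic.AtomicReference;

    public class AtomicReferenceTest {

        // compareAndSet compares references; this works here because small
        // Integer values are cached by autoboxing.
        private static AtomicReference<Integer> count = new AtomicReference<>(0);

        public static void main(String[] args) {
            count.compareAndSet(0, 2); // succeeds: 0 -> 2
            count.compareAndSet(0, 1); // fails: the current value is 2
            count.compareAndSet(1, 3); // fails
            count.compareAndSet(2, 4); // succeeds: 2 -> 4
            count.compareAndSet(3, 5); // fails
            System.out.println("result: " + count.get());
        }
    }

Execution results: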

result: 4

AtomicIntegerFieldUpdater:

    // Thread-safe
    import java.util.concurrent.atomic.AtomicIntegerFieldUpdater;

    import lombok.Data;   // Lombok is required for @Data and @Getter
    import lombok.Getter;

    @Data
    public class AtomicIntegerFieldUpdaterTest {

        private static AtomicIntegerFieldUpdater<AtomicIntegerFieldUpdaterTest> updater =
                AtomicIntegerFieldUpdater.newUpdater(AtomicIntegerFieldUpdaterTest.class, "count");

        // The updated field must be a volatile int and must not be static
        @Getter
        public volatile int count = 100;

        public static void main(String[] args) {
            AtomicIntegerFieldUpdaterTest example = new AtomicIntegerFieldUpdaterTest();
            if (updater.compareAndSet(example, 100, 120)) {
                System.out.println("update success 1 : " + example.getCount());
            }
            // The field is now 120, so the expected value 100 no longer matches
            if (updater.compareAndSet(example, 100, 120)) {
                System.out.println("update success 2 : " + example.getCount());
            } else {
                System.out.println("update failed : " + example.getCount());
            }
        }
    }

Execution results:

update success 1 : 120

update failed : 120

AtomicBoolean:

    // Thread-safe
    import java.util.concurrent.CountDownLatch;
    import java.util.concurrent.ExecutorService;
    import java.util.concurrent.Executors;
    import java.util.concurrent.Semaphore;
    import java.util.concurrent.atomic.AtomicBoolean;

    public class AtomicBooleanTest {

        private static AtomicBoolean isHappened = new AtomicBoolean(false);
        public static int clientTotal = 5000; // Total number of requests
        public static int threadTotal = 200;  // Number of requests allowed to run concurrently

        public static void main(String[] args) throws Exception {
            ExecutorService executorService = Executors.newCachedThreadPool();
            final Semaphore semaphore = new Semaphore(threadTotal);
            final CountDownLatch countDownLatch = new CountDownLatch(clientTotal);
            for (int i = 0; i < clientTotal; i++) {
                executorService.execute(() -> {
                    try {
                        semaphore.acquire();
                        method();
                        semaphore.release();
                    } catch (Exception e) {
                        e.printStackTrace();
                    }
                    countDownLatch.countDown();
                });
            }
            countDownLatch.await(); // Wait until every request has finished
            executorService.shutdown();
            System.out.println("isHappened: " + isHappened.get());
        }

        private static void method() {
            // Only the first thread to flip false -> true runs the body
            if (isHappened.compareAndSet(false, true)) {
                System.out.println("executing...");
            }
        }
    }

Execution results:

executing...

isHappened: true

ABA problem:

CAS is built on atomic instructions supported by the CPU, so its atomicity is guaranteed at the hardware level.

CAS can suffer from the ABA problem: thread T1 reads a memory variable as A, thread T2 changes it to B, and T2 then changes it back to A. When T1 now performs its CAS expecting A, the operation succeeds, even though the variable was modified by another thread between T1's read and T1's CAS.

Solution: add a version number.
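A version number can be attached with `AtomicStampedReference`, which compares both the reference and an integer stamp. The sketch below replays the A to B to A sequence on a single thread for determinism; the class `AbaDemo` and its method are hypothetical names for this illustration.

```java
import java.util.concurrent.atomic.AtomicStampedReference;

// Hypothetical demo: a stamped reference rejects a CAS that plain CAS would accept.
final class AbaDemo {

    // Returns whether T1's stale compare-and-set succeeds (it should not).
    static boolean staleCasSucceeds() {
        AtomicStampedReference<String> ref = new AtomicStampedReference<>("A", 0);

        // "T1" reads the value A together with its stamp 0.
        int[] stampHolder = new int[1];
        String seen = ref.get(stampHolder);
        int seenStamp = stampHolder[0];

        // "T2" changes A -> B -> A, bumping the stamp on every change.
        ref.compareAndSet("A", "B", 0, 1);
        ref.compareAndSet("B", "A", 1, 2);

        // "T1" tries its CAS: the value matches, but the stamp is now 2, not 0.
        return ref.compareAndSet(seen, "C", seenStamp, seenStamp + 1);
    }

    public static void main(String[] args) {
        System.out.println(staleCasSucceeds()); // prints false
    }
}
```

String literals are used because `compareAndSet` compares references; boxed numbers outside the small-value cache would not compare equal by reference.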

Locks achieve atomicity:

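| Mechanism | Notes |
| --- | --- |
| synchronized | Implemented by the JVM. synchronized is not inherited: a subclass method that overrides a synchronized method is not synchronized unless it declares the keyword itself, because synchronized is not part of the method signature. |
| Lock | Implemented in code and relies on special CPU instructions; ReentrantLock is an example. |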

Comparison of atomicity approaches:

| Approach | Characteristics |
| --- | --- |
| Atomic | Keeps steady performance under fierce contention and performs better than Lock, but can synchronize only a single value. |
| synchronized | Non-interruptible lock; suitable when contention is not fierce; good readability. |
| Lock | Interruptible lock; offers more varied forms of synchronization; keeps steady performance under fierce contention. |
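The two locking styles compared above can be put side by side in a small sketch. It also shows one of the extra options a `Lock` offers, a non-blocking `tryLock()`. The class `LockStylesDemo` and its method names are hypothetical and exist only for this illustration.

```java
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical demo: the same counter guarded by ReentrantLock and by synchronized.
final class LockStylesDemo {
    private final ReentrantLock lock = new ReentrantLock();
    private int lockedCount;
    private int syncCount;

    void incrementWithLock() {
        lock.lock();
        try {
            lockedCount++;
        } finally {
            lock.unlock(); // always release, even if the body throws
        }
    }

    synchronized void incrementSynchronized() {
        syncCount++; // the JVM releases the monitor automatically
    }

    private static void joinQuietly(Thread t) {
        try {
            t.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }

    // Returns {lockedCount, syncCount} after all workers have finished.
    static int[] run(int threads, int perThread) {
        LockStylesDemo demo = new LockStylesDemo();
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < perThread; i++) {
                    demo.incrementWithLock();
                    demo.incrementSynchronized();
                }
            });
            workers[t].start();
        }
        for (Thread w : workers) {
            joinQuietly(w);
        }
        return new int[] {demo.lockedCount, demo.syncCount};
    }

    // Another thread calls tryLock() while this thread holds the lock.
    static boolean otherThreadAcquiresWhileHeld() {
        ReentrantLock held = new ReentrantLock();
        boolean[] acquired = new boolean[1];
        held.lock();
        try {
            Thread other = new Thread(() -> {
                if (held.tryLock()) { // returns immediately instead of blocking
                    acquired[0] = true;
                    held.unlock();
                }
            });
            other.start();
            joinQuietly(other);
        } finally {
            held.unlock();
        }
        return acquired[0];
    }

    public static void main(String[] args) {
        int[] counts = run(4, 1000);
        System.out.println(counts[0] + " " + counts[1]);    // prints 4000 4000
        System.out.println(otherThreadAcquiresWhileHeld()); // prints false
    }
}
```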

Visibility:

Reasons why a change to a shared variable may not be visible to other threads:

| Thread execution is interleaved. |

| Instruction reordering combines with thread interleaving. |

| The updated value of the shared variable is not synchronized between working memory and main memory in time. |

synchronized keyword:

The JMM has two rules for synchronized:

| Before a thread releases the lock, it must flush the latest values of shared variables to main memory. |

| When a thread acquires the lock, the values of shared variables in its working memory are cleared, so it must re-read the latest values from main memory when it uses them. Note that acquiring and releasing must use the same lock. |
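The two rules above can be sketched as follows: a plain (non-volatile) field is written and read only inside methods synchronized on the same object, so the reader eventually sees the write. The class `SyncVisibilityDemo` and its members are hypothetical names, and the sketch assumes the published value is never -1, which it uses as a "not ready" marker.

```java
// Hypothetical demo: visibility through synchronized on the same lock.
final class SyncVisibilityDemo {
    private boolean ready; // deliberately not volatile
    private int payload;

    // Unlocking at the end of this method flushes the writes to main memory.
    synchronized void publish(int value) {
        payload = value;
        ready = true;
    }

    // Locking at the start of this method forces fresh reads from main memory.
    synchronized int pollPayload() {
        return ready ? payload : -1; // -1 means "nothing published yet"
    }

    // Starts a reader that polls until it sees the value, then returns what it saw.
    static int awaitPublishedValue(int value) {
        SyncVisibilityDemo box = new SyncVisibilityDemo();
        int[] seen = new int[1];
        Thread reader = new Thread(() -> {
            int v;
            while ((v = box.pollPayload()) == -1) {
                Thread.yield(); // keep polling through the synchronized method
            }
            seen[0] = v;
        });
        reader.start();
        box.publish(value);
        try {
            reader.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return seen[0];
    }

    public static void main(String[] args) {
        System.out.println(awaitPublishedValue(7)); // prints 7
    }
}
```

If the reader accessed `ready` directly instead of through the synchronized method, the JMM would no longer guarantee that its loop terminates.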

volatile keyword:

According to the JMM, Java has a main memory, and each thread has its own working memory. A variable has one value in main memory, and a thread that uses the variable holds an identical copy in its working memory. Each time a thread starts working with the variable, it reads the value from main memory, and each time it finishes, it synchronizes the value from its working memory back to main memory.

volatile solves the visibility of variables among multiple threads, but it cannot guarantee atomicity.

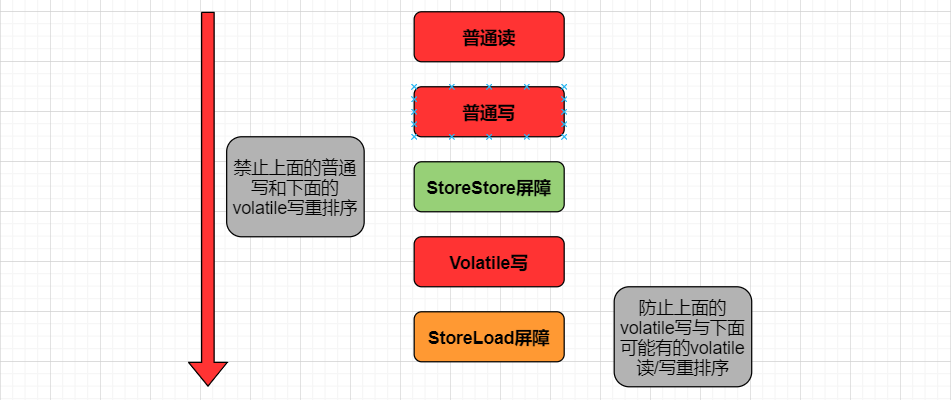

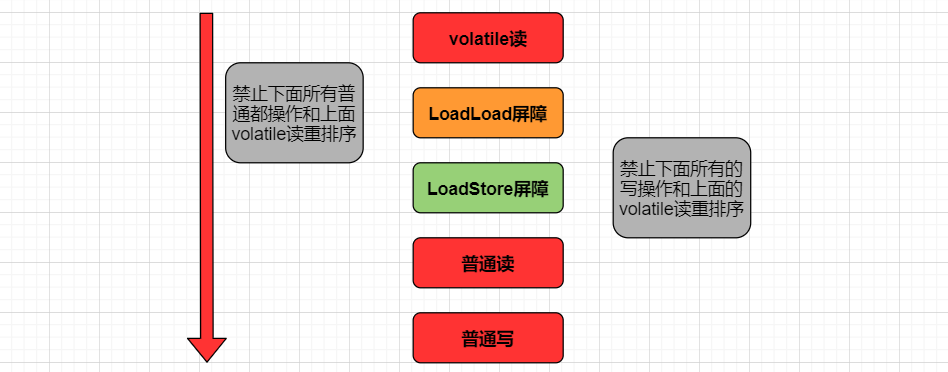

volatile achieves this by inserting memory barriers and prohibiting instruction reordering.

| When a volatile variable is written, a store barrier instruction is added after the write to flush the shared variable's value from local memory to main memory. |

| When a volatile variable is read, a load barrier instruction is added before the read so that the shared variable is read from main memory. |

Visibility - volatile write diagram:

Visibility - volatile reading diagram:

Note: volatile cannot guarantee atomicity. It is suitable only when both of the following conditions hold:

| Writes to the variable do not depend on its current value. |

| The variable does not take part in an invariant together with other variables. |
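The note that volatile does not guarantee atomicity can be illustrated with a counter, whose write (`count++`) depends on the current value and so violates the first condition. This is a sketch with hypothetical names (`VolatileCounterDemo`, `run`); how many updates the volatile counter loses varies from run to run, so no specific output is claimed for it.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical demo: volatile int versus AtomicInteger under concurrent increments.
final class VolatileCounterDemo {
    static volatile int volatileCount;
    static final AtomicInteger atomicCount = new AtomicInteger();

    // Returns {volatileCount, atomicCount} after all workers have finished.
    static int[] run(int threads, int perThread) {
        volatileCount = 0;
        atomicCount.set(0);
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < perThread; i++) {
                    volatileCount++;               // read, add, write: three steps, not atomic
                    atomicCount.incrementAndGet(); // a single atomic CAS-based step
                }
            });
            workers[t].start();
        }
        for (Thread w : workers) {
            try {
                w.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return new int[] {volatileCount, atomicCount.get()};
    }

    public static void main(String[] args) {
        int[] result = run(4, 100_000);
        // The atomic counter is always 400000; the volatile one may be lower.
        System.out.println("volatile: " + result[0] + ", atomic: " + result[1]);
    }
}
```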

Visibility: using volatile as a status flag:

    volatile boolean inited = false;

    // Thread 1
    context = loadContext();
    inited = true; // volatile write: publishes context as well

    // Thread 2
    while (!inited) {
        sleep();
    }
    doSomethingWithConfig(context);

Order:

| The Java memory model allows the compiler and the processor to reorder instructions. Reordering does not affect the result of single-threaded execution, but it can affect the correctness of concurrent multi-threaded execution. |

| Ordering can be guaranteed with volatile, synchronized, and Lock. synchronized and Lock ensure that only one thread executes the guarded code at a time, which preserves ordering. |
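A common place where reordering matters is lazy initialization with double-checked locking. The sketch below combines the tools named above: synchronized for mutual exclusion and volatile to prevent the reference from being published before the object is fully constructed. `LazyConfig` is a hypothetical class, and its `name` field is deliberately non-final so that safe publication depends on volatile.

```java
// Hypothetical demo: double-checked locking made safe with volatile.
final class LazyConfig {
    // Without volatile, the write to `instance` could be reordered before
    // the constructor's write to `name`, exposing a half-built object.
    private static volatile LazyConfig instance;

    private String name; // non-final on purpose for this illustration

    private LazyConfig(String name) {
        this.name = name;
    }

    static LazyConfig getInstance() {
        LazyConfig local = instance;        // first check, no locking
        if (local == null) {
            synchronized (LazyConfig.class) {
                local = instance;           // second check, under the lock
                if (local == null) {
                    local = new LazyConfig("default");
                    instance = local;       // volatile write publishes the object
                }
            }
        }
        return local;
    }

    String name() {
        return name;
    }

    public static void main(String[] args) {
        System.out.println(getInstance() == getInstance()); // prints true
        System.out.println(getInstance().name());           // prints default
    }
}
```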

Inherent ordering: the happens-before principle:

| Rule | Description |
| --- | --- |
| Program order rule | Within a single thread, an operation written earlier in the code happens-before an operation written later. |
| Lock rule | An unlock operation happens-before every subsequent lock operation on the same lock. |
| volatile variable rule | A write to a volatile variable happens-before every subsequent read of that variable. |
| Transitivity rule | If operation A happens-before operation B, and operation B happens-before operation C, then operation A happens-before operation C. |
| Thread start rule | The call to a Thread object's start() method happens-before every action in the started thread. |
| Thread interrupt rule | The call to a thread's interrupt() method happens-before the code in the interrupted thread detecting the interrupt. |
| Thread termination rule | All operations in a thread happen-before the detection of that thread's termination, for example through Thread.join() returning or Thread.isAlive() returning false. |
| Object finalization rule | The completion of an object's initialization happens-before the start of its finalize() method. |
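The thread start and thread termination rules can be seen in a small sketch that shares plain fields between two threads without volatile or locks. The class `HappensBeforeDemo` and its method are hypothetical names for this example.

```java
// Hypothetical demo: start() and join() provide happens-before edges.
final class HappensBeforeDemo {
    private int input;  // plain fields: no volatile, no locks
    private int output;

    static int doubleInWorker(int value) {
        HappensBeforeDemo d = new HappensBeforeDemo();
        d.input = value; // written before start(), so the worker sees it

        Thread worker = new Thread(() -> d.output = d.input * 2);
        worker.start();
        try {
            worker.join(); // everything the worker did is visible after join()
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return d.output;
    }

    public static void main(String[] args) {
        System.out.println(doubleInWorker(21)); // prints 42
    }
}
```

Combined with the program order and transitivity rules, the write to `input` happens-before the worker's read, and the worker's write to `output` happens-before the read after `join()`.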