Introduction

While reading "How I built a wind map with WebGL", I ran into framebuffers, so I looked up some material and tried them out on my own.

Framebuffer object

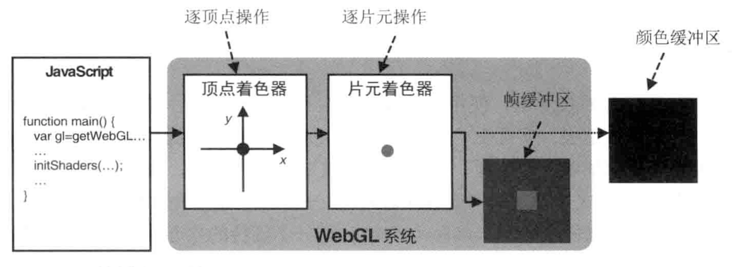

WebGL can use rendering results as textures. The mechanism for this is the framebuffer object.

By default, WebGL stores its final drawing result in the color buffer, and a framebuffer object can take the place of that color buffer. As shown in the figure above, anything drawn into a framebuffer is not displayed directly on the canvas. For this reason the technique is also called offscreen drawing.

Example

To verify this, the example draws a picture into the framebuffer. It then draws that result again, as a texture, onto the canvas.

Compared with the Use picture example it is based on, the logic changes mainly in the following areas:

- Data

- Framebuffer object

- Drawing

Data

Drawing into a framebuffer works the same way as normal drawing, except that the result is not displayed. It therefore needs its own drawing-area size, vertex coordinates and texture coordinates.

offscreenWidth: 200, // Width of the offscreen drawing area
offscreenHeight: 150, // Height of the offscreen drawing area

// Partial code omitted

// Vertex and texture coordinates for drawing into the framebuffer
this.offScreenBuffer = this.initBuffersForFramebuffer(gl);

// Partial code omitted

initBuffersForFramebuffer: function (gl) {
  // Rectangle spanning -0.5..0.5 in clip space, i.e. the central half
  // of the framebuffer's width and height
  const vertices = new Float32Array([
    0.5, 0.5, // upper right
    -0.5, 0.5, // upper left
    -0.5, -0.5, // lower left
    0.5, -0.5, // lower right
  ]);
  // Two triangles sharing the diagonal from vertex 0 to vertex 2
  const indices = new Uint16Array([0, 1, 2, 0, 2, 3]);
  const texCoords = new Float32Array([
    1.0, 1.0, // upper right corner
    0.0, 1.0, // upper left corner
    0.0, 0.0, // lower left corner
    1.0, 0.0, // lower right corner
  ]);
  const obj = {};
  obj.verticesBuffer = this.createBuffer(gl, gl.ARRAY_BUFFER, vertices);
  obj.indexBuffer = this.createBuffer(gl, gl.ELEMENT_ARRAY_BUFFER, indices);
  obj.texCoordsBuffer = this.createBuffer(gl, gl.ARRAY_BUFFER, texCoords);
  return obj;
},

createBuffer: function (gl, type, data) {
  const buffer = gl.createBuffer();
  gl.bindBuffer(type, buffer);
  gl.bufferData(type, data, gl.STATIC_DRAW);
  gl.bindBuffer(type, null);
  return buffer;
}

// Partial code omitted

The framebuffer pass could define its own vertex and fragment shaders. Here the same pair is shared for convenience.
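The rectangle data above depends on a single number, the half-extent of the quad in clip space. The sketch below uses a hypothetical helper, makeQuad, which is not part of the original example. It produces the same vertex, index and texture-coordinate arrays for any half-extent and keeps the corner order used in initBuffersForFramebuffer.

```javascript
// Hypothetical helper (not in the original example): builds the rectangle
// data used by initBuffersForFramebuffer for an arbitrary half-extent.
// halfExtent = 0.5 reproduces the arrays above; 1.0 spans all of clip space.
function makeQuad(halfExtent) {
  const h = halfExtent;
  // Corner order: upper right, upper left, lower left, lower right
  const vertices = new Float32Array([h, h, -h, h, -h, -h, h, -h]);
  // Two triangles sharing the diagonal from corner 0 to corner 2
  const indices = new Uint16Array([0, 1, 2, 0, 2, 3]);
  // Texture coordinates in the same corner order; (0, 0) is the lower left
  const texCoords = new Float32Array([1, 1, 0, 1, 0, 0, 1, 0]);
  return { vertices, indices, texCoords };
}

const half = makeQuad(0.5);
// Logs the same eight numbers as the vertices array in initBuffersForFramebuffer
console.log(Array.from(half.vertices));
```

Keeping the corner order fixed means the index and texture-coordinate arrays never change. Only the vertex positions scale with the half-extent.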

Framebuffer object

To draw into a framebuffer, you first need to create a framebuffer object.

// Framebuffer object
this.framebufferObj = this.createFramebufferObject(gl);

// Partial code omitted

createFramebufferObject: function (gl) {
  const framebuffer = gl.createFramebuffer();
  const texture = gl.createTexture();
  gl.bindTexture(gl.TEXTURE_2D, texture);
  // Allocate an empty texture the size of the offscreen drawing area;
  // null as the pixel data reserves storage without uploading anything
  gl.texImage2D(
    gl.TEXTURE_2D,
    0,
    gl.RGBA,
    this.offscreenWidth,
    this.offscreenHeight,
    0,
    gl.RGBA,
    gl.UNSIGNED_BYTE,
    null
  );
  // Flip the Y axis of image data on upload. This has no effect on the
  // empty texture above; it only affects later texImage2D/texSubImage2D calls
  gl.pixelStorei(gl.UNPACK_FLIP_Y_WEBGL, true);
  // Wrap mode along the horizontal texture coordinate s
  gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_S, gl.CLAMP_TO_EDGE);
  // Wrap mode along the vertical texture coordinate t
  gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_T, gl.CLAMP_TO_EDGE);
  // Filter used when the texture is magnified
  gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MAG_FILTER, gl.LINEAR);
  // Filter used when the texture is minified (no mipmaps, which together with
  // CLAMP_TO_EDGE keeps the non-power-of-two 200 x 150 texture valid in WebGL 1)
  gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MIN_FILTER, gl.LINEAR);
  framebuffer.texture = texture; // Keep a reference to the texture on the framebuffer
  // Attach the texture as the framebuffer's color attachment
  gl.bindFramebuffer(gl.FRAMEBUFFER, framebuffer);
  gl.framebufferTexture2D(
    gl.FRAMEBUFFER,
    gl.COLOR_ATTACHMENT0,
    gl.TEXTURE_2D,
    texture,
    0
  );
  // Check whether the configuration is correct
  const status = gl.checkFramebufferStatus(gl.FRAMEBUFFER);
  // Unbind first so that a failed check does not leave anything bound
  gl.bindFramebuffer(gl.FRAMEBUFFER, null);
  gl.bindTexture(gl.TEXTURE_2D, null);
  if (status !== gl.FRAMEBUFFER_COMPLETE) {
    console.log("Framebuffer object is incomplete: " + status.toString());
    return null;
  }
  return framebuffer;
}

// Partial code omitted

- createFramebuffer creates a framebuffer object, and deleteFramebuffer deletes one.

- After creation, a texture object must be assigned as the framebuffer's color attachment. The texture created in the example has three characteristics: 1. its width and height match those of the offscreen drawing area; 2. the last argument of texImage2D is null, which reserves blank storage for the texture without uploading any pixels; 3. the texture is stored on the framebuffer object itself, in the line framebuffer.texture = texture.

- bindFramebuffer binds the framebuffer to the gl.FRAMEBUFFER target. framebufferTexture2D then attaches the texture created earlier to the color attachment point gl.COLOR_ATTACHMENT0.

- checkFramebufferStatus checks whether the framebuffer object is configured correctly. It returns gl.FRAMEBUFFER_COMPLETE when the framebuffer can be rendered to.
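When the check fails, the example logs only a number, which is hard to interpret. The sketch below uses a hypothetical helper, describeFramebufferStatus, to turn that number into the name of the matching WebGL constant. It reads the enum values from the context object instead of hard-coding them. Only constants that exist on the given context are matched.

```javascript
// Names of the status values that gl.checkFramebufferStatus can return.
// FRAMEBUFFER_INCOMPLETE_MULTISAMPLE exists only on WebGL 2 contexts.
const FRAMEBUFFER_STATUS_NAMES = [
  "FRAMEBUFFER_COMPLETE",
  "FRAMEBUFFER_INCOMPLETE_ATTACHMENT",
  "FRAMEBUFFER_INCOMPLETE_MISSING_ATTACHMENT",
  "FRAMEBUFFER_INCOMPLETE_DIMENSIONS",
  "FRAMEBUFFER_UNSUPPORTED",
  "FRAMEBUFFER_INCOMPLETE_MULTISAMPLE",
];

// Hypothetical helper: maps a status code to the constant name defined on
// this particular context, or to a hex fallback when nothing matches.
function describeFramebufferStatus(gl, status) {
  for (const name of FRAMEBUFFER_STATUS_NAMES) {
    if (gl[name] !== undefined && gl[name] === status) {
      return name;
    }
  }
  return "UNKNOWN_STATUS_0x" + status.toString(16);
}
```

Inside createFramebufferObject, the log line could then print describeFramebufferStatus(gl, status) instead of status.toString().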

Drawing

The main difference in drawing is the switching of the render target:

// Partial code omitted

draw: function () {
  const gl = this.gl;
  const frameBuffer = this.framebufferObj;
  this.canvasObj.clear();
  const program = this.shaderProgram;
  gl.useProgram(program.program);
  // Binding the framebuffer makes it the render target
  gl.bindFramebuffer(gl.FRAMEBUFFER, frameBuffer);
  gl.viewport(0, 0, this.offscreenWidth, this.offscreenHeight);
  this.drawOffFrame(program, this.imgTexture);
  // Unbinding it makes the default color buffer (the canvas) the target again
  gl.bindFramebuffer(gl.FRAMEBUFFER, null);
  gl.viewport(0, 0, gl.canvas.width, gl.canvas.height);
  this.drawScreen(program, frameBuffer.texture);
},

// Partial code omitted

- First, bindFramebuffer makes the framebuffer the render target, and the viewport must be set to the offscreen size.

- After drawing into the framebuffer, unbind it to return to the normal default color buffer. The viewport must be set again, this time to the canvas size. Note that drawScreen receives frameBuffer.texture, the texture that holds the result drawn into the framebuffer.
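The bind / viewport / draw / unbind / viewport sequence in draw can be wrapped in a small helper so that the switch back to the canvas is never forgotten. This is a sketch with a hypothetical name, renderToTarget, and is not part of the original example. It uses try/finally so that the default framebuffer and the canvas viewport are restored even if the drawing callback throws.

```javascript
// Hypothetical helper: runs drawFn with `framebuffer` as the render target,
// then restores the default color buffer (the canvas) and its viewport.
function renderToTarget(gl, framebuffer, width, height, drawFn) {
  gl.bindFramebuffer(gl.FRAMEBUFFER, framebuffer);
  gl.viewport(0, 0, width, height);
  try {
    drawFn();
  } finally {
    // Switch back so that later draws land on the canvas again
    gl.bindFramebuffer(gl.FRAMEBUFFER, null);
    gl.viewport(0, 0, gl.canvas.width, gl.canvas.height);
  }
}
```

With this helper, the first half of draw would become renderToTarget(gl, frameBuffer, this.offscreenWidth, this.offscreenHeight, () => this.drawOffFrame(program, this.imgTexture)). The drawScreen call that follows stays unchanged.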

Observations and thoughts

The related examples I found online felt complex. The following observations and thoughts came up while I was trying to simplify them.

Is framebuffer.texture a built-in property or one added by hand?

When the framebuffer object is created, there is this line: framebuffer.texture = texture. Does the framebuffer object have a texture property of its own?

Logging the object right after it is created shows no such property, so it must be added by hand. This matches the WebGL API: WebGLFramebuffer is an opaque handle with no texture property. framebuffer.texture is just an ordinary JavaScript property that keeps the texture reachable.
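Because the property is only a convenience, the attached texture can also be looked up from WebGL itself. The sketch below uses gl.getFramebufferAttachmentParameter with gl.FRAMEBUFFER_ATTACHMENT_OBJECT_NAME, which returns the WebGLTexture attached at the given attachment point. The helper name getColorAttachmentTexture is hypothetical. The helper also assumes that the default framebuffer was bound before the call, because it binds null when it finishes.

```javascript
// Hypothetical helper: asks WebGL which texture is attached to
// COLOR_ATTACHMENT0 of `framebuffer`, instead of reading framebuffer.texture.
// Assumes the default framebuffer (null) was bound before the call.
function getColorAttachmentTexture(gl, framebuffer) {
  gl.bindFramebuffer(gl.FRAMEBUFFER, framebuffer);
  const texture = gl.getFramebufferAttachmentParameter(
    gl.FRAMEBUFFER,
    gl.COLOR_ATTACHMENT0,
    gl.FRAMEBUFFER_ATTACHMENT_OBJECT_NAME
  );
  gl.bindFramebuffer(gl.FRAMEBUFFER, null);
  return texture;
}
```

For the example's framebuffer, this should return the same texture object that framebuffer.texture refers to.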

When does framebuffer.texture get its content?

When the framebuffer object is initialized, its texture is blank. Yet the final result shows that the texture has content after the framebuffer pass. So when does framebuffer.texture get that content?

In the drawing logic, the texture-related statements are:

gl.activeTexture(gl.TEXTURE0);
gl.bindTexture(gl.TEXTURE_2D, texture);
gl.uniform1i(program.uSampler, 0);
gl.drawElements(gl.TRIANGLES, 6, gl.UNSIGNED_SHORT, 0);

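While the framebuffer is bound, gl.drawElements writes its output into the framebuffer's color attachment instead of the canvas. That attachment is the blank texture created earlier, and framebuffer.texture points to the same texture object, so after the offscreen pass it holds the drawn picture. During this pass, the texture bound for sampling is the source picture (this.imgTexture), not framebuffer.texture. Sampling from the texture that is currently being rendered into would be a feedback loop, which WebGL rejects.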
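One way to confirm that the offscreen pass really wrote into the texture is to read the framebuffer back with gl.readPixels. The sketch below uses two hypothetical helpers. It reads in RGBA / UNSIGNED_BYTE, a combination WebGL always accepts for an RGBA8 color attachment. WebGL initializes a texture allocated with null data to transparent black, so before the draw every alpha byte is 0. Counting non-zero alpha values therefore shows how many pixels were drawn, assuming the picture is opaque and the framebuffer was not cleared to a non-zero alpha.

```javascript
// Hypothetical helper: reads every pixel of `framebuffer` as RGBA bytes.
// Rows come back bottom row first, as is usual for readPixels.
function readFramebufferPixels(gl, framebuffer, width, height) {
  const pixels = new Uint8Array(width * height * 4); // 4 bytes per RGBA pixel
  gl.bindFramebuffer(gl.FRAMEBUFFER, framebuffer);
  gl.readPixels(0, 0, width, height, gl.RGBA, gl.UNSIGNED_BYTE, pixels);
  gl.bindFramebuffer(gl.FRAMEBUFFER, null);
  return pixels;
}

// Hypothetical helper: counts pixels whose alpha byte is non-zero.
function countDrawnPixels(pixels) {
  let count = 0;
  for (let i = 3; i < pixels.length; i += 4) {
    if (pixels[i] !== 0) count++;
  }
  return count;
}
```

Called after drawOffFrame with the example's 200 x 150 size, a non-zero count would show that the texture now has content.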

Why doesn't the final picture cover the whole canvas?

In the final on-screen pass, the vertices span the whole canvas and the texture coordinates span the whole texture. So why doesn't the picture fill the whole canvas?

The texture used in the final pass is the framebuffer's rendering result. In the framebuffer pass, the vertices (±0.5) cover only the central half of the buffer's width and height, which is a quarter of its area. The whole framebuffer, including the empty border around the picture, becomes the texture and is stretched over the canvas. As a result, the picture occupies only the middle of the canvas, which is the expected, correct result.
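The sketch below is a worked version of that argument. It computes where the picture lands on the canvas. In the offscreen pass, a clip coordinate c maps to the pixel position (c + 1) / 2 × size. The final pass stretches the whole framebuffer texture over the canvas, so the same fractions carry over to the canvas. The helper name pictureRectOnCanvas and the 400 x 300 canvas size are assumptions for illustration, since the example does not state its canvas size.

```javascript
// Hypothetical helper: the canvas rectangle (in pixels, origin at the lower
// left as in WebGL) covered by the picture when the offscreen quad spans
// [-halfExtent, halfExtent] in clip space and the framebuffer texture is
// stretched over the whole canvas.
function pictureRectOnCanvas(halfExtent, canvasWidth, canvasHeight) {
  // Clip coordinate -> fraction of the buffer -> canvas pixels
  const toPixels = (clip, size) => ((clip + 1) / 2) * size;
  return {
    left: toPixels(-halfExtent, canvasWidth),
    right: toPixels(halfExtent, canvasWidth),
    bottom: toPixels(-halfExtent, canvasHeight),
    top: toPixels(halfExtent, canvasHeight),
  };
}

// The example's ±0.5 quad on an assumed 400 x 300 canvas
console.log(pictureRectOnCanvas(0.5, 400, 300));
// → { left: 100, right: 300, bottom: 75, top: 225 }
```

With a half-extent of 1.0, the same function returns the full canvas, from 0 to 400 horizontally and 0 to 300 vertically.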

In the example that fills the canvas, the framebuffer vertices are simply adjusted to span the entire buffer (from -1.0 to 1.0).