Section I: Overview of JDBC

Section II: JDBC Connecting to Database

Section III: Use Statement interface to add, delete and change operations

Section IV: Use PreparedStatement interface to add, delete and change

Section 5: Resultset result set

Section VI: Processing large data objects

Section 7: Calling stored procedures using the CallableStatement interface

Section 8: Using metadata to analyze databases

Section 9: JDBC Transaction Processing Transactions

Here's the sixth part, and the rest can be accessed through the links above.

8. Processing Big Data Objects

It mainly studies from the following two aspects.

- Processing CLOB data

- Processing BLOG data

Large data object processing mainly includes two types of fields: CLOB (Character Large Object) and BLOB (binary large object); CLOB can store large character data objects, such as novels; BLOB can store binary large data objects, such as pictures, movies, music;

Preparations are now under way.

First of all, we learn the CLOB field: we are operating on big character data, so we need to use the knowledge of IO stream and file operation File class. Briefly mention this IO stream, the first contact was in Hangzhou Dane internship, when we made a fishing game, we used the image IO stream at that time. At that time, we simply mastered the image IO stream, but did not have a deep study of the IO stream. Here is a systematic study of my recent basic knowledge.

Specific knowledge learning can be seen in another category I wrote. Systematic Learning of J2SE: IO Flow and File Class This part of the knowledge is not introduced here too much.

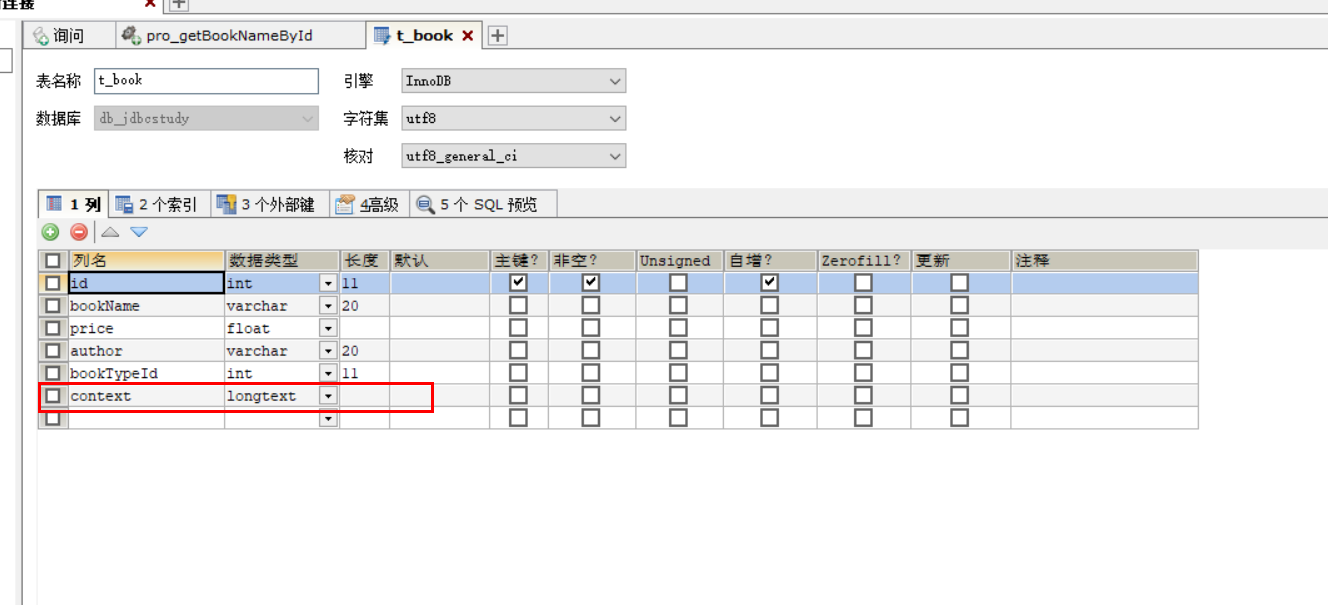

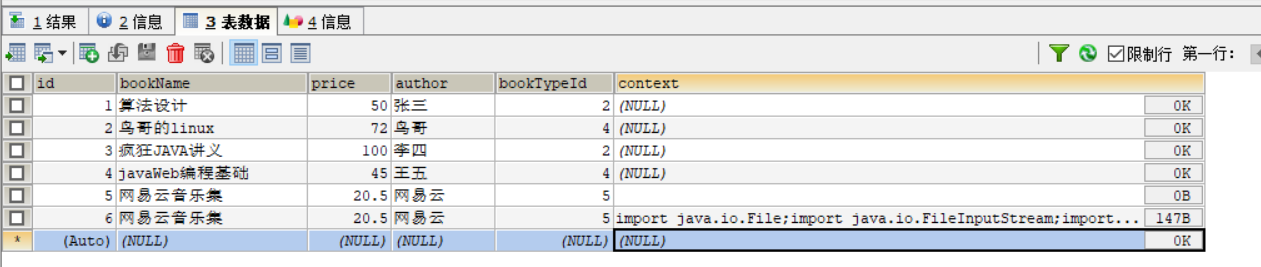

Firstly, I added another column to the t_book table I first created, which serves as a summary of the article.

Pictured This data type stores large character data.

This data type stores large character data.

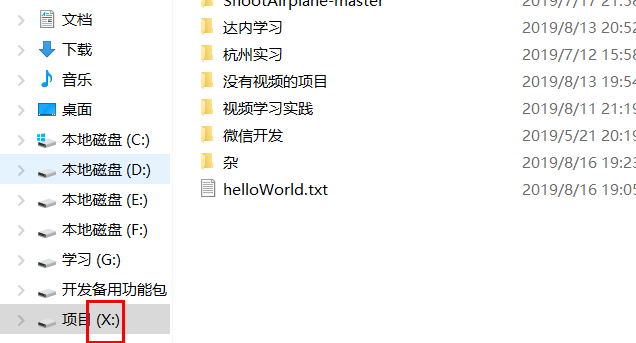

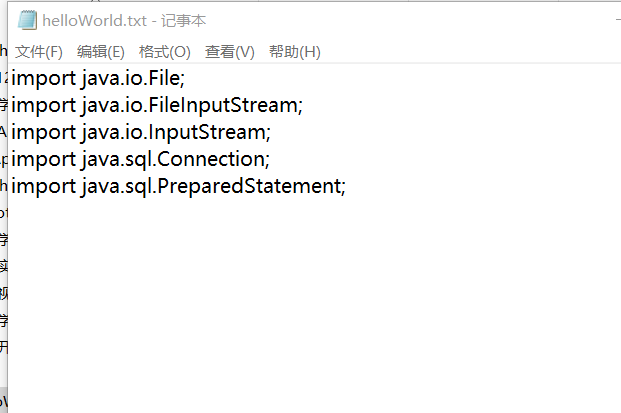

Next, select a hard disk and create a text text file that you can put in without putting anything in.

Text text I created on my project learning disk, as shown in Hello World This is the path, I won't find it here, but remember, to change it to /, here is wrong!.

This is the path, I won't find it here, but remember, to change it to /, here is wrong!.

Then the following work is on the code side.

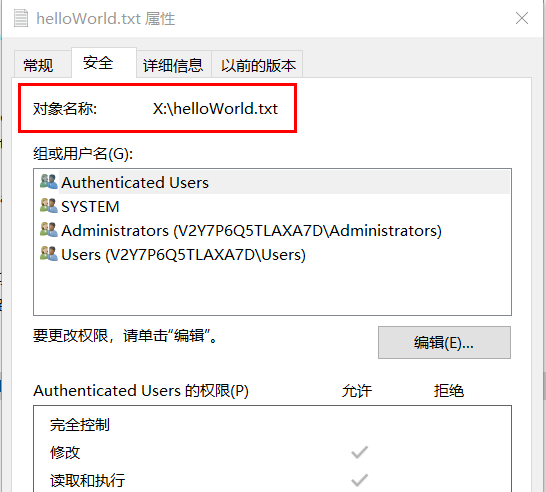

With the addition of columns in the table, the Book class also adds an attribute with this, and overrides the construction method.

Compare the specific differences with the previous one.

package model;

import java.io.File;

/**

* The entity of a book has all the attributes of a book.

* @author Administrator

*

*/

public class Book {

private int id;

private String bookName;

private float price;

private String author;

private int bookTypeId;

private File context;

//Constructing method (shortcut key writing)

public Book(String bookName, float price, String author, int bookTypeId) {

super();

this.bookName = bookName;

this.price = price;

this.author = author;

this.bookTypeId = bookTypeId;

}

//Construction Method of Heavy Load

public Book(int id, String bookName, float price, String author, int bookTypeId) {

super();

this.id = id;

this.bookName = bookName;

this.price = price;

this.author = author;

this.bookTypeId = bookTypeId;

}

//Constructing learning overload for Chapter 6

public Book(String bookName, float price, String author, int bookTypeId, File context) {

super();

this.bookName = bookName;

this.price = price;

this.author = author;

this.bookTypeId = bookTypeId;

this.context = context;

}

//All of the following code is implemented by shortcut keys

public int getId() {

return id;

}

public void setId(int id) {

this.id = id;

}

public String getBookName() {

return bookName;

}

public void setBookName(String bookName) {

this.bookName = bookName;

}

public float getPrice() {

return price;

}

public void setPrice(float price) {

this.price = price;

}

public String getAuthor() {

return author;

}

public void setAuthor(String author) {

this.author = author;

}

public int getBookTypeId() {

return bookTypeId;

}

public void setBookTypeId(int bookTypeId) {

this.bookTypeId = bookTypeId;

}

public File getContext() {

return context;

}

public void setContext(File context) {

this.context = context;

}

@Override

public String toString() {

return "Book [id=" + id + ", bookName=" + bookName + ", price=" + price + ", author=" + author + ", bookTypeId="

+ bookTypeId + "]";

}

}

A newly defined property is File-type

A newly defined property is File-type

Then I'll quote what I've learned before. Section IV Learning The only change in the knowledge of writing data to a database is to write in a large character data.

package chap6_sec01;

import java.io.File;

import java.io.FileInputStream;

import java.io.InputStream;

import java.sql.Connection;

import java.sql.PreparedStatement;

import model.Book;

import util.DbUtil;

public class Demo1 {

private static DbUtil dbUtil=new DbUtil();

private static int addBook(Book book) throws Exception{

Connection con=null;

con=dbUtil.getCon();//Get the connection

//Data add sql statement, then dig five pits, five pits represent five attributes in the table

String sql="insert into t_book values(null,?,?,?,?,?)";

PreparedStatement pstmt= con.prepareStatement(sql);

//Here are five pits to fill.

pstmt.setString(1, book.getBookName());

pstmt.setFloat(2, book.getPrice());

pstmt.setString(3, book.getAuthor());

pstmt.setInt(4, book.getBookTypeId());

File context=book.getContext();//get files

InputStream inputStream=new FileInputStream(context);//Define an input stream

//The last parameter is how often to fill the fifth pit.

pstmt.setAsciiStream(5, inputStream, context.length());//Fill in the fifth pit with values

//The value returned is the number of items of data affected by the table after the sql statement is executed

int result=pstmt.executeUpdate();

dbUtil.close(pstmt, con);

return result;

}

public static void main(String[] args) {

File context=new File("x:/helloWorld.txt");

Book book=new Book("Netease Cloud Music Collection", 20.5f, "Netease Cloud", 5, context);

try {

int result = addBook(book);

System.out.println("Information Added Successfully");

} catch (Exception e) {

// TODO Auto-generated catch block

e.printStackTrace();

System.out.println("Failure to add information");

}

}

}

Look at the changes:

- The introduction of IO stream, in the main function to get the text text in the text, and then pass to the addBook function.

- The addBook function introduces the knowledge of IO stream and File class, and digs an extra hole in the SQL statement, and writes the data of the last hole into it, because the data of big character type is mostly the length of the data to be written in when we write, so that the storage space can be reasonably applied and the dead space defined can also be solved. Not enough.

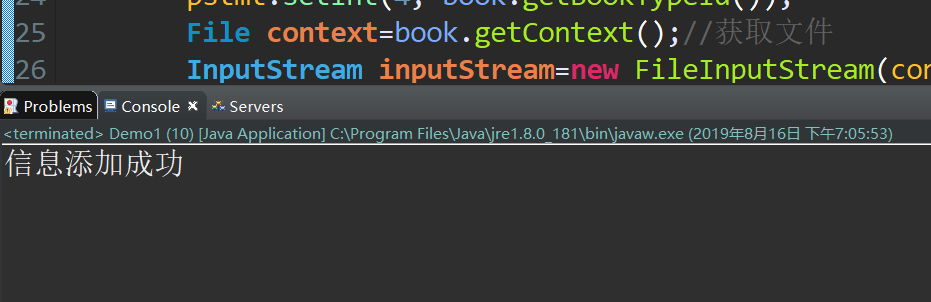

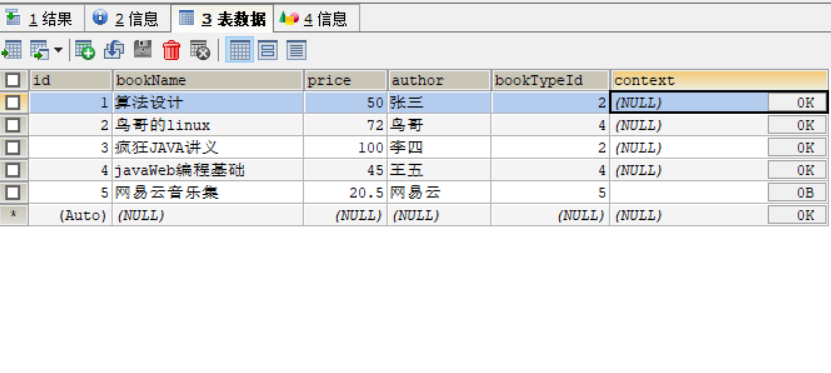

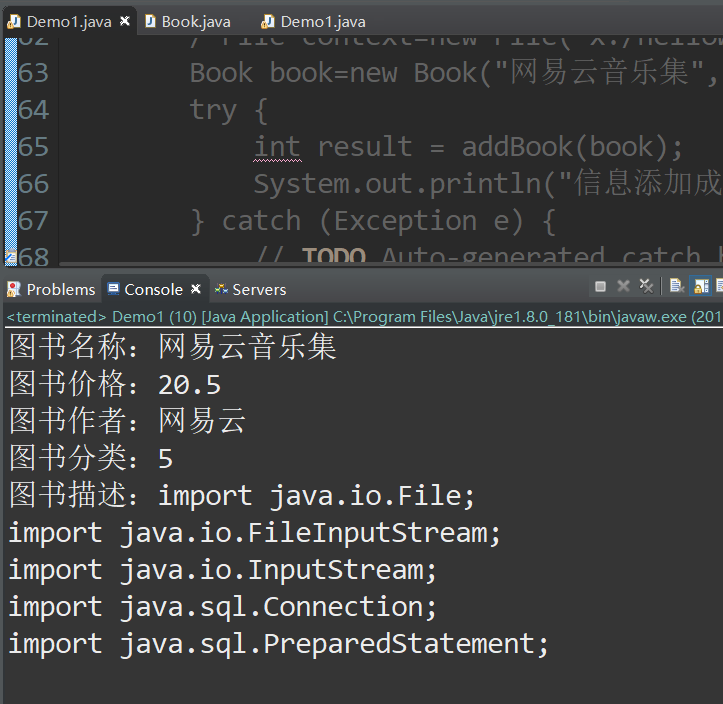

results of enforcement

Why is the content of the inserted information empty? Because I didn't write anything in when I created text text text, we just need to write something in text text text and execute it again to see the effect.

Why is the content of the inserted information empty? Because I didn't write anything in when I created text text text, we just need to write something in text text text and execute it again to see the effect.

Simply write something in

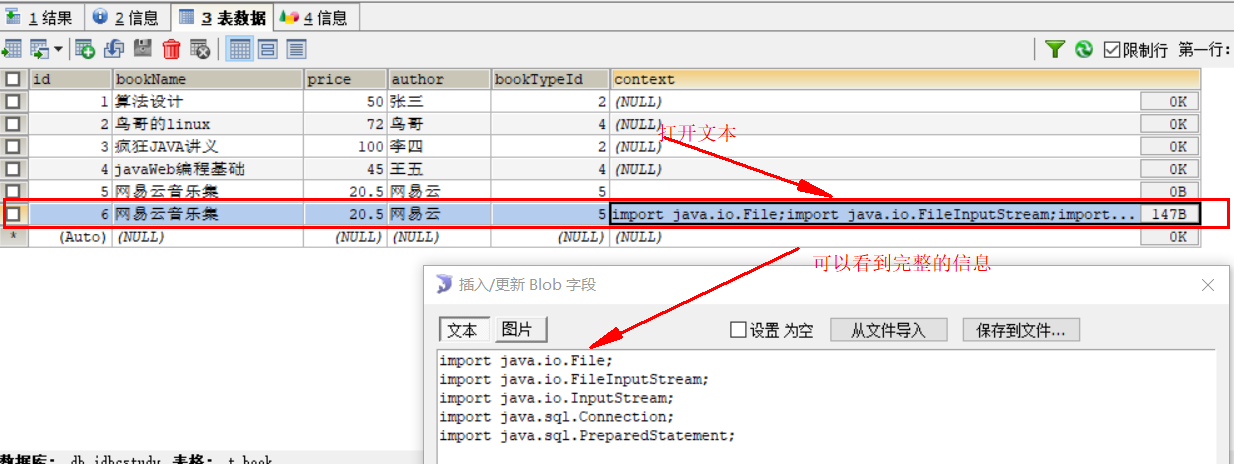

Look at the database after running the code again However, it is worth noting that it is not allowed to execute code stored in text files. I write it in accordance with the English keyboard. Long text does not support Chinese text.

However, it is worth noting that it is not allowed to execute code stored in text files. I write it in accordance with the English keyboard. Long text does not support Chinese text.

The above is inserted into the database, and the following is how to read the large character type data in the database.

Let's take a look at what I've done. Comparing with the above, we can see that we read correctly. This is read from the database, not from the text text file.

Comparing with the above, we can see that we read correctly. This is read from the database, not from the text text file.

Look at the code below:

package chap6_sec01;

import java.io.File;

import java.io.FileInputStream;

import java.io.InputStream;

import java.sql.Clob;

import java.sql.Connection;

import java.sql.PreparedStatement;

import java.sql.ResultSet;

import model.Book;

import util.DbUtil;

public class Demo1 {

private static DbUtil dbUtil=new DbUtil();

private static int addBook(Book book) throws Exception{

Connection con=null;

con=dbUtil.getCon();//Get the connection

//Data add sql statement, then dig five pits, five pits represent five attributes in the table

String sql="insert into t_book values(null,?,?,?,?,?)";

PreparedStatement pstmt= con.prepareStatement(sql);

//Here are five pits to fill.

pstmt.setString(1, book.getBookName());

pstmt.setFloat(2, book.getPrice());

pstmt.setString(3, book.getAuthor());

pstmt.setInt(4, book.getBookTypeId());

File context=book.getContext();//get files

InputStream inputStream=new FileInputStream(context);//Define an input stream

//The last parameter is how often to fill the fifth pit.

pstmt.setAsciiStream(5, inputStream, context.length());//Fill in the fifth pit with values

//The value returned is the number of items of data affected by the table after the sql statement is executed

int result=pstmt.executeUpdate();

dbUtil.close(pstmt, con);

return result;

}

public static void getBook(int id)throws Exception{

Connection con=dbUtil.getCon();

String sql="select * from t_book where id=?";

PreparedStatement pstmt=con.prepareStatement(sql);

pstmt.setInt(1, id);

//What is used is the learning of the result set in Section 5.

ResultSet rs=pstmt.executeQuery();

if(rs.next()){

String bookName=rs.getString("bookName");

Float price=rs.getFloat("price");

String author=rs.getString("author");

int bookTypeId=rs.getInt("bookTypeId");

Clob c=rs.getClob("context");

String context=c.getSubString(1, (int)c.length());

System.out.println("Book Name:"+bookName);

System.out.println("Book price:"+price);

System.out.println("Book authors:"+author);

System.out.println("Classification of books:"+bookTypeId);

System.out.println("Book Description:"+context);

}

dbUtil.close(pstmt, con);//Close related operations and connections

}

public static void main(String[] args) throws Exception {

/*File context=new File("x:/helloWorld.txt");

Book book=new Book("Netease Cloud Music Collection, 20.5f, Netease Cloud, 5, context;

try {

int result = addBook(book);

System.out.println("Successful information addition.

} catch (Exception e) {

// TODO Auto-generated catch block

e.printStackTrace();

System.out.println("Failed to add information.

}*/

getBook(6);

}

}

Looking at the function of getBook, the rest is the same as above. I'm just lazy annotation.

Then, based on this, we can read all the data in the database with id=6. Still the same, why query through id, because the ID here is unique, ID is the primary key, so the results of the query is unique, we can only query all the information of a data.

Still the same, why query through id, because the ID here is unique, ID is the primary key, so the results of the query is unique, we can only query all the information of a data.

Clob is used above. I read this article for a while. JAVA Handles Clob Large Objects You can also use IO streams for processing, but it's cumbersome, and then use the encapsulated Clob directly.

========================================================================================

Below is the BLOG part, mainly for the storage of pictures, movies, music, these information stored in the database, the essence is to deal with binary content.

Preparations, with a brief introduction to the book on top, and illustrations on the book below, so as usual, I'm going to change the tables in the database, add another column to the table, and then change the entity Book, and add another attribute to it.

I checked the limit size of this binary storage on the internet. It can save several G's, so I'm sure it's enough. Change the table and add a new column.

Change the table and add a new column.

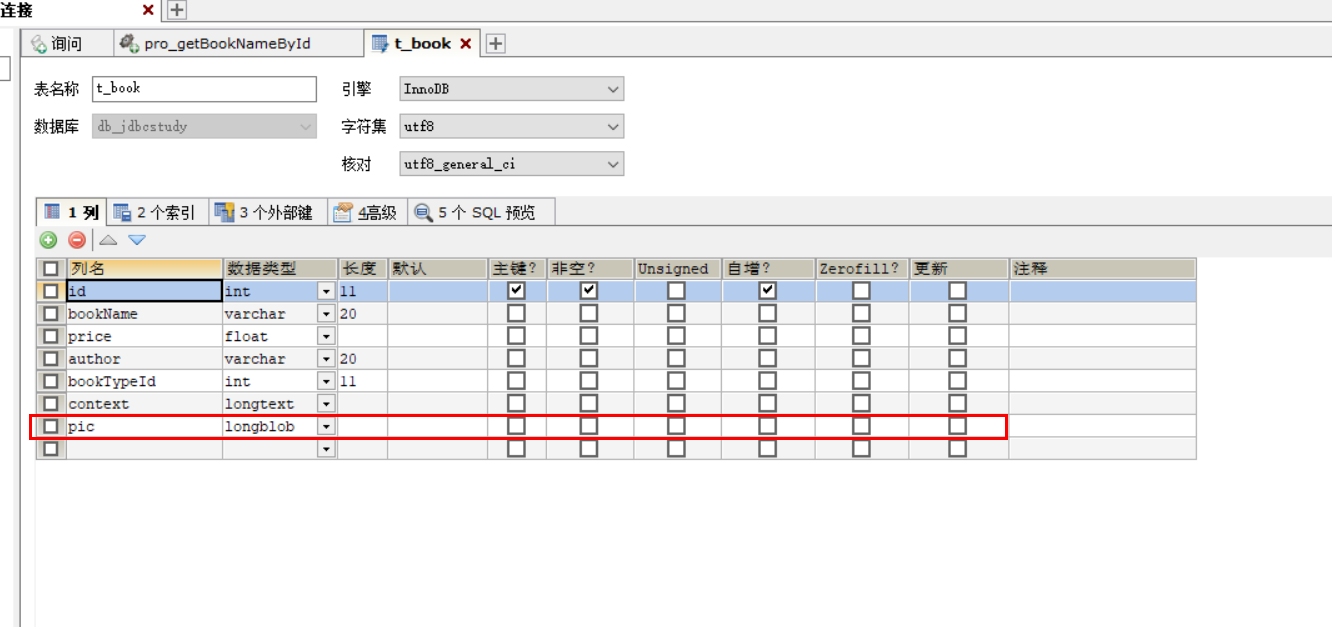

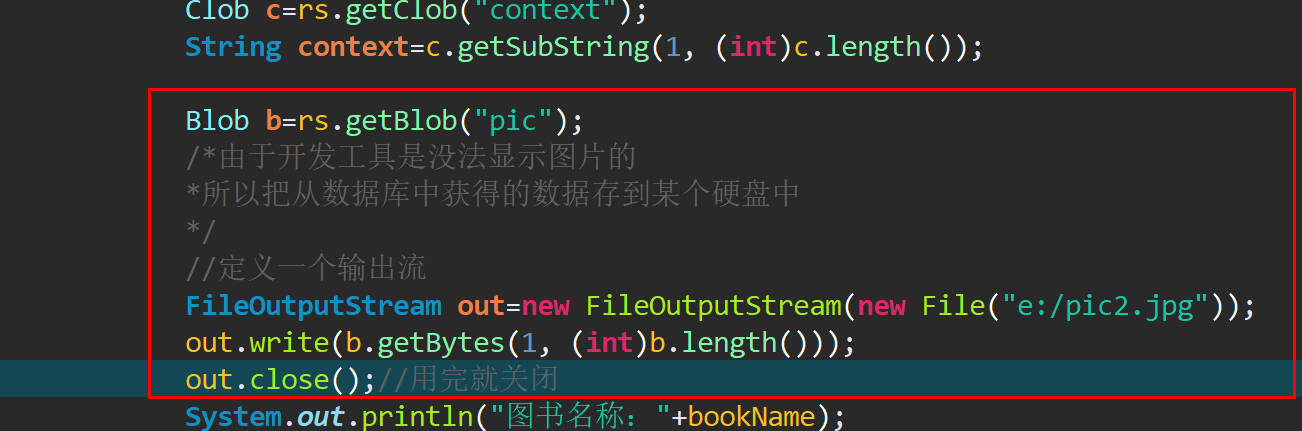

Then put a picture on the X disk. Recently, I've been fascinated by Big Q Baby. I'll take a look at it. It's quite lovely.

Recently, I've been fascinated by Big Q Baby. I'll take a look at it. It's quite lovely.

Then we look at the code.

As usual, first change the Book class, add new attributes, and override the construction method.

pa inserts the code slice ckage model here;

import java.io.File;

/**

- The entity of a book has all the attributes of a book.

- @author Administrator

*/

public class Book {

private int id;

private String bookName;

private float price;

private String author;

private int bookTypeId;

private File context;

private File pic;

//Constructing method (shortcut key writing)

public Book(String bookName, float price, String author, int bookTypeId) {

super();

this.bookName = bookName;

this.price = price;

this.author = author;

this.bookTypeId = bookTypeId;

}

//Construction Method of Heavy Load

public Book(int id, String bookName, float price, String author, int bookTypeId) {

super();

this.id = id;

this.bookName = bookName;

this.price = price;

this.author = author;

this.bookTypeId = bookTypeId;

}

//Constructing learning overload for Chapter 6

public Book(String bookName, float price, String author, int bookTypeId, File context) {

super();

this.bookName = bookName;

this.price = price;

this.author = author;

this.bookTypeId = bookTypeId;

this.context = context;

}

//Overload of BLOG

public Book(String bookName, float price, String author, int bookTypeId, File context, File pic) {

super();

this.bookName = bookName;

this.price = price;

this.author = author;

this.bookTypeId = bookTypeId;

this.context = context;

this.pic = pic;

}

//All of the following code is implemented by shortcut keys

public int getId() {

return id;

}

public void setId(int id) {

this.id = id;

}

public String getBookName() {

return bookName;

}

public void setBookName(String bookName) {

this.bookName = bookName;

}

public float getPrice() {

return price;

}

public void setPrice(float price) {

this.price = price;

}

public String getAuthor() {

return author;

}

public void setAuthor(String author) {

this.author = author;

}

public int getBookTypeId() {

return bookTypeId;

}

public void setBookTypeId(int bookTypeId) {

this.bookTypeId = bookTypeId;

}

public File getContext() {

return context;

}

public void setContext(File context) {

this.context = context;

}

public File getPic() {

return pic;

}

public void setPic(File pic) {

this.pic = pic;

}

@Override

public String toString() {

return "Book [id=" + id + ", bookName=" + bookName + ", price=" + price + ", author=" + author + ", bookTypeId="

+ bookTypeId + "]";

}

}

With the new attributes, new constructions, set and get, we can now write the main code, copy the learning code directly, and make a slight change.

package chap6_sec02;

import java.io.File;

import java.io.FileInputStream;

import java.io.InputStream;

import java.sql.Clob;

import java.sql.Connection;

import java.sql.PreparedStatement;

import java.sql.ResultSet;

import model.Book;

import util.DbUtil;

public class Demo1 {

private static DbUtil dbUtil=new DbUtil();

private static int addBook(Book book) throws Exception{

Connection con=null;

con=dbUtil.getCon();//Get the connection

//Data add sql statement, then dig five pits, five pits represent five attributes in the table

String sql="insert into t_book values(null,?,?,?,?,?,?)";

PreparedStatement pstmt= con.prepareStatement(sql);

//Here are five pits to fill.

pstmt.setString(1, book.getBookName());

pstmt.setFloat(2, book.getPrice());

pstmt.setString(3, book.getAuthor());

pstmt.setInt(4, book.getBookTypeId());

File context=book.getContext();//get files

InputStream inputStream=new FileInputStream(context);//Define an input stream

//The last parameter is how often to fill the fifth pit.

pstmt.setAsciiStream(5, inputStream, context.length());//Fill in the fifth pit with values

File pic=book.getPic();

InputStream inputStream2=new FileInputStream(pic);//Define an input stream

pstmt.setBinaryStream(6, inputStream2, pic.length());

//The value returned is the number of items of data affected by the table after the sql statement is executed

int result=pstmt.executeUpdate();

dbUtil.close(pstmt, con);

return result;

}

public static void getBook(int id)throws Exception{

Connection con=dbUtil.getCon();

String sql="select * from t_book where id=?";

PreparedStatement pstmt=con.prepareStatement(sql);

pstmt.setInt(1, id);

//What is used is the learning of the result set in Section 5.

ResultSet rs=pstmt.executeQuery();

if(rs.next()){

String bookName=rs.getString("bookName");

Float price=rs.getFloat("price");

String author=rs.getString("author");

int bookTypeId=rs.getInt("bookTypeId");

Clob c=rs.getClob("context");

String context=c.getSubString(1, (int)c.length());

System.out.println("Book Name:"+bookName);

System.out.println("Book price:"+price);

System.out.println("Book authors:"+author);

System.out.println("Classification of books:"+bookTypeId);

System.out.println("Book Description:"+context);

}

dbUtil.close(pstmt, con);//Close related operations and connections

}

public static void main(String[] args) throws Exception {

File context=new File("x:/helloWorld.txt");

File pic=new File("x:/pic.jpg");

Book book=new Book("Netease Cloud Music Collection", 20.5f, "Netease Cloud", 5, context,pic);

try {

int result = addBook(book);

System.out.println("Information Added Successfully");

} catch (Exception e) {

e.printStackTrace();

System.out.println("Failure to add information");

}

}

}

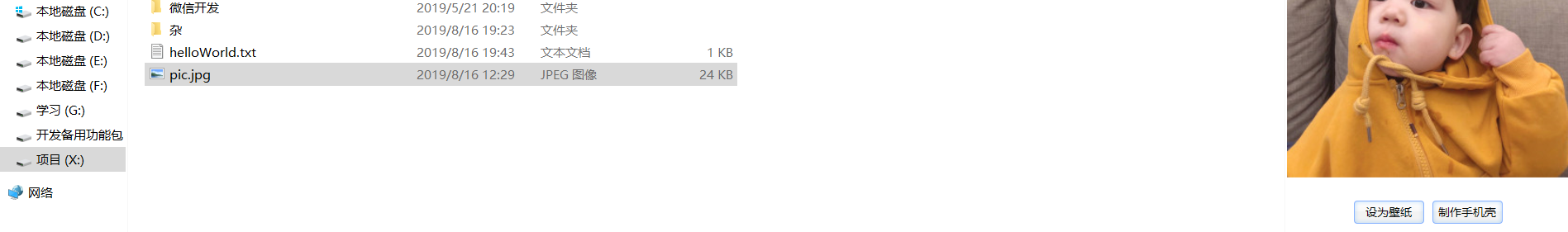

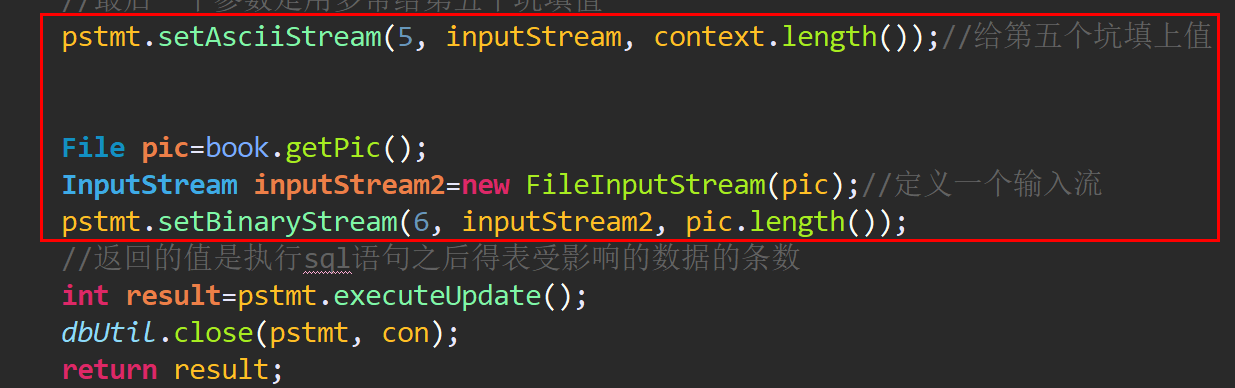

The main change is in the addBook class, and then a new File is written in the main function as an introduction to the picture.

The changes are as follows, in addBook Changes in main

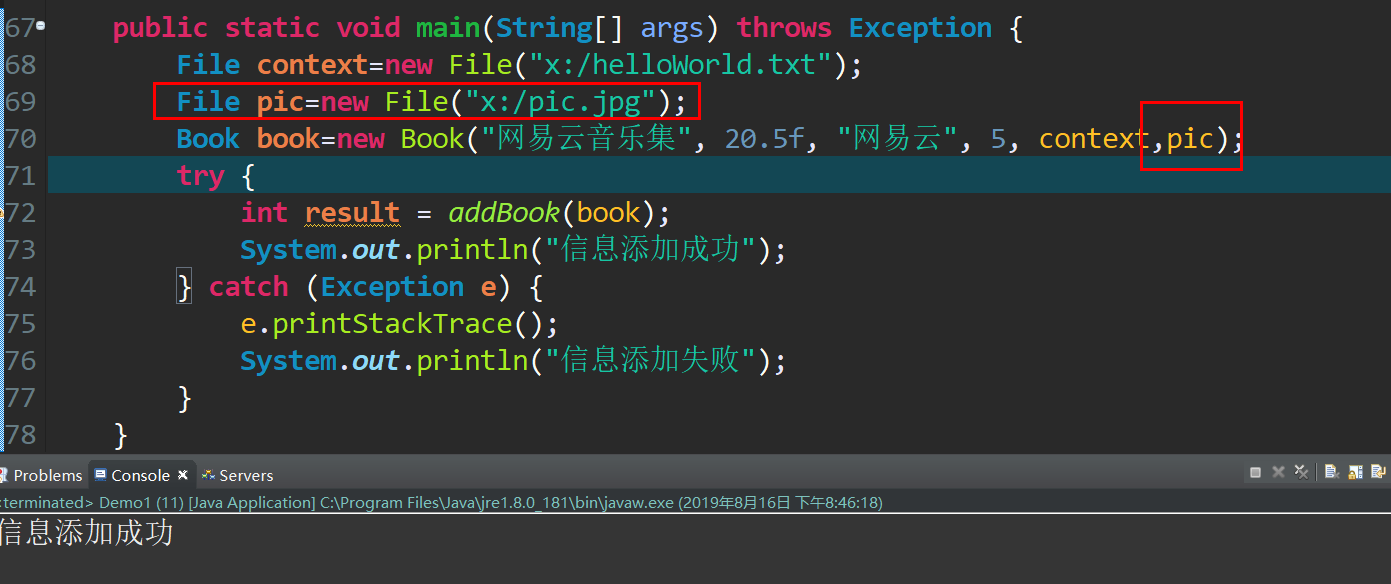

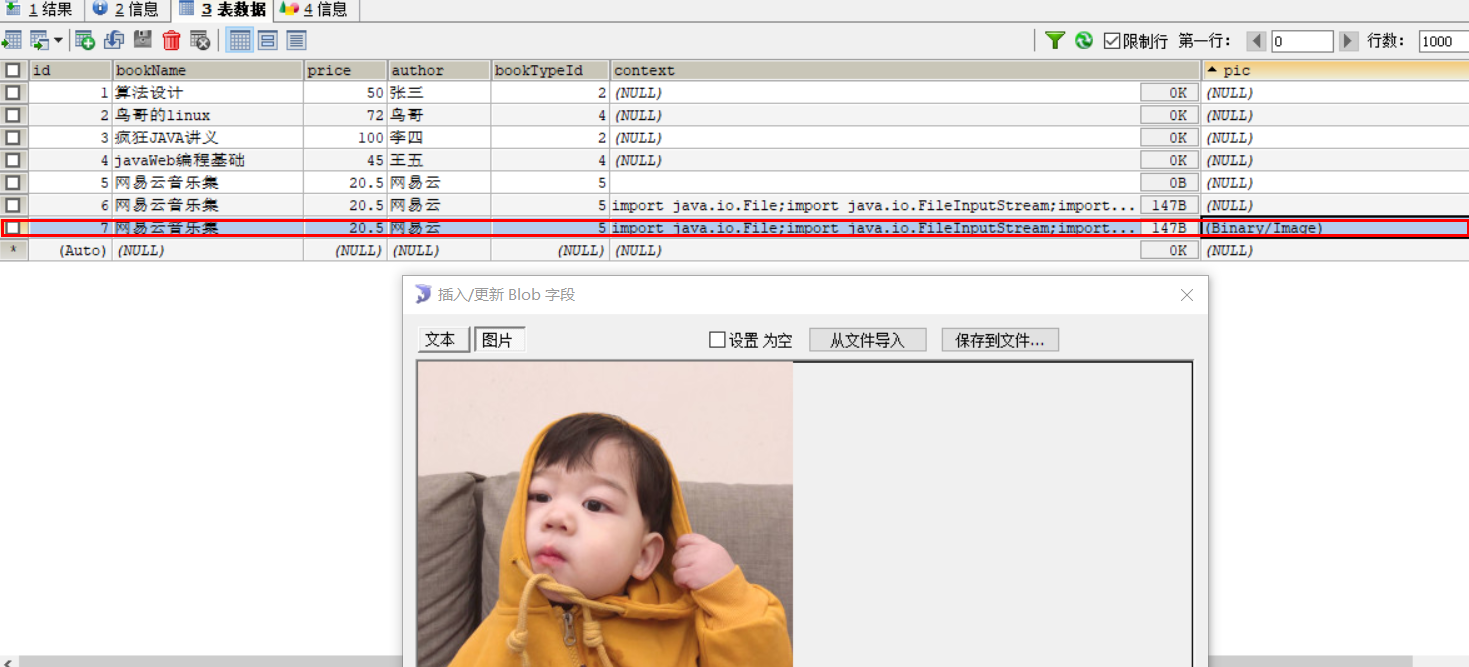

Changes in main After execution, go to the database to check, the effect is as follows, or you can see our lovely big Q treasure.

After execution, go to the database to check, the effect is as follows, or you can see our lovely big Q treasure. As usual, when you go in, you go in. How can you get it out of the database?

As usual, when you go in, you go in. How can you get it out of the database?

Let's look at the code

package chap6_sec02;

import java.io.File;

import java.io.FileInputStream;

import java.io.FileOutputStream;

import java.io.InputStream;

import java.sql.Blob;

import java.sql.Clob;

import java.sql.Connection;

import java.sql.PreparedStatement;

import java.sql.ResultSet;

import model.Book;

import util.DbUtil;

public class Demo1 {

private static DbUtil dbUtil=new DbUtil();

private static int addBook(Book book) throws Exception{

Connection con=null;

con=dbUtil.getCon();//Get the connection

//Data add sql statement, then dig five pits, five pits represent five attributes in the table

String sql="insert into t_book values(null,?,?,?,?,?,?)";

PreparedStatement pstmt= con.prepareStatement(sql);

//Here are five pits to fill.

pstmt.setString(1, book.getBookName());

pstmt.setFloat(2, book.getPrice());

pstmt.setString(3, book.getAuthor());

pstmt.setInt(4, book.getBookTypeId());

File context=book.getContext();//get files

InputStream inputStream=new FileInputStream(context);//Define an input stream

//The last parameter is how often to fill the fifth pit.

pstmt.setAsciiStream(5, inputStream, context.length());//Fill in the fifth pit with values

File pic=book.getPic();

InputStream inputStream2=new FileInputStream(pic);//Define an input stream

pstmt.setBinaryStream(6, inputStream2, pic.length());

//The value returned is the number of items of data affected by the table after the sql statement is executed

int result=pstmt.executeUpdate();

dbUtil.close(pstmt, con);

return result;

}

public static void getBook(int id)throws Exception{

Connection con=dbUtil.getCon();

String sql="select * from t_book where id=?";

PreparedStatement pstmt=con.prepareStatement(sql);

pstmt.setInt(1, id);

//What is used is the learning of the result set in Section 5.

ResultSet rs=pstmt.executeQuery();

if(rs.next()){

String bookName=rs.getString("bookName");

Float price=rs.getFloat("price");

String author=rs.getString("author");

int bookTypeId=rs.getInt("bookTypeId");

Clob c=rs.getClob("context");

String context=c.getSubString(1, (int)c.length());

Blob b=rs.getBlob("pic");

/*Because development tools can't display pictures

*So store the data from the database into a hard disk.

*/

//Define an output stream

FileOutputStream out=new FileOutputStream(new File("e:/pic2.jpg"));

out.write(b.getBytes(1, (int)b.length()));

out.close();//Turn it off when it's used up

System.out.println("Book Name:"+bookName);

System.out.println("Book price:"+price);

System.out.println("Book authors:"+author);

System.out.println("Classification of books:"+bookTypeId);

System.out.println("Book Description:"+context);

}

dbUtil.close(pstmt, con);//Close related operations and connections

}

public static void main(String[] args) throws Exception {

/*File context=new File("x:/helloWorld.txt");

File pic=new File("x:/pic.jpg");

Book book=new Book("Netease Cloud Music Collection, 20.5f, Netease Cloud, 5, context, pic;

try {

int result = addBook(book);

System.out.println("Successful information addition.

} catch (Exception e) {

e.printStackTrace();

System.out.println("Failed to add information.

}*/

getBook(7);

}

}

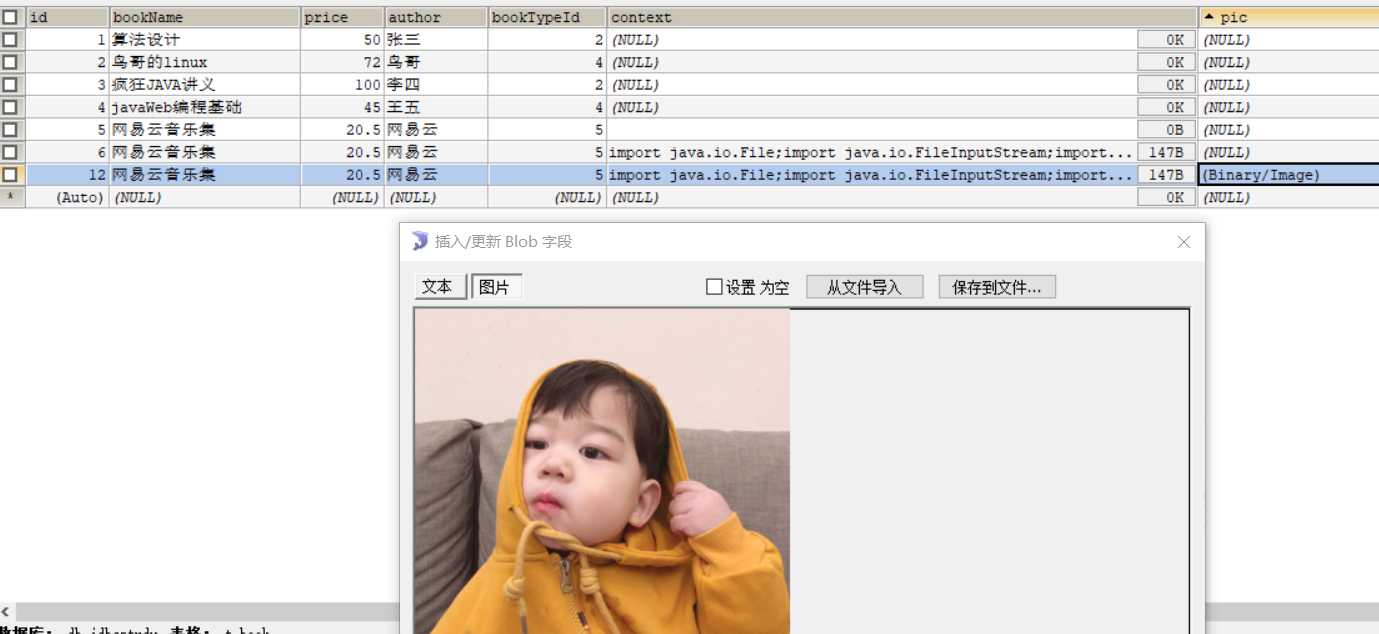

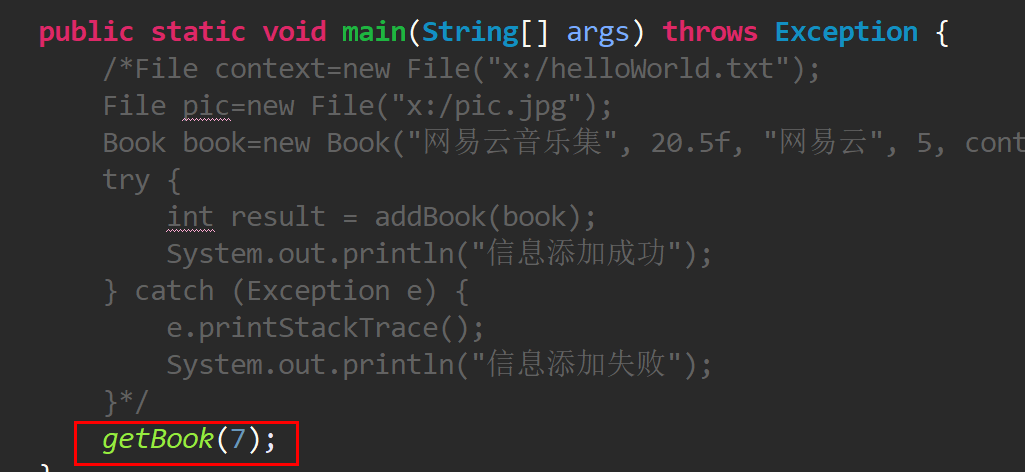

The main changes are as follows:

Added in getBook Use of the annotation point addBook function in main function, and then call the getBook function

Use of the annotation point addBook function in main function, and then call the getBook function id=7 is in the database. Look at the database.

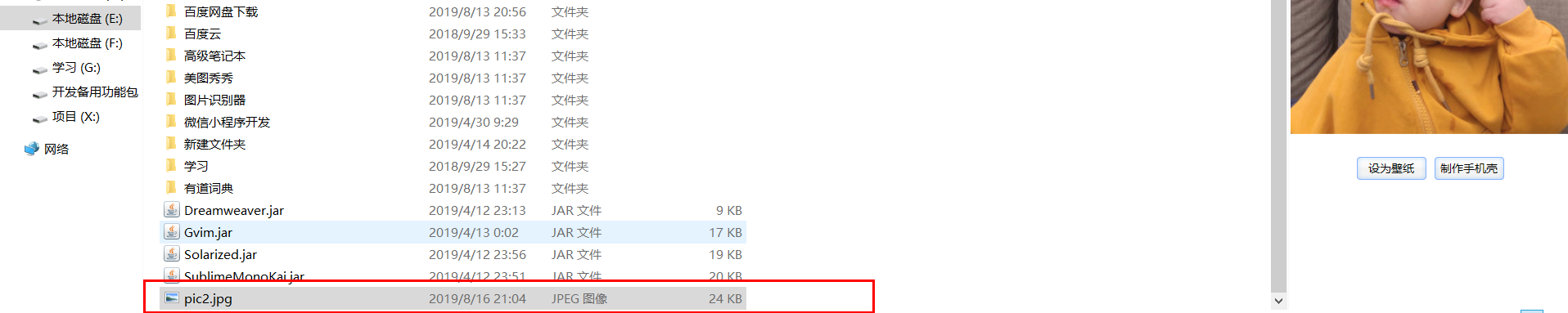

id=7 is in the database. Look at the database. Then the output stream is written on the top, and the data from the database is stored in E disk. The following is the effect after executing the code.

Then the output stream is written on the top, and the data from the database is stored in E disk. The following is the effect after executing the code.

This study is over here, pay attention to the BLOG part of the study, we operate on binary data, learning the composition of the computer know that the data stored in the computer are in the form of binary, octal and hexadecimal only for the purpose of our human data processing, so when we calculate. The shift operation is more important than the normal enlargement and reduction operation. In essence, it does not need to be converted into binary system for calculation. When learning the ClOB part, it does not need to write when we define the data type in the database, whether it is ClOB or BLOG. Length of data.