Preface

When writing crawlers, we often run into anti-crawling measures. One of the most troublesome is that more and more large websites load their data through JavaScript.

On such sites the data cannot be fetched directly, as it can on a simple static page, and often we cannot even tell where it comes from. Does that leave Selenium, driving a real browser, as the only option? Of course not.

In this article, we use Youdao Translate as an example and work out, step by step, how its JavaScript generates the request parameters.

Webpage analysis

Target website: http://fanyi.youdao.com/

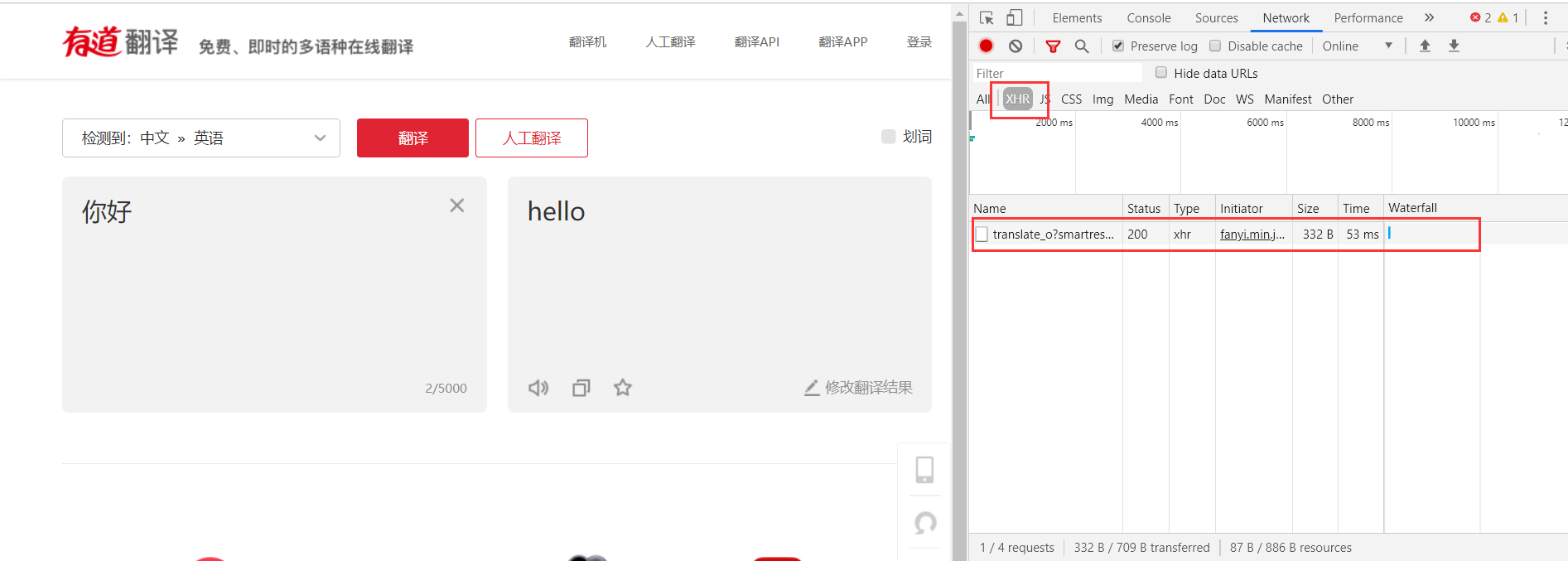

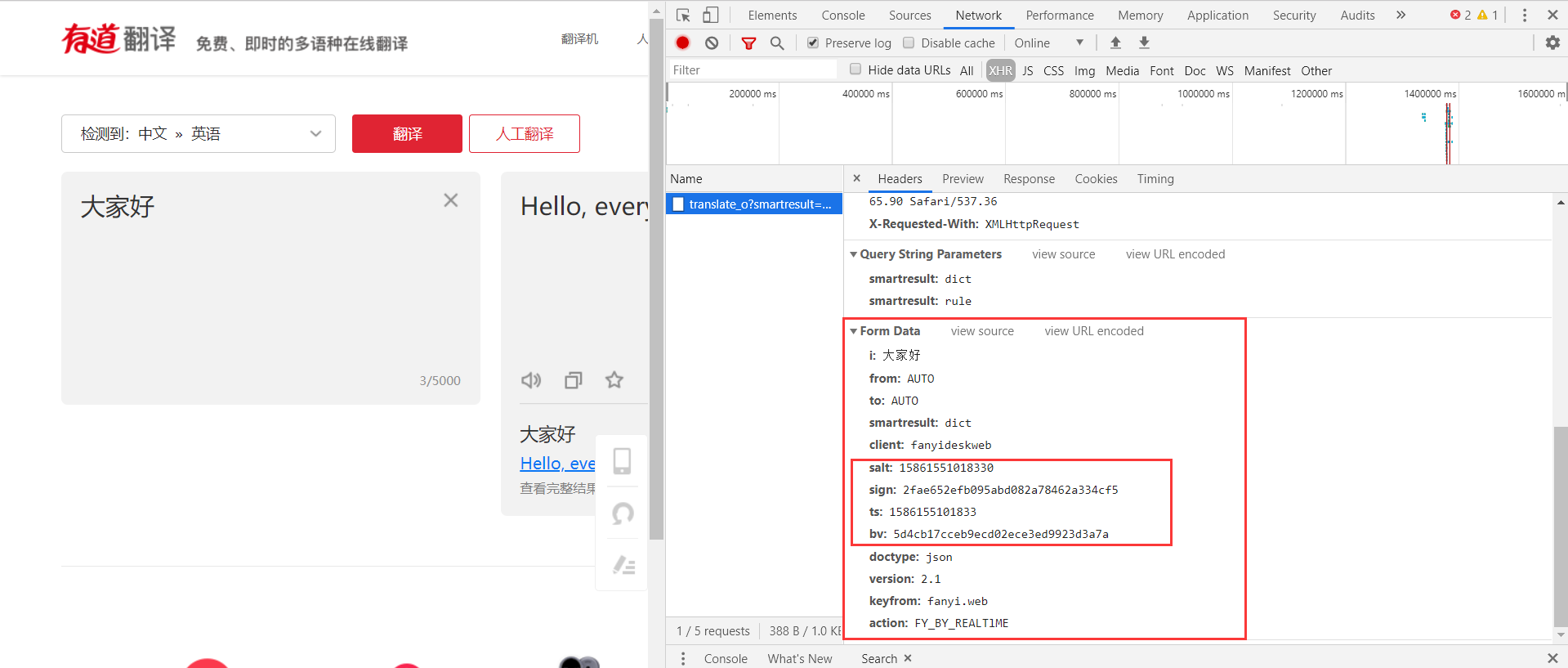

First, open Chrome DevTools, type a sentence into the target page to have it translated, and look at the requests the page makes. (Usually you check the XHR filter under the Network tab, which lists the requests the page sends to the server.)

Among them is a request whose name starts with translate. This is probably the one we are looking for, so click it and look at the response.

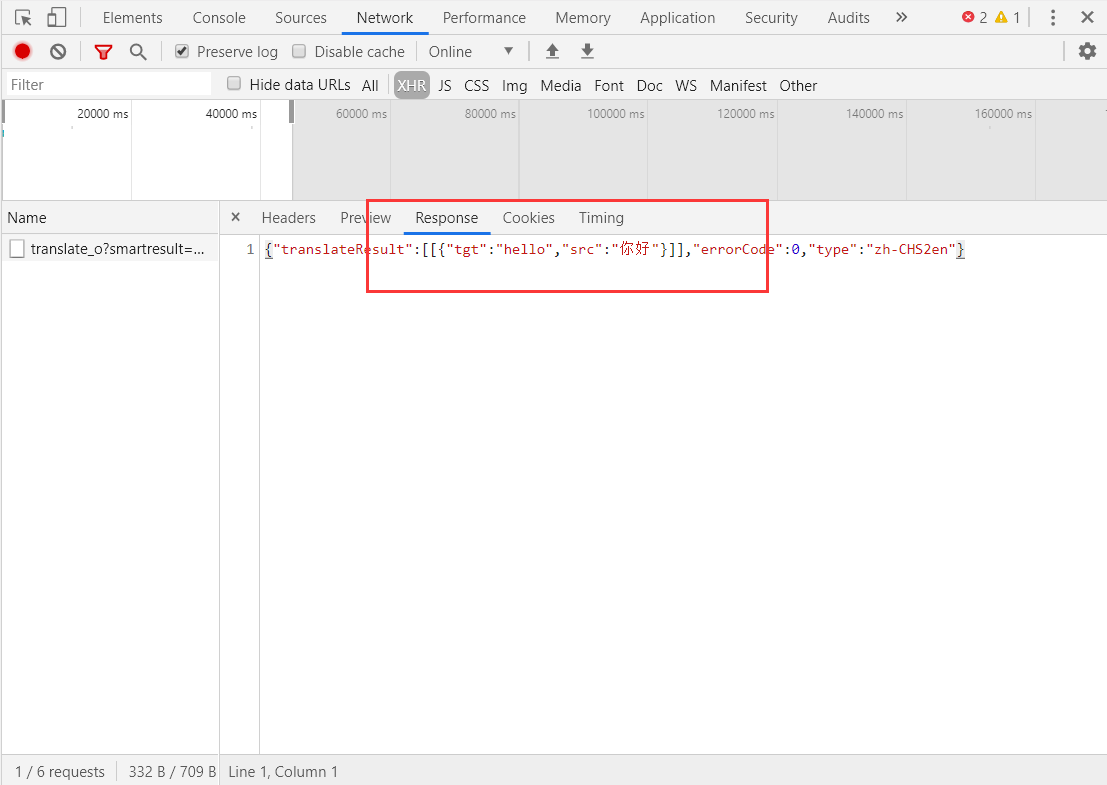

Indeed, the returned JSON is exactly the data we want: it contains both the sentence to translate and its translation. Next, let's analyze the request.

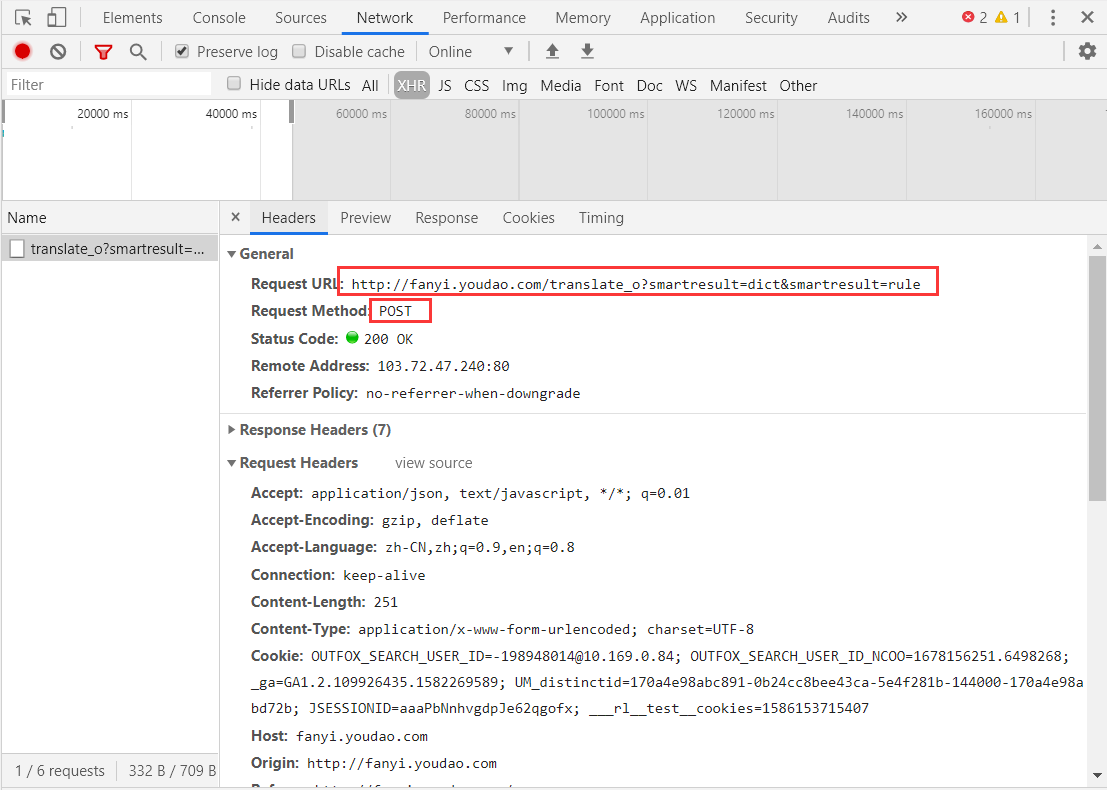

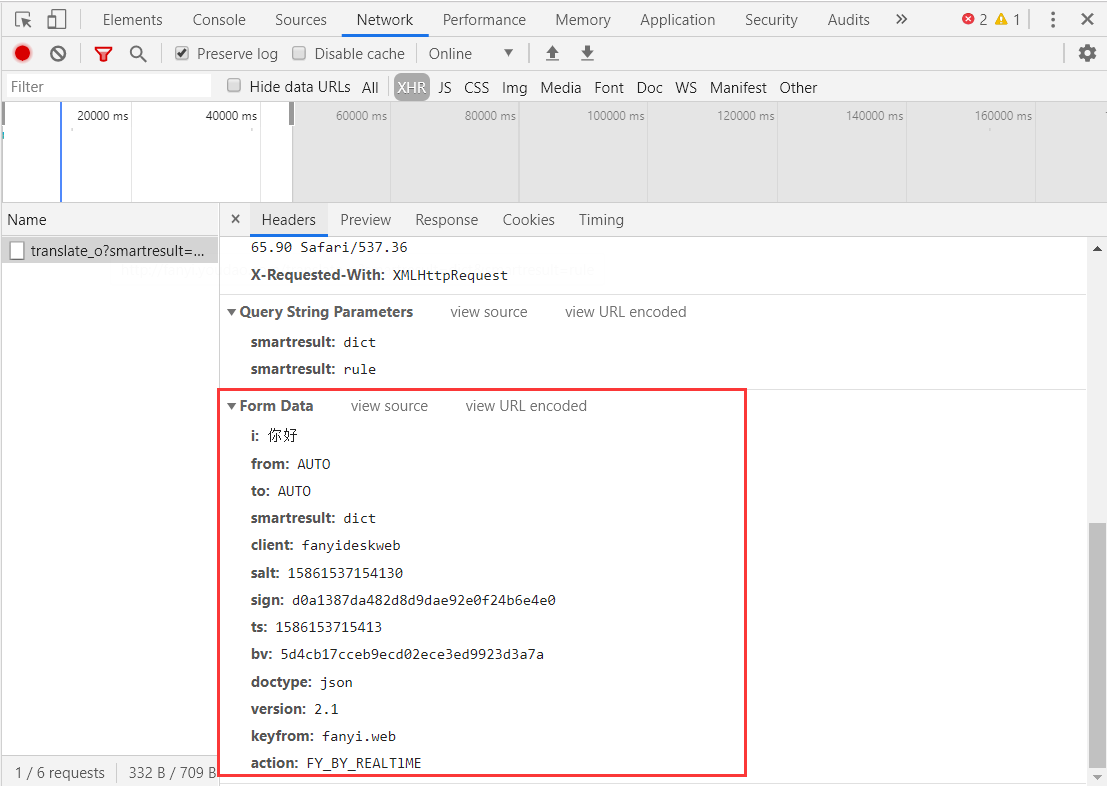

From the request headers we get the request URL and see that the method is POST. What data does it send? Scroll down to the Form Data section to find out.
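Chrome can show the form data either parsed or as the raw url-encoded string it actually sent (the "view source" toggle). Rather than retyping every field, you can paste the raw string into Python and let the standard library split it into a dict. A minimal sketch; the sample body below is a shortened, hypothetical capture, and `form_body_to_dict` is a helper name made up for this example:

```python
from urllib.parse import parse_qsl

# Hypothetical, shortened body copied from DevTools "view source" on Form Data
raw_body = "i=Hello&from=AUTO&to=AUTO&smartresult=dict&client=fanyideskweb&doctype=json"


def form_body_to_dict(body: str) -> dict:
    """Decode an application/x-www-form-urlencoded body into a dict.

    keep_blank_values=True keeps fields that were sent with an empty value.
    """
    return dict(parse_qsl(body, keep_blank_values=True))


form = form_body_to_dict(raw_body)
print(form["client"])  # fanyideskweb
```

`parse_qsl` also undoes the percent-encoding and turns `+` back into spaces, so non-ASCII input text comes back readable.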

Next, we use the requests library to build the same request and see whether it succeeds when constructed directly.

```python
import requests

url = 'http://fanyi.youdao.com/translate_o?smartresult=dict&smartresult=rule'

# Headers copied from the browser request in DevTools. The Cookie belongs to the
# author's own session. Content-Length is omitted: requests computes it from the body.
headers = {
    "Accept": "application/json, text/javascript, */*; q=0.01",
    "Accept-Encoding": "gzip, deflate",
    "Accept-Language": "zh-CN,zh;q=0.9,en;q=0.8",
    "Connection": "keep-alive",
    "Content-Type": "application/x-www-form-urlencoded; charset=UTF-8",
    "Cookie": "OUTFOX_SEARCH_USER_ID=-198948014@10.169.0.84; OUTFOX_SEARCH_USER_ID_NCOO=1678156251.6498268; _ga=GA1.2.109926435.1582269589; UM_distinctid=170a4e98abc891-0b24cc8bee43ca-5e4f281b-144000-170a4e98abd72b; JSESSIONID=aaaPbNnhvgdpJe62qgofx; ___rl__test__cookies=1586153715407",
    "Host": "fanyi.youdao.com",
    "Origin": "http://fanyi.youdao.com",
    "Referer": "http://fanyi.youdao.com/",
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/77.0.3865.90 Safari/537.36",
    "X-Requested-With": "XMLHttpRequest",
}

# Form data copied verbatim from the captured request; salt, sign and ts
# are only valid for this particular input.
data = {
    "i": "Hello",
    "from": "AUTO",
    "to": "AUTO",
    "smartresult": "dict",
    "client": "fanyideskweb",
    "salt": "15861537154130",
    "sign": "d0a1387da482d8d9dae92e0f24b6e4e0",
    "ts": "1586153715413",
    "bv": "5d4cb17cceb9ecd02ece3ed9923d3a7a",
    "doctype": "json",
    "version": "2.1",
    "keyfrom": "fanyi.web",
    "action": "FY_BY_REALTlME",
}

res = requests.post(url=url, headers=headers, data=data)
print(res.text)
```

Take a look at the results

{"translateResult":[[{"tgt":"hello","src":"Hello"}]],"errorCode":0,"type":"zh-CHS2en"}

It works. Is it really that simple? No: as soon as we change the text to translate from Hello to anything else, the server returns a non-zero errorCode instead of a translation.
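The JSON body carries an errorCode field, and the successful response above has errorCode 0. A small helper makes a rejected request fail loudly instead of just printing the body. This sketch assumes only what the responses in this article show, namely that 0 means success; `check_response` is a hypothetical helper name:

```python
import json


def check_response(body: str) -> dict:
    """Parse a Youdao response body and raise if errorCode is not 0."""
    payload = json.loads(body)
    code = payload.get("errorCode")
    if code != 0:
        raise RuntimeError(f"request rejected, errorCode={code}")
    return payload


ok = check_response('{"translateResult":[[{"tgt":"hello","src":"Hello"}]],"errorCode":0,"type":"zh-CHS2en"}')
print(ok["type"])  # zh-CHS2en
```

In the script above you would call `check_response(res.text)` instead of `print(res.text)`.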

So we need to find out what goes wrong. Since this is a POST request, our guess is that some fields in the form data change from request to request. Let's go back to the browser, translate a different sentence, and see what changes.

Comparing it with the previous translation request, we can see that several fields in the POST data are different every time. So how are they generated?
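With more than a dozen fields, comparing two captures by eye is error-prone. Once the form data of two requests is in dicts, a key-by-key comparison lists the fields that change. A minimal sketch: `request_a` reuses values from the capture above (trimmed), while `request_b` is invented for illustration and is not real Youdao data:

```python
def changed_fields(first: dict, second: dict) -> list:
    """Return the sorted keys whose values differ or exist in only one request."""
    keys = set(first) | set(second)
    return sorted(k for k in keys if first.get(k) != second.get(k))


# Trimmed capture from above
request_a = {"i": "Hello", "client": "fanyideskweb", "salt": "15861537154130",
             "sign": "d0a1387da482d8d9dae92e0f24b6e4e0", "ts": "1586153715413"}
# Hypothetical second capture, made up for this example
request_b = {"i": "Good morning", "client": "fanyideskweb", "salt": "15861537999995",
             "sign": "0123456789abcdef0123456789abcdef", "ts": "1586153799999"}

print(changed_fields(request_a, request_b))  # ['i', 'salt', 'sign', 'ts']
```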

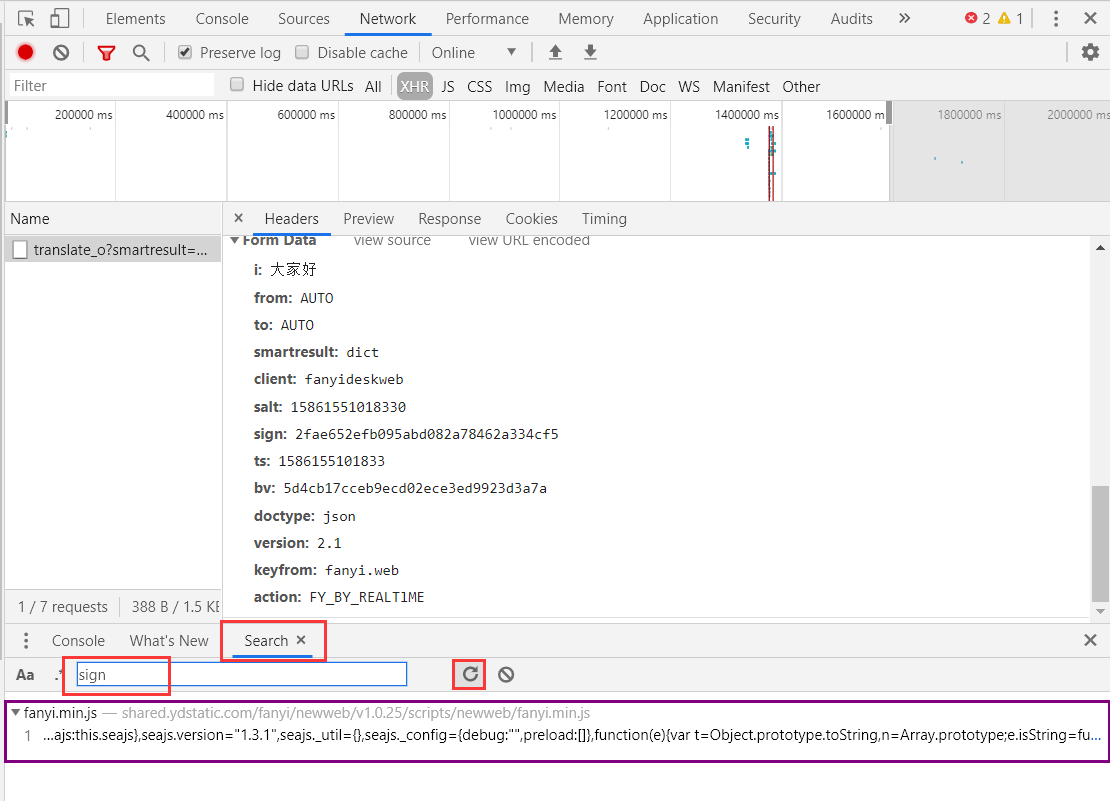

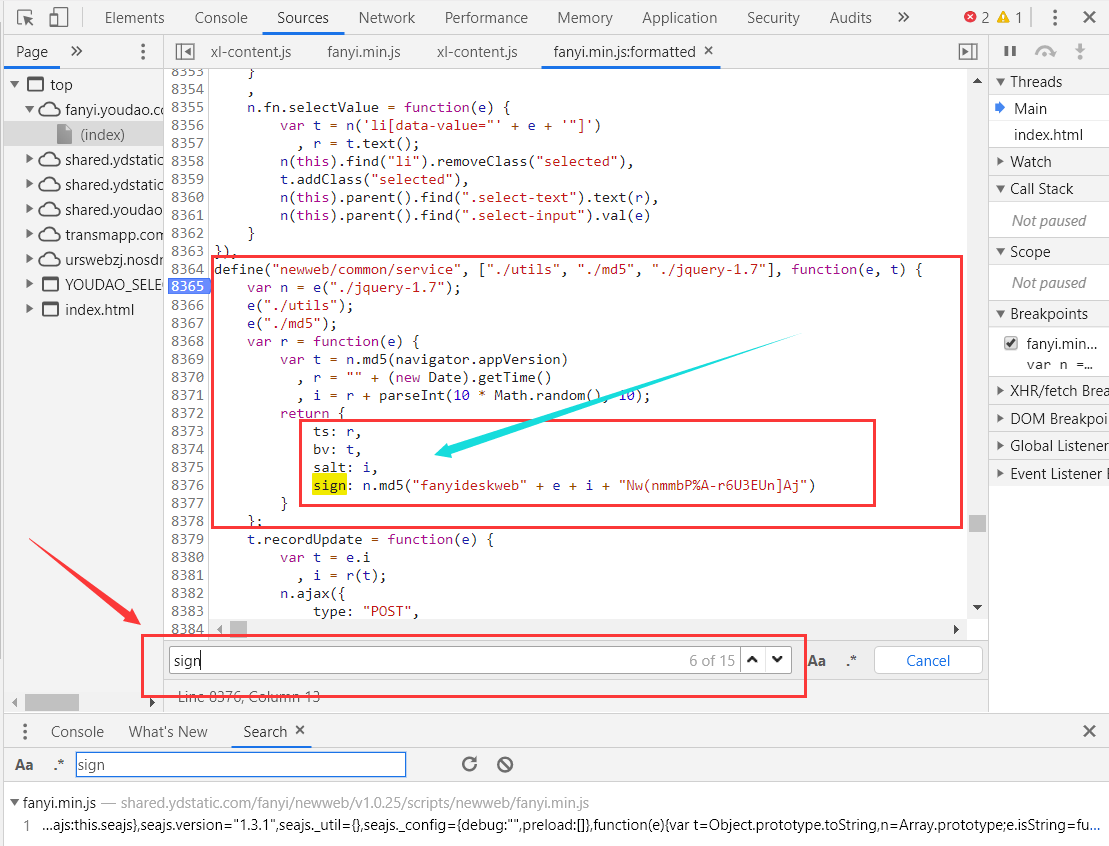

In DevTools, search all loaded sources for the parameter name sign (Ctrl+Shift+F opens the search panel), as shown in the screenshots.

The parameter appears in one JS file, which is probably the one that builds these parameters. Open it (double-click the part circled in the purple box) to view the code.

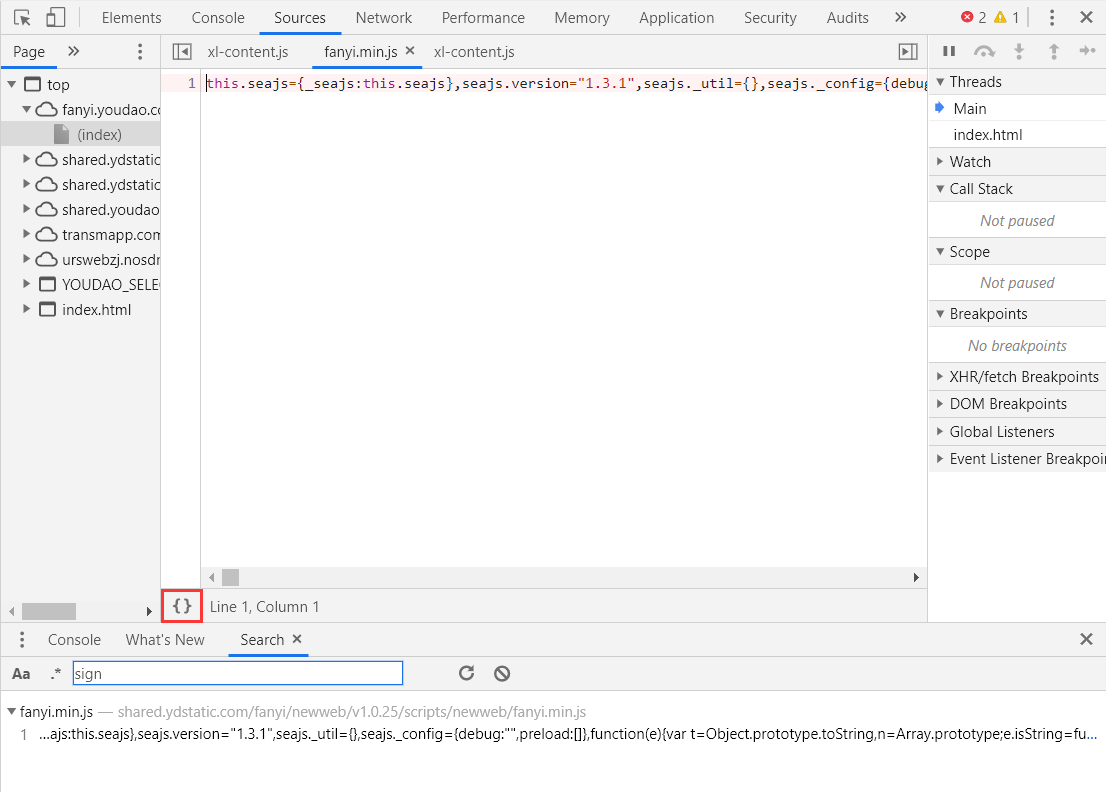

The code is minified onto a single line. That's fine: click the pretty-print button in the red box (the {} button) to format it.

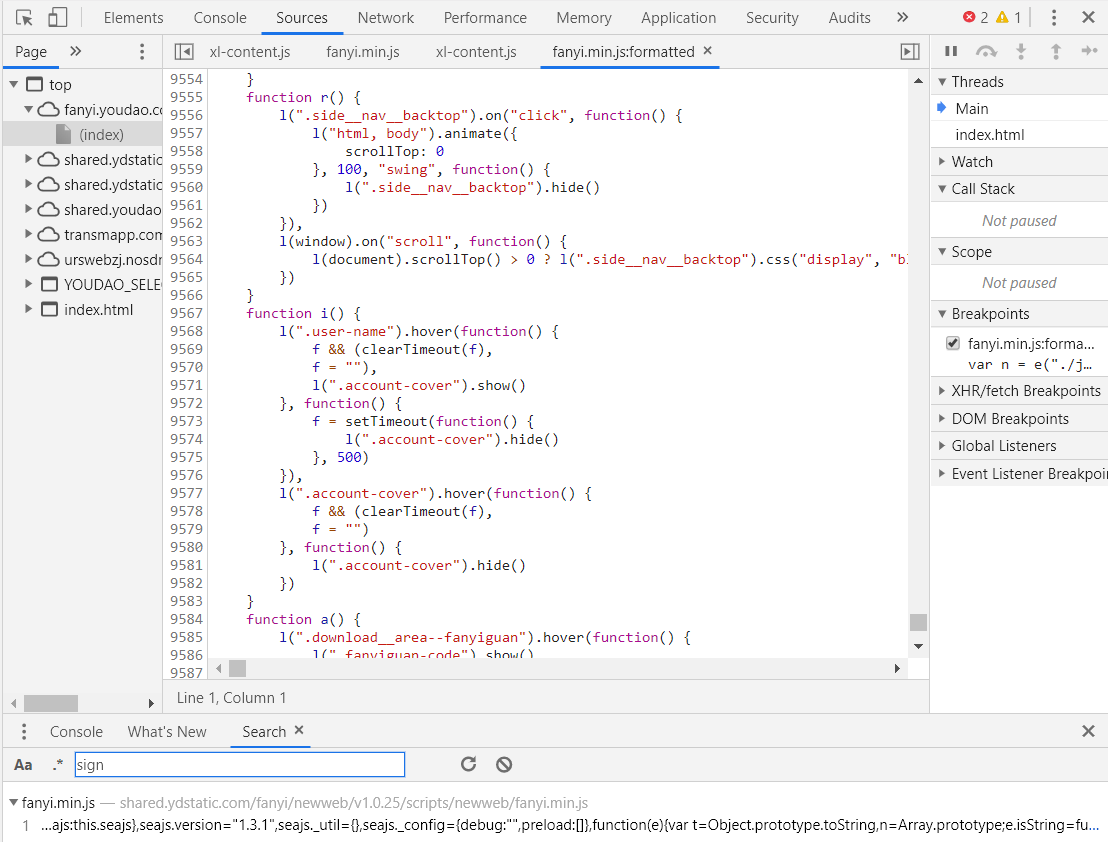

The formatted script runs to nearly 10,000 lines, but we only need the part that builds four parameters. How do we find it in that much code? We keep searching for the parameter names.

Click inside the code pane and press Ctrl+F to open the in-file search box. There are about ten matches; by looking through them we can pick out the function that matters.
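The same search can be run outside the browser if you save the formatted script locally. A rough sketch that lists every line mentioning one of the four names as a whole word, so that `design` does not count as a hit for `sign`; the file name `fanyi.formatted.js` is hypothetical, and the matches still have to be read by hand:

```python
import re
from pathlib import Path

PARAM_NAMES = ("ts", "bv", "salt", "sign")


def find_param_lines(source: str, names=PARAM_NAMES) -> list:
    """Return (line_number, stripped_line) for lines containing any name as a whole word."""
    pattern = re.compile(r"\b(?:" + "|".join(map(re.escape, names)) + r")\b")
    return [(number, line.strip())
            for number, line in enumerate(source.splitlines(), start=1)
            if pattern.search(line)]


if __name__ == "__main__":
    js_path = Path("fanyi.formatted.js")  # hypothetical local copy of the script
    if js_path.exists():
        for number, line in find_param_lines(js_path.read_text(encoding="utf-8")):
            print(number, line)
```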

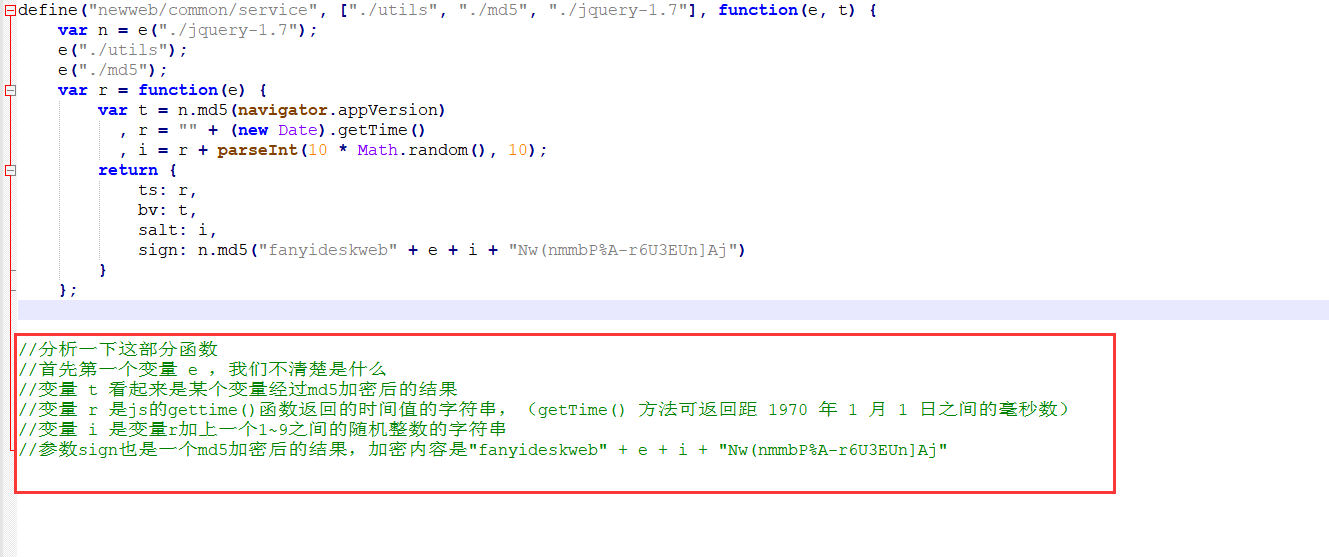

Look at the function in the screenshot: it returns exactly the four parameters ts, bv, salt and sign. Isn't this the constructor we are looking for?

Let's copy this function into Notepad++ to analyze it.

At this point, the only unknowns are what the variable e holds and what navigator.appVersion (used to compute t) is. Once we know those, we have the whole constructor.

In fact, we are already halfway there: we have found how the parameters are built. The next step is not trivial, though: we need to find out what those two values actually contain.

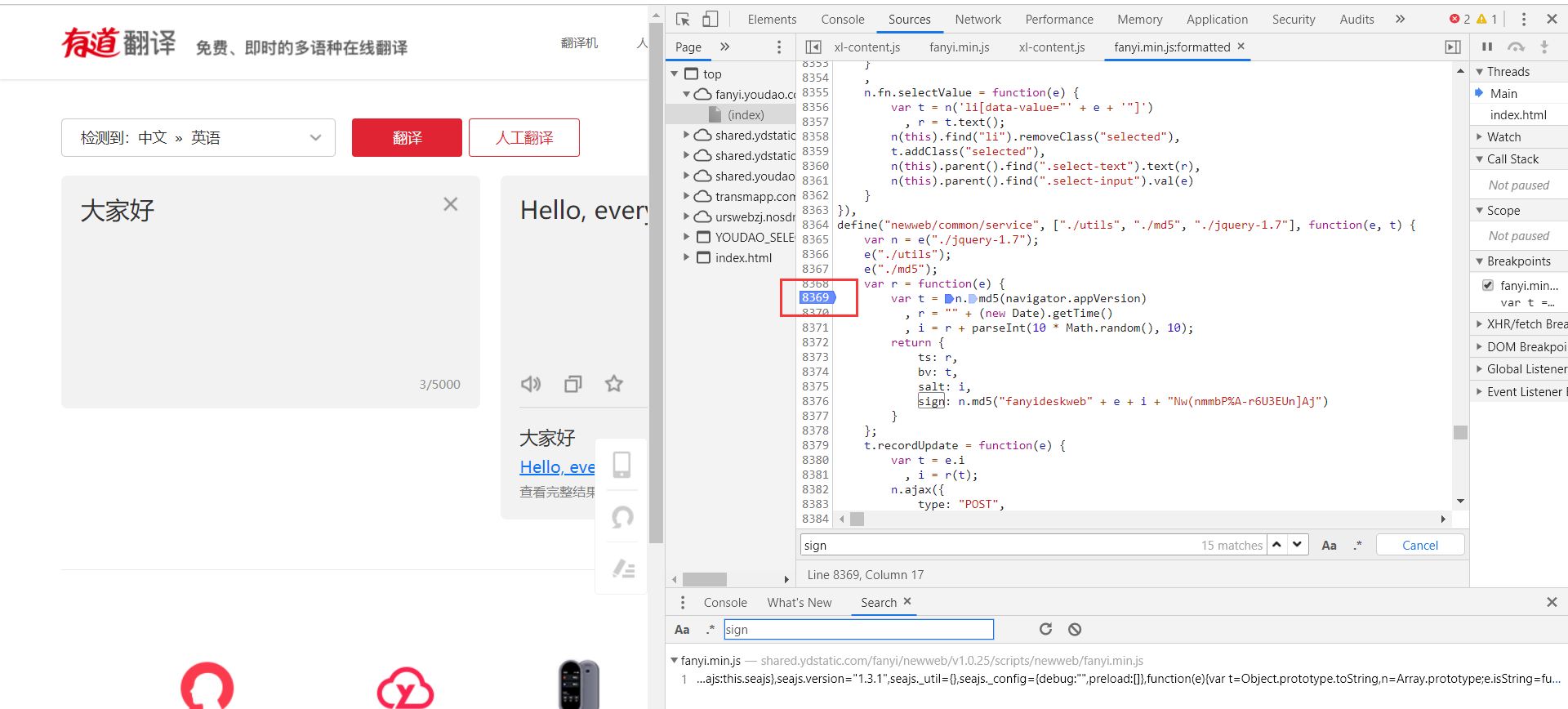

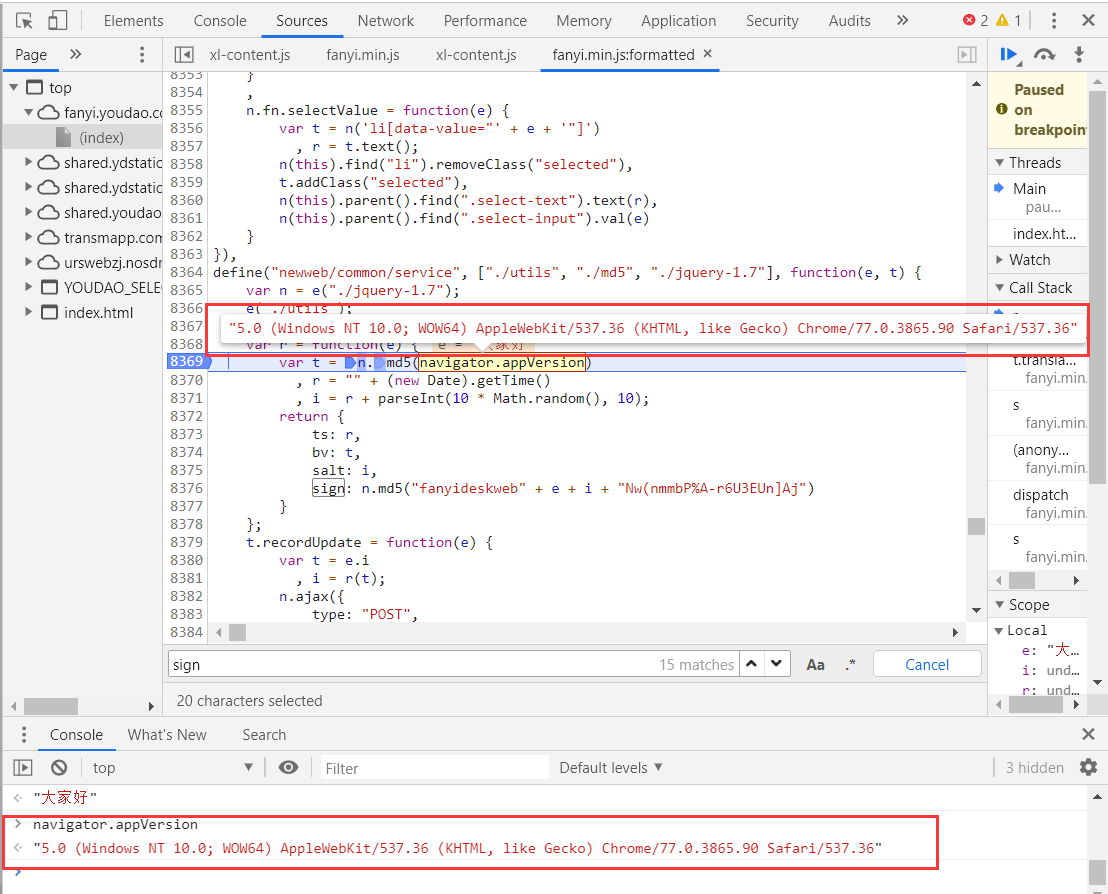

Let's use the debugger in Chrome DevTools to see what these two values are. First, set a breakpoint on the key line (click its line number; a blue marker appears).

Then click the translate button on the page on the left again so that the code runs, and check the variables (you may need to step through with the debugger until execution reaches the relevant line before the values appear).

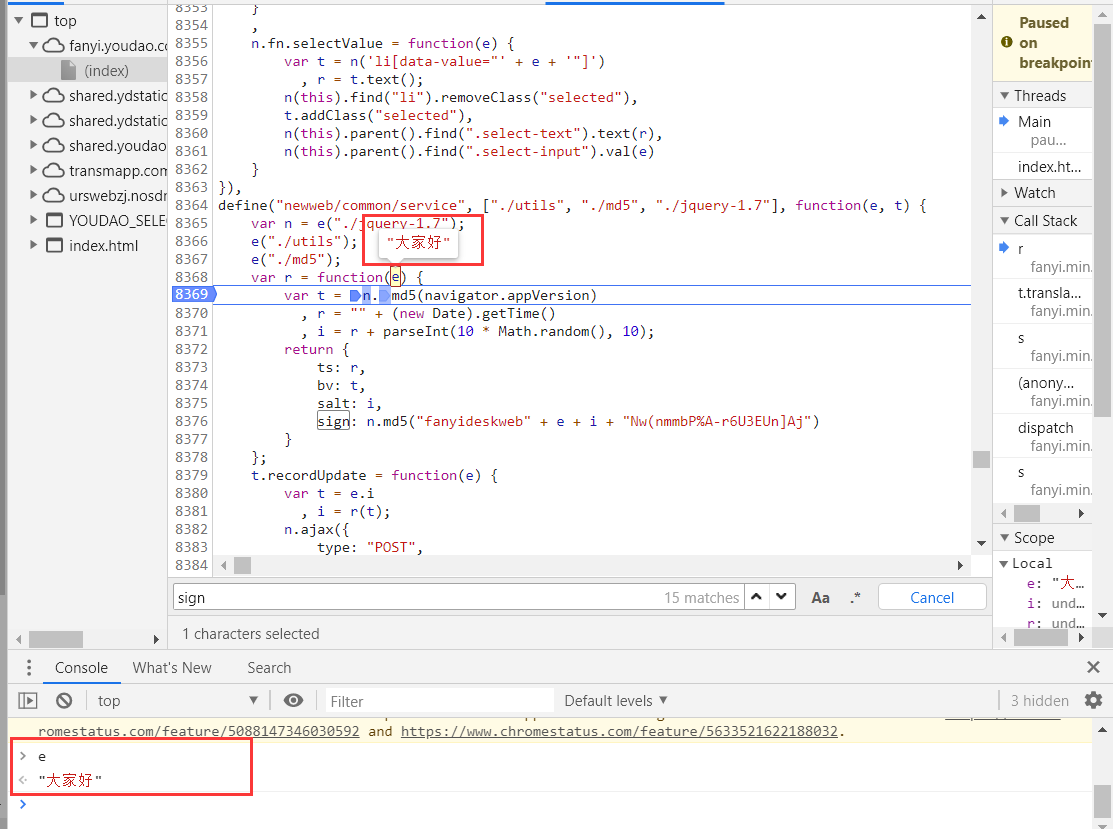

As the screenshot shows, there are two ways to inspect a variable: hover the mouse over it, or select (highlight) the variable name in the debugger.

The variable e turns out to be the text we want to translate. In the same way we can inspect navigator.appVersion, and it turns out to be the browser's user agent string (in Chrome, without the leading "Mozilla/").
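Because our script already sends a User-Agent header, the appVersion value can be derived from it instead of being copied by hand. A small sketch, assuming Chrome's behavior of dropping the "Mozilla/" prefix (other browsers may format appVersion differently) and assuming, as in the function we pulled out, that bv is the MD5 of that value; `app_version_from_ua` is a hypothetical helper:

```python
import hashlib

USER_AGENT = ("Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 "
              "(KHTML, like Gecko) Chrome/77.0.3865.90 Safari/537.36")


def app_version_from_ua(user_agent: str) -> str:
    """Approximate Chrome's navigator.appVersion: the UA without the 'Mozilla/' prefix."""
    prefix = "Mozilla/"
    return user_agent[len(prefix):] if user_agent.startswith(prefix) else user_agent


app_version = app_version_from_ua(USER_AGENT)
bv = hashlib.md5(app_version.encode("utf-8")).hexdigest()  # assumed bv formula
print(app_version)
print(bv)
```

Keeping the header and bv derived from the same string means they stay consistent if you change the User-Agent later.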

Now that everything is clear, it's time to test it: we port the JS function to Python and check whether it produces the parameters we expect.

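```python
import hashlib  # used for MD5 hashing
import random
import time

# Chrome's navigator.appVersion is the User-Agent without the leading "Mozilla/".
USER_AGENT = ("Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 "
              "(KHTML, like Gecko) Chrome/77.0.3865.90 Safari/537.36")
APP_VERSION = USER_AGENT[len("Mozilla/"):]


def md5_b(text):
    """Return the hex MD5 digest of a UTF-8 string."""
    return hashlib.md5(text.encode('utf-8')).hexdigest()


data = {}


def get_data_params(t_str):
    e = t_str                              # the text to translate
    t = md5_b(APP_VERSION)                 # bv: MD5 of navigator.appVersion
    r = str(int(time.time() * 1000))       # ts: current time in milliseconds
    i = r + str(random.randint(0, 9))      # salt: ts plus one random digit
    data['ts'] = r
    data['bv'] = t
    data['salt'] = i
    data['sign'] = md5_b("fanyideskweb" + e + i + "Nw(nmmbP%A-r6U3EUn]Aj")


get_data_params('Hello everyone')
print(data)
```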

This is our Python port of the constructor. Running it prints something like the following (ts, salt and sign change on every run):

{'ts': '1586158204425', 'bv': '756d8fbf195de794dc36490b464fb4d1', 'salt': '15861582044251', 'sign': '2fe4a6b70024b6e52ebe910230c42b7f'}
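Before sending anything, the sign formula can also be checked offline: take the i, salt and sign fields of a request captured in DevTools, recompute the sign, and compare. A minimal sketch of that check; the suffix is the one used in the code above, and it may well have changed on the live site since this article was written. `compute_sign` and `sign_matches` are hypothetical helper names:

```python
import hashlib

# Suffix taken from the site's script at the time of writing; it may have changed.
SECRET_SUFFIX = "Nw(nmmbP%A-r6U3EUn]Aj"


def compute_sign(text: str, salt: str) -> str:
    """md5("fanyideskweb" + text + salt + suffix), the same formula as get_data_params."""
    raw = "fanyideskweb" + text + salt + SECRET_SUFFIX
    return hashlib.md5(raw.encode("utf-8")).hexdigest()


def sign_matches(text: str, salt: str, captured_sign: str) -> bool:
    """True if the formula reproduces the sign seen in a captured browser request."""
    return compute_sign(text, salt) == captured_sign.lower()
```

A False result for a fresh capture means either the formula or the suffix no longer matches what the site uses, which is cheaper to discover this way than through failed requests.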

With these four values in hand, let's add the remaining constant parameters and check whether the request succeeds. Here is the complete code:

```python
import hashlib  # used for MD5 hashing
import random
import time

import requests

url = 'http://fanyi.youdao.com/translate_o?smartresult=dict&smartresult=rule'

USER_AGENT = ("Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 "
              "(KHTML, like Gecko) Chrome/77.0.3865.90 Safari/537.36")

# Headers copied from the browser; Content-Length is left to requests.
headers = {
    "Accept": "application/json, text/javascript, */*; q=0.01",
    "Accept-Encoding": "gzip, deflate",
    "Accept-Language": "zh-CN,zh;q=0.9,en;q=0.8",
    "Connection": "keep-alive",
    "Content-Type": "application/x-www-form-urlencoded; charset=UTF-8",
    "Cookie": "OUTFOX_SEARCH_USER_ID=-198948014@10.169.0.84; OUTFOX_SEARCH_USER_ID_NCOO=1678156251.6498268; _ga=GA1.2.109926435.1582269589; UM_distinctid=170a4e98abc891-0b24cc8bee43ca-5e4f281b-144000-170a4e98abd72b; JSESSIONID=aaaPbNnhvgdpJe62qgofx; ___rl__test__cookies=1586153715407",
    "Host": "fanyi.youdao.com",
    "Origin": "http://fanyi.youdao.com",
    "Referer": "http://fanyi.youdao.com/",
    "User-Agent": USER_AGENT,
    "X-Requested-With": "XMLHttpRequest",
}

# "from" and "to" are the source and target languages; "AUTO" lets the server detect them.
data = {
    "from": "AUTO",
    "to": "AUTO",
    "smartresult": "dict",
    "client": "fanyideskweb",
    "doctype": "json",
    "version": "2.1",
    "keyfrom": "fanyi.web",
    "action": "FY_BY_REALTlME",
}


def md5_b(text):
    """Return the hex MD5 digest of a UTF-8 string."""
    return hashlib.md5(text.encode('utf-8')).hexdigest()


def get_data_params(t_str):
    """Fill in the per-request fields (i, ts, bv, salt, sign) and return the form data."""
    e = t_str
    t = md5_b(USER_AGENT[len("Mozilla/"):])  # bv: MD5 of navigator.appVersion
    r = str(int(time.time() * 1000))         # ts: milliseconds
    i = r + str(random.randint(0, 9))        # salt: ts plus one random digit
    data['i'] = e
    data['ts'] = r
    data['bv'] = t
    data['salt'] = i
    data['sign'] = md5_b("fanyideskweb" + e + i + "Nw(nmmbP%A-r6U3EUn]Aj")
    return data


res = requests.post(url=url, headers=headers, data=get_data_params('Hello, what are you doing?'))
print(res.text)
```

Look at the results:

{"translateResult":[[{"tgt":"Hello. What are you doing?","src":"Hello, what are you doing?"}]],"errorCode":0,"type":"zh-CHS2en"}

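The request succeeds and returns JSON, from which the translation can be extracted with Python's json module. That completes the reconstruction of Youdao Translate's JS-generated request parameters. Other websites can be handled the same way: capture the request, find the script that builds the changing parameters, inspect it with breakpoints, and port the logic to Python.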
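A sketch of that extraction step, based on the response shown above, where translateResult is a list of paragraphs and each paragraph is a list of {"src", "tgt"} segments. `extract_translations` is a hypothetical helper, and the errorCode check assumes, as in the responses above, that 0 means success:

```python
import json


def extract_translations(body: str) -> list:
    """Return every 'tgt' string in translateResult, in order."""
    payload = json.loads(body)
    if payload.get("errorCode") != 0:
        raise RuntimeError(f"errorCode={payload.get('errorCode')}")
    return [segment["tgt"]
            for paragraph in payload.get("translateResult", [])
            for segment in paragraph]


body = ('{"translateResult":[[{"tgt":"Hello. What are you doing?",'
        '"src":"Hello, what are you doing?"}]],"errorCode":0,"type":"zh-CHS2en"}')
print(extract_translations(body))  # ['Hello. What are you doing?']
```

In the complete script, `extract_translations(res.text)` replaces the final `print(res.text)`. Returning a list rather than one joined string leaves it to the caller to decide how multi-segment results should be joined.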