JUC Fundamentals

1. Monitor (also called a lock)

A monitor ensures that only one process (thread) is active inside it at any time. In other words, the operations defined in the monitor are executed by only one process at a time, and this mutual exclusion is provided by the compiler and runtime rather than by the programmer. However, mutual exclusion alone does not guarantee that processes execute in the intended order.

In the JVM, synchronization is implemented by entering and exiting (locking and unlocking) monitors. Every Java object is associated with a monitor, whose lifetime is tied to that of the object.

2. A .java file can contain at most one public top-level class, and its name must match the file name. Any other classes in the file omit the public modifier.

3. Steps for multithreaded programming

1) Create a resource class with fields and operation methods.
2) In the resource class's methods, check the condition (judge), do the work, then notify.
3) Create multiple threads that call the resource class's operation methods.
4) Prevent spurious wakeups (key point).

Preventing spurious wakeups: put the condition check in a while loop rather than an if statement. A thread returning from wait() (or Condition.await()) may have been woken without the condition actually holding. This can happen spuriously, or because another thread changed the state first. Execution resumes right after the wait() call, so an if check is never re-evaluated and the thread continues on stale assumptions. A while loop re-checks the condition after every wakeup. In addition, when using an explicit Lock, call unlock() in a finally block so the lock is always released.
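The four steps above can be sketched with a `Lock`/`Condition` pair. This is a minimal illustration under stated assumptions, not the author's code: the classes `Share` and `ShareDemo` and the thread names are hypothetical.

Two threads increment and two threads decrement a shared number that must alternate between 0 and 1. Because each check sits in a `while` loop, a thread that wakes up when it is not its turn simply waits again. `unlock()` is placed in `finally`. The same structure works with `synchronized`, `wait()` and `notifyAll()`.

```java
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;

// Step 1: resource class with a field and operation methods (hypothetical name)
class Share {
    private int number = 0;
    private final Lock lock = new ReentrantLock();
    private final Condition condition = lock.newCondition();

    // Step 2: judge, work, notify
    void incr() throws InterruptedException {
        lock.lock();
        try {
            while (number != 0) {      // while, not if: re-checked after every wakeup
                condition.await();
            }
            number++;                   // work
            condition.signalAll();      // notify
        } finally {
            lock.unlock();              // released even if await() is interrupted
        }
    }

    void decr() throws InterruptedException {
        lock.lock();
        try {
            while (number != 1) {
                condition.await();
            }
            number--;
            condition.signalAll();
        } finally {
            lock.unlock();
        }
    }

    int get() {
        lock.lock();
        try {
            return number;
        } finally {
            lock.unlock();
        }
    }
}

class ShareDemo {
    interface Step { void run() throws InterruptedException; }

    // Step 3: several threads call the resource class; returns the final value
    static int run(int rounds) throws InterruptedException {
        Share share = new Share();
        Step[] steps = {share::incr, share::decr, share::incr, share::decr};
        String[] names = {"AA", "BB", "CC", "DD"};
        Thread[] threads = new Thread[steps.length];
        for (int t = 0; t < steps.length; t++) {
            Step step = steps[t];
            threads[t] = new Thread(() -> {
                try {
                    for (int i = 0; i < rounds; i++) {
                        step.run();
                    }
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            }, names[t]);
            threads[t].start();
        }
        for (Thread th : threads) {
            th.join();
        }
        return share.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(run(10)); // prints 0: every increment is matched by a decrement
    }
}
```

With `if` instead of `while`, the value could drift to 2 or -1 when several threads of the same kind are woken together.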

4. Solutions to the thread-unsafety of ArrayList, HashSet and HashMap:

```java
// ArrayList -> Vector (every method is synchronized)
List<String> list1 = new Vector<>();
// ArrayList -> Collections.synchronizedList (a synchronized wrapper)
List<String> list2 = Collections.synchronizedList(new ArrayList<>());
// ArrayList -> CopyOnWriteArrayList (writes copy the array; reads need no lock)
List<String> list3 = new CopyOnWriteArrayList<>();
// HashSet -> CopyOnWriteArraySet
Set<String> set = new CopyOnWriteArraySet<>();
// HashMap -> ConcurrentHashMap
Map<String, String> map = new ConcurrentHashMap<>();
```
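The following sketch checks the replacements above under concurrent writes. The class `SafeCollectionsDemo` and the thread and item counts are hypothetical. Several threads add distinct keys at the same time, and with the thread-safe classes no additions are lost. If you swap in a plain `ArrayList`, `HashSet` or `HashMap`, elements may be lost or an exception may be thrown, although neither outcome is guaranteed on any single run.

```java
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.CopyOnWriteArraySet;

class SafeCollectionsDemo {
    static final int THREADS = 4;
    static final int PER_THREAD = 250;

    // Every thread adds PER_THREAD distinct keys to each collection concurrently
    static int[] run() throws InterruptedException {
        List<String> list = new CopyOnWriteArrayList<>();
        Set<String> set = new CopyOnWriteArraySet<>();
        Map<String, Integer> map = new ConcurrentHashMap<>();
        Thread[] threads = new Thread[THREADS];
        for (int t = 0; t < THREADS; t++) {
            final int id = t;
            threads[t] = new Thread(() -> {
                for (int i = 0; i < PER_THREAD; i++) {
                    String key = id + "-" + i; // unique across all threads
                    list.add(key);
                    set.add(key);
                    map.put(key, i);
                }
            });
            threads[t].start();
        }
        for (Thread th : threads) {
            th.join();
        }
        return new int[] {list.size(), set.size(), map.size()};
    }

    public static void main(String[] args) throws InterruptedException {
        int[] sizes = run();
        System.out.println(sizes[0] + " " + sizes[1] + " " + sizes[2]); // 1000 1000 1000
    }
}
```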

Whether synchronized methods block each other depends on whether they lock the same object. If instance methods are called on the same object, whichever thread acquires the lock first runs first. Calls on different objects do not block each other. Static synchronized methods are different: they lock the Class object, so all calls share one lock no matter how many instances are created, and whichever thread gets that lock first runs first.

synchronized is the basis of synchronization: every object in Java can be used as a lock. It takes three forms. 1) For a normal (instance) synchronized method, the lock is the current instance (this). 2) For a static synchronized method, the lock is the Class object of the current class. 3) For a synchronized block, the lock is the object given in the parentheses.
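The three lock targets can be checked directly with `Thread.holdsLock(Object)`, which reports whether the current thread holds a given object's monitor. The class `SyncForms` is a hypothetical illustration.

```java
class SyncForms {
    // Instance synchronized method: the lock is 'this'
    synchronized boolean instanceMethodLock() {
        return Thread.holdsLock(this);
    }

    // Static synchronized method: the lock is the Class object
    static synchronized boolean staticMethodLock() {
        return Thread.holdsLock(SyncForms.class);
    }

    // Synchronized block: the lock is the object in the parentheses
    boolean blockLock(Object monitor) {
        synchronized (monitor) {
            return Thread.holdsLock(monitor);
        }
    }

    // Holding this instance's lock does not mean holding the Class lock...
    synchronized boolean instanceHoldsClassLock() {
        return Thread.holdsLock(SyncForms.class);
    }

    // ...nor the lock of a different instance
    synchronized boolean instanceHoldsOtherLock(SyncForms other) {
        return Thread.holdsLock(other);
    }

    public static void main(String[] args) {
        SyncForms a = new SyncForms();
        SyncForms b = new SyncForms();
        System.out.println(a.instanceMethodLock());      // true
        System.out.println(staticMethodLock());          // true
        System.out.println(a.blockLock(new Object()));   // true
        System.out.println(a.instanceHoldsClassLock());  // false
        System.out.println(a.instanceHoldsOtherLock(b)); // false
    }
}
```

The last two results explain why a static and an instance synchronized method, or the same method on two different objects, do not block each other.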

1. Lock

1⃣️

Unfair lock: some threads may starve, but throughput is higher. Fair lock: every thread gets its turn ("sunshine for everyone"), but throughput is relatively lower.

2⃣️

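Reentrant lock (also called a recursive lock): a thread that already holds the lock can acquire it again. Both synchronized (implicit locking) and ReentrantLock (explicit locking through the Lock interface) are reentrant.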
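Here is a small sketch of reentrancy and fairness with `ReentrantLock`. The `ReentrantDemo` class is hypothetical. `new ReentrantLock(true)` requests a fair lock, while the default constructor creates a non-fair one. `getHoldCount()` shows the same thread acquiring the lock repeatedly during recursion. The two `synchronized` methods show that intrinsic locks are reentrant too.

```java
import java.util.concurrent.locks.ReentrantLock;

class ReentrantDemo {
    private final ReentrantLock lock;

    ReentrantDemo(boolean fair) {
        lock = new ReentrantLock(fair); // true = fair, false (the default) = non-fair
    }

    // Re-acquires the same lock 'depth' times and returns the hold count at the deepest level
    int recurse(int depth) {
        lock.lock();
        try {
            if (depth <= 1) {
                return lock.getHoldCount();
            }
            return recurse(depth - 1);
        } finally {
            lock.unlock(); // each lock() is matched by exactly one unlock()
        }
    }

    boolean isFair() { return lock.isFair(); }

    boolean isHeld() { return lock.isHeldByCurrentThread(); }

    // synchronized is reentrant: outer() already holds 'this' when it calls inner()
    synchronized int outer() { return inner() + 1; }

    synchronized int inner() { return 1; }

    public static void main(String[] args) {
        ReentrantDemo fair = new ReentrantDemo(true);
        System.out.println(fair.isFair() + " " + fair.recurse(3) + " " + fair.isHeld()); // true 3 false
        System.out.println(new ReentrantDemo(false).isFair());                        // false
        System.out.println(fair.outer());                                             // 2
    }
}
```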

3⃣️

Deadlock (be able to write deadlock code by hand). Causes: 1. insufficient system resources; 2. an inappropriate order in which processes run; 3. improper allocation of resources. To diagnose from a terminal, run jps -l to find the process ID, then run jstack <process ID> to print the thread stack traces.
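Below is a handwritten deadlock, sketched so that it terminates. Two threads take the same two monitors in opposite order. A `CountDownLatch` guarantees that each thread holds its first lock before it reaches for the second. Instead of attaching `jstack`, the sketch asks the JVM itself through `ThreadMXBean.findMonitorDeadlockedThreads()`. The class and thread names are hypothetical. While the program runs, `jps -l` followed by `jstack <pid>` should also report the deadlock.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadMXBean;
import java.util.concurrent.CountDownLatch;

class DeadlockDemo {
    // Starts two threads that lock a and b in opposite order, then asks the JVM whether they deadlocked
    static boolean run() throws InterruptedException {
        Object a = new Object();
        Object b = new Object();
        CountDownLatch bothHoldFirst = new CountDownLatch(2);
        Thread t1 = new Thread(() -> lockBoth(a, b, bothHoldFirst), "T1");
        Thread t2 = new Thread(() -> lockBoth(b, a, bothHoldFirst), "T2");
        t1.setDaemon(true); // daemon threads: the stuck pair will not keep the JVM alive
        t2.setDaemon(true);
        t1.start();
        t2.start();

        ThreadMXBean mx = ManagementFactory.getThreadMXBean();
        for (int i = 0; i < 50; i++) { // poll for up to about 5 seconds
            long[] ids = mx.findMonitorDeadlockedThreads(); // null when no deadlock is found
            if (ids != null) {
                return true;
            }
            Thread.sleep(100);
        }
        return false;
    }

    private static void lockBoth(Object first, Object second, CountDownLatch bothHoldFirst) {
        synchronized (first) {
            bothHoldFirst.countDown();
            try {
                bothHoldFirst.await(); // wait until the other thread holds its first lock
            } catch (InterruptedException e) {
                return;
            }
            synchronized (second) { // never acquired: the other thread holds it and waits for us
                System.out.println(Thread.currentThread().getName() + " got both locks");
            }
        }
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("deadlock detected: " + run());
    }
}
```

Acquiring locks in one global order (always `a` before `b`) removes the cycle and therefore the deadlock.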

2. Callable

Four ways to create threads

1. Extend the Thread class

2. Implement the Runnable interface

3. Implement the Callable interface

4. Use a thread pool
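This sketch shows all four ways together. The class `FourWaysDemo` and the values it computes are hypothetical. The four ways are a `Thread` subclass, a `Runnable` lambda, a `Callable` wrapped in a `FutureTask`, and a task submitted to a thread pool.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.FutureTask;

class FourWaysDemo {
    // Way 1: subclass Thread and override run()
    static class MyThread extends Thread {
        private final int[] out;

        MyThread(int[] out) { this.out = out; }

        @Override
        public void run() { out[0] = 1; }
    }

    static int[] run() throws Exception {
        int[] results = new int[4];

        Thread t1 = new MyThread(results);                    // 1. Thread subclass
        Thread t2 = new Thread(() -> results[1] = 2);         // 2. Runnable (as a lambda)
        FutureTask<Integer> task = new FutureTask<>(() -> 3); // 3. Callable wrapped in a FutureTask
        Thread t3 = new Thread(task);
        t1.start();
        t2.start();
        t3.start();
        t1.join();
        t2.join();
        results[2] = task.get();                              // blocks until call() has returned

        ExecutorService pool = Executors.newFixedThreadPool(2); // 4. thread pool
        try {
            results[3] = pool.submit(() -> 4).get();
        } finally {
            pool.shutdown();
        }
        return results;
    }

    public static void main(String[] args) throws Exception {
        System.out.println(java.util.Arrays.toString(run())); // [1, 2, 3, 4]
    }
}
```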

```java
/* Differences between the Runnable and Callable interfaces:
 * 1. Runnable.run() has no return value; Callable.call() returns one.
 * 2. Runnable.run() cannot throw checked exceptions; Callable.call() is declared "throws Exception".
 * 3. The methods have different names: run() versus call().
 * 4. A Callable cannot be passed to new Thread(...) directly. Wrap it in a FutureTask
 *    (an implementation of RunnableFuture, i.e. both Runnable and Future) and pass that instead.
 */
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.FutureTask;

// Compare the two interfaces

// Implement the Runnable interface
class MyThread1 implements Runnable {
    @Override
    public void run() {
    }
}

// Implement the Callable interface
class MyThread2 implements Callable<Integer> {
    @Override
    public Integer call() throws Exception {
        System.out.println(Thread.currentThread().getName() + " come in callable");
        return 200;
    }
}

public class Demo1 {
    public static void main(String[] args) throws ExecutionException, InterruptedException {
        // Create a thread from a Runnable
        new Thread(new MyThread1(), "AA").start();

        // Does not compile: Thread has no constructor that accepts a Callable
        // new Thread(new MyThread2(), "BB").start();

        // Wrap the Callable in a FutureTask
        FutureTask<Integer> futureTask1 = new FutureTask<>(new MyThread2());

        // Or supply the Callable as a lambda expression
        FutureTask<Integer> futureTask2 = new FutureTask<>(() -> {
            System.out.println(Thread.currentThread().getName() + " come in callable");
            return 1024;
        });

        // Create the threads
        new Thread(futureTask2, "lucy").start();
        new Thread(futureTask1, "mary").start();

        // while (!futureTask2.isDone()) {
        //     System.out.println("wait.....");
        // }

        // FutureTask.get() blocks until the result is available
        System.out.println(futureTask2.get());
        System.out.println(futureTask1.get());
        System.out.println(Thread.currentThread().getName() + " come over");

        // How FutureTask works: think of it like asynchronous I/O or MapReduce
        /*
         * 1. The teacher gets thirsty during class. Going out to buy water would interrupt the
         *    lecture, so the lecture thread continues while a separate thread (the class monitor)
         *    buys the water, and the teacher picks it up whenever it is needed.
         *
         * 2. Four students each compute a sum: student 1 computes 1+2+...+5, student 2 computes
         *    10+11+12+...+50, student 3 computes 60+61+62, and student 4 computes 100+200.
         *    Student 2 has by far the most work, so a FutureTask computes it on its own thread.
         *    The results of students 1, 3 and 4 are added first, then we wait for student 2
         *    and combine everything.
         *
         * 3. In an exam: answer the questions you can do first, then go back to the hard ones.
         *
         * 4. The result is computed only once: a FutureTask runs its Callable a single time,
         *    and later get() calls return the stored result.
         */
    }
}
```

3. Powerful JUC helper classes

1. CountDownLatch (a countdown counter) has two key methods: countDown() and await(). countDown() decrements the count by 1. await() blocks until the count reaches 0, and only then does the code after await() run. It is like locking the classroom door: the monitor can lock it only after everyone has left.

```java
import java.util.concurrent.CountDownLatch;

// Demonstrate CountDownLatch
public class CountDownLatchDemo {
    // After six students leave the classroom one by one, the monitor locks the door
    public static void main(String[] args) throws InterruptedException {
        // Create a CountDownLatch with an initial count of 6
        CountDownLatch countDownLatch = new CountDownLatch(6);

        // Six students leave the classroom one by one
        for (int i = 1; i <= 6; i++) {
            // Without the latch, main could lock the door before every student has left
            new Thread(() -> {
                System.out.println("Student No. " + Thread.currentThread().getName() + " left the classroom");
                // Count - 1
                countDownLatch.countDown();
            }, String.valueOf(i)).start();
        }

        // Wait until the count reaches 0
        countDownLatch.await();
        System.out.println(Thread.currentThread().getName() + " locks the door");
    }
}
```

2. CyclicBarrier works like collecting seven Dragon Balls to summon the dragon. The barrier action runs only after all parties have called await(). The barrier can then be reused, which is why it is called "cyclic". It is somewhat like barrier alignment in Flink's distributed snapshots.

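```java
import java.util.concurrent.CyclicBarrier;

public class CyclicBarrierDemo {
    // Number of parties (dragon balls) that must reach the barrier
    private static final int NUMBER = 7;

    public static void main(String[] args) {
        // The barrier action runs once all NUMBER threads have called await()
        CyclicBarrier cyclicBarrier = new CyclicBarrier(NUMBER, () -> {
            System.out.println("*****Collected 7 dragon balls, summoning the dragon");
        });

        // The process of collecting the seven dragon balls
        for (int i = 1; i <= 7; i++) {
            new Thread(() -> {
                try {
                    System.out.println("Dragon ball No. " + Thread.currentThread().getName() + " collected");
                    // Wait for the other threads to arrive
                    cyclicBarrier.await();
                } catch (Exception e) {
                    e.printStackTrace();
                }
            }, String.valueOf(i)).start(); // the thread name is the loop index
        }
    }
}
```

3. Semaphore: threads acquire and release permits. For example, with a fixed number of parking spaces, another car can enter only after a parked car leaves.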
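Here is the parking-lot analogy as a sketch, assuming 3 spaces and 6 cars. These numbers and the `ParkingLotDemo` name are illustrative and do not come from the original. Each car must `acquire()` a permit before parking and `release()` it in `finally` when it leaves, so no more than 3 cars are ever parked at once.

```java
import java.util.concurrent.Semaphore;
import java.util.concurrent.atomic.AtomicInteger;

class ParkingLotDemo {
    // 'cars' threads share 'spaces' permits; returns the most cars ever parked at the same time
    static int run(int spaces, int cars) throws InterruptedException {
        Semaphore semaphore = new Semaphore(spaces); // one permit per parking space
        AtomicInteger parked = new AtomicInteger();
        AtomicInteger maxParked = new AtomicInteger();
        Thread[] threads = new Thread[cars];
        for (int i = 0; i < cars; i++) {
            threads[i] = new Thread(() -> {
                try {
                    semaphore.acquire();             // take a space, waiting if none is free
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    return;
                }
                try {
                    int now = parked.incrementAndGet();
                    maxParked.accumulateAndGet(now, Math::max);
                    System.out.println(Thread.currentThread().getName() + " parked");
                    Thread.sleep(20);                // stay parked for a moment
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                } finally {
                    parked.decrementAndGet();
                    System.out.println(Thread.currentThread().getName() + " left");
                    semaphore.release();             // free the space for the next car
                }
            }, "Car " + (i + 1));
            threads[i].start();
        }
        for (Thread t : threads) {
            t.join();
        }
        return maxParked.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("max parked at once: " + run(3, 6));
    }
}
```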

4. Read-write lock

1. Optimistic lock: supports concurrent operations. It typically checks a version number or uses CAS when committing a change.

2. Pessimistic lock: does not allow concurrent operations, so it is less efficient.

3. Table lock: even if only one row is modified, the whole table is locked.

4. Row lock: locks only the rows being modified; row locks can deadlock.

5. Read lock: a shared lock (many threads can read at once); read locks can deadlock.

6. Write lock: an exclusive lock (only one writer at a time); write locks can deadlock.

Why read locks can deadlock: suppose threads 1 and 2 both hold the read lock on a table and each wants to modify it. Thread 1 must wait for thread 2 to release its read lock before it can write. Thread 2 is waiting for thread 1 in the same way, so both wait forever.

Why write locks can deadlock: threads 1 and 2 each hold the write lock on a different record and then try to write the other's record. Each waits for the other, so they deadlock.

How read-write locks evolved: with a plain exclusive lock (synchronized or ReentrantLock), even readers exclude one another. A read-write lock such as ReentrantReadWriteLock lets multiple readers proceed together (read sharing) while reads and writes stay mutually exclusive. Within a single thread, a thread holding the read lock cannot acquire the write lock, but a thread holding the write lock can acquire the read lock.

Drawback of read-write locks: lock starvation. If reads never stop, a writer may never get the lock, because reads and writes are mutually exclusive. It is like a crowded subway car where a steady stream of boarding passengers makes it hard for anyone to get off.

Lock downgrading: a write lock can be downgraded to a read lock, but a read lock cannot be upgraded to a write lock. The JDK 8 documentation gives the order: acquire the write lock, acquire the read lock, release the write lock, then release the read lock (write permission is stronger than read permission).

```java
import java.util.concurrent.locks.ReentrantReadWriteLock;

// Demonstrate read-write lock downgrading
public class Demo1 {
    public static void main(String[] args) {
        // Reentrant read-write lock object
        ReentrantReadWriteLock rwLock = new ReentrantReadWriteLock();
        ReentrantReadWriteLock.ReadLock readLock = rwLock.readLock();    // read lock
        ReentrantReadWriteLock.WriteLock writeLock = rwLock.writeLock(); // write lock

        // Lock downgrading
        // 1. acquire the write lock
        writeLock.lock();
        System.out.println("---write");

        // 2. acquire the read lock (allowed while this thread holds the write lock)
        readLock.lock();
        System.out.println("---read");

        // 3. release the write lock: the thread now holds only the read lock
        writeLock.unlock();

        // 4. release the read lock
        readLock.unlock();

        // Swapping steps 1 and 2 (read lock first, then write lock) blocks forever,
        // because a read lock cannot be upgraded to a write lock.
    }
}
```

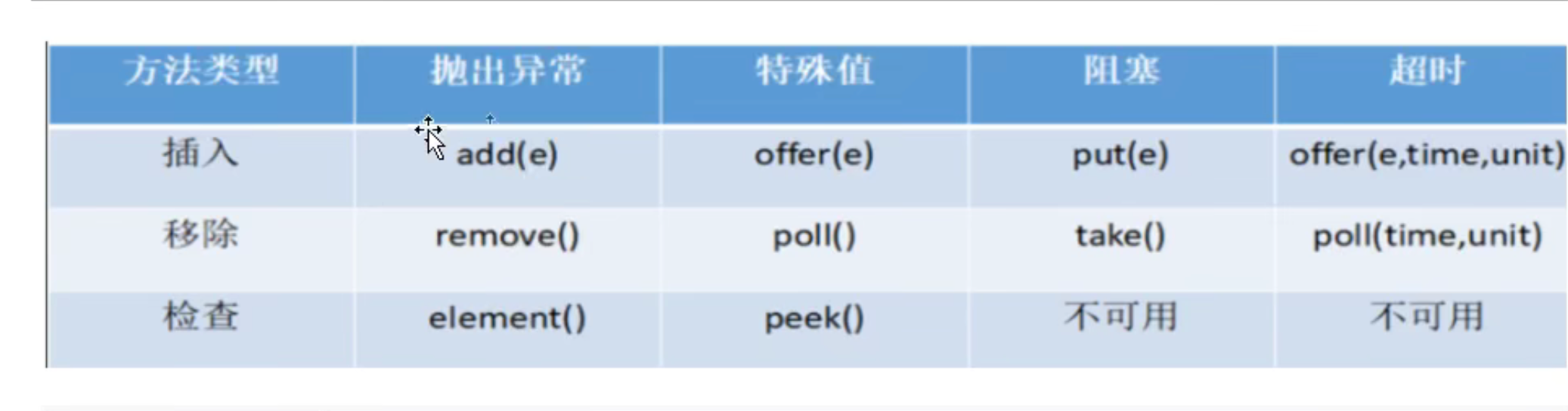

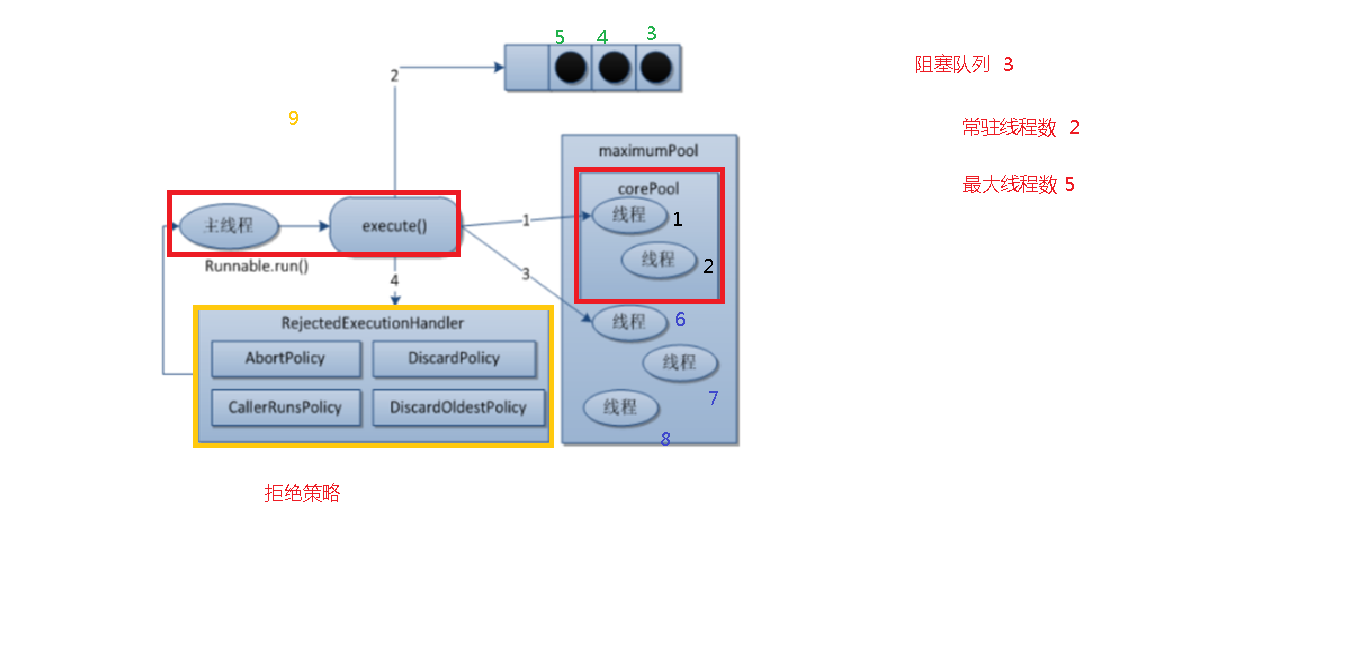
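The next sketch shows a typical use of `ReentrantReadWriteLock`: a hypothetical `RwCache` whose reads share the read lock and whose writes take the exclusive write lock. The two extra methods use `tryLock()` so that the upgrade and downgrade rules described above can be checked without hanging. Taking the write lock while holding the read lock fails. Taking the read lock while holding the write lock succeeds.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.locks.ReentrantReadWriteLock;

class RwCache {
    private final Map<String, Object> map = new HashMap<>();
    private final ReentrantReadWriteLock rwLock = new ReentrantReadWriteLock();

    // Writes take the exclusive write lock
    void put(String key, Object value) {
        rwLock.writeLock().lock();
        try {
            map.put(key, value);
        } finally {
            rwLock.writeLock().unlock();
        }
    }

    // Reads take the shared read lock, so many readers can proceed together
    Object get(String key) {
        rwLock.readLock().lock();
        try {
            return map.get(key);
        } finally {
            rwLock.readLock().unlock();
        }
    }

    // Upgrade attempt: while holding the read lock, tryLock() on the write lock fails
    boolean canUpgrade() {
        rwLock.readLock().lock();
        try {
            boolean gotWrite = rwLock.writeLock().tryLock();
            if (gotWrite) {
                rwLock.writeLock().unlock();
            }
            return gotWrite;
        } finally {
            rwLock.readLock().unlock();
        }
    }

    // Downgrade: while holding the write lock, the read lock can be acquired
    boolean canDowngrade() {
        rwLock.writeLock().lock();
        boolean gotRead;
        try {
            gotRead = rwLock.readLock().tryLock();
        } finally {
            rwLock.writeLock().unlock(); // downgraded: only the read lock (if acquired) remains
        }
        if (gotRead) {
            rwLock.readLock().unlock();
        }
        return gotRead;
    }

    public static void main(String[] args) {
        RwCache cache = new RwCache();
        cache.put("k", 42);
        System.out.println(cache.get("k"));       // 42
        System.out.println(cache.canUpgrade());   // false
        System.out.println(cache.canDowngrade()); // true
    }
}
```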

5. Blocking queue

Note: a queue is first in, first out (FIFO). remove() and poll() in the figure below take elements in the order they were inserted. A stack, by contrast, is last in, first out (LIFO).

Definition: when the queue is empty, a thread trying to take an element blocks; when the queue is full, a thread trying to add an element blocks. For example, put() on a full queue blocks at that line until another thread removes an element, then continues. If nothing is ever removed, it stays blocked. Blocking and waking happen automatically, with no manual wait/notify.

Classification:

1. ArrayBlockingQueue (commonly used): a bounded blocking queue backed by an array.

2. LinkedBlockingQueue (commonly used): a bounded blocking queue backed by a linked list (its default capacity is Integer.MAX_VALUE).

3. DelayQueue: an unbounded blocking queue of delayed elements, implemented with a priority queue.

4. PriorityBlockingQueue: an unbounded blocking queue that supports priority ordering.

5. SynchronousQueue: a blocking queue that stores no elements. Each insert must wait for a matching remove by another thread, and vice versa.

6. LinkedTransferQueue: an unbounded blocking queue backed by a linked list.

7. LinkedBlockingDeque: a double-ended (two-way) blocking queue backed by a linked list.

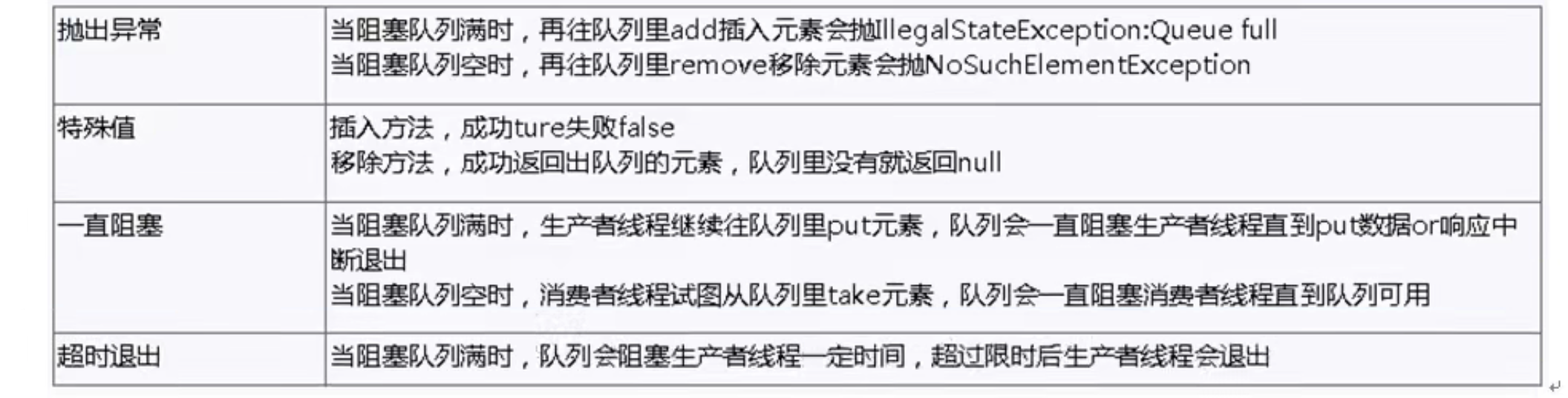
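The blocking queue API has four groups of methods:

- throw an exception (`add`/`remove`)
- return a special value (`offer`/`poll`)
- block (`put`/`take`)
- time out (`offer`/`poll` with a timeout)

The following sketch exercises each group on an `ArrayBlockingQueue` of capacity 2. The class name and log format are hypothetical.

```java
import java.util.NoSuchElementException;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.TimeUnit;

class BlockingQueueDemo {
    static String run() throws InterruptedException {
        BlockingQueue<String> queue = new ArrayBlockingQueue<>(2); // capacity 2
        StringBuilder log = new StringBuilder();

        // Group 1, throws exceptions: add() on a full queue
        queue.add("a");
        queue.add("b");
        try {
            queue.add("c");
        } catch (IllegalStateException e) {
            log.append("add-full;");
        }

        // Group 2, special values: offer() returns false on a full queue
        log.append("offer=").append(queue.offer("c")).append(';');
        // Group 4, timeout: offer() gives up after waiting 50 ms
        log.append("timedOffer=").append(queue.offer("c", 50, TimeUnit.MILLISECONDS)).append(';');

        // FIFO: elements come out in insertion order
        log.append(queue.poll()).append(queue.remove()).append(';');

        // Empty queue: poll() returns null, timed poll() returns null after waiting, remove() throws
        log.append("poll=").append(queue.poll()).append(';');
        log.append("timedPoll=").append(queue.poll(50, TimeUnit.MILLISECONDS)).append(';');
        try {
            queue.remove();
        } catch (NoSuchElementException e) {
            log.append("remove-empty;");
        }

        // Group 3, blocks: put() on a full queue waits until another thread take()s
        queue.put("x");
        queue.put("y");
        Thread consumer = new Thread(() -> {
            try {
                Thread.sleep(50);
                queue.take();                // removes "x", unblocking the put below
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        consumer.start();
        queue.put("z");                      // blocks until the consumer has taken "x"
        consumer.join();
        log.append(queue.take()).append(queue.take());
        return log.toString();
    }

    public static void main(String[] args) throws InterruptedException {
        // add-full;offer=false;timedOffer=false;ab;poll=null;timedPoll=null;remove-empty;yz
        System.out.println(run());
    }
}
```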

6. Thread pool

1) newCachedThreadPool creates a cacheable thread pool. If the pool has more threads than the workload needs, idle threads are reclaimed. If no idle thread is available, a new one is created. Characteristics: there is practically no limit on the number of worker threads (the limit is Integer.MAX_VALUE), so threads can be added freely. A worker thread that stays idle for the keep-alive time (60 seconds by default) terminates automatically. If a new task is submitted afterwards, the pool creates a new worker. When using a cached thread pool, control the number of tasks carefully; otherwise a large number of threads running at once can bring the system down.

2) newFixedThreadPool creates a pool with a fixed number of worker threads. Each submitted task creates a new worker thread until the configured number is reached. After that, submitted tasks wait in the pool's queue. A fixed thread pool is a typical, solid choice: it improves efficiency and saves the cost of creating threads. However, when the pool is idle (no runnable tasks), it does not release its worker threads, so they keep occupying some system resources.

3) newSingleThreadExecutor creates an executor with a single worker thread, so tasks run one at a time in the order they were submitted (FIFO). If that thread dies because of a failure, a new one replaces it so that the remaining tasks still run in order. Its key feature is sequential execution: no more than one thread is active at any given time.

4) newScheduledThreadPool creates a fixed-size pool that supports delayed and periodic task execution, for example running a task after a 3-second delay.
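This sketch shows three of the factory methods above. The class `ExecutorsDemo` and its numbers are hypothetical, and the 3-second delay from the text is shortened to 100 ms.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;

class ExecutorsDemo {
    // newSingleThreadExecutor: tasks run one at a time, in submission order
    static List<Integer> singleThreadOrder(int n) throws InterruptedException {
        List<Integer> order = Collections.synchronizedList(new ArrayList<>());
        ExecutorService single = Executors.newSingleThreadExecutor();
        for (int i = 0; i < n; i++) {
            final int id = i;
            single.execute(() -> order.add(id));
        }
        single.shutdown();
        single.awaitTermination(5, TimeUnit.SECONDS);
        return order;
    }

    // newFixedThreadPool: never more than 'threads' distinct worker threads, however many tasks
    static int fixedPoolThreadCount(int threads, int tasks) throws InterruptedException {
        Set<String> names = ConcurrentHashMap.newKeySet();
        ExecutorService fixed = Executors.newFixedThreadPool(threads);
        for (int i = 0; i < tasks; i++) {
            fixed.execute(() -> names.add(Thread.currentThread().getName()));
        }
        fixed.shutdown();
        fixed.awaitTermination(5, TimeUnit.SECONDS);
        return names.size();
    }

    // newScheduledThreadPool: run a task after a delay; returns the observed delay in ms
    static long delayedMillis(long delayMillis) throws Exception {
        ScheduledExecutorService scheduled = Executors.newScheduledThreadPool(1);
        long start = System.nanoTime();
        try {
            ScheduledFuture<Long> future = scheduled.schedule(
                    () -> (System.nanoTime() - start) / 1_000_000, delayMillis, TimeUnit.MILLISECONDS);
            return future.get();
        } finally {
            scheduled.shutdown();
        }
    }

    public static void main(String[] args) throws Exception {
        System.out.println(singleThreadOrder(5));        // [0, 1, 2, 3, 4]
        System.out.println(fixedPoolThreadCount(2, 20)); // 2
        System.out.println(delayedMillis(100));          // at least 100
    }
}
```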

1. A thread pool serves server-side (background) programs: it reuses threads to make better use of memory and CPU. A connection pool serves database access: it reuses database connections to save connection resources.

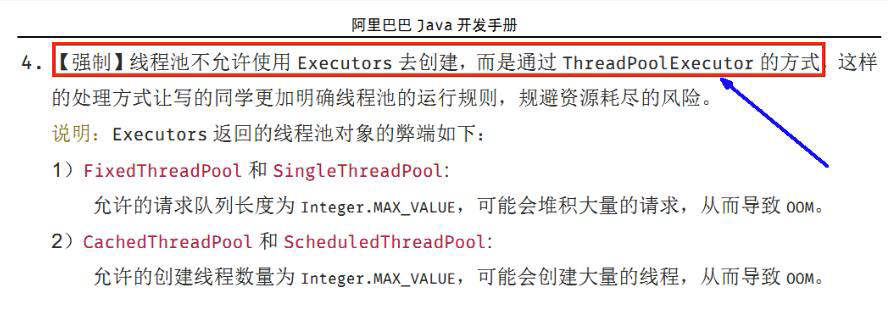

2. The thread pool in Java is implemented through the Executor framework, which uses Executor, Executors, ExecutorService and ThreadPoolExecutor

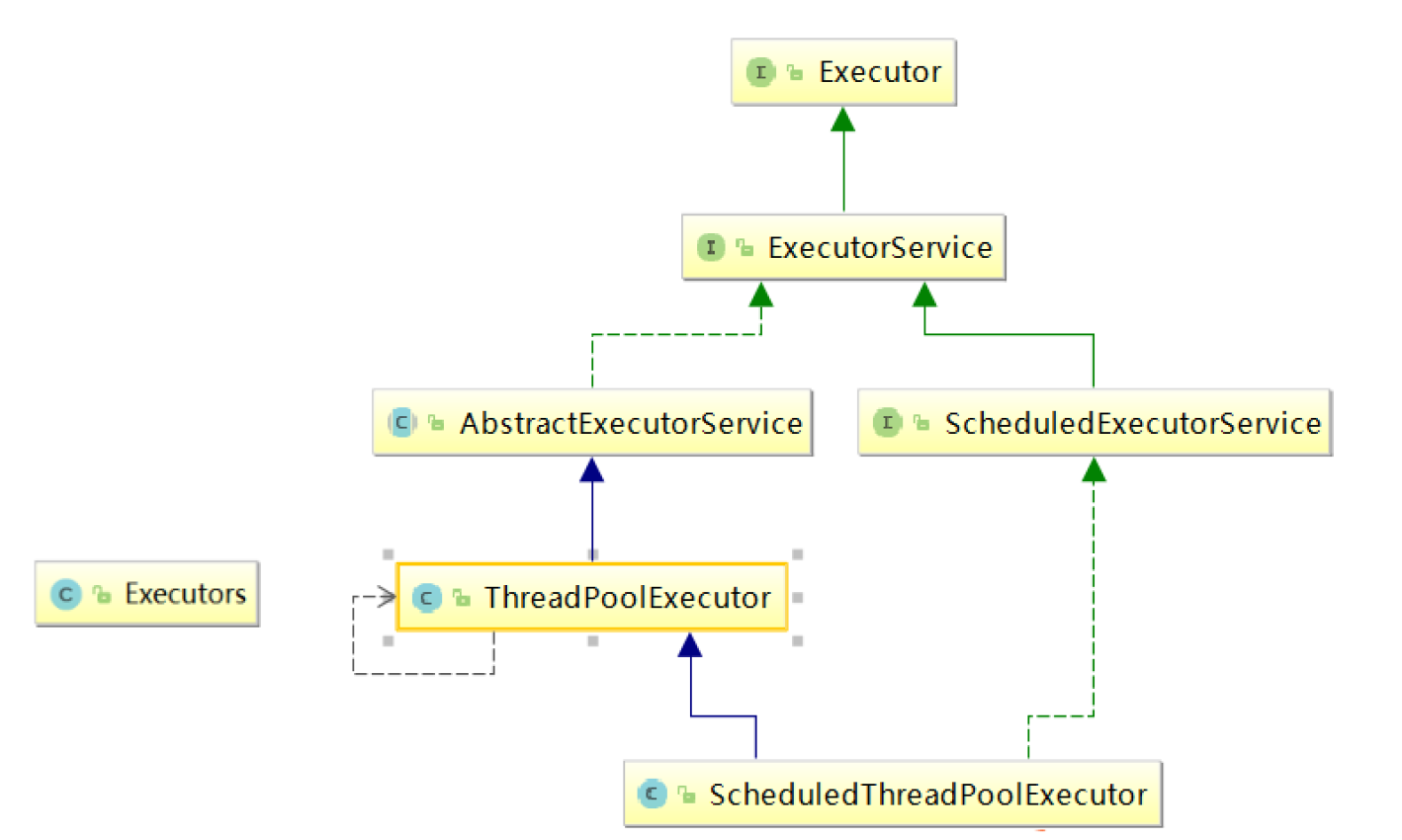

3. Under the hood, these Executors factory methods all create a ThreadPoolExecutor (or its subclass ScheduledThreadPoolExecutor). Its full constructor takes seven parameters.

4. Seven parameters

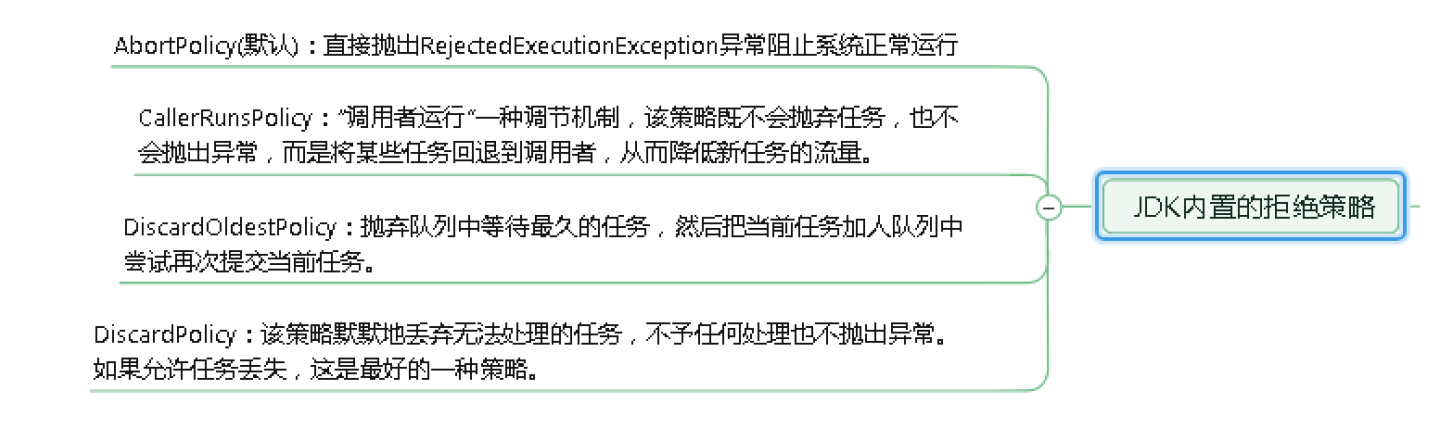

• int corePoolSize: the number of core (resident) threads in the pool
• int maximumPoolSize: the maximum number of threads the pool can hold
• long keepAliveTime: how long an idle non-core thread survives
• TimeUnit unit: the unit of keepAliveTime
• BlockingQueue<Runnable> workQueue: the queue holding tasks that were submitted but not yet executed
• ThreadFactory threadFactory: the factory used to create threads
• RejectedExecutionHandler handler: the rejection policy applied when the queue is full and the pool has reached maximumPoolSize

5. Workflow and policy. Example: 2 core threads, a queue of capacity 3, and at most 5 threads. Tasks 1 and 2 run on the core threads, and tasks 3, 4 and 5 wait in the blocking queue. When tasks 6, 7 and 8 arrive, the queue is full, so new non-core threads are created for them. These tasks run right away, ahead of the queued ones (effectively jumping the queue). Only when the core threads, the queue and the maximum pool size are all used up are further tasks handled by the rejection policy.
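The workflow above can be observed with a sketch that uses the same parameters as the custom pool in the text: 2 core threads, at most 5 threads, and a queue of 3. Each task blocks on a latch so that nothing finishes early. A counting `RejectedExecutionHandler` (hypothetical, standing in for `AbortPolicy`) records rejections instead of throwing. With 10 tasks, the pool should end up with 5 threads, 3 queued tasks and 2 rejections. The class name `PoolWorkflowDemo` is hypothetical.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class PoolWorkflowDemo {
    // Returns {poolSize, queueSize, rejected} right after submitting 'tasks' blocking tasks
    static int[] run(int tasks) throws InterruptedException {
        CountDownLatch release = new CountDownLatch(1); // keeps every task busy while we measure
        AtomicInteger rejected = new AtomicInteger();
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                2, 5, 2L, TimeUnit.SECONDS,
                new ArrayBlockingQueue<>(3),
                Executors.defaultThreadFactory(),
                (r, executor) -> rejected.incrementAndGet()); // count instead of throwing
        for (int i = 1; i <= tasks; i++) {
            pool.execute(() -> {
                try {
                    release.await();
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
        }
        int[] snapshot = {pool.getPoolSize(), pool.getQueue().size(), rejected.get()};
        release.countDown();  // let all tasks finish
        pool.shutdown();
        pool.awaitTermination(5, TimeUnit.SECONDS);
        return snapshot;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(java.util.Arrays.toString(run(10))); // [5, 3, 2]
    }
}
```

Tasks 1 and 2 start the core threads, tasks 3 to 5 fill the queue, tasks 6 to 8 start non-core threads, and tasks 9 and 10 are rejected.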

6. Custom thread pool

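```java
import java.util.concurrent.*;

// Create a custom thread pool
public class ThreadPoolDemo2 {
    public static void main(String[] args) {
        ExecutorService threadPool = new ThreadPoolExecutor(
                2,                                    // corePoolSize
                5,                                    // maximumPoolSize
                2L,                                   // keepAliveTime
                TimeUnit.SECONDS,                     // unit
                new ArrayBlockingQueue<>(3),          // workQueue
                Executors.defaultThreadFactory(),     // threadFactory
                new ThreadPoolExecutor.AbortPolicy()  // handler: throws RejectedExecutionException
        );

        // 10 customer requests. Capacity is 5 threads + 3 queue slots = 8, so a request that
        // arrives while all 8 are still busy is rejected; the exception also ends the loop.
        try {
            for (int i = 1; i <= 10; i++) {
                // Execute
                threadPool.execute(() -> {
                    System.out.println(Thread.currentThread().getName() + " Handle the business");
                });
            }
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            // Close
            threadPool.shutdown();
        }
    }
}
```

7. Fork/Join framework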

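1. Fork splits a complex task into smaller subtasks, and Join merges the results of those subtasks (similar to MapReduce). A task is usually written by extending RecursiveTask (which returns a result) or RecursiveAction (which does not), and it splits itself recursively inside compute().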

```java
import java.util.concurrent.*;

class MyTask extends RecursiveTask<Integer> {
    // Ranges no wider than 10 are summed directly instead of being split further
    private static final int VALUE = 10;
    private final int begin; // start of the range
    private final int end;   // end of the range (inclusive)

    // Constructor with parameters
    public MyTask(int begin, int end) {
        this.begin = begin;
        this.end = end;
    }

    // Split and merge
    @Override
    protected Integer compute() {
        // If the range is small enough, add the numbers directly
        if ((end - begin) <= VALUE) {
            int result = 0;
            for (int i = begin; i <= end; i++) {
                result += i;
            }
            return result;
        }
        // Otherwise split the range in half
        int middle = (begin + end) / 2;
        MyTask task01 = new MyTask(begin, middle);   // left half
        MyTask task02 = new MyTask(middle + 1, end); // right half
        // Fork the left half asynchronously and compute the right half in the current thread
        task01.fork();
        int right = task02.compute();
        // Merge the results
        return task01.join() + right;
    }
}

public class ForkJoinDemo {
    public static void main(String[] args) throws ExecutionException, InterruptedException {
        // Create the MyTask object
        MyTask myTask = new MyTask(0, 100);
        // Create the fork/join pool
        ForkJoinPool forkJoinPool = new ForkJoinPool();
        ForkJoinTask<Integer> forkJoinTask = forkJoinPool.submit(myTask);
        // Get the final merged result (5050)
        Integer result = forkJoinTask.get();
        System.out.println(result);
        // Shut down the pool
        forkJoinPool.shutdown();
    }
}
```

2. CompletableFuture asynchronous callbacks (you can inspect its class hierarchy in IntelliJ IDEA)

```java
import java.util.concurrent.CompletableFuture;

// Asynchronous calls with and without a return value
public class CompletableFutureDemo {
    public static void main(String[] args) throws Exception {
        // Asynchronous call with no return value: runAsync
        CompletableFuture<Void> completableFuture1 = CompletableFuture.runAsync(() -> {
            System.out.println(Thread.currentThread().getName() + " : CompletableFuture1");
        });
        // Wait for it to finish
        completableFuture1.get();

        // Similar idea: an MQ message queue
        // Asynchronous call with a return value: supplyAsync
        CompletableFuture<Integer> completableFuture2 = CompletableFuture.supplyAsync(() -> {
            System.out.println(Thread.currentThread().getName() + " : CompletableFuture2");
            // Simulate an exception
            int i = 10 / 0;
            return 1024;
        });
        // whenComplete receives the result t (null on failure) and the exception u (null on success)
        completableFuture2.whenComplete((t, u) -> {
            System.out.println("------t=" + t);
            System.out.println("------u=" + u);
        }).get(); // rethrows the failure as an ExecutionException
    }
}
```

8. Spin lock

A spin lock keeps retrying until it succeeds. It is usually implemented with CAS (compare-and-swap).

Mutex characteristics: a thread locks a shared resource before accessing it and unlocks it afterwards. While the resource is locked, any other thread that tries to lock it is blocked until the owner unlocks it. If several threads are blocked when the lock is released, all of them become runnable. The first one to run acquires the lock, and the others go back to waiting. In this way only one thread at a time can access the resource protected by the mutex: only one thread can hold the mutex, and the others must wait.

A spin lock is a special kind of mutex. When another thread tries to lock a resource that is already locked, it is not put to sleep. Instead it loops (busy-waits, so the CPU cannot do other work) and repeatedly checks whether the holder has released the resource. This avoids the cost of putting a thread to sleep and waking it up again, but it keeps consuming CPU while waiting. A spin lock suits cases where the lock is held only briefly and you do not want to pay the cost of waking threads.
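Below is a minimal spin lock built on CAS, assuming a non-reentrant design. The `SpinLock` and `SpinLockDemo` names are hypothetical; this is not a JDK class. `lock()` loops on `AtomicReference.compareAndSet(null, currentThread)` instead of blocking, and `unlock()` lets only the owner release the lock.

```java
import java.util.concurrent.atomic.AtomicReference;

class SpinLock {
    private final AtomicReference<Thread> owner = new AtomicReference<>();

    void lock() {
        Thread current = Thread.currentThread();
        // CAS null -> current; if another thread owns the lock, the CAS fails and we try again
        while (!owner.compareAndSet(null, current)) {
            // busy-wait: the thread stays runnable instead of going to sleep
        }
    }

    void unlock() {
        // Only the owner may release the lock: CAS current -> null
        if (!owner.compareAndSet(Thread.currentThread(), null)) {
            throw new IllegalMonitorStateException("current thread does not hold the lock");
        }
    }
}

class SpinLockDemo {
    private static int count = 0; // protected by the spin lock

    static int run(int threads, int perThread) throws InterruptedException {
        SpinLock lock = new SpinLock();
        count = 0;
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < perThread; i++) {
                    lock.lock();
                    try {
                        count++;
                    } finally {
                        lock.unlock();
                    }
                }
            });
            workers[t].start();
        }
        for (Thread w : workers) {
            w.join();
        }
        return count;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(run(4, 10_000)); // 40000
    }
}
```

On Java 9 and later, calling `Thread.onSpinWait()` inside the loop tells the runtime that the thread is busy-waiting. Because the waiting threads never sleep, this design fits only very short critical sections, like the single increment here.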

9. Biased lock

Even when there is no actual contention, some scenarios can be optimized further. If there is no contention and only one thread ever uses the lock, maintaining a lightweight lock is wasteful. Biased locking aims to cut the cost of lightweight locking in exactly this case: no contention and a single thread using the lock. A lightweight lock needs at least one CAS on every acquire and release, whereas a biased lock needs a CAS only once, at initialization.

"Biased" means the lock assumes that only the first thread that acquires it will use it from then on, and that no other thread will ever request it. So it needs only one CAS to record the owner in the Mark Word. This is still an update, but the initial value is empty. If the CAS succeeds, the biased lock is acquired and the lock state is recorded as biased. Afterwards, whenever the current thread is the owner, it gets the lock directly at essentially zero cost. Otherwise, another thread is competing, and the lock is inflated to a lightweight lock.

Spin-lock optimization does not apply to biased locking, because the biased-locking assumption breaks as soon as another thread requests the lock.

Drawbacks

Likewise, if other threads clearly do request the lock, a biased lock quickly inflates into a lightweight lock.

However, this side effect is much smaller.

If necessary, disable biased locking with -XX:-UseBiasedLocking. It was enabled by default up to JDK 14; JDK 15 disabled it by default and deprecated it (JEP 374).