This multi-node deployment builds on the single-node (master01) deployment completed previously.

master02 node deployment

1. Copy the certificate files, configuration files and systemd service files of each master component from the master01 node to the master02 node

scp -r /opt/etcd/ root@192.168.226.80:/opt/

scp -r /opt/kubernetes/ root@192.168.226.80:/opt

scp /usr/lib/systemd/system/{kube-apiserver,kube-controller-manager,kube-scheduler}.service root@192.168.226.80:/usr/lib/systemd/system/

2. Modify the IP addresses in the kube-apiserver configuration file

vim /opt/kubernetes/cfg/kube-apiserver

Change --bind-address and --advertise-address to the IP of this master node (192.168.226.80). Leave the other options as they were copied from master01:

KUBE_APISERVER_OPTS="--logtostderr=true \
--v=4 \
--etcd-servers=https://192.168.226.70:2379,https://192.168.226.40:2379,https://192.168.226.50:2379 \
--bind-address=192.168.226.80 \
--secure-port=6443 \
--advertise-address=192.168.226.80 \
......
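You can script the two address changes instead of editing them by hand. The following is a minimal sketch that assumes a standard `sed` and uses a hypothetical helper name, `set_apiserver_ip`. It rewrites only `--bind-address` and `--advertise-address` and leaves every other option as copied from master01.

```shell
# Hypothetical helper: set --bind-address and --advertise-address in a
# kube-apiserver options file to the given IP; all other options are untouched.
set_apiserver_ip() {
    local file=$1 ip=$2    # e.g. /opt/kubernetes/cfg/kube-apiserver 192.168.226.80
    # Write to a temp file and move it back, so this works without GNU sed -i
    sed -e "s/--bind-address=[0-9.]*/--bind-address=$ip/" \
        -e "s/--advertise-address=[0-9.]*/--advertise-address=$ip/" \
        "$file" > "$file.tmp" && mv "$file.tmp" "$file"
}

# Usage on master02, after copying /opt/kubernetes from master01:
# set_apiserver_ip /opt/kubernetes/cfg/kube-apiserver 192.168.226.80
```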

3. Start the master component services on the master02 node and enable them to start at boot

systemctl start kube-apiserver.service
systemctl enable kube-apiserver.service
systemctl start kube-controller-manager.service
systemctl enable kube-controller-manager.service
systemctl start kube-scheduler.service
systemctl enable kube-scheduler.service
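The six commands above repeat the same two steps for three units, so a loop expresses them more compactly. This is a sketch. `SYSTEMCTL` is a hypothetical variable added only so the loop can be dry-run with `SYSTEMCTL=echo`; by default it calls the real `systemctl`.

```shell
# Start and enable the three control-plane services, in the same order as above.
# SYSTEMCTL is a hypothetical override hook; it defaults to the real systemctl.
start_master_services() {
    for svc in kube-apiserver kube-controller-manager kube-scheduler; do
        ${SYSTEMCTL:-systemctl} start "$svc.service" || return 1
        ${SYSTEMCTL:-systemctl} enable "$svc.service" || return 1
    done
}

# Dry run (prints the commands instead of running them):
# SYSTEMCTL=echo start_master_services
```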

4. View node status

ln -s /opt/kubernetes/bin/* /usr/local/bin/    # put the Kubernetes binaries on the PATH
kubectl get nodes
kubectl get nodes -o wide    # -o wide: print additional columns; for pods this includes the name of the node the pod runs on
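To check the output of `kubectl get nodes` in a script rather than by eye, a small awk filter can test the STATUS column. This is a sketch with a hypothetical function name. It treats any status other than exactly `Ready` (for example `NotReady` or `Ready,SchedulingDisabled`) as a failure.

```shell
# Hypothetical helper: read `kubectl get nodes` output on stdin and succeed
# only if there is at least one node and every STATUS (column 2) is "Ready".
all_nodes_ready() {
    awk 'NR > 1 { n++; if ($2 != "Ready") bad++ }
         END { if (n > 0 && bad == 0) exit 0; exit 1 }'
}

# Usage:
# kubectl get nodes | all_nodes_ready && echo "all nodes are Ready"
```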

Load balancing deployment

Configure a two-machine hot-standby load balancer cluster: nginx provides the load balancing and keepalived provides the hot-standby failover.

1, Deploy nginx reverse proxy service

1. Configure the official nginx online yum repository as a local repo file, then install nginx

cat > /etc/yum.repos.d/nginx.repo << 'EOF'
[nginx]
name=nginx repo
baseurl=http://nginx.org/packages/centos/7/$basearch/
gpgcheck=0
EOF

yum install nginx -y    # install nginx

2. Modify the nginx configuration file to set up layer-4 (TCP) reverse proxy load balancing, listing the node IPs and port 6443 of the two master servers in the k8s cluster

vim /etc/nginx/nginx.conf

events {
    worker_connections 1024;
}

# Add the following stream block at the same level as events and http
stream {
    log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';
    access_log /var/log/nginx/k8s-access.log main;

    upstream k8s-apiserver {
        server 192.168.226.70:6443;    # upstream address pool used by the reverse proxy: master01
        server 192.168.226.80:6443;    # master02
    }

    server {
        listen 6443;
        proxy_pass k8s-apiserver;
    }
}

http {
......
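The upstream pool is the only part of the stream block that changes with the number of masters. The hypothetical generator below prints the same block for any list of master IPs passed as arguments. The function name is an assumption, and the output still has to be pasted into /etc/nginx/nginx.conf and checked with `nginx -t`.

```shell
# Hypothetical generator: print the stream{} block shown above, with one
# upstream "server <ip>:6443;" entry per master IP given as an argument.
gen_k8s_stream_block() {
    echo 'stream {'
    # \$ keeps the nginx variables literal in the output
    echo "    log_format main '\$remote_addr \$upstream_addr - [\$time_local] \$status \$upstream_bytes_sent';"
    echo '    access_log /var/log/nginx/k8s-access.log main;'
    echo '    upstream k8s-apiserver {'
    for ip in "$@"; do
        echo "        server $ip:6443;"
    done
    echo '    }'
    echo '    server {'
    echo '        listen 6443;'
    echo '        proxy_pass k8s-apiserver;'
    echo '    }'
    echo '}'
}

# Usage:
# gen_k8s_stream_block 192.168.226.70 192.168.226.80
```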

//Check configuration file syntax

nginx -t

//Start the nginx service and check that it is listening on port 6443

systemctl start nginx

systemctl enable nginx

netstat -natp | grep nginx
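`netstat -natp | grep nginx` has to be read by eye. The sketch below scripts the same check. It assumes the usual net-tools `netstat -natp` layout, with the state in column 6 and the PID/program name in column 7. The hypothetical function succeeds only if an nginx process holds a LISTEN socket on the given port.

```shell
# Hypothetical check: read `netstat -natp` output on stdin and succeed if a
# process named nginx is in LISTEN state on the given port.
nginx_listening_on() {
    awk -v port="$1" '
        # column 4: local address ending in :<port>; column 7: "<pid>/nginx:"
        $6 == "LISTEN" && $4 ~ (":" port "$") && $7 ~ /\/nginx/ { found = 1 }
        END { if (found) exit 0; exit 1 }'
}

# Usage:
# netstat -natp | nginx_listening_on 6443 && echo "nginx is listening on 6443"
```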

2, Deploy keepalived service

yum install keepalived -y    # install the keepalived service

1. Modify the keepalived configuration file

vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {
    # Addresses that receive notification email
    notification_email {
        acassen@firewall.loc
        failover@firewall.loc
        sysadmin@firewall.loc
    }
    # Sender address
    notification_email_from Alexandre.Cassen@firewall.loc
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id NGINX_MASTER    # NGINX_MASTER on nginx01, NGINX_BACKUP on nginx02
}

# Add a script that keepalived runs periodically
vrrp_script check_nginx {
    script "/etc/nginx/check_nginx.sh"    # path of the script that checks whether nginx is alive
}

vrrp_instance VI_1 {
    state MASTER              # MASTER on nginx01, BACKUP on nginx02
    interface ens33           # network interface name
    virtual_router_id 51      # VRID; must be the same on both nodes
    priority 100              # 100 on nginx01, 90 on nginx02
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.226.10/24     # VIP
    }
    track_script {
        check_nginx           # the vrrp_script defined above
    }
}
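Only three values differ between the two keepalived nodes. The hypothetical helper below prints those values for each node as a compact reminder. The node names nginx01 and nginx02 follow the comments above. Everything else in the file, including `virtual_router_id 51`, the password and the VIP, stays identical on both nodes.

```shell
# Hypothetical helper: print the per-node keepalived.conf values for the
# given load balancer name; all other settings are shared by both nodes.
keepalived_role_values() {
    case "$1" in
        nginx01) printf 'router_id NGINX_MASTER\nstate MASTER\npriority 100\n' ;;
        nginx02) printf 'router_id NGINX_BACKUP\nstate BACKUP\npriority 90\n' ;;
        *) echo "unknown node: $1" >&2; return 1 ;;
    esac
}

# Usage:
# keepalived_role_values nginx02
```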

3. Create the nginx status check script

vim /etc/nginx/check_nginx.sh

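#!/bin/bash
# Count ps lines containing "nginx", excluding lines that contain "grep" or $$
# (the PID of this script). The script's own name contains "nginx", so without
# $$ its own line would be counted; $$ also excludes the command-substitution
# subshell, whose PPID is $$.
count=$(ps -ef | grep nginx | egrep -cv "grep|$$")

# If no nginx process is left, stop keepalived so the VIP moves to the backup node
if [ "$count" -eq 0 ];then
    systemctl stop keepalived
fi

chmod +x /etc/nginx/check_nginx.sh    # after saving the script, make it executable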
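The `egrep -cv "grep|$$"` filter is easier to see in isolation. The counting step can be pulled out into a hypothetical function that takes the PID as an argument and reads `ps -ef` text on stdin. Like the original, it matches the PID as a substring anywhere on the line, so it can occasionally also drop an unrelated line that happens to contain those digits.

```shell
# Counting logic of check_nginx.sh as a hypothetical function:
# $1 plays the role of $$ in the real script; ps -ef text comes from stdin.
count_nginx_procs() {
    # grep -c prints 0 but exits 1 when nothing matches; "|| true" keeps the status 0
    grep nginx | grep -Ecv "grep|$1" || true
}

# Usage inside the real script:
# count=$(ps -ef | count_nginx_procs "$$")
```

On systems with procps, `pgrep -x nginx >/dev/null` tests the same condition more directly, since it matches process names rather than full command lines and excludes itself.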

4. Start the keepalived service (start nginx first: if nginx is not running, the check script stops keepalived immediately)

systemctl start keepalived

systemctl enable keepalived

5. On each node, change the server address in bootstrap.kubeconfig, kubelet.kubeconfig and kube-proxy.kubeconfig to the VIP

cd /opt/kubernetes/cfg/

vim bootstrap.kubeconfig

server: https://192.168.226.10:6443

vim kubelet.kubeconfig

server: https://192.168.226.10:6443

vim kube-proxy.kubeconfig

server: https://192.168.226.10:6443
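Editing three files by hand on every node is error-prone. The sketch below uses `sed` and assumes each file has a single `server:` line, as in the kubeconfig files above. The helper name `set_kubeconfig_server` and the `.bak` backup copies are hypothetical additions.

```shell
# Hypothetical helper: rewrite the "server:" line of each kubeconfig file
# given after the URL, keeping the indentation and a <file>.bak backup.
set_kubeconfig_server() {
    local url="$1" f
    shift
    for f in "$@"; do
        cp "$f" "$f.bak" || return 1
        # "#" is the sed delimiter because the URL contains "/"
        sed "s#^\([[:space:]]*server:\).*#\1 $url#" "$f.bak" > "$f" || return 1
    done
}

# Usage on each node:
# cd /opt/kubernetes/cfg/
# set_kubeconfig_server https://192.168.226.10:6443 \
#     bootstrap.kubeconfig kubelet.kubeconfig kube-proxy.kubeconfig
```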

6. Restart the kubelet and kube-proxy services

systemctl restart kubelet.service

systemctl restart kube-proxy.service

Operate on the master01 node

1. Create a test pod

# Create a pod
kubectl run nginx --image=nginx

# Check the pods; the pod is still being created
kubectl get pods
NAME                    READY   STATUS              RESTARTS   AGE
nginx-dbddb74b8-gkgh7   0/1     ContainerCreating   0          12s

# Check again; the pod is now running
kubectl get pods
NAME                    READY   STATUS    RESTARTS   AGE
nginx-dbddb74b8-gkgh7   1/1     Running   0          27s

# Show more details, such as the node the pod runs on and the pod IP
kubectl get pods -o wide
NAME                    READY   STATUS    RESTARTS   AGE    IP            NODE             NOMINATED NODE
nginx-dbddb74b8-gkgh7   1/1     Running   0          109s   172.17.97.2   192.168.226.40   <none>

# Viewing the pod's log fails with a permission error
kubectl logs nginx-dbddb74b8-gkgh7
Error from server (Forbidden): Forbidden (user=system:anonymous, verb=get, resource=nodes, subresource=proxy) ( pods/log nginx-dbddb74b8-gkgh7)

# On the master01 node, bind the cluster-admin role to the user system:anonymous
# (note: this grants cluster-admin rights to unauthenticated requests)
kubectl create clusterrolebinding cluster-system-anonymous --clusterrole=cluster-admin --user=system:anonymous
clusterrolebinding.rbac.authorization.k8s.io/cluster-system-anonymous created

# Check the nginx log again; it can now be viewed
kubectl logs nginx-dbddb74b8-gkgh7

4, Verify it

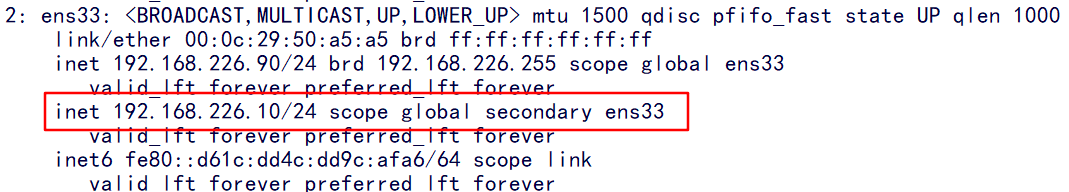

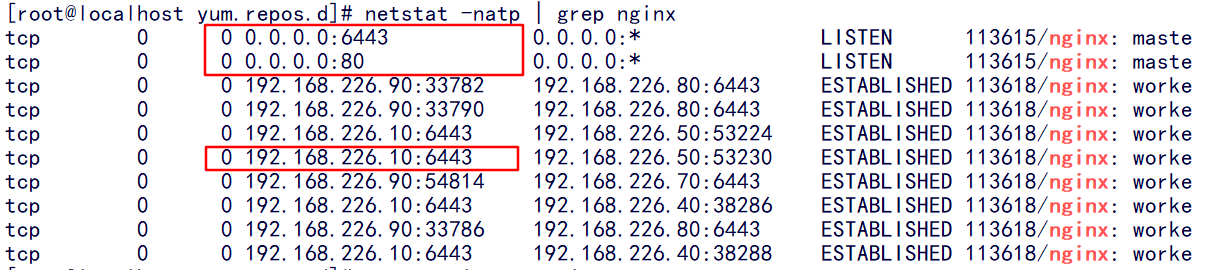

On the nginx01 node, check which ports nginx is listening on

netstat -natp | grep nginx

nginx is listening on ports 6443 and 80, and port 6443 is reachable through the virtual IP 192.168.226.10 held by this node.

Now stop the nginx service on this node and verify that keepalived fails over correctly.

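systemctl stop nginx     # stop nginx; the check script then stops keepalived and the VIP moves to nginx02
systemctl start nginx    # restore nginx after the test (keepalived must then be started again, because the check script stopped it)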

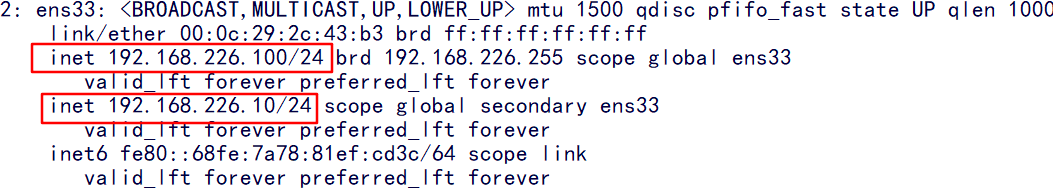

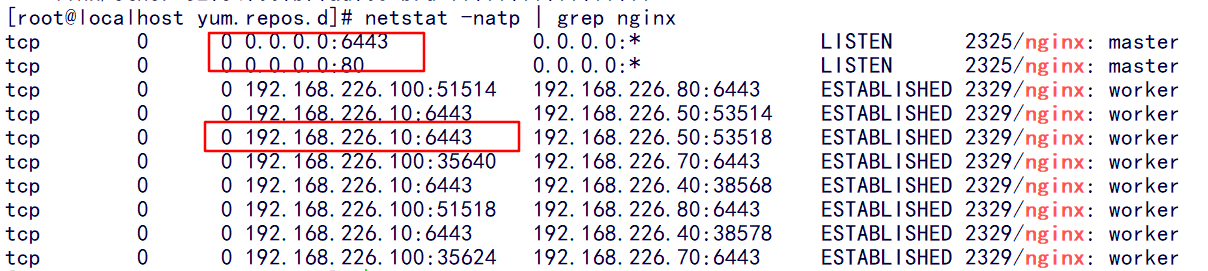

After nginx is stopped, go to the nginx02 node, check whether the virtual IP has moved to it, and check which ports nginx is listening on there.

The virtual IP has moved to the .100 host (nginx02).

nginx is also listening there, which shows that the multi-master, load-balanced, high-availability setup is working.
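You can script the check for which load balancer currently holds the VIP instead of reading `ip addr` output by eye. The hypothetical `has_vip` below reads `ip -4 addr show` output and compares addresses exactly. An exact comparison matters here: a plain `grep 192.168.226.10` would also match 192.168.226.100.

```shell
# Hypothetical check: read `ip -4 addr show` output on stdin and succeed if
# the given VIP is assigned to one of the listed interfaces.
has_vip() {
    awk -v vip="$1" '
        # "inet 192.168.226.10/24 ..." -> compare the part before "/" exactly
        $1 == "inet" { split($2, a, "/"); if (a[1] == vip) found = 1 }
        END { if (found) exit 0; exit 1 }'
}

# Usage on nginx01 and nginx02:
# ip -4 addr show ens33 | has_vip 192.168.226.10 && echo "VIP is on this node"
```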