Introduction to the kube-apiserver component

- kube-apiserver is the data bus and data hub of the whole system. It exposes HTTP REST interfaces for creating, deleting, updating, and querying k8s resource objects (Pod, RC, Service, etc.), as well as for watching them for changes.

Functions of kube-apiserver

- Provides the REST API for cluster management (including authentication and authorization, data validation, and cluster state changes)

- Serves as the hub for data exchange and communication between the other modules (they query or modify data through the API server; only the API server operates on etcd directly)

- Acts as the entry point for resource quota control

- Provides a complete cluster security mechanism

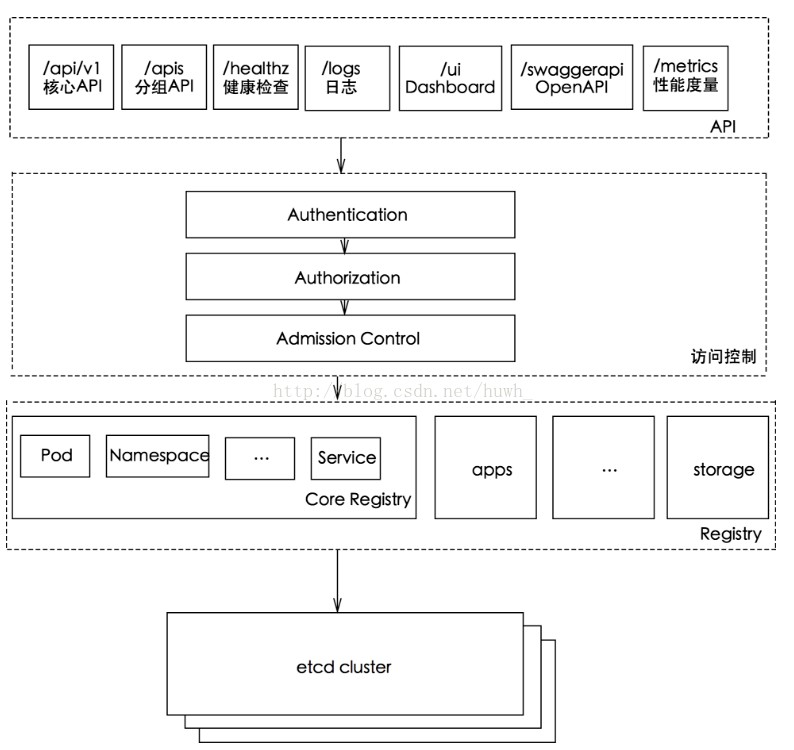

Schematic diagram of kube-apiserver

Accessing the Kubernetes API

- K8s provides its services through the kube-apiserver process, which runs on a single k8s master node. By default it listens on two ports:

- Local port

  - This port receives HTTP requests

  - The default port is 8080; change it with the API server startup parameter "--insecure-port"

  - The default IP address is "localhost"; change it with the startup parameter "--insecure-bind-address"

  - HTTP requests reach the API server through this port without authentication or authorization

- Secure port

  - The default port is 6443; change it with the startup parameter "--secure-port"

  - The default IP address is a non-localhost network interface; set it with the startup parameter "--bind-address"

  - This port receives HTTPS requests

  - Supports authentication based on a token file, client certificates, or HTTP Basic

  - Supports policy-based authorization

  - HTTPS security access control is not enabled by default
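
To see which of the two ports a running apiserver is actually listening on, a small probe can help. The following is only a sketch: `port_open` is a hypothetical helper that relies on bash's `/dev/tcp` pseudo-device rather than on any Kubernetes tooling, and the `LOCAL_ADDR` and `SECURE_ADDR` variables are assumptions that should be set to the bind addresses the apiserver was started with.

```bash
# Hypothetical helper (not part of Kubernetes): succeed if a TCP connection
# to HOST:PORT can be opened within one second.
port_open() {
    # /dev/tcp is a bash feature, so the probe runs in an explicit bash child process.
    timeout 1 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

# Assumption: override these to match the bind addresses of the two ports.
LOCAL_ADDR=${LOCAL_ADDR:-127.0.0.1}
SECURE_ADDR=${SECURE_ADDR:-127.0.0.1}

for target in "$LOCAL_ADDR 8080" "$SECURE_ADDR 6443"; do
    # Intentionally unquoted so that "addr port" splits into two arguments.
    if port_open $target; then
        echo "$target: listening"
    else
        echo "$target: not reachable"
    fi
done
```

A successful probe only shows that something accepts TCP connections on the port; it does not confirm that the listener is kube-apiserver.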

Introduction to the kube-controller-manager component

- As the internal management and control center of the cluster, kube-controller-manager manages Nodes, Pod replicas, Endpoints, Namespaces, ServiceAccounts, and ResourceQuotas. When a Node goes down unexpectedly, the controller manager detects the failure promptly and runs an automated repair process so that the cluster stays in its expected working state.
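
The repair behaviour described above is commonly pictured as a reconcile step that compares the desired state with the observed state. The sketch below is a toy illustration of that idea, not the controller manager's actual code; the `reconcile` function and its output strings are hypothetical.

```bash
# Hypothetical reconcile step: compare desired and observed replica counts
# and print the corrective action a controller would take.
reconcile() {
    desired=$1
    observed=$2
    if [ "$observed" -lt "$desired" ]; then
        echo "create $((desired - observed))"
    elif [ "$observed" -gt "$desired" ]; then
        echo "delete $((observed - desired))"
    else
        echo "noop"
    fi
}

reconcile 3 1   # e.g. a node went down and two replicas were lost
reconcile 3 3   # steady state, nothing to do
```

A real controller runs such a step repeatedly, driven by change notifications from the API server.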

Introduction to the kube-scheduler component

- kube-scheduler is built as a set of plug-ins, which makes it extensible and customizable. It is the scheduling decision maker for the whole cluster and determines the best placement for a container in two phases: filtering (predicates) and scoring (priorities).

- kube-scheduler's responsibility is to find the most suitable node in the cluster for each newly created pod and schedule the pod onto that node:

  - Filtering: from all nodes in the cluster, select every node that can run the pod according to the scheduling algorithm

  - Scoring: from those candidates, select the optimal node as the final result according to the scheduling algorithm

- The scheduler runs on the master node. Its core function is to watch the apiserver for pods whose PodSpec.NodeName is empty, create a binding for each such pod that indicates which node it should be scheduled to, and write the scheduling result back to the apiserver.
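
The two-phase selection can be sketched with a toy example. Everything here is an assumption made for illustration: the node inventory, the `schedule` function, and the scoring rule (prefer the node with the most CPU left over). The real scheduler combines many filter and score plug-ins.

```bash
# Hypothetical node inventory: name, free CPU (millicores), free memory (MiB).
nodes='node01 400 2048
node02 1500 1024
node03 2000 4096'

# schedule CPU_REQUEST MEM_REQUEST: print the chosen node, or nothing if none fits.
schedule() {
    printf '%s\n' "$nodes" | awk -v cpu="$1" -v mem="$2" '
        # Phase 1 (filter): skip nodes that cannot satisfy the request.
        $2 >= cpu && $3 >= mem {
            # Phase 2 (score): prefer the node with the most CPU left afterwards.
            score = $2 - cpu
            if (best == "" || score > best_score) { best = $1; best_score = score }
        }
        END { if (best != "") print best }'
}

schedule 500 512     # node01 is filtered out; node03 scores highest
schedule 5000 512    # no node fits, so nothing is printed
```

In the real system, the chosen node name would then be written back through the apiserver as a binding for the pod.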

Main responsibilities of kube-scheduler

- Cluster high availability: if kube-scheduler is started with the "--leader-elect" parameter, a leader is elected through etcd (both kube-scheduler and kube-controller-manager use a high-availability scheme with one active leader and multiple standbys)

- Scheduling resource monitoring: watches resource changes on kube-apiserver through the list-watch mechanism. The resources here are mainly Pods and Nodes

- Node allocation: uses the predicates and priorities strategies to assign a Node to each Pod awaiting scheduling, binds the Pod by filling in its nodeName, and writes the allocation result to etcd through kube-apiserver

Experimental deployment

Experimental environment

- master01: 192.168.80.12

- node01: 192.168.80.13

- node02: 192.168.80.14

- This deployment builds on the Flannel deployment from the previous article, so the experimental environment is unchanged. This part mainly deploys the components required by the master node

kube-apiserver component deployment

- On the master01 server, configure the self-signed certificates for the apiserver

    [root@master01 k8s]# cd /mnt/        # enter the host mount directory
    [root@master01 mnt]# ls
    etcd-cert     etcd-v3.3.10-linux-amd64.tar.gz     k8s-cert.sh                           master.zip
    etcd-cert.sh  flannel.sh                          kubeconfig.sh                         node.zip
    etcd.sh       flannel-v0.10.0-linux-amd64.tar.gz  kubernetes-server-linux-amd64.tar.gz
    [root@master01 mnt]# cp master.zip /root/k8s/        # copy the archive to the k8s working directory
    [root@master01 mnt]# cd /root/k8s/        # enter the k8s working directory
    [root@master01 k8s]# ls
    cfssl.sh   etcd-v3.3.10-linux-amd64            kubernetes-server-linux-amd64.tar.gz
    etcd-cert  etcd-v3.3.10-linux-amd64.tar.gz     master.zip
    etcd.sh    flannel-v0.10.0-linux-amd64.tar.gz
    [root@master01 k8s]# unzip master.zip        # unzip the archive
    Archive:  master.zip
      inflating: apiserver.sh
      inflating: controller-manager.sh
      inflating: scheduler.sh
    [root@master01 k8s]# mkdir /opt/kubernetes/{cfg,bin,ssl} -p        # create the working directory on master01, as was done on the nodes earlier
    [root@master01 k8s]# mkdir k8s-cert        # create the self-signed certificate directory
    [root@master01 k8s]# cp /mnt/k8s-cert.sh /root/k8s/k8s-cert        # copy the certificate script from the mount into that directory
    [root@master01 k8s]# cd k8s-cert        # enter the directory
    [root@master01 k8s-cert]# vim k8s-cert.sh        # edit the copied script
    ...
    # Addresses to set in "hosts" below (JSON cannot carry inline comments):
    #   192.168.80.12   master01
    #   192.168.80.11   master02, reserved for the multi-master setup done later
    #   192.168.80.100  VRRP address, reserved for load balancing later
    #   192.168.80.13   node01
    #   192.168.80.14   node02
    cat > server-csr.json <<EOF
    {
        "CN": "kubernetes",
        "hosts": [
          "10.0.0.1",
          "127.0.0.1",
          "192.168.80.12",
          "192.168.80.11",
          "192.168.80.100",
          "192.168.80.13",
          "192.168.80.14",
          "kubernetes",
          "kubernetes.default",
          "kubernetes.default.svc",
          "kubernetes.default.svc.cluster",
          "kubernetes.default.svc.cluster.local"
        ],
        "key": {
            "algo": "rsa",
            "size": 2048
        },
        "names": [
            {
                "C": "CN",
                "L": "BeiJing",
                "ST": "BeiJing",
                "O": "k8s",
                "OU": "System"
            }
        ]
    }
    EOF
    ...
    :wq
    [root@master01 k8s-cert]# bash k8s-cert.sh        # run the script to generate the certificates
    2020/02/10 10:59:17 [INFO] generating a new CA key and certificate from CSR
    2020/02/10 10:59:17 [INFO] generate received request
    2020/02/10 10:59:17 [INFO] received CSR
    2020/02/10 10:59:17 [INFO] generating key: rsa-2048
    2020/02/10 10:59:17 [INFO] encoded CSR
    2020/02/10 10:59:17 [INFO] signed certificate with serial number 10087572098424151492431444614087300651068639826
    2020/02/10 10:59:17 [INFO] generate received request
    2020/02/10 10:59:17 [INFO] received CSR
    2020/02/10 10:59:17 [INFO] generating key: rsa-2048
    2020/02/10 10:59:17 [INFO] encoded CSR
    2020/02/10 10:59:17 [INFO] signed certificate with serial number 125779224158375570229792859734449149781670193528
    2020/02/10 10:59:17 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for
    websites. For more information see the Baseline Requirements for the Issuance and Management
    of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);
    specifically, section 10.2.3 ("Information Requirements").
    2020/02/10 10:59:17 [INFO] generate received request
    2020/02/10 10:59:17 [INFO] received CSR
    2020/02/10 10:59:17 [INFO] generating key: rsa-2048
    2020/02/10 10:59:17 [INFO] encoded CSR
    2020/02/10 10:59:17 [INFO] signed certificate with serial number 328087687681727386760831073265687413205940136472
    2020/02/10 10:59:17 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for
    websites. For more information see the Baseline Requirements for the Issuance and Management
    of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);
    specifically, section 10.2.3 ("Information Requirements").
    2020/02/10 10:59:17 [INFO] generate received request
    2020/02/10 10:59:17 [INFO] received CSR
    2020/02/10 10:59:17 [INFO] generating key: rsa-2048
    2020/02/10 10:59:18 [INFO] encoded CSR
    2020/02/10 10:59:18 [INFO] signed certificate with serial number 525069068228188747147886102005817997066385735072
    2020/02/10 10:59:18 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for
    websites. For more information see the Baseline Requirements for the Issuance and Management
    of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);
    specifically, section 10.2.3 ("Information Requirements").
    [root@master01 k8s-cert]# ls *pem        # 8 certificate files are generated
    admin-key.pem  admin.pem  ca-key.pem  ca.pem  kube-proxy-key.pem  kube-proxy.pem  server-key.pem  server.pem
    [root@master01 k8s-cert]# cp ca*pem server*pem /opt/kubernetes/ssl/        # copy the certificates to the ssl directory under the k8s working directory
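
Because the server certificate is only valid for the addresses listed under "hosts", it can be worth checking that list before running the script. The helper below is a hypothetical sketch: `missing_hosts` is not part of the article's scripts, and it simply searches for each quoted address in a file rather than parsing the JSON.

```bash
# missing_hosts FILE ADDR...: print every address that is absent from FILE.
missing_hosts() {
    file=$1
    shift
    for addr in "$@"; do
        # -F matches the quoted address literally; -q suppresses grep output.
        grep -qF "\"${addr}\"" "$file" || echo "$addr"
    done
}

# Demo against a throwaway copy of part of the hosts list used in this article.
tmp=$(mktemp)
cat > "$tmp" <<'EOF'
{ "hosts": [ "10.0.0.1", "127.0.0.1", "192.168.80.12", "192.168.80.11", "192.168.80.100" ] }
EOF
# 192.168.80.200 is a made-up address used to show a miss.
missing_hosts "$tmp" 192.168.80.12 192.168.80.11 192.168.80.100 192.168.80.200
rm -f "$tmp"
```

Matching the address together with its surrounding quotes keeps 192.168.80.1 from being mistaken for 192.168.80.12.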

- Configure the apiserver

    [root@master01 k8s-cert]# cd ..        # return to the k8s working directory
    [root@master01 k8s]# tar zxvf kubernetes-server-linux-amd64.tar.gz        # extract the archive
    kubernetes/
    kubernetes/server/
    kubernetes/server/bin/
    ...
    [root@master01 k8s]# cd kubernetes/server/bin/        # enter the directory that holds the extracted binaries
    [root@master01 bin]# ls
    apiextensions-apiserver              kube-apiserver.docker_tag           kube-proxy
    cloud-controller-manager             kube-apiserver.tar                  kube-proxy.docker_tag
    cloud-controller-manager.docker_tag  kube-controller-manager             kube-proxy.tar
    cloud-controller-manager.tar         kube-controller-manager.docker_tag  kube-scheduler
    hyperkube                            kube-controller-manager.tar         kube-scheduler.docker_tag
    kubeadm                              kubectl                             kube-scheduler.tar
    kube-apiserver                       kubelet                             mounter
    [root@master01 bin]# cp kube-apiserver kubectl kube-controller-manager kube-scheduler /opt/kubernetes/bin/        # copy the key binaries to the bin directory under the k8s working directory
    [root@master01 bin]# cd /root/k8s/
    [root@master01 k8s]# head -c 16 /dev/urandom | od -An -t x | tr -d ' '        # generate a random token
    c37758077defd4033bfe95a071689272
    [root@master01 k8s]# vim /opt/kubernetes/cfg/token.csv        # create token.csv (think of it as creating an administrative role); the first field is the token generated above, followed by the user name, UID, and group
    c37758077defd4033bfe95a071689272,kubelet-bootstrap,10001,"system:kubelet-bootstrap"
    :wq
    [root@master01 k8s]# bash apiserver.sh 192.168.80.12 https://192.168.80.12:2379,https://192.168.80.13:2379,https://192.168.80.14:2379        # binaries, token, and certificates are ready: run the apiserver script, which also generates the configuration file
    Created symlink from /etc/systemd/system/multi-user.target.wants/kube-apiserver.service to /usr/lib/systemd/system/kube-apiserver.service.
    [root@master01 k8s]# ps aux | grep kube        # check whether the process started successfully
    root 17088 8.7 16.7 402260 312192 ? Ssl 11:17 0:08 /opt/kubernetes/bin/kube-apiserver --logtostderr=true --v=4 --etcd-servers=https://192.168.80.12:2379,https://192.168.80.13:2379,https://192.168.80.14:2379 --bind-address=192.168.80.12 --secure-port=6443 --advertise-address=192.168.80.12 --allow-privileged=true --service-cluster-ip-range=10.0.0.0/24 --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction --authorization-mode=RBAC,Node --kubelet-https=true --enable-bootstrap-token-auth --token-auth-file=/opt/kubernetes/cfg/token.csv --service-node-port-range=30000-50000 --tls-cert-file=/opt/kubernetes/ssl/server.pem --tls-private-key-file=/opt/kubernetes/ssl/server-key.pem --client-ca-file=/opt/kubernetes/ssl/ca.pem --service-account-key-file=/opt/kubernetes/ssl/ca-key.pem --etcd-cafile=/opt/etcd/ssl/ca.pem --etcd-certfile=/opt/etcd/ssl/server.pem --etcd-keyfile=/opt/etcd/ssl/server-key.pem
    root 17101 0.0 0.0 112676 980 pts/0 S+ 11:19 0:00 grep --color=auto kube
    [root@master01 k8s]# cat /opt/kubernetes/cfg/kube-apiserver        # view the generated configuration file
    KUBE_APISERVER_OPTS="--logtostderr=true \
    --v=4 \
    --etcd-servers=https://192.168.80.12:2379,https://192.168.80.13:2379,https://192.168.80.14:2379 \
    --bind-address=192.168.80.12 \
    --secure-port=6443 \
    --advertise-address=192.168.80.12 \
    --allow-privileged=true \
    --service-cluster-ip-range=10.0.0.0/24 \
    --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \
    --authorization-mode=RBAC,Node \
    --kubelet-https=true \
    --enable-bootstrap-token-auth \
    --token-auth-file=/opt/kubernetes/cfg/token.csv \
    --service-node-port-range=30000-50000 \
    --tls-cert-file=/opt/kubernetes/ssl/server.pem \
    --tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \
    --client-ca-file=/opt/kubernetes/ssl/ca.pem \
    --service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \
    --etcd-cafile=/opt/etcd/ssl/ca.pem \
    --etcd-certfile=/opt/etcd/ssl/server.pem \
    --etcd-keyfile=/opt/etcd/ssl/server-key.pem"
    [root@master01 k8s]# netstat -ntap | grep 6443        # check that the secure port is listening
    tcp        0      0 192.168.80.12:6443      0.0.0.0:*               LISTEN      17088/kube-apiserve
    tcp        0      0 192.168.80.12:48320     192.168.80.12:6443      ESTABLISHED 17088/kube-apiserve
    tcp        0      0 192.168.80.12:6443      192.168.80.12:48320     ESTABLISHED 17088/kube-apiserve
    [root@master01 k8s]# netstat -ntap | grep 8080        # check that the local port is listening
    tcp        0      0 127.0.0.1:8080          0.0.0.0:*               LISTEN      17088/kube-apiserve
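
The token step can also be scripted instead of edited by hand in vim. This is a minimal sketch under stated assumptions: the `new_token` and `write_token_csv` helper names are hypothetical, and the demo writes to a temporary directory so the real /opt/kubernetes/cfg/token.csv is left untouched.

```bash
# Generate a 32-character hex token from 16 random bytes.
new_token() {
    head -c 16 /dev/urandom | od -An -t x | tr -d ' \n'
}

# write_token_csv FILE: write one line in the token,user,uid,"group" format.
write_token_csv() {
    printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$(new_token)" > "$1"
}

# Demo in a temporary directory (assumption: any writable path works the same way).
dir=$(mktemp -d)
write_token_csv "$dir/token.csv"
cat "$dir/token.csv"
rm -rf "$dir"
```

The user name, UID, and group are the values used in this article; the token differs on every run.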

- Configure the scheduler service

    [root@master01 k8s]# ./scheduler.sh 127.0.0.1        # run the script directly; it starts the service and generates the configuration file
    Created symlink from /etc/systemd/system/multi-user.target.wants/kube-scheduler.service to /usr/lib/systemd/system/kube-scheduler.service.
    [root@master01 k8s]# systemctl status kube-scheduler.service        # view the running status of the service
    ● kube-scheduler.service - Kubernetes Scheduler
       Loaded: loaded (/usr/lib/systemd/system/kube-scheduler.service; enabled; vendor preset: disabled)
       Active: active (running) since Mon 2020-02-10 11:22:13 CST; 2min 46s ago        # running successfully
         Docs: https://github.com/kubernetes/kubernetes
    ...

- Configure the controller manager service

    [root@master01 k8s]# chmod +x controller-manager.sh        # add execute permission to the script
    [root@master01 k8s]# ./controller-manager.sh 127.0.0.1        # run the script; it starts the service and generates the configuration file
    Created symlink from /etc/systemd/system/multi-user.target.wants/kube-controller-manager.service to /usr/lib/systemd/system/kube-controller-manager.service.
    [root@master01 k8s]# systemctl status kube-controller-manager.service        # check the running status
    ● kube-controller-manager.service - Kubernetes Controller Manager
       Loaded: loaded (/usr/lib/systemd/system/kube-controller-manager.service; enabled; vendor preset: disabled)
       Active: active (running) since Mon 2020-02-10 11:28:21 CST; 7min ago        # running successfully
    ...
    [root@master01 k8s]# /opt/kubernetes/bin/kubectl get cs        # check the status of the master components
    NAME                 STATUS    MESSAGE              ERROR
    scheduler            Healthy   ok
    controller-manager   Healthy   ok
    etcd-2               Healthy   {"health":"true"}
    etcd-0               Healthy   {"health":"true"}
    etcd-1               Healthy   {"health":"true"}

The master node component deployment is complete.
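
The `kubectl get cs` check can be turned into a pass/fail test for scripts. The sketch below is an assumption-based helper, not a kubectl feature: `unhealthy_components` is a hypothetical function that reads the table on stdin, and the sample text is made-up output used only for the demo.

```bash
# unhealthy_components: read `kubectl get cs` output on stdin, print the names
# of components whose STATUS is not "Healthy", and return non-zero if any exist.
unhealthy_components() {
    awk 'NR > 1 && $2 != "Healthy" { print $1; bad = 1 } END { exit bad + 0 }'
}

# Made-up sample; on a live master, pipe the real kubectl output in instead.
sample='NAME                 STATUS      MESSAGE              ERROR
scheduler            Healthy     ok
controller-manager   Unhealthy   connection refused
etcd-0               Healthy     {"health":"true"}'

if printf '%s\n' "$sample" | unhealthy_components; then
    echo "all components healthy"
else
    echo "some components are unhealthy"
fi
```

On the master from this article, the equivalent call would be `/opt/kubernetes/bin/kubectl get cs | unhealthy_components`.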