Network

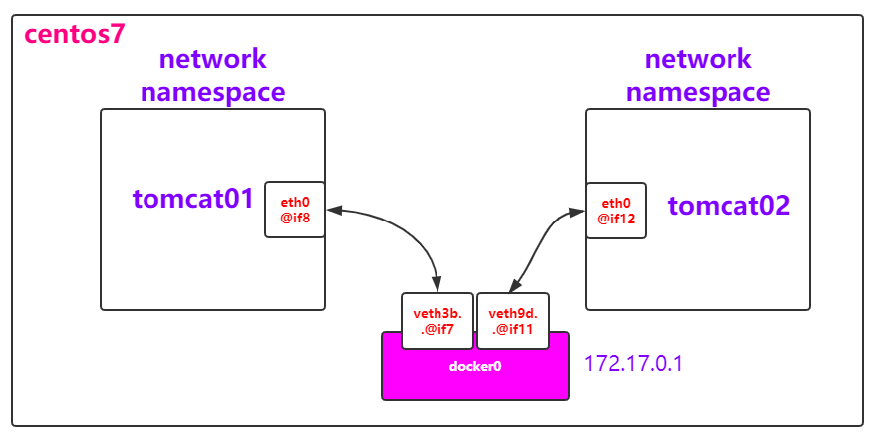

Access between Docker containers

Containers on the same host are on the same network segment and can communicate through the docker0 bridge

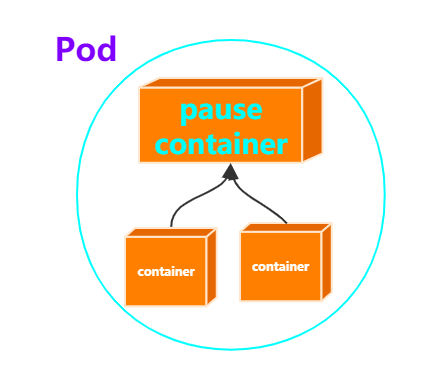

6.1 Container communication in the same Pod - pause container

Next, let's look at network communication in Kubernetes

The smallest deployable unit in K8S is the Pod, so first consider communication between multiple containers in the same Pod

According to the official documentation, containers in the same Pod share one network namespace, including the IP address and port space, so they can reach each other over localhost without any extra setup

Each Pod is assigned a unique IP address. Every container in a Pod shares the network namespace, including the IP address and network ports.

How is this sharing achieved? All the containers in the Pod join the network of one dedicated container. We call this container the pause container of the Pod.
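As a rough illustration of what a shared network namespace means in practice, the sketch below runs two "containers" as two threads of one process. This is only an analogy and not how Kubernetes starts containers: the point is that both sides see the same loopback interface, so one can bind a port on 127.0.0.1 and the other can connect to it. The function names are hypothetical.

```python
import socket
import threading


def serve_once(server_sock):
    """Accept a single connection and send a fixed greeting."""
    conn, _ = server_sock.accept()
    with conn:
        conn.sendall(b"hello from container A")


def talk_over_localhost():
    # "Container A": listen on loopback; port 0 lets the OS pick a free port.
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))
    server.listen(1)
    port = server.getsockname()[1]
    worker = threading.Thread(target=serve_once, args=(server,))
    worker.start()

    # "Container B": same network namespace, so 127.0.0.1:<port> is reachable.
    chunks = []
    with socket.create_connection(("127.0.0.1", port), timeout=5) as client:
        while True:
            chunk = client.recv(1024)
            if not chunk:
                break
            chunks.append(chunk)

    worker.join()
    server.close()
    return b"".join(chunks).decode()


if __name__ == "__main__":
    print(talk_over_localhost())
```

The flip side of sharing one port space is that two containers in the same Pod cannot both listen on the same port.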

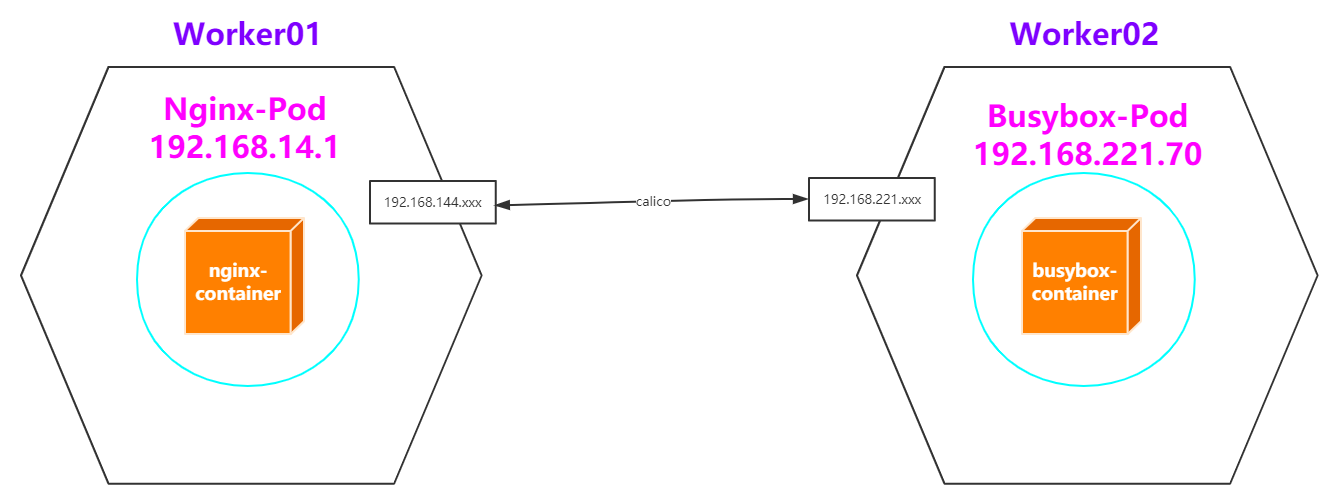

6.2 Communication between Pods in the cluster - Calico

Next, let's look at communication between Pods, the smallest deployable unit in K8S

Every Pod has its own IP address, which is shared by all the containers in the Pod

Can multiple Pods communicate with each other through these IP addresses?

There are two cases to consider: Pods on the same machine in the cluster, and Pods on different machines in the cluster

Prepare two Pods, one nginx and one busybox

nginx_pod.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx-pod
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx-container
        image: nginx
        ports:
        - containerPort: 80

busybox_pod.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: busybox
      labels:
        app: busybox
    spec:
      containers:
      - name: busybox
        image: busybox
        command: ['sh', '-c', 'echo The app is running! && sleep 3600']

Run the two Pods and check their status

    kubectl apply -f nginx_pod.yaml
    kubectl apply -f busybox_pod.yaml
    kubectl get pods -o wide

    NAME        READY   STATUS    RESTARTS   AGE     IP               NODE
    busybox     1/1     Running   0          49s     192.168.221.70   worker02-kubeadm-k8s
    nginx-pod   1/1     Running   0          7m46s   192.168.14.1     worker01-kubeadm-k8s

The IP of nginx-pod is 192.168.14.1 and the IP of busybox is 192.168.221.70

The same machine in the same cluster

(1) On worker01: ping 192.168.14.1

    PING 192.168.14.1 (192.168.14.1) 56(84) bytes of data.
    64 bytes from 192.168.14.1: icmp_seq=1 ttl=64 time=0.063 ms
    64 bytes from 192.168.14.1: icmp_seq=2 ttl=64 time=0.048 ms

(2) On worker01: curl 192.168.14.1

    <!DOCTYPE html>
    <html>
    <head>
    <title>Welcome to nginx!</title>
    <style>
        body {
            width: 35em;
            margin: 0 auto;
            font-family: Tahoma, Verdana, Arial, sans-serif;
        }
    </style>

Different machines in the same cluster

(1) On worker02: ping 192.168.14.1

    [root@worker02-kubeadm-k8s ~]# ping 192.168.14.1
    PING 192.168.14.1 (192.168.14.1) 56(84) bytes of data.
    64 bytes from 192.168.14.1: icmp_seq=1 ttl=63 time=0.680 ms
    64 bytes from 192.168.14.1: icmp_seq=2 ttl=63 time=0.306 ms
    64 bytes from 192.168.14.1: icmp_seq=3 ttl=63 time=0.688 ms

(2) On worker02: curl 192.168.14.1 also reaches nginx

(3) On master:

ping/curl 192.168.14.1 reaches nginx-pod on worker01

ping 192.168.221.70 reaches busybox on worker02

(4) On worker01: ping 192.168.221.70 reaches busybox on worker02
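One way to read the addresses in this test: the two Pod IPs fall into different per-node address blocks of a single cluster-wide pool. The sketch below assumes Calico's default IPv4 pool (192.168.0.0/16) and default block size (/26). Neither value is stated in this article, so treat both as assumptions and check your own cluster's IP pool configuration.

```python
import ipaddress

# Assumptions (not stated in the article): Calico's default pool and block size.
POD_POOL = ipaddress.ip_network("192.168.0.0/16")
BLOCK_PREFIX = 26


def block_of(pod_ip):
    """Return the /26 block inside the pool that contains pod_ip."""
    addr = ipaddress.ip_address(pod_ip)
    if addr not in POD_POOL:
        raise ValueError(f"{pod_ip} is outside the pod pool {POD_POOL}")
    # strict=False masks the host bits, giving the enclosing network.
    return ipaddress.ip_network(f"{pod_ip}/{BLOCK_PREFIX}", strict=False)


def same_block(ip_a, ip_b):
    """True when both Pod IPs come from the same address block."""
    return block_of(ip_a) == block_of(ip_b)


if __name__ == "__main__":
    pods = {"nginx-pod": "192.168.14.1", "busybox": "192.168.221.70"}
    for name, ip in pods.items():
        print(name, ip, block_of(ip))
    print("same block:", same_block("192.168.14.1", "192.168.221.70"))
```

The ping output tells the same story from another angle: the TTL is 64 when pinging from the node that hosts the Pod and 63 from another node, which is consistent with one extra routed hop between nodes.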

How the Kubernetes cluster networking model is implemented - Calico

Official website: https://kubernetes.io/docs/concepts/cluster-administration/networking/#the-kubernetes-network-model

- pods on a node can communicate with all pods on all nodes without NAT

- agents on a node (e.g. system daemons, kubelet) can communicate with all pods on that node

- pods in the host network of a node can communicate with all pods on all nodes without NAT

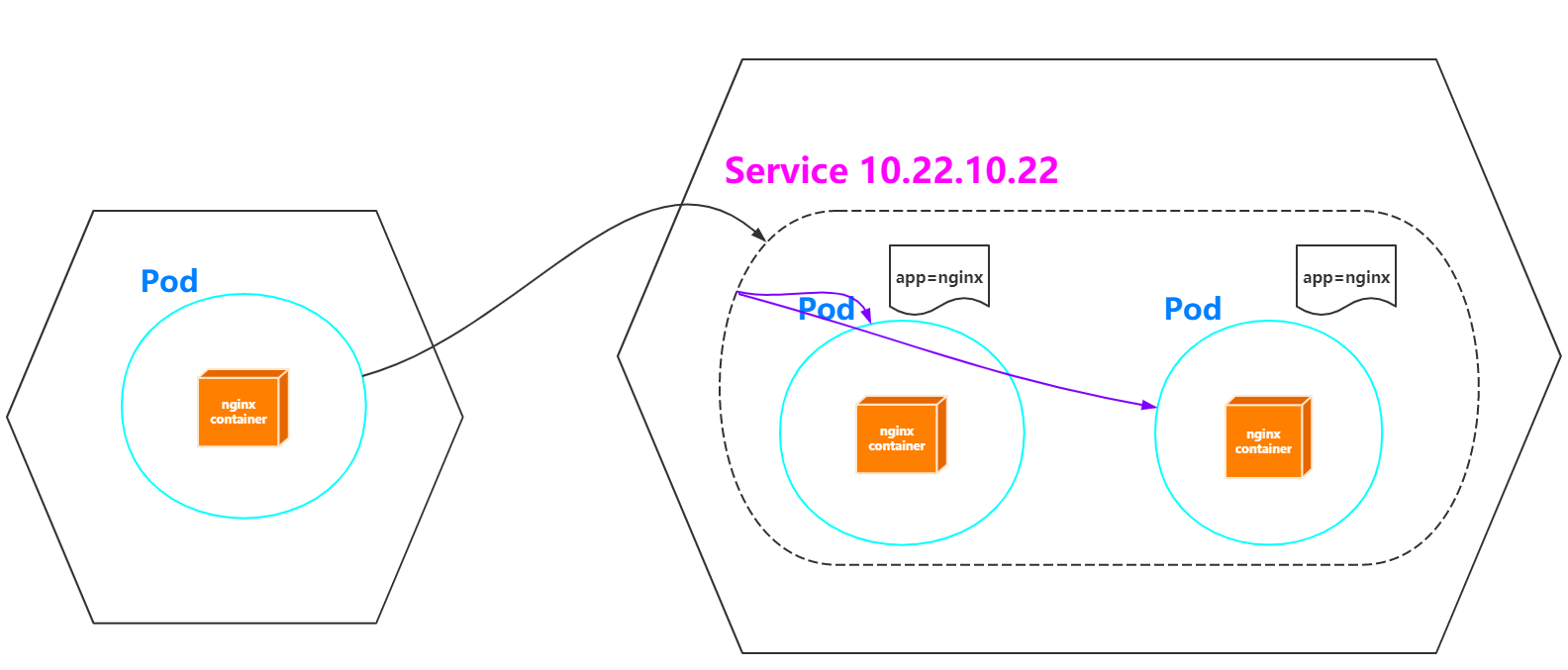

6.3 Accessing Services in the cluster - kube-proxy

The Pods above can communicate inside the cluster, but Pods are not stable. For example, when Pods are managed through a Deployment, they may be scaled up or down at any time, and their IP addresses change. What we want is a fixed IP that can always be reached inside the cluster. As mentioned earlier in the architecture description, identical or related Pods can be given the same label and grouped into a Service. The Service has a fixed IP, and no matter how Pods are created or destroyed, they can be reached through the Service IP

Service official website: https://kubernetes.io/docs/concepts/services-networking/service/

An abstract way to expose an application running on a set of Pods as a network service. With Kubernetes you don't need to modify your application to use an unfamiliar service discovery mechanism. Kubernetes gives Pods their own IP addresses and a single DNS name for a set of Pods, and can load-balance across them.
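The relationship between a Service and its Pods can be sketched as a label match. This is a simplified model written for illustration, not Kubernetes code: the `Pod` class, the `select_endpoints` function and the Pod names are hypothetical, and only equality-based selectors are modeled.

```python
from dataclasses import dataclass, field


@dataclass
class Pod:
    name: str
    ip: str
    labels: dict = field(default_factory=dict)


def select_endpoints(pods, selector, target_port):
    """Return "ip:port" for every Pod whose labels contain all selector pairs."""
    return [
        f"{pod.ip}:{target_port}"
        for pod in pods
        if all(pod.labels.get(key) == value for key, value in selector.items())
    ]


if __name__ == "__main__":
    pods = [
        Pod("whoami-a", "192.168.14.6", {"app": "whoami"}),
        Pod("whoami-b", "192.168.14.7", {"app": "whoami"}),
        Pod("nginx-pod", "192.168.14.1", {"app": "nginx"}),
    ]
    print(select_endpoints(pods, {"app": "whoami"}, 8000))

    # A Pod is replaced and comes back with a new IP: the endpoint list
    # follows the labels, while the Service address stays the same.
    pods[0] = Pod("whoami-c", "192.168.221.80", {"app": "whoami"})
    print(select_endpoints(pods, {"app": "whoami"}, 8000))
```

Clients only ever need the Service address, because the endpoint list is recomputed from labels whenever Pods come and go.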

![services-userspace-overview](https://gitee.com/onlycreator/draw/raw/master/img/services-userspace-overview.svg)

Walkthrough

(1) Create the whoami-deployment.yaml file and apply it

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: whoami-deployment
      labels:
        app: whoami
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: whoami
      template:
        metadata:
          labels:
            app: whoami
        spec:
          containers:
          - name: whoami
            image: jwilder/whoami
            ports:
            - containerPort: 8000

(2) View the Pods and Services

Pods (name, IP, node):

    whoami-deployment-5dd9ff5fd8-22k9n   192.168.221.80   worker02-kubeadm-k8s
    whoami-deployment-5dd9ff5fd8-vbwzp   192.168.14.6     worker01-kubeadm-k8s
    whoami-deployment-5dd9ff5fd8-zzf4d   192.168.14.7     worker01-kubeadm-k8s

kubectl get svc: there is no Service for whoami yet

    NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
    kubernetes   ClusterIP   10.96.0.1    <none>        443/TCP   19h

(3) The Pods can be accessed normally inside the cluster through their own IPs

    curl 192.168.221.80:8000
    curl 192.168.14.6:8000
    curl 192.168.14.7:8000

(4) Create a Service for whoami

Note: this address can only be accessed within the cluster

    # Create a Service
    kubectl expose deployment whoami-deployment
    kubectl get svc

    [root@master-kubeadm-k8s ~]# kubectl get svc
    NAME                TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)    AGE
    kubernetes          ClusterIP   10.96.0.1       <none>        443/TCP    19h
    whoami-deployment   ClusterIP   10.105.147.59   <none>        8000/TCP   23s

    # Delete svc
    # kubectl delete service whoami-deployment

There is now a Service of type ClusterIP named whoami-deployment, and its IP address is 10.105.147.59

(5) Access through the Cluster IP of the Service

    [root@master-kubeadm-k8s ~]# curl 10.105.147.59:8000
    I'm whoami-deployment-678b64444d-b2695
    [root@master-kubeadm-k8s ~]# curl 10.105.147.59:8000
    I'm whoami-deployment-678b64444d-hgdrk
    [root@master-kubeadm-k8s ~]# curl 10.105.147.59:8000
    I'm whoami-deployment-678b64444d-65t88

(6) Check the details of whoami-deployment: the Endpoints field lists three specific Pods

    [root@master-kubeadm-k8s ~]# kubectl describe svc whoami-deployment
    Name:              whoami-deployment
    Namespace:         default
    Labels:            app=whoami
    Annotations:       <none>
    Selector:          app=whoami
    Type:              ClusterIP
    IP:                10.105.147.59
    Port:              <unset>  8000/TCP
    TargetPort:        8000/TCP
    Endpoints:         192.168.14.8:8000,192.168.221.81:8000,192.168.221.82:8000
    Session Affinity:  None
    Events:            <none>
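The three curl calls to the same Cluster IP above were answered by three different Pods. A minimal sketch of how that can happen, assuming kube-proxy runs in iptables mode (the article does not say which mode this cluster uses): the Service has one rule per endpoint, the rules are evaluated in order, and rule i of n matches with probability 1/(n-i), which makes every endpoint equally likely overall. The function names are hypothetical and the code only models the selection, not real packet handling.

```python
import random
from collections import Counter
from fractions import Fraction


def chain_probabilities(n):
    """Per-rule match probability for n backends: 1/n, 1/(n-1), ..., 1."""
    return [Fraction(1, n - i) for i in range(n)]


def pick_backend(endpoints, rng):
    """Walk the rules in order; the first rule that matches wins."""
    for endpoint, prob in zip(endpoints, chain_probabilities(len(endpoints))):
        if rng.random() < float(prob):
            return endpoint
    return endpoints[-1]  # not reached: the last rule always matches


if __name__ == "__main__":
    endpoints = ["192.168.14.8:8000", "192.168.221.81:8000", "192.168.221.82:8000"]
    rng = random.Random(0)
    hits = Counter(pick_backend(endpoints, rng) for _ in range(9000))
    for endpoint in endpoints:
        print(endpoint, hits[endpoint])
```

With three endpoints the per-rule probabilities are 1/3, 1/2 and 1, so each Pod receives about a third of new connections. Selection is random per connection rather than strict round-robin.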

(7) Scale whoami up to 5 replicas

    kubectl scale deployment whoami-deployment --replicas=5

(8) Access it again: curl 10.105.147.59:8000

(9) Check the Service details again: kubectl describe svc whoami-deployment

(10) A Service can be created not only with kubectl expose, but also by defining a YAML file

    apiVersion: v1
    kind: Service
    metadata:
      name: my-service
    spec:
      selector:
        app: MyApp
      ports:
      - protocol: TCP
        port: 80
        targetPort: 9376
      type: ClusterIP

Conclusion: a Service exists to compensate for the instability of Pods. The type discussed above is ClusterIP, which can only be accessed inside the cluster

So far we have covered Pod-centered communication inside the cluster. The next step is to discuss how Pods in the cluster access external services and how external clients access Pods in the cluster

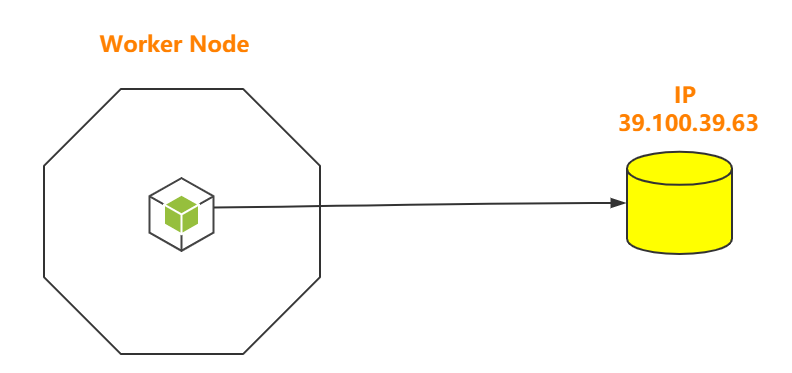

6.4 Pod access to external services

This case is relatively simple: the Pod just accesses the external service directly

6.5 External access to Pods in the cluster

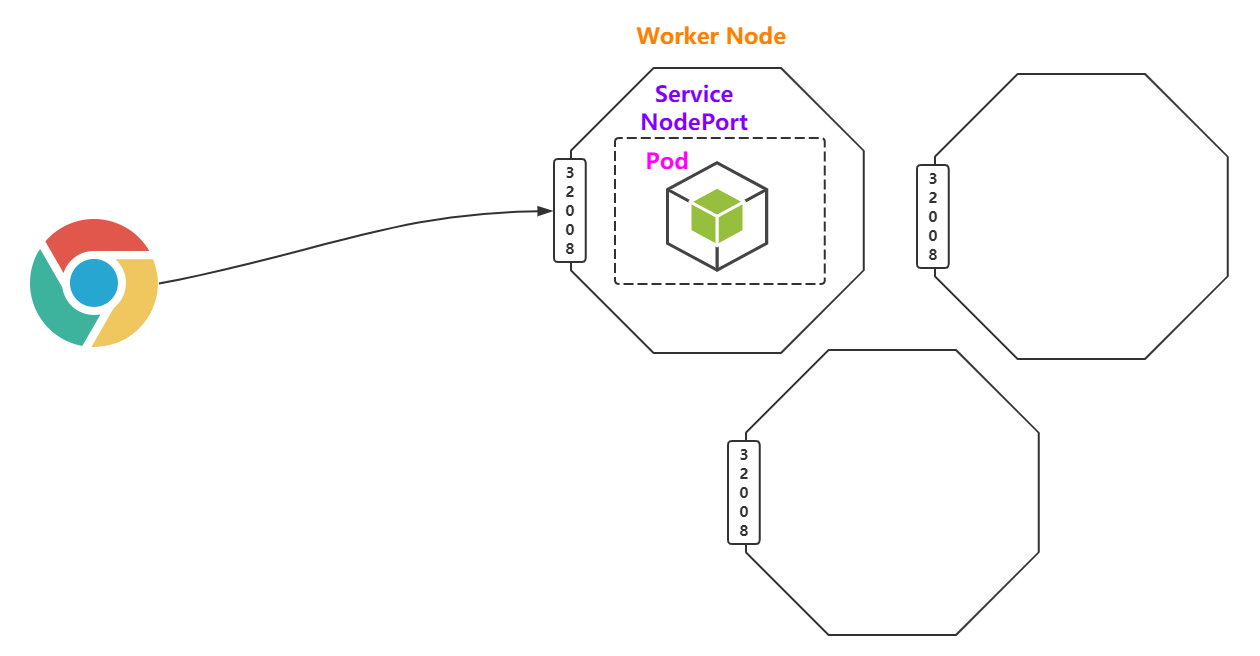

Service-NodePort

NodePort is another type of Service

Put simply, since external clients can reach the IP addresses of the physical machines in the cluster, the Service exposes the same port, for example 32008, on every physical machine in the cluster
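A small sketch of the NodePort idea: one port number, reachable on every node IP. It relies on the default NodePort range of 30000-32767, which a cluster administrator can change, so the range is an assumption about this cluster. The function names are hypothetical, and the node IPs are the ones that appear in the access step further down.

```python
# Default NodePort range (30000-32767 inclusive); configurable per cluster.
DEFAULT_NODE_PORT_RANGE = range(30000, 32768)


def is_valid_node_port(port, port_range=DEFAULT_NODE_PORT_RANGE):
    """True when the port falls inside the allowed NodePort range."""
    return port in port_range


def node_urls(node_ips, node_port):
    """Build the external URL for the Service on every node."""
    if not is_valid_node_port(node_port):
        raise ValueError(f"{node_port} is outside the NodePort range")
    return [f"http://{ip}:{node_port}" for ip in node_ips]


if __name__ == "__main__":
    print(is_valid_node_port(32008))
    print(is_valid_node_port(80))
    for url in node_urls(["192.168.0.51", "192.168.0.61"], 32041):
        print(url)
```

Because the port is opened on every node, any node IP works as an entry point regardless of which node actually runs the Pods.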

(1) Create the Pods from whoami-deployment.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: whoami-deployment
      labels:
        app: whoami
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: whoami
      template:
        metadata:
          labels:
            app: whoami
        spec:
          containers:
          - name: whoami
            image: jwilder/whoami
            ports:
            - containerPort: 8000

(2) Create a Service of type NodePort named whoami-deployment

    kubectl delete svc whoami-deployment
    kubectl expose deployment whoami-deployment --type=NodePort

    [root@master-kubeadm-k8s ~]# kubectl get svc
    NAME                TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)          AGE
    kubernetes          ClusterIP   10.96.0.1      <none>        443/TCP          21h
    whoami-deployment   NodePort    10.99.108.82   <none>        8000:32041/TCP   7s

(3) Note that port 32041 above is the port exposed on each physical machine in the cluster

    lsof -i tcp:32041
    netstat -ntlp|grep 32041

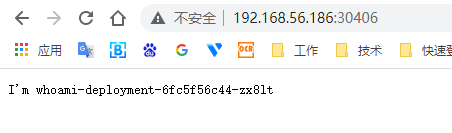

(4) Access from a browser or with curl through the IP of a physical machine

    http://192.168.0.51:32041
    curl 192.168.0.61:32041

Conclusion: NodePort does allow external access to Pods, but is it a good solution? Not really: it occupies a port on every physical host

Service-LoadBalancer

This type usually requires support from a cloud provider, which ties the cluster to that provider

Ingress

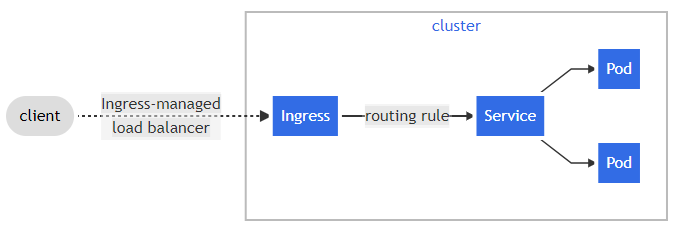

Official website: https://kubernetes.io/docs/concepts/services-networking/ingress/

An API object that manages external access to the services in a cluster, typically HTTP. Ingress can provide load balancing, SSL termination and name-based virtual hosting.

What is Ingress?

Ingress exposes HTTP and HTTPS routes from outside the cluster to services within the cluster. Traffic routing is controlled by rules defined on the Ingress resource.

Here is a simple example where an Ingress sends all its traffic to one Service:

As you can see, Ingress helps us access the Services in the cluster. But before we look at Ingress in detail, let's start with a case.

As noted above, Service NodePort is not recommended for production environments. Next, based on the requirement above, we deploy whoami in the K8S cluster so that it can be accessed by external hosts.


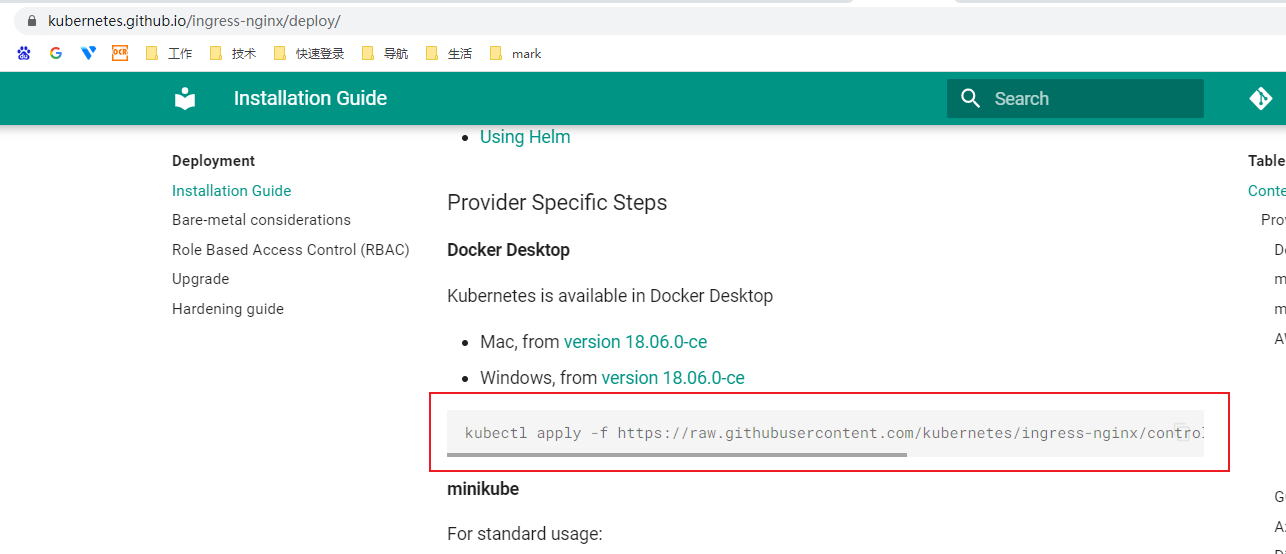

GitHub Ingress Nginx: https://github.com/kubernetes/ingress-nginx

Nginx Ingress Controller: https://kubernetes.github.io/ingress-nginx/

Downloading the official deployment YAML may require a proxy

I provide a local version saved as mandatory.yaml

    apiVersion: v1
    kind: Namespace
    metadata:
      name: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx

    ---

    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: nginx-configuration
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx

    ---

    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: tcp-services
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx

    ---

    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: udp-services
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx

    ---

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: nginx-ingress-serviceaccount
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx

    ---

    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: ClusterRole
    metadata:
      name: nginx-ingress-clusterrole
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    rules:
      - apiGroups:
          - ""
        resources:
          - configmaps
          - endpoints
          - nodes
          - pods
          - secrets
        verbs:
          - list
          - watch
      - apiGroups:
          - ""
        resources:
          - nodes
        verbs:
          - get
      - apiGroups:
          - ""
        resources:
          - services
        verbs:
          - get
          - list
          - watch
      - apiGroups:
          - ""
        resources:
          - events
        verbs:
          - create
          - patch
      - apiGroups:
          - "extensions"
          - "networking.k8s.io"
        resources:
          - ingresses
        verbs:
          - get
          - list
          - watch
      - apiGroups:
          - "extensions"
          - "networking.k8s.io"
        resources:
          - ingresses/status
        verbs:
          - update

    ---

    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: Role
    metadata:
      name: nginx-ingress-role
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    rules:
      - apiGroups:
          - ""
        resources:
          - configmaps
          - pods
          - secrets
          - namespaces
        verbs:
          - get
      - apiGroups:
          - ""
        resources:
          - configmaps
        resourceNames:
          # Defaults to "<election-id>-<ingress-class>"
          # Here: "<ingress-controller-leader>-<nginx>"
          # This has to be adapted if you change either parameter
          # when launching the nginx-ingress-controller.
          - "ingress-controller-leader-nginx"
        verbs:
          - get
          - update
      - apiGroups:
          - ""
        resources:
          - configmaps
        verbs:
          - create
      - apiGroups:
          - ""
        resources:
          - endpoints
        verbs:
          - get

    ---

    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: RoleBinding
    metadata:
      name: nginx-ingress-role-nisa-binding
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: nginx-ingress-role
    subjects:
      - kind: ServiceAccount
        name: nginx-ingress-serviceaccount
        namespace: ingress-nginx

    ---

    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: ClusterRoleBinding
    metadata:
      name: nginx-ingress-clusterrole-nisa-binding
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: nginx-ingress-clusterrole
    subjects:
      - kind: ServiceAccount
        name: nginx-ingress-serviceaccount
        namespace: ingress-nginx

    ---

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-ingress-controller
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    spec:
      replicas: 1
      selector:
        matchLabels:
          app.kubernetes.io/name: ingress-nginx
          app.kubernetes.io/part-of: ingress-nginx
      template:
        metadata:
          labels:
            app.kubernetes.io/name: ingress-nginx
            app.kubernetes.io/part-of: ingress-nginx
          annotations:
            prometheus.io/port: "10254"
            prometheus.io/scrape: "true"
        spec:
          # wait up to five minutes for the drain of connections
          terminationGracePeriodSeconds: 300
          serviceAccountName: nginx-ingress-serviceaccount
          hostNetwork: true
          nodeSelector:
            name: ingress
            kubernetes.io/os: linux
          containers:
            - name: nginx-ingress-controller
              image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.26.1
              args:
                - /nginx-ingress-controller
                - --configmap=$(POD_NAMESPACE)/nginx-configuration
                - --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
                - --udp-services-configmap=$(POD_NAMESPACE)/udp-services
                - --publish-service=$(POD_NAMESPACE)/ingress-nginx
                - --annotations-prefix=nginx.ingress.kubernetes.io
              securityContext:
                allowPrivilegeEscalation: true
                capabilities:
                  drop:
                    - ALL
                  add:
                    - NET_BIND_SERVICE
                # www-data -> 33
                runAsUser: 33
              env:
                - name: POD_NAME
                  valueFrom:
                    fieldRef:
                      fieldPath: metadata.name
                - name: POD_NAMESPACE
                  valueFrom:
                    fieldRef:
                      fieldPath: metadata.namespace
              ports:
                - name: http
                  containerPort: 80
                - name: https
                  containerPort: 443
              livenessProbe:
                failureThreshold: 3
                httpGet:
                  path: /healthz
                  port: 10254
                  scheme: HTTP
                initialDelaySeconds: 10
                periodSeconds: 10
                successThreshold: 1
                timeoutSeconds: 10
              readinessProbe:
                failureThreshold: 3
                httpGet:
                  path: /healthz
                  port: 10254
                  scheme: HTTP
                periodSeconds: 10
                successThreshold: 1
                timeoutSeconds: 10
              lifecycle:
                preStop:
                  exec:
                    command:
                      - /wait-shutdown

    ---

(1) Create the Pod through a Deployment. This Pod is the Ingress Nginx Controller. To make it reachable from outside, you can use a NodePort Service or HostPort mode. Here we choose HostPort and pin the controller to worker01

    # 1. Make sure the nginx controller runs on the w1 node
    kubectl label node w1 name=ingress

    # 2. To run in HostPort mode, the following configuration is needed.
    # These changes are already made in the YAML provided above (search for nodeSelector)
    hostNetwork: true   # use the host's network
    nodeSelector:
      name: ingress     # selects the w1 node labeled in step 1

    # Make sure ports 80 and 443 on node w1 are not occupied
    # Pulling the image can take a long time
    # cat mandatory.yaml | grep image shows which images need to be pulled

    # Apply mandatory.yaml
    kubectl apply -f mandatory.yaml
    kubectl get all -n ingress-nginx
    kubectl get all -n ingress-nginx -o wide

(2) Check ports 80 and 443 on w1

    lsof -i tcp:80
    lsof -i tcp:443

(3) Create the Pods and Service for whoami-deployment

Remember to delete the previous whoami-deployment first: kubectl delete -f whoami-deployment.yaml

    vi whoami-deployment.yaml
    kubectl apply -f whoami-deployment.yaml
    kubectl get svc
    kubectl get pods

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: whoami-deployment
      labels:
        app: whoami
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: whoami
      template:
        metadata:
          labels:
            app: whoami
        spec:
          containers:
          - name: whoami
            image: jwilder/whoami
            ports:
            - containerPort: 8000
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: whoami-service
    spec:
      ports:
      - port: 80
        protocol: TCP
        targetPort: 8000
      selector:
        app: whoami

(4) Create the Ingress and define forwarding rules

    kubectl apply -f nginx-ingress.yaml
    kubectl get ingress
    kubectl describe ingress nginx-ingress

    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: nginx-ingress
    spec:
      rules:
      - host: whoami.com
        http:
          paths:
          - path: /
            backend:
              serviceName: whoami-service
              servicePort: 80
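The forwarding rule above can be pictured as a lookup from (host, path) to a backend Service. The sketch below is a simplified model for illustration only: it assumes exact host matching and longest-prefix path matching, whereas the real controller turns Ingress rules into nginx configuration. The `RULES` table and `route` function are hypothetical names.

```python
# One entry per Ingress path rule, taken from the nginx-ingress definition.
RULES = [
    {"host": "whoami.com", "path": "/", "service": "whoami-service", "port": 80},
]


def route(host, path, rules=RULES):
    """Return (service, port) for the longest matching path prefix, or None."""
    best = None
    for rule in rules:
        if rule["host"] == host and path.startswith(rule["path"]):
            if best is None or len(rule["path"]) > len(best["path"]):
                best = rule
    return (best["service"], best["port"]) if best else None


if __name__ == "__main__":
    print(route("whoami.com", "/"))
    print(route("whoami.com", "/anything"))
    print(route("other.example", "/"))
```

A request whose Host header matches no rule returns None here. In a real cluster such a request goes to the controller's default backend instead.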

(5) Modify the hosts file on Windows and add a name resolution entry

192.168.56.187 whoami.com

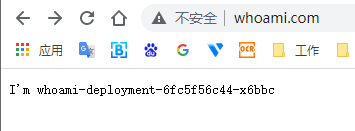

(6) Open your browser and visit whoami.com
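If you would rather not edit the hosts file, the same check can be made by sending the request to the node IP and setting the Host header yourself, since the Ingress rule matches on the host name. A minimal sketch with the standard library follows. The helper names are hypothetical, the IP is the one from the hosts entry above, and `fetch` only works from a machine that can reach that node.

```python
import urllib.request


def build_request(node_ip, host, path="/"):
    """Build a request to the node IP that carries the Ingress host name."""
    return urllib.request.Request(f"http://{node_ip}{path}", headers={"Host": host})


def fetch(node_ip, host, path="/", timeout=5):
    """Send the request and return the response body as text."""
    request = build_request(node_ip, host, path)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode()


if __name__ == "__main__":
    # Only inspect the request here; call fetch(...) from inside your network.
    request = build_request("192.168.56.187", "whoami.com")
    print(request.full_url, request.get_header("Host"))
```

Calling `fetch("192.168.56.187", "whoami.com")` from a host on the same network should return the same whoami response that the browser shows.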

Conclusion: from now on, to expose an application through Ingress you only need to define the Ingress, Service and Pod. The prerequisite is that the nginx ingress controller has already been deployed.