Reprint:

https://zhuanlan.zhihu.com/p/94418251

https://sq.163yun.com/blog/article/174981072898940928

https://draveness.me/kubernetes-service/

1. Why kube-proxy and Service are needed

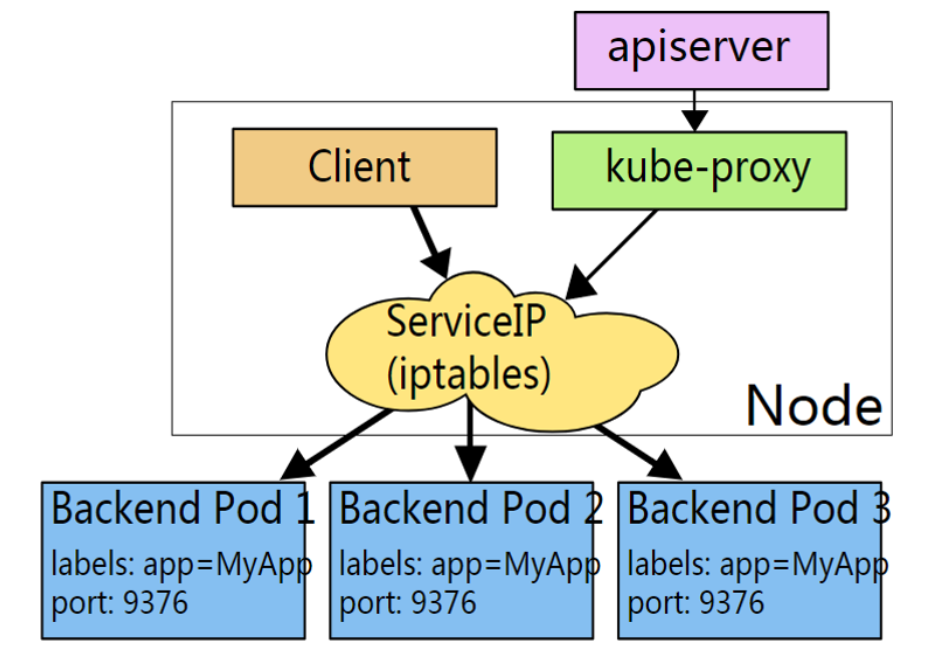

Containers are created and destroyed rapidly. To reach these Pods reliably, load balancing and a virtual IP (VIP) are needed, so that requests are automatically forwarded to the real back-end services and fail over when a back end disappears.

In Kubernetes, this load balancer is the Service, the VIP is the Service IP (ClusterIP), and a Service is a layer 4 load balancer.

To let every node in the cluster access the Service, kube-proxy by default sets up this VIP on all nodes to provide load balancing.

2. Service

Creating a Service requires the cooperation of ServiceController, EndpointController, and kube-proxy.

2.1. ServiceController

When the state of a Service object changes, an Informer notifies ServiceController, which then creates the corresponding service.

2.2. EndpointController

EndpointController subscribes to the add and delete events of both Services and Pods. Its functions are as follows:

- It is the controller responsible for generating and maintaining all Endpoints objects.
- It watches for changes to Services and their corresponding Pods.
- When a Service is deleted, it deletes the Endpoints object with the same name as the Service.
- When a new Service is created, it gets the list of related Pods from the Service information and creates the corresponding Endpoints object.
- When a Service is updated, it gets the list of related Pods from the updated Service information and updates the corresponding Endpoints object.
- When a Pod event occurs, it updates the Endpoints object of the corresponding Service and records the Pod IP in it.
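The behaviour described above can be sketched as a small reconcile function. This is only an illustration, not the controller's actual code: the `Pod` and `Service` classes, the `app` label, and the second and third Pod IPs are hypothetical, and the sketch assumes that Pods are chosen by label selector and that only ready Pods are recorded.

```python
from dataclasses import dataclass


@dataclass
class Pod:
    name: str
    ip: str
    labels: dict
    ready: bool = True


@dataclass
class Service:
    name: str
    selector: dict
    port: int


def matches(selector: dict, labels: dict) -> bool:
    """A Pod matches when it carries every key/value pair of the selector."""
    return all(labels.get(key) == value for key, value in selector.items())


def reconcile_endpoints(service: Service, pods: list) -> list:
    """Return the sorted "ip:port" entries the Endpoints object should hold."""
    if not service.selector:
        # Assumption: a Service without a selector gets no managed endpoints.
        return []
    return sorted(
        f"{pod.ip}:{service.port}"
        for pod in pods
        if pod.ready and matches(service.selector, pod.labels)
    )


# Hypothetical data: the label and the extra Pod IPs are made up.
svc = Service("kubernetes-bootcamp-v1", {"app": "bootcamp"}, 8080)
pods = [
    Pod("bootcamp-a", "10.244.1.2", {"app": "bootcamp"}),
    Pod("bootcamp-b", "10.244.1.3", {"app": "bootcamp"}),
    Pod("other", "10.244.2.5", {"app": "other"}),
]
endpoints = reconcile_endpoints(svc, pods)
print(endpoints)
```

Re-running `reconcile_endpoints` on every Service or Pod event and writing the result back is the essence of the loop; the real controller does this through a work queue.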

3. kube-proxy

kube-proxy implements Services: it makes a Service reachable both from inside the cluster and from outside through a NodePort.

kube-proxy uses iptables to configure load balancing. The iptables-based kube-proxy has two main responsibilities: it listens for Service update events and updates the Service-related iptables rules, and it listens for Endpoints update events and updates the endpoint-related iptables rules (such as the rules in the KUBE-SVC chains), so that request packets are forwarded to the Pod behind the chosen endpoint.
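As a rough illustration of what "updating iptables rules" means, the sketch below renders the nat-table rules for one Service from its endpoint list. It is an assumption-laden simplification: the chain names `KUBE-SVC-EXAMPLE` and `KUBE-SEP-<n>` are placeholders (kube-proxy derives hashed names), the comment matches and the masquerade rule are omitted, and the probability formatting is only approximate.

```python
def render_rules(svc_chain: str, cluster_ip: str, port: int, endpoints: list) -> list:
    """Render simplified nat rules for one ClusterIP Service."""
    rules = [
        f"-A KUBE-SERVICES -d {cluster_ip}/32 -p tcp -m tcp "
        f"--dport {port} -j {svc_chain}"
    ]
    total = len(endpoints)
    for index, endpoint in enumerate(endpoints):
        sep_chain = f"KUBE-SEP-{index}"  # placeholder chain name
        remaining = total - index
        if remaining > 1:
            # Pick this endpoint with probability 1/remaining, else fall through.
            rules.append(
                f"-A {svc_chain} -m statistic --mode random "
                f"--probability {1 / remaining:.11f} -j {sep_chain}"
            )
        else:
            # The last endpoint catches everything that fell through.
            rules.append(f"-A {svc_chain} -j {sep_chain}")
        rules.append(
            f"-A {sep_chain} -p tcp -m tcp -j DNAT --to-destination {endpoint}"
        )
    return rules


# The second and third endpoint addresses are hypothetical.
rules = render_rules(
    "KUBE-SVC-EXAMPLE",
    "10.106.224.41",
    8080,
    ["10.244.1.2:8080", "10.244.1.3:8080", "10.244.2.2:8080"],
)
print("\n".join(rules))
```

Whenever the Service or its endpoints change, regenerating this list and swapping it in is conceptually what the iptables mode does.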

3.1. How kube-proxy is implemented with iptables

    # kubectl get svc -o wide
    NAME                     TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)    AGE
    kubernetes-bootcamp-v1   ClusterIP   10.106.224.41   <none>        8080/TCP   163m

The ClusterIP is 10.106.224.41. We can verify that this IP is not bound to any local interface, so do not try to ping the ClusterIP: it will not respond.

    root@ip-192-168-193-172:~# ping -c 2 -w 2 10.106.224.41
    PING 10.106.224.41 (10.106.224.41) 56(84) bytes of data.
    --- 10.106.224.41 ping statistics ---
    2 packets transmitted, 0 received, 100% packet loss, time 1025ms

When the Service is accessed from Node 192.168.193.172, the traffic first reaches the OUTPUT chain. Here we only care about the OUTPUT chain of the nat table:

    # iptables-save -t nat | grep -- '-A OUTPUT'
    -A OUTPUT -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

The chain jumps to the KUBE-SERVICES sub chain:

    # iptables-save -t nat | grep -- '-A KUBE-SERVICES'
    ...
    -A KUBE-SERVICES ! -s 10.244.0.0/16 -d 10.106.224.41/32 -p tcp -m comment --comment "default/kubernetes-bootcamp-v1: cluster IP" -m tcp --dport 8080 -j KUBE-MARK-MASQ
    -A KUBE-SERVICES -d 10.106.224.41/32 -p tcp -m comment --comment "default/kubernetes-bootcamp-v1: cluster IP" -m tcp --dport 8080 -j KUBE-SVC-RPP7DHNHMGOIIFDC

Two of these rules are relevant:

- Rule 1: if the source is outside 10.244.0.0/16, mark the packet with 0x4000/0x4000 (through KUBE-MARK-MASQ); the mark is used later

- Rule 2: jump to the KUBE-SVC-RPP7DHNHMGOIIFDC sub chain
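To make the first rule concrete, the following sketch models its two parts: the `! -s 10.244.0.0/16` source test and the mark bit. It is a simplified model under stated assumptions: marking is represented as OR-ing the bit 0x4000 into the packet mark, and the helper names and the Pod address 10.244.1.7 are hypothetical. The match `--mark 0x4000/0x4000` is modelled as "AND the mark with the mask, then compare with the value".

```python
import ipaddress

POD_CIDR = ipaddress.ip_network("10.244.0.0/16")
MASQ_VALUE = 0x4000
MASQ_MASK = 0x4000


def apply_rule_1(src_ip: str, mark: int = 0) -> int:
    """Set the masquerade bit only when the source is outside the Pod network."""
    if ipaddress.ip_address(src_ip) not in POD_CIDR:
        return mark | MASQ_VALUE  # simplified model of KUBE-MARK-MASQ
    return mark


def mark_matches(mark: int, value: int = MASQ_VALUE, mask: int = MASQ_MASK) -> bool:
    """Model of `-m mark --mark value/mask`: mask first, then compare."""
    return (mark & mask) == value


node_mark = apply_rule_1("192.168.193.172")  # request sent from the node itself
pod_mark = apply_rule_1("10.244.1.7")        # hypothetical Pod source address
print(hex(node_mark), mark_matches(node_mark))
print(hex(pod_mark), mark_matches(pod_mark))
```

In this model, traffic from the node is marked and will later be masqueraded, while traffic that already comes from a Pod address is left unmarked.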

The rules of KUBE-SVC-RPP7DHNHMGOIIFDC sub chain are as follows:

    # iptables-save -t nat | grep -- '-A KUBE-SVC-RPP7DHNHMGOIIFDC'
    -A KUBE-SVC-RPP7DHNHMGOIIFDC -m statistic --mode random --probability 0.33332999982 -j KUBE-SEP-FTIQ6MSD3LWO5HZX
    -A KUBE-SVC-RPP7DHNHMGOIIFDC -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-SQBK6CVV7ZCKBTVI
    -A KUBE-SVC-RPP7DHNHMGOIIFDC -j KUBE-SEP-IAZPHGLZVO2SWOVD

The rules of the KUBE-SEP-FTIQ6MSD3LWO5HZX sub chain are as follows:

    # iptables-save -t nat | grep -- '-A KUBE-SEP-FTIQ6MSD3LWO5HZX'
    -A KUBE-SEP-FTIQ6MSD3LWO5HZX -p tcp -m tcp -j DNAT --to-destination 10.244.1.2:8080

The purpose of this rule is to perform DNAT, and the DNAT target is one of the endpoints, that is, a Pod backing the Service.

In other words, the KUBE-SVC-RPP7DHNHMGOIIFDC sub chain DNATs each connection to one of the endpoint IPs (the Pod IPs), chosen with equal probability. Assuming 10.244.1.2 is chosen, this is equivalent to:

    192.168.193.172:xxxx -> 10.106.224.41:8080
                  |
                  |  DNAT
                  V
    192.168.193.172:xxxx -> 10.244.1.2:8080
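The probabilities in the KUBE-SVC chain (about 1/3, then 1/2, then an unconditional jump) look unequal, but the rules are evaluated in order, so each later rule only sees the traffic that earlier rules let through. The sketch below checks with exact fractions that a cascade of 1/n, 1/(n-1), ..., 1 gives every endpoint the same overall share. The function name is hypothetical, and it assumes the rules are tried strictly top to bottom.

```python
from fractions import Fraction


def selection_probabilities(endpoint_count: int) -> list:
    """Overall chance of each endpoint when rule i fires with 1/(n - i)."""
    remaining = Fraction(1)  # share of traffic that reaches the current rule
    result = []
    for index in range(endpoint_count):
        rule_probability = Fraction(1, endpoint_count - index)
        result.append(remaining * rule_probability)
        remaining *= 1 - rule_probability
    return result


probs = selection_probabilities(3)
print([str(p) for p in probs])  # ['1/3', '1/3', '1/3']
```

The second rule, for example, fires for 1/2 of the 2/3 of connections that the first rule skipped, which is again 1/3.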

The packet then reaches the POSTROUTING chain:

    # iptables-save -t nat | grep -- '-A POSTROUTING'
    -A POSTROUTING -m comment --comment "kubernetes postrouting rules" -j KUBE-POSTROUTING
    # iptables-save -t nat | grep -- '-A KUBE-POSTROUTING'
    -A KUBE-POSTROUTING -m comment --comment "kubernetes service traffic requiring SNAT" -m mark --mark 0x4000/0x4000 -j MASQUERADE

These two rules do only one thing: every packet marked with 0x4000/0x4000 is SNATed (masqueraded). Since packets destined for 10.244.1.2 leave through flannel.1 by default, the source IP is changed to the flannel.1 IP, 10.244.0.0:

    192.168.193.172:xxxx -> 10.106.224.41:8080
                  |
                  |  DNAT
                  V
    192.168.193.172:xxxx -> 10.244.1.2:8080
                  |
                  |  SNAT
                  V
    10.244.0.0:xxxx -> 10.244.1.2:8080
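The whole path can be replayed on a plain tuple to see how the addresses change at each step. This is a toy model, not how netfilter is implemented: the `Packet` type and helper names are hypothetical, the source port 40000 stands in for the unspecified `xxxx`, and masquerading is assumed to keep the source port unchanged.

```python
from typing import NamedTuple

MASQ_BIT = 0x4000


class Packet(NamedTuple):
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    mark: int = 0


def dnat(packet: Packet, to_ip: str, to_port: int) -> Packet:
    """Rewrite the destination, as the KUBE-SEP chain does."""
    return packet._replace(dst_ip=to_ip, dst_port=to_port)


def masquerade(packet: Packet, outgoing_ip: str) -> Packet:
    """Rewrite the source only for packets carrying the 0x4000 mark."""
    if (packet.mark & MASQ_BIT) == MASQ_BIT:
        return packet._replace(src_ip=outgoing_ip)
    return packet


# Marked in KUBE-SERVICES because the source is outside 10.244.0.0/16.
original = Packet("192.168.193.172", 40000, "10.106.224.41", 8080, mark=MASQ_BIT)
after_dnat = dnat(original, "10.244.1.2", 8080)
final = masquerade(after_dnat, "10.244.0.0")  # flannel.1 address from the text

for step in (original, after_dnat, final):
    print(f"{step.src_ip}:{step.src_port} -> {step.dst_ip}:{step.dst_port}")
```

An unmarked packet passed through `masquerade` keeps its source address, which matches the condition in the KUBE-POSTROUTING rule.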