In both Docker and Kubernetes (K8s), containers have a limited life cycle, so data that must outlive a container is stored on data volumes.

Key issues addressed by data volumes:

1. Data persistence: Files written inside a container are temporary. When the container crashes, the kubelet kills it and re-creates it from the image, so the data written earlier is lost.

2. Data sharing: Containers running in the same Pod often need to share files.

Types of data volumes:

1. emptyDir

An emptyDir volume is similar to a Docker-managed volume in Docker data persistence. When it is first allocated, the volume is an empty directory that all containers in the same Pod can read, write, and use to share data.

Use case: different containers in the same Pod sharing one data volume.

If a container is deleted, the data still exists; if the Pod is deleted, the data is deleted with it.

Test:

**vim emptyDir.yaml**

    apiVersion: v1
    kind: Pod
    metadata:
      name: producer-consumer
    spec:
      containers:
      - image: busybox
        name: producer
        volumeMounts:
        - mountPath: /producer_dir    # path inside the container
          name: shared-volume         # name of the volume to mount (defined under volumes below)
        args:                         # command the container runs when it starts
        - /bin/sh
        - -c
        - echo "hello k8s" > /producer_dir/hello; sleep 30000
      - image: busybox
        name: consumer
        volumeMounts:
        - mountPath: /consumer_dir
          name: shared-volume
        args:
        - /bin/sh
        - -c
        - cat /consumer_dir/hello; sleep 30000
      volumes:
      - name: shared-volume           # must match the name used in the volumeMounts above
        emptyDir: {}                  # volume type: an initially empty directory

Apply the file:

kubectl apply -f emptyDir.yaml

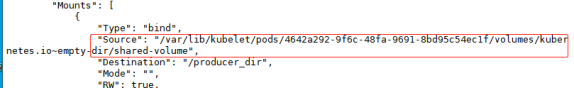

docker inspect (view container details): the Mounts section shows the mount point on the host.

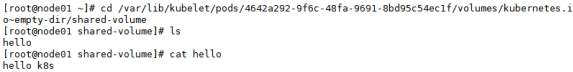

You can enter that directory on the host machine to view the data.

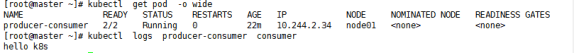

kubectl get pod -o wide: shows detailed Pod information, including which node the Pod is running on.

Adding -w (kubectl get pod -o wide -w) lets you watch changes in real time.

kubectl logs {pod name} consumer shows the data that the consumer container read from the directory.

In the test, you can check the containers on the node and confirm that both mount the same host directory; they do. You can then delete a container to see whether the data is lost, and delete the Pod from the master node to see whether the data is still there.

The tests show that emptyDir is generally suitable only as temporary storage.
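As an illustration of the temporary-storage use case, the sketch below declares an emptyDir as a size-limited, memory-backed scratch directory. The Pod name, container name, mount path, and the 64Mi limit are all hypothetical values chosen for the example; `medium` and `sizeLimit` are standard emptyDir fields.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: scratch-demo              # hypothetical name
spec:
  containers:
  - image: busybox
    name: worker                  # hypothetical container name
    volumeMounts:
    - mountPath: /scratch         # assumed path inside the container
      name: scratch
    args:
    - /bin/sh
    - -c
    - echo "temporary data" > /scratch/tmp.txt; sleep 30000
  volumes:
  - name: scratch
    emptyDir:
      medium: Memory              # back the directory with tmpfs (RAM) instead of node disk
      sizeLimit: 64Mi             # assumed cap on how much the volume may hold
```

Because the directory lives in memory here, its contents also disappear when the node reboots, which reinforces that emptyDir should hold only data you can afford to lose.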

2. hostPath Volume

1) Mounts a file or directory from the file system of the node where the Pod runs into a container.

2) Similar to a bind mount in Docker data persistence. If the Pod is deleted, the data remains. This is better than emptyDir, but if the host crashes, the hostPath data cannot be accessed.

3) This kind of data persistence is used in few scenarios, because it increases the coupling between the Pod and the node.
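The points above can be sketched with a minimal Pod that mounts a node directory through hostPath. This is only an illustration: the Pod name, the volume name, and the node path /tmp/hostpath-demo are assumptions, not values from the original test.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: hostpath-demo               # hypothetical name
spec:
  containers:
  - image: busybox
    name: app
    volumeMounts:
    - mountPath: /data              # path inside the container
      name: host-volume
    args:
    - /bin/sh
    - -c
    - echo "hello hostPath" > /data/hello; sleep 30000
  volumes:
  - name: host-volume
    hostPath:
      path: /tmp/hostpath-demo      # assumed directory on the node's file system
      type: DirectoryOrCreate       # create the directory on the node if it does not exist
```

After the Pod is deleted, the file hello should still be present under the assumed directory on whichever node ran the Pod, which is exactly the coupling to a specific node described in point 3.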

3. Persistent Volume: A PV (PersistentVolume) is a piece of storage for persistent data that is prepared in advance.

PersistentVolumeClaim: A PVC (PersistentVolumeClaim) is a claim on, or request for, a persistent volume.

A PVC is a user's request for storage, and it is similar to a Pod: a Pod consumes node resources, while a PVC consumes storage resources. A Pod can request specific amounts of resources (CPU, memory); a PVC can request a specific size and specific access modes (read/write permissions).

PV based on an NFS service:

Install the NFS and rpcbind services:

    [root@master yaml]# yum install -y nfs-utils rpcbind    # note: install these on all three nodes
    [root@master yaml]# vim /etc/exports                    # add this line to the file: /nfsdata *(rw,sync,no_root_squash)
    [root@master yaml]# mkdir /nfsdata
    [root@master yaml]# systemctl start rpcbind
    [root@master yaml]# systemctl start nfs-server.service
    [root@master yaml]# showmount -e
    Export list for master:
    /nfsdata *

Create a PV resource object:

vim nfs-pv.yaml

apiVersion: v1

kind: PersistentVolume

metadata:

name: test

spec:

capacity:#Capacity given

storage: 1Gi

accessModes:#PV Supported Access Mode

- ReadWriteOnce#This means that you can only mount to a single node read-write

persistentVolumeReclaimPolicy: Recycle#Recycle policy for pv: Indicates clear data automatically

storageClassName: nfs#Defined Storage Class Name

nfs:#This should match the storage class name defined above

path: /nfsdata/pv1#Specify a directory for NFS

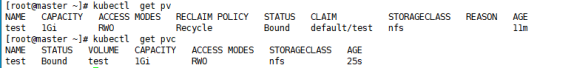

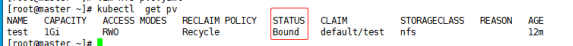

server: 192.168.1.1#Specify the IP of the NFS serverimplement nfs-pv.yaml Files: **` kubectl apply -f nfs-pv.yaml ` ** persistentvolume/test created **` kubectl get pv `** NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE test 1Gi RWO Recycle ** Available ** nfs 7s **Note here STATUS Status is Available To be able to use.**

Access modes supported by a PV:

ReadWriteOnce: The volume can be mounted read-write by a single node only.

ReadWriteMany: The volume can be mounted read-write by multiple nodes.

ReadOnlyMany: The volume can be mounted read-only by multiple nodes.

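Reclaim policies for PV storage:

Recycle: Automatically clears the data on the volume so that it can be claimed again. This policy is deprecated in current Kubernetes releases.

Retain: The data is kept, and an administrator must reclaim the volume manually.

Delete: The backing storage is deleted together with the PV; used mainly with cloud storage.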
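To show how an access mode and a reclaim policy are combined in practice, here is a hedged variant of a PV that uses ReadWriteMany and Retain. The PV name test-retain and the directory /nfsdata/pv2 are hypothetical; the server IP and storage class name are reused from this walkthrough's setup.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: test-retain                        # hypothetical name
spec:
  capacity:
    storage: 1Gi
  accessModes:
  - ReadWriteMany                          # NFS can be mounted read-write by multiple nodes
  persistentVolumeReclaimPolicy: Retain    # keep the data; an administrator reclaims it manually
  storageClassName: nfs
  nfs:
    path: /nfsdata/pv2                     # assumed directory; it must already exist on the NFS server
    server: 192.168.1.1
```

With Retain, deleting the claim leaves the PV in the Released state with its data intact, so it is a safer choice than Recycle when the data matters.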

A PV and a PVC are associated with each other through storageClassName and accessModes.

Create the PVC:

vim nfs-pvc.yaml

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: test
    spec:
      accessModes:
      - ReadWriteOnce          # access mode; must be consistent with the PV definition
      resources:
        requests:
          storage: 1Gi         # requested capacity
      storageClassName: nfs    # storage class name; must match the PV definition

Run and view the PVC and PV:

kubectl apply -f nfs-pvc.yaml

Once the PV and PVC are associated, you will see the status Bound: