catalog

1, Sqoop export

1. Create a job to export the HDFS file to the MySQL database.

(1) Open PDI and create a new job, as shown in Figure 1.

Figure 1

Figure 1

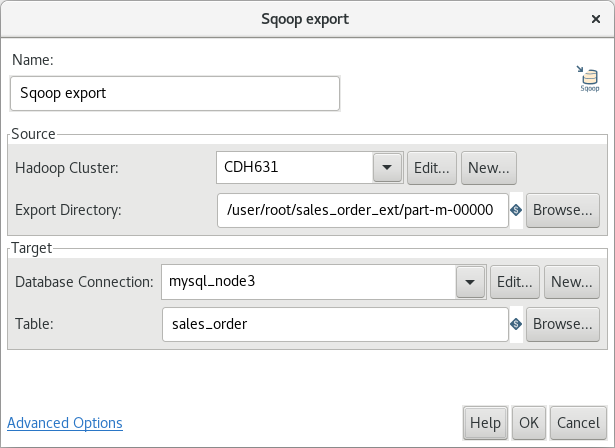

(2) Edit the 'Sqoop export' job item, as shown in Figure 2.

Figure 2

Figure 2

Description:

- CDH631 is a built Hadoop cluster connection. See“ https://wxy0327.blog.csdn.net/article/details/106406702#%E4%BA%8C%E3%80%81%E8%BF%9E%E6%8E%A5Hadoop%E9%9B%86%E7%BE%A4".

- mysql_node3 is the established MySQL database connection. See“ https://wxy0327.blog.csdn.net/article/details/106406702#%E4%BA%94%E3%80%81%E5%90%8E%E7%BB%AD%EF%BC%88%E5%BB%BA%E7%AB%8BMySQL%E6%95%B0%E6%8D%AE%E5%BA%93%E8%BF%9E%E6%8E%A5%EF%BC%89".

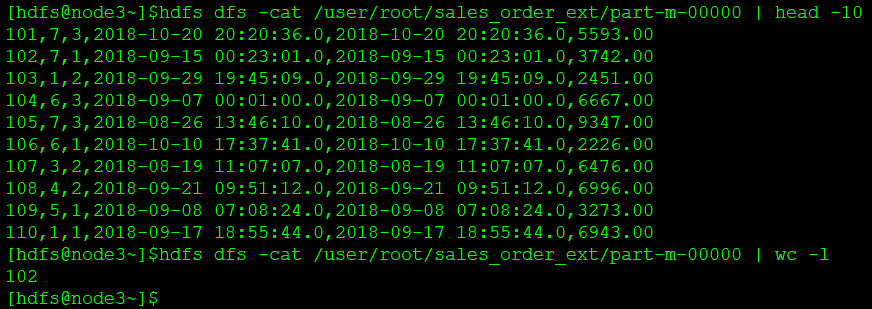

- /user/root/sales_order_ext/part-m-00000 is a CSV file on HDFS with 102 lines of records, as shown in Figure 3.

Figure 3

Figure 3

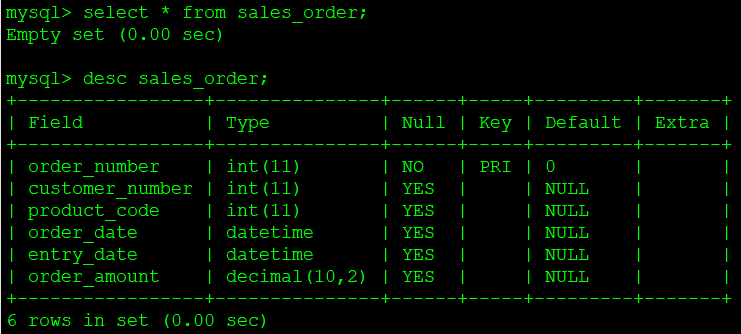

- sales_order is the target table in MySQL, whose structure matches the part-m-00000 file, as shown in Figure 4.

Figure 4

Figure 4

2. Save and execute the job. The log is as follows.

2020/06/08 09:28:40 - Spoon - Starting job... 2020/06/08 09:28:40 - sqoop_export - Start of job execution 2020/06/08 09:28:40 - sqoop_export - Starting entry [Sqoop export] 2020/06/08 09:28:40 - Sqoop export - 2020/06/08 09:28:40 - fs.default.name is deprecated. Instead, use fs.defaultFS 2020/06/08 09:28:40 - Sqoop export - 2020/06/08 09:28:40 - $SQOOP_CONF_DIR has not been set in the environment. Cannot check for additional configuration. 2020/06/08 09:28:40 - Sqoop export - 2020/06/08 09:28:40 - Running Sqoop version: 1.4.7-cdh6.1.0 2020/06/08 09:28:40 - Sqoop export - 2020/06/08 09:28:40 - Setting your password on the command-line is insecure. Consider using -P instead. 2020/06/08 09:28:40 - Sqoop export - 2020/06/08 09:28:40 - $SQOOP_CONF_DIR has not been set in the environment. Cannot check for additional configuration. 2020/06/08 09:28:40 - Sqoop export - 2020/06/08 09:28:40 - Preparing to use a MySQL streaming resultset. 2020/06/08 09:28:40 - Sqoop export - 2020/06/08 09:28:40 - Beginning code generation 2020/06/08 09:28:40 - Sqoop export - 2020/06/08 09:28:40 - Executing SQL statement: SELECT t.* FROM `sales_order` AS t LIMIT 1 2020/06/08 09:28:40 - Sqoop export - 2020/06/08 09:28:40 - Executing SQL statement: SELECT t.* FROM `sales_order` AS t LIMIT 1 2020/06/08 09:28:40 - Sqoop export - 2020/06/08 09:28:40 - $HADOOP_MAPRED_HOME is not set 2020/06/08 09:28:41 - Sqoop export - 2020/06/08 09:28:41 - Writing jar file: /tmp/sqoop-root/compile/7c09fc949771ba78a584cc07c1cd6b3f/sales_order.jar 2020/06/08 09:28:41 - Sqoop export - 2020/06/08 09:28:41 - Beginning export of sales_order 2020/06/08 09:28:41 - Sqoop export - 2020/06/08 09:28:41 - SQOOP_HOME is unset. May not be able to find all job dependencies. 2020/06/08 09:28:41 - Sqoop export - 2020/06/08 09:28:41 - Connecting to ResourceManager at manager/172.16.1.124:8032 2020/06/08 09:28:41 - Sqoop export - 2020/06/08 09:28:41 - Disabling Erasure Coding for path: /user/root/.staging/job_1591323999364_0046 2020/06/08 09:28:41 - Sqoop export - 2020/06/08 09:28:41 - Total input files to process : 1 2020/06/08 09:28:41 - Sqoop export - 2020/06/08 09:28:41 - Total input files to process : 1 2020/06/08 09:28:41 - Sqoop export - 2020/06/08 09:28:41 - number of splits:4 2020/06/08 09:28:41 - Sqoop export - 2020/06/08 09:28:41 - fs.default.name is deprecated. Instead, use fs.defaultFS 2020/06/08 09:28:41 - Sqoop export - 2020/06/08 09:28:41 - Submitting tokens for job: job_1591323999364_0046 2020/06/08 09:28:41 - Sqoop export - 2020/06/08 09:28:41 - Executing with tokens: [] 2020/06/08 09:28:41 - Sqoop export - 2020/06/08 09:28:41 - Submitted application application_1591323999364_0046 2020/06/08 09:28:41 - Sqoop export - 2020/06/08 09:28:41 - The url to track the job: http://manager:8088/proxy/application_1591323999364_0046/ 2020/06/08 09:28:41 - Sqoop export - 2020/06/08 09:28:41 - Running job: job_1591323999364_0046 2020/06/08 09:28:51 - Sqoop export - 2020/06/08 09:28:51 - Job job_1591323999364_0046 running in uber mode : false 2020/06/08 09:28:51 - Sqoop export - 2020/06/08 09:28:51 - map 0% reduce 0% 2020/06/08 09:28:58 - Sqoop export - 2020/06/08 09:28:58 - map 75% reduce 0% 2020/06/08 09:28:59 - Sqoop export - 2020/06/08 09:28:59 - map 100% reduce 0% 2020/06/08 09:28:59 - Sqoop export - 2020/06/08 09:28:59 - Job job_1591323999364_0046 completed successfully 2020/06/08 09:28:59 - Sqoop export - 2020/06/08 09:28:59 - Counters: 34 2020/06/08 09:28:59 - Sqoop export - File System Counters 2020/06/08 09:28:59 - Sqoop export - FILE: Number of bytes read=0 2020/06/08 09:28:59 - Sqoop export - FILE: Number of bytes written=968088 2020/06/08 09:28:59 - Sqoop export - FILE: Number of read operations=0 2020/06/08 09:28:59 - Sqoop export - FILE: Number of large read operations=0 2020/06/08 09:28:59 - Sqoop export - FILE: Number of write operations=0 2020/06/08 09:28:59 - Sqoop export - HDFS: Number of bytes read=15868 2020/06/08 09:28:59 - Sqoop export - HDFS: Number of bytes written=0 2020/06/08 09:28:59 - Sqoop export - HDFS: Number of read operations=16 2020/06/08 09:28:59 - Sqoop export - HDFS: Number of large read operations=0 2020/06/08 09:28:59 - Sqoop export - HDFS: Number of write operations=0 2020/06/08 09:28:59 - Sqoop export - HDFS: Number of bytes read erasure-coded=0 2020/06/08 09:28:59 - Sqoop export - Job Counters 2020/06/08 09:28:59 - Sqoop export - Launched map tasks=4 2020/06/08 09:28:59 - Sqoop export - Data-local map tasks=2 2020/06/08 09:28:59 - Sqoop export - Rack-local map tasks=2 2020/06/08 09:28:59 - Sqoop export - Total time spent by all maps in occupied slots (ms)=18912 2020/06/08 09:28:59 - Sqoop export - Total time spent by all reduces in occupied slots (ms)=0 2020/06/08 09:28:59 - Sqoop export - Total time spent by all map tasks (ms)=18912 2020/06/08 09:28:59 - Sqoop export - Total vcore-milliseconds taken by all map tasks=18912 2020/06/08 09:28:59 - Sqoop export - Total megabyte-milliseconds taken by all map tasks=19365888 2020/06/08 09:28:59 - Sqoop export - Map-Reduce Framework 2020/06/08 09:28:59 - Sqoop export - Map input records=102 2020/06/08 09:28:59 - Sqoop export - Map output records=102 2020/06/08 09:28:59 - Sqoop export - Input split bytes=556 2020/06/08 09:28:59 - Sqoop export - Spilled Records=0 2020/06/08 09:28:59 - Sqoop export - Failed Shuffles=0 2020/06/08 09:28:59 - Sqoop export - Merged Map outputs=0 2020/06/08 09:28:59 - Sqoop export - GC time elapsed (ms)=351 2020/06/08 09:28:59 - Sqoop export - CPU time spent (ms)=5660 2020/06/08 09:28:59 - Sqoop export - Physical memory (bytes) snapshot=857292800 2020/06/08 09:28:59 - Sqoop export - Virtual memory (bytes) snapshot=10344894464 2020/06/08 09:28:59 - Sqoop export - Total committed heap usage (bytes)=739770368 2020/06/08 09:28:59 - Sqoop export - Peak Map Physical memory (bytes)=228241408 2020/06/08 09:28:59 - Sqoop export - Peak Map Virtual memory (bytes)=2586439680 2020/06/08 09:28:59 - Sqoop export - File Input Format Counters 2020/06/08 09:28:59 - Sqoop export - Bytes Read=0 2020/06/08 09:28:59 - Sqoop export - File Output Format Counters 2020/06/08 09:28:59 - Sqoop export - Bytes Written=0 2020/06/08 09:28:59 - Sqoop export - 2020/06/08 09:28:59 - Transferred 15.4961 KB in 18.7657 seconds (845.5859 bytes/sec) 2020/06/08 09:28:59 - Sqoop export - 2020/06/08 09:28:59 - Exported 102 records. 2020/06/08 09:28:59 - sqoop_export - Finished job entry [Sqoop export] (result=[true]) 2020/06/08 09:28:59 - sqoop_export - Job execution finished 2020/06/08 09:28:59 - Spoon - Job has ended.

As you can see from the log, the job has been executed successfully. In the directory where the job is located, a file named sales will be generated_ order.java File.

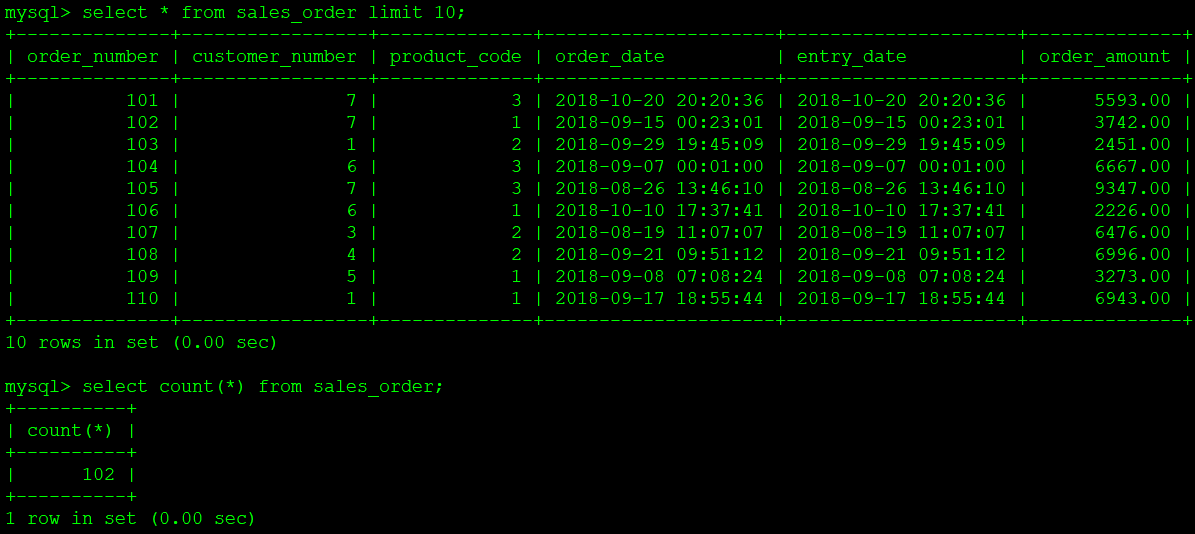

3. Check the MySQL table, and the result is shown in Figure 5.

Figure 5

Figure 5

As you can see from Figure 5, the content of the HDFS file is exported to the MySQL table through the Sqoop export job item.

2, Sqoop import

1. Create a job to import MySQL table data into HDFS.

(1) Open PDI and create a new job, as shown in Figure 6.

Figure 6

Figure 6

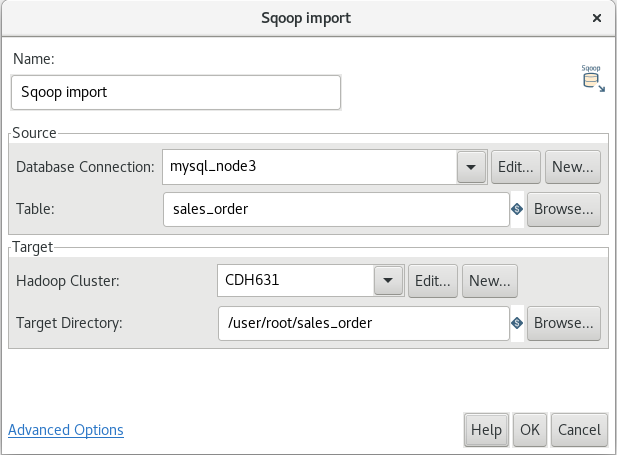

(2) Edit the 'Sqoop import' job item, as shown in Figure 7.

Figure 7

Figure 7

Description:

- /user/root/sales_order is the target directory of HDFS, which should not yet exist.

2. Save and execute the job. The log is as follows.

2020/06/08 10:00:52 - Spoon - Starting job... 2020/06/08 10:00:52 - sqoop_import - Start of job execution 2020/06/08 10:00:52 - sqoop_import - Starting entry [Sqoop import] 2020/06/08 10:00:52 - Sqoop import - 2020/06/08 10:00:52 - fs.default.name is deprecated. Instead, use fs.defaultFS 2020/06/08 10:00:52 - Sqoop import - 2020/06/08 10:00:52 - $SQOOP_CONF_DIR has not been set in the environment. Cannot check for additional configuration. 2020/06/08 10:00:52 - Sqoop import - 2020/06/08 10:00:52 - Running Sqoop version: 1.4.7-cdh6.1.0 2020/06/08 10:00:52 - Sqoop import - 2020/06/08 10:00:52 - Setting your password on the command-line is insecure. Consider using -P instead. 2020/06/08 10:00:52 - Sqoop import - 2020/06/08 10:00:52 - $SQOOP_CONF_DIR has not been set in the environment. Cannot check for additional configuration. 2020/06/08 10:00:52 - Sqoop import - 2020/06/08 10:00:52 - Preparing to use a MySQL streaming resultset. 2020/06/08 10:00:52 - Sqoop import - 2020/06/08 10:00:52 - Beginning code generation 2020/06/08 10:00:52 - Sqoop import - 2020/06/08 10:00:52 - Executing SQL statement: SELECT t.* FROM `sales_order` AS t LIMIT 1 2020/06/08 10:00:52 - Sqoop import - 2020/06/08 10:00:52 - Executing SQL statement: SELECT t.* FROM `sales_order` AS t LIMIT 1 2020/06/08 10:00:52 - Sqoop import - 2020/06/08 10:00:52 - $HADOOP_MAPRED_HOME is not set 2020/06/08 10:00:52 - Sqoop import - 2020/06/08 10:00:52 - Writing jar file: /tmp/sqoop-root/compile/7c09fc949771ba78a584cc07c1cd6b3f/sales_order.jar 2020/06/08 10:00:52 - Sqoop import - 2020/06/08 10:00:52 - Setting zero DATETIME behavior to convertToNull (mysql) 2020/06/08 10:00:52 - Sqoop import - 2020/06/08 10:00:52 - Beginning import of sales_order 2020/06/08 10:00:52 - Sqoop import - 2020/06/08 10:00:52 - SQOOP_HOME is unset. May not be able to find all job dependencies. 2020/06/08 10:00:53 - Sqoop import - 2020/06/08 10:00:53 - Connecting to ResourceManager at manager/172.16.1.124:8032 2020/06/08 10:00:53 - Sqoop import - 2020/06/08 10:00:53 - Disabling Erasure Coding for path: /user/root/.staging/job_1591323999364_0048 2020/06/08 10:00:53 - Sqoop import - 2020/06/08 10:00:53 - Using read commited transaction isolation 2020/06/08 10:00:53 - Sqoop import - 2020/06/08 10:00:53 - BoundingValsQuery: SELECT MIN(`order_number`), MAX(`order_number`) FROM `sales_order` 2020/06/08 10:00:53 - Sqoop import - 2020/06/08 10:00:53 - Split size: 25; Num splits: 4 from: 101 to: 202 2020/06/08 10:00:53 - Sqoop import - 2020/06/08 10:00:53 - number of splits:4 2020/06/08 10:00:53 - Sqoop import - 2020/06/08 10:00:53 - fs.default.name is deprecated. Instead, use fs.defaultFS 2020/06/08 10:00:53 - Sqoop import - 2020/06/08 10:00:53 - Submitting tokens for job: job_1591323999364_0048 2020/06/08 10:00:53 - Sqoop import - 2020/06/08 10:00:53 - Executing with tokens: [] 2020/06/08 10:00:53 - Sqoop import - 2020/06/08 10:00:53 - Submitted application application_1591323999364_0048 2020/06/08 10:00:53 - Sqoop import - 2020/06/08 10:00:53 - The url to track the job: http://manager:8088/proxy/application_1591323999364_0048/ 2020/06/08 10:00:53 - Sqoop import - 2020/06/08 10:00:53 - Running job: job_1591323999364_0048 2020/06/08 10:01:02 - Sqoop import - 2020/06/08 10:01:02 - Job job_1591323999364_0048 running in uber mode : false 2020/06/08 10:01:02 - Sqoop import - 2020/06/08 10:01:02 - map 0% reduce 0% 2020/06/08 10:01:09 - Sqoop import - 2020/06/08 10:01:09 - map 75% reduce 0% 2020/06/08 10:01:11 - Sqoop import - 2020/06/08 10:01:11 - map 100% reduce 0% 2020/06/08 10:01:11 - Sqoop import - 2020/06/08 10:01:11 - Job job_1591323999364_0048 completed successfully 2020/06/08 10:01:11 - Sqoop import - 2020/06/08 10:01:11 - Counters: 33 2020/06/08 10:01:11 - Sqoop import - File System Counters 2020/06/08 10:01:11 - Sqoop import - FILE: Number of bytes read=0 2020/06/08 10:01:11 - Sqoop import - FILE: Number of bytes written=969236 2020/06/08 10:01:11 - Sqoop import - FILE: Number of read operations=0 2020/06/08 10:01:11 - Sqoop import - FILE: Number of large read operations=0 2020/06/08 10:01:11 - Sqoop import - FILE: Number of write operations=0 2020/06/08 10:01:11 - Sqoop import - HDFS: Number of bytes read=481 2020/06/08 10:01:11 - Sqoop import - HDFS: Number of bytes written=6120 2020/06/08 10:01:11 - Sqoop import - HDFS: Number of read operations=24 2020/06/08 10:01:11 - Sqoop import - HDFS: Number of large read operations=0 2020/06/08 10:01:11 - Sqoop import - HDFS: Number of write operations=8 2020/06/08 10:01:11 - Sqoop import - HDFS: Number of bytes read erasure-coded=0 2020/06/08 10:01:11 - Sqoop import - Job Counters 2020/06/08 10:01:11 - Sqoop import - Launched map tasks=4 2020/06/08 10:01:11 - Sqoop import - Other local map tasks=4 2020/06/08 10:01:11 - Sqoop import - Total time spent by all maps in occupied slots (ms)=19048 2020/06/08 10:01:11 - Sqoop import - Total time spent by all reduces in occupied slots (ms)=0 2020/06/08 10:01:11 - Sqoop import - Total time spent by all map tasks (ms)=19048 2020/06/08 10:01:11 - Sqoop import - Total vcore-milliseconds taken by all map tasks=19048 2020/06/08 10:01:11 - Sqoop import - Total megabyte-milliseconds taken by all map tasks=19505152 2020/06/08 10:01:11 - Sqoop import - Map-Reduce Framework 2020/06/08 10:01:11 - Sqoop import - Map input records=102 2020/06/08 10:01:11 - Sqoop import - Map output records=102 2020/06/08 10:01:11 - Sqoop import - Input split bytes=481 2020/06/08 10:01:11 - Sqoop import - Spilled Records=0 2020/06/08 10:01:11 - Sqoop import - Failed Shuffles=0 2020/06/08 10:01:11 - Sqoop import - Merged Map outputs=0 2020/06/08 10:01:11 - Sqoop import - GC time elapsed (ms)=500 2020/06/08 10:01:11 - Sqoop import - CPU time spent (ms)=5680 2020/06/08 10:01:11 - Sqoop import - Physical memory (bytes) snapshot=783245312 2020/06/08 10:01:11 - Sqoop import - Virtual memory (bytes) snapshot=10357563392 2020/06/08 10:01:11 - Sqoop import - Total committed heap usage (bytes)=659554304 2020/06/08 10:01:11 - Sqoop import - Peak Map Physical memory (bytes)=202842112 2020/06/08 10:01:11 - Sqoop import - Peak Map Virtual memory (bytes)=2591666176 2020/06/08 10:01:11 - Sqoop import - File Input Format Counters 2020/06/08 10:01:11 - Sqoop import - Bytes Read=0 2020/06/08 10:01:11 - Sqoop import - File Output Format Counters 2020/06/08 10:01:11 - Sqoop import - Bytes Written=6120 2020/06/08 10:01:11 - Sqoop import - 2020/06/08 10:01:11 - Transferred 5.9766 KB in 18.733 seconds (326.697 bytes/sec) 2020/06/08 10:01:11 - Sqoop import - 2020/06/08 10:01:11 - Retrieved 102 records. 2020/06/08 10:01:11 - sqoop_import - Finished job entry [Sqoop import] (result=[true]) 2020/06/08 10:01:11 - sqoop_import - Job execution finished 2020/06/08 10:01:11 - Spoon - Job has ended.

As you can see from the log, the job has been executed successfully. In the directory where the job is located, a file named sales will be generated_ order.java File.

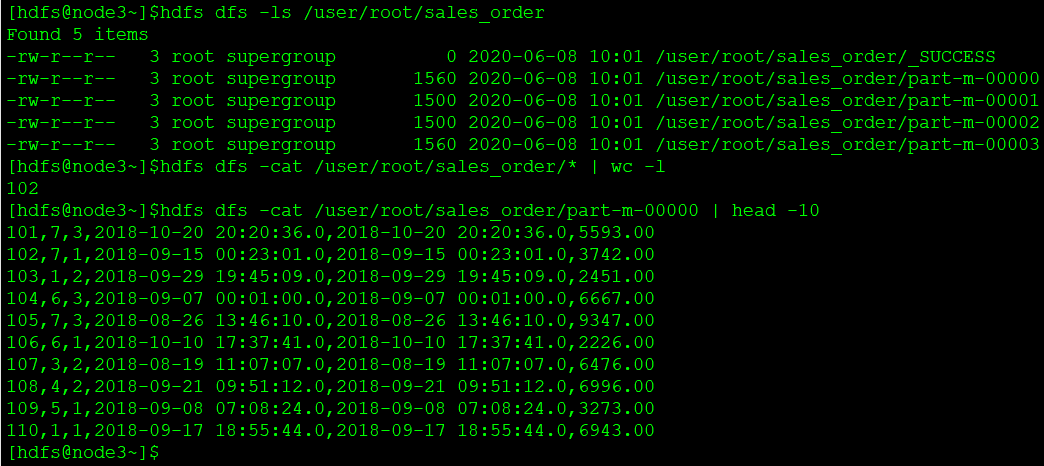

3. Check the HDFS target directory, as shown in Figure 8.

Figure 8

Figure 8

As you can see from figure 8, the data in MySQL table is imported into HDFS through the Sqoop import job item.