Author: Yan Yongqiang, algorithm engineer, Datawhale member

This article builds a gesture recognition pipeline in three stages: a YOLOv5s model, trained on a self-built gesture dataset, detects the hand; a SqueezeNet model, trained on an open-source dataset, predicts the hand key points; and an algorithm based on the angles between finger vectors determines the specific gesture and displays it. The fourth part of the article implements the overall ncnn inference in C++ (the code is long, so you may want to bookmark it first and read it later).

1, Training YOLOv5 for hand detection

The training and deployment approach is similar to that used for expression recognition. The labels of the hand dataset are merged into a single class so that only the hand is detected, which simplifies the process and makes the model easier to use.
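Merging the labels into one class can be sketched as follows. This is a minimal illustration, assuming the annotations are stored in YOLO text format (one `class x_center y_center width height` line per box); the function name `to_single_class` is hypothetical and does not come from the original project.

```python
def to_single_class(label_text: str, target_class: int = 0) -> str:
    """Rewrite every YOLO-format annotation line so its class id is target_class."""
    out_lines = []
    for line in label_text.splitlines():
        parts = line.split()
        if len(parts) != 5:
            # Skip blank or malformed lines rather than guessing their meaning.
            continue
        # Keep the box geometry untouched; only the class id changes.
        out_lines.append(" ".join([str(target_class)] + parts[1:]))
    return "\n".join(out_lines)


# Hypothetical label file content with two different gesture classes.
sample = "3 0.50 0.50 0.20 0.30\n7 0.25 0.40 0.10 0.10\n"
print(to_single_class(sample))
```

Applying this to every label file leaves the boxes unchanged and maps all gesture classes to the single class 0 ("hand").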

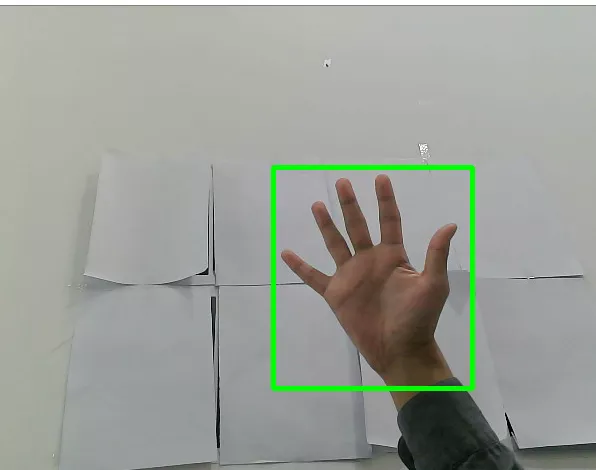

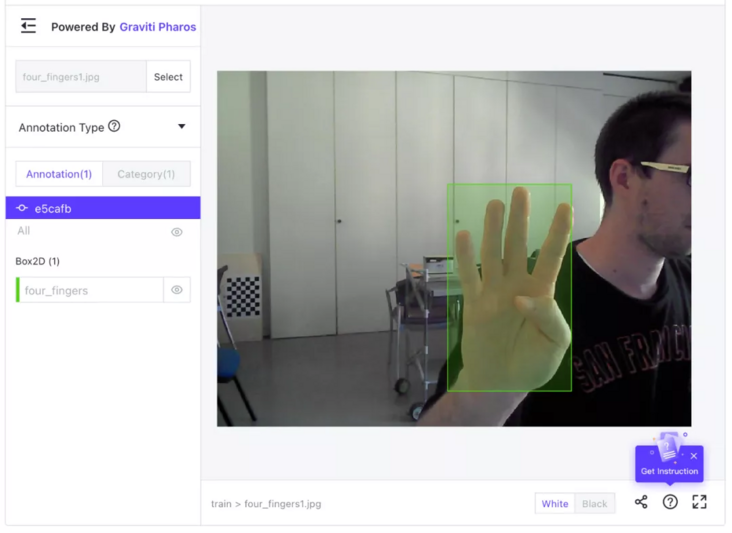

This dataset comes from Graviti: https://gas.graviti.cn/datase... The detection result is shown in the figure:

Training environment for this tutorial:

System environment: Ubuntu 16.04

CUDA version: 10.2

cuDNN version: 7.6.5

PyTorch version: 1.6.0

Python version: 3.8

Deployment environment:

Compiler: Visual Studio 2015

Dependency libraries: OpenCV, ncnn

Peripheral: ordinary USB camera

2, Hand joint point detection

1. Environment dependencies

The environment is the same as for the YOLOv5 hand detection training above.

2. Dataset preparation

The dataset combines pictures collected from the web with pictures from the Large-scale Multiview 3D Hand Pose Dataset, filtered to keep pictures with little action repetition, giving about 50,000 samples. The official website of the Large-scale Multiview 3D Hand Pose Dataset: http://www.rovit.ua.es/datase... An example of the annotation file is shown in Figure 2.

The datasets that can be used for training directly are hosted on Graviti's open dataset platform: https://gas.graviti.com/datas...

3. Using the dataset online

Step 1: Install the Graviti platform SDK

pip install tensorbay

Step 2: Data Preprocessing

To use the processed dataset that can be trained on directly, follow these steps:

a. Open the dataset link corresponding to this article, https://gas.graviti.cn/datase... , and on the dataset page, fork the dataset to your account;

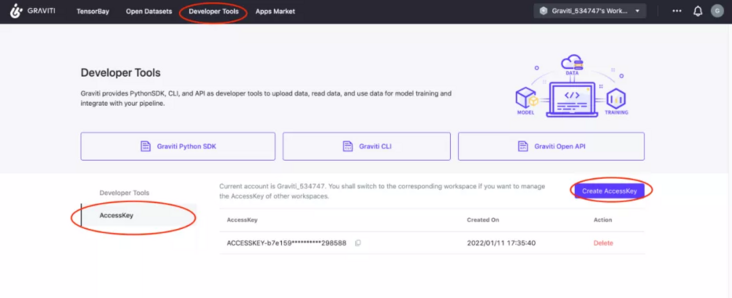

b. At the top of the web page, click Developer Tools --> AccessKey --> create a new AccessKey --> copy the key: Key = 'accesses......'

With Graviti, we can preprocess the data without downloading the dataset and save the results locally. The example below uses the hand detection dataset (HandPose in the code); the HandPoseKeyPoints dataset is used in the same way.

Data set open source address:

https://gas.graviti.com/datas...

Complete project code:

https://github.com/datawhalec...

import numpy as np

from PIL import Image

from tensorbay import GAS

from tensorbay.dataset import Dataset

def read_gas_image(data):

with data.open() as fp:

image = Image.open(fp)

image.load()

return np.array(image)

# Authorize a GAS client.

gas = GAS('Fill in your AccessKey')

# Get a dataset.

dataset = Dataset("HandPose", gas)

dataset.enable_cache("data")

# List dataset segments.

segments = dataset.keys()

# Get a segment by name

segment = dataset["train"]

for data in segment:

# Picture data

image = read_gas_image(data)

# Tag data

# Use the data as you like.

for label_box2d in data.label.box2d:

xmin = label_box2d.xmin

ymin = label_box2d.ymin

xmax = label_box2d.xmax

ymax = label_box2d.ymax

box2d_category = label_box2d.category

breakDataset page visualization:

#Data set partition

print(segments)

# ("train",'val')

print(len(dataset["train"]), "images in train dataset")

print(len(dataset["val"]), "images in valid dataset")

# 1306 images in train dataset

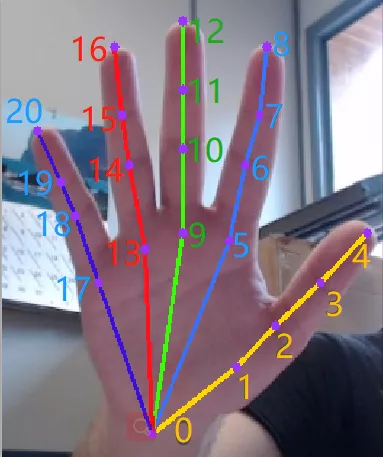

# 14 images in valid dataset4. Joint point detection principle

The joint point detection pipeline works as follows:

1) The input is a picture together with its label: the 42 coordinate values (x and y for each of the 21 hand joint points).

2) The backbone of the network can be any classification network. SqueezeNet is used here, with Wing loss as the loss function.

3) The original image is fed to the SqueezeNet network, which regresses the 42 coordinate values; the Wing loss between these predictions and the labels is then used to update the weights, and training continues until the loss reaches the specified threshold, which yields the final model.

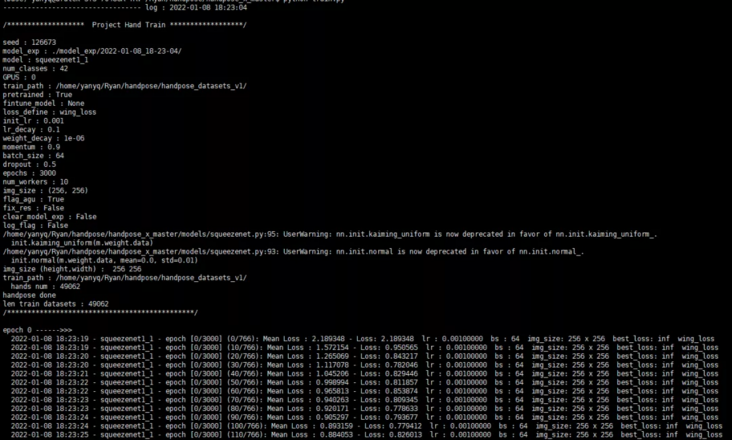
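The loss named in step 2 can be illustrated with a small, dependency-free sketch. It follows the standard Wing loss definition: logarithmic for small errors, linear for large ones, with a constant that joins the two pieces. The parameter values `w=10.0` and `epsilon=2.0` are illustrative assumptions, not settings confirmed by the training project, which implements the loss in PyTorch.

```python
import math


def wing_loss(pred, target, w=10.0, epsilon=2.0):
    """Mean Wing loss over paired coordinate values."""
    # Constant that joins the logarithmic and linear pieces at |x| = w.
    c = w - w * math.log(1.0 + w / epsilon)
    total = 0.0
    for p, t in zip(pred, target):
        x = abs(p - t)
        if x < w:
            # Small errors: logarithmic region, steeper gradient near zero.
            total += w * math.log(1.0 + x / epsilon)
        else:
            # Large errors: behaves like an L1 loss shifted by c.
            total += x - c
    return total / len(pred)


# Two predicted coordinates against their labels (made-up numbers).
print(wing_loss([0.52, 0.31], [0.50, 0.30]))
```

Compared with a plain L2 loss, the logarithmic region keeps small and medium localization errors influential during training, which is why this loss is popular for key point regression.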

5. Hand joint point training

The hand joint point algorithm uses open-source code. Reference address: https://gitcode.net/EricLee/h...

1) Pre-trained model

The project linked above provides a network disk link to the pre-trained models, which can be downloaded directly. If you don't want to use a pre-trained model, you can start training from the original weights of the classification network.

2) Model training

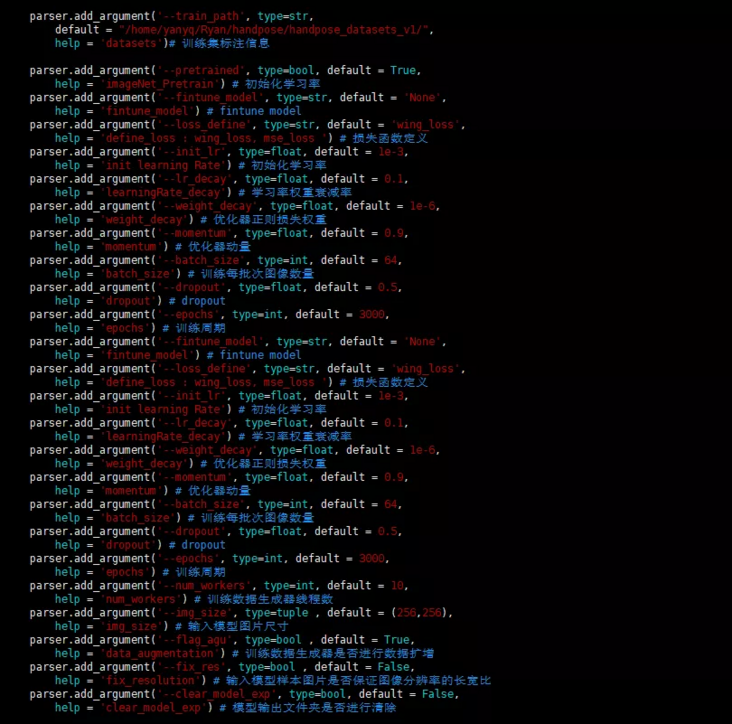

The parameters of the training script are explained below; their meaning can be read directly from the comments in the figure.

To train, just run the training command with the parameters you want to set, as shown in the following figure:

6. Hand joint point model conversion

1) Install the dependencies

pip install onnx coremltools onnx-simplifier

2) Export the ONNX model

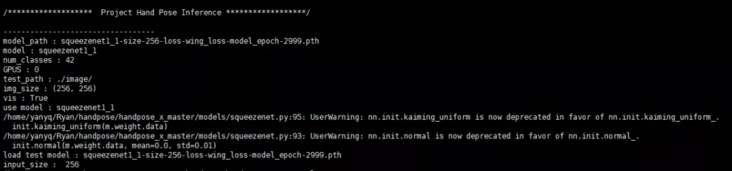

python model2onnx.py --model_path squeezenet1_1-size-256-loss-wing_loss-model_epoch-2999.pth --model squeezenet1_1

The output shown in the following figure will appear.

The model2onnx.py file is in the project directory linked above. After the command runs, the exported ONNX model appears in the current folder.

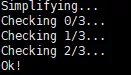

3) Simplify the model with onnx-simplifier

Why simplify?

After a deep learning model is trained in PyTorch or TensorFlow, it often has to be converted to ONNX. The exported graph may contain many nodes that are not needed, such as Cast and Identity nodes. Removing them makes it easier to convert the model to on-device deployment formats such as ncnn and MNN.

python -m onnxsim squeezenet1_1_size-256.onnx squeezenet1_1_sim.onnx

The output shown in the following figure will appear:

After the above process is completed, the simplified model squeezenet1_1_sim.onnx is generated.

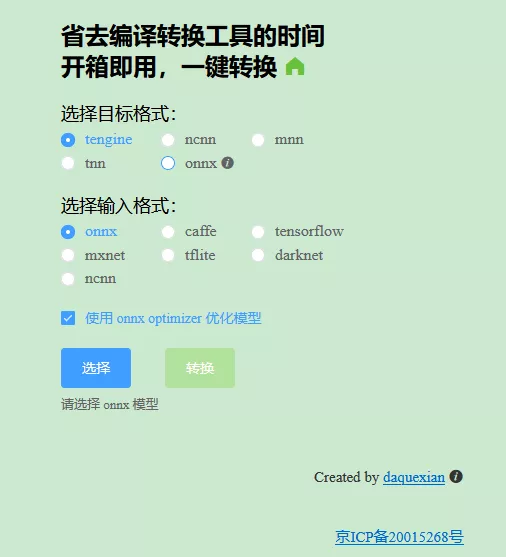

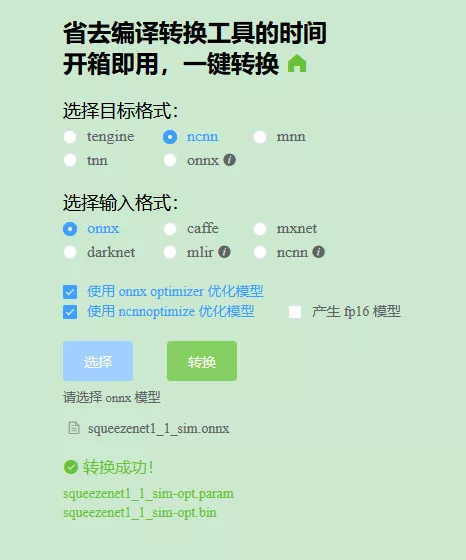

4) Convert the detection model to an ncnn model

You can convert the model directly with the online converter at https://convertmodel.com/ . The page is shown in the figure below:

Select ncnn as the target format and onnx as the input format, click Select and choose the local simplified model, then click Convert. Once the conversion succeeds, two model files are produced (the .param and .bin files loaded by the C++ code), as shown in the figure.

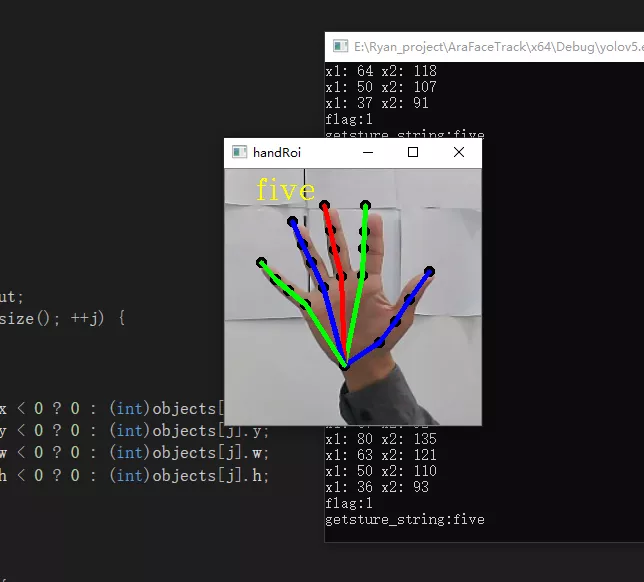

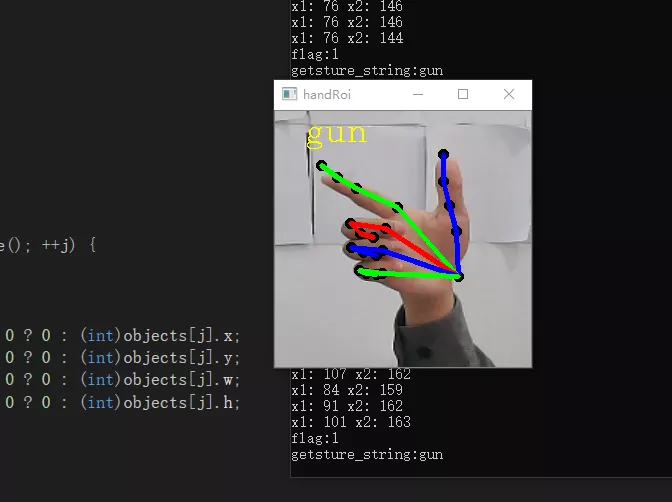

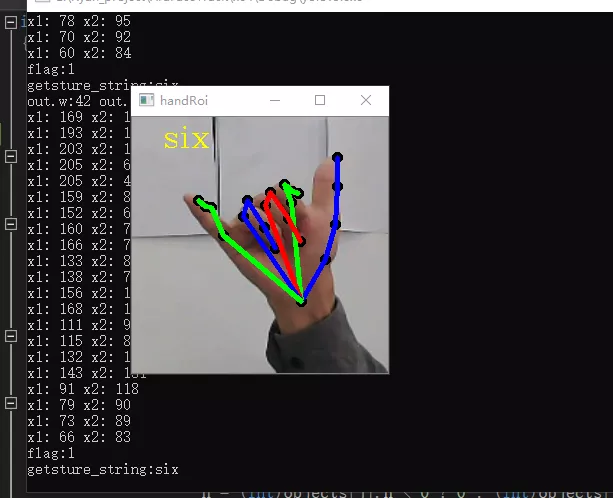

3, Gesture recognition algorithm using joint points

Simple gesture recognition can be implemented by calculating angles between hand joint vectors. For example, take the angle between the thumb vectors 0-2 and 3-4: if it is greater than an empirically chosen threshold, the thumb is treated as bent, and if it is less than a second empirical threshold, the thumb is treated as straight. The specific effect is shown in the following three figures.
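The thumb rule can be sketched in a few lines of Python. This is an illustration only, not the deployed implementation: the function names are hypothetical, the joints are assumed to be `(x, y)` tuples indexed as in the article, and the thresholds 53 and 49 are the thumb and "straight" values used in the C++ code of this article.

```python
import math


def vector_angle_deg(v1, v2):
    """Angle in degrees between two 2D vectors, or None if either has zero length."""
    norm1 = math.hypot(v1[0], v1[1])
    norm2 = math.hypot(v2[0], v2[1])
    if norm1 == 0.0 or norm2 == 0.0:
        return None
    cos_value = (v1[0] * v2[0] + v1[1] * v2[1]) / (norm1 * norm2)
    # Clamp against rounding error so acos never receives a value outside [-1, 1].
    cos_value = max(-1.0, min(1.0, cos_value))
    return math.degrees(math.acos(cos_value))


def thumb_state(points, bend_threshold=53.0, straight_threshold=49.0):
    """Classify the thumb from joints 0, 2, 3 and 4 given as (x, y) tuples."""
    v_base = (points[0][0] - points[2][0], points[0][1] - points[2][1])
    v_tip = (points[3][0] - points[4][0], points[3][1] - points[4][1])
    angle = vector_angle_deg(v_base, v_tip)
    if angle is None:
        return "unknown"
    if angle > bend_threshold:
        return "bent"
    if angle < straight_threshold:
        return "straight"
    # Angles between the two thresholds are deliberately left undecided.
    return "undecided"


# Made-up joints: all five thumb points on one line, then the tip folded sideways.
straight_thumb = [(0, 0), (1, 0), (2, 0), (3, 0), (4, 0)]
bent_thumb = [(0, 0), (1, 0), (2, 0), (3, 0), (3, 1)]
print(thumb_state(straight_thumb), thumb_state(bent_thumb))
```

The gap between the two thresholds acts as a dead zone, so an angle hovering near a single cut-off does not flip the state from frame to frame.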

4, Overall implementation of inference deployment

This section summarizes the whole gesture recognition process: first, the object detection model locates the hand; then the hand joint point detection model locates the individual joint points, which are drawn together with the lines connecting them; finally, the angles between joint vectors are used to recognize the gesture.
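One detail of chaining the two models is the crop passed from the detector to the key point network: the C++ code in this section expands the detected hand box by 30 pixels, and the key point network outputs coordinates normalized to the crop. The sketch below shows that glue logic in Python under those assumptions; the function names are hypothetical, and mapping key points back to the full frame is an optional step that the C++ code leaves commented out.

```python
def expand_box(x0, y0, x1, y1, frame_w, frame_h, margin=30):
    """Grow a corner-format box by margin pixels and clamp it to the frame."""
    left = max(0, x0 - margin)
    top = max(0, y0 - margin)
    right = min(frame_w, x1 + margin)
    bottom = min(frame_h, y1 + margin)
    # Return (x, y, width, height), the layout used by a rectangle ROI.
    return left, top, right - left, bottom - top


def keypoint_to_frame(u, v, roi):
    """Map a normalized key point (u, v in [0, 1]) inside the ROI to frame pixels."""
    x, y, w, h = roi
    return x + int(u * w), y + int(v * h)


# A detection near the bottom-right corner of a 640x480 frame (made-up numbers).
roi = expand_box(600, 400, 639, 479, 640, 480)
print(roi, keypoint_to_frame(0.5, 0.5, roi))
```

Clamping against the actual frame size, rather than against fixed constants, keeps the crop valid for any camera resolution.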

C++ implementation of the overall ncnn inference:

#include <string>

#include <vector>

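#include <iostream>

#include <cmath>

// FLT_MAX, fprintf and std::min/std::max are used below

#include <cfloat>

#include <cstdio>

#include <algorithm>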

// ncnn

#include "ncnn/layer.h"

#include "ncnn/net.h"

#include "ncnn/benchmark.h"

#include "opencv2/core/core.hpp"

#include "opencv2/highgui/highgui.hpp"

#include <opencv2/imgproc.hpp>

#include "opencv2/opencv.hpp"

using namespace std;

using namespace cv;

static ncnn::UnlockedPoolAllocator g_blob_pool_allocator;

static ncnn::PoolAllocator g_workspace_pool_allocator;

static ncnn::Net yolov5;

static ncnn::Net hand_keyPoints;

class YoloV5Focus : public ncnn::Layer

{

public:

YoloV5Focus()

{

one_blob_only = true;

}

virtual int forward(const ncnn::Mat& bottom_blob, ncnn::Mat& top_blob, const ncnn::Option& opt) const

{

int w = bottom_blob.w;

int h = bottom_blob.h;

int channels = bottom_blob.c;

int outw = w / 2;

int outh = h / 2;

int outc = channels * 4;

top_blob.create(outw, outh, outc, 4u, 1, opt.blob_allocator);

if (top_blob.empty())

return -100;

#pragma omp parallel for num_threads(opt.num_threads)

for (int p = 0; p < outc; p++)

{

const float* ptr = bottom_blob.channel(p % channels).row((p / channels) % 2) + ((p / channels) / 2);

float* outptr = top_blob.channel(p);

for (int i = 0; i < outh; i++)

{

for (int j = 0; j < outw; j++)

{

*outptr = *ptr;

outptr += 1;

ptr += 2;

}

ptr += w;

}

}

return 0;

}

};

DEFINE_LAYER_CREATOR(YoloV5Focus)

struct Object

{

float x;

float y;

float w;

float h;

int label;

float prob;

};

static inline float intersection_area(const Object& a, const Object& b)

{

if (a.x > b.x + b.w || a.x + a.w < b.x || a.y > b.y + b.h || a.y + a.h < b.y)

{

// no intersection

return 0.f;

}

float inter_width = std::min(a.x + a.w, b.x + b.w) - std::max(a.x, b.x);

float inter_height = std::min(a.y + a.h, b.y + b.h) - std::max(a.y, b.y);

return inter_width * inter_height;

}

static void qsort_descent_inplace(std::vector<Object>& faceobjects, int left, int right)

{

int i = left;

int j = right;

float p = faceobjects[(left + right) / 2].prob;

while (i <= j)

{

while (faceobjects[i].prob > p)

i++;

while (faceobjects[j].prob < p)

j--;

if (i <= j)

{

std::swap(faceobjects[i], faceobjects[j]);

i++;

j--;

}

}

#pragma omp parallel sections

{

#pragma omp section

{

if (left < j) qsort_descent_inplace(faceobjects, left, j);

}

#pragma omp section

{

if (i < right) qsort_descent_inplace(faceobjects, i, right);

}

}

}

static void qsort_descent_inplace(std::vector<Object>& faceobjects)

{

if (faceobjects.empty())

return;

qsort_descent_inplace(faceobjects, 0, faceobjects.size() - 1);

}

static void nms_sorted_bboxes(const std::vector<Object>& faceobjects, std::vector<int>& picked, float nms_threshold)

{

picked.clear();

const int n = faceobjects.size();

std::vector<float> areas(n);

for (int i = 0; i < n; i++)

{

areas[i] = faceobjects[i].w * faceobjects[i].h;

}

for (int i = 0; i < n; i++)

{

const Object& a = faceobjects[i];

int keep = 1;

for (int j = 0; j < (int)picked.size(); j++)

{

const Object& b = faceobjects[picked[j]];

float inter_area = intersection_area(a, b);

float union_area = areas[i] + areas[picked[j]] - inter_area;

// float IoU = inter_area / union_area

if (inter_area / union_area > nms_threshold)

keep = 0;

}

if (keep)

picked.push_back(i);

}

}

static inline float sigmoid(float x)

{

return static_cast<float>(1.f / (1.f + exp(-x)));

}

static void generate_proposals(const ncnn::Mat& anchors, int stride, const ncnn::Mat& in_pad, const ncnn::Mat& feat_blob, float prob_threshold, std::vector<Object>& objects)

{

const int num_grid = feat_blob.h;

int num_grid_x;

int num_grid_y;

if (in_pad.w > in_pad.h)

{

num_grid_x = in_pad.w / stride;

num_grid_y = num_grid / num_grid_x;

}

else

{

num_grid_y = in_pad.h / stride;

num_grid_x = num_grid / num_grid_y;

}

const int num_class = feat_blob.w - 5;

const int num_anchors = anchors.w / 2;

for (int q = 0; q < num_anchors; q++)

{

const float anchor_w = anchors[q * 2];

const float anchor_h = anchors[q * 2 + 1];

const ncnn::Mat feat = feat_blob.channel(q);

for (int i = 0; i < num_grid_y; i++)

{

for (int j = 0; j < num_grid_x; j++)

{

const float* featptr = feat.row(i * num_grid_x + j);

// find class index with max class score

int class_index = 0;

float class_score = -FLT_MAX;

for (int k = 0; k < num_class; k++)

{

float score = featptr[5 + k];

if (score > class_score)

{

class_index = k;

class_score = score;

}

}

float box_score = featptr[4];

float confidence = sigmoid(box_score) * sigmoid(class_score);

if (confidence >= prob_threshold)

{

float dx = sigmoid(featptr[0]);

float dy = sigmoid(featptr[1]);

float dw = sigmoid(featptr[2]);

float dh = sigmoid(featptr[3]);

float pb_cx = (dx * 2.f - 0.5f + j) * stride;

float pb_cy = (dy * 2.f - 0.5f + i) * stride;

float pb_w = pow(dw * 2.f, 2) * anchor_w;

float pb_h = pow(dh * 2.f, 2) * anchor_h;

float x0 = pb_cx - pb_w * 0.5f;

float y0 = pb_cy - pb_h * 0.5f;

float x1 = pb_cx + pb_w * 0.5f;

float y1 = pb_cy + pb_h * 0.5f;

Object obj;

obj.x = x0;

obj.y = y0;

obj.w = x1 - x0;

obj.h = y1 - y0;

obj.label = class_index;

obj.prob = confidence;

objects.push_back(obj);

}

}

}

}

}

extern "C" {

void release()

{

fprintf(stderr, "YoloV5Ncnn finished!");

}

int init_handKeyPoint() {

ncnn::Option opt;

opt.lightmode = true;

opt.num_threads = 4;

opt.blob_allocator = &g_blob_pool_allocator;

opt.workspace_allocator = &g_workspace_pool_allocator;

opt.use_packing_layout = true;

fprintf(stderr, "handKeyPoint init!\n");

hand_keyPoints.opt = opt;

int ret_hand = hand_keyPoints.load_param("squeezenet1_1.param"); //squeezenet1_1 resnet_50

if (ret_hand != 0) {

std::cout << "ret_hand:" << ret_hand << std::endl;

}

ret_hand = hand_keyPoints.load_model("squeezenet1_1.bin"); //squeezenet1_1 resnet_50

if (ret_hand != 0) {

std::cout << "ret_hand:" << ret_hand << std::endl;

}

return 0;

}

int init()

{

fprintf(stderr, "YoloV5sNcnn init!\n");

ncnn::Option opt;

opt.lightmode = true;

opt.num_threads = 4;

opt.blob_allocator = &g_blob_pool_allocator;

opt.workspace_allocator = &g_workspace_pool_allocator;

opt.use_packing_layout = true;

yolov5.opt = opt;

yolov5.register_custom_layer("YoloV5Focus", YoloV5Focus_layer_creator);

// init param

{

int ret = yolov5.load_param("yolov5s.param");

if (ret != 0)

{

std::cout << "ret= " << ret << std::endl;

fprintf(stderr, "YoloV5Ncnn, load_param failed");

return -301;

}

}

// init bin

{

int ret = yolov5.load_model("yolov5s.bin");

if (ret != 0)

{

fprintf(stderr, "YoloV5Ncnn, load_model failed");

return -301;

}

}

return 0;

}

int detect(cv::Mat img, std::vector<Object> &objects)

{

double start_time = ncnn::get_current_time();

const int target_size = 320;

const int width = img.cols;

const int height = img.rows;

int w = img.cols;

int h = img.rows;

float scale = 1.f;

if (w > h)

{

scale = (float)target_size / w;

w = target_size;

h = h * scale;

}

else

{

scale = (float)target_size / h;

h = target_size;

w = w * scale;

}

cv::resize(img, img, cv::Size(w, h));

ncnn::Mat in = ncnn::Mat::from_pixels(img.data, ncnn::Mat::PIXEL_BGR2RGB, w, h);

int wpad = (w + 31) / 32 * 32 - w;

int hpad = (h + 31) / 32 * 32 - h;

ncnn::Mat in_pad;

ncnn::copy_make_border(in, in_pad, hpad / 2, hpad - hpad / 2, wpad / 2, wpad - wpad / 2, ncnn::BORDER_CONSTANT, 114.f);

{

const float prob_threshold = 0.4f;

const float nms_threshold = 0.51f;

const float norm_vals[3] = { 1 / 255.f, 1 / 255.f, 1 / 255.f };

in_pad.substract_mean_normalize(0, norm_vals);

ncnn::Extractor ex = yolov5.create_extractor();

ex.input("images", in_pad);

std::vector<Object> proposals;

{

ncnn::Mat out;

ex.extract("output", out);

ncnn::Mat anchors(6);

anchors[0] = 10.f;

anchors[1] = 13.f;

anchors[2] = 16.f;

anchors[3] = 30.f;

anchors[4] = 33.f;

anchors[5] = 23.f;

std::vector<Object> objects8;

generate_proposals(anchors, 8, in_pad, out, prob_threshold, objects8);

proposals.insert(proposals.end(), objects8.begin(), objects8.end());

}

{

ncnn::Mat out;

ex.extract("771", out);

ncnn::Mat anchors(6);

anchors[0] = 30.f;

anchors[1] = 61.f;

anchors[2] = 62.f;

anchors[3] = 45.f;

anchors[4] = 59.f;

anchors[5] = 119.f;

std::vector<Object> objects16;

generate_proposals(anchors, 16, in_pad, out, prob_threshold, objects16);

proposals.insert(proposals.end(), objects16.begin(), objects16.end());

}

{

ncnn::Mat out;

ex.extract("791", out);

ncnn::Mat anchors(6);

anchors[0] = 116.f;

anchors[1] = 90.f;

anchors[2] = 156.f;

anchors[3] = 198.f;

anchors[4] = 373.f;

anchors[5] = 326.f;

std::vector<Object> objects32;

generate_proposals(anchors, 32, in_pad, out, prob_threshold, objects32);

proposals.insert(proposals.end(), objects32.begin(), objects32.end());

}

// sort all proposals by score from highest to lowest

qsort_descent_inplace(proposals);

std::vector<int> picked;

nms_sorted_bboxes(proposals, picked, nms_threshold);

int count = picked.size();

objects.resize(count);

for (int i = 0; i < count; i++)

{

objects[i] = proposals[picked[i]];

float x0 = (objects[i].x - (wpad / 2)) / scale;

float y0 = (objects[i].y - (hpad / 2)) / scale;

float x1 = (objects[i].x + objects[i].w - (wpad / 2)) / scale;

float y1 = (objects[i].y + objects[i].h - (hpad / 2)) / scale;

// clip

x0 = std::max(std::min(x0, (float)(width - 1)), 0.f);

y0 = std::max(std::min(y0, (float)(height - 1)), 0.f);

x1 = std::max(std::min(x1, (float)(width - 1)), 0.f);

y1 = std::max(std::min(y1, (float)(height - 1)), 0.f);

objects[i].x = x0;

objects[i].y = y0;

// Note: from here on, w and h hold the bottom-right corner (x1, y1), not the box width and height

objects[i].w = x1;

objects[i].h = y1;

}

}

return 0;

}

}

static const char* class_names[] = {"hand"};

void draw_face_box(cv::Mat& bgr, std::vector<Object> object)

{

for (int i = 0; i < object.size(); i++)

{

const auto obj = object[i];

cv::rectangle(bgr, cv::Point(obj.x, obj.y), cv::Point(obj.w, obj.h), cv::Scalar(0, 255, 0), 3, 8, 0);

std::cout << "label:" << class_names[obj.label] << std::endl;

string emoji_path = "emoji\\" + string(class_names[obj.label]) + ".png";

cv::Mat logo = cv::imread(emoji_path);

if (logo.empty()) {

std::cout << "imread logo failed!!!" << std::endl;

return;

}

resize(logo, logo, cv::Size(80, 80));

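// cv::Mat::operator() takes the row (y) range first, then the column (x) range

cv::Mat imageROI = bgr(cv::Range((int)obj.y, (int)obj.y + logo.rows), cv::Range((int)obj.x, (int)obj.x + logo.cols));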

logo.copyTo(imageROI);

}

}

static int detect_resnet(const cv::Mat& bgr,std::vector<float>& output) {

ncnn::Mat in = ncnn::Mat::from_pixels_resize(bgr.data,ncnn::Mat::PIXEL_RGB,bgr.cols,bgr.rows,256,256);

const float mean_vals[3] = { 104.f,117.f,123.f };//

const float norm_vals[3] = { 1/255.f, 1/255.f, 1/255.f };//1/255.f

in.substract_mean_normalize(mean_vals, norm_vals); //0 mean_vals, norm_vals

ncnn::Extractor ex = hand_keyPoints.create_extractor();

ex.input("input", in);

ncnn::Mat out;

ex.extract("output",out);

std::cout << "out.w:" << out.w <<" out.h: "<< out.h <<std::endl;

output.resize(out.w);

for (int i = 0; i < out.w; i++) {

output[i] = out[i];

}

return 0;

}

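float vector_2d_angle(cv::Point p1,cv::Point p2) {

//Angle in degrees between two 2D vectors; 65535 marks an invalid result

const float invalid_angle = 65535.f;

double norm = std::sqrt((double)(p1.x*p1.x+p1.y*p1.y))*std::sqrt((double)(p2.x*p2.x+p2.y*p2.y));

//A zero-length vector gives 0/0 = NaN; C++ throws no exception for that, so check explicitly

if (norm == 0.0) {

return invalid_angle;

}

double cos_value = (p1.x*p2.x+p1.y*p2.y)/norm;

//Clamp rounding error so acos stays within its domain

cos_value = std::max(-1.0, std::min(1.0, cos_value));

return (float)(180.0*std::acos(cos_value)/CV_PI);

}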

std::vector<float> hand_angle(std::vector<int>& hand_x,std::vector<int>& hand_y) {

//Obtain the two-dimensional angle of the corresponding hand correlation vector, and determine the gesture according to the angle

float angle = 0.0;

std::vector<float> angle_list;

//-------------------thumb angle

angle = vector_2d_angle(cv::Point((hand_x[0]-hand_x[2]),(hand_y[0]-hand_y[2])),cv::Point((hand_x[3]-hand_x[4]),(hand_y[3]-hand_y[4])));

angle_list.push_back(angle);

//--------------------index finger angle

angle = vector_2d_angle(cv::Point((hand_x[0] - hand_x[6]), (hand_y[0] - hand_y[6])), cv::Point((hand_x[7] - hand_x[8]), (hand_y[7] - hand_y[8])));

angle_list.push_back(angle);

//---------------------middle finger angle

angle = vector_2d_angle(cv::Point((hand_x[0] - hand_x[10]), (hand_y[0] - hand_y[10])), cv::Point((hand_x[11] - hand_x[12]), (hand_y[11] - hand_y[12])));

angle_list.push_back(angle);

//----------------------ring finger angle

angle = vector_2d_angle(cv::Point((hand_x[0] - hand_x[14]), (hand_y[0] - hand_y[14])), cv::Point((hand_x[15] - hand_x[16]), (hand_y[15] - hand_y[16])));

angle_list.push_back(angle);

//-----------------------little finger angle

angle = vector_2d_angle(cv::Point((hand_x[0] - hand_x[18]), (hand_y[0] - hand_y[18])), cv::Point((hand_x[19] - hand_x[20]), (hand_y[19] - hand_y[20])));

angle_list.push_back(angle);

return angle_list;

}

string h_gestrue(std::vector<float>& angle_lists) {

//Define gestures in the way of 2D constraints

//fist five gun love one six three thumbup yeah

float thr_angle = 65.;

float thr_angle_thumb = 53.;

float thr_angle_s = 49.;

string gesture_str;

bool flag = !angle_lists.empty();

for (int i = 0; i < angle_lists.size(); i++) {

if (angle_lists[i] >= 65535.f) {

flag = false; //Any invalid angle (65535) disables gesture judgment

}

}

std::cout << "flag:" << flag << std::endl;

if (flag) {

if (angle_lists[0] > thr_angle_thumb && angle_lists[1] > thr_angle

&& angle_lists[2] > thr_angle && angle_lists[3] > thr_angle

&& angle_lists[4] > thr_angle) {

gesture_str = "fist";

}

else if (angle_lists[0] < thr_angle_s && angle_lists[1] < thr_angle_s

&& angle_lists[2] < thr_angle_s && angle_lists[3] < thr_angle_s

&& angle_lists[4] < thr_angle_s) {

gesture_str = "five";

}

else if(angle_lists[0] < thr_angle_s && angle_lists[1] < thr_angle_s

&& angle_lists[2] > thr_angle && angle_lists[3] > thr_angle

&& angle_lists[4] > thr_angle){

gesture_str = "gun";

}

else if (angle_lists[0] < thr_angle_s && angle_lists[1] < thr_angle_s

&& angle_lists[2] > thr_angle && angle_lists[3] > thr_angle

&& angle_lists[4] < thr_angle_s) {

gesture_str = "love";

}

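//The "gun" branch above already claims the straight-thumb case, so a "< 5" test could never match here

else if (angle_lists[0] > 5 && angle_lists[1] < thr_angle_s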

&& angle_lists[2] > thr_angle && angle_lists[3] > thr_angle

&& angle_lists[4] > thr_angle) {

gesture_str = "one";

}

else if (angle_lists[0] < thr_angle_s && angle_lists[1] > thr_angle

&& angle_lists[2] > thr_angle && angle_lists[3] > thr_angle

&& angle_lists[4] < thr_angle_s) {

gesture_str = "six";

}

else if (angle_lists[0] > thr_angle_thumb && angle_lists[1] < thr_angle_s

&& angle_lists[2] < thr_angle_s && angle_lists[3] < thr_angle_s

&& angle_lists[4] > thr_angle) {

gesture_str = "three";

}

else if (angle_lists[0] < thr_angle_s && angle_lists[1] > thr_angle

&& angle_lists[2] > thr_angle && angle_lists[3] > thr_angle

&& angle_lists[4] > thr_angle) {

gesture_str = "thumbUp";

}

else if (angle_lists[0] > thr_angle_thumb && angle_lists[1] < thr_angle_s

&& angle_lists[2] < thr_angle_s && angle_lists[3] > thr_angle

&& angle_lists[4] > thr_angle) {

gesture_str = "two";

}

}

return gesture_str;

}

int main()

{

Mat frame;

VideoCapture capture(0);

init();

init_handKeyPoint();

while (true)

{

capture >> frame;

if (!frame.empty()) {

std::vector<Object> objects;

detect(frame, objects);

std::vector<float> hand_output;

for (int j = 0; j < objects.size(); ++j) {

cv::Mat handRoi;

int x, y, w, h;

try {

x = (int)objects[j].x < 0 ? 0 : (int)objects[j].x;

y = (int)objects[j].y < 0 ? 0 : (int)objects[j].y;

w = (int)objects[j].w < 0 ? 0 : (int)objects[j].w;

h = (int)objects[j].h < 0 ? 0 : (int)objects[j].h;

if (w > frame.cols){

w = frame.cols;

}

if (h > frame.rows) {

h = frame.rows;

}

}

catch (cv::Exception e) {

}

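//The hand area expands outward by 30 pixels

x = max(0, x - 30);

y = max(0, y - 30);

//w and h hold the bottom-right corner; clamp the ROI so it stays inside the frame

int w_ = min(w - x + 30, frame.cols - x);

int h_ = min(h - y + 30, frame.rows - y);

if (w_ <= 0 || h_ <= 0) continue;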

cv::Rect roi(x,y,w_,h_);

handRoi = frame(roi);

cv::resize(handRoi,handRoi,cv::Size(256,256));

//detect_resnet(handRoi, hand_output);

detect_resnet(handRoi, hand_output);

std::vector<float> angle_lists;

string gesture_string;

std::vector<int> hand_points_x; //

std::vector<int> hand_points_y;

for (int k = 0; k < hand_output.size()/2; k++) {

int x = int(hand_output[k * 2 + 0] * handRoi.cols);//+int(roi.x)-1;

int y = int(hand_output[k * 2 + 1] * handRoi.rows);// +int(roi.y) - 1;

//x1 = x1 < 0 ? abs(x1) : x1;

//x2 = x2 < 0 ? abs(x2) : x2;

hand_points_x.push_back(x);

hand_points_y.push_back(y);

std::cout << "x: " << x << " y: " << y << std::endl;

//The color must be a cv::Scalar; a bare (0, 255, 0) is a comma expression that evaluates to 0

cv::circle(handRoi, cv::Point(x,y), 3, cv::Scalar(0, 255, 0), 3);

}

cv::line(handRoi, cv::Point(hand_points_x[0], hand_points_y[0]), cv::Point(hand_points_x[1], hand_points_y[1]), cv::Scalar(255, 0, 0), 3);

cv::line(handRoi, cv::Point(hand_points_x[1], hand_points_y[1]), cv::Point(hand_points_x[2], hand_points_y[2]), cv::Scalar(255, 0, 0), 3);

cv::line(handRoi, cv::Point(hand_points_x[2], hand_points_y[2]), cv::Point(hand_points_x[3], hand_points_y[3]), cv::Scalar(255, 0, 0), 3);

cv::line(handRoi, cv::Point(hand_points_x[3], hand_points_y[3]), cv::Point(hand_points_x[4], hand_points_y[4]), cv::Scalar(255, 0, 0), 3);

cv::line(handRoi, cv::Point(hand_points_x[0], hand_points_y[0]), cv::Point(hand_points_x[5], hand_points_y[5]), cv::Scalar(0, 255, 0), 3);

cv::line(handRoi, cv::Point(hand_points_x[5], hand_points_y[5]), cv::Point(hand_points_x[6], hand_points_y[6]), cv::Scalar(0, 255, 0), 3);

cv::line(handRoi, cv::Point(hand_points_x[6], hand_points_y[6]), cv::Point(hand_points_x[7], hand_points_y[7]), cv::Scalar(0, 255, 0), 3);

cv::line(handRoi, cv::Point(hand_points_x[7], hand_points_y[7]), cv::Point(hand_points_x[8], hand_points_y[8]), cv::Scalar(0, 255, 0), 3);

cv::line(handRoi, cv::Point(hand_points_x[0], hand_points_y[0]), cv::Point(hand_points_x[9], hand_points_y[9]), cv::Scalar(0, 0, 255), 3);

cv::line(handRoi, cv::Point(hand_points_x[9], hand_points_y[9]), cv::Point(hand_points_x[10], hand_points_y[10]), cv::Scalar(0, 0, 255), 3);

cv::line(handRoi, cv::Point(hand_points_x[10], hand_points_y[10]), cv::Point(hand_points_x[11], hand_points_y[11]), cv::Scalar(0, 0, 255), 3);

cv::line(handRoi, cv::Point(hand_points_x[11], hand_points_y[11]), cv::Point(hand_points_x[12], hand_points_y[12]), cv::Scalar(0, 0, 255), 3);

cv::line(handRoi, cv::Point(hand_points_x[0], hand_points_y[0]), cv::Point(hand_points_x[13], hand_points_y[13]), cv::Scalar(255, 0, 0), 3);

cv::line(handRoi, cv::Point(hand_points_x[13], hand_points_y[13]), cv::Point(hand_points_x[14], hand_points_y[14]), cv::Scalar(255, 0, 0), 3);

cv::line(handRoi, cv::Point(hand_points_x[14], hand_points_y[14]), cv::Point(hand_points_x[15], hand_points_y[15]), cv::Scalar(255, 0, 0), 3);

cv::line(handRoi, cv::Point(hand_points_x[15], hand_points_y[15]), cv::Point(hand_points_x[16], hand_points_y[16]), cv::Scalar(255, 0, 0), 3);

cv::line(handRoi, cv::Point(hand_points_x[0], hand_points_y[0]), cv::Point(hand_points_x[17], hand_points_y[17]), cv::Scalar(0, 255, 0), 3);

cv::line(handRoi, cv::Point(hand_points_x[17], hand_points_y[17]), cv::Point(hand_points_x[18], hand_points_y[18]), cv::Scalar(0, 255, 0), 3);

cv::line(handRoi, cv::Point(hand_points_x[18], hand_points_y[18]), cv::Point(hand_points_x[19], hand_points_y[19]), cv::Scalar(0, 255, 0), 3);

cv::line(handRoi, cv::Point(hand_points_x[19], hand_points_y[19]), cv::Point(hand_points_x[20], hand_points_y[20]), cv::Scalar(0, 255, 0), 3);

angle_lists = hand_angle(hand_points_x, hand_points_y);

gesture_string = h_gestrue(angle_lists);

std::cout << "gesture_string:" << gesture_string << std::endl;

cv::putText(handRoi,gesture_string,cv::Point(30,30),cv::FONT_HERSHEY_COMPLEX,1, cv::Scalar(0, 255, 255), 1, 1, 0);

cv::imshow("handRoi", handRoi);

cv::waitKey(10);

angle_lists.clear();

hand_points_x.clear();

hand_points_y.clear();

}

}

if (cv::waitKey(20) == 'q')

break;

}

capture.release();

return 0;

}

For more information, please visit the Graviti official website.