Data processing with python can be roughly divided into the following three parts:

1, Data acquisition: generally, there are open data sets, web crawlers, self-organizing and other ways.

2, Data processing: including data preprocessing, data search / filtering / sorting / statistics and other operations.

3, Data display: including visual presentation of graphs and tables.

The following uses a key word cloud map of movie review as an example to demonstrate the whole process of data processing. In this example, we will use the following Toolkit:

import pandas as pd # Data processing tool pandas import sqlite3 # sql database tools import jieba # Text segmentation tool import pyecharts as pec # pyecharts: a data visualization tool from pyecharts.charts import WordCloud # Import WordCloud cloud cloud module from pyecharts.render import make_snapshot # Import output picture tool from snapshot_selenium import snapshot # Rendering pictures with snapshot selenium

1, Data acquisition.

We can download it to Douban's database, which contains three tables: comment, movie and movie Chinese. See the blog for details Get the movie name and average score by querying the database through movie ID We

We use sql statements to read the required data table and store it in comment_data. You can refer to the blog python's basic operations on sql database files.

conn = sqlite3.connect('data/douban_comment_data.db') comment_data = pd.read_sql_query('select * from comment;',conn)

2, Data processing

Here we write a function get comment key words (movie id, count) to process the data in the database. The parameters include the movie id and the number of popular key counts that need to be obtained. Here we use the screening technology of pandas Technology (refer to the blog Data frame data filtering in python)

String splicing technology (refer to blog Using "+" to complete string splicing in python ), jieba word segmentation technology (refer to the blog: Data analysis based on jieba module)

Wait.

def get_comment_key_words(movie_id,count): comment_list = comment_data[comment_data['MOVIEID']==movie_id]['CONTENT'] comment_str = "" for comment in comment_list: comment_str += comment + '\n' seg_list = list(jieba.cut(comment_str)) keywords_counts = pd.Series(seg_list) keywords_counts = keywords_counts[keywords_counts.str.len()>1]#Note that you need to filter before value_count() filtered_index = ~keywords_counts.str.contains(filter_condition) keywords_counts = keywords_counts[filtered_index] keywords_counts = keywords_counts.value_counts() keywords_counts = keywords_counts[:count] return keywords_counts

Note that we use the filter ﹣ words technology to filter out high-frequency meaningless words. First of all, we need to create a list containing a series of phrases to be filtered. Then use the. join() method of string to join these filter phrases with or operator "|" to form an or expression, and then use the. str.contains() method to get the data index value that meets these conditions, and then reverse it to get the filtered index.

FILTER_WORDS = ['True one','Where?','Ignorance','Two','such','that','Yes?','If', 'yes','Of','this','One','such','time','What','\n','One part','This part','No,', 'Also','because','See only','What is it?','Original','Afraid to','How','Never','Wen Yan','That monster','A sound', 'come out','...','But say','Film','Sure','Must not','Unable','such','Probably','Last','We','thing', 'Now','That','therefore','always','perhaps','Film','they','Can not','Here','today',"Think",'is','feel'] filter_condition = '|'.join(FILTER_WORDS)

filtered_index = ~keywords_counts.str.contains(filter_condition) keywords_counts = keywords_counts[filtered_index]

It should be noted that we need to perform the value "count operation after completing the filtering operation (refer to the blog) Use the data. Value "counts() method to count the duplicate elements in the Series array )And then we can get the data we need by slicing off the top count words.

3, Visualization of data.

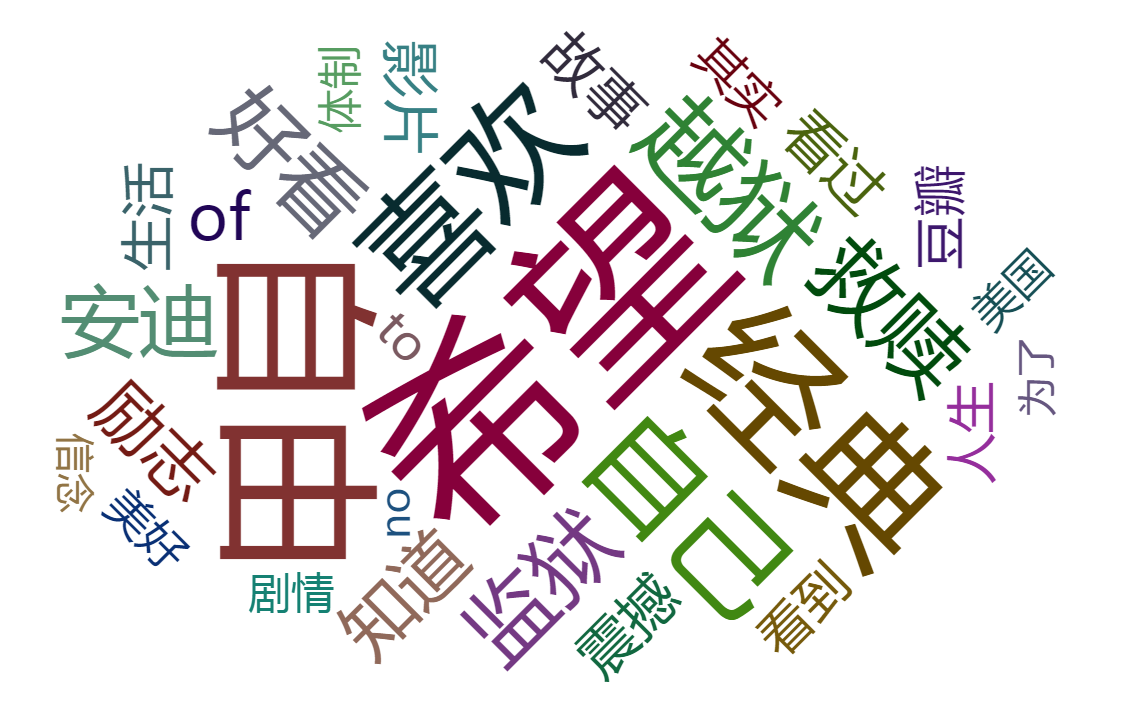

Here we use the way of cloud map to show the principle, refer to the blog Visual display of word cloud based on pyechart . html will be generated by default and can be seen in the browser. We use make ﹣ snapshot technology to directly generate png pictures (refer to the blog) Using pyechart to draw png picture directly in python)

Finally, we run the code to get the png file in the project directory. After opening, we get the top 30 hot words of the movie "Shawshank Redemption" with the movie ID of "1292052".