About kolla ansible

kolla's mission is to provide production-ready, out-of-the-box deployment of the OpenStack cloud platform. Its basic idea is that every service is a container: all services run on docker, and each container runs only one service (process), so that docker is used at the finest granularity.

The first step is to build the docker images, and the second step is to orchestrate the deployment. The kolla project is therefore split into two sub-projects: kolla and kolla-ansible.

Kolla ansible project

https://github.com/openstack/kolla-ansible

kolla project

https://tarballs.opendev.org/openstack/kolla/

dockerhub image address

https://hub.docker.com/u/kolla/

Installation environment preparation

Official deployment documents:

https://docs.openstack.org/kolla-ansible/train/user/quickstart.html

kolla installation node requirements:

- 2 network interfaces

- 8GB main memory

- 40GB disk space
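The minimums above can be checked on the target node before starting. The following is a minimal sketch, not part of kolla-ansible: the function names are hypothetical, and the 7500 MiB threshold in the usage comment is an assumption, chosen because the kernel reports slightly less than the nominal 8GB in /proc/meminfo.

```shell
#!/usr/bin/env bash
# Hypothetical preflight helpers (not part of kolla-ansible).

# Succeeds when the observed value is at least the required minimum.
meets_min() {
    local actual=$1 required=$2
    [ "$actual" -ge "$required" ]
}

# Counts entries in /sys/class/net other than the loopback device.
# Note: virtual devices (docker0, veth*, bridges) are counted too.
count_interfaces() {
    local n=0 dev
    for dev in /sys/class/net/*; do
        [ -e "$dev" ] || continue
        if [ "${dev##*/}" != "lo" ]; then
            n=$((n + 1))
        fi
    done
    echo "$n"
}

# Total memory in MiB from the MemTotal line of /proc/meminfo (reported in kB).
total_mem_mib() {
    awk '/^MemTotal:/ { print int($2 / 1024) }' /proc/meminfo
}

# Prints one PASS/FAIL line for a requirement.
report() {
    local label=$1 actual=$2 required=$3
    if meets_min "$actual" "$required"; then
        echo "PASS $label: $actual (need >= $required)"
    else
        echo "FAIL $label: $actual (need >= $required)"
    fi
}

# Example, run on the target node:
#   report "network interfaces" "$(count_interfaces)" 2
#   report "memory (MiB)" "$(total_mem_mib)" 7500
```

Run the check before docker is installed; afterwards docker0 and veth devices inflate the interface count.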

In this deployment, an all-in-one single-node Train release is deployed on a CentOS 7.8 minimal node, which serves as the control node, compute node, network node and cinder storage node, as well as the kolla-ansible deployment node.

kolla requires the target machine to have two network cards, so a new network card, ens37, is added in VMware Workstation:

- ens33: NAT mode. Carries the management network; the tenant network reuses it instead of getting a separate network card. A static IP is configured as usual.

- ens37: bridged mode. Carries the external network and needs no IP address. It is bound to neutron's br-ex, and instances reach the external network through it.

Network card configuration, most of which are default parameters:

[root@kolla ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens33
TYPE=Ethernet
PROXY_METHOD=none
BROWSER_ONLY=no
BOOTPROTO=static
DEFROUTE=yes
IPV4_FAILURE_FATAL=no
IPV6INIT=yes
IPV6_AUTOCONF=yes
IPV6_DEFROUTE=yes
IPV6_FAILURE_FATAL=no
IPV6_ADDR_GEN_MODE=stable-privacy
NAME=ens33
UUID=a41355ae-f475-39d7-9e61-eb5f8f19f881
DEVICE=ens33
ONBOOT=yes
IPADDR=192.168.93.30
PREFIX=24
GATEWAY=192.168.93.2
DNS1=114.114.114.114
DNS2=8.8.8.8
IPV6_PRIVACY=no
[root@kolla ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens37
TYPE=Ethernet
PROXY_METHOD=none
BROWSER_ONLY=no
BOOTPROTO=none
DEFROUTE=yes
IPV4_FAILURE_FATAL=no
NAME=ens37
UUID=553a2dd0-b53e-417e-98a9-9a7a6a44a53c
DEVICE=ens37
ONBOOT=yes

Enabling cinder requires an additional disk. Here a disk sdb is added and turned into a PV and a VG, so that LVM serves as the back-end storage of cinder:

pvcreate /dev/sdb
vgcreate cinder-volumes /dev/sdb

Note that the volume group is named cinder-volumes, which matches the default in globals.yml:

[root@kolla ~]# cat /etc/kolla/globals.yml | grep cinder_volume_group
#cinder_volume_group: "cinder-volumes"
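If the volume group is given a different name, globals.yml has to say so. The sketch below shows one way to read the effective name from a globals.yml-style file; `cinder_vg_name` is a hypothetical helper, and it only handles simple one-line `key: "value"` entries, not general YAML.

```shell
#!/usr/bin/env bash
# Prints the value of cinder_volume_group from a globals.yml-style file.
# Falls back to "cinder-volumes" (the commented-out default shown above)
# when the key is absent or commented out. If the key appears more than
# once, the last occurrence is reported.
cinder_vg_name() {
    local file=$1 value
    value=$(awk '/^cinder_volume_group:/ {
        sub(/^cinder_volume_group:[ \t]*/, "")   # drop the key
        gsub(/"/, "")                            # drop double quotes
        sub(/[ \t]+$/, "")                       # drop trailing blanks
        v = $0
    } END { print v }' "$file")
    echo "${value:-cinder-volumes}"
}

# Example on the deployment host (requires the LVM tools):
#   vgs --noheadings -o vg_name | grep -qw "$(cinder_vg_name /etc/kolla/globals.yml)" \
#       && echo "volume group found"
```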

A first attempt at kolla-ansible may run into many errors. The deployment process below differs in places from the official one, but it avoids most of the problems.

Deploy kolla ansible

Configure the host name. During the kolla prechecks, rabbitmq may need to be able to resolve the host name

hostnamectl set-hostname kolla

Install dependencies

yum install -y python-devel libffi-devel gcc openssl-devel libselinux-python

Install Ansible. Pay attention to the version; the default 2.9 should meet the requirements

yum install -y ansible

Configure the Alibaba Cloud pip mirror, otherwise pip installation will be slow

mkdir ~/.pip
cat > ~/.pip/pip.conf << EOF
[global]
trusted-host=mirrors.aliyun.com
index-url=https://mirrors.aliyun.com/pypi/simple/
EOF

Install kolla ansible

Correspondence between kolla versions and openstack versions: https://releases.openstack.org/teams/kolla.html

yum install -y epel-release
yum install -y python-pip
pip install -U pip
pip install kolla-ansible==9.1.0 --ignore-installed PyYAML

Copy the kolla-ansible configuration files to the current environment

mkdir -p /etc/kolla
chown $USER:$USER /etc/kolla
## Copy globals.yml and passwords.yml
cp -r /usr/share/kolla-ansible/etc_examples/kolla/* /etc/kolla
## Copy all-in-one and multinode inventory files
cp /usr/share/kolla-ansible/ansible/inventory/* .

Modify the ansible configuration file

$ vim /etc/ansible/ansible.cfg
[defaults]
host_key_checking=False
pipelining=True
forks=100

By default, there are two inventory files, all-in-one and multinode. Here all-in-one is used to plan the cluster roles; every role maps to the same node, kolla. Hostname resolution for kolla is added automatically during the pre-configuration step (bootstrap-servers).

# sed -i -E 's#localhost[[:space:]]+ansible_connection=local#kolla#g' all-in-one
# View the modified configuration. Other defaults are OK
# cat all-in-one | more
[control]
kolla
[network]
kolla
[compute]
kolla
[storage]
kolla
[monitoring]
kolla
[deployment]
kolla
...
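To confirm that the substitution took effect, it can help to print the hosts of a single group. This is only a sketch for simple INI inventories like the one above; `hosts_in_group` is a hypothetical helper and does not resolve `:children` groups or variables.

```shell
#!/usr/bin/env bash
# Prints the host names listed directly under [GROUP] in an INI-style inventory.
hosts_in_group() {
    local group=$1 file=$2
    awk -v g="[$group]" '
        /^\[/ { in_group = ($0 == g); next }          # section header
        in_group && NF && $1 !~ /^#/ { print $1 }     # first word of each entry
    ' "$file"
}

# Example:
#   hosts_in_group control all-in-one
```

For a full view including child groups, `ansible-inventory -i all-in-one --graph` is the tool that ships with Ansible.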

Configure passwordless ssh

ssh-keygen
ssh-copy-id root@kolla

Check whether the inventory configuration is correct by executing:

ansible -i all-in-one all -m ping

Generate passwords for the openstack components

kolla-genpwd

Modify keystone_admin_password. It can be changed to a custom password to make the later horizon login easier. Here it is changed to kolla.

$ sed -i 's#keystone_admin_password:.*#keystone_admin_password: kolla#g' /etc/kolla/passwords.yml
$ cat /etc/kolla/passwords.yml | grep keystone_admin_password
keystone_admin_password: kolla

Modify the global configuration file globals.yml, which controls which components are installed and how they are configured. Because every entry in it is commented out, the settings can be appended directly here, or the corresponding items can be found and modified one by one.

cp /etc/kolla/globals.yml{,.bak}
cat >> /etc/kolla/globals.yml <<EOF
#version
kolla_base_distro: "centos"
kolla_install_type: "binary"
openstack_release: "train"
#vip
kolla_internal_vip_address: "192.168.93.100"
#docker registry
docker_registry: "registry.cn-shenzhen.aliyuncs.com"
docker_namespace: "kollaimage"
#network
network_interface: "ens33"
neutron_external_interface: "ens37"
neutron_plugin_agent: "openvswitch"
enable_neutron_provider_networks: "yes"
#storage
enable_cinder: "yes"
enable_cinder_backend_lvm: "yes"
#virt_type
nova_compute_virt_type: "qemu"
EOF

Parameter description:

- kolla_base_distro: kolla images are built on different Linux distributions. The host uses centos, so the centos-based docker images are used here.

- kolla_install_type: kolla images are built either from binary packages or from source code. A binary build is sufficient for actual deployment.

- openstack_release: the openstack version can be chosen, and the images of the corresponding version are pulled from dockerhub.

- kolla_internal_vip_address: even a single-node kolla deployment enables haproxy and keepalived, which makes later expansion to a highly available cluster easier. The VIP must be an unused address on the network_interface network.

- docker_registry: by default, images are pulled from dockerhub. If the network is poor, a personal Alibaba Cloud registry can be used instead, but it only holds the images of the train and ussuri versions.

- docker_namespace: the namespace that holds the kolla images in the Alibaba Cloud registry. On dockerhub it is kolla.

- network_interface: network card of the management network

- neutron_external_interface: network card of the external network

- neutron_plugin_agent: openvswitch is the default

- enable_neutron_provider_networks: enables external (provider) networks

- enable_cinder: enables cinder

- enable_cinder_backend_lvm: uses lvm as the cinder back-end store

- nova_compute_virt_type: set to qemu because this node is itself a VMware virtual machine; a deployment on physical hardware keeps the default, kvm.

Some of the parameters above already have the shown value as their default, so they do not need to be set explicitly, for example neutron_plugin_agent.
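Because the settings are appended with `cat >>`, running that step twice leaves duplicate keys in globals.yml. The following sketch lists the active (uncommented) lines and any top-level key that appears more than once; both function names are hypothetical, and the key detection assumes simple one-line `key: value` entries.

```shell
#!/usr/bin/env bash
# Prints the lines of a file that are neither blank nor comments.
active_settings() {
    grep -Ev '^[[:space:]]*(#|$)' "$1" || true   # no active lines is not an error
}

# Prints each top-level key that occurs more than once among the active lines.
duplicate_keys() {
    active_settings "$1" | awk -F: '/^[A-Za-z_]/ { print $1 }' | sort | uniq -d
}

# Example:
#   active_settings /etc/kolla/globals.yml
#   duplicate_keys /etc/kolla/globals.yml
```

If `duplicate_keys` prints anything, restoring the `.bak` copy and appending the block once is the simplest fix.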

Some parameters can also be changed after deployment, for example nova_compute_virt_type: find the configuration file, edit it, and restart the corresponding component container:

[root@kolla ~]# cat /etc/kolla/nova-compute/nova.conf |grep virt_type
#virt_type = kvm
virt_type = qemu
[root@kolla ~]# docker restart nova_compute
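Whether kvm is usable depends on the CPU exposing hardware virtualization, which shows up as the vmx (Intel) or svm (AMD) flag in /proc/cpuinfo. A small sketch of that check follows; `suggest_virt_type` is a hypothetical helper, and its output is only a hint, not something kolla-ansible reads.

```shell
#!/usr/bin/env bash
# Prints "kvm" when the CPU flags include vmx or svm, otherwise "qemu".
# The optional argument is a cpuinfo-style file (defaults to /proc/cpuinfo).
suggest_virt_type() {
    local cpuinfo=${1:-/proc/cpuinfo}
    if grep -Eqw 'vmx|svm' "$cpuinfo"; then
        echo kvm
    else
        echo qemu
    fi
}

# Example:
#   suggest_virt_type
```

Inside a VMware guest the flags only appear when nested virtualization is enabled for the virtual machine.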

Deploy openstack components

By default, kolla installs docker automatically, but it uses the official repository, which may be slow. Here docker is installed manually in advance.

# Install dependencies
yum install -y yum-utils device-mapper-persistent-data lvm2
# Configure the Alibaba Cloud yum repository
yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
# Install docker
yum -y install docker-ce
# Start the docker service
systemctl daemon-reload;systemctl restart docker

Deploy openstack

# Pre-configuration: installs docker and the docker sdk, disables the firewall, configures time synchronization, etc.
kolla-ansible -i ./all-in-one bootstrap-servers
# Pre-deployment environment check
kolla-ansible -i ./all-in-one prechecks
# Pull the images. This step can be omitted; deploy pulls them automatically
kolla-ansible -i ./all-in-one pull
# Perform the actual deployment: pull the images and run the corresponding component containers
kolla-ansible -i ./all-in-one deploy
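The four stages above are meant to run in order, and a later stage is pointless if an earlier one failed. A minimal wrapper that stops at the first failing stage could look like this; `run_stages`, `KOLLA_CMD` and `INVENTORY` are names invented for this sketch.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper; KOLLA_CMD and INVENTORY can be overridden from the environment.
KOLLA_CMD=${KOLLA_CMD:-kolla-ansible}
INVENTORY=${INVENTORY:-./all-in-one}

# Runs each given stage in order and stops at the first one that fails.
run_stages() {
    local stage
    for stage in "$@"; do
        echo "==> $stage"
        "$KOLLA_CMD" -i "$INVENTORY" "$stage" || {
            echo "stage '$stage' failed; stopping" >&2
            return 1
        }
    done
}

# Example, in the order used above:
#   run_stages bootstrap-servers prechecks pull deploy
```

After fixing the cause of a failure, the remaining stages can be re-run by passing only those stage names.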

If the steps above finish without errors or interruption, the deployment succeeded. All openstack components run as containers. Check the containers:

[root@kolla ~]# docker ps -a CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES 325c17a52c79 registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-chrony:train "dumb-init --single-..." 36 hours ago Up 25 hours chrony 6218d98755ee registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-cron:train "dumb-init --single-..." 36 hours ago Up 25 hours cron 02b6598c1089 registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-kolla-toolbox:train "dumb-init --single-..." 36 hours ago Up 25 hours kolla_toolbox 8572e445abad registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-fluentd:train "dumb-init --single-..." 36 hours ago Up 25 hours fluentd f11a103c5ade registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-openstack-base:train "dumb-init --single-..." 44 hours ago Up 25 hours client 5c91def3c963 registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-horizon:train "dumb-init --single-..." 44 hours ago Up 25 hours horizon e024bd4f5dd3 registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-heat-engine:train "dumb-init --single-..." 44 hours ago Up 25 hours heat_engine 2d1491bd9e1a registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-heat-api-cfn:train "dumb-init --single-..." 44 hours ago Up 25 hours heat_api_cfn eeefcfb31a61 registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-heat-api:train "dumb-init --single-..." 44 hours ago Up 25 hours heat_api 9b51b53448fc registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-neutron-metadata-agent:train "dumb-init --single-..." 44 hours ago Up 25 hours neutron_metadata_agent 9f88a6c0cf31 registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-neutron-l3-agent:train "dumb-init --single-..." 44 hours ago Up 25 hours neutron_l3_agent a419cb3270a6 registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-neutron-dhcp-agent:train "dumb-init --single-..." 
44 hours ago Up 25 hours neutron_dhcp_agent 959f6faba972 registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-neutron-openvswitch-agent:train "dumb-init --single-..." 44 hours ago Up 25 hours neutron_openvswitch_agent cc1b081cf876 registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-neutron-server:train "dumb-init --single-..." 44 hours ago Up 25 hours neutron_server eea1a87feb43 registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-openvswitch-vswitchd:train "dumb-init --single-..." 44 hours ago Up 25 hours openvswitch_vswitchd 376f81bf75a2 registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-openvswitch-db-server:train "dumb-init --single-..." 44 hours ago Up 25 hours openvswitch_db c68fd9a92d73 registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-nova-compute:train "dumb-init --single-..." 44 hours ago Up 25 hours nova_compute 2492e2a32c80 registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-nova-libvirt:train "dumb-init --single-..." 44 hours ago Up 25 hours nova_libvirt 3802d199b29f registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-nova-ssh:train "dumb-init --single-..." 44 hours ago Up 25 hours nova_ssh 1281c311ecd4 registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-nova-novncproxy:train "dumb-init --single-..." 44 hours ago Up 25 hours nova_novncproxy 2e8c8478116b registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-nova-conductor:train "dumb-init --single-..." 44 hours ago Up 25 hours nova_conductor 950feb59b549 registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-nova-api:train "dumb-init --single-..." 44 hours ago Up 25 hours nova_api 49497e664922 registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-nova-scheduler:train "dumb-init --single-..." 44 hours ago Up 25 hours nova_scheduler f5eb37b48f7d registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-placement-api:train "dumb-init --single-..." 
44 hours ago Up 25 hours placement_api 54cd0e3be101 registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-cinder-backup:train "dumb-init --single-..." 44 hours ago Up 25 hours cinder_backup b4efa4449e7f registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-cinder-volume:train "dumb-init --single-..." 44 hours ago Up 25 hours cinder_volume 159b669d2fd3 registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-cinder-scheduler:train "dumb-init --single-..." 44 hours ago Up 25 hours cinder_scheduler 9fc7e6a4cb25 registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-cinder-api:train "dumb-init --single-..." 44 hours ago Up 25 hours cinder_api b3f8f711f2b1 registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-glance-api:train "dumb-init --single-..." 44 hours ago Up 25 hours glance_api 760e92d698e2 registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-keystone-fernet:train "dumb-init --single-..." 44 hours ago Up 25 hours keystone_fernet 95f235c4ac10 registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-keystone-ssh:train "dumb-init --single-..." 44 hours ago Up 25 hours keystone_ssh 03306334ce19 registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-keystone:train "dumb-init --single-..." 44 hours ago Up 25 hours keystone 5173d4191567 registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-rabbitmq:train "dumb-init --single-..." 44 hours ago Up 25 hours rabbitmq eb6bca26f6ce registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-tgtd:train "dumb-init --single-..." 44 hours ago Up 25 hours tgtd 79fac2ca1b19 registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-iscsid:train "dumb-init --single-..." 44 hours ago Up 25 hours iscsid 4a3fcefc7009 registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-memcached:train "dumb-init --single-..." 44 hours ago Up 25 hours memcached 0773eaf446e4 registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-mariadb:train "dumb-init -- kolla_..." 
44 hours ago Up 25 hours mariadb 77f0beaa28e5 registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-keepalived:train "dumb-init --single-..." 44 hours ago Up 25 hours keepalived b02b744d2da3 registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-haproxy:train "dumb-init --single-..." 44 hours ago Up 25 hours haproxy

Confirm that there is no container with abnormal status such as Exited

[root@kolla ~]# docker ps -a | grep -v Up
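The grep above also prints the header line of `docker ps`. As a sketch of a variant that prints only the names of containers that are not up, the output can be produced with `--format` and filtered; `not_running` is a hypothetical helper, and the `|` separator is an arbitrary choice for this example.

```shell
#!/usr/bin/env bash
# Reads "name|status" lines on stdin and prints the names whose status
# does not start with "Up" (for example Exited, Restarting, Created).
not_running() {
    awk -F'|' '$2 !~ /^Up/ { print $1 }'
}

# Example on the kolla node:
#   docker ps -a --format '{{.Names}}|{{.Status}}' | not_running
```

Empty output means every container reports an Up status.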

Count the containers in this deployment (the figure from wc -l includes the header line of docker ps):

[root@localhost kolla-env]# docker ps -a | wc -l
39

Check the pulled images and find that the number of images is the same as the number of containers.

[root@kolla ~]# docker images REPOSITORY TAG IMAGE ID CREATED SIZE registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-glance-api train aec757c5908a 2 days ago 1.05GB registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-keystone-ssh train 2c95619322ed 2 days ago 1.04GB registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-keystone-fernet train 918564aa9c01 2 days ago 1.04GB registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-keystone train 8d5f3ca2a73c 2 days ago 1.04GB registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-cinder-api train 500910236e85 2 days ago 1.19GB registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-cinder-volume train f76ebe1e133d 2 days ago 1.14GB registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-cinder-backup train 19342786a92c 2 days ago 1.13GB registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-cinder-scheduler train 920630f0ea6c 2 days ago 1.11GB registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-heat-api train 517f6a0643ee 2 days ago 1.07GB registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-heat-api-cfn train 2d46b91d44ef 2 days ago 1.07GB registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-heat-engine train ab570c135dbc 2 days ago 1.07GB registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-horizon train a00ddb359ea5 2 days ago 1.2GB registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-fluentd train 6a5b7be2551b 2 days ago 697MB registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-cron train 0f784cd532e2 2 days ago 408MB registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-chrony train 374dabc62868 2 days ago 408MB registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-iscsid train 575873f9e4b8 2 days ago 413MB registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-haproxy train 9cf840548535 2 days ago 433MB registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-keepalived train b2a20ccd7d6a 2 days ago 414MB 
registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-openstack-base train c35001fb182b 3 days ago 920MB registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-nova-compute train 93be43a73a3e 5 days ago 1.85GB registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-placement-api train 26f8c88c3c50 5 days ago 1.05GB registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-nova-api train 2a9d3ea95254 5 days ago 1.08GB registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-nova-novncproxy train e6acfbe47b2b 5 days ago 1.05GB registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-nova-conductor train 836a9f775263 5 days ago 1.05GB registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-nova-ssh train f89a813f3902 5 days ago 1.05GB registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-nova-scheduler train 8061eaa33d21 5 days ago 1.05GB registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-openvswitch-vswitchd train 2b780c8075c6 5 days ago 425MB registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-openvswitch-db-server train 86168147b086 5 days ago 425MB registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-rabbitmq train 19cd34b4f503 5 days ago 487MB registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-mariadb train 882472a192b5 6 days ago 593MB registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-neutron-dhcp-agent train a007b53f0507 7 days ago 1.04GB registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-neutron-metadata-agent train 8bcff22221bd 7 days ago 1.04GB registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-nova-libvirt train 539673da5c25 7 days ago 1.25GB registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-kolla-toolbox train a18a474c65ea 7 days ago 842MB registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-tgtd train ad5380187ca9 7 days ago 383MB registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-memcached train 1fcf18645254 7 days ago 408MB 
registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-neutron-server train 539cfb7c1fd2 8 days ago 1.08GB registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-neutron-openvswitch-agent train 95113c0f5b8c 8 days ago 1.08GB registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-neutron-l3-agent train fbe9385f49ca 8 days ago 1.08GB

View the volume group used by cinder; the lvm thin pool was created automatically

[root@kolla ~]# lsblk | grep cinder
├─cinder--volumes-cinder--volumes--pool_tmeta 253:3 0 20M 0 lvm
│ └─cinder--volumes-cinder--volumes--pool 253:5 0 19G 0 lvm
└─cinder--volumes-cinder--volumes--pool_tdata 253:4 0 19G 0 lvm
  └─cinder--volumes-cinder--volumes--pool 253:5 0 19G 0 lvm
[root@kolla ~]# lvs | grep cinder
cinder-volumes-pool cinder-volumes twi-a-tz-- 19.00g 0.00 10.55

View network card status

[root@kolla ~]# ip a 1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000 link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00 inet 127.0.0.1/8 scope host lo valid_lft forever preferred_lft forever inet6 ::1/128 scope host valid_lft forever preferred_lft forever 2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000 link/ether 00:0c:29:0c:4e:fe brd ff:ff:ff:ff:ff:ff inet 192.168.93.30/24 brd 192.168.93.255 scope global noprefixroute ens33 valid_lft forever preferred_lft forever inet 192.168.93.100/32 scope global ens33 valid_lft forever preferred_lft forever inet6 fe80::7a6c:d06c:ee49:4cd5/64 scope link noprefixroute valid_lft forever preferred_lft forever 3: ens37: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master ovs-system state UP group default qlen 1000 link/ether 00:0c:29:0c:4e:08 brd ff:ff:ff:ff:ff:ff inet6 fe80::20c:29ff:fe0c:4e08/64 scope link valid_lft forever preferred_lft forever 4: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default link/ether 02:42:2a:d9:93:52 brd ff:ff:ff:ff:ff:ff inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0 valid_lft forever preferred_lft forever inet6 fe80::42:2aff:fed9:9352/64 scope link valid_lft forever preferred_lft forever 6: veth0c46c6a@if5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default link/ether 1a:ce:d7:61:d0:cc brd ff:ff:ff:ff:ff:ff link-netnsid 0 inet6 fe80::18ce:d7ff:fe61:d0cc/64 scope link valid_lft forever preferred_lft forever 7: ovs-system: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000 link/ether de:e5:b7:4d:e8:b8 brd ff:ff:ff:ff:ff:ff 11: br-int: <BROADCAST,MULTICAST> mtu 1450 qdisc noop state DOWN group default qlen 1000 link/ether 52:14:05:ba:ce:4c brd ff:ff:ff:ff:ff:ff 13: br-tun: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000 link/ether 
d2:5b:76:f5:01:49 brd ff:ff:ff:ff:ff:ff 14: br-ex: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000 link/ether 00:0c:29:0c:4e:08 brd ff:ff:ff:ff:ff:ff 22: qbr2749f64b-1f: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default qlen 1000 link/ether 3a:0d:ad:56:9d:9d brd ff:ff:ff:ff:ff:ff 23: qvo2749f64b-1f@qvb2749f64b-1f: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master ovs-system state UP group default qlen 1000 link/ether c2:c5:8b:a6:72:8b brd ff:ff:ff:ff:ff:ff inet6 fe80::c0c5:8bff:fea6:728b/64 scope link valid_lft forever preferred_lft forever 24: qvb2749f64b-1f@qvo2749f64b-1f: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master qbr2749f64b-1f state UP group default qlen 1000 link/ether 3a:0d:ad:56:9d:9d brd ff:ff:ff:ff:ff:ff inet6 fe80::380d:adff:fe56:9d9d/64 scope link valid_lft forever preferred_lft forever 25: tap2749f64b-1f: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc pfifo_fast master qbr2749f64b-1f state UNKNOWN group default qlen 1000 link/ether fe:16:3e:94:b5:71 brd ff:ff:ff:ff:ff:ff inet6 fe80::fc16:3eff:fe94:b571/64 scope link valid_lft forever preferred_lft forever 26: qbr0a14e63d-2e: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default qlen 1000 link/ether 02:f9:32:c0:f4:b7 brd ff:ff:ff:ff:ff:ff 27: qvo0a14e63d-2e@qvb0a14e63d-2e: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master ovs-system state UP group default qlen 1000 link/ether 76:86:46:4c:4f:61 brd ff:ff:ff:ff:ff:ff inet6 fe80::7486:46ff:fe4c:4f61/64 scope link valid_lft forever preferred_lft forever 28: qvb0a14e63d-2e@qvo0a14e63d-2e: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master qbr0a14e63d-2e state UP group default qlen 1000 link/ether 02:f9:32:c0:f4:b7 brd ff:ff:ff:ff:ff:ff inet6 fe80::f9:32ff:fec0:f4b7/64 scope link valid_lft forever preferred_lft forever 29: tap0a14e63d-2e: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc pfifo_fast master 
qbr0a14e63d-2e state UNKNOWN group default qlen 1000 link/ether fe:16:3e:ee:08:6b brd ff:ff:ff:ff:ff:ff inet6 fe80::fc16:3eff:feee:86b/64 scope link valid_lft forever preferred_lft forever

Install OpenStack client

To run openstack commands, the openstack client has to be installed locally. In this deployment, installing the client on the kolla node reported an error, so an official base container is started instead. The image ships with the client commands, and admin-openrc.sh only needs to be mounted into the container.

Install OpenStack CLI client (error may be reported, skip this step)

pip install python-openstackclient

Generate an openrc file with administrator user credentials set

kolla-ansible post-deploy

cat /etc/kolla/admin-openrc.sh

kolla-ansible provides a script that quickly creates a cirros demo instance (it may report an error on the host; if so, skip this step)

source /etc/kolla/admin-openrc.sh

/usr/share/kolla-ansible/init-runonce

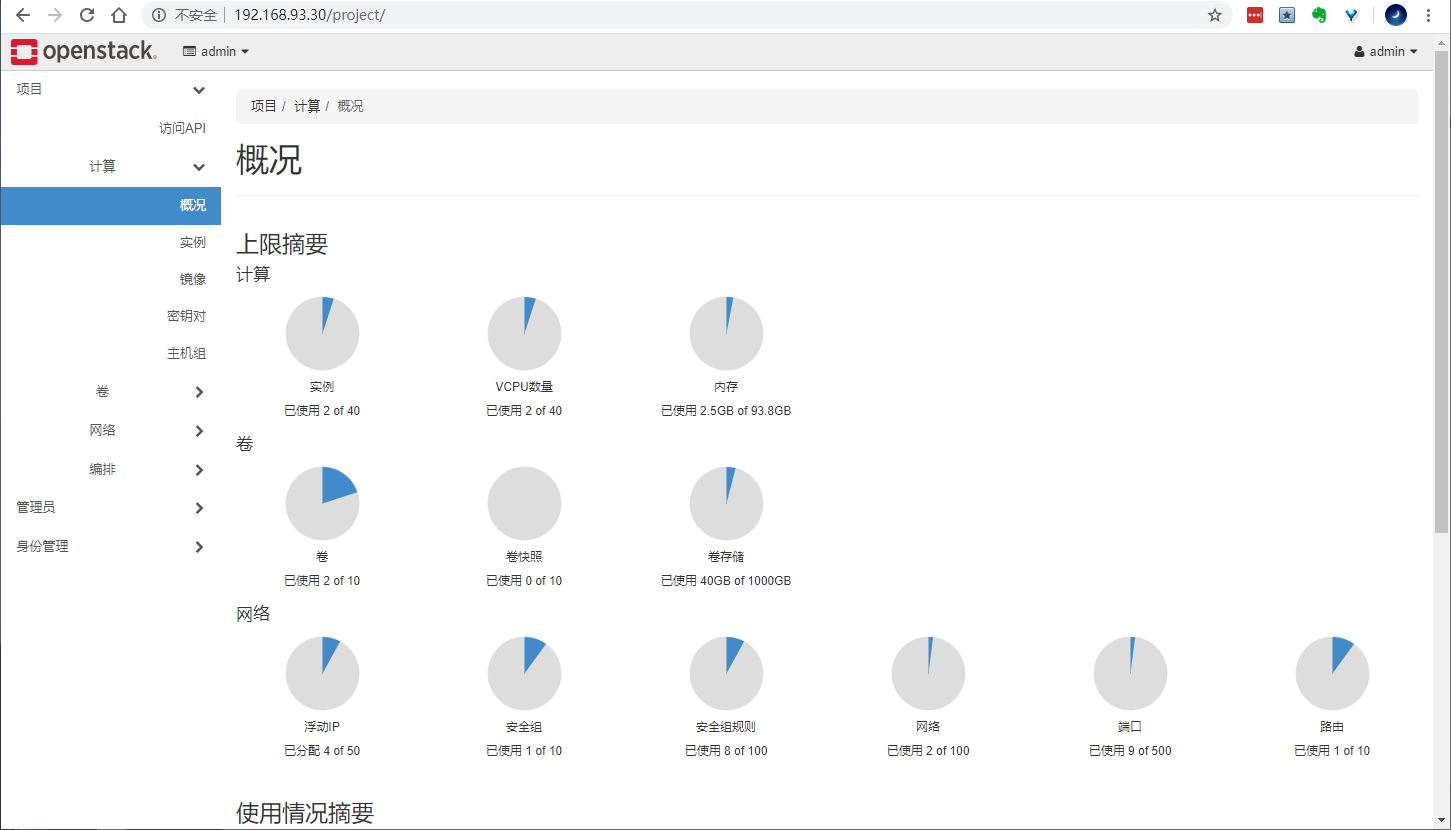

Visit openstack horizon

openstack horizon is accessed through the vip address. The vip is brought up by the keepalived container:

[root@kolla ~]# ip a |grep ens33
2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
inet 192.168.93.30/24 brd 192.168.93.255 scope global ens33
inet 192.168.93.100/32 scope global ens33

The browser can access this address directly and log in to horizon

My username and password here are admin/kolla. The password can be read from admin-openrc.sh:

[root@kolla ~]# cat /etc/kolla/admin-openrc.sh | grep OS_PASSWORD
export OS_PASSWORD=kolla

The default login is as follows

Container running openstack client

Since the openstack client was not successfully installed on the kolla host node, the client is used from a container

Pull the image. The official image on dockerhub is kolla/centos-binary-openstack-base:train; the mirror is used here:

docker pull registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-openstack-base:train

Start a client container and use the sleep infinity command to keep the container running.

docker run -d --name client \
--restart always \
-v /etc/kolla/admin-openrc.sh:/admin-openrc.sh:ro \
-v /usr/share/kolla-ansible/init-runonce:/init-runonce:rw \
registry.cn-shenzhen.aliyuncs.com/kollaimage/centos-binary-openstack-base:train sleep infinity

Enter the container; openstack commands now run normally

[root@kolla ~]# docker exec -it client bash
()[root@f11a103c5ade /]# source /admin-openrc.sh
()[root@f11a103c5ade /]# openstack service list
+----------------------------------+-------------+----------------+
| ID                               | Name        | Type           |
+----------------------------------+-------------+----------------+
| 2aed09dc3dbd450599042edd9badcc17 | nova_legacy | compute_legacy |
| 2c26e8f09c20455bb67e1df58e7f5ab5 | nova        | compute        |
| 2ec7dd7cd3ce4298931e7272a6e0abd4 | glance      | image          |
| 47062da43fd644eabaa21ae3ec3189da | keystone    | identity       |
| 567057b208ae4a3bb2e3e8e3e7b80bd8 | neutron     | network        |
| 63418bb02ffd449f940c886e640162a1 | heat        | orchestration  |
| 652da566d85c47eb8d38465fe54c232e | cinderv2    | volumev2       |
| 9c8acd17ecbf457fb8b4f29cfc7859da | heat-cfn    | cloudformation |
| d1ef13894f2e44688a1bb117e64d8715 | placement   | placement      |
| d35e629c03794b4c87c6dc2670f3f00a | cinderv3    | volumev3       |
+----------------------------------+-------------+----------------+

The demo script is also mounted into the container. Modify the external network settings in the init-runonce script, and then execute it:

()[root@f11a103c5ade /]# cat init-runonce
# This EXT_NET_CIDR is your public network,that you want to connect to the internet via.
ENABLE_EXT_NET=${ENABLE_EXT_NET:-1}
EXT_NET_CIDR=${EXT_NET_CIDR:-'192.168.1.0/24'}
EXT_NET_RANGE=${EXT_NET_RANGE:-'start=192.168.1.200,end=192.168.1.250'}
EXT_NET_GATEWAY=${EXT_NET_GATEWAY:-'192.168.1.1'}
$ bash init-runonce

Parameter description:

- EXT_NET_CIDR: the external network. Because ens37 is in bridged mode and bridges directly to the computer's wireless network card, this is the network segment of the wireless network card.

- EXT_NET_RANGE: an address range taken from the external network to serve as its address pool (used for floating IPs)

- EXT_NET_GATEWAY: the external network gateway, the same gateway that the external network itself uses
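The script lines shown above use the `${VAR:-default}` expansion, so a value already present in the environment takes precedence over the built-in default, and the settings can be supplied without editing the file. The sketch below only demonstrates that expansion; `show_ext_net` is a hypothetical function, and the 10.0.0.x addresses are placeholders.

```shell
#!/usr/bin/env bash
# Demonstrates the ${VAR:-default} expansion that init-runonce relies on.
show_ext_net() {
    local cidr=${EXT_NET_CIDR:-'192.168.1.0/24'}
    local gateway=${EXT_NET_GATEWAY:-'192.168.1.1'}
    echo "cidr=$cidr gateway=$gateway"
}

# With nothing set, the defaults apply:
#   show_ext_net
# With the variables set for one call, they win:
#   EXT_NET_CIDR=10.0.0.0/24 EXT_NET_GATEWAY=10.0.0.1 show_ext_net
# The same pattern applied to the real script (placeholder addresses):
#   EXT_NET_CIDR=10.0.0.0/24 EXT_NET_RANGE='start=10.0.0.200,end=10.0.0.250' \
#       EXT_NET_GATEWAY=10.0.0.1 bash init-runonce
```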

The script creates some resources: it downloads and uploads the cirros image, creates the external and internal networks, and so on. It also creates an ssh key during execution; press Enter to accept the defaults. The key is saved in the /root/.ssh directory in the container by default, and the id_rsa private key there can be used to connect to instances remotely.

Finally, launch an instance manually as the script's output suggests

openstack server create \
--image cirros \
--flavor m1.tiny \
--key-name mykey \
--network demo-net \
demo1

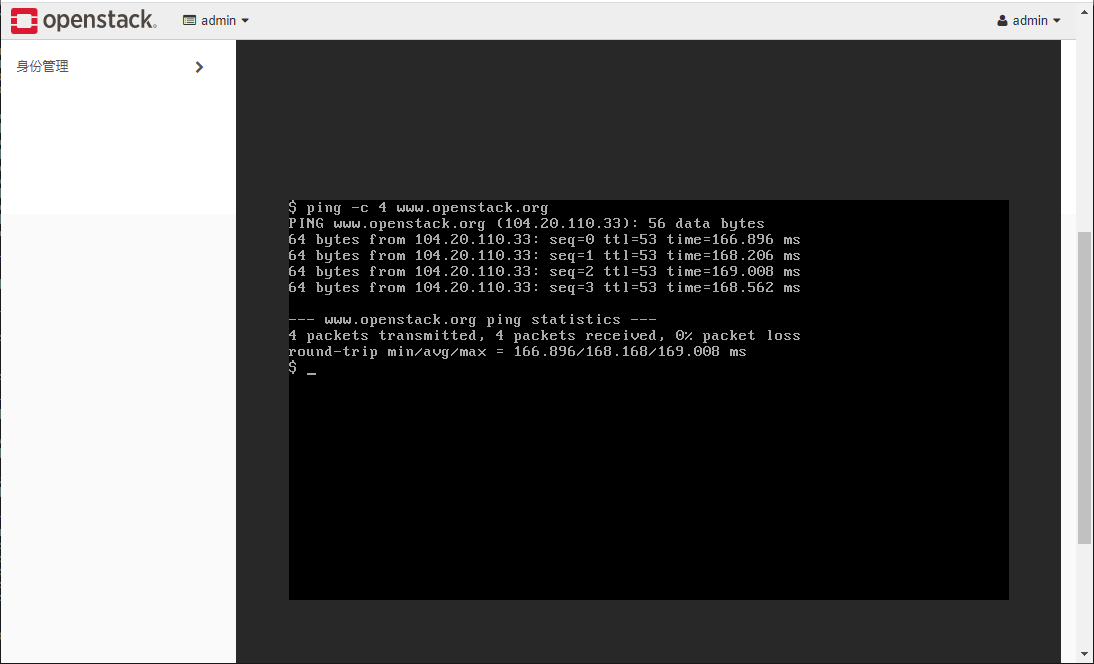

View created networks and instances

Log in to the instance console to verify connectivity between the instance and the Internet. The cirros user name and password are shown in the prompt at the first login:

Bind a floating IP address to the instance so that it can be reached over ssh from outside

Click + to allocate a random floating IP

On the kolla node, connect over ssh to the instance's floating IP. The default user and password of the cirros image are cirros/gocubsgo, as described in the official image guide:

https://docs.openstack.org/image-guide/obtain-images.html#cirros-test

[root@kolla ~]# ssh cirros@192.168.1.248
cirros@192.168.1.248's password:
$
$

Or use SecureCRT to connect to the instance outside the cluster.

Running CentOS instance

centos officially maintains cloud images. If no customization is needed, you can download one and run instances from it directly.

Reference: https://docs.openstack.org/image-guide/obtain-images.html

Download address of the images officially maintained by CentOS:

http://cloud.centos.org/centos/7/images/

The image can also be downloaded from the command line, but the download may be slow, so it is recommended to finish the download before uploading. Take CentOS 7.8 as an example:

wget http://cloud.centos.org/centos/7/images/CentOS-7-x86_64-GenericCloud-2003.qcow2c

After downloading, upload the image to openstack, either directly in horizon or with the command line.

Note: by default, an instance running this image only accepts ssh key login as the centos user. To connect to the instance over ssh as root with a password, set a root password in the image and enable root ssh access before uploading.

Reference: https://blog.csdn.net/networken/article/details/106713658

In addition, the client lives in a container, which is less convenient here. First copy the image into the container, and then upload it with the openstack command.

Here it is copied to the /root directory of the client container.

[root@kolla ~]# docker cp CentOS-7-x86_64-GenericCloud-2003.qcow2c client:/root
[root@kolla ~]# docker exec -it client bash
()[root@f11a103c5ade /]#
()[root@f11a103c5ade /]# source /admin-openrc.sh
()[root@f11a103c5ade /]# cd /root/
()[root@f11a103c5ade ~]# ls
anaconda-ks.cfg CentOS-7-x86_64-GenericCloud-2003.qcow2c

Execute the following openstack command to upload the image

openstack image create "CentOS78-image" \

--file CentOS-7-x86_64-GenericCloud-2003.qcow2c \

--disk-format qcow2 --container-format bare \

--public

Command execution result

()[root@f11a103c5ade ~]# openstack image create "CentOS78-image" \
> --file CentOS-7-x86_64-GenericCloud-2003.qcow2c \
> --disk-format qcow2 --container-format bare \
> --public
+------------------+---------------------------------------------------------------------------------+
| Field            | Value                                                                           |
+------------------+---------------------------------------------------------------------------------+
| checksum         | 362d1e07d42bcbc61b839fb4269b173b                                                |
| container_format | bare                                                                            |
| created_at       | 2020-06-13T03:23:16Z                                                            |
| disk_format      | qcow2                                                                           |
| file             | /v2/images/2d95d8a0-6fba-4ca8-9dde-8696eb7ebdbf/file                            |
| id               | 2d95d8a0-6fba-4ca8-9dde-8696eb7ebdbf                                            |
| min_disk         | 0                                                                               |
| min_ram          | 0                                                                               |
| name             | CentOS78-image                                                                  |
| owner            | 65850af146fe478ab13f59f7edf838ec                                                |
| properties       | os_hash_algo='sha512',                                                          |
|                  | os_hash_value='aefa398f69e1746b420c44e5650f0dcf15926fb6f8c75f746bb2f48a04f7b140 |
|                  | fdc745090f3d06b68fa0fe711ded7d822150765414e2a23f351efd2e181eb7b9',              |
|                  | os_hidden='False'                                                               |
| protected        | False                                                                           |
| schema           | /v2/schemas/image                                                               |
| size             | 385941504                                                                       |
| status           | active                                                                          |
| tags             |                                                                                 |
| updated_at       | 2020-06-13T03:23:20Z                                                            |
| virtual_size     | None                                                                            |
| visibility       | public                                                                          |
+------------------+---------------------------------------------------------------------------------+
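The status field in the output is what matters before creating an instance: the image is usable once it reports active. When the upload is scripted rather than typed interactively, a small polling loop can wait for that state. This is a minimal sketch; the function name wait_for_image_active and its retry defaults are illustrative, and it relies only on `openstack image show` with the standard `-f value -c status` output options.

```shell
# wait_for_image_active NAME [TRIES] [DELAY]
# Hypothetical helper: poll Glance until the image reports status "active".
wait_for_image_active() {
    local name=$1 tries=${2:-30} delay=${3:-2} status="" i=0
    while [ "$i" -lt "$tries" ]; do
        status=$(openstack image show -f value -c status "$name" 2>/dev/null)
        case "$status" in
            active)
                echo "image $name is active"
                return 0 ;;
            killed|deleted)
                echo "image $name failed with status: $status" >&2
                return 1 ;;
        esac
        sleep "$delay"
        i=$((i + 1))
    done
    echo "timed out waiting for image $name (last status: ${status:-unknown})" >&2
    return 1
}
```

Inside the client container, after sourcing /admin-openrc.sh, it could be run as `wait_for_image_active CentOS78-image`.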

Use this image to create an instance in Horizon; the remaining settings can reuse the resources created for the demo. After the instance is created, bind a floating IP to it.

If instance creation fails, check the logs of the related components, for example nova-compute:

[root@kolla ~]# tail -100f /var/log/kolla/nova/nova-compute.log
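Following the whole log with tail can bury the relevant lines in routine output. A filter such as the sketch below narrows it to error entries. It assumes the default OpenStack log layout, in which the level name (ERROR, CRITICAL) appears as a space-delimited field on each line; the helper name recent_errors is hypothetical.

```shell
# recent_errors LOGFILE [COUNT]
# Hypothetical helper: print the last COUNT (default 20) ERROR/CRITICAL lines.
recent_errors() {
    local file=$1 count=${2:-20}
    grep -E ' (ERROR|CRITICAL) ' "$file" | tail -n "$count"
}
```

For example: `recent_errors /var/log/kolla/nova/nova-compute.log 50`. The same call works for the logs of other components under /var/log/kolla/.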

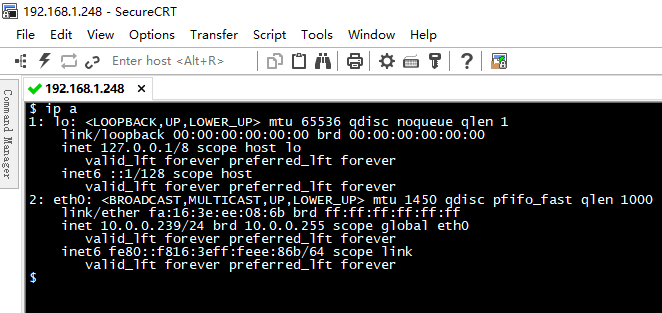

Connect to the instance without a root password configured

If the image was not customized in advance to set a root password, you can only log in as the centos user with an SSH key. Because the demo resources were created from inside the client container, the SSH private key is also stored in the container's default directory (/root/.ssh/id_rsa), so test the connection to the instance's floating IP from inside the container:

[root@kolla ~]# docker exec -it client bash
()[root@f11a103c5ade /]# ssh -i /root/.ssh/id_rsa centos@192.168.1.105
Last login: Sat Jun 13 05:47:49 2020 from 192.168.1.100
[centos@centos78 ~]$

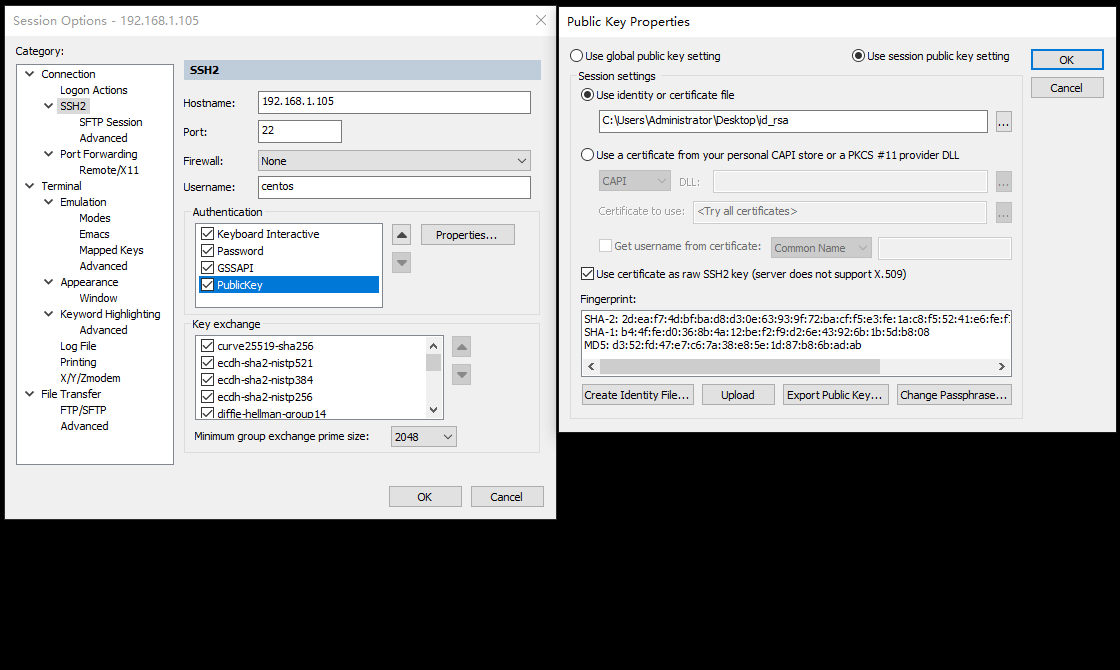

Alternatively, copy the id_rsa private key out of the container and use it to log in with SecureCRT.

Connect to the instance with a root password configured

If you set the root password in the image in advance, you can log in to the instance directly as root with that password:

[root@kolla ~]# ssh root@192.168.1.105
root@192.168.1.105's password:
Last login: Sat Jun 13 05:51:53 2020 from 192.168.1.100
[root@centos78 ~]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc pfifo_fast state UP group default qlen 1000
    link/ether fa:16:3e:94:b5:71 brd ff:ff:ff:ff:ff:ff
    inet 10.0.0.193/24 brd 10.0.0.255 scope global dynamic eth0
       valid_lft 84215sec preferred_lft 84215sec
    inet6 fe80::f816:3eff:fe94:b571/64 scope link
       valid_lft forever preferred_lft forever
[root@centos78 ~]# cat /etc/redhat-release
CentOS Linux release 7.8.2003 (Core)

Run Ubuntu instance

Download Image

wget https://cloud-images.ubuntu.com/bionic/current/bionic-server-cloudimg-amd64.img
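Ubuntu publishes a SHA256SUMS file next to its cloud images, so the download can be checked before it is uploaded. The following is a minimal sketch, assuming SHA256SUMS has been fetched from the same directory at the same time as the image (the contents of current/ change when a new build is published); the helper name sha256_matches is hypothetical.

```shell
# sha256_matches FILE SUMSFILE
# Hypothetical helper: succeed if FILE's SHA-256 equals the entry for its
# basename in SUMSFILE (lines of the form "<hash> *<name>" or "<hash>  <name>").
sha256_matches() {
    local file=$1 sums=$2 want have
    want=$(awk -v n="$(basename "$file")" \
        '{ f = $2; sub(/^\*/, "", f); if (f == n) print $1 }' "$sums")
    have=$(sha256sum "$file" | awk '{ print $1 }')
    [ -n "$want" ] && [ "$want" = "$have" ]
}
```

Typical use would be `wget https://cloud-images.ubuntu.com/bionic/current/SHA256SUMS` followed by `sha256_matches bionic-server-cloudimg-amd64.img SHA256SUMS && echo "checksum OK"`.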

Upload image

openstack image create "Ubuntu1804" \
  --file bionic-server-cloudimg-amd64.img \
  --disk-format qcow2 --container-format bare \
  --public

Create an instance following the usual process. The default user of the Ubuntu image is ubuntu. Log in for the first time with the SSH key, then run the following command to switch directly to the root user (this did not work with the CentOS image here):

$ sudo -i

kolla configuration and log files

- Configuration files of each component: /etc/kolla/

- Log files of each component: /var/log/kolla/

Clean up the kolla ansible cluster

kolla-ansible destroy --include-images --yes-i-really-really-mean-it

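# Remove the cinder volume group. This destroys every volume in it, so proceed carefully and use the name of the volume group created during preparation (kolla-ansible's default is cinder-volumes).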

vgremove cinder-volume
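Because vgremove is destructive, it may help to confirm that the volume group name is exactly the one you expect before removing it. The sketch below is one way to do that; the helper name vg_exists is hypothetical, and it relies only on `vgs --noheadings -o vg_name` to list the existing groups.

```shell
# vg_exists NAME
# Hypothetical helper: succeed if an LVM volume group with exactly this name exists.
vg_exists() {
    # vgs indents its output, so take the first field and match the whole line.
    vgs --noheadings -o vg_name 2>/dev/null | awk '{ print $1 }' | grep -qx -- "$1"
}
```

A guarded removal would then look like `vg_exists cinder-volume && vgremove cinder-volume`, substituting the group name actually used in your deployment.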