Preparation

Tool

https://github.com/easzlab/kubeasz

kubeasz uses Ansible to quickly deploy a non-containerized, highly available k8s cluster.

kubeasz comes in two versions, 1.x and 2.x. For a comparison of the two, see https://github.com/easzlab/kubeasz/blob/master/docs/mixes/branch.md

Version 2.x is used here.

Environment

| Host | Internal IP | External IP | OS |
|---|---|---|---|
| k8s-1 | 10.0.0.18 | 61.184.241.187 | ubuntu 18.04 |
| k8s-2 | 10.0.0.19 | | ubuntu 18.04 |
| k8s-3 | 10.0.0.20 | | ubuntu 18.04 |

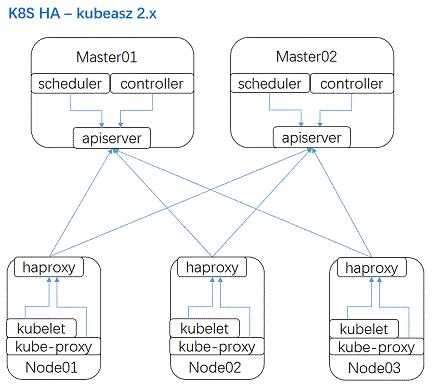

Architecture

Inside the k8s cluster, access to the apiserver is load-balanced and made highly available by the haproxy instance installed on each node. Access to the apiserver from outside the cluster goes through a separate haproxy + keepalived pair. This external load balancer is optional: it only affects access to the k8s management interface and not the high availability of the cluster itself, so you can choose not to install it.

Reference: https://github.com/easzlab/kubeasz/issues/585#issuecomment-502948966

When the master and node roles share the same hosts, haproxy + keepalived cannot be deployed on the masters to provide external access to the apiserver. Details: https://github.com/easzlab/kubeasz/issues/585#issuecomment-575954720

Plan

| Role | Host 1 | Host 2 | Host 3 |
|---|---|---|---|
| Deployment node | k8s-1 | | |
| etcd node | k8s-1 | k8s-2 | k8s-3 |
| master node | k8s-1 | k8s-2 | |
| worker node | k8s-1 | k8s-2 | k8s-3 |

Deploy

All operations are performed as root by default.

Configure DNS

    # On all nodes, add the following entries to /etc/hosts
    vim /etc/hosts

    10.0.0.18 k8s-1
    10.0.0.19 k8s-2
    10.0.0.20 k8s-3
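Editing the hosts file by hand on three machines is easy to get wrong, so the same entries can also be added with a small idempotent helper. This is only a sketch: the function name `add_host_entry` and the `HOSTS_FILE` variable are hypothetical and not part of kubeasz, and the script writes to a scratch file unless `HOSTS_FILE` is set explicitly.

```shell
# Hypothetical helper (not part of kubeasz): append "IP NAME" to a hosts file
# only if that exact line is not already present.
# Defaults to a scratch file; set HOSTS_FILE=/etc/hosts to apply it for real.
HOSTS_FILE="${HOSTS_FILE:-$(mktemp)}"

add_host_entry() {
    ip="$1"
    name="$2"
    # -x matches the whole line, -F treats the pattern as a fixed string
    grep -qxF "$ip $name" "$HOSTS_FILE" 2>/dev/null \
        || printf '%s %s\n' "$ip" "$name" >> "$HOSTS_FILE"
}

# Entries taken from the environment table in this guide
add_host_entry 10.0.0.18 k8s-1
add_host_entry 10.0.0.19 k8s-2
add_host_entry 10.0.0.20 k8s-3
```

Because each entry is checked before it is appended, running the script a second time leaves the file unchanged.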

Modify apt source

    # On all nodes
    # Ubuntu 18.04 is codenamed "bionic", so the mirror entries must use bionic
    cp /etc/apt/sources.list /etc/apt/sources.list.backup
    cat > /etc/apt/sources.list <<EOF
    deb http://mirrors.aliyun.com/ubuntu/ bionic main restricted universe multiverse
    deb http://mirrors.aliyun.com/ubuntu/ bionic-security main restricted universe multiverse
    deb http://mirrors.aliyun.com/ubuntu/ bionic-updates main restricted universe multiverse
    deb http://mirrors.aliyun.com/ubuntu/ bionic-proposed main restricted universe multiverse
    deb http://mirrors.aliyun.com/ubuntu/ bionic-backports main restricted universe multiverse
    deb-src http://mirrors.aliyun.com/ubuntu/ bionic main restricted universe multiverse
    deb-src http://mirrors.aliyun.com/ubuntu/ bionic-security main restricted universe multiverse
    deb-src http://mirrors.aliyun.com/ubuntu/ bionic-updates main restricted universe multiverse
    deb-src http://mirrors.aliyun.com/ubuntu/ bionic-proposed main restricted universe multiverse
    deb-src http://mirrors.aliyun.com/ubuntu/ bionic-backports main restricted universe multiverse
    EOF
    apt update
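The release codename in the apt entries has to match the installed Ubuntu release (18.04 is `bionic`). One way to avoid a mismatch is to generate the entries from the codename instead of typing them. The sketch below only prints the entries and does not write to /etc/apt; the function name `gen_sources` is hypothetical, and the mirror URL is the Aliyun mirror used in this guide.

```shell
# Hypothetical helper: print sources.list entries for one Ubuntu codename,
# using the Aliyun mirror from this guide.
gen_sources() {
    codename="$1"
    mirror="http://mirrors.aliyun.com/ubuntu/"
    for kind in deb deb-src; do
        for suite in "" -security -updates -proposed -backports; do
            printf '%s %s %s%s main restricted universe multiverse\n' \
                "$kind" "$mirror" "$codename" "$suite"
        done
    done
}

# Preview only; nothing is written to /etc/apt.
gen_sources bionic
```

On a real node the codename can be read with `lsb_release -cs`, and the output redirected to /etc/apt/sources.list after backing up the original file.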

Configure passwordless SSH login

    # On the deployment node k8s-1
    ssh-keygen
    ssh-copy-id k8s-1
    ssh-copy-id k8s-2
    ssh-copy-id k8s-3
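Before moving on, it helps to confirm that key-based login really works for every node, since Ansible will fail later if any host still asks for a password. The sketch below is one way to check this; the function names `check_ssh` and `report_ssh` are hypothetical, and the node list is taken from the environment table above.

```shell
# Nodes from the environment table in this guide.
NODES="k8s-1 k8s-2 k8s-3"

# Hypothetical helper: succeed if key-based login to host $1 works.
# BatchMode=yes makes ssh fail instead of prompting for a password.
check_ssh() {
    ssh -o BatchMode=yes -o ConnectTimeout=5 "$1" true 2>/dev/null
}

# Print one status line per node.
report_ssh() {
    for node in $NODES; do
        if check_ssh "$node"; then
            echo "$node: ok"
        else
            echo "$node: FAILED"
        fi
    done
}
```

Running `report_ssh` on the deployment node prints a line per host; any `FAILED` line means `ssh-copy-id` should be repeated for that host.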

Kernel upgrade

Ubuntu 18.04 ships with kernel 4.15, which meets the requirements, so no upgrade is needed. To upgrade the kernel on other systems, see:

https://github.com/easzlab/kubeasz/blob/master/docs/guide/kernel_upgrade.md
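To check the running kernel on each node, a version comparison along these lines can be used. This is a sketch under assumptions: the function name `version_at_least` is hypothetical, it relies on `sort -V` from GNU coreutils, and 4.15 is used as the threshold only because it is the version this guide found sufficient, not because it is an official kubeasz minimum.

```shell
# Hypothetical helper: succeed if version $1 is at least version $2.
# Relies on `sort -V` (version sort) to order the two strings.
version_at_least() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Strip the distro suffix, e.g. "4.15.0-76-generic" -> "4.15.0"
current="$(uname -r | cut -d- -f1)"

# Assumed threshold: 4.15, the version this guide found sufficient.
if version_at_least "$current" 4.15; then
    echo "kernel $current: ok"
else
    echo "kernel $current: consider upgrading"
fi
```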

Install dependencies

    # On all nodes
    apt install -y python2.7

Download

    cd /opt
    # Download the tool script easzup; kubeasz version 2.2.0 is used as an example
    export release=2.2.0
    curl -C- -fLO --retry 3 https://github.com/easzlab/kubeasz/releases/download/${release}/easzup
    chmod +x ./easzup
    # Download everything using the tool script
    ./easzup -D

After the script above completes successfully, all files (kubeasz code, binaries, offline images) are placed under /etc/ansible:

- /etc/ansible contains the release code of kubeasz version ${release}

- /etc/ansible/bin contains k8s/etcd/docker/cni and other binary files

- /etc/ansible/down contains the offline container image required for cluster installation

- /etc/ansible/down/packages contains the system basic software required for cluster installation
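The directory layout listed above can be checked quickly before starting the installation. The following is a minimal sketch; the function name `check_layout` is hypothetical, and it only tests that the directories named in this guide exist, not that their contents are complete.

```shell
# Hypothetical helper: report which of the directories described in this guide
# exist under a base directory (default: /etc/ansible).
# Returns non-zero if any of them is missing.
check_layout() {
    base="${1:-/etc/ansible}"
    missing=0
    for sub in bin down down/packages; do
        if [ -d "$base/$sub" ]; then
            echo "found   $base/$sub"
        else
            echo "missing $base/$sub"
            missing=1
        fi
    done
    return "$missing"
}
```

Calling `check_layout` with no argument inspects /etc/ansible; a `missing` line suggests that `./easzup -D` did not finish and should be run again.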

Install the cluster

    # Run kubeasz in a container
    cd /opt
    ./easzup -S

    # Install the cluster
    # Create the cluster context
    docker exec -it kubeasz easzctl checkout myk8s

    # Create the hosts file from the multi-node example, then edit it
    cd /etc/ansible && cp example/hosts.multi-node hosts

    # 'etcd' cluster should have odd member(s) (1,3,5,...)
    # variable 'NODE_NAME' is the distinct name of a member in 'etcd' cluster
    [etcd]
    10.0.0.18 NODE_NAME=etcd1
    10.0.0.19 NODE_NAME=etcd2
    10.0.0.20 NODE_NAME=etcd3

    # master node(s)
    [kube-master]
    10.0.0.18
    10.0.0.19

    # work node(s)
    [kube-node]
    10.0.0.18
    10.0.0.19
    10.0.0.20

    # [optional] harbor server, a private docker registry
    # 'NEW_INSTALL': 'yes' to install a harbor server; 'no' to integrate with existed one
    # 'SELF_SIGNED_CERT': 'no' you need put files of certificates named harbor.pem and harbor-key.pem in directory 'down'
    [harbor]
    #192.168.1.8 HARBOR_DOMAIN="harbor.yourdomain.com" NEW_INSTALL=no SELF_SIGNED_CERT=yes

    # [optional] loadbalance for accessing k8s from outside
    [ex-lb]
    #192.168.1.6 LB_ROLE=backup EX_APISERVER_VIP=192.168.1.250 EX_APISERVER_PORT=8443
    #192.168.1.7 LB_ROLE=master EX_APISERVER_VIP=192.168.1.250 EX_APISERVER_PORT=8443

    # [optional] ntp server for the cluster
    [chrony]
    10.0.0.18

    [all:vars]
    # --------- Main Variables ---------------
    # Cluster container-runtime supported: docker, containerd
    CONTAINER_RUNTIME="docker"

    # Network plugins supported: calico, flannel, kube-router, cilium, kube-ovn
    CLUSTER_NETWORK="flannel"

    # Service proxy mode of kube-proxy: 'iptables' or 'ipvs'
    PROXY_MODE="ipvs"

    # K8S Service CIDR, not overlap with node(host) networking
    SERVICE_CIDR="10.68.0.0/16"

    # Cluster CIDR (Pod CIDR), not overlap with node(host) networking
    CLUSTER_CIDR="172.20.0.0/16"

    # NodePort Range
    NODE_PORT_RANGE="20000-40000"

    # Cluster DNS Domain
    CLUSTER_DNS_DOMAIN="cluster.local."

    # -------- Additional Variables (don't change the default value right now) ---
    # Binaries Directory
    bin_dir="/opt/kube/bin"

    # CA and other components cert/key Directory
    ca_dir="/etc/kubernetes/ssl"

    # Deploy Directory (kubeasz workspace)
    base_dir="/etc/ansible"
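The inventory comments require an odd number of etcd members, which is easy to break when editing the file by hand. A small check like the one below can catch that before running the setup. It is only a sketch: the function names `count_group` and `check_etcd_odd` are hypothetical, and the parser assumes the simple layout shown in this guide (one host per line, `#` comments, `[group]` headers).

```shell
# Hypothetical helper: count the hosts listed under one inventory group.
# Usage: count_group <inventory-file> <group-name>
count_group() {
    awk -v group="[$2]" '
        /^\[/          { in_group = ($1 == group); next }  # section header
        /^[ \t]*(#|$)/ { next }                            # comment or blank line
        in_group       { n++ }                             # host line in the group
        END            { print n + 0 }
    ' "$1"
}

# Succeed only if the etcd group has an odd number of members.
check_etcd_odd() {
    n="$(count_group "$1" etcd)"
    [ $((n % 2)) -eq 1 ]
}
```

For example, `check_etcd_odd /etc/ansible/hosts && echo "etcd member count is odd"` can be run on the deployment node once the hosts file has been edited.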

    # Set up the whole k8s cluster
    docker exec -it kubeasz easzctl setup

Verification

    # If kubectl reports "command not found", log out and SSH back in so the environment variables take effect
    kubectl version                    # Verify the cluster version
    kubectl get componentstatus        # Verify the status of components such as scheduler / controller-manager / etcd
    kubectl get node                   # Verify that the nodes are Ready
    kubectl get pod --all-namespaces   # Verify the pod status; the network plug-in, coredns, metrics-server, etc. are installed by default
    kubectl get svc --all-namespaces   # Verify the cluster service status
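The node check above can be turned into something scriptable by filtering the `kubectl get node` output. The following is a sketch, not part of kubeasz: the function name `not_ready_nodes` is hypothetical, and it assumes the usual column layout in which the second column is STATUS. A status such as `Ready,SchedulingDisabled` is treated as ready.

```shell
# Hypothetical helper: read `kubectl get node --no-headers` output on stdin and
# print the names of nodes whose STATUS column does not start with "Ready".
not_ready_nodes() {
    awk '$2 !~ /^Ready(,|$)/ { print $1 }'
}
```

Usage: `kubectl get node --no-headers | not_ready_nodes`. Empty output means every node reports Ready.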

Cleanup

    # Destroy the cluster
    docker exec -it kubeasz easzctl destroy

    # Further cleanup: remove docker and all downloaded files on the deployment node
    # Remove the running kubeasz container
    cd /opt && ./easzup -C
    # Remove container images
    docker system prune -a
    # Stop the docker service
    systemctl stop docker
    # Delete the downloaded files
    rm -rf /etc/ansible /etc/docker /opt/kube
    # Delete the docker files
    umount /var/run/docker/netns/default
    umount /var/lib/docker/overlay
    rm -rf /var/lib/docker /var/run/docker
    # Restart the node