Introduction to Kubernetes: kubectl Installation and Nginx Configuration

Using nginx's layer-4 transparent proxy, the K8S nodes (master and worker nodes) get highly available access to kube-apiserver. Before deployment, you need to plan the node layout of the Kubernetes cluster. The nodes used in this tutorial are as follows:

| Host name | Host IP |
|---|---|
| k8s-master | 172.24.211.217 |
| k8s-master-1 | 172.24.211.220 |
| k8s-node-1 | 172.24.211.218 |
| k8s-node-2 | 172.24.211.219 |

(PS: Previous tutorials used only three hosts. To avoid having a single master node, we have temporarily added the k8s-master-1 node. On this node, install docker and flannel in advance and complete the corresponding configuration as described in the previous articles. etcd uses the existing cluster directly.)

I. Installing client Tool - kubectl

Before deploying the k8s cluster, install the client tool kubectl on every node that needs to connect to the cluster, to make later testing easier.

Download and decompress:

cd /opt/k8s/work

wget https://dl.k8s.io/v1.15.0/kubernetes-client-linux-amd64.tar.gz

tar -xzvf kubernetes-client-linux-amd64.tar.gz

chmod +x kubernetes/client/bin/kubectl

Distribute the binary (copy it to every node that needs to run kubectl commands):

scp kubernetes/client/bin/kubectl root@k8s-master:/opt/k8s/bin/

scp kubernetes/client/bin/kubectl root@k8s-master-1:/opt/k8s/bin/

scp kubernetes/client/bin/kubectl root@k8s-node-1:/opt/k8s/bin/

scp kubernetes/client/bin/kubectl root@k8s-node-2:/opt/k8s/bin/
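
The same copy step recurs several times in this tutorial, so it can be wrapped in a small loop. The following is only a sketch: the `distribute` function, the `NODES` list, and the `DRY_RUN` switch are hypothetical names introduced here, and by default the function only prints the commands it would run.

```shell
#!/bin/sh
# Hypothetical helper: print (or run) one scp command per node.
# NODES mirrors the host table in this tutorial; DRY_RUN is an assumed switch.
NODES="k8s-master k8s-master-1 k8s-node-1 k8s-node-2"

distribute() {
    src="$1"    # local file to copy
    dest="$2"   # destination path on every node
    for node in $NODES; do
        if [ "${DRY_RUN:-1}" = "1" ]; then
            # Default: only show what would be executed.
            echo "scp $src root@$node:$dest"
        else
            scp "$src" "root@$node:$dest"
        fi
    done
}

# Example: preview the kubectl distribution without touching any host.
distribute kubernetes/client/bin/kubectl /opt/k8s/bin/
```

Setting `DRY_RUN=0` would perform the actual copies, assuming root SSH access to every host is already in place.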

kubectl communicates with the apiserver over its HTTPS secure port, and the apiserver authenticates and authorizes the certificate presented. As a cluster management tool, kubectl needs the highest privileges, so an admin certificate with the highest privileges is created here. Start by creating the admin certificate and private key:

cd /opt/k8s/work

cat > admin-csr.json <<EOF

{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "system:masters",

"OU": "System"

}

]

}

EOF

- O is system:masters. When kube-apiserver receives this certificate, it sets the Group of the request to system:masters;

- The predefined ClusterRoleBinding cluster-admin binds the Group system:masters to the ClusterRole cluster-admin, which grants permissions on all APIs;

- This certificate is only used as a client certificate, so the hosts field is empty.

Generate the certificate admin.pem and the private key admin-key.pem:

export PATH=/opt/k8s/bin:$PATH

cfssl gencert -ca=/opt/k8s/work/ca.pem -ca-key=/opt/k8s/work/ca-key.pem -config=/opt/k8s/work/ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin
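
Once admin.pem exists, the mapping described in the notes above (CN becomes the user name, O becomes the group) can be checked against the certificate subject. The sketch below assumes the subject is printed in RFC 2253 form; `subject_field` is a hypothetical helper, and the sample string is written by hand from admin-csr.json rather than captured from a real run.

```shell
#!/bin/sh
# Hypothetical helper: pull one attribute (e.g. CN or O) out of an
# RFC 2253 subject line such as the one printed by:
#   openssl x509 -in admin.pem -noout -subject -nameopt RFC2253
subject_field() {
    subject="$1"   # e.g. "subject=CN=admin,OU=System,O=system:masters,..."
    key="$2"       # attribute name to look up
    printf '%s\n' "$subject" \
        | sed -e 's/^subject= *//' \
        | tr ',' '\n' \
        | sed -n "s/^ *${key}=//p" \
        | head -n 1
}

# Sample subject matching admin-csr.json (assumed, not from a real run).
sample='subject=CN=admin,OU=System,O=system:masters,L=BeiJing,ST=BeiJing,C=CN'
echo "user:  $(subject_field "$sample" CN)"
echo "group: $(subject_field "$sample" O)"
```

If the group does not come back as system:masters, the cluster-admin binding will not apply to this certificate.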

Create the kubeconfig configuration file:

cd /opt/k8s/work

# Set cluster parameters

kubectl config set-cluster kubernetes --certificate-authority=/opt/k8s/work/ca.pem --embed-certs=true --server=https://127.0.0.1:8443 --kubeconfig=kubectl.kubeconfig

# Set client authentication parameters

kubectl config set-credentials admin --client-certificate=/opt/k8s/work/admin.pem --client-key=/opt/k8s/work/admin-key.pem --embed-certs=true --kubeconfig=kubectl.kubeconfig

# Set context parameters

kubectl config set-context kubernetes --cluster=kubernetes --user=admin --kubeconfig=kubectl.kubeconfig

# Set the default context

kubectl config use-context kubernetes --kubeconfig=kubectl.kubeconfig

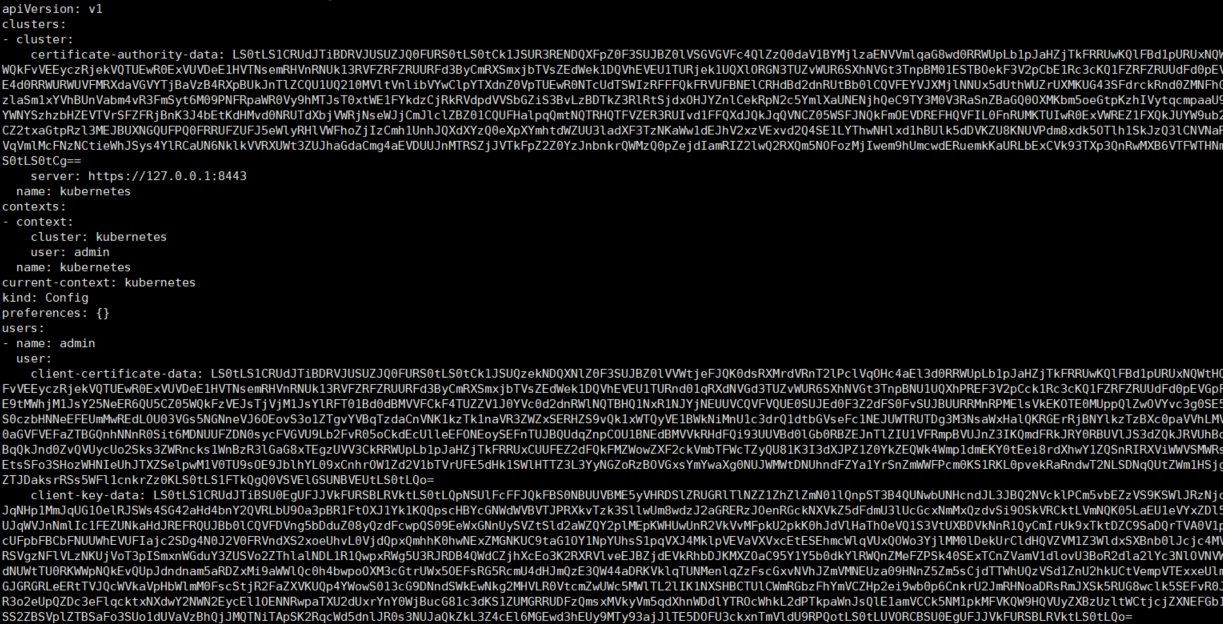

After the above commands are executed, the configuration file kubectl.kubeconfig is generated in the work directory. The --embed-certs=true option embeds the certificates into the configuration file, so they do not have to be copied to the other nodes separately. https://127.0.0.1:8443 is the address and port of the kube-apiserver reverse proxy (kube-nginx).
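
Whether the certificates really ended up inside the file can be checked by looking for the base64 `*-data` keys that kubectl writes when certificates are embedded. This is a minimal sketch; `kubeconfig_embeds_certs` is a hypothetical helper name, and the check is a plain text match rather than a YAML parse.

```shell
#!/bin/sh
# Hypothetical check: report whether a kubeconfig carries its certificates
# inline (base64 "*-data" fields) or only points at files on disk.
kubeconfig_embeds_certs() {
    file="$1"
    # With embedded certificates, kubectl writes these three "-data" keys.
    for key in certificate-authority-data client-certificate-data client-key-data; do
        grep -q "^ *${key}:" "$file" || return 1
    done
    return 0
}

# Usage, assuming kubectl.kubeconfig is in the current directory:
if [ -f kubectl.kubeconfig ]; then
    if kubeconfig_embeds_certs kubectl.kubeconfig; then
        echo "certificates are embedded; the file can be copied on its own"
    else
        echo "certificates are referenced by path; copy the .pem files as well"
    fi
fi
```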

The generated configuration file is shown in the following figure:

Distribute the configuration file (the ~/.kube directory must already exist on each target node):

scp kubectl.kubeconfig root@k8s-master:~/.kube/config

scp kubectl.kubeconfig root@k8s-master-1:~/.kube/config

scp kubectl.kubeconfig root@k8s-node-1:~/.kube/config

scp kubectl.kubeconfig root@k8s-node-2:~/.kube/config

II. Cluster Nginx Configuration Planning

Next, we start an nginx process on every node, with the multiple apiserver instances as its back end; nginx health-checks them and load-balances across them. When the cluster is deployed later, both master and worker nodes can reach the apiserver through the entry point provided by nginx (https://127.0.0.1:8443), which is both convenient and highly available.

2.1 Download and Compile

Download the Nginx source code:

cd /opt/k8s/work

wget http://nginx.org/download/nginx-1.15.3.tar.gz

tar -xzvf nginx-1.15.3.tar.gz

Configure compilation parameters:

- --with-stream: enables layer-4 transparent forwarding (TCP proxy);

- --without-xxx: disables all other features, so that the resulting dynamically linked binary has as few dependencies as possible.

cd /opt/k8s/work/nginx-1.15.3

mkdir nginx-prefix

./configure --with-stream --without-http --prefix=$(pwd)/nginx-prefix --without-http_uwsgi_module --without-http_scgi_module --without-http_fastcgi_module

Compile and verify:

cd /opt/k8s/work/nginx-1.15.3

make && make install

./nginx-prefix/sbin/nginx -v
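
The `-v` check above only prints the version. To confirm that the stream module was actually compiled in, the configure arguments reported by `nginx -V` can be inspected. The following is a sketch; `has_configure_flag` is a hypothetical helper that works on the captured report text.

```shell
#!/bin/sh
# Hypothetical check: does an "nginx -V" report list a given configure flag?
# nginx -V writes its report to stderr, hence the 2>&1 in the usage below.
has_configure_flag() {
    report="$1"   # full text printed by "nginx -V"
    flag="$2"     # e.g. --with-stream
    printf '%s\n' "$report" \
        | grep '^configure arguments:' \
        | tr ' ' '\n' \
        | grep -qxF -- "$flag"
}

# Usage after the build (binary path taken from this tutorial):
#   report="$(./nginx-prefix/sbin/nginx -V 2>&1)"
#   has_configure_flag "$report" --with-stream && echo "stream module enabled"
```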

2.2 Distribution and Installation on the Nodes

Distribute the compiled binary to each node:

#create folder

mkdir -p /opt/k8s/kube-nginx/{conf,logs,sbin}

ssh root@k8s-master-1 "mkdir -p /opt/k8s/kube-nginx/{conf,logs,sbin}"

ssh root@k8s-node-1 "mkdir -p /opt/k8s/kube-nginx/{conf,logs,sbin}"

ssh root@k8s-node-2 "mkdir -p /opt/k8s/kube-nginx/{conf,logs,sbin}"

#First grant permissions, then distribute files

chmod a+x /opt/k8s/work/nginx-1.15.3/nginx-prefix/sbin/nginx

scp /opt/k8s/work/nginx-1.15.3/nginx-prefix/sbin/nginx root@k8s-master:/opt/k8s/kube-nginx/sbin/kube-nginx

scp /opt/k8s/work/nginx-1.15.3/nginx-prefix/sbin/nginx root@k8s-master-1:/opt/k8s/kube-nginx/sbin/kube-nginx

scp /opt/k8s/work/nginx-1.15.3/nginx-prefix/sbin/nginx root@k8s-node-1:/opt/k8s/kube-nginx/sbin/kube-nginx

scp /opt/k8s/work/nginx-1.15.3/nginx-prefix/sbin/nginx root@k8s-node-2:/opt/k8s/kube-nginx/sbin/kube-nginx

2.3 Configuration

Configure nginx to enable layer-4 transparent forwarding. The "upstream backend" block lists the IP and port on which kube-apiserver listens on each master node, and the "server" block specifies the IP and port on which nginx itself listens. Based on the cluster plan, the configuration is as follows:

cd /opt/k8s/work

cat > kube-nginx.conf << \EOF

worker_processes 1;

events {

worker_connections 1024;

}

stream {

upstream backend {

hash $remote_addr consistent;

server 172.24.211.220:6443 max_fails=3 fail_timeout=30s;

server 172.24.211.217:6443 max_fails=3 fail_timeout=30s;

}

server {

listen 127.0.0.1:8443;

proxy_connect_timeout 1s;

proxy_pass backend;

}

}

EOF
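
If the set of master nodes changes, the upstream block is the only part of this file that has to follow. As an illustration, it could be generated from a list of addresses. This is a sketch only: `render_upstream` is a hypothetical function, and port 6443 and the `max_fails`/`fail_timeout` values are simply copied from the configuration above.

```shell
#!/bin/sh
# Hypothetical generator: emit the "upstream backend" block for a list of
# kube-apiserver addresses. Port 6443 and the max_fails/fail_timeout values
# are copied from the configuration above.
render_upstream() {
    echo "upstream backend {"
    echo "    hash \$remote_addr consistent;"
    for ip in "$@"; do
        echo "    server ${ip}:6443 max_fails=3 fail_timeout=30s;"
    done
    echo "}"
}

# The two master nodes from the host table:
render_upstream 172.24.211.220 172.24.211.217
```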

Distribute the configuration file:

scp kube-nginx.conf root@k8s-master:/opt/k8s/kube-nginx/conf/kube-nginx.conf

scp kube-nginx.conf root@k8s-master-1:/opt/k8s/kube-nginx/conf/kube-nginx.conf

scp kube-nginx.conf root@k8s-node-1:/opt/k8s/kube-nginx/conf/kube-nginx.conf

scp kube-nginx.conf root@k8s-node-2:/opt/k8s/kube-nginx/conf/kube-nginx.conf

Create the systemd unit file (pay attention to the file paths):

cat > kube-nginx.service <<EOF

[Unit]

Description=kube-apiserver nginx proxy

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=forking

ExecStartPre=/opt/k8s/kube-nginx/sbin/kube-nginx -c /opt/k8s/kube-nginx/conf/kube-nginx.conf -p /opt/k8s/kube-nginx -t

ExecStart=/opt/k8s/kube-nginx/sbin/kube-nginx -c /opt/k8s/kube-nginx/conf/kube-nginx.conf -p /opt/k8s/kube-nginx

ExecReload=/opt/k8s/kube-nginx/sbin/kube-nginx -c /opt/k8s/kube-nginx/conf/kube-nginx.conf -p /opt/k8s/kube-nginx -s reload

PrivateTmp=true

Restart=always

RestartSec=5

StartLimitInterval=0

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

Distribute the unit file:

scp kube-nginx.service root@k8s-master:/usr/lib/systemd/system/kube-nginx.service

scp kube-nginx.service root@k8s-master-1:/usr/lib/systemd/system/kube-nginx.service

scp kube-nginx.service root@k8s-node-1:/usr/lib/systemd/system/kube-nginx.service

scp kube-nginx.service root@k8s-node-2:/usr/lib/systemd/system/kube-nginx.service

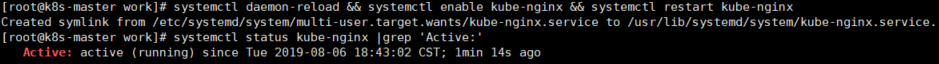

With the configuration complete, run the start command on each node:

systemctl daemon-reload && systemctl enable kube-nginx && systemctl restart kube-nginx

Verify that the service started successfully:

systemctl status kube-nginx |grep 'Active:'
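
An active unit does not by itself show that the proxy port is open. As a complementary check, a TCP connection to the listen address can be attempted. The sketch below relies on bash's `/dev/tcp` pseudo-device, so it assumes bash rather than plain sh; `port_open` is a hypothetical helper. Note that it only tests the nginx listener, not whether a kube-apiserver is reachable behind it.

```shell
#!/bin/bash
# Hypothetical probe: succeed if a TCP connection to host:port can be opened.
# Uses bash's /dev/tcp pseudo-device, so it needs bash rather than plain sh.
port_open() {
    local host="$1" port="$2"
    # The subshell keeps the file descriptor from leaking into the caller.
    (exec 3<>"/dev/tcp/${host}/${port}") 2>/dev/null
}

# kube-nginx listens on 127.0.0.1:8443 in this tutorial.
if port_open 127.0.0.1 8443; then
    echo "kube-nginx is accepting connections on 127.0.0.1:8443"
else
    echo "nothing is listening on 127.0.0.1:8443 yet"
fi
```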

This completes the preparation for installing and deploying the k8s cluster. The next article will describe how to deploy the highly available k8s cluster.