
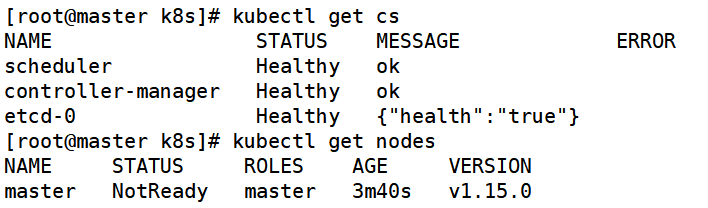


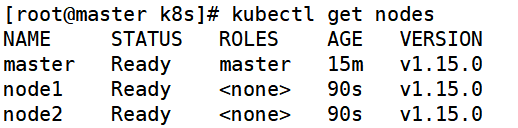

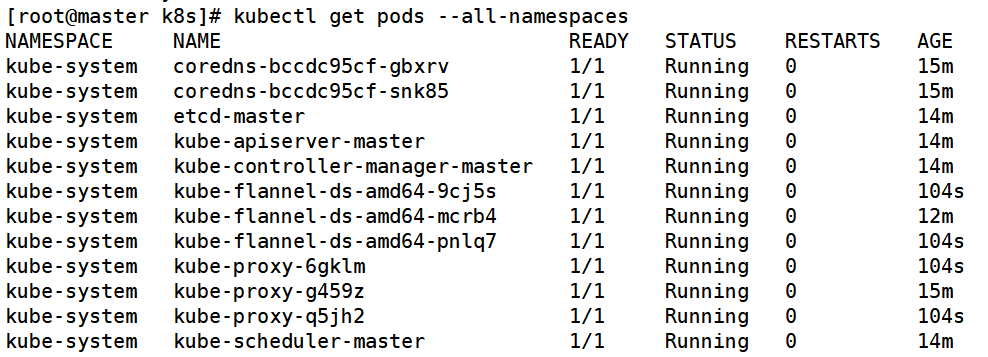

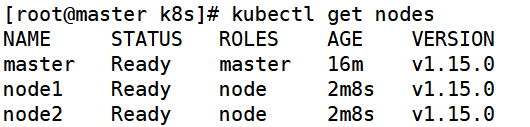


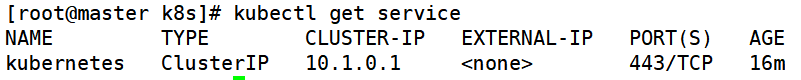


| Host name | IP address | Main software |
| --- | --- | --- |
| master | 192.168.32.11 | docker/kubeadm/kubelet/kubectl/flannel |
| node1 | 192.168.32.12 | docker/kubeadm/kubelet/kubectl/flannel |
| node2 | 192.168.32.13 | docker/kubeadm/kubelet/kubectl/flannel |
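Almost every step below is repeated unchanged on master, node1, and node2, so you may prefer to drive the repeats from one machine. The sketch below assumes passwordless SSH as root to the three IPs in the table, which this guide does not set up. run_on_nodes and DRY_RUN are hypothetical names.

```shell
#!/bin/sh
# Hypothetical helper: run one command line on every host from the table.
# Assumes passwordless SSH as root to each IP (not configured in this guide).
NODES="192.168.32.11 192.168.32.12 192.168.32.13"

run_on_nodes() {
    # $1: the command line to execute on each node
    for ip in $NODES; do
        if [ "${DRY_RUN:-0}" = "1" ]; then
            # Only print what would be executed
            echo "ssh root@$ip $1"
        else
            echo "==> $ip"
            ssh "root@$ip" "$1" || return 1
        fi
    done
}
```

For example, DRY_RUN=1 run_on_nodes 'swapoff -a' only prints the three ssh commands, and without DRY_RUN it runs them. Steps that differ per host still have to be run individually, for example kubeadm init on the master only.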

1, Deploy Docker

1. Operations on the master node

(1) Install the dependency packages

[root@master ~]# yum install -y yum-utils device-mapper-persistent-data lvm2

(2) Add the Alibaba Cloud (Aliyun) Docker CE repository

[root@master ~]# cd /etc/yum.repos.d/
[root@master yum.repos.d]# yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

(3) Install Docker CE (Community Edition), then start it and enable it at boot

[root@master yum.repos.d]# yum install -y docker-ce
[root@master yum.repos.d]# systemctl start docker.service
[root@master yum.repos.d]# systemctl enable docker.service

(4) Configure a registry mirror to speed up image pulls

[root@master yum.repos.d]# mkdir -p /etc/docker

[root@master yum.repos.d]# cd /etc/docker/

[root@master docker]# vim daemon.json

{

"registry-mirrors": ["https://cvzmdwsp.mirror.aliyuncs.com"]

}(5) Turn on routing forwarding

[root@master docker]# vim /etc/sysctl.conf net.ipv4.ip_forward=1 [root@master docker]# sysctl -p net.ipv4.ip_forward = 1

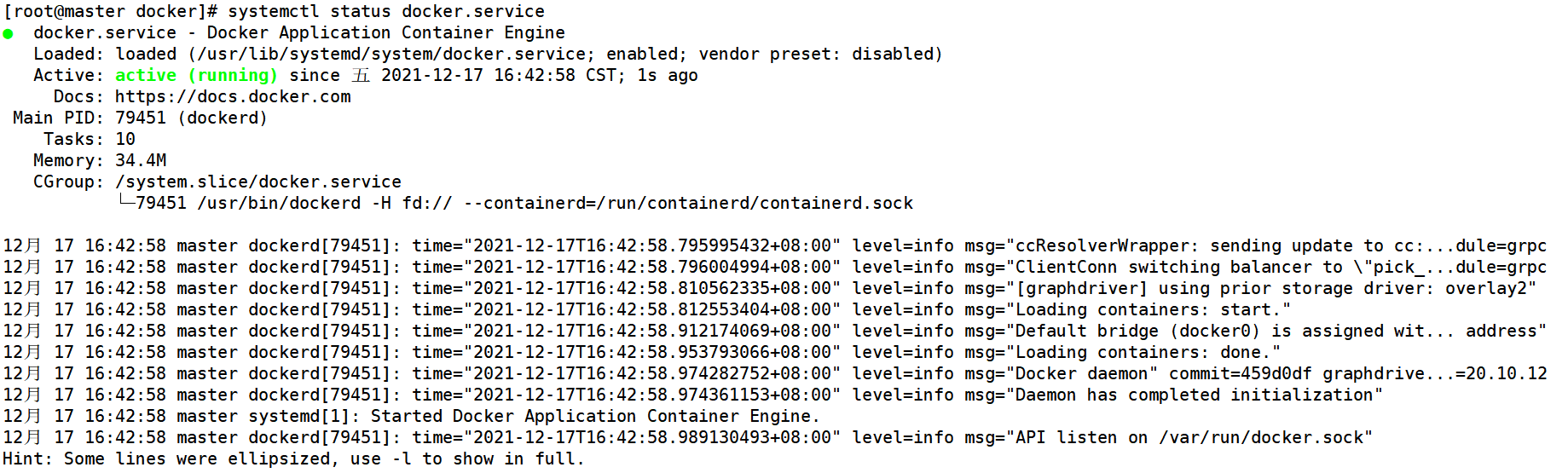

(6) Restart docker and self startup docker

[root@master docker]# systemctl daemon-reload [root@master docker]# systemctl restart docker.service [root@master docker]# systemctl status docker.service

(7) View version

[root@master docker]# docker --version Docker version 20.10.12, build e91ed57
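Steps (4) and (5) above edit files interactively with vim. The sketch below makes the same two edits without an editor. The function names are hypothetical. The mirror URL is passed in as a parameter because the cvzmdwsp address appears to be the author's personal Aliyun accelerator, and Aliyun typically issues one per account.

```shell
#!/bin/sh
# Sketch: write the registry-mirror config and persist IP forwarding.
# Paths are parameters so the functions can be tried on a scratch directory.

write_docker_daemon_json() {
    # $1: config directory (normally /etc/docker); $2: registry mirror URL
    dir=$1
    mirror=$2
    mkdir -p "$dir"
    # Overwrites any existing daemon.json
    cat > "$dir/daemon.json" <<EOF
{
  "registry-mirrors": ["$mirror"]
}
EOF
}

enable_ip_forward() {
    # $1: sysctl file (normally /etc/sysctl.conf); appends the key only once
    file=$1
    touch "$file"
    if ! grep -q '^net.ipv4.ip_forward *= *1' "$file"; then
        echo 'net.ipv4.ip_forward=1' >> "$file"
    fi
}
```

On each node, as root, run the following in order:

1. write_docker_daemon_json /etc/docker https://YOUR-ID.mirror.aliyuncs.com
2. enable_ip_forward /etc/sysctl.conf
3. sysctl -p
4. systemctl daemon-reload
5. systemctl restart docker.service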

2. Operation on node1 node

(1) Install dependent packages

[root@node1 ~]# yum install -y yum-utils device-mapper-persistent-data lvm2

(2) Set alicloud image source

[root@node1 ~]# cd /etc/yum.repos.d/ [root@node1 yum.repos.d]# yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

(3) Install docker CE Community Edition

[root@node1 yum.repos.d]# yum install -y docker-ce [root@node1 yum.repos.d]# systemctl start docker.service [root@node1 yum.repos.d]# systemctl enable docker.service

(4) Set mirror acceleration

[root@node1 yum.repos.d]# mkdir -p /etc/docker

[root@node1 yum.repos.d]# cd /etc/docker/

[root@node1 docker]# vim daemon.json

{

"registry-mirrors": ["https://cvzmdwsp.mirror.aliyuncs.com"]

}(5) Turn on routing forwarding

[root@node1 docker]# vim /etc/sysctl.conf net.ipv4.ip_forward=1 [root@node1 docker]# sysctl -p net.ipv4.ip_forward = 1

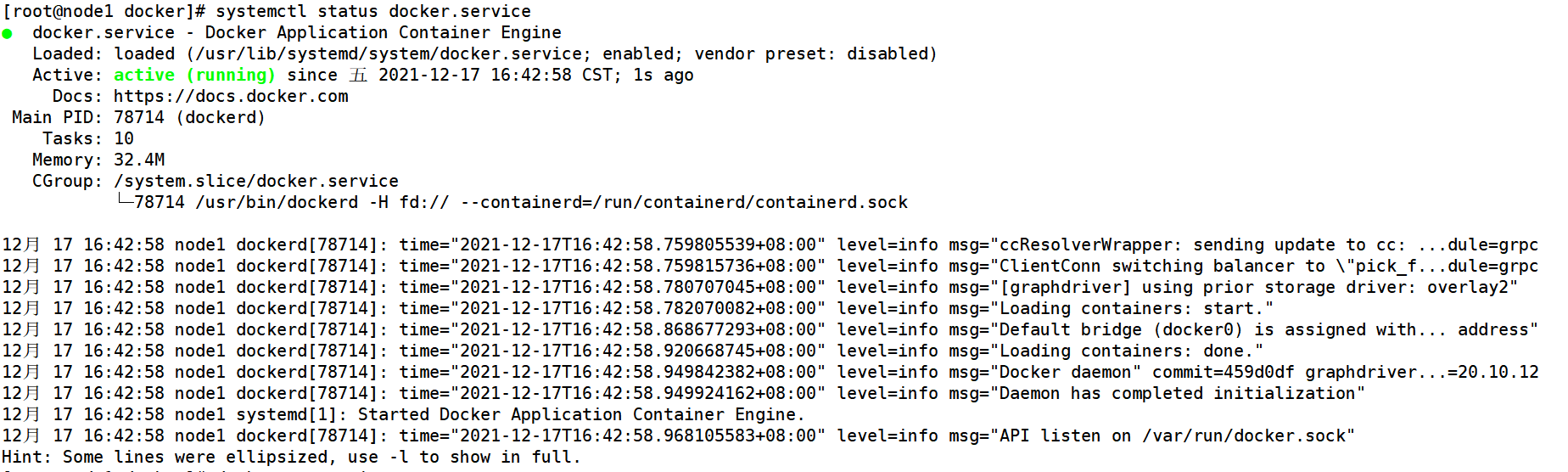

(6) Restart docker and self startup docker

[root@node1 docker]# systemctl daemon-reload [root@node1 docker]# systemctl restart docker.service [root@node1 docker]# systemctl status docker.service

(7) View version

[root@node1 docker]# docker --version Docker version 20.10.12, build e91ed57

3. Operation on node2 node

(1) Install dependent packages

[root@node2 ~]# yum install -y yum-utils device-mapper-persistent-data lvm2

(2) Set alicloud image source

[root@node2 ~]# cd /etc/yum.repos.d/ [root@node2 yum.repos.d]# yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

(3) Install docker CE Community Edition

[root@node2 yum.repos.d]# yum install -y docker-ce [root@node2 yum.repos.d]# systemctl start docker.service [root@node2 yum.repos.d]# systemctl enable docker.service

(4) Set mirror acceleration

[root@node2 yum.repos.d]# mkdir -p /etc/docker

[root@node2 yum.repos.d]# cd /etc/docker/

[root@node2 docker]# vim daemon.json

{

"registry-mirrors": ["https://cvzmdwsp.mirror.aliyuncs.com"]

}(5) Turn on routing forwarding

[root@node2 docker]# vim /etc/sysctl.conf net.ipv4.ip_forward=1 [root@node2 docker]# sysctl -p net.ipv4.ip_forward = 1

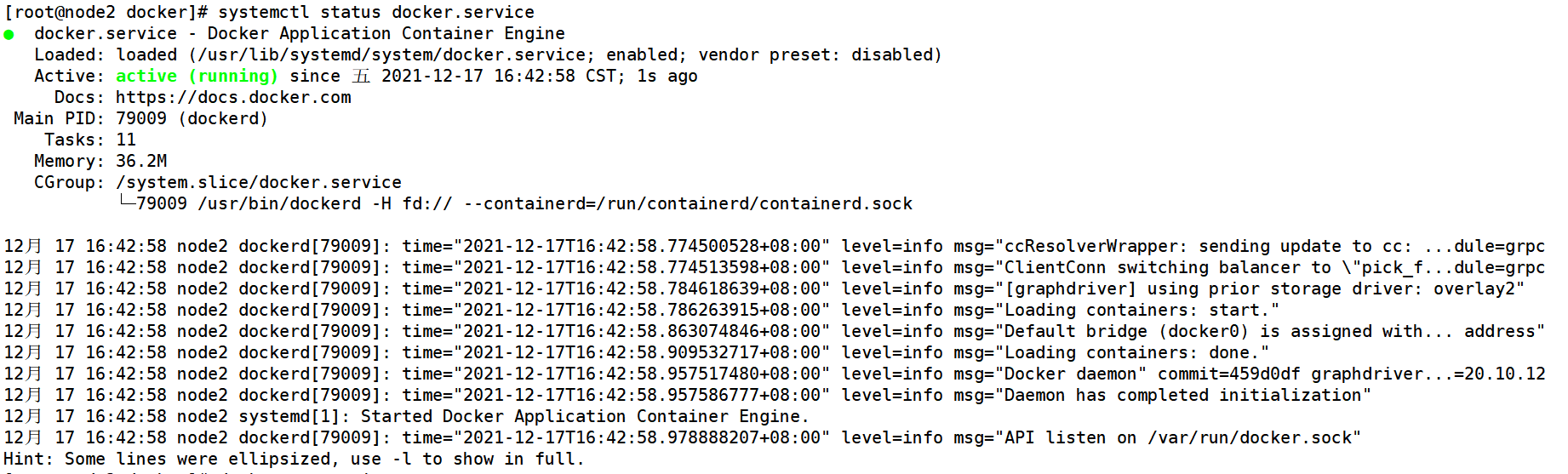

(6) Restart docker and self startup docker

[root@node2 docker]# systemctl daemon-reload [root@node2 docker]# systemctl restart docker.service [root@node2 docker]# systemctl status docker.service

(7) View version

[root@node2 docker]# docker --version Docker version 20.10.12, build e91ed57

2, Install kubeadm, kubelet, and kubectl

1. Configure the Kubernetes yum repository

(1) Operations on the master node

[root@master ~]# cd /etc/yum.repos.d/
[root@master yum.repos.d]# vim kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

(2) Operations on node1

[root@node1 ~]# cd /etc/yum.repos.d/
[root@node1 yum.repos.d]# vim kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

(3) Operations on node2

[root@node2 ~]# cd /etc/yum.repos.d/
[root@node2 yum.repos.d]# vim kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
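You can also create the same repository file without an editor, which makes it easy to repeat on all three nodes. This is a minimal sketch. write_k8s_repo is a hypothetical name, and the directory is a parameter so you can try it on a scratch path first.

```shell
#!/bin/sh
# Sketch: create the kubernetes.repo file shown above non-interactively.
write_k8s_repo() {
    # $1: repo directory (normally /etc/yum.repos.d)
    mkdir -p "$1"
    # Quoted 'EOF' keeps the content literal (no variable expansion)
    cat > "$1/kubernetes.repo" <<'EOF'
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
}
```

As root, run write_k8s_repo /etc/yum.repos.d. Then run yum list kubeadm --showduplicates to see which versions the mirror offers.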

2. Add local host mappings to /etc/hosts

(1) Operations on the master node

[root@master ~]# vim /etc/hosts
192.168.32.11 master
192.168.32.12 node1
192.168.32.13 node2

(2) Operations on node1

[root@node1 ~]# vim /etc/hosts
192.168.32.11 master
192.168.32.12 node1
192.168.32.13 node2

(3) Operations on node2

[root@node2 ~]# vim /etc/hosts
192.168.32.11 master
192.168.32.12 node1
192.168.32.13 node2

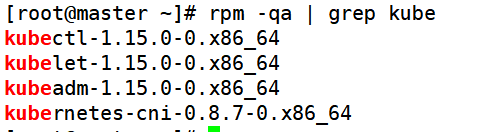
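Appending the three lines by hand is easy to get wrong when you re-run a step. The sketch below is an idempotent version, assuming the host names and IPs from the table at the top. add_host_entries is a hypothetical name.

```shell
#!/bin/sh
# Sketch: append the cluster host mappings to a hosts file, skipping any
# hostname that already has an entry, so re-running it is harmless.
add_host_entries() {
    # $1: hosts file (normally /etc/hosts)
    hosts_file=$1
    touch "$hosts_file"
    while read -r ip name; do
        # Treat the name as present if it appears as a whole word after whitespace
        if ! grep -Eq "[[:space:]]$name([[:space:]]|\$)" "$hosts_file"; then
            printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
        fi
    done <<'EOF'
192.168.32.11 master
192.168.32.12 node1
192.168.32.13 node2
EOF
}
```

Run add_host_entries /etc/hosts on each node. Running it a second time leaves the file unchanged.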

3. Specify installation version

(1) Operations on the master node

[root@master ~]# yum install -y kubelet-1.15.0 kubeadm-1.15.0 kubectl-1.15.0 [root@master ~]# rpm -qa | grep kube

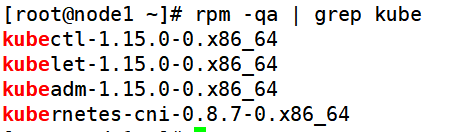

(2) Operation on node1 node

[root@node1 ~]# yum install -y kubelet-1.15.0 kubeadm-1.15.0 kubectl-1.15.0 [root@node1 ~]# rpm -qa | grep kube

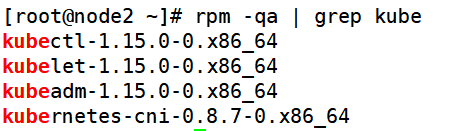

(3) Operation on node2 node

[root@node2 ~]# yum install -y kubelet-1.15.0 kubeadm-1.15.0 kubectl-1.15.0 [root@node2 ~]# rpm -qa | grep kube
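All three nodes should end up with the same release. The sketch below keeps the version in one variable and checks the installed packages afterwards. K8S_VERSION, kube_pkgs and check_kube_versions are hypothetical names. The check assumes the usual name-version-release.arch format of rpm -qa output.

```shell
#!/bin/sh
# Sketch: keep the Kubernetes version in one place so every node installs
# exactly the same kubelet/kubeadm/kubectl release.
K8S_VERSION=${K8S_VERSION:-1.15.0}

kube_pkgs() {
    # Prints "kubelet-X kubeadm-X kubectl-X" for version $1
    printf 'kubelet-%s kubeadm-%s kubectl-%s\n' "$1" "$1" "$1"
}

check_kube_versions() {
    # Reads `rpm -qa` output on stdin; returns 1 if any of the three
    # packages is missing or installed at a different version than $1.
    pkgs=$(cat)
    for p in kubelet kubeadm kubectl; do
        if ! printf '%s\n' "$pkgs" | grep -q "^$p-$1-"; then
            echo "wrong or missing: $p" >&2
            return 1
        fi
    done
}
```

To install the pinned packages, run yum install -y $(kube_pkgs "$K8S_VERSION"). To verify them, run rpm -qa | check_kube_versions "$K8S_VERSION", which returns non-zero if any of the three is missing or at another version.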

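4. Enable kubelet at boot

This step only enables kubelet; it does not start it. Until kubeadm init (on the master) or kubeadm join (on the nodes) writes its configuration, kubelet restarts every few seconds. This is expected.

(1) Operations on the master node

[root@master ~]# systemctl enable kubelet.service

(2) Operations on node1

[root@node1 ~]# systemctl enable kubelet.service

(3) Operations on node2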

[root@node2 ~]# systemctl enable kubelet.service

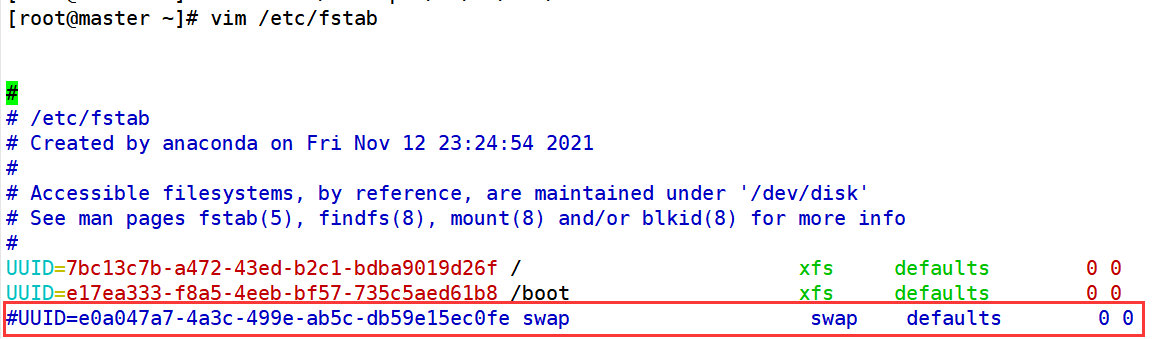

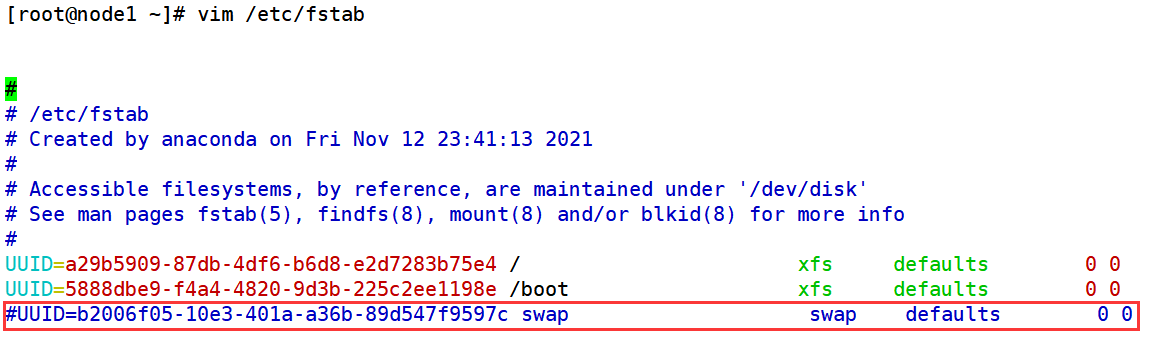

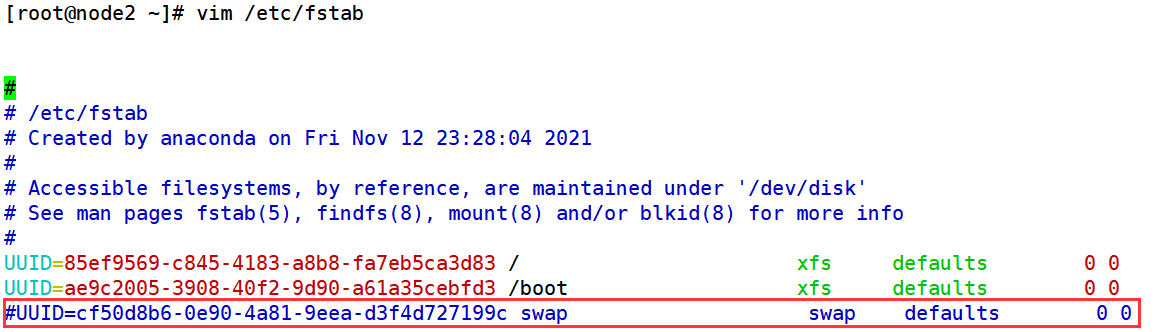

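5. Disable swap

By default (--fail-swap-on=true), kubelet refuses to start while swap is enabled. Turn swap off on every node, both now and across reboots.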

(1) Operations on the master node

① Disable immediately (until the next reboot)

[root@master ~]# swapoff -a

② Disable permanently (comment out the swap entry in /etc/fstab)

[root@master ~]# sed -i 's/.*swap.*/#&/' /etc/fstab

(2) Operations on node1

① Disable immediately (until the next reboot)

[root@node1 ~]# swapoff -a

② Disable permanently (comment out the swap entry in /etc/fstab)

[root@node1 ~]# sed -i 's/.*swap.*/#&/' /etc/fstab

(3) Operations on node2

① Disable immediately (until the next reboot)

[root@node2 ~]# swapoff -a

② Disable permanently (comment out the swap entry in /etc/fstab)

[root@node2 ~]# sed -i 's/.*swap.*/#&/' /etc/fstab

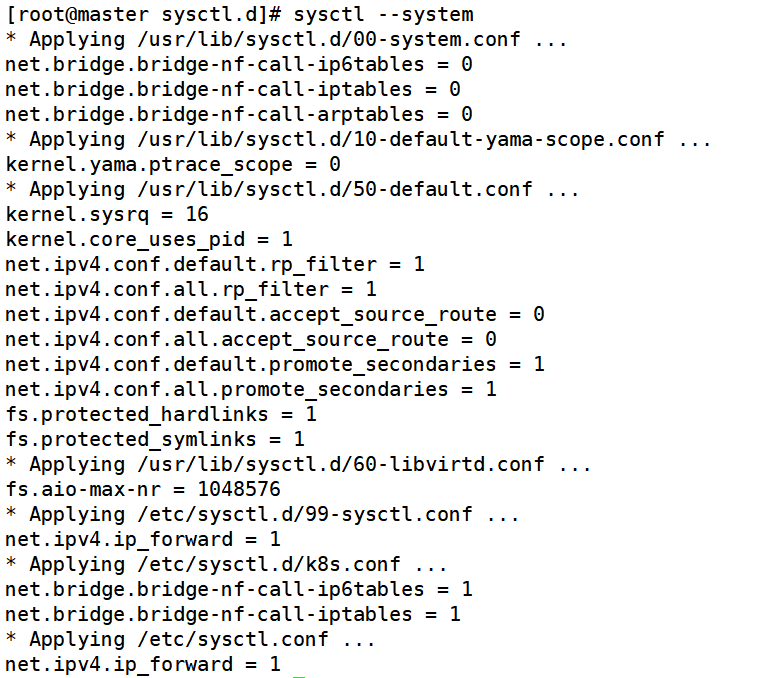

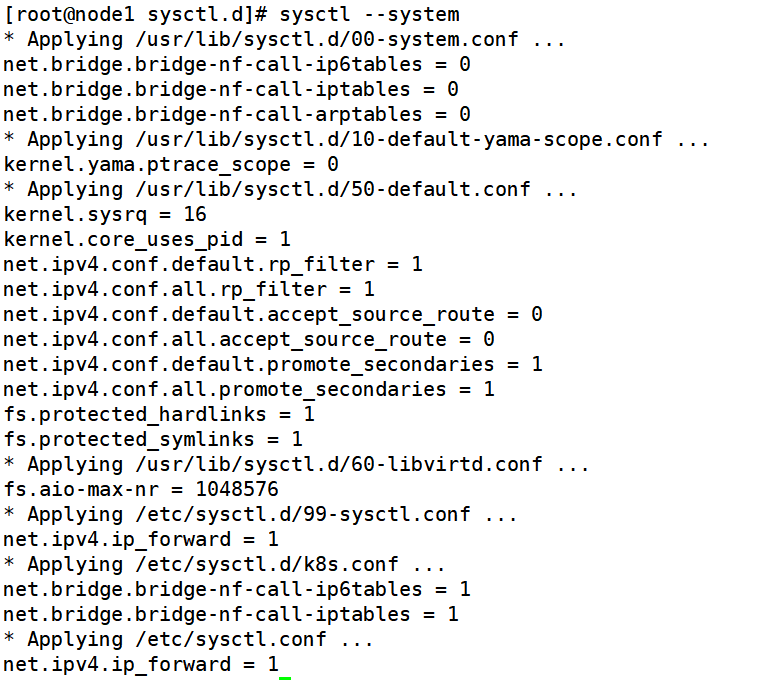

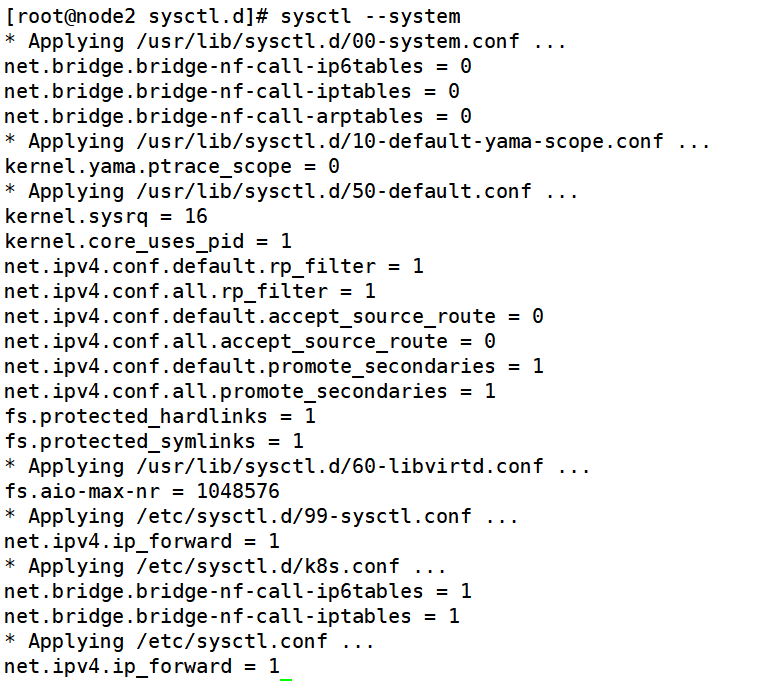
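The sed expression above has two drawbacks. It comments out every line containing the word swap, including lines that are already comments, and it adds another # each time it is re-run. A narrower alternative, sketched with awk rather than sed, touches only active entries whose filesystem type (third field) is swap. comment_swap_entries is a hypothetical name.

```shell
#!/bin/sh
# Sketch: comment out only active fstab entries of type swap.
# Already-commented lines are left alone, so running it twice is safe.
comment_swap_entries() {
    # $1: fstab path (normally /etc/fstab), edited in place
    tmpf=$(mktemp) || return 1
    # Prefix "#" to uncommented lines whose 3rd field (fs type) is "swap"
    awk '!/^[ \t]*#/ && $3 == "swap" { print "#" $0; next } { print }' "$1" > "$tmpf" &&
        cat "$tmpf" > "$1"   # cat keeps the original file's owner and mode
    rc=$?
    rm -f "$tmpf"
    return $rc
}
```

After running comment_swap_entries /etc/fstab and swapoff -a, swapon --summary should print nothing, and free -m should show 0 total swap.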

6. Pass bridged traffic to the iptables chains

(1) Operations on the master node

[root@master ~]# cd /etc/sysctl.d/
[root@master sysctl.d]# vim k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
[root@master sysctl.d]# sysctl --system

(2) Operations on node1

[root@node1 ~]# cd /etc/sysctl.d/
[root@node1 sysctl.d]# vim k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
[root@node1 sysctl.d]# sysctl --system

(3) Operations on node2

[root@node2 ~]# cd /etc/sysctl.d/
[root@node2 sysctl.d]# vim k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
[root@node2 sysctl.d]# sysctl --system

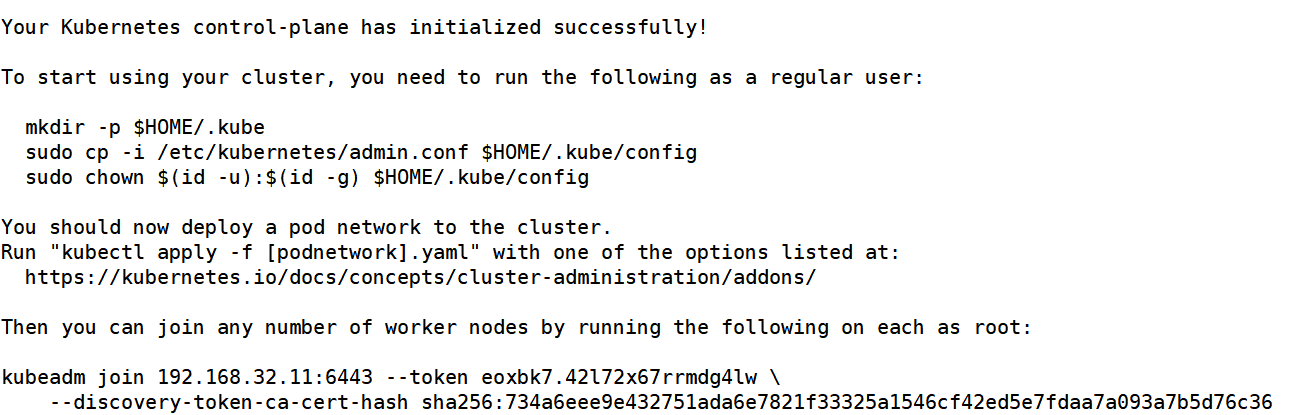

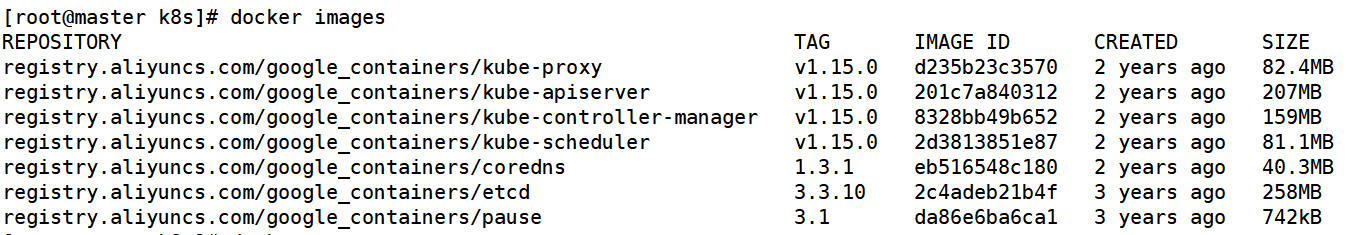
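On CentOS 7 the net.bridge.* keys exist only while the br_netfilter kernel module is loaded. On a fresh node, sysctl --system may therefore report them as missing. The sketch below writes the same k8s.conf. A separate function loads the module now and at boot through /etc/modules-load.d. Both function names are hypothetical.

```shell
#!/bin/sh
# Sketch: write the bridge sysctl drop-in and load br_netfilter.

write_k8s_sysctl() {
    # $1: sysctl drop-in directory (normally /etc/sysctl.d)
    mkdir -p "$1"
    cat > "$1/k8s.conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
}

load_br_netfilter() {
    # Requires root. Loads the module now; systemd-modules-load reads
    # /etc/modules-load.d/*.conf at boot to load it again.
    modprobe br_netfilter &&
        echo br_netfilter > /etc/modules-load.d/k8s.conf
}
```

As root: load_br_netfilter && write_k8s_sysctl /etc/sysctl.d && sysctl --system.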

7. Initialize the cluster (on the master node only)

[root@master ~]# mkdir k8s
[root@master ~]# cd k8s/
[root@master k8s]# kubeadm init \
  --apiserver-advertise-address=192.168.32.11 \
  --image-repository registry.aliyuncs.com/google_containers \
  --kubernetes-version v1.15.0 \
  --service-cidr=10.1.0.0/16 \
  --pod-network-cidr=10.244.0.0/16
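You can also keep the kubeadm init flags in a configuration file, which is easier to review and reuse. This sketch assumes kubeadm 1.15 and its kubeadm.k8s.io/v1beta2 configuration API. The file name and write_kubeadm_config are hypothetical.

```shell
#!/bin/sh
# Sketch: the init flags above expressed as a kubeadm v1beta2 config file.
write_kubeadm_config() {
    # $1: output file, e.g. kubeadm-config.yaml
    cat > "$1" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.32.11
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.15.0
imageRepository: registry.aliyuncs.com/google_containers
networking:
  serviceSubnet: 10.1.0.0/16
  podSubnet: 10.244.0.0/16
EOF
}
```

To use it:

1. Run write_kubeadm_config kubeadm-config.yaml.
2. Run kubeadm config images pull --config kubeadm-config.yaml to fetch the control-plane images ahead of time.
3. Run kubeadm init --config kubeadm-config.yaml instead of the long flag list.

kubeadm rejects --config combined with most of the flags above, so use one form or the other.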

8. Set up kubectl for the current user

[root@master k8s]# mkdir -p $HOME/.kube
[root@master k8s]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
[root@master k8s]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

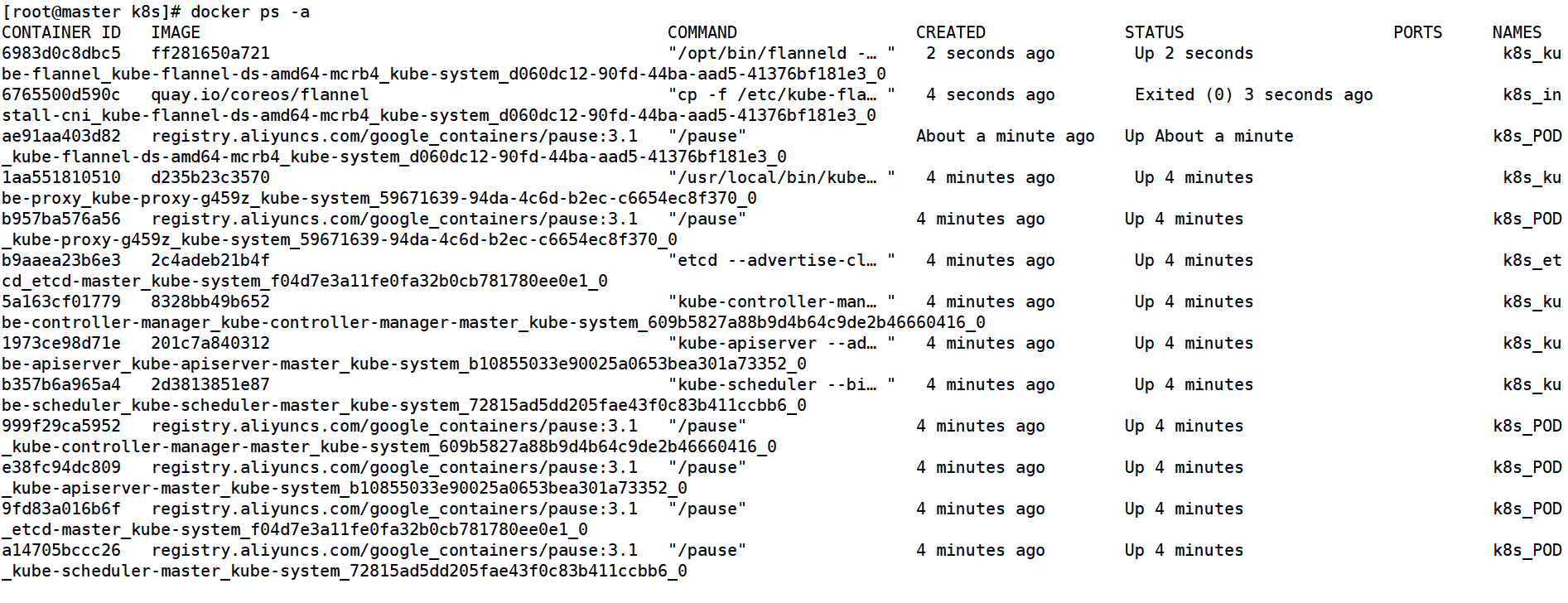

9. Install the pod network add-on (flannel)

[root@master k8s]# kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/a70459be0084506e4ec919aa1c114638878db11b/Documentation/kube-flannel.yml
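The --pod-network-cidr given to kubeadm init has to match the Network value in the net-conf.json section of kube-flannel.yml. That value defaults to 10.244.0.0/16. The sketch below checks the match from the manifest text. flannel_network and check_pod_cidr are hypothetical names, and the sed pattern assumes the "Network": "..." layout used in the manifest.

```shell
#!/bin/sh
# Sketch: compare flannel's Network (from a manifest on stdin) with the
# pod CIDR that was passed to kubeadm init.

flannel_network() {
    # Prints the first "Network": "<cidr>" value found on stdin
    sed -n 's/.*"Network": *"\([^"]*\)".*/\1/p' | head -n 1
}

check_pod_cidr() {
    # $1: pod CIDR used with kubeadm init; manifest text on stdin
    net=$(flannel_network)
    if [ "$net" = "$1" ]; then
        echo "flannel Network matches pod CIDR $1"
    else
        echo "mismatch: flannel uses '$net', kubeadm used '$1'" >&2
        return 1
    fi
}
```

For example, pipe the manifest (curl -fsSL with the URL from step 9) into check_pod_cidr 10.244.0.0/16.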

10. Check component status and node status

[root@master k8s]# kubectl get cs
[root@master k8s]# kubectl get nodes

11. Pull the flannel image with Docker on the worker nodes

(1) Operations on node1

[root@node1 ~]# docker pull lizhenliang/flannel:v0.11.0-amd64

(2) Operations on node2

[root@node2 ~]# docker pull lizhenliang/flannel:v0.11.0-amd64

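12. Join the worker nodes

Run the kubeadm join command printed at the end of your own kubeadm init output. The token and CA certificate hash shown here belong to the author's cluster.

(1) Operations on node1

[root@node1 ~]# kubeadm join 192.168.32.11:6443 --token eoxbk7.42l72x67rrmdg4lw --discovery-token-ca-cert-hash sha256:734a6eee9e432751ada6e7821f33325a1546cf42ed5e7fdaa7a093a7b5d76c36

(2) Operations on node2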

[root@node2 ~]# kubeadm join 192.168.32.11:6443 --token eoxbk7.42l72x67rrmdg4lw --discovery-token-ca-cert-hash sha256:734a6eee9e432751ada6e7821f33325a1546cf42ed5e7fdaa7a093a7b5d76c36
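By default, the token printed by kubeadm init expires after 24 hours. On the master, kubeadm token create --print-join-command prints a fresh, complete join command. You can also recompute the CA hash from the cluster CA certificate with the openssl pipeline from the kubeadm documentation. The sketch below wraps that pipeline in a hypothetical ca_cert_hash function. It assumes an RSA CA key, which is what kubeadm generates.

```shell
#!/bin/sh
# Sketch: recompute the value for --discovery-token-ca-cert-hash
# (SHA-256 of the CA's DER-encoded public key).
ca_cert_hash() {
    # $1: CA certificate (normally /etc/kubernetes/pki/ca.crt on the master)
    openssl x509 -pubkey -noout -in "$1" |
        openssl rsa -pubin -outform der 2>/dev/null |
        openssl dgst -sha256 -hex |
        sed 's/^.* //'   # keep only the hex digest after the last space
}
```

On the master, ca_cert_hash /etc/kubernetes/pki/ca.crt should print the hex string that follows sha256: in the join command.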

13. On the master node, check that all three nodes are Ready

[root@master k8s]# kubectl get nodes

[root@master k8s]# kubectl get pods --all-namespaces
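Right after joining, nodes usually report NotReady for a short while, until the flannel pod on each of them is running. Instead of re-running kubectl get nodes by hand, the sketch below polls. all_nodes_ready and wait_for_nodes are hypothetical names, and the parser assumes the default column layout (NAME STATUS ROLES AGE VERSION).

```shell
#!/bin/sh
# Sketch: decide from `kubectl get nodes --no-headers` output (stdin)
# whether every node reports STATUS exactly "Ready".
all_nodes_ready() {
    awk 'NF { total++; if ($2 != "Ready") bad++ }
         END { if (total > 0 && bad == 0) exit 0; exit 1 }'
}

wait_for_nodes() {
    # Polls every 10 s, up to $1 attempts (default 30); needs kubectl access
    tries=${1:-30}
    while [ "$tries" -gt 0 ]; do
        kubectl get nodes --no-headers 2>/dev/null | all_nodes_ready && return 0
        tries=$((tries - 1))
        sleep 10
    done
    return 1
}
```

wait_for_nodes 30 returns 0 once every node reports Ready, or 1 after roughly five minutes.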

14. Label the worker nodes

[root@master k8s]# kubectl label node node1 node-role.kubernetes.io/node=node
node/node1 labeled
[root@master k8s]# kubectl label node node2 node-role.kubernetes.io/node=node
node/node2 labeled

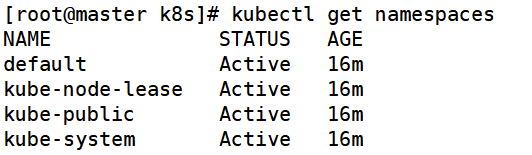
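The label added above is what fills the ROLES column of kubectl get nodes. kubectl takes the suffix of every label key that starts with node-role.kubernetes.io/ and ignores the value. The sketch below imitates that rule on a --show-labels string. roles_from_labels is a hypothetical name. kubectl additionally honours a kubernetes.io/role label, which this sketch ignores.

```shell
#!/bin/sh
# Sketch of the ROLES rule: each label key node-role.kubernetes.io/<role>
# contributes <role>; roles are de-duplicated, sorted, and comma-joined.
roles_from_labels() {
    # $1: comma-separated labels, as printed by `kubectl get node NAME --show-labels`
    printf '%s\n' "$1" | tr ',' '\n' |
        sed -n 's|^node-role\.kubernetes\.io/\([^=][^=]*\).*|\1|p' |
        sort -u |
        paste -sd, -
}
```

Because the value is ignored, kubectl label node node1 node-role.kubernetes.io/node= (with an empty value) would produce the same ROLES entry.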

15. View namespaces

[root@master k8s]# kubectl get namespaces

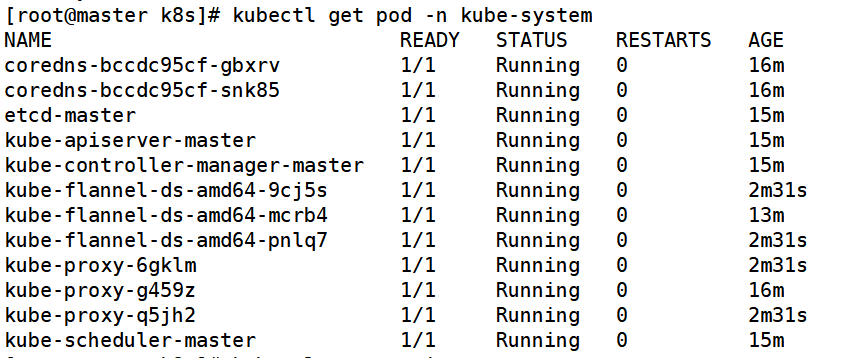

16. View the pods in the kube-system namespace

[root@master k8s]# kubectl get pod -n kube-system

17. View services (default namespace)

[root@master k8s]# kubectl get service