WeChat Official Account: Operations and Maintenance Development Story, Author: double winter

1. Overview

Start with two important Pod parameters: CPU Request and Memory Request. In most cases we do not set these two parameters when defining a Pod, so Kubernetes assumes the Pod needs very few resources and can be scheduled onto any available Node. As a result, if the cluster is short on computing resources, a sudden increase in Pod load can leave a Node seriously short of resources.

To keep the system from hanging, the Node "cleans up" (evicts) some Pods to free resources, and at that point any Pod may become a victim. However, some Pods carry more important responsibilities than others, such as those handling data storage, login, or balance queries. Even when system resources are severely insufficient, these Pods must be kept alive. The core of this protection mechanism in Kubernetes is as follows.

- Use resource quotas to ensure that each Pod can occupy only the resources specified for it

- Allow cluster resources to be overallocated to improve cluster resource utilization

- Classify Pods so that different levels of Pods receive different quality of service (QoS). When resources are insufficient, lower-level Pods are cleaned up to keep higher-level Pods running stably

Nodes in a Kubernetes cluster provide computing resources: measurable basic resources that can be requested, allocated, and consumed, as distinct from API resources such as Pods and Services. Currently, the computing resources in a Kubernetes cluster are mainly CPU, GPU, and Memory. Most general-purpose applications do not use GPUs, so this article focuses on managing CPU and Memory.

CPU and Memory are consumed by Pods, so when configuring a Pod you can specify the amount of CPU and Memory each container needs through the CPU Request and Memory Request parameters. Kubernetes uses the Request values to find a Node with sufficient resources for the Pod; if no such Node exists, scheduling fails.
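The Request-based fit check described above can be sketched roughly as follows. This is a simplified illustration, not the real scheduler, which applies many more filters and scoring steps; the `Node` class, its field names, and the sample figures are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    allocatable_cpu_m: int      # millicores the Node can hand out
    allocatable_mem_mi: int     # MiB the Node can hand out
    requested_cpu_m: int = 0    # sum of Requests of Pods already placed
    requested_mem_mi: int = 0


def fits(node: Node, cpu_m: int, mem_mi: int) -> bool:
    """A Pod fits when its Requests do not push the Node past its allocatable amount."""
    return (node.requested_cpu_m + cpu_m <= node.allocatable_cpu_m
            and node.requested_mem_mi + mem_mi <= node.allocatable_mem_mi)


def candidate_nodes(nodes: list, cpu_m: int, mem_mi: int) -> list:
    """Names of the Nodes that could host the Pod; an empty list means scheduling fails."""
    return [n.name for n in nodes if fits(n, cpu_m, mem_mi)]


# Hypothetical cluster state.
nodes = [
    Node("node-a", 4000, 8192, requested_cpu_m=3800, requested_mem_mi=4096),
    Node("node-b", 4000, 8192, requested_cpu_m=1000, requested_mem_mi=7900),
    Node("node-c", 4000, 8192, requested_cpu_m=1000, requested_mem_mi=2048),
]
print(candidate_nodes(nodes, 500, 1024))
print(candidate_nodes(nodes, 5000, 1024))
```

Note that the check looks only at Requests already reserved on the Node, not at what the running Pods actually consume.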

2. Pod Resource Usage Specification

The CPU and Memory a Pod uses are dynamic quantities, more precisely ranges, and are closely tied to its load: as load increases, so does CPU and Memory usage. The most accurate description is therefore something like: a process uses 0.1 to 1 CPU, and its memory footprint is 500 MB to 1 GB. Correspondingly, Kubernetes provides two settings for CPU and Memory on a Pod's containers:

- Requests: the resources the workload needs for normal operation; these are reserved for it

- Limits: the maximum amount of resources the workload may use. This is only an upper bound; it does not guarantee that this amount is actually available

Of the two resources, CPU is a compressible resource that is scheduled in time slices, while Memory is an incompressible, hard-constrained resource. The Limit is the upper bound on how much of a resource a container may use. Because CPU is compressible, a process that reaches its CPU Limit is throttled and can never exceed it, so the CPU Limit is relatively easy to set. The Limit for Memory, an incompressible resource, is harder. If it is set too small, the process will be killed when it tries to request memory beyond the Limit during a busy period. Memory Request and Limit values therefore need to be set carefully, in line with the actual needs of the process.

What happens if you do not set a Limit for CPU or Memory? In that case the Pod's resource usage has an elastic range, and we no longer have to rack our brains over reasonable values for the two Limits. But a problem arises. Consider the following example:

Pod A's Memory Request is set to 1 GB and Node A has 1.2 GB of free Memory, which satisfies Pod A's requirement, so Pod A is scheduled onto Node A. After running for three days, Pod A sees a sharp rise in requests and needs 1.5 GB of memory, but Node A has only 200 MB of memory left. Because the additional memory Pod A needs exceeds what the system can provide, Pod A will be killed by Kubernetes.
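The arithmetic behind the Pod A example can be worked through in a few lines. This is only an illustrative sketch: the function names are made up, and 1 GB is treated as 1000 MB to match the rounding used in the example.

```python
# Figures from the Pod A example, in MB (1 GB treated as 1000 MB).
NODE_FREE_MB = 1200
POD_REQUEST_MB = 1000
POD_PEAK_MB = 1500


def can_schedule(free_mb: int, request_mb: int) -> bool:
    """Scheduling only compares the Request with the Node's free memory."""
    return request_mb <= free_mb


def burst_shortfall_mb(free_mb: int, request_mb: int, peak_mb: int) -> int:
    """Memory the Pod needs at peak beyond what the Node can still supply."""
    left_after_scheduling = free_mb - request_mb   # 200 MB in the example
    extra_needed = max(peak_mb - request_mb, 0)    # 500 MB in the example
    return max(extra_needed - left_after_scheduling, 0)


print(can_schedule(NODE_FREE_MB, POD_REQUEST_MB))
print(burst_shortfall_mb(NODE_FREE_MB, POD_REQUEST_MB, POD_PEAK_MB))
```

The Pod passes the scheduling check, yet at peak it is 300 MB short, which is exactly the situation a missing Memory Limit cannot prevent.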

A Pod with no Limits, or with only one of the CPU Limit and Memory Limit set, looks elastic on the surface. In fact, compared with a Pod that has all four parameters set (CPU Request, CPU Limit, Memory Request, Memory Limit), it is in a relatively unstable state, and it is only slightly more stable than a Pod with none of the four set. Keeping this in mind makes the Resource QoS issue easy to understand.
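Kubernetes derives a QoS class (Guaranteed, Burstable, or BestEffort) from how these parameters are set. The following is a minimal sketch of that classification for a single-container Pod. The function name is hypothetical, and quantities are compared as plain strings, which is a simplification (for example, "500m" and "0.5" would be treated as different).

```python
def qos_class(requests: dict, limits: dict) -> str:
    """Classify a single-container Pod from its requests and limits."""
    if not requests and not limits:
        return "BestEffort"          # nothing set at all
    # When a limit is set without a request, the request defaults to the limit.
    effective_requests = {**limits, **requests}
    guaranteed = all(
        resource in limits and effective_requests[resource] == limits[resource]
        for resource in ("cpu", "memory")
    )
    return "Guaranteed" if guaranteed else "Burstable"


print(qos_class({}, {}))
print(qos_class({"memory": "1Gi"}, {}))
print(qos_class({"cpu": "500m", "memory": "1Gi"},
                {"cpu": "500m", "memory": "1Gi"}))
```

When a Node runs short of resources, BestEffort Pods are the first candidates for cleanup and Guaranteed Pods the last, which is why setting all four parameters gives the most stable result.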

If we have hundreds or thousands of different Pods, we would first have to set these four parameters manually for each Pod and then check that every value is reasonable. For example, no Pod should use more than 2 GB of memory or two CPU cores. Finally, we would have to check by hand whether the Pods' resource usage under each tenant (Namespace) exceeds its quota. To this end, Kubernetes provides two further objects, LimitRange and ResourceQuota: the former handles default values and legal ranges for the request and limit parameters, and the latter handles constraints on tenant resource quotas.
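As an illustration of what a LimitRange might look like, the sketch below builds a manifest as a Python dictionary and prints it as JSON. The object name, the namespace, and the default values are hypothetical; only the 2-core and 2 GB maximums come from the example in the text (expressed here as `2` and `2Gi`).

```python
import json


def limit_range_manifest(name: str, namespace: str) -> dict:
    """Build a LimitRange that sets per-container defaults and maximums."""
    return {
        "apiVersion": "v1",
        "kind": "LimitRange",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "limits": [
                {
                    "type": "Container",
                    # Applied when a container sets no request (hypothetical values).
                    "defaultRequest": {"cpu": "250m", "memory": "500Mi"},
                    # Applied when a container sets no limit (hypothetical values).
                    "default": {"cpu": "300m", "memory": "600Mi"},
                    # Upper bound: no container above two cores or 2Gi of memory.
                    "max": {"cpu": "2", "memory": "2Gi"},
                }
            ]
        },
    }


print(json.dumps(limit_range_manifest("default-limits", "demo"), indent=2))
```

Because `kubectl apply -f` accepts JSON as well as YAML, the printed output could be saved to a file and applied as-is.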

- CPU rules are as follows:

Unit: m (millicores). 10m = 0.01 core, 1 core = 1000m

Requests: an estimate based on the workload's actual usage

Limits = Requests * 20% + Requests

- Memory rules are as follows:

Unit: Mi. 1024Mi = 1Gi of memory

Requests: an estimate based on the workload's actual usage

Limits = Requests * 20% + Requests
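The unit conversions and the 20% rule above can be sketched as follows. The helper names are hypothetical, only the `m`, `Mi`, and `Gi` suffixes are handled, and rounding the Limit up to a whole unit is an assumption rather than something the rule specifies.

```python
def cpu_to_millicores(quantity: str) -> int:
    """Convert a CPU quantity such as '250m' or '0.25' to millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return round(float(quantity) * 1000)  # 1 core = 1000m


def memory_to_mi(quantity: str) -> int:
    """Convert a memory quantity in Mi or Gi to Mi (other suffixes not handled)."""
    if quantity.endswith("Gi"):
        return int(quantity[:-2]) * 1024  # 1Gi = 1024Mi
    if quantity.endswith("Mi"):
        return int(quantity[:-2])
    raise ValueError(f"unsupported memory quantity: {quantity}")


def limit_from_request(request: int, headroom_percent: int = 20) -> int:
    """Limits = Requests * 20% + Requests, with the extra part rounded up."""
    extra = (request * headroom_percent + 99) // 100
    return request + extra


cpu_request = cpu_to_millicores("0.25")
mem_request = memory_to_mi("1Gi")
print(f"cpu: request={cpu_request}m limit={limit_from_request(cpu_request)}m")
print(f"memory: request={mem_request}Mi limit={limit_from_request(mem_request)}Mi")
```

Integer arithmetic is used for the 20% step so that the result does not depend on floating-point rounding.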

3. Namespace Resource Management Specification

The workloads' actual Requests and Limits should not exceed 80% of the namespace total, so that rolling updates still have enough resources to create new Pods
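The reason for the 80% ceiling is that a rolling update creates new Pods before the old ones are removed, so the namespace briefly needs more than its steady-state usage. A rough sketch of both checks, with hypothetical function names and sample figures in millicores:

```python
def within_budget(used: int, hard: int, budget_percent: int = 80) -> bool:
    """True when usage stays at or below the given share of the namespace total."""
    return used * 100 <= hard * budget_percent


def rollout_fits(used: int, hard: int, per_pod: int, surge_pods: int = 1) -> bool:
    """True when the extra Pods created during a rolling update still fit the total."""
    return used + per_pod * surge_pods <= hard


# Hypothetical namespace: 10000m CPU in total, 500m per Pod, 2 surge Pods.
for used in (8000, 9800):
    print(used, within_budget(used, 10000), rollout_fits(used, 10000, 500, 2))
```

With usage at 8000m the update has room to proceed; at 9800m the surge Pods would be rejected and the rollout would stall.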

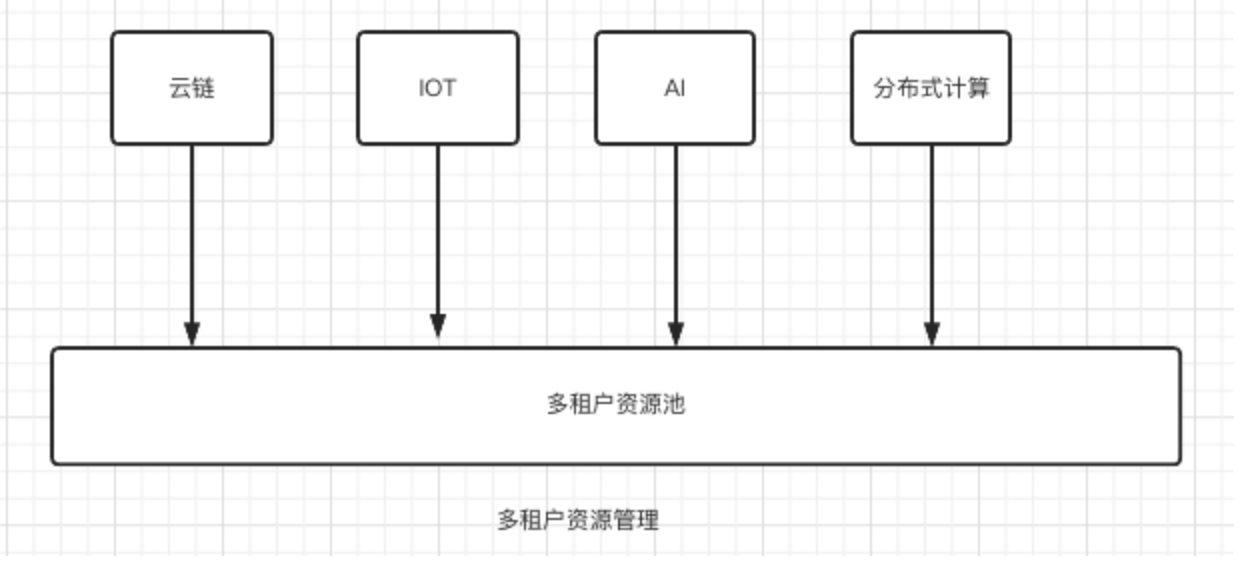

3.1 Multi-tenant Resource Utilization Policy

Use ResourceQuota to limit the resource usage of each project group
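As an illustration, the sketch below builds a ResourceQuota manifest as a Python dictionary and prints it as JSON. The name, namespace, and hard values are taken from the `mem-cpu-demo` quota shown in section 4.3; the helper function itself is hypothetical.

```python
import json


def resource_quota_manifest(name: str, namespace: str, hard: dict) -> dict:
    """Build a ResourceQuota that caps total requests and limits in a namespace."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {"hard": hard},
    }


quota = resource_quota_manifest(
    "mem-cpu-demo",
    "cloudchain--staging",
    {
        "requests.cpu": "250m",
        "requests.memory": "250Mi",
        "limits.cpu": "500m",
        "limits.memory": "500Mi",
    },
)
print(json.dumps(quota, indent=2))
```

Once such a quota covers CPU and memory in a namespace, Pods created there must declare the corresponding requests and limits, or have them filled in by a LimitRange, otherwise they are rejected.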

3.2 Resource Consumption Change Process

4. Resource Monitoring and Inspection

4.1 Resource Use Monitoring

- Namespace Requests Resource Usage

sum (kube_resourcequota{type="used",resource="requests.cpu"}) by (resource,namespace) / sum (kube_resourcequota{type="hard",resource="requests.cpu"}) by (resource,namespace) * 100

sum (kube_resourcequota{type="used",resource="requests.memory"}) by (resource,namespace) / sum (kube_resourcequota{type="hard",resource="requests.memory"}) by (resource,namespace) * 100

- Namespace Limit Resource Usage

sum (kube_resourcequota{type="used",resource="limits.cpu"}) by (resource,namespace) / sum (kube_resourcequota{type="hard",resource="limits.cpu"}) by (resource,namespace) * 100

sum (kube_resourcequota{type="used",resource="limits.memory"}) by (resource,namespace) / sum (kube_resourcequota{type="hard",resource="limits.memory"}) by (resource,namespace) * 100

4.2 View through Grafana

- CPU Request Rate

sum (kube_resourcequota{type="used",resource="requests.cpu",namespace=~"$NameSpace"}) by (resource,namespace) / sum (kube_resourcequota{type="hard",resource="requests.cpu",namespace=~"$NameSpace"}) by (resource,namespace)

- Memory Request Rate

sum (kube_resourcequota{type="used",resource="requests.memory",namespace=~"$NameSpace"}) by (resource,namespace) / sum (kube_resourcequota{type="hard",resource="requests.memory",namespace=~"$NameSpace"}) by (resource,namespace)

- CPU Limit Rate

sum (kube_resourcequota{type="used",resource="limits.cpu",namespace=~"$NameSpace"}) by (resource,namespace) / sum (kube_resourcequota{type="hard",resource="limits.cpu",namespace=~"$NameSpace"}) by (resource,namespace)

- Memory Limit Rate

sum (kube_resourcequota{type="used",resource="limits.memory",namespace=~"$NameSpace"}) by (resource,namespace) / sum (kube_resourcequota{type="hard",resource="limits.memory",namespace=~"$NameSpace"}) by (resource,namespace)

4.3 Viewing resource usage within a cluster

- View resource usage

    [root@k8s-dev-slave04 yaml]# kubectl describe resourcequotas -n cloudchain--staging
    Name:            mem-cpu-demo
    Namespace:       cloudchain--staging
    Resource         Used   Hard
    --------         ----   ----
    limits.cpu       200m   500m
    limits.memory    200Mi  500Mi
    requests.cpu     150m   250m
    requests.memory  150Mi  250Mi
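The same used/hard ratio that the monitoring queries in section 4.1 compute can be checked by hand from this output. A small sketch, with the values copied from the table above and hypothetical helper names; stripping the unit suffix is only valid here because Used and Hard share the same unit for each resource.

```python
# (Used, Hard) pairs copied from the kubectl describe output.
QUOTA = {
    "limits.cpu": ("200m", "500m"),
    "limits.memory": ("200Mi", "500Mi"),
    "requests.cpu": ("150m", "250m"),
    "requests.memory": ("150Mi", "250Mi"),
}


def to_number(quantity: str) -> int:
    """Strip a trailing 'm' or 'Mi' suffix and return the numeric part."""
    return int(quantity.rstrip("mMi"))


def usage_percent(quota: dict) -> dict:
    """Used / Hard * 100 for every resource in the quota."""
    return {
        resource: to_number(used) * 100 / to_number(hard)
        for resource, (used, hard) in quota.items()
    }


for resource, percent in usage_percent(QUOTA).items():
    print(f"{resource}: {percent:.0f}%")
```

For this namespace the Limits are at 40% and the Requests at 60% of the quota.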

- View events to determine whether the Pods were created properly

    [root@kevin ~]# kubectl get event -n default
    LAST SEEN   TYPE      REASON         OBJECT                          MESSAGE
    46m         Warning   FailedCreate   replicaset/hpatest-57965d8c84   Error creating: pods "hpatest-57965d8c84-s78x6" is forbidden: exceeded quota: mem-cpu-demo, requested: limits.cpu=400m,limits.memory=400Mi, used: limits.cpu=200m,limits.memory=200Mi, limited: limits.cpu=500m,limits.memory=500Mi
    29m         Warning   FailedCreate   replicaset/hpatest-57965d8c84   Error creating: pods "hpatest-57965d8c84-5w6lk" is forbidden: exceeded quota: mem-cpu-demo, requested: limits.cpu=400m,limits.memory=400Mi, used: limits.cpu=200m,limits.memory=200Mi, limited: limits.cpu=500m,limits.memory=500Mi
    13m         Warning   FailedCreate   replicaset/hpatest-57965d8c84   Error creating: pods "hpatest-57965d8c84-w2qvz" is forbidden: exceeded quota: mem-cpu-demo, requested: limits.cpu=400m,limits.memory=400Mi, used: limits.cpu=200m,limits.memory=200Mi, limited: limits.cpu=500m,limits.memory=500Mi
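Each of these events carries enough information to see why the Pod was rejected: used plus requested exceeds the quota. The sketch below extracts the three sections from one event message and computes the overshoot. The helper names are hypothetical, and the parsing assumes the message layout shown above, with the same unit on every value of a given resource.

```python
import re

MESSAGE = (
    'Error creating: pods "hpatest-57965d8c84-s78x6" is forbidden: '
    "exceeded quota: mem-cpu-demo, "
    "requested: limits.cpu=400m,limits.memory=400Mi, "
    "used: limits.cpu=200m,limits.memory=200Mi, "
    "limited: limits.cpu=500m,limits.memory=500Mi"
)


def parse_section(message: str, label: str) -> dict:
    """Return the resource=value pairs that follow '<label>: ' in the message."""
    match = re.search(label + r": ((?:[\w.]+=[\w.]+,?)+)", message)
    if match is None:
        raise ValueError(f"no '{label}' section in message")
    pairs = match.group(1).rstrip(",").split(",")
    return dict(pair.split("=") for pair in pairs)


def amount(quantity: str) -> int:
    """Leading integer of a quantity; the unit suffix is ignored."""
    return int(re.match(r"\d+", quantity).group())


def overshoot(message: str) -> dict:
    """How far used + requested would exceed the quota, per resource."""
    requested = parse_section(message, "requested")
    used = parse_section(message, "used")
    limited = parse_section(message, "limited")
    return {
        name: amount(used[name]) + amount(requested[name]) - amount(limited[name])
        for name in requested
    }


print(overshoot(MESSAGE))
```

Here 200m already used plus 400m requested comes to 600m against a 500m quota, so the new Pod is over by 100m of CPU and, by the same arithmetic, 100Mi of memory.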