I Introduction to ConfigMap

A ConfigMap stores configuration data as key-value pairs.

The ConfigMap resource provides a way to inject configuration data into Pods.

It decouples configuration from the container image, which keeps the image portable and reusable.

Typical usage scenarios:

- populating the values of environment variables
- setting command-line arguments inside the container
- populating configuration files in a volume

II Create ConfigMap

There are four ways to create a ConfigMap:

- from literal values
- from a file
- from a directory
- from a ConfigMap YAML manifest

1. Create from literal values

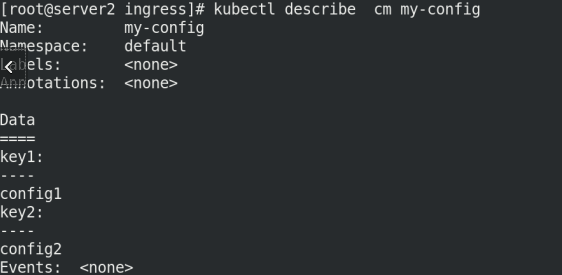

Create the ConfigMap and view its information:

```shell
kubectl create configmap my-config --from-literal=key1=config1 --from-literal=key2=config2
kubectl get cm
kubectl describe cm my-config
```
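To see what the two `--from-literal` flags produce, the sketch below prints an equivalent ConfigMap manifest by hand. It assumes simple values without double quotes or newlines, and the helper name `literal_configmap` is hypothetical; on a real cluster, `kubectl create configmap ... --dry-run=client -o yaml` is the authoritative preview.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a ConfigMap manifest equivalent to
#   kubectl create configmap NAME --from-literal=k1=v1 --from-literal=k2=v2 ...
# Assumes values contain no double quotes or newlines.
literal_configmap() {
  local name="$1"
  shift
  printf 'apiVersion: v1\nkind: ConfigMap\nmetadata:\n  name: %s\ndata:\n' "$name"
  local pair
  for pair in "$@"; do
    # The key is everything before the first '=', the value everything after it.
    printf '  %s: "%s"\n' "${pair%%=*}" "${pair#*=}"
  done
}

literal_configmap my-config key1=config1 key2=config2
```

Piping this output into `kubectl apply -f -` would create an object with the same data as the command above.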

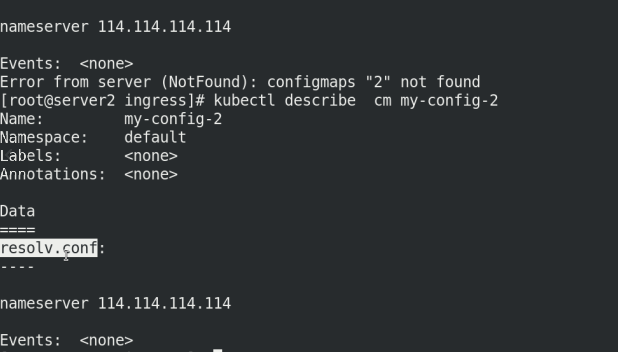

2. Create from a file

```shell
kubectl create configmap my-config-2 --from-file=/etc/resolv.conf
kubectl get cm
# NAME               DATA   AGE
# kube-root-ca.crt   1      5d18h
# my-config          2      98s
# my-config-2        1      13s
kubectl describe cm my-config-2
```

The key defaults to the file's base name, here resolv.conf.

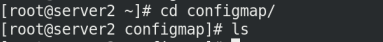

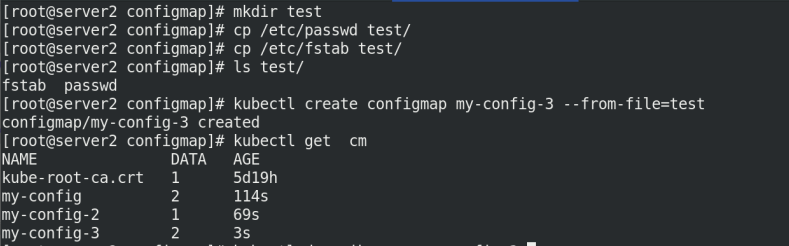

3. Create from a directory

Create a test directory and copy two files into it:

```shell
mkdir configmap
cd configmap/
ls
mkdir test
cp /etc/passwd test/
cp /etc/fstab test/
ls test/
kubectl create configmap my-config-3 --from-file=test
kubectl get cm
kubectl describe cm my-config-3
```
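When `--from-file` points at a directory, kubectl packages each regular file directly inside it as one key named after the file, and ignores other entries such as subdirectories and symlinks. The hypothetical helper below previews those key names locally; it assumes file names without newlines and does not check that each name is a valid ConfigMap key.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list the keys that
#   kubectl create configmap NAME --from-file=DIR
# would create: one per regular file directly inside DIR (no recursion).
preview_configmap_keys() {
  local dir="$1"
  # -type f matches regular files only, so subdirectories and symlinks are skipped.
  find "$dir" -mindepth 1 -maxdepth 1 -type f | sed 's#.*/##' | sort
}

# Rebuild a small stand-in for the test/ directory used above.
demo="$(mktemp -d)"
printf 'root:x:0:0:root:/root:/bin/bash\n' > "$demo/passwd"
printf '/dev/sda1 / xfs defaults 0 0\n' > "$demo/fstab"
mkdir "$demo/subdir"            # ignored: not a regular file
preview_configmap_keys "$demo"  # prints fstab and passwd, one per line
rm -rf "$demo"
```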

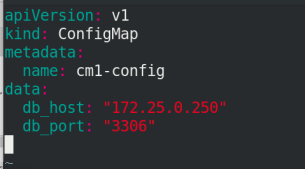

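4. Write a ConfigMap YAML manifest

Create cm1.yml (for example with `vim cm1.yml`):

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: cm1-config
data:
  db_host: "172.25.0.250"
  db_port: "3306"
```

ConfigMap data values must be strings, which is why the port number is quoted.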

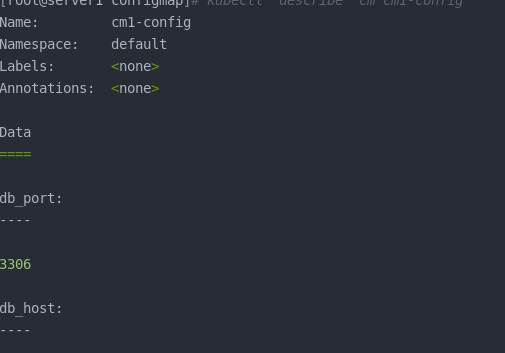

```shell
kubectl apply -f cm1.yml
kubectl get cm
kubectl describe cm cm1-config
```

III ConfigMap use

A ConfigMap can be used in three ways:

- passed directly to a Pod as environment variables
- used on the command line of the Pod's container
- mounted into the Pod as a volume

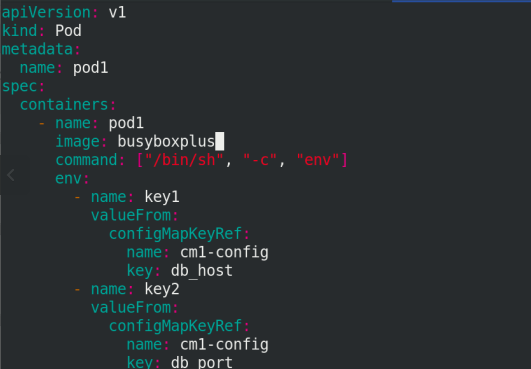

1. How to set environment variables

In the configmap/ directory, create pod.yaml:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod1
spec:
  containers:
    - name: pod1
      image: busyboxplus
      command: ["/bin/sh", "-c", "env"]
      env:
        - name: key1
          valueFrom:
            configMapKeyRef:
              name: cm1-config
              key: db_host
        - name: key2
          valueFrom:
            configMapKeyRef:
              name: cm1-config
              key: db_port
  restartPolicy: Never
```

Apply pod.yaml and view the Pod (pod1 can be deleted once you have looked at its output):

```shell
kubectl apply -f pod.yaml
kubectl get pod
```

The container only runs `env`, so it prints its environment and exits right after it starts; the Pod then shows the Completed status.

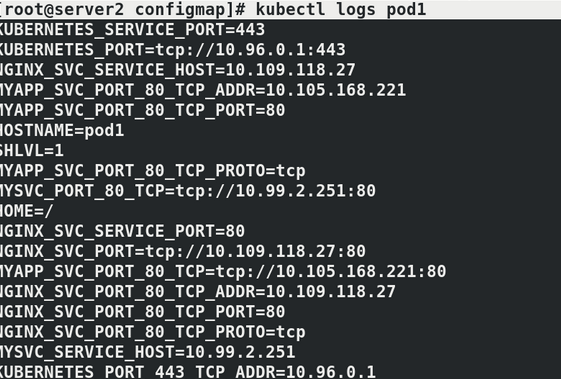

Because the container has already finished, view the result in the Pod log:

```shell
kubectl logs pod1
```

The output should include key1=172.25.0.250 and key2=3306.

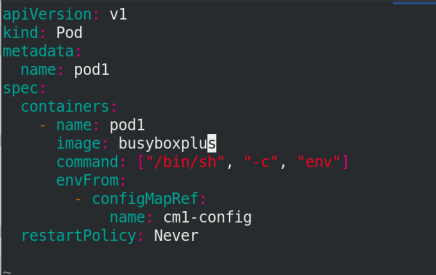

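Using the key names as variable names (envFrom)

With `envFrom`, every key of the ConfigMap becomes an environment variable with the same name, here db_host and db_port. Create pod2.yaml:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod2
spec:
  containers:
    - name: pod2
      image: busyboxplus
      command: ["/bin/sh", "-c", "env"]
      envFrom:
        - configMapRef:
            name: cm1-config
  restartPolicy: Never
```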

Apply pod2.yaml and view the result:

```shell
kubectl apply -f pod2.yaml
kubectl logs pod2
kubectl describe cm cm1-config
```

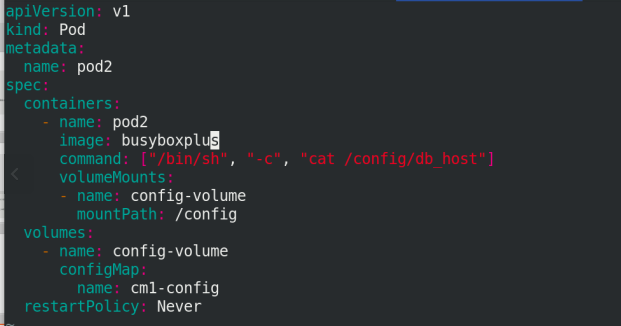

2. On the Pod's command line

Create pod3.yaml:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod3
spec:
  containers:
    - name: pod2
      image: nginx
      #command: ["/bin/sh", "-c", "cat /config/db_host"]
      volumeMounts:
        - name: config-volume
          mountPath: /config
  volumes:
    - name: config-volume
      configMap:
        name: cm1-config
```

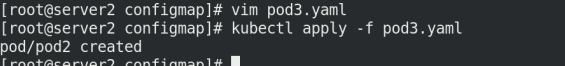

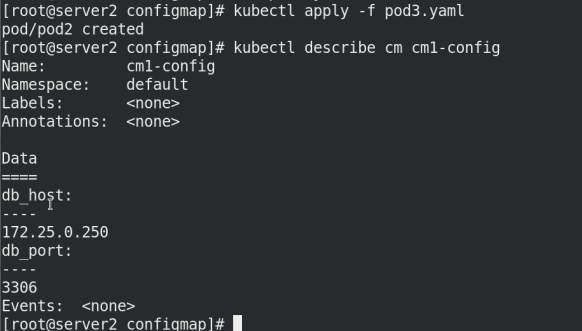

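For the first run, the `command` line must be active (uncommented) and `restartPolicy: Never` added under `spec`, so that the container prints /config/db_host and exits. Apply pod3.yaml and check the log:

```shell
kubectl apply -f pod3.yaml
kubectl get pod
kubectl logs pod3
```

Then comment out the command and the restart policy again, so that nginx runs normally:

```yaml
#command: ["/bin/sh", "-c", "cat /config/db_host"]
#restartPolicy: Never
```

Most fields of an existing Pod cannot be changed in place, so delete pod3 before applying pod3.yaml again:

```shell
kubectl delete pod pod3
kubectl apply -f pod3.yaml
kubectl describe cm cm1-config
```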
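With the `config-volume` mount, every key of cm1-config appears as a file under /config whose content is the value, which is why `cat /config/db_host` prints the address. The hypothetical helper below mimics that layout in a local directory for illustration only; the kubelet's real implementation uses symlinks and atomic updates that are not reproduced here.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write KEY=VALUE pairs as one file per key under DIR,
# roughly the layout a configMap volume shows inside the container.
materialize_configmap() {
  local dir="$1"
  shift
  mkdir -p "$dir"
  local pair
  for pair in "$@"; do
    # File name = key, file content = value (no trailing newline).
    printf '%s' "${pair#*=}" > "$dir/${pair%%=*}"
  done
}

config="$(mktemp -d)"
materialize_configmap "$config" db_host=172.25.0.250 db_port=3306
ls "$config"            # lists db_host and db_port
cat "$config/db_host"   # the value 172.25.0.250
rm -rf "$config"
```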

Delete cm from the previous experiment:

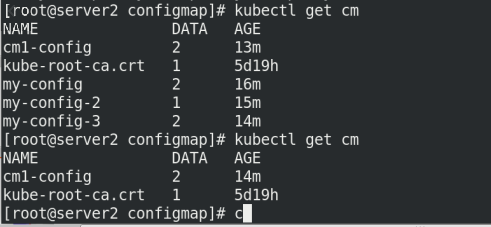

kubectl get cm kubectl delete cm my-config kubectl delete cm my-config-2 kubectl delete cm my-config-3 kubectl get cm

3. Mount into the Pod as a volume

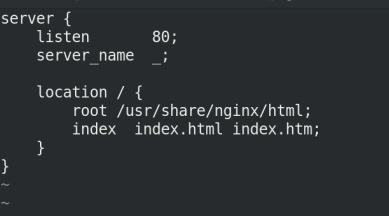

First write the nginx configuration file nginx.conf, then create the ConfigMap from it:

```nginx
server {
    listen 80;
    server_name _;

    location / {
        root /usr/share/nginx/html;
        index index.html index.htm;
    }
}
```

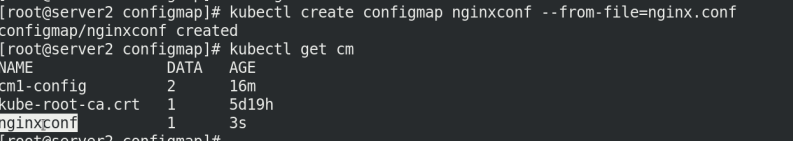

```shell
kubectl create configmap nginxconf --from-file=nginx.conf
kubectl get cm
```

View the information of nginxconf:

```shell
kubectl describe cm nginxconf
```

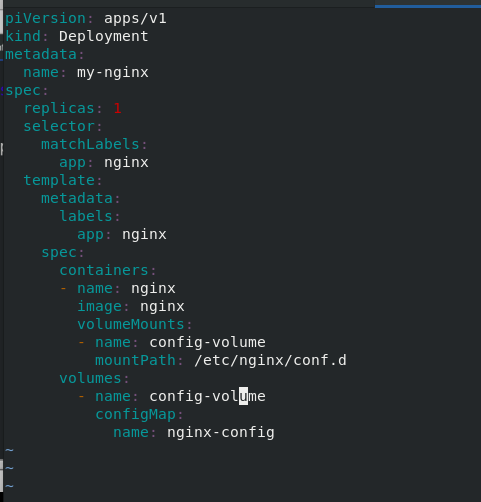

Write the manifest nginx.yaml:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx
          volumeMounts:
            - name: config-volume
              mountPath: /etc/nginx/conf.d
      volumes:
        - name: config-volume
          configMap:
            name: nginxconf
```

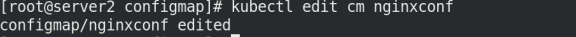

Edit nginxconf and change the listening port to 8080:

```shell
kubectl edit cm nginxconf    # change the directive to: listen 8080;
```
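`kubectl edit` opens an interactive editor. As a non-interactive alternative, one could change nginx.conf locally and regenerate the ConfigMap from it. The sketch below performs only the local edit with `sed` (the helper name `set_listen_port` is hypothetical); the commented kubectl pipeline assumes kubectl 1.18 or later for `--dry-run=client`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rewrite the "listen N;" directive in an nginx config file.
set_listen_port() {
  local file="$1" port="$2"
  # Replace the number after "listen" and keep the trailing semicolon.
  # -i.bak works with both GNU and BSD sed; the backup is removed afterwards.
  sed -i.bak -E "s/listen[[:space:]]+[0-9]+;/listen ${port};/" "$file" && rm -f "$file.bak"
}

# Example, run next to nginx.conf:
#   set_listen_port nginx.conf 8080
#   kubectl create configmap nginxconf --from-file=nginx.conf --dry-run=client -o yaml | kubectl apply -f -
```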

Apply nginx.yaml and view the Pod information:

```shell
kubectl apply -f nginx.yaml
kubectl get pod
kubectl get pod -o wide
```

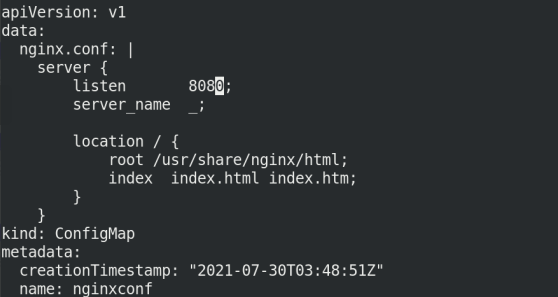

Access the Pod IP on port 8080:

```shell
curl 10.244.141.225:8080
```

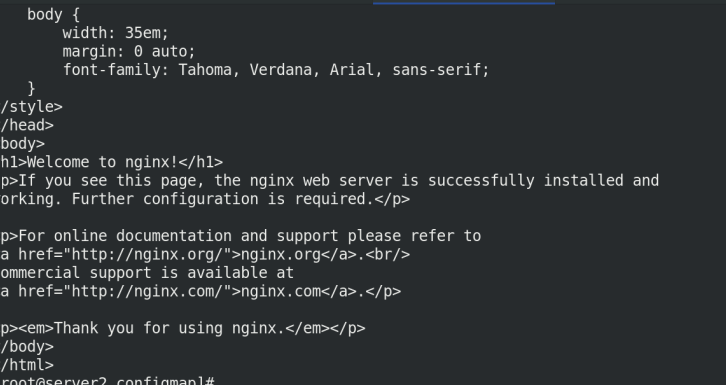

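Change the port to 8000 again:

```shell
kubectl edit cm nginxconf    # change the directive to: listen 8000;
```

Accessing port 8000 fails:

```shell
curl 10.244.141.225:8000
```

The connection is refused, even though the configuration file inside the container has already been updated.

Why can't port 8000 be reached?

nginx reads its configuration only when it starts, so the running process is still listening on 8080. The Pod replica has to be replaced for the new configuration to take effect.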

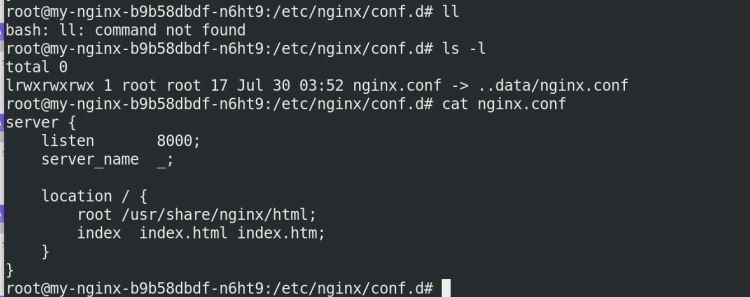

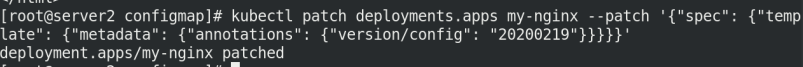

```shell
kubectl patch deployments.apps my-nginx --patch '{"spec": {"template": {"metadata": {"annotations": {"version/config": "20200219"}}}}}'
```
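The patch changes an annotation in the Pod template, which the Deployment treats as a template change and answers with a rolling update; the new Pod then reads the updated nginx.conf at startup. The sketch below builds the same patch from a version string so it can be reused for every config change (the helper name is hypothetical). On kubectl 1.15 and later, `kubectl rollout restart deployment my-nginx` has the same effect by setting a restart annotation on the Pod template itself.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a strategic-merge patch that stamps the Pod
# template with a config version, forcing the Deployment to roll out new Pods.
config_version_patch() {
  local version="$1"
  printf '{"spec": {"template": {"metadata": {"annotations": {"version/config": "%s"}}}}}' "$version"
}

# Example: use the current date and time as the version.
#   kubectl patch deployments.apps my-nginx --patch "$(config_version_patch "$(date +%Y%m%d%H%M%S)")"
config_version_patch 20200219
```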

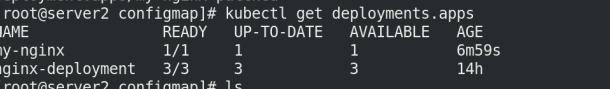

Check that the Deployment has rolled out a new replica:

```shell
kubectl get deployments.apps
```

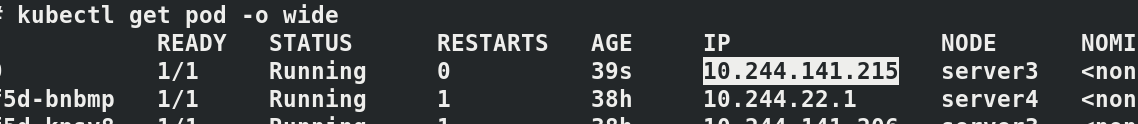

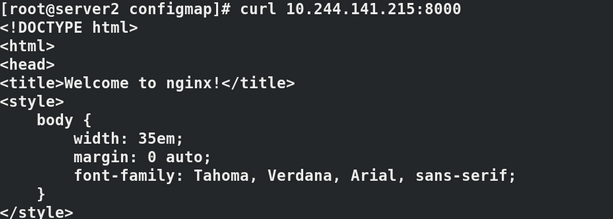

Running `curl 10.244.141.225:8000` still fails, because the new Pod has a new IP address. Look it up and access port 8000 on it:

```shell
kubectl get pod -o wide
curl *********:8000
```

IV Secret overview

The Secret object type is used to store sensitive information, such as passwords, OAuth tokens, and SSH keys.

Putting sensitive information in a Secret is safer and more flexible than writing it into a Pod definition or a container image.

A Pod can use a Secret in three ways:

- as files in a volume mounted into one or more of its containers;
- as container environment variables (shown in section V);
- by the kubelet, when it pulls images for the Pod.

Types of Secret:

- Service Account: Kubernetes automatically creates Secrets containing credentials for accessing the API, and automatically modifies Pods to use this type of Secret.
- Opaque: data is stored base64-encoded, and the original data can be recovered with `base64 --decode`, so the protection is weak.
- kubernetes.io/dockerconfigjson: stores authentication information for a Docker registry.

1. Service Account

When a ServiceAccount is created, Kubernetes creates a corresponding token Secret by default (in clusters before v1.24; from v1.24 these Secrets are no longer generated automatically). The credentials are mounted automatically into Pods under /var/run/secrets/kubernetes.io/serviceaccount.

```shell
kubectl run nginx --image=nginx
kubectl exec -it nginx -- bash
# inside the container:
cd /var/run/secrets/kubernetes.io/serviceaccount/
ls
# ca.crt  namespace  token
```

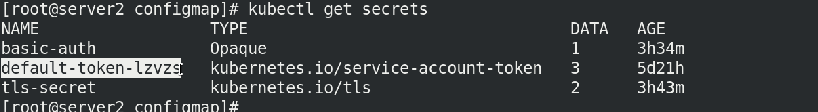

View the Secrets:

```shell
kubectl get secrets
```

View the details of the token Secret:

```shell
kubectl describe secret default-token-lzvzs
```

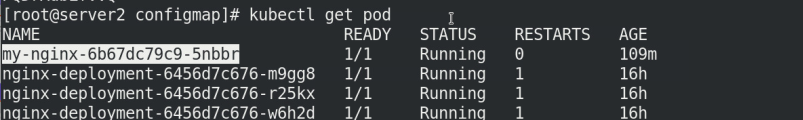

Back in the Pod's information, the ca.crt key also appears; compare it with the kube-root-ca.crt ConfigMap:

```shell
kubectl get pod
kubectl describe pod my-nginx-6b6*************
kubectl describe cm kube-root-ca.crt
```

2. Opaque Secret

The values of an Opaque Secret are base64-encoded.

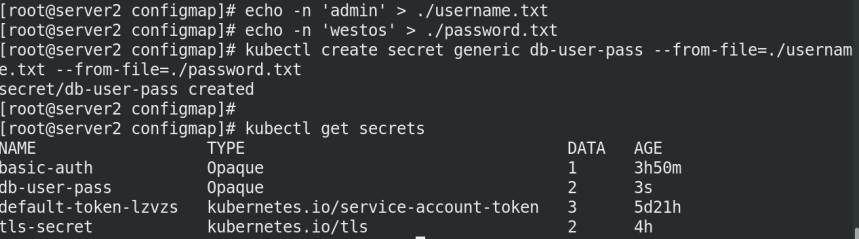

Create a Secret from files:

```shell
echo -n 'admin' > ./username.txt
echo -n 'westos' > ./password.txt
kubectl create secret generic db-user-pass --from-file=./username.txt --from-file=./password.txt
# secret/db-user-pass created
kubectl get secrets
```

View the db-user-pass information:

```shell
kubectl describe secrets db-user-pass
```

For security, `kubectl get` and `kubectl describe` do not display the secret values by default. To see them, print the Secret in YAML format, which shows the data base64-encoded:

```shell
kubectl get secrets db-user-pass -o yaml
```

The plaintext is recovered simply by decoding the base64 value; base64 is an encoding, not encryption:

```shell
echo YWRtaW4= | base64 -d
```
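The round trip below uses the values from this section, and also shows why the files above are written with `echo -n`: without it, the trailing newline is encoded and becomes part of the stored value. The helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Encode a value exactly as given (printf '%s' adds no trailing newline).
b64_encode() { printf '%s' "$1" | base64; }
# Decode a base64 string back to its original bytes.
b64_decode() { printf '%s' "$1" | base64 -d; }

b64_encode admin       # YWRtaW4=
b64_decode YWRtaW4=    # admin
echo admin | base64    # YWRtaW4K  (the encoded newline is now part of the value)
```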

V secret application

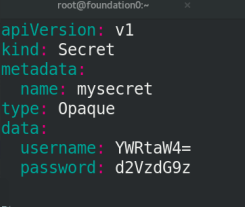

1. Create a secret

echo -n 'admin' | base64 YWRtaW4= echo -n 'westos' | base64 d2VzdG9z vim secret.yaml apiVersion: v1 kind: Secret metadata: name: mysecret type: Opaque data: username: YWRtaW4= password: d2VzdG9z
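The encode-and-paste step can be scripted. The hypothetical helper below prints an Opaque Secret manifest with base64-encoded data, reproducing secret.yaml from plain values; it assumes values without newlines. The Secret API also accepts plain-text values under `stringData`, which are stored encoded on write.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print an Opaque Secret manifest from KEY=VALUE pairs,
# base64-encoding each value the same way as `echo -n VALUE | base64`.
opaque_secret() {
  local name="$1"
  shift
  printf 'apiVersion: v1\nkind: Secret\nmetadata:\n  name: %s\ntype: Opaque\ndata:\n' "$name"
  local pair
  for pair in "$@"; do
    # tr removes the line wrapping GNU base64 inserts into long outputs.
    printf '  %s: %s\n' "${pair%%=*}" "$(printf '%s' "${pair#*=}" | base64 | tr -d '\n')"
  done
}

opaque_secret mysecret username=admin password=westos
# Pipe the output into `kubectl apply -f -` to create the object.
```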

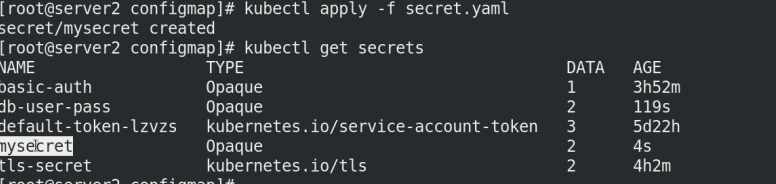

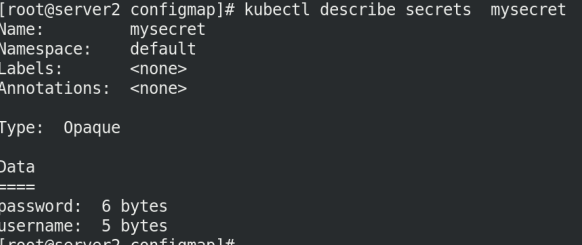

Apply secret.yaml and view the Secret:

```shell
kubectl apply -f secret.yaml
kubectl get secret
kubectl describe secrets mysecret
```

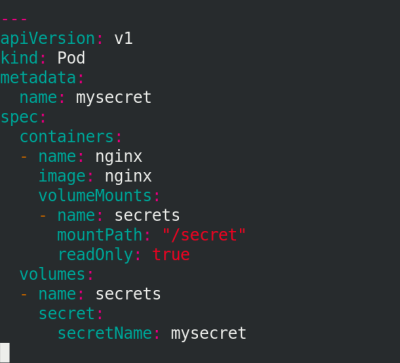

2. Mount the Secret into a volume

Append a Pod definition to secret.yaml:

```yaml
---
apiVersion: v1
kind: Pod
metadata:
  name: mysecret
spec:
  containers:
    - name: nginx
      image: nginx
      volumeMounts:
        - name: secrets
          mountPath: "/secret"
          readOnly: true
  volumes:
    - name: secrets
      secret:
        secretName: mysecret
```

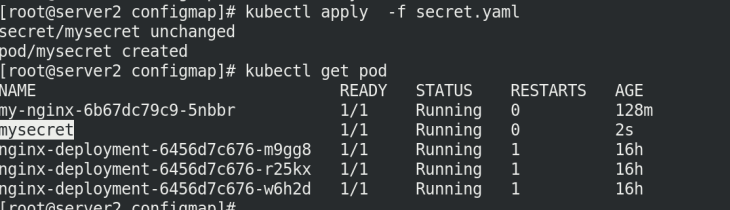

Apply secret.yaml and view the Pod:

```shell
kubectl apply -f secret.yaml
kubectl get pod
```

Enter the mysecret container to check:

```shell
kubectl exec -it mysecret -- bash
pwd
cd secret/
ls
```

The keys are mounted as files under /secret in the root directory.

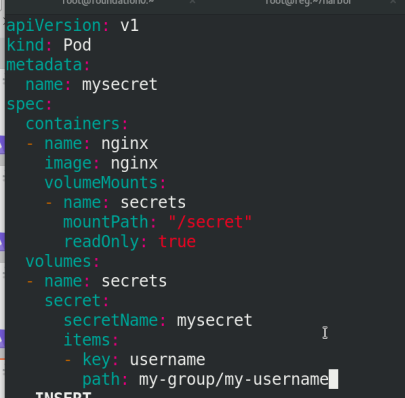

3. Map a Secret key to a specific path

Project the username key to the path /secret/my-group/my-username. Edit secret.yaml:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: mysecret
spec:
  containers:
    - name: nginx
      image: nginx
      volumeMounts:
        - name: secrets
          mountPath: "/secret"
          readOnly: true
  volumes:
    - name: secrets
      secret:
        secretName: mysecret
        items:
          - key: username
            path: my-group/my-username
```

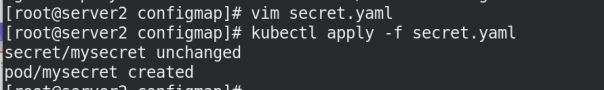

Execute secret again Yaml file!

kubectl apply -f secret.yaml

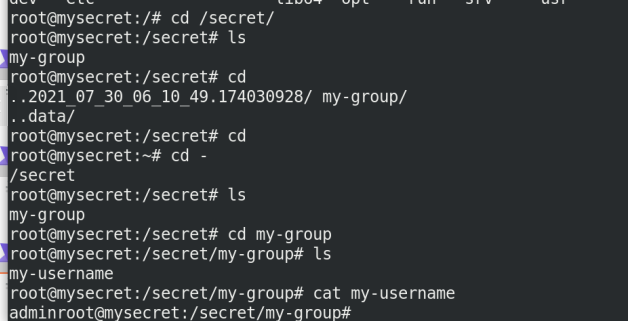

Enter the node terminal to view

kubectl exec -it mysecret -- bash cd /secret/ cd my-group ls cat my-username
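With `items`, only the listed keys are projected, each to the relative path given by `path`, so /secret/my-group/my-username exists while no password file is created. The hypothetical helper below reproduces that mapping in a local directory for illustration only; it is not how the kubelet writes Secret volumes.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the value of KEY (looked up among KEY=VALUE pairs)
# to DIR/PATH, creating intermediate directories as needed.
# Usage: project_item DIR KEY PATH KEY=VALUE...
project_item() {
  local dir="$1" key="$2" path="$3"
  shift 3
  local pair
  for pair in "$@"; do
    if [ "${pair%%=*}" = "$key" ]; then
      mkdir -p "$dir/$(dirname "$path")"
      printf '%s' "${pair#*=}" > "$dir/$path"
      return 0
    fi
  done
  return 1   # listed key missing from the Secret: the volume setup would fail
}

mount="$(mktemp -d)"
# Only "username" is listed in items, so "password" is never written.
project_item "$mount" username my-group/my-username username=admin password=westos
find "$mount" -type f                 # only .../my-group/my-username
cat "$mount/my-group/my-username"     # admin
rm -rf "$mount"
```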

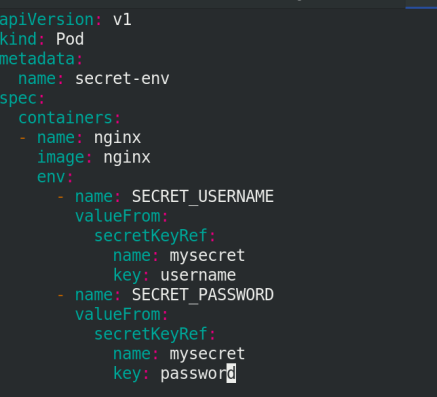

4. Set a Secret as environment variables

Edit secret.yaml:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: secret-env
spec:
  containers:
    - name: nginx
      image: nginx
      env:
        - name: SECRET_USERNAME
          valueFrom:
            secretKeyRef:
              name: mysecret
              key: username
        - name: SECRET_PASSWORD
          valueFrom:
            secretKeyRef:
              name: mysecret
              key: password
```

Apply secret.yaml:

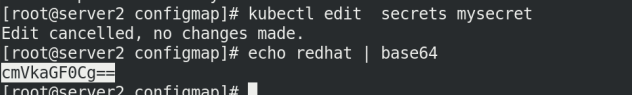

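Then change the password stored in mysecret. Use `echo -n`: without it, the trailing newline would be encoded into the new password.

```shell
kubectl apply -f secret.yaml
echo -n redhat | base64    # cmVkaGF0
kubectl edit secrets mysecret
```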

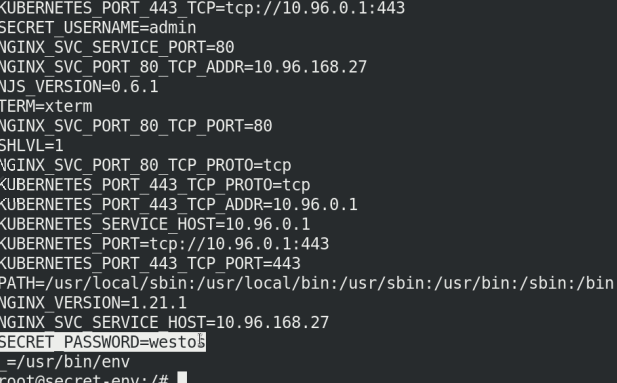

Use `env` to check whether the credentials are present in the environment variables:

```shell
kubectl exec -it secret-env -- bash
env
```

Note: reading a Secret through environment variables is convenient, but it does not support dynamic updates. After the Secret is modified, the change does not reach the environment of the running container.

So the password just changed has not been updated in the container.
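The behavior can be reproduced without a cluster: an environment variable is a copy taken once when the process starts, while a file is read fresh each time. The demo below is only an analogy (a temporary file stands in for the Secret, and the function name is hypothetical). For Secrets mounted as volumes without `subPath`, the kubelet eventually refreshes the files after the Secret changes, whereas environment variables change only when the container is recreated.

```shell
#!/usr/bin/env bash
# Analogy: compare a value captured at start-up (like an env var)
# with a value re-read from a file (like a mounted Secret).
env_vs_file_demo() {
  local dir snapshot
  dir="$(mktemp -d)"
  printf 'westos' > "$dir/password"       # value when the container starts
  snapshot="$(cat "$dir/password")"       # env var: copied once at start-up
  printf 'redhat' > "$dir/password"       # the Secret is edited later
  printf 'env=%s file=%s\n' "$snapshot" "$(cat "$dir/password")"
  rm -rf "$dir"
}

env_vs_file_demo   # env=westos file=redhat
```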

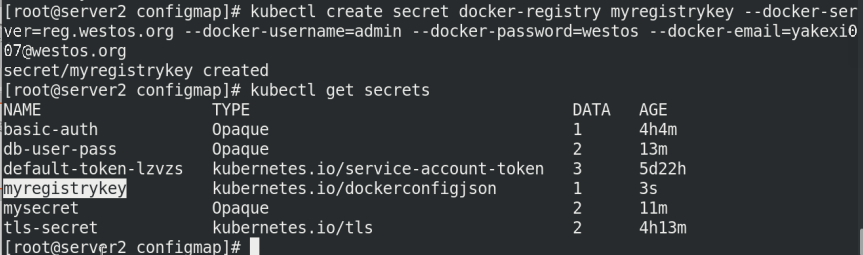

5. Docker registry

kubernetes.io/dockerconfigjson is used to store the authentication information of a Docker registry.

```shell
kubectl create secret docker-registry myregistrykey --docker-server=reg.westos.org --docker-username=admin --docker-password=westos --docker-email=yakexi007@westos.org
kubectl get secrets
```
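The resulting Secret stores a Docker config file under the `.dockerconfigjson` key. The sketch below assembles a JSON document of roughly that shape from the same values, to show what the kubelet receives when pulling; the exact fields kubectl writes may differ between versions, and the helper name is hypothetical. The `auth` field is just base64 of `username:password`, so this Secret is no better protected than an Opaque one.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a Docker config JSON of roughly the shape stored in a
# kubernetes.io/dockerconfigjson Secret. Assumes values need no JSON escaping.
docker_config_json() {
  local server="$1" user="$2" pass="$3" email="$4" auth
  # "auth" is base64("username:password").
  auth="$(printf '%s:%s' "$user" "$pass" | base64 | tr -d '\n')"
  printf '{"auths":{"%s":{"username":"%s","password":"%s","email":"%s","auth":"%s"}}}\n' \
    "$server" "$user" "$pass" "$email" "$auth"
}

docker_config_json reg.westos.org admin westos yakexi007@westos.org
# On the cluster, the stored JSON can be viewed with:
#   kubectl get secret myregistrykey -o jsonpath='{.data.\.dockerconfigjson}' | base64 -d
```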

Write a manifest, registry.yaml, that pulls an image from the private registry:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: mypod
spec:
  containers:
    - name: game2048
      image: reg.westos.org/westos/game2048
  imagePullSecrets:
    - name: myregistrykey
```

Apply registry.yaml:

```shell
kubectl apply -f registry.yaml
kubectl get sa
kubectl describe sa
```

VI Volumes configuration management

Files in a container are stored on disk only temporarily, which causes problems for non-trivial applications running in containers. First, when a container crashes, the kubelet restarts it, but the files in the container are lost because the container is rebuilt in a clean state. Second, when several containers run in the same Pod, they often need to share files. Kubernetes provides the Volume abstraction to solve both problems.

A Kubernetes volume has an explicit lifecycle, the same as the Pod that encloses it. The volume therefore outlives any container running in the Pod, and data is preserved across container restarts. When a Pod ceases to exist, Kubernetes destroys its ephemeral volumes such as emptyDir, but not persistent volumes. Perhaps more importantly, Kubernetes supports many types of volumes, and a Pod can use any number of them at the same time.

A volume cannot be mounted to or hard linked to other volumes. Each container in the Pod must independently specify the mount location of each volume.

Kubernetes supports the following types of volumes:

awsElasticBlockStore, azureDisk, azureFile, cephfs, cinder, configMap, csi, downwardAPI, emptyDir, fc (fibre channel), flexVolume, flocker, gcePersistentDisk, gitRepo (deprecated), glusterfs, hostPath, iscsi, local, nfs, persistentVolumeClaim, projected, portworxVolume, quobyte, rbd, scaleIO, secret, storageos, vsphereVolume

1. emptyDir volume

When the Pod is assigned to a node, an emptyDir volume is created first, and the volume will always exist as long as the Pod runs on the node. As its name indicates, the volume is initially empty. Although the containers in the Pod may have the same or different paths to mount the emptyDir volume, these containers can read and write the same files in the emptyDir volume. When the Pod is deleted from the node for some reason, the data in the emptyDir volume will also be permanently deleted.

Usage scenarios of emptyDir:

- scratch space, such as for a disk-based merge sort;
- checkpoints for long computations, so that a task can easily recover from its state before a crash;
- holding files that a content-manager container fetches while a web server container serves the data.

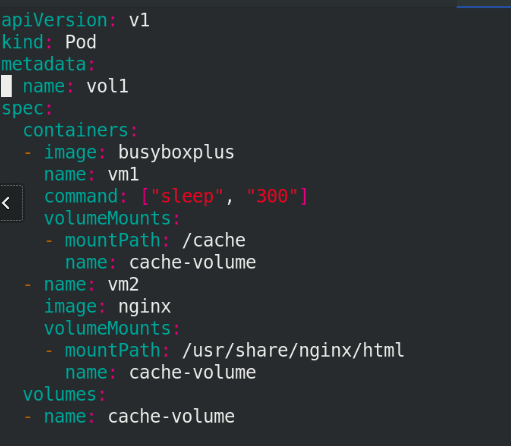

1.1 Creating an emptyDir volume

```shell
mkdir volumes
cd volumes/
ls
vim vol1.yaml
```

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: vol1
spec:
  containers:
    - image: busyboxplus
      name: vm1
      command: ["sleep", "300"]
      volumeMounts:
        - mountPath: /cache
          name: cache-volume
    - name: vm2
      image: nginx
      volumeMounts:
        - mountPath: /usr/share/nginx/html
          name: cache-volume
  volumes:
    - name: cache-volume
      emptyDir:
        medium: Memory
        sizeLimit: 100Mi
```

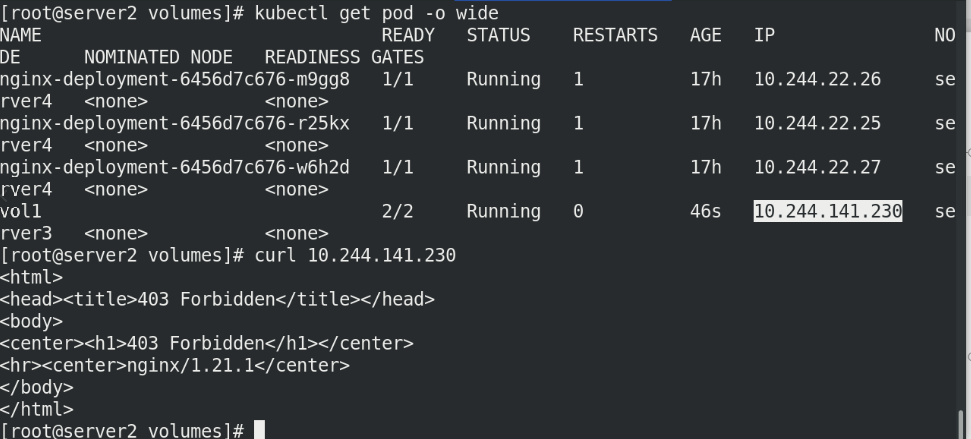

Apply vol1.yaml, view the Pod information and access its IP:

```shell
kubectl apply -f vol1.yaml
kubectl get pod -o wide
curl 10.244.141.230    # 403 error: the shared directory is still empty
```

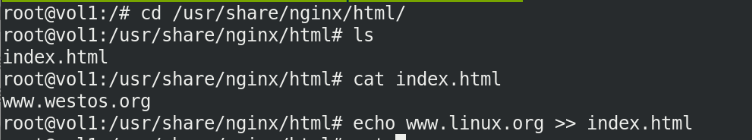

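Enter the vm1 container and create the default index page in the shared volume, which is mounted at /cache in vm1 and at /usr/share/nginx/html in vm2:

```shell
kubectl exec -it vol1 -c vm1 -- sh
cd /cache
echo www.linux.westos.org > index.html
```

Access it again; this time it succeeds:

```shell
curl 10.244.141.230
```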

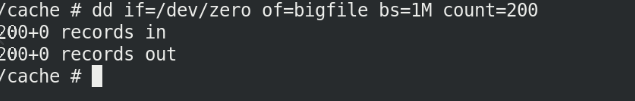

If a file larger than the 100Mi sizeLimit is written into the volume, the Pod is evicted:

```shell
kubectl exec -it vol1 -c vm1 -- sh
dd if=/dev/zero of=/cache/bigfile bs=1M count=200
```

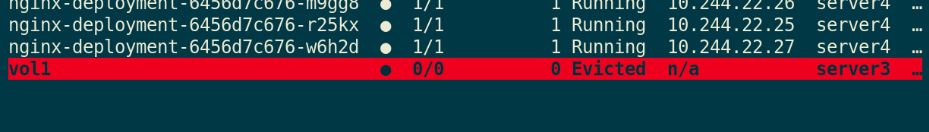

Once the usage exceeds sizeLimit, the kubelet evicts the Pod after a while (1-2 minutes). The eviction is not immediate because the kubelet checks usage periodically, so there is a delay.

```shell
kubectl get pod
kubectl get pod vol1
```
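The periodic check described above can be pictured as comparing the volume's usage with sizeLimit. The sketch below imitates one such check on a local directory by summing file sizes in bytes; it is a simplification (the kubelet measures usage itself, and with `medium: Memory` the space is tmpfs memory), and the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed (exit 0) if the files under DIR exceed LIMIT_MIB,
# imitating one periodic sizeLimit check. Counts file sizes in bytes.
exceeds_size_limit() {
  local dir="$1" limit_mib="$2" used
  used="$(find "$dir" -type f -exec cat {} + | wc -c | tr -d ' ')"
  [ "$used" -gt $((limit_mib * 1024 * 1024)) ]
}

cache="$(mktemp -d)"
# A 2 MiB file, a scaled-down version of the 200M dd run against the 100Mi limit.
dd if=/dev/zero of="$cache/bigfile" bs=1024 count=2048 2>/dev/null
exceeds_size_limit "$cache" 1 && echo "over 1 MiB: would be evicted"
exceeds_size_limit "$cache" 100 || echo "under 100 MiB: keeps running"
rm -rf "$cache"
```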

Drawbacks of emptyDir:

- It cannot stop a user from using memory in time. The kubelet evicts the Pod after 1-2 minutes, but in the meantime the node is still at risk.
- It affects Kubernetes scheduling: emptyDir usage is not counted in the node's resources, so a Pod can "secretly" use the node's memory without the scheduler knowing.
- Users cannot tell in time that memory is no longer available.

2. hostPath volume

A hostPath volume mounts a file or directory from the host node's file system into your Pod. This is not something most Pods need, but it provides a powerful escape hatch for some applications.

Some uses of hostPath:

- running a container that needs access to Docker internals: mount /var/lib/docker as a hostPath;
- running cAdvisor in a container: mount /sys as a hostPath;
- allowing a Pod to specify whether a given hostPath should exist before the Pod runs, whether it should be created, and what it should exist as.

The following example checks whether the directory is created on the node the Pod is scheduled to. Create host.yaml:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: test-pd
spec:
  containers:
    - image: nginx
      name: test-container
      volumeMounts:
        - mountPath: /test-pd
          name: test-volume
  volumes:
    - name: test-volume
      hostPath:
        path: /data
        type: DirectoryOrCreate
```

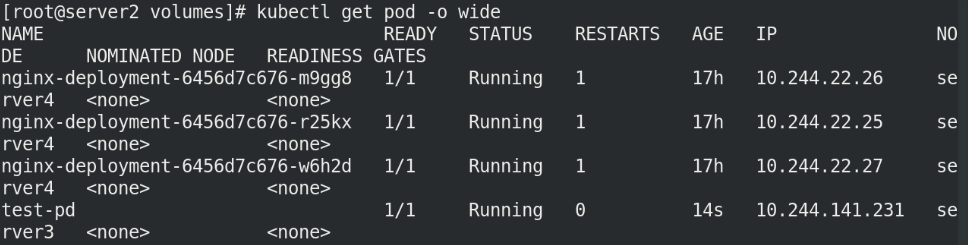

Apply host.yaml and see which node the Pod runs on:

```shell
kubectl apply -f host.yaml
kubectl get pod -o wide
```

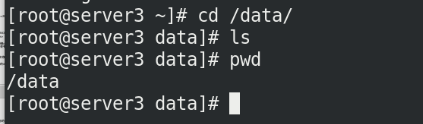

The directory has been created automatically on the node server3:

```shell
cd /data/
ls
pwd
```

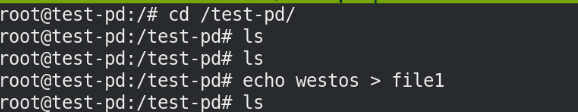

Enter the container and write data:

```shell
kubectl exec -it test-pd -- sh
cd /test-pd
ls
echo westos > file1
```

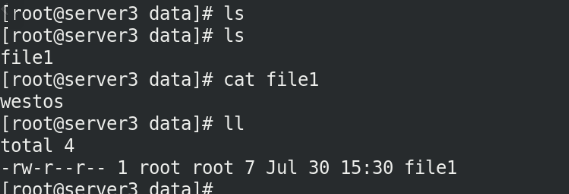

Now, on server3, view the files in the mounted directory /data:

```shell
cd /data/
ls
cat file1
ll
```
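`type: DirectoryOrCreate` tells the kubelet to create an empty directory (mode 0755) at the path if nothing exists there, whereas type `Directory` requires the directory to exist already. The hypothetical helper below imitates that check-then-create logic on the local machine, for illustration only.

```shell
#!/usr/bin/env bash
# Hypothetical helper imitating hostPath type DirectoryOrCreate:
# succeed if PATH is a directory, create it with mode 0755 if nothing exists,
# and fail if something other than a directory is already there.
directory_or_create() {
  local path="$1"
  if [ -d "$path" ]; then
    return 0
  elif [ -e "$path" ]; then
    return 1   # e.g. a regular file: the volume mount would fail
  fi
  mkdir -p -m 0755 "$path"
}

base="$(mktemp -d)"
directory_or_create "$base/data" && ls -ld "$base/data"
touch "$base/file"
directory_or_create "$base/file" || echo "not a directory: mount would fail"
rm -rf "$base"
```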

3. Volumes with NFS

To use the shared file system NFS, first install NFS on all nodes, then configure the NFS server on the registry host (server1).

Install on server2, server3 and server4:

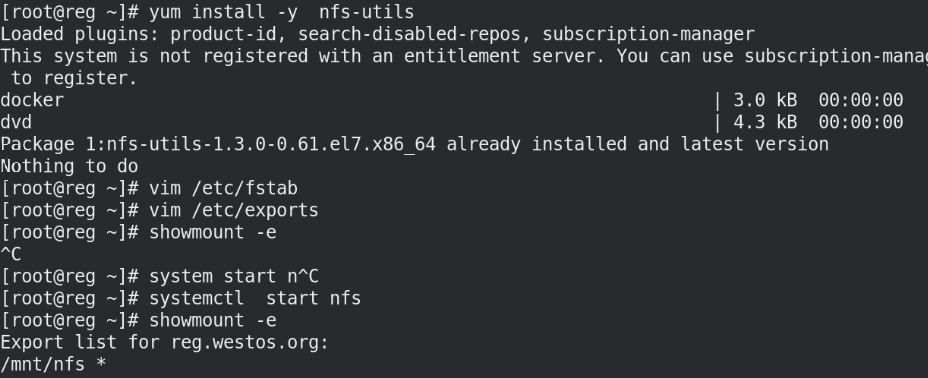

```shell
yum install -y nfs-utils
```

On server1:

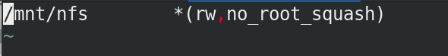

```shell
yum install -y nfs-utils
vim /etc/exports    # add the line: /mnt/nfs *(rw,no_root_squash)
systemctl start nfs
showmount -e
```

On server2, write the manifest nfs.yaml:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: test-pd
spec:
  containers:
    - image: nginx
      name: test-container
      volumeMounts:
        - mountPath: /usr/share/nginx/html
          name: test-volume
  volumes:
    - name: test-volume
      nfs:
        server: 172.25.0.1
        path: /mnt/nfs
```

Verify that the NFS export is visible:

```shell
showmount -e 172.25.0.1
```

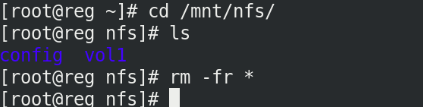

Delete the data from the previous Docker experiment. On server1:

```shell
cd /mnt/nfs
rm -fr *
```

Then write the index page under /mnt/nfs:

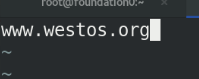

```shell
vim index.html    # content: www.westos.org
```

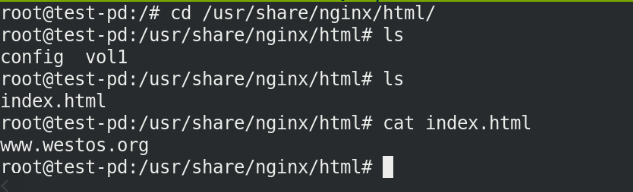

Enter the container and test whether the mount works:

```shell
kubectl exec -it test-pd -- sh
cd /usr/share/nginx/html
cat index.html
```

4. Introduction to persistent volumes

A PersistentVolume (PV) is a piece of network storage in the cluster, provisioned by an administrator. Just as a node is a cluster resource, so is a PV. Like a volume it is a volume plugin, but its lifecycle is independent of any Pod that uses it. The PV API object captures the implementation details of NFS, iSCSI, or a cloud storage system.

PVs can be provisioned in two ways: statically and dynamically.

- Static PV: the cluster administrator creates a number of PVs. They carry the details of the real storage available to cluster users, exist in the Kubernetes API, and are available for consumption.
- Dynamic PV: when none of the static PVs created by the administrator matches a user's PVC, the cluster may try to provision a volume specially for that PVC. This provisioning is based on StorageClasses.

The binding between a PVC and a PV is a one-to-one mapping. If no matching PV is found, the PVC remains unbound (Pending) indefinitely, until a matching PV becomes available.
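The matching step can be pictured as a filter over the available PVs: the same storage class, an access mode the claim asks for, and enough capacity, with roughly the smallest suitable PV preferred. The awk sketch below is a simplified model of that logic, not the real controller (it ignores selectors, volume modes, and whether a PV is already bound), applied to the pv1 and pv2 definitions used in this section; the table format and function name are assumptions.

```shell
#!/usr/bin/env bash
# Simplified model of static PV/PVC matching (not the real controller).
# Reads lines "NAME CLASS CAPACITY_GI MODES" on stdin (MODES comma-separated)
# and prints the smallest PV that fits; exits 1 if none does (PVC stays Pending).
match_pv() {
  awk -v cls="$1" -v req="$2" -v mode="$3" '
    $2 == cls && $3 + 0 >= req + 0 && index("," $4 ",", "," mode ",") > 0 {
      if (best == "" || $3 + 0 < bestcap) { best = $1; bestcap = $3 + 0 }
    }
    END {
      if (best == "") exit 1
      print best
    }'
}

pvs='pv1 nfs 5 RWO
pv2 nfs 10 RWX'
printf '%s\n' "$pvs" | match_pv nfs 1 RWO    # pv1 (like pvc1)
printf '%s\n' "$pvs" | match_pv nfs 10 RWX   # pv2 (like pvc2)
printf '%s\n' "$pvs" | match_pv nfs 20 RWX || echo "no match: PVC stays Pending"
```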

5. Static persistent volumes (PV)

5.1 Creating a static NFS PV

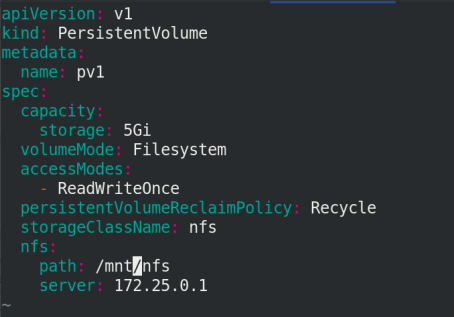

Create pv.yaml:

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv1
spec:
  capacity:
    storage: 5Gi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Recycle
  storageClassName: nfs
  nfs:
    path: /mnt/nfs
    server: 172.25.0.1
```

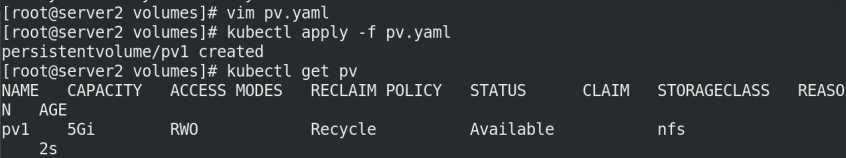

Apply pv.yaml and view the PV:

```shell
kubectl apply -f pv.yaml
kubectl get pv
```

5.2 creating PVC

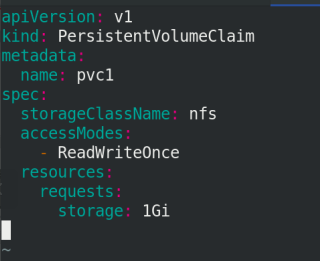

vim pvc.yaml

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: pvc1

spec:

storageClassName: nfs

accessModes:

- ReadWriteOnce #ReadWriteMany multipoint read write

resources:

requests:

storage: 1Gi

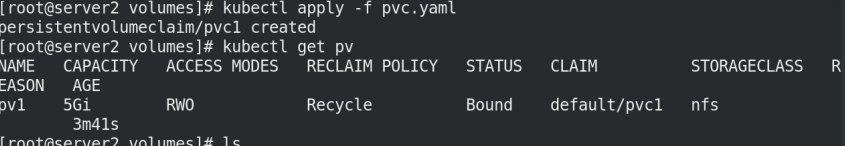

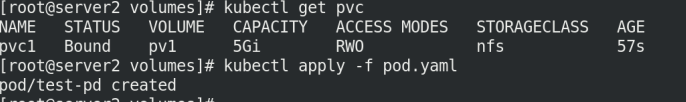

Apply pvc.yaml and check the PVC and PV information:

```shell
kubectl apply -f pvc.yaml
kubectl get pvc
kubectl get pv
```

5.3 Mounting the PV in a Pod

Create pod.yaml:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: test-pd
spec:
  containers:
    - image: nginx
      name: nginx
      volumeMounts:
        - mountPath: /usr/share/nginx/html
          name: pv1
  volumes:
    - name: pv1
      persistentVolumeClaim:
        claimName: pvc1
```

Apply pod.yaml:

```shell
kubectl apply -f pod.yaml
```

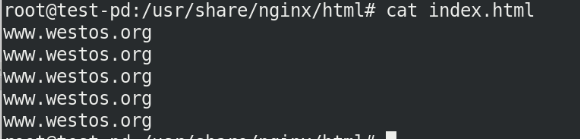

Open a shell in the container to test whether the mount works:

```shell
kubectl exec -it test-pd -- bash
```

On server1, modify the index page /mnt/nfs/index.html, then check in the container again: the content is updated there as well.

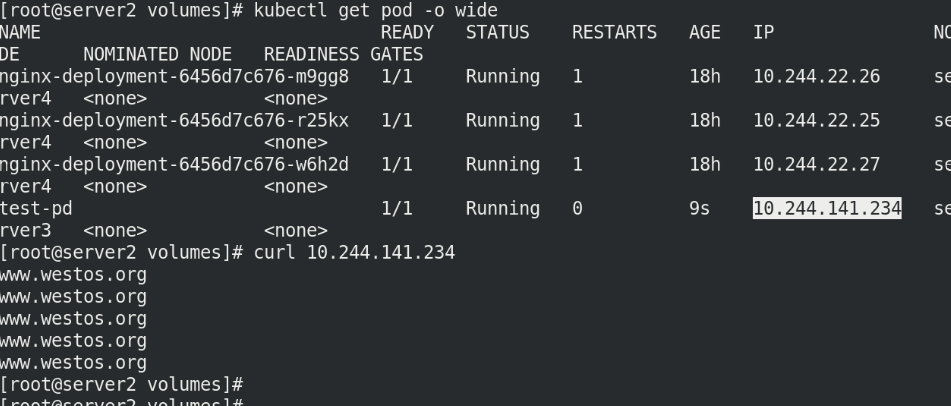

5.4 Test access

```shell
kubectl get pod -o wide
curl 10.244.141.234
```

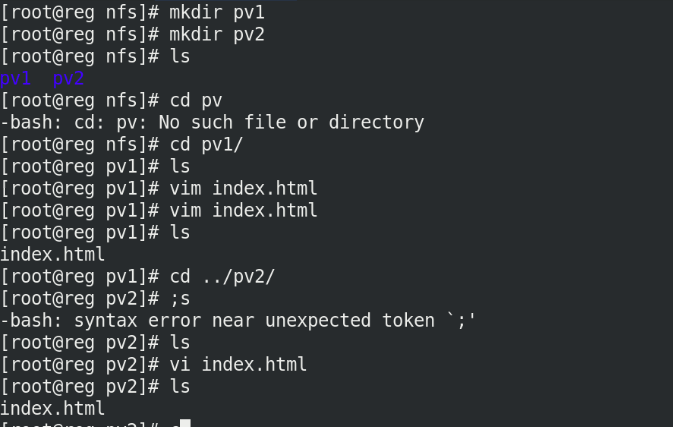

Delete the Pod, the PVC and the PV, in that order.

On server1, create two directories under /mnt/nfs and write different content into each:

```shell
mkdir pv1
mkdir pv2
ls
cd pv1
echo www.westos.org > index.html
cd ../pv2
echo www.redhat.com > index.html
```

5.5 Adding a PV

Edit pv.yaml and append a second PV:

```yaml
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv2
spec:
  capacity:
    storage: 10Gi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Recycle
  storageClassName: nfs
  nfs:
    path: /mnt/nfs/pv2
    server: 172.25.0.1
```

```shell
kubectl apply -f pv.yaml
kubectl get pv
```

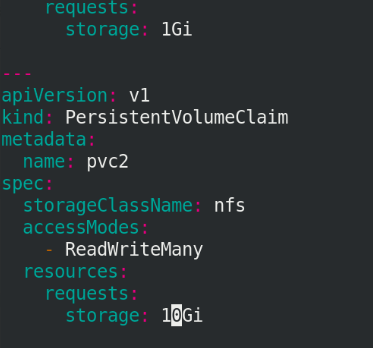

5.6 Adding a PVC

Edit pvc.yaml and append a second PVC:

```yaml
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc2
spec:
  storageClassName: nfs
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 10Gi
```

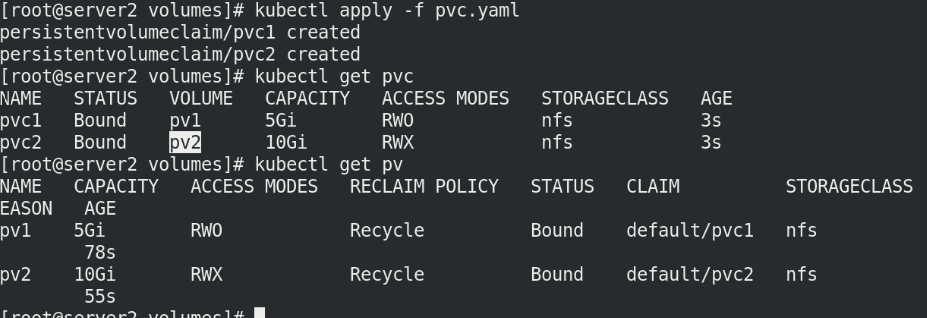

Apply pvc.yaml and view the PVCs:

```shell
kubectl apply -f pvc.yaml
kubectl get pvc
kubectl get pv
```

5.7 Adding a Pod

Edit pod.yaml and append a second Pod:

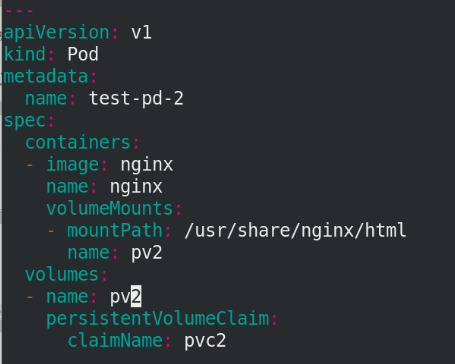

```yaml
---
apiVersion: v1
kind: Pod
metadata:
  name: test-pd-2
spec:
  containers:
    - image: nginx
      name: nginx
      volumeMounts:
        - mountPath: /usr/share/nginx/html
          name: pv2
  volumes:
    - name: pv2
      persistentVolumeClaim:
        claimName: pvc2
```

5.8 test access

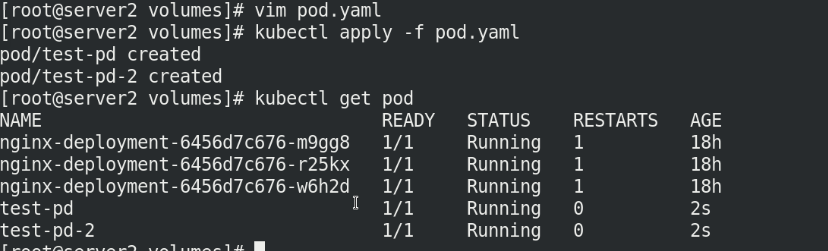

Execute pod Yaml file, view the pod node information, and access the IP address

kubectl apply -f pod.yaml kubectl get pod -o wide curl ******** curl ***********

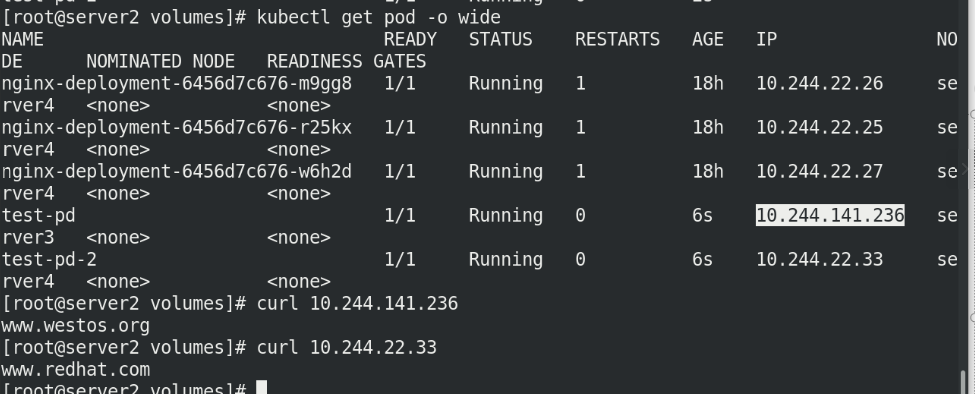

Delete the Pods and create them again: the PVCs still exist and can still be used. Note that the Pod IPs have to be looked up again.

```shell
kubectl delete -f pod.yaml
kubectl get pvc
kubectl apply -f pod.yaml
kubectl get pod -o wide
curl ****
curl ******
```

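Deleting the PVCs does not delete the PVs, since statically provisioned PVs are independent objects. Because their reclaim policy is Recycle, the released volumes are scrubbed and the PVs should return to the Available state.

```shell
kubectl delete -f pvc.yaml
kubectl get pvc
kubectl get pv
```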
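The Kubernetes documentation describes the Recycle policy's "basic scrub" as `rm -rf /thevolume/*`, and marks the policy as deprecated in favor of dynamic provisioning. The hypothetical helper below imitates such a scrub on a local directory, hidden files included, to show that data on a recycled PV should not be expected to survive.

```shell
#!/usr/bin/env bash
# Hypothetical helper imitating a Recycle scrub: delete everything inside DIR,
# hidden entries included, then confirm the directory is empty.
scrub_volume() {
  local dir="$1"
  # -mindepth 1 keeps DIR itself; -delete removes contents depth-first.
  find "$dir" -mindepth 1 -delete
  [ -z "$(ls -A "$dir")" ]
}

vol="$(mktemp -d)"
mkdir "$vol/pv1"
echo www.westos.org > "$vol/pv1/index.html"
touch "$vol/.hidden"
scrub_volume "$vol" && echo "volume is empty again"
rm -rf "$vol"
```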

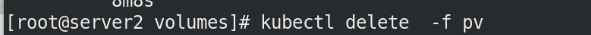

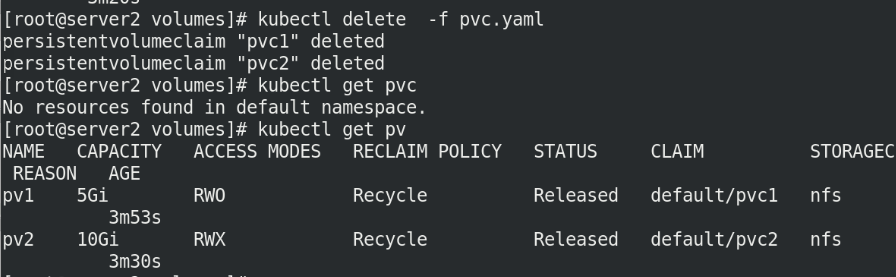

5.9 Deleting the PVs and PVCs

Finally, delete all of them.