Preface:

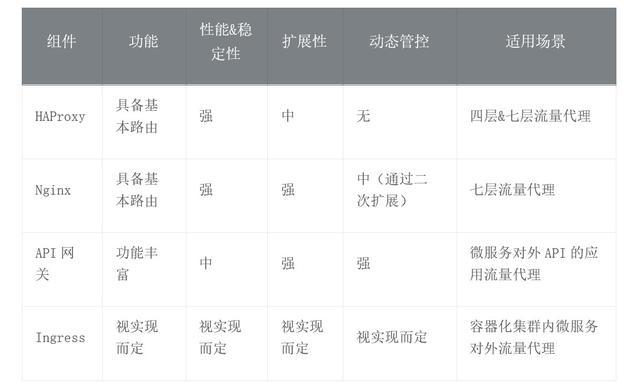

As the gateway component of an Internet system, the traffic entry proxy comes in many forms: long-established proxies such as HAProxy and Nginx, microservice API gateways such as Kong and Zuul, and the container-era Ingress specification with its implementations. These options differ in features, performance, scalability, and suitable scenarios. Envoy, a CNCF graduated data plane component, has become widely known as the cloud native wave has arrived. So can Envoy become the standard component for traffic entry in the cloud native era?

Background - Many choices and scenarios for traffic entrances

In an Internet system, almost every service that needs to be exposed externally requires a network proxy. The earlier HAProxy and Nginx remain popular. In the microservices era, API gateways with richer functions and better control capabilities became a necessary component for traffic entry. In the container era, Kubernetes Ingress, as the entrance to the container cluster, became the standard traffic entry proxy for microservices. The core capabilities of these three typical kinds of Layer 7 proxy are compared as follows:

From the above comparison of core capabilities:

- HAProxy and Nginx provide basic routing capabilities, and their performance and stability have been proven over many years. OpenResty, a downstream community of Nginx, provides full Lua extensibility, which lets Nginx be applied and extended more widely. The API gateway Kong, for example, is built on Nginx + OpenResty.

- The API gateway, as the basic component through which microservices expose API traffic externally, provides rich functions and dynamic control capabilities.

- Ingress is the standard specification for inbound traffic in Kubernetes, and its capabilities depend on the implementation. For example, Nginx-based Ingress implementations offer capabilities close to those of Nginx, while Istio Ingress Gateway, built on Envoy plus the Istio control plane, is more capable (in essence, Istio Ingress Gateway is more capable than typical Ingress implementations, but it is not implemented according to the Ingress specification).

The question then arises: under the cloud native technology trend, can we find a single, comprehensive technical solution that standardizes traffic entry?

Introduction to Envoy Core Competencies

Envoy is an open source edge and service proxy designed for cloud native applications ("Envoy is an open source edge and service proxy, designed for cloud-native applications", envoyproxy.io). It is the third project to graduate from the Cloud Native Computing Foundation (CNCF) and currently has 13k+ stars on GitHub.

Envoy has the following main features:

- L4/L7 high-performance proxy written in modern C++.

- Transparent proxy.

- Traffic management. Supports routing, traffic mirroring, traffic splitting, and other functions.

- Service governance. Supports health checking, circuit breaking, rate limiting, timeouts, retries, and fault injection.

- Multi-protocol support. Proxies and governs protocols such as HTTP/1.1, HTTP/2, gRPC, and WebSocket.

- Load balancing. Weighted round robin, weighted least request, ring hash, Maglev, random, and so on. Supports zone-aware routing, failover, and other features.

- Dynamic configuration API. Provides a robust interface for controlling proxy behavior, so Envoy's configuration can be hot-updated dynamically.

- Observability by design. Provides deep observability for Layer 7 traffic and native support for distributed tracing.

- Hot restart. Envoy can be upgraded seamlessly.

- Custom plug-in capabilities. Lua, plus WebAssembly as a multi-language extension sandbox.

Overall, Envoy excels in both functionality and performance. It has an inherent advantage in real-world traffic entry proxy scenarios and can serve as a standard technical solution for traffic entry under the cloud native trend:

- Richer features than HAProxy, Nginx

HAProxy and Nginx provide the basic functions a traffic proxy needs, with more advanced functions usually implemented through extensions. Envoy, by contrast, natively implements in C++ many of the advanced functions a proxy requires, such as advanced load balancing, circuit breaking, rate limiting, fault injection, traffic mirroring, and observability. This richer feature set makes Envoy usable out of the box in many scenarios, and a native C++ implementation generally performs better than an extension-based one.

- Performance comparable to Nginx, and much better than traditional API gateways

For common protocols such as HTTP, Envoy and Nginx perform about equally well as proxies. Compared with traditional API gateways, Envoy's performance advantage is clear.

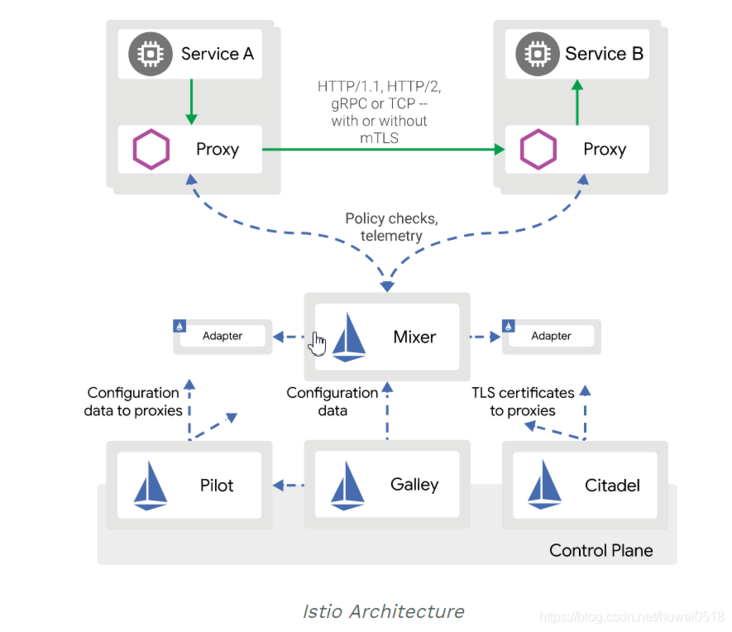

Service Mesh has now entered its second generation, represented by Istio, which consists of a data plane (proxy) and a control plane. Istio is a product implementation of Service Mesh that helps microservices decouple into layers. The architecture diagram is as follows:

HTTPProxy Resource Specification

    apiVersion: projectcontour.io/v1   #API group and version
    kind: HTTPProxy                    #Kind of the CRD resource
    metadata:
      name <string>
      namespace <string>               #Namespace-scoped resource
    spec:
      virtualhost <VirtualHost>        #Defines a virtual host in FQDN format, similar to host in Ingress
        fqdn <string>                  #Name of the virtual host, in FQDN format
        tls <TLS>                      #Enables HTTPS; by default HTTP requests are redirected to HTTPS with a 301
          secretName <string>          #Name of the Secret that stores the certificate and private key
          minimumProtocolVersion <string>   #Minimum supported SSL/TLS protocol version
          passthrough <boolean>        #Whether to enable passthrough mode; when enabled, the controller does not terminate HTTPS sessions
          clientValidation <DownstreamValidation>   #Validate client certificates (optional)
            caSecret <string>          #CA certificate used to verify client certificates
      routes <[ ]Route>                #Routing rules
        conditions <[]Condition>       #Traffic match conditions; PATH prefix and header matching are supported
          prefix <String>              #PATH prefix match, similar to the path field in Ingress
        permitInsecure <Boolean>       #Whether to disable the default HTTP-to-HTTPS redirect
        services <[ ]Service>          #Backend services, translated into Envoy Cluster definitions
          name <String>                #Service name
          port <Integer>               #Service port
          protocol <string>            #Protocol used to reach the backend service; available values are tls, h2, or h2c
          validation <UpstreamValidation>   #Whether to verify the server-side certificate
            caSecret <string>
            subjectName <string>       #Subject value required in the certificate

- HTTPProxy Advanced Routing Resource Specification

    spec:
      routes <[]Route>                 #Routing rules
        conditions <[]Condition>
          prefix <String>
          header <Headercondition>     #Request header matching
            name <String>              #Header name
            present <Boolean>          #true: the condition is met if the header exists; false is not meaningful
            contains <String>          #Substring that the header value must contain
            notcontains <string>       #Substring that the header value must not contain
            exact <String>             #Exact match on the header value
            notexact <string>          #Inverse exact match: the value must differ from the one specified
        services <[ ]Service>          #Backend services, translated into Envoy Clusters
          name <String>
          port <Integer>
          protocol <String>
          weight <Int64>               #Service weight, used for traffic splitting
          mirror <Boolean>             #Traffic mirroring
          requestHeadersPolicy <HeadersPolicy>    #Header policy for requests sent to the upstream server
            set <[ ]HeaderValue>       #Add a header, or set the value of the specified header
              name <String>
              value <String>
            remove <[]String>          #Remove the specified headers
          responseHeadersPolicy <HeadersPolicy>   #Header policy for responses sent to the downstream client
        loadBalancerPolicy <LoadBalancerPolicy>   #Load-balancing policy to use
          strategy <String>            #Supported strategies: Random, RoundRobin, Cookie,
                                       #and WeightedLeastRequest; defaults to RoundRobin
        requestHeadersPolicy <HeadersPolicy>      #Route-level request header policy
        responseHeadersPolicy <HeadersPolicy>     #Route-level response header policy
        pathRewritePolicy <PathRewritePolicy>     #URL rewriting
          replacePrefix <[]ReplacePrefix>
            prefix <String>            #PATH routing prefix
            replacement <string>       #Target path to replace it with

- HTTPProxy Service Resilience and Health Check Resource Specification

    spec:
      routes <[]Route>
        timeoutPolicy <TimeoutPolicy>       #Timeout policy
          response <String>                 #Timeout while waiting for the server's response
          idle <String>                     #How long Envoy keeps an idle connection with the client before timing it out
        retryPolicy <RetryPolicy>           #Retry policy
          count <Int64>                     #Number of retries, default 1
          perTryTimeout <String>            #Timeout for each retry attempt
        healthCheckPolicy <HTTPHealthCheckPolicy>   #Active health checking
          path <String>                     #Path to probe (HTTP endpoint)
          host <String>                     #Virtual host requested by the probe
          intervalSeconds <Int64>           #Probe interval, i.e. check frequency; defaults to 5 seconds
          timeoutSeconds <Int64>            #Probe timeout, default 2 seconds
          unhealthyThresholdCount <Int64>   #Threshold for marking unhealthy, i.e. number of consecutive failures
          healthyThresholdCount <Int64>     #Threshold for marking healthy

Envoy Deployment

    $ kubectl apply -f https://projectcontour.io/quickstart/contour.yaml

    [root@k8s-master Ingress]# kubectl get ns
    NAME                   STATUS   AGE
    default                Active   14d
    dev                    Active   13d
    ingress-nginx          Active   29h
    kube-node-lease        Active   14d
    kube-public            Active   14d
    kube-system            Active   14d
    kubernetes-dashboard   Active   21h
    longhorn-system        Active   21h
    projectcontour         Active   39m   #Newly added namespace
    test                   Active   12d

    [root@k8s-master Ingress]# kubectl get pod -n projectcontour
    NAME                            READY   STATUS      RESTARTS   AGE
    contour-5449c4c94d-mqp9b        1/1     Running     3          37m
    contour-5449c4c94d-xgvqm        1/1     Running     5          37m
    contour-certgen-v1.18.1-82k8k   0/1     Completed   0          39m
    envoy-n2bs9                     2/2     Running     0          37m
    envoy-q777l                     2/2     Running     0          37m
    envoy-slt49                     1/2     Running     2          37m

    [root@k8s-master Ingress]# kubectl get svc -n projectcontour
    NAME      TYPE           CLUSTER-IP      EXTERNAL-IP   PORT(S)                      AGE
    contour   ClusterIP      10.100.120.94   <none>        8001/TCP                     39m
    envoy     LoadBalancer   10.97.48.41     <pending>     80:32668/TCP,443:32278/TCP   39m
    #EXTERNAL-IP shows <pending> because the cluster is not running on an IaaS platform:
    #the load balancer has been requested but stays pending. Access through NodePort is not affected.

    [root@k8s-master Ingress]# kubectl api-resources
    NAME                        SHORTNAMES                           APIGROUP            NAMESPACED   KIND
    ...
    extensionservices           extensionservice,extensionservices   projectcontour.io   true         ExtensionService
    httpproxies                 proxy,proxies                        projectcontour.io   true         HTTPProxy
    tlscertificatedelegations   tlscerts                             projectcontour.io   true         TLSCertificateDelegation

- Create the virtual host www.ik8s.io

    [root@k8s-master Ingress]# cat httpproxy-demo.yaml
    apiVersion: projectcontour.io/v1
    kind: HTTPProxy
    metadata:
      name: httpproxy-demo
      namespace: default
    spec:
      virtualhost:
        fqdn: www.ik8s.io   #Virtual host
        tls:
          secretName: ik8s-tls
          minimumProtocolVersion: "tlsv1.1"   #Minimum compatible protocol version
      routes:
      - conditions:
        - prefix: /
        services:
        - name: demoapp-deploy   #Backend svc
          port: 80
        permitInsecure: true   #true: allow plain HTTP access without redirecting to HTTPS

    [root@k8s-master Ingress]# kubectl apply -f httpproxy-demo.yaml
    httpproxy.projectcontour.io/httpproxy-demo configured

- View the HTTPProxy resource (httpproxy or httpproxies)

    [root@k8s-master Ingress]# kubectl get httpproxy
    NAME             FQDN          TLS SECRET   STATUS   STATUS DESCRIPTION
    httpproxy-demo   www.ik8s.io   ik8s-tls     valid    Valid HTTPProxy

    [root@k8s-master Ingress]# kubectl get httpproxies
    NAME             FQDN          TLS SECRET   STATUS   STATUS DESCRIPTION
    httpproxy-demo   www.ik8s.io   ik8s-tls     valid    Valid HTTPProxy

    [root@k8s-master Ingress]# kubectl describe httpproxy httpproxy-demo
    ...
    Spec:
      Routes:
        Conditions:
          Prefix:         /
        Permit Insecure:  true
        Services:
          Name:  demoapp-deploy
          Port:  80
      Virtualhost:
        Fqdn:  www.ik8s.io
        Tls:
          Minimum Protocol Version:  tlsv1.1
          Secret Name:               ik8s-tls
    Status:
      Conditions:
        Last Transition Time:  2021-09-13T08:44:00Z
        Message:               Valid HTTPProxy
        Observed Generation:   2
        Reason:                Valid
        Status:                True
        Type:                  Valid
      Current Status:          valid
      Description:             Valid HTTPProxy
      Load Balancer:
    Events:  <none>

    [root@k8s-master Ingress]# kubectl get svc -n projectcontour
    NAME      TYPE           CLUSTER-IP      EXTERNAL-IP   PORT(S)                      AGE
    contour   ClusterIP      10.100.120.94   <none>        8001/TCP                     39m
    envoy     LoadBalancer   10.97.48.41     <pending>     80:32668/TCP,443:32278/TCP   39m

- Add a hosts entry and test access

    [root@bigyong ~]# cat /etc/hosts
    127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
    ...
    192.168.54.171 www.ik8s.io

    [root@bigyong ~]# curl www.ik8s.io:32668
    iKubernetes demoapp v1.1 !! ClientIP: 192.168.113.54, ServerName: deployment-demo-867c7d9d55-9lnpq, ServerIP: 192.168.12.39!
    [root@bigyong ~]# curl www.ik8s.io:32668
    iKubernetes demoapp v1.1 !! ClientIP: 192.168.113.54, ServerName: deployment-demo-867c7d9d55-gw6qp, ServerIP: 192.168.113.39!

- HTTPS Access

    [root@bigyong ~]# curl https://www.ik8s.io:32278
    curl: (60) Peer's certificate issuer has been marked as not trusted by the user.
    More details here: http://curl.haxx.se/docs/sslcerts.html

    curl performs SSL certificate verification by default, using a "bundle" of Certificate Authority (CA) public keys (CA certs).
    If the default bundle file isn't adequate, you can specify an alternate file using the --cacert option.
    If this HTTPS server uses a certificate signed by a CA represented in the bundle, the certificate verification probably failed due to a problem with the certificate (it might be expired, or the name might not match the domain name in the URL).
    If you'd like to turn off curl's verification of the certificate, use the -k (or --insecure) option.

    [root@bigyong ~]# curl -k https://www.ik8s.io:32278   #Ignore the untrusted certificate; access succeeds
    iKubernetes demoapp v1.1 !! ClientIP: 192.168.113.54, ServerName: deployment-demo-867c7d9d55-9lnpq, Se

Example 1: Access control

- Create two Deployments with different versions

    [root@k8s-master Ingress]# kubectl create deployment demoappv11 --image='ikubernetes/demoapp:v1.1' -n dev
    deployment.apps/demoappv11 created
    [root@k8s-master Ingress]# kubectl create deployment demoappv12 --image='ikubernetes/demoapp:v1.2' -n dev
    deployment.apps/demoappv12 created

- Create the corresponding Services

    [root@k8s-master Ingress]# kubectl create service clusterip demoappv11 --tcp=80 -n dev
    service/demoappv11 created
    [root@k8s-master Ingress]# kubectl create service clusterip demoappv12 --tcp=80 -n dev
    service/demoappv12 created

    [root@k8s-master Ingress]# kubectl get svc -n dev
    NAME         TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)   AGE
    demoappv11   ClusterIP   10.99.204.65   <none>        80/TCP    19s
    demoappv12   ClusterIP   10.97.211.38   <none>        80/TCP    17s

    [root@k8s-master Ingress]# kubectl describe svc demoappv11 -n dev
    Name:              demoappv11
    Namespace:         dev
    Labels:            app=demoappv11
    Annotations:       <none>
    Selector:          app=demoappv11
    Type:              ClusterIP
    IP:                10.99.204.65
    Port:              80  80/TCP
    TargetPort:        80/TCP
    Endpoints:         192.168.12.53:80
    Session Affinity:  None
    Events:            <none>

    [root@k8s-master Ingress]# kubectl describe svc demoappv12 -n dev
    Name:              demoappv12
    Namespace:         dev
    Labels:            app=demoappv12
    Annotations:       <none>
    Selector:          app=demoappv12
    Type:              ClusterIP
    IP:                10.97.211.38
    Port:              80  80/TCP
    TargetPort:        80/TCP
    Endpoints:         192.168.51.79:80
    Session Affinity:  None
    Events:            <none>

- Access Test

    [root@k8s-master Ingress]# curl 10.99.204.65
    iKubernetes demoapp v1.1 !! ClientIP: 192.168.4.170, ServerName: demoappv11-59544d568d-5gg72, ServerIP: 192.168.12.53!
    [root@k8s-master Ingress]# curl 10.97.211.38
    iKubernetes demoapp v1.2 !! ClientIP: 192.168.4.170, ServerName: demoappv12-64c664955b-lkchk, ServerIP: 192.168.51.79!

- Deploy the HTTPProxy

    [root@k8s-master Ingress]# cat httpproxy-headers-routing.yaml
    apiVersion: projectcontour.io/v1
    kind: HTTPProxy
    metadata:
      name: httpproxy-headers-routing
      namespace: dev
    spec:
      virtualhost:
        fqdn: www.ilinux.io
      routes:   #Routes
      - conditions:
        - header:
            name: X-Canary   #The request carries an X-Canary header (any value)
            present: true
        - header:
            name: User-Agent   #The User-Agent header contains "curl"
            contains: curl
        services:   #Route to demoappv11 when both conditions are met
        - name: demoappv11
          port: 80
      - services:   #All other requests are routed to demoappv12
        - name: demoappv12
          port: 80

    [root@k8s-master Ingress]# kubectl apply -f httpproxy-headers-routing.yaml
    httpproxy.projectcontour.io/httpproxy-headers-routing unchanged

    [root@k8s-master Ingress]# kubectl get httpproxy -n dev
    NAME                        FQDN            TLS SECRET   STATUS   STATUS DESCRIPTION
    httpproxy-headers-routing   www.ilinux.io                valid    Valid HTTPProxy

    [root@k8s-master Ingress]# kubectl get svc -n projectcontour
    NAME      TYPE           CLUSTER-IP      EXTERNAL-IP   PORT(S)                      AGE
    contour   ClusterIP      10.100.120.94   <none>        8001/TCP                     114m
    envoy     LoadBalancer   10.97.48.41     <pending>     80:32668/TCP,443:32278/TCP   114m

- Access Test

    [root@bigyong ~]# cat /etc/hosts   #Add the hosts entry
    ...
    192.168.54.171 www.ik8s.io www.ilinux.io

    [root@bigyong ~]# curl http://www.ilinux.io   #Version 1.2 by default
    iKubernetes demoapp v1.2 !! ClientIP: 192.168.113.54, ServerName: demoappv12-64c664955b-lkchk, ServerIP: 192.168.51.79!
    [root@bigyong ~]# curl http://www.ilinux.io
    iKubernetes demoapp v1.2 !! ClientIP: 192.168.113.54, ServerName: demoappv12-64c664955b-lkchk, ServerIP: 192.168.51.79!

- Because the requests come from curl, the User-Agent condition is already met. Adding an X-Canary header (here X-Canary:true) satisfies both conditions, so the request is routed to version 1.1

    [root@bigyong ~]# curl -H "X-Canary:true" http://www.ilinux.io
    iKubernetes demoapp v1.1 !! ClientIP: 192.168.113.54, ServerName: demoappv11-59544d568d-5gg72, ServerIP: 192.168.12.53!
    [root@bigyong ~]# curl -H "X-Canary:true" http://www.ilinux.io
    iKubernetes demoapp v1.1 !! ClientIP: 192.168.113.54, ServerName: demoappv11-59544d568d-5gg72, ServerIP: 192.168.12.53!

    [root@k8s-master Ingress]# kubectl delete -f httpproxy-headers-routing.yaml
    httpproxy.projectcontour.io "httpproxy-headers-routing" deleted
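The matching behavior exercised in this example can be sketched in a few lines of Python. This is only an illustrative model, not Contour's or Envoy's implementation: the `ROUTES` structure, the function names, and the sample User-Agent strings are hypothetical, routes are assumed to be tried in the order listed, and `contains` is assumed to be a case-sensitive substring test.

```python
def header_matches(condition, headers):
    """Check one header condition against request headers keyed by lowercase name."""
    value = headers.get(condition["name"].lower())
    if value is None:
        return False
    if condition.get("present"):
        return True  # presence alone is enough; the value is not inspected
    if "contains" in condition:
        return condition["contains"] in value
    return False


def pick_service(routes, request_headers):
    """Return the service of the first route whose conditions all match."""
    headers = {name.lower(): value for name, value in request_headers.items()}
    for route in routes:
        if all(header_matches(c, headers) for c in route["conditions"]):
            return route["service"]
    return None


# Hypothetical in-memory form of the two routes in httpproxy-headers-routing.yaml.
ROUTES = [
    {
        "conditions": [
            {"name": "X-Canary", "present": True},
            {"name": "User-Agent", "contains": "curl"},
        ],
        "service": "demoappv11",
    },
    {"conditions": [], "service": "demoappv12"},  # no conditions: catch-all route
]

canary = pick_service(ROUTES, {"User-Agent": "curl/7.29.0", "X-Canary": "true"})
plain = pick_service(ROUTES, {"User-Agent": "curl/7.29.0"})
browser = pick_service(ROUTES, {"User-Agent": "Mozilla/5.0", "X-Canary": "true"})
print(canary, plain, browser)
```

Only the request that satisfies both conditions reaches demoappv11. Dropping either the X-Canary header or the curl User-Agent falls through to the catch-all route, which is why the plain curl calls above return v1.2.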

Example 2: Traffic Splitting (Canary Release)

- Release to a small share of traffic first; if no problems appear, roll out to all traffic

- Deploy an HTTPProxy that splits traffic 90% / 10% between the two versions

    [root@k8s-master Ingress]# cat httpproxy-traffic-splitting.yaml
    apiVersion: projectcontour.io/v1
    kind: HTTPProxy
    metadata:
      name: httpproxy-traffic-splitting
      namespace: dev
    spec:
      virtualhost:
        fqdn: www.ilinux.io
      routes:
      - conditions:
        - prefix: /
        services:
        - name: demoappv11
          port: 80
          weight: 90   #Version v1.1 receives 90% of the traffic
        - name: demoappv12
          port: 80
          weight: 10   #Version v1.2 receives 10% of the traffic

    [root@k8s-master Ingress]# kubectl get httpproxy -n dev
    NAME                          FQDN            TLS SECRET   STATUS   STATUS DESCRIPTION
    httpproxy-traffic-splitting   www.ilinux.io                valid    Valid HTTPProxy

- Access Test

    [root@bigyong ~]# while true; do curl http://www.ilinux.io; sleep .1; done   #The ratio of v1.1 to v1.2 is approximately 9:1
    iKubernetes demoapp v1.1 !! ClientIP: 192.168.113.54, ServerName: demoappv11-59544d568d-5gg72, ServerIP: 192.168.12.53!
    iKubernetes demoapp v1.1 !! ClientIP: 192.168.113.54, ServerName: demoappv11-59544d568d-5gg72, ServerIP: 192.168.12.53!
    iKubernetes demoapp v1.1 !! ClientIP: 192.168.113.54, ServerName: demoappv11-59544d568d-5gg72, ServerIP: 192.168.12.53!
    iKubernetes demoapp v1.2 !! ClientIP: 192.168.113.54, ServerName: demoappv12-64c664955b-lkchk, ServerIP: 192.168.51.79!
    iKubernetes demoapp v1.1 !! ClientIP: 192.168.113.54, ServerName: demoappv11-59544d568d-5gg72, ServerIP: 192.168.12.53!
    iKubernetes demoapp v1.1 !! ClientIP: 192.168.113.54, ServerName: demoappv11-59544d568d-5gg72, ServerIP: 192.168.12.53!
    iKubernetes demoapp v1.1 !! ClientIP: 192.168.113.54, ServerName: demoappv11-59544d568d-5gg72, ServerIP: 192.168.12.53!
    iKubernetes demoapp v1.1 !! ClientIP: 192.168.113.54, ServerName: demoappv11-59544d568d-5gg72, ServerIP: 192.168.12.53!
    iKubernetes demoapp v1.1 !! ClientIP: 192.168.113.54, ServerName: demoappv11-59544d568d-5gg72, ServerIP: 192.168.12.53!
    iKubernetes demoapp v1.1 !! ClientIP: 192.168.113.54, ServerName: demoappv11-59544d568d-5gg72, ServerIP: 192.168.12.53!
    iKubernetes demoapp v1.1 !! ClientIP: 192.168.113.54, ServerName: demoappv11-59544d568d-5gg72, ServerIP: 192.168.12.53!
    iKubernetes demoapp v1.1 !! ClientIP: 192.168.113.54, ServerName: demoappv11-59544d568d-5gg72, ServerIP: 192.168.12.53!
    iKubernetes demoapp v1.1 !! ClientIP: 192.168.113.54, ServerName: demoappv11-59544d568d-5gg72, ServerIP: 192.168.12.53!
    iKubernetes demoapp v1.1 !! ClientIP: 192.168.113.54, ServerName: demoappv11-59544d568d-5gg72, ServerIP: 192.168.12.53!
    iKubernetes demoapp v1.1 !! ClientIP: 192.168.113.54, ServerName: demoappv11-59544d568d-5gg72, ServerIP: 192.168.12.53!
    iKubernetes demoapp v1.1 !! ClientIP: 192.168.113.54, ServerName: demoappv11-59544d568d-5gg72, ServerIP: 192.168.12.53!
    iKubernetes demoapp v1.1 !! ClientIP: 192.168.113.54, ServerName: demoappv11-59544d568d-5gg72, ServerIP: 192.168.12.53!
    iKubernetes demoapp v1.2 !! ClientIP: 192.168.113.54, ServerName: demoappv12-64c664955b-lkchk, ServerIP: 192.168.51.79!
    iKubernetes demoapp v1.1 !! ClientIP: 192.168.113.54, ServerName: demoappv11-59544d568d-5gg72, ServerIP: 192.168.12.53!
    iKubernetes demoapp v1.1 !! ClientIP: 192.168.113.54, ServerName: demoappv11-59544d568d-5gg72, ServerIP: 192.168.12.53!
    iKubernetes demoapp v1.1 !! ClientIP: 192.168.113.54, ServerName: demoappv11-59544d568d-5gg72, ServerIP: 192.168.12.53!
    iKubernetes demoapp v1.1 !! ClientIP: 192.168.113.54, ServerName: demoappv11-59544d568d-5gg72, ServerIP: 192.168.12.53!
    iKubernetes demoapp v1.1 !! ClientIP: 192.168.113.54, ServerName: demoappv11-59544d568d-5gg72, ServerIP: 192.168.12.53!
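The roughly 9:1 split seen in the loop output is what weighted selection produces over many requests. The sketch below simulates it with Python's standard `random.choices`; it is a stand-in for illustration, not the algorithm Envoy actually uses, and the `services` list and function name are hypothetical.

```python
import random
from collections import Counter


def pick_service(services, rng):
    """Pick one service name with probability proportional to its weight."""
    names = [s["name"] for s in services]
    weights = [s["weight"] for s in services]
    return rng.choices(names, weights=weights, k=1)[0]


# Weights copied from httpproxy-traffic-splitting.yaml.
services = [
    {"name": "demoappv11", "weight": 90},
    {"name": "demoappv12", "weight": 10},
]

rng = random.Random(42)  # fixed seed so the simulation is repeatable
total = 10_000
counts = Counter(pick_service(services, rng) for _ in range(total))
share_v12 = counts["demoappv12"] / total
print(counts, share_v12)
```

With only a few dozen requests, as in the curl loop, the observed ratio can drift noticeably from 9:1. The share converges toward 10% as the number of requests grows.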

Example 3: Traffic Mirroring

    [root@k8s-master Ingress]# cat httpproxy-traffic-mirror.yaml
    apiVersion: projectcontour.io/v1
    kind: HTTPProxy
    metadata:
      name: httpproxy-traffic-mirror
      namespace: dev
    spec:
      virtualhost:
        fqdn: www.ilinux.io
      routes:
      - conditions:
        - prefix: /
        services:
        - name: demoappv11
          port: 80
        - name: demoappv12
          port: 80
          mirror: true   #Mirror traffic to this service

    [root@k8s-master Ingress]# kubectl apply -f httpproxy-traffic-mirror.yaml

    [root@k8s-master Ingress]# kubectl get httpproxy -n dev
    NAME                       FQDN            TLS SECRET   STATUS   STATUS DESCRIPTION
    httpproxy-traffic-mirror   www.ilinux.io                valid    Valid HTTPProxy

    [root@k8s-master Ingress]# kubectl get pod -n dev
    NAME                          READY   STATUS    RESTARTS   AGE
    demoappv11-59544d568d-5gg72   1/1     Running   0          74m
    demoappv12-64c664955b-lkchk   1/1     Running   0          74m

- Access Test

    #All responses come from v1.1
    [root@bigyong ~]# while true; do curl http://www.ilinux.io; sleep .1; done
    iKubernetes demoapp v1.1 !! ClientIP: 192.168.113.54, ServerName: demoappv11-59544d568d-5gg72, ServerIP: 192.168.12.53!
    iKubernetes demoapp v1.1 !! ClientIP: 192.168.113.54, ServerName: demoappv11-59544d568d-5gg72, ServerIP: 192.168.12.53!
    iKubernetes demoapp v1.1 !! ClientIP: 192.168.113.54, ServerName: demoappv11-59544d568d-5gg72, ServerIP: 192.168.12.53!
    iKubernetes demoapp v1.1 !! ClientIP: 192.168.113.54, ServerName: demoappv11-59544d568d-5gg72, ServerIP: 192.168.12.53!
    iKubernetes demoapp v1.1 !! ClientIP: 192.168.113.54, ServerName: demoappv11-59544d568d-5gg72, ServerIP: 192.168.12.53!
    iKubernetes demoapp v1.1 !! ClientIP: 192.168.113.54, ServerName: demoappv11-59544d568d-5gg72, ServerIP: 192.168.12.53!
    iKubernetes demoapp v1.1 !! ClientIP: 192.168.113.54, ServerName: demoappv11-59544d568d-5gg72, ServerIP: 192.168.12.53!
    iKubernetes demoapp v1.1 !! ClientIP: 192.168.113.54, ServerName: demoappv11-59544d568d-5gg72, ServerIP: 192.168.12.53!
    iKubernetes demoapp v1.1 !! ClientIP: 192.168.113.54, ServerName: demoappv11-59544d568d-5gg72, ServerIP: 192.168.12.53!
    iKubernetes demoapp v1.1 !! ClientIP: 192.168.113.54, ServerName: demoappv11-59544d568d-5gg72, ServerIP: 192.168.12.53!
    iKubernetes demoapp v1.1 !! ClientIP: 192.168.113.54, ServerName: demoappv11-59544d568d-5gg72, ServerIP: 192.168.12.53!
    iKubernetes demoapp v1.1 !! ClientIP: 192.168.113.54, ServerName: demoappv11-59544d568d-5gg72, ServerIP: 192.168.12.53!
    iKubernetes demoapp v1.1 !! ClientIP: 192.168.113.54, ServerName: demoappv11-59544d568d-5gg72, ServerIP: 192.168.12.53!
    iKubernetes demoapp v1.1 !! ClientIP: 192.168.113.54, ServerName: demoappv11-59544d568d-5gg72, ServerIP: 192.168.12.53!
    iKubernetes demoapp v1.1 !! ClientIP: 192.168.113.54, ServerName: demoappv11-59544d568d-5gg72, ServerIP: 192.168.12.53!

- View the v1.2 Pod logs: the Pod receives the same traffic, and the requests are served normally

    [root@k8s-master Ingress]# kubectl get pod -n dev
    NAME                          READY   STATUS    RESTARTS   AGE
    demoappv11-59544d568d-5gg72   1/1     Running   0          74m
    demoappv12-64c664955b-lkchk   1/1     Running   0          74m

    [root@k8s-master Ingress]# kubectl logs demoappv12-64c664955b-lkchk -n dev
     * Running on http://0.0.0.0:80/ (Press CTRL+C to quit)
    192.168.4.170 - - [13/Sep/2021 09:35:01] "GET / HTTP/1.1" 200 -
    192.168.113.54 - - [13/Sep/2021 09:46:24] "GET / HTTP/1.1" 200 -
    192.168.113.54 - - [13/Sep/2021 09:46:28] "GET / HTTP/1.1" 200 -
    192.168.113.54 - - [13/Sep/2021 09:46:29] "GET / HTTP/1.1" 200 -
    192.168.113.54 - - [13/Sep/2021 09:47:12] "GET / HTTP/1.1" 200 -
    192.168.113.54 - - [13/Sep/2021 09:47:25] "GET / HTTP/1.1" 200 -
    192.168.113.54 - - [13/Sep/2021 09:50:50] "GET / HTTP/1.1" 200 -
    192.168.113.54 - - [13/Sep/2021 09:50:51] "GET / HTTP/1.1" 200 -
    192.168.113.54 - - [13/Sep/2021 09:50:51] "GET / HTTP/1.1" 200 -
    192.168.113.54 - - [13/Sep/2021 09:50:52] "GET / HTTP/1.1" 200 -
    192.168.113.54 - - [13/Sep/2021 10:03:49] "GET / HTTP/1.1" 200 -
    192.168.113.54 - - [13/Sep/2021 10:03:49] "GET / HTTP/1.1" 200 -
    192.168.113.54 - - [13/Sep/2021 10:03:49] "GET / HTTP/1.1" 200 -
    192.168.113.54 - - [13/Sep/2021 10:03:50] "GET / HTTP/1.1" 200 -
    192.168.113.54 - - [13/Sep/2021 10:03:51] "GET / HTTP/1.1" 200 -
    192.168.113.54 - - [13/Sep/2021 10:03:51] "GET / HTTP/1.1" 200 -
    192.168.113.54 - - [13/Sep/2021 10:03:52] "GET / HTTP/1.1" 200 -
    192.168.113.54 - - [13/Sep/2021 10:03:53] "GET / HTTP/1.1" 200 -
    192.168.113.54 - - [13/Sep/2021 10:03:53] "GET / HTTP/1.1" 200 -
    192.168.113.54 - - [13/Sep/2021 10:03:56] "GET / HTTP/1.1" 200 -
    192.168.113.54 - - [13/Sep/2021 10:03:56] "GET / HTTP/1.1" 200 -
    192.168.113.54 - - [13/Sep/2021 10:04:07] "GET / HTTP/1.1" 200 -
    192.168.113.54 - - [13/Sep/2021 10:04:14] "GET / HTTP/1.1" 200 -
    192.168.113.54 - - [13/Sep/2021 10:04:28] "GET / HTTP/1.1" 200 -
    192.168.113.54 - - [13/Sep/2021 10:05:14] "GET / HTTP/1.1" 200 -
    192.168.113.54 - - [13/Sep/2021 10:05:16] "GET / HTTP/1.1" 200 -
    192.168.113.54 - - [13/Sep/2021 10:41:57] "GET / HTTP/1.1" 200 -
    192.168.113.54 - - [13/Sep/2021 10:41:57] "GET / HTTP/1.1" 200 -
    192.168.113.54 - - [13/Sep/2021 10:41:57] "GET / HTTP/1.1" 200 -
    192.168.113.54 - - [13/Sep/2021 10:41:58] "GET / HTTP/1.1" 200 -
    192.168.113.54 - - [13/Sep/2021 10:41:58] "GET / HTTP/1.1" 200 -
    192.168.113.54 - - [13/Sep/2021 10:41:58] "GET / HTTP/1.1" 200 -
    192.168.113.54 - - [13/Sep/2021 10:41:58] "GET / HTTP/1.1" 200 -
    192.168.113.54 - - [13/Sep/2021 10:41:58] "GET / HTTP/1.1" 200 -
    192.168.113.54 - - [13/Sep/2021 10:41:58] "GET / HTTP/1.1" 200 -
    192.168.113.54 - - [13/Sep/2021 10:41:58] "GET / HTTP/1.1" 200 -
    192.168.113.54 - - [13/Sep/2021 10:41:58] "GET / HTTP/1.1" 200 -
    192.168.113.54 - - [13/Sep/2021 10:41:59] "GET / HTTP/1.1" 200 -
    192.168.113.54 - - [13/Sep/2021 10:41:59] "GET / HTTP/1.1" 200 -
    192.168.113.54 - - [13/Sep/2021 10:41:59] "GET / HTTP/1.1" 200 -
    192.168.113.54 - - [13/Sep/2021 10:41:59] "GET / HTTP/1.1" 200 -
    192.168.113.54 - - [13/Sep/2021 10:41:59] "GET / HTTP/1.1" 200 -
    192.168.113.54 - - [13/Sep/2021 10:41:59] "GET / HTTP/1.1" 200 -
    192.168.113.54 - - [13/Sep/2021 10:41:59] "GET / HTTP/1.1" 200 -

    [root@k8s-master Ingress]# kubectl delete -f httpproxy-traffic-mirror.yaml
    httpproxy.projectcontour.io "httpproxy-traffic-mirror" deleted
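The behavior observed here, where clients only ever see v1.1 responses while v1.2 still logs every request, can be modeled with a small Python sketch. It is a simplified illustration under stated assumptions, not Envoy's implementation: the backends are in-memory functions, all names are hypothetical, and the mirror call is made inline rather than in the background.

```python
def make_backend(name, log):
    """Build a fake backend that records each request path and returns a banner."""
    def backend(path):
        log.append(path)
        return f"response from {name}"
    return backend


def proxy_with_mirror(path, primary, mirror):
    """Forward the request to the primary backend and copy it to the mirror."""
    response = primary(path)
    try:
        mirror(path)  # the mirror's response is deliberately discarded
    except Exception:
        pass  # a failing mirror must not affect what the client receives
    return response


v11_log, v12_log = [], []
primary = make_backend("demoappv11", v11_log)
mirror = make_backend("demoappv12", v12_log)

replies = [proxy_with_mirror("/", primary, mirror) for _ in range(3)]
print(replies[0], len(v11_log), len(v12_log))
```

Because the mirrored response never reaches the client, mirroring is a low-risk way to exercise a new version with real traffic before it serves any users.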

Example 4: Custom Load-Balancing Strategy

    [root@k8s-master Ingress]# cat httpproxy-lb-strategy.yaml
    apiVersion: projectcontour.io/v1
    kind: HTTPProxy
    metadata:
      name: httpproxy-lb-strategy
      namespace: dev
    spec:
      virtualhost:
        fqdn: www.ilinux.io
      routes:
      - conditions:
        - prefix: /
        services:
        - name: demoappv11
          port: 80
        - name: demoappv12
          port: 80
        loadBalancerPolicy:
          strategy: Random   #Random selection strategy

Example 5: HTTPProxy Service Resilience and Health Check

    [root@k8s-master Ingress]# cat httpproxy-retry-timeout.yaml
    apiVersion: projectcontour.io/v1
    kind: HTTPProxy
    metadata:
      name: httpproxy-retry-timeout
      namespace: dev
    spec:
      virtualhost:
        fqdn: www.ilinux.io
      routes:
      - timeoutPolicy:
          response: 2s   #Time out if no response arrives within 2s
          idle: 5s   #Idle timeout of 5s
        retryPolicy:
          count: 3   #Retry up to 3 times
          perTryTimeout: 500ms   #Timeout for each retry attempt
        services:
        - name: demoappv12
          port: 80

Reference link: