Network Plugin flannel

One solution for cross-host container communication is Flannel, introduced by CoreOS. It supports three backends: UDP, VXLAN, and host-gw.

udp mode: uses the flannel0 device for encapsulation and decapsulation. This is not native to the kernel and requires many context switches, so performance is very poor.

vxlan mode: uses the flannel.1 device for encapsulation and decapsulation. The kernel supports this natively, so performance is better.

host-gw mode: needs no intermediate device such as flannel.1. The destination host itself serves as the next hop for its subnet, which gives the best performance.

The performance loss of host-gw is about 10%, while that of the other network schemes, which rely on the VXLAN tunneling mechanism, is about 20% to 30%.

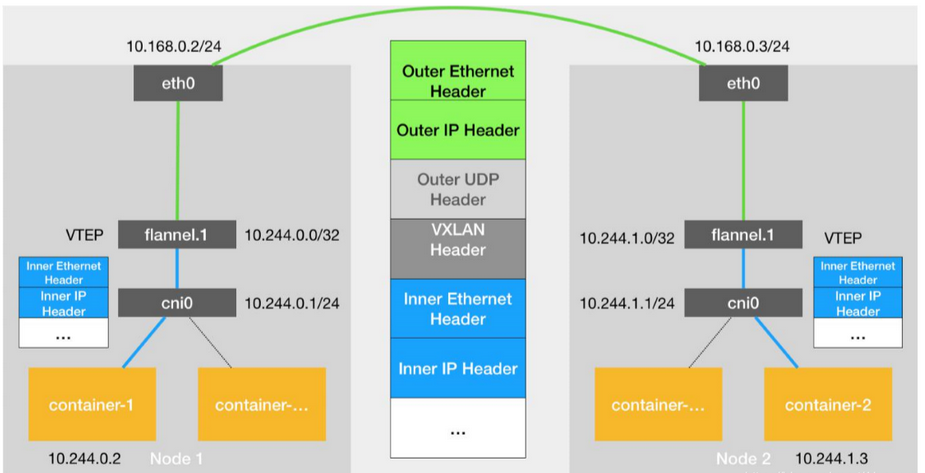

1 Flannel-vxlan mode cross-host communication

What is VXLAN?

VXLAN (Virtual Extensible LAN) is a network virtualization technology supported natively by Linux. VXLAN performs encapsulation and decapsulation entirely in kernel space and builds an overlay network through a tunnel mechanism.

The design idea of VXLAN is:

On top of the existing Layer 3 network, VXLAN overlays a virtual Layer 2 network maintained by the kernel VXLAN module, so that the "hosts" (virtual machines or containers) attached to this VXLAN Layer 2 network can communicate as freely as if they were on the same local area network (LAN).

To open a "tunnel" across this Layer 2 network, VXLAN sets up a special network device on each host to act as the two ends of the tunnel. This device is called a VTEP (VXLAN Tunnel End Point).

Principle of Flannel vxlan mode cross-host communication

The flannel.1 device is the VTEP of the VXLAN. It has both an IP address and a MAC address.

As in UDP mode, when a container sends a request, the IP packet (here with destination address 10.1.16.3) first appears on the docker bridge and is then routed to the local flannel.1 device for processing. This is the entrance of the tunnel.

To encapsulate the "original IP packet" and send it to the correct host, VXLAN needs to find the exit of the tunnel, which is the VTEP device of the destination host. The information about that device is maintained by the flanneld process on each host.

VTEP devices communicate with each other using Layer 2 data frames.

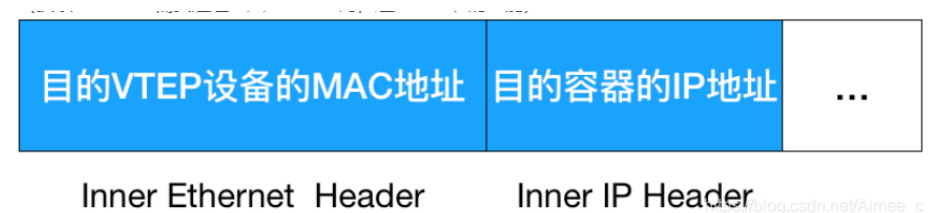

When the source VTEP device receives the original IP packet, it adds the destination VTEP's MAC address, encapsulates the packet into an inner data frame, and sends it to the destination VTEP device. (The MAC address has to be looked up from the Layer 3 IP address, which is what the ARP table is for.)

The encapsulation only adds a Layer 2 header and does not change the contents of the "original IP packet".
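To make the inner-frame step concrete, here is a minimal Python sketch that prepends a Layer 2 header to an unchanged IP packet. The MAC addresses and the placeholder packet bytes are made up for illustration, and the function names are hypothetical; in reality this work is done inside the kernel VXLAN module.

```python
import struct

ETHERTYPE_IPV4 = 0x0800  # EtherType value for an IPv4 payload


def mac_to_bytes(mac: str) -> bytes:
    """Convert 'aa:bb:cc:dd:ee:ff' into its 6 raw bytes."""
    return bytes(int(part, 16) for part in mac.split(":"))


def build_inner_frame(dst_mac: str, src_mac: str, ip_packet: bytes) -> bytes:
    """Prepend a 14-byte Ethernet header; the IP packet itself is untouched."""
    header = (
        mac_to_bytes(dst_mac)
        + mac_to_bytes(src_mac)
        + struct.pack("!H", ETHERTYPE_IPV4)
    )
    return header + ip_packet


# Hypothetical values: a placeholder 20-byte IPv4 header and two VTEP MACs.
original_ip_packet = b"\x45" + b"\x00" * 19
inner_frame = build_inner_frame(
    dst_mac="5e:f8:4f:00:e3:37",  # assumed MAC of the remote flannel.1
    src_mac="0a:58:0a:01:0f:01",  # assumed MAC of the local flannel.1
    ip_packet=original_ip_packet,
)
print(len(inner_frame), inner_frame[14:] == original_ip_packet)
```

The last 20 bytes of the frame are byte-for-byte the original packet, which is the point made above: only a header is added.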

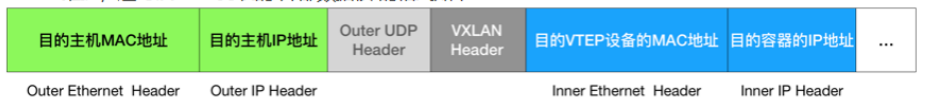

The MAC addresses of these VTEP devices have no practical meaning on the host network, so the frame built above, called the inner data frame, cannot be transmitted on the host's Layer 2 network. The Linux kernel therefore has to encapsulate it further into an ordinary data frame of the host network, which carries the inner data frame out through the host's eth0. To do this, Linux places a VXLAN header in front of the inner data frame. The VXLAN header contains an important field called the VNI, which a VTEP uses to decide whether a data frame is one it should process.
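As an illustration of where the VNI sits, the sketch below packs and parses the 8-byte VXLAN header. The layout (one flags byte with the "VNI valid" bit 0x08, 24 reserved bits, a 24-bit VNI, 8 reserved bits) follows RFC 7348; the function names are hypothetical and the kernel does this work itself.

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08  # the "I" flag: the VNI field is valid


def build_vxlan_header(vni: int) -> bytes:
    """Pack flags (8 bits), reserved (24), VNI (24), reserved (8)."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def parse_vni(header: bytes) -> int:
    """Return the VNI from a VXLAN header, checking the valid flag."""
    flags_word, vni_word = struct.unpack("!II", header[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI-valid flag is not set")
    return vni_word >> 8


header = build_vxlan_header(1)  # VNI 1, as used by flannel.1
print(header.hex(), parse_vni(header))
```

A receiving VTEP can compare the parsed VNI with its own to decide whether the frame belongs to it.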

In Flannel, the default value of the VNI is 1, which is why the VTEP device on each host is called flannel.1.

A flannel.1 device only knows the MAC address of the flannel.1 device at the other end; it does not know the address of the host that device lives on.

Inside the Linux kernel, a network device decides where to forward a frame by consulting the FDB (forwarding database), and the FDB entries for the flannel.1 device are maintained by the flanneld process. The Linux kernel then prepends a Layer 2 frame header to the resulting IP packet and fills in the MAC address of Node2. This MAC address is something Node1's ARP table learns in the normal way and does not need to be maintained by Flannel. With that, Linux has assembled the complete "outer data frame".
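The lookup chain described here (route, then ARP entry, then FDB entry) can be modelled with three small tables. This is only a sketch under assumptions: the gateway address, the MAC address, and the host IP 172.25.1.3 are invented for illustration, and real entries live in the kernel, where flanneld programs them.

```python
import ipaddress

# Hypothetical tables as flanneld might program them on Node1.
ROUTES = {"10.244.2.0/24": "10.244.2.0"}     # pod subnet -> remote flannel.1 IP
ARP = {"10.244.2.0": "5e:f8:4f:00:e3:37"}    # remote flannel.1 IP -> its MAC
FDB = {"5e:f8:4f:00:e3:37": "172.25.1.3"}    # remote flannel.1 MAC -> host IP


def resolve_outer_destination(dst_pod_ip: str) -> tuple[str, str]:
    """Return (inner destination MAC, outer destination host IP)."""
    addr = ipaddress.ip_address(dst_pod_ip)
    for cidr, gateway in ROUTES.items():
        if addr in ipaddress.ip_network(cidr):
            vtep_mac = ARP[gateway]   # Layer 3 -> Layer 2 lookup
            host_ip = FDB[vtep_mac]   # which host owns that VTEP
            return vtep_mac, host_ip
    raise LookupError(f"no route to {dst_pod_ip}")


print(resolve_outer_destination("10.244.2.76"))
```

The MAC goes into the inner frame header, while the host IP becomes the destination of the outer packet sent through eth0.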

Node1's flannel.1 device can then send this data frame out through eth0, and it travels across the host network to eth0 on Node2.

Node2's kernel network stack sees that this data frame has a VXLAN header with a VNI of 1. The Linux kernel unpacks it, extracts the inner data frame, and hands it to Node2's flannel.1 device according to the VNI value.

2 Flannel-host-gw mode cross-host communication (pure Layer 3)

This is a pure Layer 3 network scheme with the highest performance.

The host-gw mode works by setting the next hop of each Flannel subnet to the IP address of the host that owns that subnet. In other words, the host acts as the gateway on the container's communication path, which is what host-gw means.

All subnet and host information is stored in etcd. flanneld only needs to watch for changes to this data and update the routing table in real time.

The core idea is that when an IP packet is encapsulated into a frame, the destination MAC address is taken from the next hop in the routing table, so the frame reaches the destination host over the Layer 2 network. This is why host-gw requires the hosts to be reachable from each other at Layer 2.
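The routing rule that host-gw installs can be sketched as follows. The subnet-to-host mapping and the host IPs 172.25.1.2 and 172.25.1.3 are assumptions for illustration; flanneld programs equivalent routes itself, so the generated `ip route` lines are only a readable stand-in for what ends up in the routing table.

```python
import ipaddress

# Hypothetical mapping of Flannel subnets to the hosts that own them.
SUBNET_TO_HOST = {
    "10.244.1.0/24": "172.25.1.2",  # assumed address of server2
    "10.244.2.0/24": "172.25.1.3",  # assumed address of server3
}


def host_gw_routes(subnet_to_host: dict, local_host_ip: str, device: str) -> list:
    """Build one route per remote subnet, with the owning host as next hop."""
    routes = []
    for subnet, host_ip in sorted(subnet_to_host.items()):
        if host_ip == local_host_ip:
            continue  # the local subnet is reached through the local bridge
        ipaddress.ip_network(subnet)   # validate the subnet
        ipaddress.ip_address(host_ip)  # validate the next hop
        routes.append(f"ip route replace {subnet} via {host_ip} dev {device}")
    return routes


for line in host_gw_routes(SUBNET_TO_HOST, "172.25.1.1", "ens33"):
    print(line)
```

No tunnel device appears anywhere in the result, which is where the performance advantage over VXLAN comes from.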

[kubeadm@server1 mainfest]$ cp /home/kubeadm/kube-flannel.yml .

[kubeadm@server1 mainfest]$ ls

cronjob.yml daemonset.yml deployment.yml init.yml job.yml kube-flannel.yml pod2.yml pod.yml rs.yml service.yml

[kubeadm@server1 mainfest]$ kubectl delete -f kube-flannel.yml

podsecuritypolicy.policy "psp.flannel.unprivileged" deleted

clusterrole.rbac.authorization.k8s.io "flannel" deleted

clusterrolebinding.rbac.authorization.k8s.io "flannel" deleted

serviceaccount "flannel" deleted

configmap "kube-flannel-cfg" deleted

daemonset.apps "kube-flannel-ds-amd64" deleted

daemonset.apps "kube-flannel-ds-arm64" deleted

daemonset.apps "kube-flannel-ds-arm" deleted

daemonset.apps "kube-flannel-ds-ppc64le" deleted

daemonset.apps "kube-flannel-ds-s390x" deleted

[kubeadm@server1 mainfest]$ vim kube-flannel.yml --------------->Change the backend mode to host-gw here

[kubeadm@server1 mainfest]$ kubectl apply -f kube-flannel.yml

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds-amd64 created

daemonset.apps/kube-flannel-ds-arm64 created

daemonset.apps/kube-flannel-ds-arm created

daemonset.apps/kube-flannel-ds-ppc64le created

daemonset.apps/kube-flannel-ds-s390x created

[kubeadm@server1 mainfest]$ kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-698fcc7d7c-n8j7q 1/1 Running 4 7d13h

coredns-698fcc7d7c-r6tsw 1/1 Running 4 7d13h

etcd-server1 1/1 Running 4 7d13h

kube-apiserver-server1 1/1 Running 4 7d13h

kube-controller-manager-server1 1/1 Running 4 7d13h

kube-flannel-ds-amd64-n67nh 1/1 Running 0 30s

kube-flannel-ds-amd64-qd4nw 1/1 Running 0 30s

kube-flannel-ds-amd64-wg2tg 1/1 Running 0 30s

kube-proxy-4xlms 1/1 Running 0 10h

kube-proxy-gx7jc 1/1 Running 0 10h

kube-proxy-n58d5 1/1 Running 0 10h

kube-scheduler-server1 1/1 Running 4 7d13h
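The edit made in kube-flannel.yml is not shown in the transcript. In the upstream manifest the backend is selected by the `net-conf.json` entry of the `kube-flannel-cfg` ConfigMap, so the change is most likely the one sketched below. The helper function is hypothetical, and the network 10.244.0.0/16 is taken from the pod addresses that appear later in this walkthrough.

```python
import json


def flannel_net_conf(backend_type: str, network: str = "10.244.0.0/16",
                     **backend_options) -> dict:
    """Build the dictionary that goes into net-conf.json."""
    return {
        "Network": network,
        "Backend": {"Type": backend_type, **backend_options},
    }


# The upstream manifest ships with "vxlan"; host-gw only changes the Type.
print(json.dumps(flannel_net_conf("host-gw"), indent=2))
```

Because flanneld reads this configuration at startup, the transcript deletes and re-applies the manifest so that the flannel pods are recreated with the new backend.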

[kubeadm@server1 mainfest]$ vim pod2.yml

[kubeadm@server1 mainfest]$ cat pod2.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deployment-example
spec:
  replicas: 4
  selector:
    matchLabels:
      app: myapp
  template:
    metadata:
      labels:
        app: myapp
    spec:
      containers:
      - name: myapp
        image: myapp:v2

[kubeadm@server1 mainfest]$ kubectl apply -f pod2.yml

deployment.apps/deployment-example configured

[kubeadm@server1 mainfest]$ vim service.yml

[kubeadm@server1 mainfest]$ cat service.yml

kind: Service
apiVersion: v1
metadata:
  name: myservice
spec:
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
  selector:
    app: myapp
  type: NodePort

[kubeadm@server1 mainfest]$ kubectl apply -f service.yml

service/myservice created

[kubeadm@server1 mainfest]$ kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

deployment-example-67764dd8bd-495p4 1/1 Running 0 53s 10.244.1.51 server2 <none> <none>

deployment-example-67764dd8bd-jl7nl 1/1 Running 1 3h52m 10.244.1.50 server2 <none> <none>

deployment-example-67764dd8bd-psr8v 1/1 Running 0 53s 10.244.2.76 server3 <none> <none>

deployment-example-67764dd8bd-zvd28 1/1 Running 1 3h52m 10.244.2.75 server3 <none> <none>

[kubeadm@server1 mainfest]$ kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 7d15h

myservice NodePort 10.102.1.239 <none> 80:31334/TCP 21s

3 Flannel-vxlan+directrouting mode

[kubeadm@server1 mainfest]$ kubectl delete -f kube-flannel.yml

podsecuritypolicy.policy "psp.flannel.unprivileged" deleted

clusterrole.rbac.authorization.k8s.io "flannel" deleted

clusterrolebinding.rbac.authorization.k8s.io "flannel" deleted

serviceaccount "flannel" deleted

configmap "kube-flannel-cfg" deleted

daemonset.apps "kube-flannel-ds-amd64" deleted

daemonset.apps "kube-flannel-ds-arm64" deleted

daemonset.apps "kube-flannel-ds-arm" deleted

daemonset.apps "kube-flannel-ds-ppc64le" deleted

daemonset.apps "kube-flannel-ds-s390x" deleted

[kubeadm@server1 mainfest]$ vim kube-flannel.yml ---------->Turn on direct routing

[kubeadm@server1 mainfest]$ kubectl apply -f kube-flannel.yml

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds-amd64 created

daemonset.apps/kube-flannel-ds-arm64 created

daemonset.apps/kube-flannel-ds-arm created

daemonset.apps/kube-flannel-ds-ppc64le created

daemonset.apps/kube-flannel-ds-s390x created

[kubeadm@server1 mainfest]$ kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-698fcc7d7c-n8j7q 1/1 Running 5 7d15h

coredns-698fcc7d7c-r6tsw 1/1 Running 5 7d15h

etcd-server1 1/1 Running 5 7d15h

kube-apiserver-server1 1/1 Running 5 7d15h

kube-controller-manager-server1 1/1 Running 5 7d15h

kube-flannel-ds-amd64-6h7l7 1/1 Running 0 8s

kube-flannel-ds-amd64-7gtj9 1/1 Running 0 8s

kube-flannel-ds-amd64-l4fwl 1/1 Running 0 8s

kube-proxy-4xlms 1/1 Running 2 13h

kube-proxy-gx7jc 1/1 Running 2 13h

kube-proxy-n58d5 1/1 Running 2 13h

kube-scheduler-server1 1/1 Running 5 7d15h

[kubeadm@server1 mainfest]$ kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

deployment-example-67764dd8bd-495p4 1/1 Running 0 19m 10.244.1.51 server2 <none> <none>

deployment-example-67764dd8bd-jl7nl 1/1 Running 1 4h10m 10.244.1.50 server2 <none> <none>

deployment-example-67764dd8bd-psr8v 1/1 Running 0 19m 10.244.2.76 server3 <none> <none>

deployment-example-67764dd8bd-zvd28 1/1 Running 1 4h10m 10.244.2.75 server3 <none> <none>

[kubeadm@server1 mainfest]$ kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 7d15h

myservice NodePort 10.102.1.239 <none> 80:31334/TCP 19m
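With the vxlan backend, Flannel's `DirectRouting` option makes it use direct routes (as host-gw does) to hosts on the same subnet and keep VXLAN encapsulation only for hosts on other subnets. The sketch below shows the setting and a simplified version of that decision; the interface address 172.25.1.1/24 comes from the `ip addr` output of server1 in this walkthrough, while the remote addresses and the function name are assumptions.

```python
import ipaddress

# Backend section of net-conf.json with direct routing turned on.
NET_CONF = {
    "Network": "10.244.0.0/16",
    "Backend": {"Type": "vxlan", "DirectRouting": True},
}


def choose_path(local_interface: str, remote_host_ip: str) -> str:
    """Simplified model: same subnet -> plain route, otherwise VXLAN tunnel."""
    local = ipaddress.ip_interface(local_interface)
    if ipaddress.ip_address(remote_host_ip) in local.network:
        return "direct-route"
    return "vxlan"


print(choose_path("172.25.1.1/24", "172.25.1.3"))  # assumed same-subnet peer
print(choose_path("172.25.1.1/24", "10.0.5.9"))    # assumed remote-subnet peer
```

In a lab where all nodes share one subnet, every peer takes the direct route, so traffic should behave as it does in host-gw mode.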

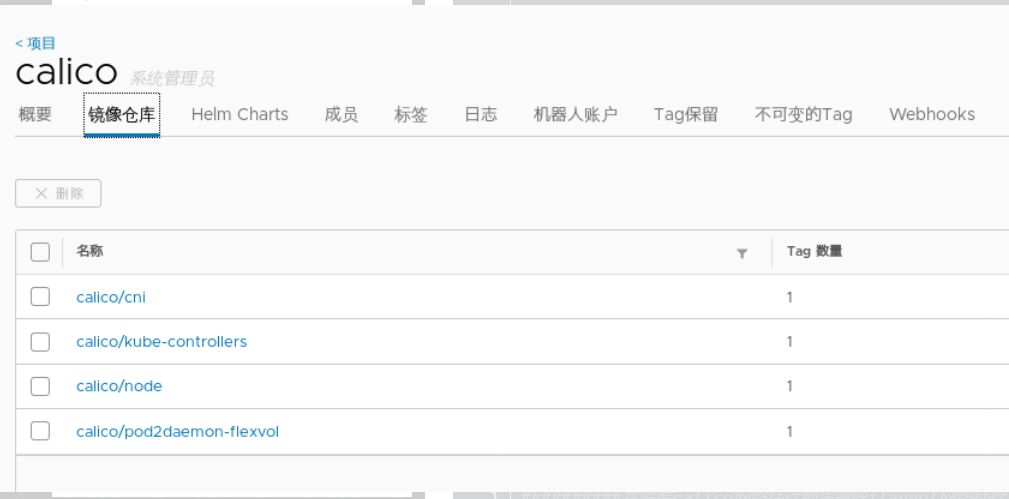

Network Plugin calico

Reference website: https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/create-cluster-kubeadm/

Calico provides not only network connectivity but also network policy.

Create a new calico project in the private repository to store the Calico images.

Pull the images that Calico requires.

Remove the flannel network plug-in before installing the calico network plug-in, to prevent the two plug-ins from conflicting at run time:

[root@server2 mainfest]# kubectl delete -f kube-flannel.yml

podsecuritypolicy.policy "psp.flannel.unprivileged" deleted

clusterrole.rbac.authorization.k8s.io "flannel" deleted

clusterrolebinding.rbac.authorization.k8s.io "flannel" deleted

serviceaccount "flannel" deleted

configmap "kube-flannel-cfg" deleted

daemonset.apps "kube-flannel-ds-amd64" deleted

daemonset.apps "kube-flannel-ds-arm64" deleted

daemonset.apps "kube-flannel-ds-arm" deleted

daemonset.apps "kube-flannel-ds-ppc64le" deleted

daemonset.apps "kube-flannel-ds-s390x" deleted

[root@server2 mainfest]# cd /etc/cni/net.d/

[root@server2 net.d]# ls

10-flannel.conflist

[root@server2 net.d]# mv 10-flannel.conflist /mnt

Run this on all nodes.

[kubeadm@server1 ~]$ wget https://docs.projectcalico.org/v3.14/manifests/calico.yaml ##Download the required calico configuration file

[kubeadm@server1 mainfest]$ cp /home/kubeadm/calico.yaml .

[kubeadm@server1 mainfest]$ ls

calico.yaml cronjob.yml daemonset.yml deployment.yml init.yml job.yml kube-flannel.yml pod2.yml pod.yml rs.yml service.yml

[kubeadm@server1 mainfest]$ vim calico.yaml -------------->Disable IPIP tunnel mode

# Enable IPIP
- name: CALICO_IPV4POOL_IPIP
  value: "off"

[kubeadm@server1 mainfest]$ kubectl apply -f calico.yaml

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

serviceaccount/calico-node created

deployment.apps/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

[kubeadm@server1 mainfest]$ kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-76d4774d89-r2jg9 1/1 Running 0 15s

calico-node-8dvkh 0/1 PodInitializing 0 15s

calico-node-l6kw6 0/1 Init:0/3 0 15s

calico-node-lbqhr 0/1 PodInitializing 0 15s

coredns-698fcc7d7c-n8j7q 1/1 Running 5 7d16h

coredns-698fcc7d7c-r6tsw 1/1 Running 5 7d16h

etcd-server1 1/1 Running 5 7d16h

kube-apiserver-server1 1/1 Running 5 7d16h

kube-controller-manager-server1 1/1 Running 5 7d16h

kube-proxy-4xlms 1/1 Running 2 13h

kube-proxy-gx7jc 1/1 Running 2 13h

kube-proxy-n58d5 1/1 Running 2 13h

kube-scheduler-server1 1/1 Running 5 7d16h

[kubeadm@server1 mainfest]$ kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-76d4774d89-r2jg9 1/1 Running 0 103s

calico-node-8dvkh 0/1 Running 0 103s

calico-node-l6kw6 0/1 Init:2/3 0 103s

calico-node-lbqhr 0/1 Running 0 103s

coredns-698fcc7d7c-n8j7q 1/1 Running 5 7d16h

coredns-698fcc7d7c-r6tsw 1/1 Running 5 7d16h

etcd-server1 1/1 Running 5 7d16h

kube-apiserver-server1 1/1 Running 5 7d16h

kube-controller-manager-server1 1/1 Running 5 7d16h

kube-proxy-4xlms 1/1 Running 2 13h

kube-proxy-gx7jc 1/1 Running 2 13h

kube-proxy-n58d5 1/1 Running 2 13h

kube-scheduler-server1 1/1 Running 5 7d16h

[kubeadm@server1 mainfest]$ kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-76d4774d89-r2jg9 1/1 Running 0 2m18s

calico-node-8dvkh 0/1 Running 0 2m18s

calico-node-l6kw6 0/1 PodInitializing 0 2m18s

calico-node-lbqhr 0/1 Running 0 2m18s

coredns-698fcc7d7c-n8j7q 1/1 Running 5 7d16h

coredns-698fcc7d7c-r6tsw 1/1 Running 5 7d16h

etcd-server1 1/1 Running 5 7d16h

kube-apiserver-server1 1/1 Running 5 7d16h

kube-controller-manager-server1 1/1 Running 5 7d16h

kube-proxy-4xlms 1/1 Running 2 13h

kube-proxy-gx7jc 1/1 Running 2 13h

kube-proxy-n58d5 1/1 Running 2 13h

kube-scheduler-server1 1/1 Running 5 7d16h

[kubeadm@server1 mainfest]$ kubectl get daemonsets.apps -n kube-system

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

calico-node 3 3 0 3 0 kubernetes.io/os=linux 2m45s

kube-proxy 3 3 3 3 3 kubernetes.io/os=linux 7d16h

[kubeadm@server1 mainfest]$ ip addr --------------->The flannel.1 device is gone

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:bb:3e:1d brd ff:ff:ff:ff:ff:ff

inet 192.168.43.11/24 brd 192.168.43.255 scope global ens33

valid_lft forever preferred_lft forever

inet 172.25.1.1/24 brd 172.25.1.255 scope global ens33

valid_lft forever preferred_lft forever

inet 192.168.3.201/24 brd 192.168.3.255 scope global ens33

valid_lft forever preferred_lft forever

inet6 2408:84fb:1:1209:20c:29ff:febb:3e1d/64 scope global mngtmpaddr dynamic

valid_lft 3505sec preferred_lft 3505sec

inet6 fe80::20c:29ff:febb:3e1d/64 scope link

valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:e9:5e:cd:d0 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

4: dummy0: <BROADCAST,NOARP> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether 5a:94:ba:ba:c0:07 brd ff:ff:ff:ff:ff:ff

5: kube-ipvs0: <BROADCAST,NOARP> mtu 1500 qdisc noop state DOWN group default

link/ether 0a:6f:17:7d:e9:a8 brd ff:ff:ff:ff:ff:ff

inet 10.96.0.10/32 brd 10.96.0.10 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.96.0.1/32 brd 10.96.0.1 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.102.1.239/32 brd 10.102.1.239 scope global kube-ipvs0

valid_lft forever preferred_lft forever

6: calic3d023fea71@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1440 qdisc noqueue state UP group default

link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::ecee:eeff:feee:eeee/64 scope link

valid_lft forever preferred_lft forever

7: cali8e3712e48ff@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1440 qdisc noqueue state UP group default

link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet6 fe80::ecee:eeff:feee:eeee/64 scope link

valid_lft forever preferred_lft forever

[kubeadm@server1 mainfest]$

[kubeadm@server1 mainfest]$ kubectl describe ippools

Name: default-ipv4-ippool

Namespace:

Labels: <none>

Annotations: projectcalico.org/metadata: {"uid":"e086a226-81ff-4cf9-923d-d5f75956a6f4","creationTimestamp":"2020-06-26T11:52:17Z"}

API Version: crd.projectcalico.org/v1

Kind: IPPool

Metadata:

Creation Timestamp: 2020-06-26T11:52:18Z

Generation: 1

Managed Fields:

API Version: crd.projectcalico.org/v1

Fields Type: FieldsV1

fieldsV1:

f:metadata:

f:annotations:

.:

f:projectcalico.org/metadata:

f:spec:

.:

f:blockSize:

f:cidr:

f:ipipMode:

f:natOutgoing:

f:nodeSelector:

f:vxlanMode:

Manager: Go-http-client

Operation: Update

Time: 2020-06-26T11:52:18Z

Resource Version: 276648

Self Link: /apis/crd.projectcalico.org/v1/ippools/default-ipv4-ippool

UID: d07545a4-008b-4000-96c0-b49db8ea8543

Spec:

Block Size: 26

Cidr: 10.244.0.0/16

Ipip Mode: Never

Nat Outgoing: true

Node Selector: all()

Vxlan Mode: Never

Events: <none>

View the routes: pods communicate directly through ens33.
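The `Block Size: 26` and `Cidr: 10.244.0.0/16` values in the IPPool spec determine how Calico's IPAM carves up the pool into blocks. As a rough check of the arithmetic, the sketch below computes how many blocks the pool contains and how many addresses each block holds; the function name is hypothetical.

```python
import ipaddress


def ipam_block_stats(cidr: str, block_size: int) -> tuple:
    """Return (number of blocks, addresses per block, first block)."""
    pool = ipaddress.ip_network(cidr)
    blocks = 2 ** (block_size - pool.prefixlen)
    addresses_per_block = 2 ** (pool.max_prefixlen - block_size)
    first_block = next(pool.subnets(new_prefix=block_size))
    return blocks, addresses_per_block, str(first_block)


print(ipam_block_stats("10.244.0.0/16", 26))
```

With these values the pool splits into 1024 blocks of 64 addresses each.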

Edit calico.yaml to enable tunnel mode and assign a fixed pod IP range:

[kubeadm@server1 mainfest]$ vim calico.yaml

# Enable IPIP
- name: CALICO_IPV4POOL_IPIP
  value: "Always"
- name: CALICO_IPV4POOL_CIDR
  value: "192.168.0.0/16"

[kubeadm@server1 mainfest]$ kubectl apply -f calico.yaml

You can see that each node now gets a tunnel IP address.
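Turning the tunnel on has a cost in packet size: IPIP wraps each packet in an extra 20-byte IPv4 header, while VXLAN over IPv4 adds 50 bytes (outer Ethernet, IP, UDP, and VXLAN headers). A minimal sketch of the resulting MTU limits, assuming a 1500-byte underlay such as the ens33 interface shown earlier:

```python
# Bytes added in front of the original packet by each encapsulation.
ENCAP_OVERHEAD = {
    "none": 0,    # plain routing, e.g. IPIP disabled or host-gw
    "ipip": 20,   # one extra IPv4 header
    "vxlan": 50,  # outer Ethernet (14) + IPv4 (20) + UDP (8) + VXLAN (8)
}


def max_inner_mtu(underlay_mtu: int, encapsulation: str) -> int:
    """Largest pod-side MTU that still fits in one underlay packet."""
    return underlay_mtu - ENCAP_OVERHEAD[encapsulation]


for mode in ("none", "ipip", "vxlan"):
    print(mode, max_inner_mtu(1500, mode))
```

The cali* interfaces in the earlier `ip addr` output use an MTU of 1440, which stays within the IPIP limit computed here.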