1. Overview of Kubernetes upgrades

Kubernetes iterates quickly: a new version is released roughly every three months, and many new features evolve rapidly from one release to the next. To keep a cluster in step with the features of the community release, the cluster has to be upgraded. The community supports cluster upgrades through the kubeadm tool, and the steps are simple. First, let's look at which components need to be upgraded when upgrading a Kubernetes cluster:

- Master node: upgrade the components on the management node, such as kube-apiserver, kube-controller-manager, kube-scheduler and etcd;

- Other master nodes: if the control plane is deployed for high availability, all of the HA master nodes need to be upgraded together;

- Worker nodes: upgrade the container runtime (such as docker), kubelet and kube-proxy.

Version upgrades generally fall into two categories. A patch upgrade stays within the same minor release, either to the next patch release (for example 1.14.1 to 1.14.2) or skipping patch releases (for example 1.14.1 directly to 1.14.3). A minor-version upgrade moves to the next minor release, for example 1.14.x to 1.15.x. This article upgrades version 1.14.1 to version 1.15.1 offline. The following conditions must be met before upgrading:
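The rule behind these two categories is that kubeadm can move a cluster to a later patch of the same minor release, or to the next minor release, but it cannot skip a minor version (1.14.x to 1.16.x is not supported). A minimal bash sketch of that check, assuming plain `MAJOR.MINOR.PATCH` strings with an optional leading `v`. The function name `upgrade_allowed` is hypothetical and not part of kubeadm:

```shell
#!/usr/bin/env bash
# upgrade_allowed FROM TO
# Returns 0 if the skew rule permits FROM -> TO:
#   - same minor, higher patch (e.g. 1.14.1 -> 1.14.3), or
#   - next minor, any patch   (e.g. 1.14.1 -> 1.15.1).
# Hypothetical helper for illustration; pre-release suffixes are not handled.
upgrade_allowed() {
  local from="${1#v}" to="${2#v}"
  local f_major f_minor f_patch t_major t_minor t_patch
  IFS=. read -r f_major f_minor f_patch <<< "$from"
  IFS=. read -r t_major t_minor t_patch <<< "$to"

  # Major versions must match (every release discussed here is 1.x).
  [ "$f_major" -eq "$t_major" ] || return 1

  if [ "$t_minor" -eq "$f_minor" ]; then
    # Patch upgrade inside the same minor release.
    [ "$t_patch" -gt "$f_patch" ]
  else
    # Minor upgrade: only the immediately following minor is allowed.
    [ "$t_minor" -eq $((f_minor + 1)) ]
  fi
}

# Example:
# upgrade_allowed v1.14.1 v1.15.1 && echo "allowed"
```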

- The cluster must be running version 1.14.0 or later. From there it can be upgraded between 1.14.x patch releases or to 1.15.x; a minor version cannot be skipped;

- Swap must be disabled;

- Back up data: the etcd data and important directories such as /etc/kubernetes and /var/lib/kubelet;

- Pods are restarted during the upgrade, so make sure applications use the RollingUpdate strategy to avoid impact on the business.
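The swap and backup prerequisites can be scripted on the master. A minimal sketch, assuming etcd runs as the kubeadm static Pod with the default certificates under /etc/kubernetes/pki/etcd, and that an `etcdctl` binary (API v3) is installed on the host. The function names and the backup directory are hypothetical:

```shell
#!/usr/bin/env bash
# Pre-upgrade preparation sketch, run as root on the master.

# swap_disabled [SWAPS_FILE]
# /proc/swaps always starts with a header line; any further line is an
# active swap device, so "disabled" means the file has at most one line.
swap_disabled() {
  local swaps_file="${1:-/proc/swaps}"
  [ "$(wc -l < "$swaps_file")" -le 1 ]
}

# backup_cluster BACKUP_DIR
# Takes an etcd snapshot and archives the kubeadm/kubelet directories.
backup_cluster() {
  local dest="$1"
  mkdir -p "$dest" || return 1

  # Snapshot etcd through its local client port with the kubeadm certs.
  ETCDCTL_API=3 etcdctl \
    --endpoints=https://127.0.0.1:2379 \
    --cacert=/etc/kubernetes/pki/etcd/ca.crt \
    --cert=/etc/kubernetes/pki/etcd/server.crt \
    --key=/etc/kubernetes/pki/etcd/server.key \
    snapshot save "$dest/etcd-snapshot.db" || return 1

  # Archive the configuration and state directories named above.
  tar -czf "$dest/kubernetes-dirs.tar.gz" /etc/kubernetes /var/lib/kubelet
}

# Example:
# swapoff -a        # turns swap off until reboot; also remove it from /etc/fstab
# swap_disabled || echo "swap is still enabled"
# backup_cluster "/root/k8s-backup-$(date +%F)"
```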

2. Offline upgrade to 1.15.1

2.1 Prepare for upgrade

1. Check the current version; the cluster is running version 1.14.1

[root@node-1 ~]# kubectl version

Client Version: version.Info{Major:"1", Minor:"14", GitVersion:"v1.14.1", GitCommit:"b7394102d6ef778017f2ca4046abbaa23b88c290", GitTreeState:"clean", BuildDate:"2019-04-08T17:11:31Z", GoVersion:"go1.12.1", Compiler:"gc", Platform:"linux/amd64"}

Server Version: version.Info{Major:"1", Minor:"14", GitVersion:"v1.14.1", GitCommit:"b7394102d6ef778017f2ca4046abbaa23b88c290", GitTreeState:"clean", BuildDate:"2019-04-08T17:02:58Z", GoVersion:"go1.12.1", Compiler:"gc", Platform:"linux/amd64"}

[root@node-1 ~]# kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"14", GitVersion:"v1.14.1", GitCommit:"b7394102d6ef778017f2ca4046abbaa23b88c290", GitTreeState:"clean", BuildDate:"2019-04-08T17:08:49Z", GoVersion:"go1.12.1", Compiler:"gc", Platform:"linux/amd64"}

2. Check the node versions: kubelet and kube-proxy on every node are at version 1.14.1

[root@node-1 ~]# kubectl get nodes
NAME STATUS ROLES AGE VERSION
node-1 Ready master 25h v1.14.1
node-2 Ready <none> 25h v1.14.1
node-3 Ready <none> 25h v1.14.1
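Before going further, every node should report `Ready`. A sketch of that check, assuming the default `kubectl get nodes --no-headers` column layout (NAME STATUS ROLES AGE VERSION). `not_ready_nodes` is a hypothetical helper that prints the nodes needing attention:

```shell
#!/usr/bin/env bash
# not_ready_nodes: read `kubectl get nodes --no-headers` output on stdin and
# print the name of every node whose STATUS column is not exactly "Ready".
# A cordoned node shows "Ready,SchedulingDisabled" and is reported as well,
# because it will not accept Pods evicted from other nodes.
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# Example (on the master):
# bad=$(kubectl get nodes --no-headers | not_ready_nodes)
# [ -z "$bad" ] || echo "nodes not ready: $bad"
```

`kubectl wait --for=condition=Ready nodes --all --timeout=60s` is an alternative that blocks until every node has the Ready condition; note that it does not flag cordoned nodes, which still carry that condition.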

3. Check the status of the other components and make sure they are all healthy

[root@node-1 ~]# kubectl get componentstatuses

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health":"true"}

[root@node-1 ~]#

[root@node-1 ~]# kubectl get deployments --all-namespaces

NAMESPACE NAME READY UP-TO-DATE AVAILABLE AGE

default demo 3/3 3 3 37m

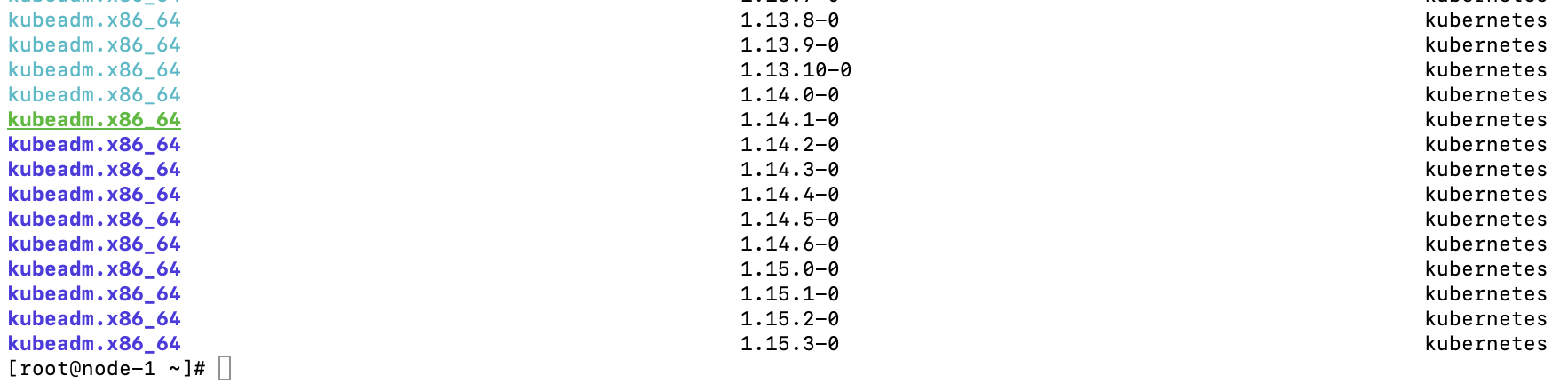

kube-system coredns 2/2 2 2 25h4. View the latest version of kubernetes (configuring the yum source of kubernetes requires reasonable Internet access). Use yum list --showduplicates kubeadm --disableexcludes=kubernetes to see the current upgradable version, green is the current version, and blue is the upgradable version, as follows:

2.2 Upgrade master node

1. Import the installation images. First download the Kubernetes installation images from the network disk at https://pan.baidu.com/s/1hw8Q0Vf3xvhKoEiVtMi6SA and upload them to the cluster. Unpack the archive, enter the v1.15.1 directory and import the images on all three nodes; node-2 is used as the example:

Import the images:
[root@node-2 v1.15.1]# docker image load -i kube-apiserver\:v1.15.1.tar
[root@node-2 v1.15.1]# docker image load -i kube-scheduler\:v1.15.1.tar
[root@node-2 v1.15.1]# docker image load -i kube-controller-manager\:v1.15.1.tar
[root@node-2 v1.15.1]# docker image load -i kube-proxy\:v1.15.1.tar

List the images now present on the system:
[root@node-1 ~]# docker image list
REPOSITORY TAG IMAGE ID CREATED SIZE
k8s.gcr.io/kube-proxy v1.15.1 89a062da739d 8 weeks ago 82.4MB
k8s.gcr.io/kube-controller-manager v1.15.1 d75082f1d121 8 weeks ago 159MB
k8s.gcr.io/kube-scheduler v1.15.1 b0b3c4c404da 8 weeks ago 81.1MB
k8s.gcr.io/kube-apiserver v1.15.1 68c3eb07bfc3 8 weeks ago 207MB
k8s.gcr.io/kube-proxy v1.14.1 20a2d7035165 5 months ago 82.1MB
k8s.gcr.io/kube-apiserver v1.14.1 cfaa4ad74c37 5 months ago 210MB
k8s.gcr.io/kube-scheduler v1.14.1 8931473d5bdb 5 months ago 81.6MB
k8s.gcr.io/kube-controller-manager v1.14.1 efb3887b411d 5 months ago 158MB
quay.io/coreos/flannel v0.11.0-amd64 ff281650a721 7 months ago 52.6MB
k8s.gcr.io/coredns 1.3.1 eb516548c180 8 months ago 40.3MB
k8s.gcr.io/etcd 3.3.10 2c4adeb21b4f 9 months ago 258MB
k8s.gcr.io/pause 3.1 da86e6ba6ca1 21 months ago 742kB

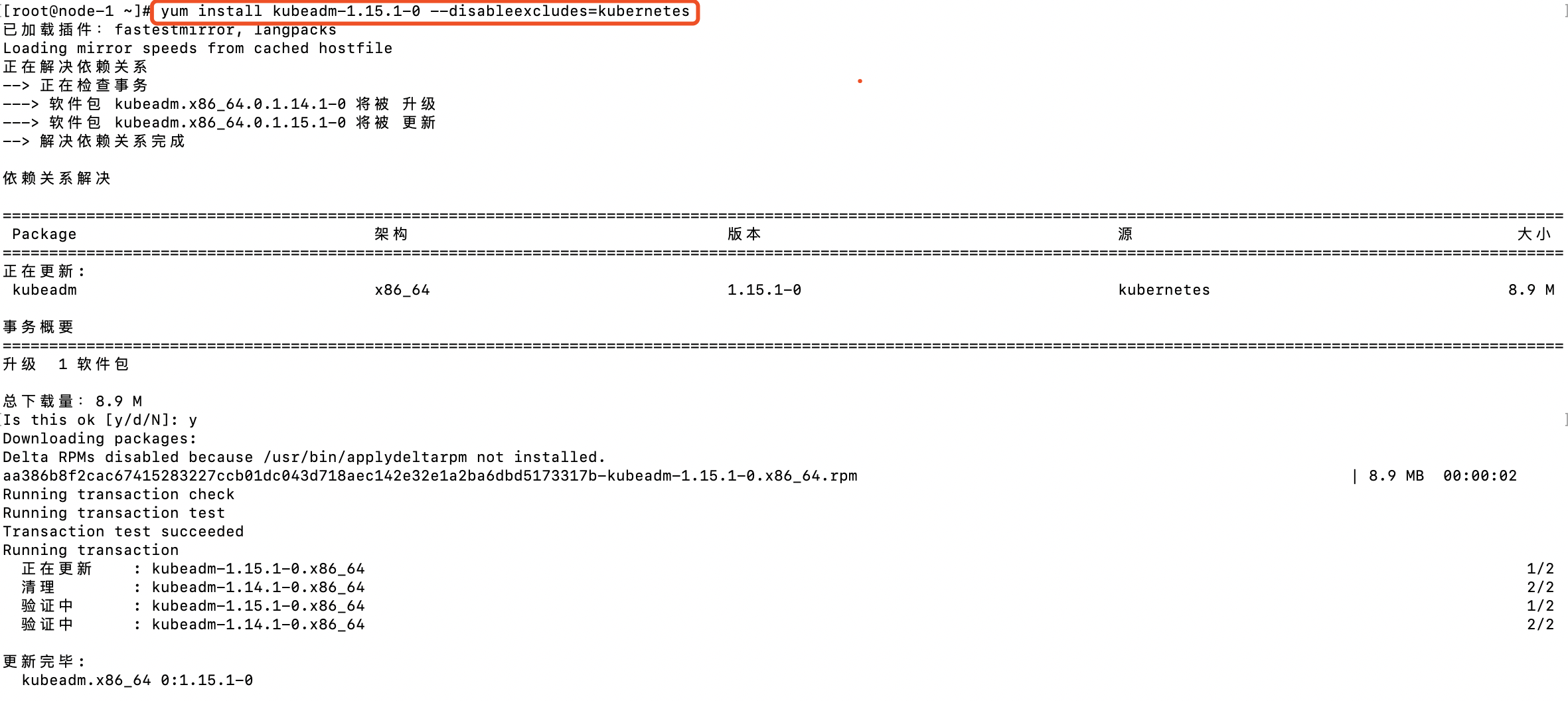
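Importing the images by hand takes one `docker image load` per tarball, repeated on every node. A small loop does the same for a whole directory. This sketch assumes the tarballs sit directly in the unpacked v1.15.1 directory with a `.tar` extension, and `load_images` is a hypothetical name:

```shell
#!/usr/bin/env bash
# load_images DIR: import every *.tar image archive in DIR into docker.
# Returns non-zero if a load fails or if DIR contains no tarballs.
load_images() {
  local dir="$1" tarball found=0
  for tarball in "$dir"/*.tar; do
    [ -e "$tarball" ] || continue   # no match: the glob stays literal
    docker image load -i "$tarball" || return 1
    found=1
  done
  [ "$found" -eq 1 ]
}

# Example, run on each of the three nodes:
# load_images ./v1.15.1 && docker image list | grep v1.15.1
```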

2. Update kubeadm to version 1.15.1. For setting up the Kubernetes yum repository from within China, see https://blog.51cto.com/2157217/1983992
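Step 2 links out for the repository setup but does not show the install command. A minimal sketch, assuming a yum repository with the id `kubernetes` that sets `exclude=kube*` (as the official kubeadm install guide of that period did), which is why `--disableexcludes=kubernetes` appears in every install command in this article. The `-0` release suffix matches the kubelet commands used later, and `upgrade_kubeadm` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# upgrade_kubeadm VERSION: install a pinned kubeadm and confirm the result.
upgrade_kubeadm() {
  local version="$1"   # e.g. 1.15.1, without the leading "v"
  yum install -y "kubeadm-${version}-0" --disableexcludes=kubernetes || return 1
  # `kubeadm version -o short` prints only the version string, e.g. v1.15.1
  [ "$(kubeadm version -o short)" = "v${version}" ]
}

# Example:
# upgrade_kubeadm 1.15.1 || echo "kubeadm is not at v1.15.1"
```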

3. Verify that kubeadm has been upgraded to version 1.15.1

[root@node-1 ~]# kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"15", GitVersion:"v1.15.1", GitCommit:"4485c6f18cee9a5d3c3b4e523bd27972b1b53892", GitTreeState:"clean", BuildDate:"2019-07-18T09:15:32Z", GoVersion:"go1.12.5", Compiler:"gc", Platform:"linux/amd64"}

4. View the upgrade plan. kubeadm upgrade plan shows the upgrade plan for the current cluster, including the latest release in the current minor series and the latest stable community release:

[root@node-1 ~]# kubeadm upgrade plan

[upgrade/config] Making sure the configuration is correct:

[upgrade/config] Reading configuration from the cluster...

[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[preflight] Running pre-flight checks.

[upgrade] Making sure the cluster is healthy:

[upgrade] Fetching available versions to upgrade to

[upgrade/versions] Cluster version: v1.14.1 #Current Cluster Version

[upgrade/versions] kubeadm version: v1.15.1 #Current kubeadm version

[upgrade/versions] Latest stable version: v1.15.3 #Community Latest Version

[upgrade/versions] Latest version in the v1.14 series: v1.14.6 #Latest version in 1.14.x

Components that must be upgraded manually after you have upgraded the control plane with 'kubeadm upgrade apply':

COMPONENT CURRENT AVAILABLE

Kubelet 3 x v1.14.1 v1.14.6

Upgrade to the latest version in the v1.14 series:

COMPONENT CURRENT AVAILABLE #Upgradable version information, currently upgradable from 1.14.1 to 1.14.6

API Server v1.14.1 v1.14.6

Controller Manager v1.14.1 v1.14.6

Scheduler v1.14.1 v1.14.6

Kube Proxy v1.14.1 v1.14.6

CoreDNS 1.3.1 1.3.1

Etcd 3.3.10 3.3.10

You can now apply the upgrade by executing the following command:

kubeadm upgrade apply v1.14.6 #Command to upgrade to 1.14.6

_____________________________________________________________________

Components that must be upgraded manually after you have upgraded the control plane with 'kubeadm upgrade apply':

COMPONENT CURRENT AVAILABLE

Kubelet 3 x v1.14.1 v1.15.3

Upgrade to the latest stable version:

COMPONENT CURRENT AVAILABLE #Minor-version upgrade; the latest version is currently 1.15.3

API Server v1.14.1 v1.15.3

Controller Manager v1.14.1 v1.15.3

Scheduler v1.14.1 v1.15.3

Kube Proxy v1.14.1 v1.15.3

CoreDNS 1.3.1 1.3.1

Etcd 3.3.10 3.3.10

You can now apply the upgrade by executing the following command:

kubeadm upgrade apply v1.15.3 #Command to upgrade to the latest community release

Note: Before you can perform this upgrade, you have to update kubeadm to v1.15.3.

_____________________________________________________________________

5. The offline image bundle does not include the latest images, so this article upgrades to version 1.15.1 as the example; upgrading to other versions works the same way, provided the cluster already has the required images. kubeadm also renews the certificates during the upgrade by default; when upgrading to 1.15.1 you can skip the renewal with --certificate-renewal=false:

[root@node-1 ~]# kubeadm upgrade apply v1.15.1
[upgrade/config] Making sure the configuration is correct:
[upgrade/config] Reading configuration from the cluster...
[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'
[preflight] Running pre-flight checks.
[upgrade] Making sure the cluster is healthy:
[upgrade/version] You have chosen to change the cluster version to "v1.15.1"
[upgrade/versions] Cluster version: v1.14.1
[upgrade/versions] kubeadm version: v1.15.1
[upgrade/confirm] Are you sure you want to proceed with the upgrade? [y/N]: y    #Version upgrade information, confirm
[upgrade/prepull] Will prepull images for components [kube-apiserver kube-controller-manager kube-scheduler etcd]
[upgrade/prepull] Prepulling image for component etcd.
[upgrade/prepull] Prepulling image for component kube-apiserver.
[upgrade/prepull] Prepulling image for component kube-controller-manager.
[upgrade/prepull] Prepulling image for component kube-scheduler.
[apiclient] Found 0 Pods for label selector k8s-app=upgrade-prepull-kube-controller-manager
[apiclient] Found 0 Pods for label selector k8s-app=upgrade-prepull-kube-apiserver
[apiclient] Found 0 Pods for label selector k8s-app=upgrade-prepull-etcd
[apiclient] Found 0 Pods for label selector k8s-app=upgrade-prepull-kube-scheduler
[apiclient] Found 1 Pods for label selector k8s-app=upgrade-prepull-kube-controller-manager
[apiclient] Found 1 Pods for label selector k8s-app=upgrade-prepull-kube-scheduler
[apiclient] Found 1 Pods for label selector k8s-app=upgrade-prepull-etcd
[apiclient] Found 1 Pods for label selector k8s-app=upgrade-prepull-kube-apiserver
[upgrade/prepull] Prepulled image for component kube-controller-manager.
[upgrade/prepull] Prepulled image for component kube-scheduler.
[upgrade/prepull] Prepulled image for component etcd.
[upgrade/prepull] Prepulled image for component kube-apiserver.    #Image pre-pull steps
[upgrade/prepull] Successfully prepulled the images for all the control plane components
[upgrade/apply] Upgrading your Static Pod-hosted control plane to version "v1.15.1"...
Static pod: kube-apiserver-node-1 hash: bdf7ffba48feb2fc4c7676e7525066fd
Static pod: kube-controller-manager-node-1 hash: ecf9c37413eace225bc60becabeddb3b
Static pod: kube-scheduler-node-1 hash: f44110a0ca540009109bfc32a7eb0baa
[upgrade/etcd] Upgrading to TLS for etcd    #Start upgrading the static Pods on the master
[upgrade/staticpods] Writing new Static Pod manifests to "/etc/kubernetes/tmp/kubeadm-upgraded-manifests122291749"
[upgrade/staticpods] Preparing for "kube-apiserver" upgrade
[upgrade/staticpods] Renewing apiserver certificate
[upgrade/staticpods] Renewing apiserver-kubelet-client certificate
[upgrade/staticpods] Renewing front-proxy-client certificate
[upgrade/staticpods] Renewing apiserver-etcd-client certificate    #Certificate renewal
[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-apiserver.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2019-09-15-12-41-40/kube-apiserver.yaml"
[upgrade/staticpods] Waiting for the kubelet to restart the component
[upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)
Static pod: kube-apiserver-node-1 hash: bdf7ffba48feb2fc4c7676e7525066fd
Static pod: kube-apiserver-node-1 hash: bdf7ffba48feb2fc4c7676e7525066fd
Static pod: kube-apiserver-node-1 hash: bdf7ffba48feb2fc4c7676e7525066fd
Static pod: kube-apiserver-node-1 hash: 4cd1e2acc44e2d908fd2c7b307bfce59
[apiclient] Found 1 Pods for label selector component=kube-apiserver
[upgrade/staticpods] Component "kube-apiserver" upgraded successfully!    #Upgrade successful
[upgrade/staticpods] Preparing for "kube-controller-manager" upgrade
[upgrade/staticpods] Renewing controller-manager.conf certificate
[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-controller-manager.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2019-09-15-12-41-40/kube-controller-manager.yaml"
[upgrade/staticpods] Waiting for the kubelet to restart the component
[upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)
Static pod: kube-controller-manager-node-1 hash: ecf9c37413eace225bc60becabeddb3b
Static pod: kube-controller-manager-node-1 hash: 17b23c8c6fcf9b9f8a3061b3a2fbf633
[apiclient] Found 1 Pods for label selector component=kube-controller-manager
[upgrade/staticpods] Component "kube-controller-manager" upgraded successfully!    #Upgrade successful
[upgrade/staticpods] Preparing for "kube-scheduler" upgrade
[upgrade/staticpods] Renewing scheduler.conf certificate
[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-scheduler.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2019-09-15-12-41-40/kube-scheduler.yaml"
[upgrade/staticpods] Waiting for the kubelet to restart the component
[upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)
Static pod: kube-scheduler-node-1 hash: f44110a0ca540009109bfc32a7eb0baa
Static pod: kube-scheduler-node-1 hash: 18859150495c74ad1b9f283da804a3db
[apiclient] Found 1 Pods for label selector component=kube-scheduler
[upgrade/staticpods] Component "kube-scheduler" upgraded successfully!    #Upgrade successful
[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
[kubelet] Creating a ConfigMap "kubelet-config-1.15" in namespace kube-system with the configuration for the kubelets in the cluster
[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.15" ConfigMap in the kube-system namespace
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster
[addons] Applied essential addon: CoreDNS
[addons] Applied essential addon: kube-proxy
[upgrade/successful] SUCCESS! Your cluster was upgraded to "v1.15.1". Enjoy!    #Upgrade success message
[upgrade/kubelet] Now that your control plane is upgraded, please proceed with upgrading your kubelets if you haven't already done so.
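The interactive run above can also be scripted. A sketch, assuming kubeadm v1.15, where `kubeadm upgrade apply` accepts `--dry-run`, `--yes` and `--certificate-renewal`. The wrapper `apply_control_plane` is hypothetical; its dry run acts as a gate, so the real upgrade is attempted only if the dry run succeeds:

```shell
#!/usr/bin/env bash
# apply_control_plane VERSION [RENEW_CERTS]
# RENEW_CERTS defaults to "true"; pass "false" to keep the current certificates.
apply_control_plane() {
  local version="$1" renew="${2:-true}"
  # --dry-run reports what would change without modifying the cluster.
  kubeadm upgrade apply "$version" --dry-run \
    --certificate-renewal="$renew" || return 1
  # --yes answers the [y/N] confirmation prompt automatically.
  kubeadm upgrade apply "$version" --yes \
    --certificate-renewal="$renew"
}

# Example: upgrade to v1.15.1 without renewing certificates
# apply_control_plane v1.15.1 false
```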

6. The output above shows that the master upgrade succeeded. To upgrade to a specific version, simply pass that version to kubeadm upgrade apply. Once the master is upgraded, you can upgrade the add-ons of each component. For the network plugin (flannel, calico, etc.), follow that plugin's own upgrade steps, which usually means upgrading the corresponding DaemonSets.
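After applying a network plugin's new manifests, it helps to wait until its DaemonSet has finished rolling out on every node. A sketch using `kubectl rollout status`; the DaemonSet names in the example are the ones this cluster shows at the end of the article, and `wait_daemonsets` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# wait_daemonsets NAMESPACE DAEMONSET...
# Blocks until each DaemonSet's rollout completes, failing after 5 minutes.
wait_daemonsets() {
  local ns="$1"; shift
  local ds
  for ds in "$@"; do
    kubectl -n "$ns" rollout status "daemonset/$ds" --timeout=300s || return 1
  done
}

# Example for the flannel and kube-proxy DaemonSets in this cluster:
# wait_daemonsets kube-system kube-flannel-ds-amd64 kube-proxy
```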

7. Upgrade kubelet (and kubectl) on the master and restart the kubelet service; this completes the upgrade of the master node.

[root@node-1 ~]# yum install -y kubelet-1.15.1-0 kubectl-1.15.1-0 --disableexcludes=kubernetes
[root@node-1 ~]# systemctl daemon-reload
[root@node-1 ~]# systemctl restart kubelet

2.3 Upgrade worker nodes

1. Upgrade the kubeadm, kubelet and kubectl packages on the worker node

[root@node-2 ~]# yum install -y kubelet-1.15.1-0 --disableexcludes=kubernetes
[root@node-2 ~]# yum install -y kubeadm-1.15.1-0 --disableexcludes=kubernetes
[root@node-2 ~]# yum install -y kubectl-1.15.1-0 --disableexcludes=kubernetes

2. Put the node into maintenance mode and drain it: the node is cordoned, and every Pod not managed by a DaemonSet is evicted and rescheduled onto other nodes

Cordon and drain the node:
[root@node-1 ~]# kubectl drain node-2 --ignore-daemonsets
node/node-2 cordoned
WARNING: ignoring DaemonSet-managed Pods: kube-system/kube-flannel-ds-amd64-tm6wj, kube-system/kube-proxy-2wqhj
evicting pod "coredns-5c98db65d4-86gg7"
evicting pod "demo-7b86696648-djvgb"
pod/demo-7b86696648-djvgb evicted
pod/coredns-5c98db65d4-86gg7 evicted
node/node-2 evicted

Check the nodes: node-2 is now marked SchedulingDisabled, so no new Pods will be scheduled onto it:
[root@node-1 ~]# kubectl get nodes
NAME STATUS ROLES AGE VERSION
node-1 Ready master 26h v1.15.1
node-2 Ready,SchedulingDisabled <none> 26h v1.14.1
node-3 Ready <none> 26h v1.14.1

Check the Pods: apart from the DaemonSet Pods, the Pods that ran on node-2 have been moved to other nodes:
[root@node-1 ~]# kubectl get pods --all-namespaces -o wide
NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
default demo-7b86696648-6f22r 1/1 Running 0 30s 10.244.2.5 node-3 <none> <none>
default demo-7b86696648-fjmxn 1/1 Running 0 116m 10.244.2.2 node-3 <none> <none>
default demo-7b86696648-nwwxf 1/1 Running 0 116m 10.244.2.3 node-3 <none> <none>
kube-system coredns-5c98db65d4-cqbbl 1/1 Running 0 30s 10.244.0.6 node-1 <none> <none>
kube-system coredns-5c98db65d4-g59qt 1/1 Running 2 28m 10.244.2.4 node-3 <none> <none>
kube-system etcd-node-1 1/1 Running 0 13m 10.254.100.101 node-1 <none> <none>
kube-system kube-apiserver-node-1 1/1 Running 0 13m 10.254.100.101 node-1 <none> <none>
kube-system kube-controller-manager-node-1 1/1 Running 0 13m 10.254.100.101 node-1 <none> <none>
kube-system kube-flannel-ds-amd64-99tjl 1/1 Running 1 26h 10.254.100.101 node-1 <none> <none>
kube-system kube-flannel-ds-amd64-jp594 1/1 Running 0 26h 10.254.100.103 node-3 <none> <none>
kube-system kube-flannel-ds-amd64-tm6wj 1/1 Running 0 26h 10.254.100.102 node-2 <none> <none>
kube-system kube-proxy-2wqhj 1/1 Running 0 28m 10.254.100.102 node-2 <none> <none>
kube-system kube-proxy-k7c4f 1/1 Running 1 27m 10.254.100.101 node-1 <none> <none>
kube-system kube-proxy-zffgq 1/1 Running 0 28m 10.254.100.103 node-3 <none> <none>
kube-system kube-scheduler-node-1 1/1 Running 0 13m 10.254.100.101 node-1 <none> <none>

3. Upgrade worker nodes

[root@node-2 ~]# kubeadm upgrade node
[upgrade] Reading configuration from the cluster...
[upgrade] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'
[upgrade] Skipping phase. Not a control plane node
[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.15" ConfigMap in the kube-system namespace
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[upgrade] The configuration for this node was successfully updated!
[upgrade] Now you should go ahead and upgrade the kubelet package using your package manager.

4. Restart the kubelet service

[root@node-2 ~]# systemctl daemon-reload
[root@node-2 ~]# systemctl restart kubelet

5. Uncordon the node so that Pods can be scheduled onto the worker node again

[root@node-1 ~]# kubectl uncordon node-2
node/node-2 uncordoned
[root@node-1 ~]# kubectl get nodes
NAME STATUS ROLES AGE VERSION
node-1 Ready master 27h v1.15.1
node-2 Ready <none> 27h v1.15.1    #Upgraded successfully
node-3 Ready <none> 27h v1.14.1

Upgrade node-3 with the same steps. After the upgrade is complete, all nodes report the new version:

[root@node-1 ~]# kubectl get nodes
NAME STATUS ROLES AGE VERSION
node-1 Ready master 27h v1.15.1
node-2 Ready <none> 27h v1.15.1
node-3 Ready <none> 27h v1.15.1
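The per-worker steps can be driven from the master. A sketch, assuming root SSH access to each worker by hostname; `upgrade_worker` is a hypothetical helper. Its order roughly follows the official kubeadm 1.15 upgrade guide, which upgrades the kubelet package after the node is drained and after `kubeadm upgrade node`, slightly different from the order used above:

```shell
#!/usr/bin/env bash
# upgrade_worker NODE VERSION   (VERSION without "v", e.g. 1.15.1)
upgrade_worker() {
  local node="$1" version="$2"

  # Cordon the node and evict its Pods; DaemonSet Pods stay in place.
  kubectl drain "$node" --ignore-daemonsets || return 1

  # On the node: new kubeadm, refreshed kubelet config, new kubelet/kubectl,
  # then restart the kubelet. Steps are chained so a failure stops the rest.
  ssh "root@${node}" "yum install -y kubeadm-${version}-0 --disableexcludes=kubernetes \
    && kubeadm upgrade node \
    && yum install -y kubelet-${version}-0 kubectl-${version}-0 --disableexcludes=kubernetes \
    && systemctl daemon-reload && systemctl restart kubelet" || return 1

  # Make the node schedulable again.
  kubectl uncordon "$node"
}

# Example:
# for n in node-2 node-3; do upgrade_worker "$n" 1.15.1 || break; done
```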

2.4 Upgrade principles

1. What kubeadm upgrade apply does

- Checks that the cluster is in an upgradeable state: the API server is reachable, all nodes are Ready, and the control plane components are healthy

- Enforces the version skew policy

- Makes sure the images needed for the upgrade are available locally or can be pulled

- Upgrades the control plane components, rolling back to the previous state if any of them fails to come up

- Applies the new kube-dns (CoreDNS) and kube-proxy manifests and makes sure the required RBAC rules are created

- Creates new API server certificate and key files, backing up the old ones, if they are due to expire within 180 days
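To see whether a certificate falls inside that 180-day window, openssl can be asked directly. A minimal sketch, assuming openssl is installed on the master and the certificates live under kubeadm's default /etc/kubernetes/pki; `expires_within_days` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# expires_within_days CERT DAYS
# Returns 0 if CERT expires within DAYS days. `openssl x509 -checkend N`
# exits non-zero when the certificate expires within N seconds (or cannot
# be read), so its status is inverted here.
expires_within_days() {
  local cert="$1" days="$2"
  ! openssl x509 -checkend $((days * 86400)) -noout -in "$cert" >/dev/null
}

# Example: list the certificates inside the 180-day window
# for c in /etc/kubernetes/pki/*.crt; do
#   expires_within_days "$c" 180 && echo "$c"
# done
```

On kubeadm v1.15, `kubeadm alpha certs check-expiration` reports the expiry dates of the kubeadm-managed certificates in a single table.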

2. What kubeadm upgrade node does

- Fetches the kubeadm ClusterConfiguration from the cluster and applies it to the node

- Upgrades the kubelet configuration on the node; the kubelet package itself is upgraded separately with the package manager

3. Update the cluster to the latest version

As of 2019-09-15, the latest Kubernetes community release is 1.15.3. This section shows how to upgrade the cluster online to version 1.15.3, using steps similar to the previous section.

1. Install the latest kubeadm, kubelet and kubectl packages on all three nodes

[root@node-1 ~]# yum install kubeadm kubectl kubelet
Plugins loaded: fastestmirror, langpacks
Loading mirror speeds from cached hostfile
Resolving dependencies
--> Checking transactions
---> Package kubeadm.x86_64.0.1.15.1-0 will be updated
---> Package kubeadm.x86_64.0.1.15.3-0 will be an update
---> Package kubectl.x86_64.0.1.15.1-0 will be updated
---> Package kubectl.x86_64.0.1.15.3-0 will be an update
---> Package kubelet.x86_64.0.1.15.1-0 will be updated
---> Package kubelet.x86_64.0.1.15.3-0 will be an update
--> Resolve dependencies complete
Dependency Resolution
============================================================
Package Arch Version Repository Size
============================================================
Updating:
kubeadm x86_64 1.15.3-0 kubernetes 8.9 M
kubectl x86_64 1.15.3-0 kubernetes 9.5 M
kubelet x86_64 1.15.3-0 kubernetes 22 M
Transaction Summary
============================================================
Upgrade 3 Packages

2. Upgrade the master node

View the upgrade plan:

[root@node-1 ~]# kubeadm upgrade plan

[upgrade/config] Making sure the configuration is correct:

[upgrade/config] Reading configuration from the cluster...

[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[preflight] Running pre-flight checks.

[upgrade] Making sure the cluster is healthy:

[upgrade] Fetching available versions to upgrade to

[upgrade/versions] Cluster version: v1.15.1

[upgrade/versions] kubeadm version: v1.15.3

[upgrade/versions] Latest stable version: v1.15.3

[upgrade/versions] Latest version in the v1.15 series: v1.15.3

Components that must be upgraded manually after you have upgraded the control plane with 'kubeadm upgrade apply':

COMPONENT CURRENT AVAILABLE

Kubelet 3 x v1.15.1 v1.15.3

Upgrade to the latest version in the v1.15 series:

COMPONENT CURRENT AVAILABLE

API Server v1.15.1 v1.15.3

Controller Manager v1.15.1 v1.15.3

Scheduler v1.15.1 v1.15.3

Kube Proxy v1.15.1 v1.15.3

CoreDNS 1.3.1 1.3.1

Etcd 3.3.10 3.3.10

You can now apply the upgrade by executing the following command:

kubeadm upgrade apply v1.15.3

_____________________________________________________________________

Upgrade the master node:

[root@node-1 ~]# kubeadm upgrade apply v1.15.3

3. Upgrade the worker nodes node-2 and node-3

Cordon and drain the node:
[root@node-1 ~]# kubectl drain node-2 --ignore-daemonsets

Perform the upgrade on the node:
[root@node-2 ~]# kubeadm upgrade node
[root@node-2 ~]# systemctl daemon-reload
[root@node-2 ~]# systemctl restart kubelet

Clear the unschedulable flag:
[root@node-1 ~]# kubectl uncordon node-2
node/node-2 uncordoned

Confirm the version upgrade:
[root@node-1 ~]# kubectl get nodes
NAME STATUS ROLES AGE VERSION
node-1 Ready master 27h v1.15.3
node-2 Ready <none> 27h v1.15.3
node-3 Ready <none> 27h v1.15.1

4. Status of all nodes after the upgrade

Node status:

[root@node-1 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

node-1 Ready master 27h v1.15.3

node-2 Ready <none> 27h v1.15.3

node-3 Ready <none> 27h v1.15.3
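Rather than reading the VERSION column by eye, the kubelet version of every node can be compared with the target. A sketch, assuming input lines of the form `NAME VERSION`, as produced by the jsonpath query in the example; `nodes_not_at` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# nodes_not_at VERSION: read "NAME KUBELET_VERSION" lines on stdin and print
# every node whose kubelet is not at VERSION (e.g. v1.15.3).
nodes_not_at() {
  awk -v want="$1" '$2 != want { print $1 }'
}

# Example, taking kubelet versions straight from the node objects:
# kubectl get nodes -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.nodeInfo.kubeletVersion}{"\n"}{end}' \
#   | nodes_not_at v1.15.3
```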

Component status:

[root@node-1 ~]# kubectl get componentstatuses

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health":"true"}

Application status:

[root@node-1 ~]# kubectl get deployments --all-namespaces

NAMESPACE NAME READY UP-TO-DATE AVAILABLE AGE

default demo 3/3 3 3 160m

kube-system coredns 2/2 2 2 27h

DaemonSet status:

[root@node-1 ~]# kubectl get daemonsets --all-namespaces

NAMESPACE NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

kube-system kube-flannel-ds-amd64 3 3 3 3 3 beta.kubernetes.io/arch=amd64 27h

kube-system kube-proxy 3 3 3 3 3 beta.kubernetes.io/os=linux 27h

4. Final words

The two cases above cover an offline Kubernetes upgrade (no access to the external network) and an online upgrade (with Internet access), and describe the process and implementation details of upgrading both the master node and the worker nodes. They can serve as a guide for trying out new features and for upgrading clusters in production.

5. References

https://kubernetes.io/docs/tasks/administer-cluster/kubeadm/kubeadm-upgrade-1-15/