Helm installation reference:

https://www.kubernetes.org.cn/4619.html

Helm consists of a client-side command line tool, helm, and a server-side component, Tiller. Installing it is simple. First download the helm command line tool into /usr/local/bin on the master node, node1. Version 2.11.0 is used here, which matches the Tiller image used later:

```bash
wget https://storage.googleapis.com/kubernetes-helm/helm-v2.11.0-linux-amd64.tar.gz
tar -zxvf helm-v2.11.0-linux-amd64.tar.gz
cd linux-amd64/
cp helm /usr/local/bin/
```
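The same download can be wrapped in a small helper so the version is set in one place. This is a minimal sketch, assuming a Linux amd64 machine with wget and write access to /usr/local/bin. The function names and the HELM_BASE_URL variable are hypothetical, and the default base URL is simply the one used in the commands above; that location may need to be changed if it no longer serves Helm 2 releases.

```bash
# Hypothetical helper: install a Helm 2 client of a given version.
# HELM_BASE_URL is an assumption; it defaults to the location used in this post.
HELM_BASE_URL="${HELM_BASE_URL:-https://storage.googleapis.com/kubernetes-helm}"

# Print the release tarball URL for a version such as 2.11.0 (linux-amd64 only).
helm_tarball_url() {
  printf '%s\n' "${HELM_BASE_URL}/helm-v$1-linux-amd64.tar.gz"
}

# Download and unpack the tarball, copy the helm binary, then print the client version.
install_helm_client() {
  local version="$1" workdir
  workdir="$(mktemp -d)" || return 1
  wget -q -O "$workdir/helm.tar.gz" "$(helm_tarball_url "$version")" || return 1
  tar -zxf "$workdir/helm.tar.gz" -C "$workdir" || return 1
  cp "$workdir/linux-amd64/helm" /usr/local/bin/helm || return 1
  rm -rf "$workdir"
  helm version --client
}
```

Usage: `install_helm_client 2.11.0`. Only the client is installed at this point; Tiller is set up separately with helm init.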

To install Tiller on the server side, kubectl and a kubeconfig file must be configured on this machine so that kubectl can reach the apiserver and work normally. Here, kubectl is configured on node1.
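A quick way to verify this before going further is to check that kubectl cluster-info succeeds. This is a minimal sketch; the function name is hypothetical, and it only tests connectivity, not permissions.

```bash
# Hypothetical helper: fail early if kubectl cannot talk to the apiserver.
# kubectl reads ~/.kube/config unless the KUBECONFIG variable points elsewhere.
check_kubectl_access() {
  if kubectl cluster-info >/dev/null 2>&1; then
    echo "kubectl can reach the apiserver"
  else
    echo "kubectl cannot reach the apiserver; check the kubeconfig" >&2
    return 1
  fi
}
```

Running `check_kubectl_access` on node1 should print the success message before helm init is attempted.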

Because the Kubernetes APIServer has RBAC access control enabled, we need to create a service account named tiller for Tiller to use and grant it an appropriate role. For details, see the Role-based Access Control page of the Helm documentation. For simplicity, bind the cluster's built-in ClusterRole cluster-admin to it directly. Create the rbac-config.yaml file:

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: tiller
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: tiller
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: tiller
    namespace: kube-system
```

```bash
kubectl create -f rbac-config.yaml
```

Output:

```
serviceaccount/tiller created
clusterrolebinding.rbac.authorization.k8s.io/tiller created
```
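To confirm the binding took effect before running helm init, kubectl can impersonate the tiller service account with `kubectl auth can-i` and `--as`. This is a minimal sketch; the function name is hypothetical. Note also that on newer clusters the ClusterRoleBinding above needs `apiVersion: rbac.authorization.k8s.io/v1`, because the v1beta1 RBAC API was removed in Kubernetes 1.22.

```bash
# Hypothetical helper: check that the tiller service account (bound to
# cluster-admin above) may perform any verb on any resource in all namespaces.
tiller_has_cluster_admin() {
  local answer
  answer="$(kubectl auth can-i '*' '*' --all-namespaces \
    --as=system:serviceaccount:kube-system:tiller 2>/dev/null)"
  [ "$answer" = "yes" ]
}
```

Usage: `tiller_has_cluster_admin && echo "RBAC OK"`.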

Install Tiller:

```bash
helm init --service-account tiller --skip-refresh
```
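helm init only creates the tiller-deploy deployment in kube-system; whether the pod actually starts is a separate question. One way to wait for it is kubectl rollout status. This is a sketch assuming a kubectl version that supports `--timeout` on rollout status; the function name is hypothetical.

```bash
# Hypothetical helper: wait for the tiller-deploy deployment created by
# helm init (namespace kube-system) to finish rolling out.
wait_for_tiller() {
  local wait_timeout="${1:-120s}"
  kubectl -n kube-system rollout status deployment/tiller-deploy --timeout="$wait_timeout"
}
```

If the Tiller image cannot be pulled, this times out with a non-zero exit status instead of reporting a successful rollout.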

Problem

At this point a problem came up that the blog post I was following did not mention. Because I use a domestic Docker registry mirror, the gcr.io/kubernetes-helm/tiller image is not accessible, so when I check the pod:

```bash
kubectl get pods -n kube-system
```

Output:

```
NAME                             READY   STATUS             RESTARTS   AGE
tiller-deploy-6f6fd74b68-rkk5w   0/1     ImagePullBackOff   0          14h
```

The pod is not in the expected state. Being a novice, I started exploring solutions.

Solutions

1. View pod events

```bash
kubectl describe pod tiller-deploy-6f6fd74b68-rkk5w -n kube-system
```

Output:

```
Events:
  Type     Reason   Age                    From            Message
  ----     ------   ----                   ----            -------
  Warning  Failed   52m (x3472 over 14h)   kubelet, test1  Error: ImagePullBackOff
  Normal   BackOff  2m6s (x3686 over 14h)  kubelet, test1  Back-off pulling image "gcr.io/kubernetes-helm/tiller:v2.11.0"
```

Clearly, pulling the gcr.io/kubernetes-helm/tiller:v2.11.0 image failed.
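Besides describe, it helps to see which node the pod was scheduled on and which image it requests, because the kubelet on that node is the one doing the pull. This sketch selects the pod by the labels that the tiller-deploy deployment puts on its pods (app: helm, name: tiller); the function name is hypothetical.

```bash
# Hypothetical helper: print pod name, node and requested image for Tiller,
# one tab-separated line per pod.
tiller_pod_placement() {
  kubectl -n kube-system get pods -l app=helm,name=tiller \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.nodeName}{"\t"}{.spec.containers[0].image}{"\n"}{end}'
}
```

The node printed here should match the node named in the events (test1 above).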

2. Pull the image manually

```bash
docker search kubernetes-helm/tiller
```

Output:

```
cockpit/kubernetes                      This container provides a version of cockpit...   41   [OK]
fluent/fluentd-kubernetes-daemonset     Fluentd Daemonset for Kubernetes                  24   [OK]
lachlanevenson/k8s-helm                 Helm client (https://github.com/kubernetes/h...   17
dtzar/helm-kubectl                      helm and kubectl running on top of alpline w...   16   [OK]
jessestuart/tiller                      Nightly multi-architecture (amd64, arm64, ar...   4    [OK]
hypnoglow/kubernetes-helm               Image providing kubernetes kubectl and helm ...   3    [OK]
linkyard/docker-helm                    Docker image containing kubernetes helm and ...   3    [OK]
jimmysong/kubernetes-helm-tiller                                                          2
ibmcom/tiller                           Docker Image for IBM Cloud private-CE (Commu...   1
zhaosijun/kubernetes-helm-tiller        mirror from gcr.io/kubernetes-helm/tiller:v2...   1    [OK]
zlabjp/kubernetes-resource              A Concourse resource for controlling the Kub...   1
thebeefcake/concourse-helm-resource     concourse resource for managing helm deploym...   1    [OK]
timotto/rpi-tiller                      k8s.io/tiller for Raspberry Pi                    1
fishead/gcr.io.kubernetes-helm.tiller   mirror of gcr.io/kubernetes-helm/tiller           1    [OK]
victoru/concourse-helm-resource         concourse resource for managing helm deploym...   0    [OK]
bitnami/helm-crd-controller             Kubernetes controller for HelmRelease CRD         0    [OK]
z772458549/kubernetes-helm-tiller       kubernetes-helm-tiller                            0    [OK]
mnsplatform/concourse-helm-resource     Concourse resource for helm deployments           0
croesus/kubernetes-helm-tiller          kubernetes-helm-tiller                            0    [OK]
```

There are a lot of images. Reading the descriptions, this one looks right:

```
fishead/gcr.io.kubernetes-helm.tiller   mirror of gcr.io/kubernetes-helm/tiller   1   [OK]
```


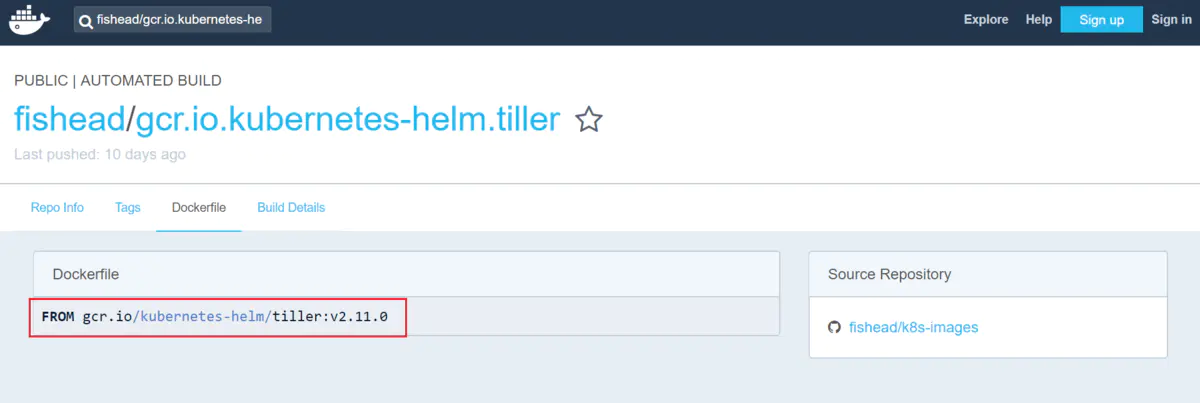

Next, go to Docker Hub to confirm:

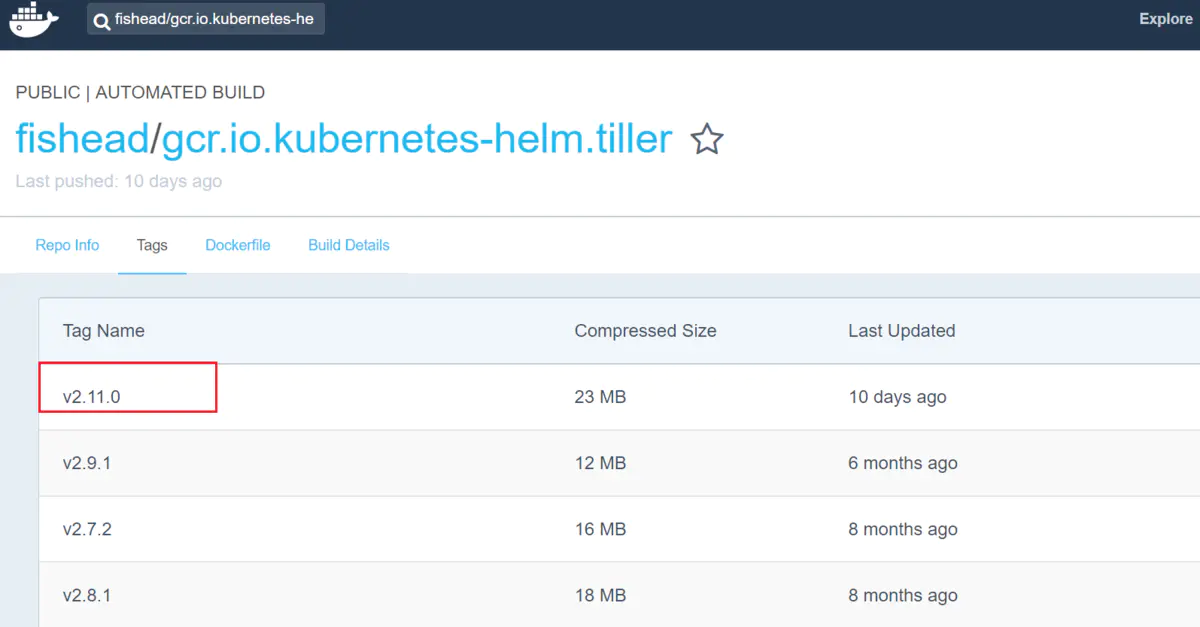

Sure enough, it is the image we need. Next, check which version tags it provides.

Pull the image:

```bash
docker pull fishead/gcr.io.kubernetes-helm.tiller:v2.11.0
```

Change tag

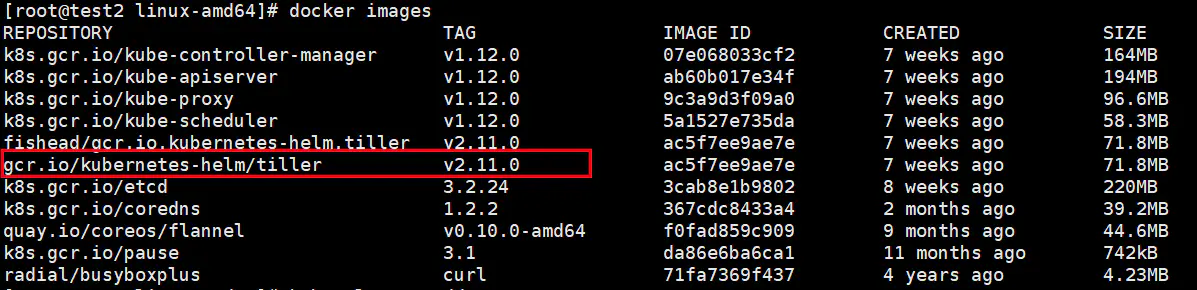

docker tag fishead/gcr.io.kubernetes-helm.tiller:v2.11.0 gcr.io/kubernetes-helm/tiller:v2.11.0
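The pull-and-retag step can be scripted. This sketch assumes the naming used by fishead/gcr.io.kubernetes-helm.tiller (the gcr.io repository path with "/" replaced by "."), which holds for this one repository but is not a general rule; the function names are hypothetical. Docker images live in each node's local image store, so the pull and tag only help on the node where they are run; in a multi-node cluster they would have to be repeated on every node that may run the Tiller pod.

```bash
# Hypothetical helper: map a gcr.io image reference to the fishead mirror name.
# Assumes the reference has an explicit tag, e.g. gcr.io/kubernetes-helm/tiller:v2.11.0.
fishead_mirror_of() {
  local image="$1" repo tag
  repo="${image%:*}"    # gcr.io/kubernetes-helm/tiller
  tag="${image##*:}"    # v2.11.0
  printf 'fishead/%s:%s\n' "$(printf '%s' "$repo" | tr '/' '.')" "$tag"
}

# Pull the mirror from Docker Hub and retag it with the original gcr.io name,
# so a pod spec that still says gcr.io/... can find a local copy on this node.
pull_via_mirror() {
  local image="$1" mirror
  mirror="$(fishead_mirror_of "$image")"
  docker pull "$mirror" && docker tag "$mirror" "$image"
}
```

Usage: `pull_via_mirror gcr.io/kubernetes-helm/tiller:v2.11.0`, run on each node that may host Tiller.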

Then list the local images to confirm that gcr.io/kubernetes-helm/tiller:v2.11.0 is now present.
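`docker image inspect` exits with a non-zero status when an image is not in the local store, which gives a scriptable version of this check. Run it on the node where the Tiller pod is scheduled, since that node's kubelet is the one that looks for the image. A minimal sketch; the function name is hypothetical.

```bash
# Hypothetical helper: report whether an image is in this node's local Docker store.
# docker image inspect exits non-zero when the image does not exist locally.
check_local_image() {
  if docker image inspect "$1" >/dev/null 2>&1; then
    echo "present: $1"
  else
    echo "missing: $1"
    return 1
  fi
}
```

Usage: `check_local_image gcr.io/kubernetes-helm/tiller:v2.11.0`.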

3. Redeployment

Being a newbie, I fumbled with this step for a long time and tried the various methods I found online.

Delete Tiller:

```bash
helm reset -f
```

Reinitialize and redeploy Tiller, specifying the image explicitly:

```bash
helm init --service-account tiller --tiller-image gcr.io/kubernetes-helm/tiller:v2.11.0 --skip-refresh
```
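An alternative that this post does not use: since `--tiller-image` accepts any image reference, helm init could point Tiller straight at the Docker Hub mirror, so each node pulls it through the domestic registry mirror and no manual retag is needed. This is a sketch; the function name is hypothetical, and `--upgrade` is used so that an already installed Tiller is updated to the new image rather than left as it is.

```bash
# Hypothetical helper: (re)install Tiller using an image from a reachable registry.
# The default is the Docker Hub mirror found above; --upgrade updates an
# existing Tiller deployment instead of leaving it unchanged.
init_tiller_from_mirror() {
  local image="${1:-fishead/gcr.io.kubernetes-helm.tiller:v2.11.0}"
  helm init --service-account tiller --tiller-image "$image" --skip-refresh --upgrade
}
```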

Check the pod again; it is still in an error state:

```bash
kubectl get pods -n kube-system
```

Output:

```
NAME                             READY   STATUS             RESTARTS   AGE
tiller-deploy-6f6fd74b68-qvlzx   0/1     ImagePullBackOff   0          8m43s
```

Aaaaaaaaaah, I was about to lose it. Why is pulling the image still failing? (;')

After calming down, I wondered: does the configuration tell Kubernetes to always fetch the image from the registry?

Edit the deployment:

```bash
kubectl edit deployment tiller-deploy -n kube-system
```

```yaml
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: "2"
  creationTimestamp: 2018-11-16T08:03:53Z
  generation: 2
  labels:
    app: helm
    name: tiller
  name: tiller-deploy
  namespace: kube-system
  resourceVersion: "133136"
  selfLink: /apis/extensions/v1beta1/namespaces/kube-system/deployments/tiller-deploy
  uid: 291c2a71-e976-11e8-b6eb-8cec4b591b6a
spec:
  progressDeadlineSeconds: 2147483647
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: helm
      name: tiller
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
    type: RollingUpdate
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: helm
        name: tiller
    spec:
      automountServiceAccountToken: true
      containers:
      - env:
        - name: TILLER_NAMESPACE
          value: kube-system
        - name: TILLER_HISTORY_MAX
          value: "0"
        image: gcr.io/kubernetes-helm/tiller:v2.11.0
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /liveness
            port: 44135
            scheme: HTTP
          initialDelaySeconds: 1
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 1
        name: tiller
        ports:
        - containerPort: 44134
          name: tiller
          protocol: TCP
        - containerPort: 44135
```

Sure enough, there is an image pull policy setting:

```yaml
imagePullPolicy: IfNotPresent
```

Here is what the official documentation (https://kubernetes.io/docs/concepts/containers/images/) says:

"By default, the kubelet will try to pull each image from the specified registry. However, if the imagePullPolicy property of the container is set to IfNotPresent or Never, then a local image is used (preferentially or exclusively, respectively)."

In other words, by default the image is pulled from the registry address in the configuration. With IfNotPresent or Never, the local image is used instead:

IfNotPresent: if the image exists locally, the local image is used; otherwise it is pulled.

Never: the image is never pulled; only the local image is used, and if it does not exist locally an error is reported.
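The rules quoted above can be written as a small decision function, which makes the consequence easy to see: with IfNotPresent, a pull is attempted only when the image is missing on the node that runs the pod. This models the documented behaviour only; it is not kubelet code, and the function name and its yes/no argument are hypothetical.

```bash
# Sketch of the documented imagePullPolicy rules (not kubelet source code).
# $1: policy (Always | IfNotPresent | Never)
# $2: "yes" if the image already exists on the node, anything else otherwise
# Prints: pull | use-local | error
pull_decision() {
  local policy="$1" present="$2"
  case "$policy" in
    Always) echo "pull" ;;    # contact the registry on every container start
    IfNotPresent)
      if [ "$present" = "yes" ]; then echo "use-local"; else echo "pull"; fi ;;
    Never)
      if [ "$present" = "yes" ]; then echo "use-local"; else echo "error"; fi ;;
    *) echo "unknown policy: $policy" >&2; return 1 ;;
  esac
}
```

Applied to this post: `pull_decision IfNotPresent no` prints `pull`, so the ImagePullBackOff seen after redeploying suggests (the post does not confirm this) that the kubelet on test1, the node named in the events, did not find gcr.io/kubernetes-helm/tiller:v2.11.0 in its local store.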

As far as I can tell, this configuration is fine, so why wasn't the local image used first? Was it because I pulled the image only after the pod had been created? Anyway, I'll change it to Never first.

```yaml
imagePullPolicy: Never
```

Save, then check the pod status again; Tiller is now running:

```
tiller-deploy-f844bd879-p6m8x   1/1     Running   0          62s
```
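The same change can be made without opening an editor by patching the deployment with a JSON patch. This sketch assumes Tiller is the first (and only) container in the pod template, as in the deployment shown above; the function name is hypothetical.

```bash
# Hypothetical helper: set imagePullPolicy on the tiller container non-interactively.
# Assumes tiller is containers[0] in the tiller-deploy pod template.
set_tiller_pull_policy() {
  local policy="${1:-Never}"   # Always | IfNotPresent | Never
  kubectl -n kube-system patch deployment tiller-deploy --type=json \
    -p "[{\"op\":\"replace\",\"path\":\"/spec/template/spec/containers/0/imagePullPolicy\",\"value\":\"${policy}\"}]"
}
```

Because this changes the pod template, the deployment rolls out a new ReplicaSet, so `kubectl get pods -n kube-system` should then show a new tiller-deploy pod.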