More about template parameters

There are actually three kinds of template parameters: type parameters, non-type parameters, and template template parameters. The word "template" is not repeated by accident; that really is the name. Chapter 12 showed examples of type and non-type parameters, but template template parameters have not appeared yet. There are also some tricky aspects of type and non-type parameters that Chapter 12 did not cover. The following sections discuss all three kinds of template parameters in depth.

More about template type parameters

Type parameters are the essence of templates, and you can declare as many of them as you want. For example, you could add a second type parameter to the Grid template from Chapter 12 to specify another templatized container class on which to build the grid. The Standard Library defines several templatized container classes, including vector and deque. The original Grid class stores its elements in a vector of vectors, but a user of the Grid class might prefer a vector of deques. A second template type parameter lets the user choose whether the underlying container is a vector or a deque. Here is the class definition with the additional template parameter:

template <typename T, typename Container>
class Grid
{
public:
    explicit Grid(size_t width = kDefaultWidth,
                  size_t height = kDefaultHeight);
    virtual ~Grid() = default;

    // Explicitly default a copy constructor and assignment operator.
    Grid(const Grid& src) = default;
    Grid<T, Container>& operator=(const Grid& rhs) = default;

    // Explicitly default a move constructor and assignment operator.
    Grid(Grid&& src) = default;
    Grid<T, Container>& operator=(Grid&& rhs) = default;

    typename Container::value_type& at(size_t x, size_t y);
    const typename Container::value_type& at(size_t x, size_t y) const;

    size_t getHeight() const { return mHeight; }
    size_t getWidth() const { return mWidth; }

    static const size_t kDefaultWidth = 10;
    static const size_t kDefaultHeight = 10;

private:
    void verifyCoordinate(size_t x, size_t y) const;

    std::vector<Container> mCells;
    size_t mWidth = 0, mHeight = 0;
};

The template now has two parameters: T and Container. Therefore, wherever you previously referred to Grid<T>, you must now write Grid<T, Container> to specify both template parameters. The only other change is that mCells is now a vector of Containers instead of a vector of vectors. Here is the constructor definition:

template <typename T, typename Container>
Grid<T, Container>::Grid(size_t width, size_t height)
    : mWidth(width), mHeight(height)
{
    mCells.resize(mWidth);
    for (auto& column : mCells)
    {
        column.resize(mHeight);
    }
}

This constructor assumes that the Container type has a resize() method. If you try to instantiate this template with a type that has no resize() method, the compiler generates an error. The return type of the at() methods is the type of the elements stored in the given Container type, which you can access as typename Container::value_type. Here are the implementations of the remaining methods:

template <typename T, typename Container>
void Grid<T, Container>::verifyCoordinate(size_t x, size_t y) const
{
    if (x >= mWidth || y >= mHeight)
    {
        throw std::out_of_range("");
    }
}

template <typename T, typename Container>
const typename Container::value_type&
    Grid<T, Container>::at(size_t x, size_t y) const
{
    verifyCoordinate(x, y);
    return mCells[x][y];
}

template <typename T, typename Container>
typename Container::value_type&
    Grid<T, Container>::at(size_t x, size_t y)
{
    return const_cast<typename Container::value_type&>(std::as_const(*this).at(x, y));
}
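The at() methods above depend on Container::value_type, which is a dependent name: the compiler cannot know it names a type until Container is known, so the typename keyword is required. The following standalone sketch, assuming C++17, shows the same pattern in isolation. The helper firstElement is hypothetical and not part of Grid; the static_asserts confirm that vector and deque both expose their element type as value_type.

```cpp
#include <cassert>
#include <deque>
#include <optional>
#include <type_traits>
#include <vector>

// Hypothetical helper (not part of Grid): returns a copy of the first element
// of any container that provides a nested value_type alias and operator[].
// "typename" is required because Container::value_type depends on a template
// parameter, so the compiler cannot otherwise tell that it names a type.
template <typename Container>
typename Container::value_type firstElement(const Container& c)
{
    return c[0];
}

// vector and deque both expose their element type as value_type,
// which is why Grid's at() works with either container.
static_assert(std::is_same_v<std::vector<std::optional<int>>::value_type,
                             std::optional<int>>);
static_assert(std::is_same_v<std::deque<std::optional<int>>::value_type,
                             std::optional<int>>);
```

Called on a std::deque<std::optional<int>>, firstElement returns a std::optional<int>, just as Grid's at() returns a reference to one.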

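Grid objects can now be instantiated and used as follows:

Grid<int, vector<optional<int>>> myIntVectorGrid;
Grid<int, deque<optional<int>>> myIntDequeGrid;
myIntVectorGrid.at(3, 4) = 5;
cout << myIntVectorGrid.at(3, 4).value_or(0) << endl;
myIntDequeGrid.at(1, 2) = 3;
cout << myIntDequeGrid.at(1, 2).value_or(0) << endl;
Grid<int, vector<optional<int>>> grid2(myIntVectorGrid);
grid2 = myIntVectorGrid;

Output when std::queue was accidentally used instead of std::deque for myIntDequeGrid (std::queue has neither a resize() method nor operator[], so the constructor and at() fail to compile):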

xz@xiaqiu:~/study/test/test$ g++ -o test test.cpp -std=c++17
test.cpp: In instantiation of 'Grid<T, Container>::Grid(size_t, size_t) [with T = int; Container = std::queue<std::optional<int> >; size_t = long unsigned int]':
test.cpp:77:36: required from here
test.cpp:47:20: error: 'class std::queue<std::optional<int> >' has no member named 'resize'; did you mean 'size'?
47 | column.resize(mHeight);
| ~~~~~~~^~~~~~
| size
test.cpp: In instantiation of 'const typename Container::value_type& Grid<T, Container>::at(size_t, size_t) const [with T = int; Container = std::queue<std::optional<int> >; typename Container::value_type = std::optional<int>; size_t = long unsigned int]':
test.cpp:72:12: required from 'typename Container::value_type& Grid<T, Container>::at(size_t, size_t) [with T = int; Container = std::queue<std::optional<int> >; typename Container::value_type = std::optional<int>; size_t = long unsigned int]'
test.cpp:82:26: required from here
test.cpp:65:21: error: no match for 'operator[]' (operand types are 'const value_type' {aka 'const std::queue<std::optional<int> >'} and 'size_t' {aka 'long unsigned int'})
65 | return mCells[x][y];
| ~~~~~~~~~^
xz@xiaqiu:~/study/test/test$

code

int main()
{
    Grid<int, vector<optional<int>>> myIntVectorGrid;
    Grid<int, deque<optional<int>>> myIntDequeGrid;
    myIntVectorGrid.at(3, 4) = 5;
    cout << myIntVectorGrid.at(3, 4).value_or(0) << endl;
    myIntDequeGrid.at(1, 2) = 3;
    cout << myIntDequeGrid.at(1, 2).value_or(0) << endl;
    Grid<int, vector<optional<int>>> grid2(myIntVectorGrid);
    grid2 = myIntVectorGrid;
    //Grid<int, int> test; // WILL NOT COMPILE
    return 0;
}

Naming the parameter Container does not mean that the type argument must actually be a container. Try instantiating the Grid class with int:

Grid<int,int> test; // WILL NOT COMPILE

This line does not compile, but the compiler might not give the error you expect. Instead of complaining that the second type argument is an int rather than a container, it reports something stranger. For example, Microsoft Visual C++ reports "'Container': must be a class or namespace when followed by '::'". This happens because the compiler tries to generate a Grid class that uses int as the Container. Everything goes fine until it reaches a line of the class template definition that uses Container::value_type. GCC reports similar errors:

xz@xiaqiu:~/study/test/test$ g++ -o test test.cpp -std=c++17
test.cpp: In instantiation of 'class Grid<int, int>':
test.cpp:90:19: required from here
test.cpp:70:1: error: 'int' is not a class, struct, or union type
70 | Grid<T, Container>::at(size_t x, size_t y)
| ^~~~~~~~~~~~~~~~~~
test.cpp:62:1: error: 'int' is not a class, struct, or union type
62 | Grid<T, Container>::at(size_t x, size_t y) const
| ^~~~~~~~~~~~~~~~~~
test.cpp: In instantiation of 'Grid<T, Container>::Grid(size_t, size_t) [with T = int; Container = int; size_t = long unsigned int]':
test.cpp:90:19: required from here
test.cpp:48:16: error: request for member 'resize' in 'column', which is of non-class type 'int'
48 | column.resize(mHeight);
| ~~~~~~~^~~~~~
xz@xiaqiu:~/study/test/test$

The offending line is this one:

typename Container::value_type& at(size_t x, size_t y);

Here the compiler realizes that Container is int, which has no embedded value_type type alias.

As with function parameters, you can give template parameters default values. For example, you might want to say that the default container for a Grid is a vector. The class template definition then looks like this:

template <typename T, typename Container = std::vector<std::optional<T>>>
class Grid
{
    // Everything else is the same as before
};

You can use the type T from the first template parameter as the argument to the optional template in the default value of the second template parameter. C++ syntax requires that you do not repeat the default value in the template header line of method definitions. With this default argument, clients can instantiate a grid with or without specifying the underlying container:

Grid<int, deque<optional<int>>> myDequeGrid;
Grid<int, vector<optional<int>>> myVectorGrid;
Grid<int> myVectorGrid2(myVectorGrid);
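The default argument means that Grid<int> and Grid<int, vector<optional<int>>> name the same class, which is why myVectorGrid2 can be copy-constructed from myVectorGrid. The sketch below checks this at compile time, assuming C++17. It uses a stripped-down stand-in for Grid; the container_type alias is a hypothetical addition used only to inspect which container was chosen.

```cpp
#include <deque>
#include <optional>
#include <type_traits>
#include <vector>

// Stripped-down stand-in for Grid: only the template header matters here.
// The default for Container refers to the earlier parameter T.
template <typename T, typename Container = std::vector<std::optional<T>>>
class Grid
{
public:
    using container_type = Container;  // hypothetical alias for inspection
};

// Omitting the second argument selects the default container, so both
// spellings denote the very same class type.
static_assert(std::is_same_v<Grid<int>,
                             Grid<int, std::vector<std::optional<int>>>>);

// An explicit second argument overrides the default.
static_assert(std::is_same_v<Grid<int, std::deque<std::optional<int>>>::container_type,
                             std::deque<std::optional<int>>>);
```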

Introducing template template parameters

There is one more problem with the Container parameter discussed in the previous section. When you instantiate the class template, you write code like this:

Grid<int,vector<optional<int>>> myIntGrid;

Note the repetition of the int type: you must specify it both as the element type of the Grid and as the element type of the optional inside the vector. What would happen if you wrote the following instead?

Grid<int, vector<optional<SpreadsheetCell>>> myIntGrid;

That would not work very well. It would be nice to be able to write the following instead, so that such mistakes could not happen:

Grid<int, vector> myIntGrid;

The Grid class should then be able to figure out that it needs a vector of optionals with element type int. However, the compiler does not let you pass such an argument to a normal type parameter, because vector by itself is not a type but a template. If you want to accept a template as a template parameter, you must use a special kind of parameter called a template template parameter. Specifying a template template parameter is a bit like specifying a function pointer parameter in a normal function. A function pointer type includes the return type and the parameter types of the function. Similarly, the full specification of a template template parameter includes the parameters of that template.

For example, containers such as vector and deque have a template parameter list like the following. The E parameter is the element type; the Allocator parameter is covered in Chapter 17.

template <typename E, typename Allocator = std::allocator<E>>
class vector
{
    // vector definition
};

To pass such a container as a template template parameter, you copy and paste the declaration of the class template (in this example, template <typename E, typename Allocator = std::allocator<E>> class vector), replace the class name (vector) with a parameter name (Container), and use the result as a template parameter of another template declaration (Grid, in this example) instead of a simple type name. Given this template specification, here is the class template definition for a Grid class that takes a container template as its second template parameter:

template <typename T,
    template <typename E, typename Allocator = std::allocator<E>> class Container = std::vector>
class Grid
{
public:
    // Omitted code that is the same as before
    std::optional<T>& at(size_t x, size_t y);
    const std::optional<T>& at(size_t x, size_t y) const;
    // Omitted code that is the same as before

private:
    void verifyCoordinate(size_t x, size_t y) const;

    std::vector<Container<std::optional<T>>> mCells;
    size_t mWidth = 0, mHeight = 0;
};

What is going on here? The first template parameter is the same as before: the element type T. The second template parameter is now a template for a container, such as vector or deque. As shown earlier, this container template must itself take two parameters: an element type E and an allocator type. Note the keyword class that follows the nested template parameter list. The name of this parameter in the Grid template is Container. The default value is now vector instead of vector<T>, because Container is a template rather than an actual type.

The general syntax for a template template parameter is:

template <..., template <TemplateTypeParams> class ParameterName, ...>

Starting with C++17, you can also use the typename keyword instead of class:

template <..., template <TemplateTypeParams> typename ParameterName, ...>

In the code, you cannot use Container by itself; you must specify Container<std::optional<T>> as the container type. For example, mCells is now declared as follows:

std::vector<Container<std::optional<T>>> mCells;

You do not need to change the method bodies, but you must change their template header lines, for example:

template <typename T,
    template <typename E, typename Allocator = std::allocator<E>> class Container>
void Grid<T, Container>::verifyCoordinate(size_t x, size_t y) const
{
    if (x >= mWidth || y >= mHeight)
    {
        throw std::out_of_range("");
    }
}

You can use the Grid template as follows:

Grid<int, vector> myGrid;
myGrid.at(1, 2) = 3;
cout << myGrid.at(1, 2).value_or(0) << endl;
Grid<int, vector> myGrid2(myGrid);

code

#include <cstddef>
#include <stdexcept>
#include <vector>
#include <optional>
#include <utility>
#include <deque>
#include <iostream>
#include <memory>  // std::allocator

using namespace std;

template <typename T,
    template <typename E, typename Allocator = std::allocator<E>> class Container = std::vector>
class Grid
{
public:
    explicit Grid(size_t width = kDefaultWidth, size_t height = kDefaultHeight);
    virtual ~Grid() = default;

    // Explicitly default a copy constructor and assignment operator.
    Grid(const Grid& src) = default;
    Grid<T, Container>& operator=(const Grid& rhs) = default;

    // Explicitly default a move constructor and assignment operator.
    Grid(Grid&& src) = default;
    Grid<T, Container>& operator=(Grid&& rhs) = default;

    std::optional<T>& at(size_t x, size_t y);
    const std::optional<T>& at(size_t x, size_t y) const;

    size_t getHeight() const { return mHeight; }
    size_t getWidth() const { return mWidth; }

    static const size_t kDefaultWidth = 10;
    static const size_t kDefaultHeight = 10;

private:
    void verifyCoordinate(size_t x, size_t y) const;

    std::vector<Container<std::optional<T>>> mCells;
    size_t mWidth = 0, mHeight = 0;
};

template <typename T, template <typename E, typename Allocator = std::allocator<E>> class Container>
Grid<T, Container>::Grid(size_t width, size_t height)
    : mWidth(width)
    , mHeight(height)
{
    mCells.resize(mWidth);
    for (auto& column : mCells)
    {
        column.resize(mHeight);
    }
}

template <typename T, template <typename E, typename Allocator = std::allocator<E>> class Container>
void Grid<T, Container>::verifyCoordinate(size_t x, size_t y) const
{
    if (x >= mWidth || y >= mHeight)
    {
        throw std::out_of_range("");
    }
}

template <typename T, template <typename E, typename Allocator = std::allocator<E>> class Container>
const std::optional<T>& Grid<T, Container>::at(size_t x, size_t y) const
{
    verifyCoordinate(x, y);
    return mCells[x][y];
}

template <typename T, template <typename E, typename Allocator = std::allocator<E>> class Container>
std::optional<T>& Grid<T, Container>::at(size_t x, size_t y)
{
    return const_cast<std::optional<T>&>(std::as_const(*this).at(x, y));
}

int main()
{
    Grid<int, vector> myGrid;
    myGrid.at(1, 2) = 3;
    cout << myGrid.at(1, 2).value_or(0) << endl;
    Grid<int, vector> myGrid2(myGrid);
    return 0;
}

output

xz@xiaqiu:~/study/test/test$ ./test
3
xz@xiaqiu:~/study/test/test$

Caution

The C++ syntax above is a bit convoluted because it tries to allow for maximum flexibility. Try not to get bogged down in the syntax here; just keep the main concept in mind: you can pass templates as parameters to other templates.
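To see the concept without the rest of Grid, here is a minimal sketch assuming C++17. The class Column is hypothetical: it represents a single grid column and takes the container as a template template parameter, so the user writes the element type only once. It uses the C++17 spelling typename in place of class before the parameter name.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <memory>
#include <optional>
#include <vector>

// Hypothetical class: one column of a grid. Container is a template template
// parameter; Column itself instantiates it with std::optional<T>, so users
// never repeat the element type.
template <typename T,
          template <typename E, typename Allocator = std::allocator<E>>
          typename Container = std::vector>
class Column
{
public:
    // Both std::vector and std::deque have a size-taking constructor.
    explicit Column(std::size_t height) : mCells(height) {}

    // Bounds-checked access via the container's own at().
    std::optional<T>& at(std::size_t y) { return mCells.at(y); }
    std::size_t size() const { return mCells.size(); }

private:
    Container<std::optional<T>> mCells;
};
```

Column<int> uses a std::vector by default, while Column<int, std::deque> passes the deque template itself, not a deque of anything.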

More about non-type template parameters

Sometimes you might want to let the user specify a default element with which to initialize each cell in the grid. Here is a perfectly reasonable approach, which uses T() as the default value of the second template parameter:

template <typename T, const T DEFAULT = T()>
class Grid
{
public:
    explicit Grid(size_t width = kDefaultWidth, size_t height = kDefaultHeight);
    virtual ~Grid() = default;

    // Explicitly default a copy constructor and assignment operator.
    Grid(const Grid& src) = default;
    Grid<T, DEFAULT>& operator=(const Grid& rhs) = default;

    // Explicitly default a move constructor and assignment operator.
    Grid(Grid&& src) = default;
    Grid<T, DEFAULT>& operator=(Grid&& rhs) = default;

    std::optional<T>& at(size_t x, size_t y);
    const std::optional<T>& at(size_t x, size_t y) const;

    size_t getHeight() const { return mHeight; }
    size_t getWidth() const { return mWidth; }

    static const size_t kDefaultWidth = 10;
    static const size_t kDefaultHeight = 10;

private:
    void verifyCoordinate(size_t x, size_t y) const;

    std::vector<std::vector<std::optional<T>>> mCells;
    size_t mWidth = 0, mHeight = 0;
};

This definition is legal. You can use the type T from the first parameter as the type of the second parameter, and non-type parameters can be const, just like function parameters. You can use DEFAULT to initialize each cell in the grid:

template <typename T, const T DEFAULT>
Grid<T, DEFAULT>::Grid(size_t width, size_t height)
    : mWidth(width), mHeight(height)
{
    mCells.resize(mWidth);
    for (auto& column : mCells)
    {
        column.resize(mHeight);
        for (auto& element : column)
        {
            element = DEFAULT;
        }
    }
}

The other method definitions stay the same, except that you must add the second template parameter to their template header lines, and every Grid<T> must become Grid<T, DEFAULT>. After these changes, you can instantiate an int grid and set an initial value for all its elements:

Grid<int> myIntGrid;       // Initial value is 0
Grid<int, 10> myIntGrid2;  // Initial value is 10

The initial value can be any integer you want. However, suppose that you try to create a grid of SpreadsheetCells:

SpreadsheetCell defaultCell;
Grid<SpreadsheetCell, defaultCell> mySpreadsheet; // WILL NOT COMPILE

This causes a compilation error because, at least up to C++17, you cannot pass objects of class type as arguments to non-type template parameters.
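A compact, standalone sketch of the technique that does work may help. It assumes C++17; the class name FilledColumn is hypothetical and stands in for one column of Grid. The non-type parameter DEFAULT has type T and defaults to T(), and the constructor fills every cell with it. Because DEFAULT is a value parameter, this only compiles for the kinds of types a non-type parameter may have, such as int.

```cpp
#include <cassert>
#include <cstddef>
#include <optional>
#include <vector>

// Hypothetical, simplified column type. DEFAULT is a non-type template
// parameter whose type is the earlier type parameter T; T() is its default.
template <typename T, const T DEFAULT = T()>
class FilledColumn
{
public:
    // vector(count, value): every cell starts out holding DEFAULT.
    explicit FilledColumn(std::size_t height) : mCells(height, DEFAULT) {}

    const std::optional<T>& at(std::size_t y) const { return mCells.at(y); }

private:
    std::vector<std::optional<T>> mCells;
};
```

FilledColumn<int> starts every cell at 0, and FilledColumn<int, 10> starts every cell at 10, mirroring myIntGrid and myIntGrid2 above.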

int main()
{
    Grid<int> myIntGrid; //Initial value is 0
    Grid<int,10>myIntGrid2; //Initial value is 10
    Grid<int,10.0>myIntGrid2; //Initial value is 10
    // SpreadsheetCell defaultCell;
    // Grid<SpreadsheetCell,defaultCell> mySpreadsheet; //WILL NOT COMPILE
    return 0;
}

output

xz@xiaqiu:~/study/test/test$ g++ -o test test.cpp SpreadsheetCell.cpp -std=c++17
test.cpp: In function 'int main()':
test.cpp:60:18: error: conversion from 'double' to 'int' in a converted constant expression
60 | Grid<int,10.0>myIntGrid2; //Initial value is 10
| ^
test.cpp:60:18: error: could not convert '1.0e+1' from 'double' to 'int'
test.cpp:60:19: error: conflicting declaration 'int myIntGrid2'
60 | Grid<int,10.0>myIntGrid2; //Initial value is 10
| ^~~~~~~~~~
test.cpp:59:17: note: previous declaration as 'Grid<int, 10> myIntGrid2'
59 | Grid<int,10>myIntGrid2; //Initial value is 10
| ^~~~~~~~~~
xz@xiaqiu:~/study/test/test$

Warning

Up to C++17, non-type template parameters cannot be objects, or even double or float values; they are limited to integral types, enumerations, pointers, and references. (C++20 relaxes this restriction.) This example shows one of the odd behaviors of class templates: they can work fine for one type but fail to compile for another. A more elaborate way to let the user specify an initial element value for the grid is to use a reference as the non-type template parameter. Here is the new class definition:

template <typename T, const T& DEFAULT>
class Grid
{
public:
    explicit Grid(size_t width = kDefaultWidth, size_t height = kDefaultHeight);
    virtual ~Grid() = default;

    // Explicitly default a copy constructor and assignment operator.
    Grid(const Grid& src) = default;
    Grid<T, DEFAULT>& operator=(const Grid& rhs) = default;

    // Explicitly default a move constructor and assignment operator.
    Grid(Grid&& src) = default;
    Grid<T, DEFAULT>& operator=(Grid&& rhs) = default;

    std::optional<T>& at(size_t x, size_t y);
    const std::optional<T>& at(size_t x, size_t y) const;

    size_t getHeight() const { return mHeight; }
    size_t getWidth() const { return mWidth; }

    static const size_t kDefaultWidth = 10;
    static const size_t kDefaultHeight = 10;

private:
    void verifyCoordinate(size_t x, size_t y) const;

    std::vector<std::vector<std::optional<T>>> mCells;
    size_t mWidth = 0, mHeight = 0;
};

template <typename T, const T& DEFAULT>
Grid<T, DEFAULT>::Grid(size_t width, size_t height)
    : mWidth(width)
    , mHeight(height)
{
    mCells.resize(mWidth);
    for (auto& column : mCells)
    {
        column.resize(mHeight);
        for (auto& element : column)
        {
            element = DEFAULT;
        }
    }
}

template <typename T, const T& DEFAULT>
void Grid<T, DEFAULT>::verifyCoordinate(size_t x, size_t y) const
{
    if (x >= mWidth || y >= mHeight)
    {
        throw std::out_of_range("");
    }
}

template <typename T, const T& DEFAULT>
const std::optional<T>& Grid<T, DEFAULT>::at(size_t x, size_t y) const
{
    verifyCoordinate(x, y);
    return mCells[x][y];
}

template <typename T, const T& DEFAULT>
std::optional<T>& Grid<T, DEFAULT>::at(size_t x, size_t y)
{
    return const_cast<std::optional<T>&>(std::as_const(*this).at(x, y));
}

This class template can now be instantiated for any type. The C++17 standard says that the argument passed for the second template parameter (a reference) must be a converted constant expression of the template parameter's type, and that it must not refer to a subobject, a temporary object, a string literal, the result of a typeid expression, or the predefined __func__ variable. The following example declares an int grid and a SpreadsheetCell grid, each with an initial value:

int main()
{
    int defaultInt = 1;
    Grid<int,defaultInt>myIntGrid;
    SpreadsheetCell defaultCell(1.2);
    Grid<SpreadsheetCell,defaultCell> mySpreadsheet;
    return 0;
}

output

xz@xiaqiu:~/study/test/test$ g++ -o test test.cpp SpreadsheetCell.cpp -std=c++17
test.cpp: In function 'int main()':
test.cpp:81:24: error: '& defaultInt' is not a valid template argument of type 'const int&' because 'defaultInt' is not a variable
81 | Grid<int,defaultInt>myIntGrid;
| ^
test.cpp:84:37: error: '& defaultCell' is not a valid template argument of type 'const SpreadsheetCell&' because 'defaultCell' is not a variable
84 | Grid<SpreadsheetCell,defaultCell> mySpreadsheet;
| ^
xz@xiaqiu:~/study/test/test$

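These errors occur because defaultInt and defaultCell are local variables with automatic storage duration, while a reference template argument must refer to an object with static storage duration. Declaring them static makes the code compile: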

int main()
{
    static int defaultInt = 1;
    Grid<int,defaultInt>myIntGrid;
    static SpreadsheetCell defaultCell(1.2);
    Grid<SpreadsheetCell,defaultCell> mySpreadsheet;
    return 0;
}

These are the C++17 rules, however, and at the time of writing most compilers had not yet implemented them. Before C++17, an argument passed to a reference non-type template parameter could not be a temporary, and it could not be a named lvalue without linkage (external or internal). So, following the pre-C++17 rules, the example above defines its initial values with internal linkage:

namespace
{
    int defaultInt = 11;
    SpreadsheetCell defaultCell(1.2);
}

int main()
{
    Grid<int, defaultInt> myIntGrid;
    Grid<SpreadsheetCell, defaultCell> mySpreadsheet;
    return 0;
}
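The following self-contained sketch, assuming C++17, shows a reference non-type template parameter working for both int and a class type. RefFilledColumn, defaultInt, and defaultText are hypothetical names; std::string stands in for SpreadsheetCell. It works for std::string because only a reference to a namespace-scope object (static storage duration, internal linkage via the unnamed namespace) is passed as the template argument, never the object itself.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical, simplified column type whose initial value is supplied
// through a reference non-type template parameter.
template <typename T, const T& DEFAULT>
class RefFilledColumn
{
public:
    // Copy the referenced default object into every cell.
    explicit RefFilledColumn(std::size_t height) : mCells(height, DEFAULT) {}

    const T& at(std::size_t y) const { return mCells.at(y); }

private:
    std::vector<T> mCells;
};

// The referenced objects have static storage duration; the unnamed
// namespace also gives them internal linkage, which satisfies the
// pre-C++17 rules as well.
namespace
{
    int defaultInt = 11;
    std::string defaultText = "empty";
}
```

With these definitions, RefFilledColumn<int, defaultInt> and RefFilledColumn<std::string, defaultText> both compile, whereas passing a local non-static variable would be rejected as in the GCC output above.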

Partial specialization of class templates

The const char* specialization of the Grid class template in Chapter 12 is called a full class template specialization, because it specializes every template parameter of the Grid template; no template parameters are left in the specialization. That is not the only way to specialize a class. You can also write a partial specialization, which specializes some template parameters but not others. For example, consider the basic version of the Grid template with non-type parameters for the width and height:

Grid.h

#pragma once

#include <cstddef>
#include <optional>
#include <stdexcept>
#include <utility>

template <typename T, size_t WIDTH, size_t HEIGHT>
class Grid
{
public:
    Grid() = default;
    virtual ~Grid() = default;

    // Explicitly default a copy constructor and assignment operator.
    Grid(const Grid& src) = default;
    Grid& operator=(const Grid& rhs) = default;

    std::optional<T>& at(size_t x, size_t y);
    const std::optional<T>& at(size_t x, size_t y) const;

    size_t getHeight() const { return HEIGHT; }
    size_t getWidth() const { return WIDTH; }

private:
    void verifyCoordinate(size_t x, size_t y) const;

    std::optional<T> mCells[WIDTH][HEIGHT];
};

template <typename T, size_t WIDTH, size_t HEIGHT>
void Grid<T, WIDTH, HEIGHT>::verifyCoordinate(size_t x, size_t y) const
{
    if (x >= WIDTH || y >= HEIGHT)
    {
        throw std::out_of_range("");
    }
}

template <typename T, size_t WIDTH, size_t HEIGHT>
const std::optional<T>& Grid<T, WIDTH, HEIGHT>::at(size_t x, size_t y) const
{
    verifyCoordinate(x, y);
    return mCells[x][y];
}

template <typename T, size_t WIDTH, size_t HEIGHT>
std::optional<T>& Grid<T, WIDTH, HEIGHT>::at(size_t x, size_t y)
{
    return const_cast<std::optional<T>&>(std::as_const(*this).at(x, y));
}

You could specialize this class template for const char* C-style strings like this:

#include "Grid.h" // The file containing the Grid template definition
#include <string>

template <size_t WIDTH, size_t HEIGHT>
class Grid<const char*, WIDTH, HEIGHT>
{
public:
    Grid() = default;
    virtual ~Grid() = default;

    // Explicitly default a copy constructor and assignment operator.
    Grid(const Grid& src) = default;
    Grid& operator=(const Grid& rhs) = default;

    std::optional<std::string>& at(size_t x, size_t y);
    const std::optional<std::string>& at(size_t x, size_t y) const;

    size_t getHeight() const { return HEIGHT; }
    size_t getWidth() const { return WIDTH; }

private:
    void verifyCoordinate(size_t x, size_t y) const;

    std::optional<std::string> mCells[WIDTH][HEIGHT];
};

template <size_t WIDTH, size_t HEIGHT>
void Grid<const char*, WIDTH, HEIGHT>::verifyCoordinate(size_t x, size_t y) const
{
    if (x >= WIDTH || y >= HEIGHT)
    {
        throw std::out_of_range("");
    }
}

template <size_t WIDTH, size_t HEIGHT>
const std::optional<std::string>&
    Grid<const char*, WIDTH, HEIGHT>::at(size_t x, size_t y) const
{
    verifyCoordinate(x, y);
    return mCells[x][y];
}

template <size_t WIDTH, size_t HEIGHT>
std::optional<std::string>& Grid<const char*, WIDTH, HEIGHT>::at(size_t x, size_t y)
{
    return const_cast<std::optional<std::string>&>(std::as_const(*this).at(x, y));
}

In this case, you are not specializing all the template parameters. Therefore, the template header line looks like this:

template <size_t WIDTH, size_t HEIGHT>
class Grid<const char*, WIDTH, HEIGHT>

Note that this template has only two parameters: WIDTH and HEIGHT. However, you are writing a Grid class that takes three arguments: T, WIDTH, and HEIGHT. So the template parameter list contains two parameters, while the explicit Grid<const char*, WIDTH, HEIGHT> contains three arguments. When you instantiate the template, you must still specify all three arguments; you cannot instantiate it with only a width and a height:

Grid<int, 2, 2> myIntGrid;            // Uses the original Grid
Grid<const char*, 2, 2> myStringGrid; // Uses the partial specialization
Grid<2, 3> test;                      // DOES NOT COMPILE! No type specified.

output

xz@xiaqiu:~/study/test/test$ g++ -o test test.cpp SpreadsheetCell.cpp -std=c++17
test.cpp: In function 'int main()':
test.cpp:56:14: error: wrong number of template arguments (2, should be 3)
56 | Grid<2, 3> test; // DOES NOT COMPILE! No type specified.
| ^
In file included from test.cpp:1:
Grid.h:10:7: note: provided for 'template<class T, long unsigned int WIDTH, long unsigned int HEIGHT> class Grid'
10 | class Grid
| ^~~~
xz@xiaqiu:~/study/test/test$

Yes, the syntax is messy. Worse, with partial specializations, unlike full specializations, you must put the template header line in front of every method definition, as follows:

template <size_t WIDTH, size_t HEIGHT>
const std::optional<std::string>&
    Grid<const char*, WIDTH, HEIGHT>::at(size_t x, size_t y) const
{
    verifyCoordinate(x, y);
    return mCells[x][y];
}

You need this template line with two parameters to show that the method is parameterized on those two parameters. Note that wherever you refer to the full class name, you must write Grid<const char*, WIDTH, HEIGHT>.
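The same mechanics in a minimal standalone form, assuming C++17: a primary template with one type parameter and one non-type parameter, plus a partial specialization that fixes the type to const char* while keeping the width as a parameter. Row and kind() are hypothetical names used only to make visible which version the compiler picks; unlike Grid, at() does no bounds checking in this sketch.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

// Primary template: stores WIDTH elements of type T (value-initialized).
template <typename T, std::size_t WIDTH>
class Row
{
public:
    static const char* kind() { return "primary"; }
    T& at(std::size_t x) { return mCells[x]; }

private:
    T mCells[WIDTH]{};
};

// Partial specialization: T is fixed to const char*, WIDTH stays a template
// parameter. C-style strings are stored as std::string copies.
template <std::size_t WIDTH>
class Row<const char*, WIDTH>
{
public:
    static const char* kind() { return "const char* specialization"; }
    std::string& at(std::size_t x) { return mCells[x]; }

private:
    std::string mCells[WIDTH];
};
```

As with Grid, a user still writes both arguments, for example Row<const char*, 2>; the compiler then selects the partial specialization because its pattern matches.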

The previous example does not show the true power of partial specialization. You can write specialized implementations for a subset of possible types without having to specialize each individual type. For example, you can write a specialization of the Grid class for all pointer types. The copy constructor and assignment operator of this specialization can perform deep copies of the objects the pointers point to, instead of storing shallow copies of the pointers in the grid. Here is the class definition, assuming that you are specializing the earliest version of Grid, which has only one template parameter. In this implementation, the Grid becomes the owner of the supplied pointers, so it automatically frees their memory when necessary:

GridPtr.h

#pragma once

#include "Grid.h"
#include <memory>

template <typename T>
class Grid<T*>
{
public:
    explicit Grid(size_t width = kDefaultWidth,
                  size_t height = kDefaultHeight);
    virtual ~Grid() = default;

    // Copy constructor and copy assignment operator.
    Grid(const Grid& src);
    Grid<T*>& operator=(const Grid& rhs);

    // Explicitly default a move constructor and assignment operator.
    Grid(Grid&& src) = default;
    Grid<T*>& operator=(Grid&& rhs) = default;

    void swap(Grid& other) noexcept;

    std::unique_ptr<T>& at(size_t x, size_t y);
    const std::unique_ptr<T>& at(size_t x, size_t y) const;

    size_t getHeight() const { return mHeight; }
    size_t getWidth() const { return mWidth; }

    static const size_t kDefaultWidth = 10;
    static const size_t kDefaultHeight = 10;

private:
    void verifyCoordinate(size_t x, size_t y) const;

    std::vector<std::vector<std::unique_ptr<T>>> mCells;
    size_t mWidth = 0, mHeight = 0;
};

template <typename T>
Grid<T*>::Grid(size_t width, size_t height)
    : mWidth(width)
    , mHeight(height)
{
    mCells.resize(mWidth);
    for (auto& column : mCells)
    {
        column.resize(mHeight);
    }
}

template <typename T>
void Grid<T*>::swap(Grid& other) noexcept
{
    using std::swap;
    swap(mWidth, other.mWidth);
    swap(mHeight, other.mHeight);
    swap(mCells, other.mCells);
}

template <typename T>
Grid<T*>::Grid(const Grid& src)
    : Grid(src.mWidth, src.mHeight)
{
    // The ctor-initializer of this constructor delegates first to the
    // non-copy constructor to allocate the proper amount of memory.
    // The next step is to copy the data.
    for (size_t i = 0; i < mWidth; i++)
    {
        for (size_t j = 0; j < mHeight; j++)
        {
            // Make a deep copy of the element by using its copy constructor.
            if (src.mCells[i][j])
            {
                mCells[i][j].reset(new T(*(src.mCells[i][j])));
            }
        }
    }
}

template <typename T>
Grid<T*>& Grid<T*>::operator=(const Grid& rhs)
{
    // Check for self-assignment.
    if (this == &rhs)
    {
        return *this;
    }

    // Use copy-and-swap idiom.
    auto copy = rhs; // Do all the work in a temporary instance
    swap(copy);      // Commit the work with only non-throwing operations
    return *this;
}

template <typename T>
void Grid<T*>::verifyCoordinate(size_t x, size_t y) const
{
    if (x >= mWidth || y >= mHeight)
    {
        throw std::out_of_range("");
    }
}

template <typename T>
const std::unique_ptr<T>& Grid<T*>::at(size_t x, size_t y) const
{
    verifyCoordinate(x, y);
    return mCells[x][y];
}

template <typename T>
std::unique_ptr<T>& Grid<T*>::at(size_t x, size_t y)
{
    return const_cast<std::unique_ptr<T>&>(std::as_const(*this).at(x, y));
}

Grid.h

#pragma once

#include <cstddef>
#include <stdexcept>
#include <vector>
#include <optional>
#include <utility>

template <typename T>
class Grid
{
public:
    explicit Grid(size_t width = kDefaultWidth, size_t height = kDefaultHeight);
    virtual ~Grid() = default;

    // Explicitly default a copy constructor and assignment operator.
    Grid(const Grid& src) = default;
    Grid<T>& operator=(const Grid& rhs) = default;

    // Explicitly default a move constructor and assignment operator.
    Grid(Grid&& src) = default;
    Grid<T>& operator=(Grid&& rhs) = default;

    std::optional<T>& at(size_t x, size_t y);
    const std::optional<T>& at(size_t x, size_t y) const;

    size_t getHeight() const { return mHeight; }
    size_t getWidth() const { return mWidth; }

    static const size_t kDefaultWidth = 10;
    static const size_t kDefaultHeight = 10;

private:
    void verifyCoordinate(size_t x, size_t y) const;

    std::vector<std::vector<std::optional<T>>> mCells;
    size_t mWidth = 0, mHeight = 0;
};

template <typename T>
Grid<T>::Grid(size_t width, size_t height)
    : mWidth(width)
    , mHeight(height)
{
    mCells.resize(mWidth);
    for (auto& column : mCells)
    {
        column.resize(mHeight);
    }
}

template <typename T>
void Grid<T>::verifyCoordinate(size_t x, size_t y) const
{
    if (x >= mWidth || y >= mHeight)
    {
        throw std::out_of_range("");
    }
}

template <typename T>
const std::optional<T>& Grid<T>::at(size_t x, size_t y) const
{
    verifyCoordinate(x, y);
    return mCells[x][y];
}

template <typename T>
std::optional<T>& Grid<T>::at(size_t x, size_t y)
{
    return const_cast<std::optional<T>&>(std::as_const(*this).at(x, y));
}

As usual, these two lines of code are the key:

template <typename T>
class Grid<T*>

This syntax says that the class is a partial specialization of the Grid template for all pointer types: this implementation is used only when the template argument is a pointer type. Note that if you instantiate a grid as Grid<int*> myIntGrid, T is actually int, not int*. That is not very intuitive, but unfortunately that is how the syntax works. Here is an example:

Grid<int> myIntGrid;      // Uses the non-specialized grid
Grid<int*> psGrid(2, 2);  // Uses the partial specialization for pointer types

psGrid.at(0, 0) = make_unique<int>(1);
psGrid.at(0, 1) = make_unique<int>(2);
psGrid.at(1, 0) = make_unique<int>(3);

Grid<int*> psGrid2(psGrid);
Grid<int*> psGrid3;
psGrid3 = psGrid2;

auto& element = psGrid2.at(1, 0);
if (element) {
    cout << *element << endl;
    *element = 6;
}
cout << *psGrid.at(1, 0) << endl;  // psGrid is not modified
cout << *psGrid2.at(1, 0) << endl; // psGrid2 is modified

Code output

xz@xiaqiu:~/study/test/test$ ./test
3
3
6
xz@xiaqiu:~/study/test/test$
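The rule that T is int (not int*) in Grid<int*> can be checked in isolation. The following sketch uses a hypothetical Holder class template, not part of the Grid code, whose pointer partial specialization has the same form as Grid<T*> and exposes its T as element_type, so static_assert can confirm what the compiler deduced.

```cpp
#include <type_traits>

// Hypothetical class template used only to illustrate how T is deduced
// in a partial specialization for pointer types.
template <typename T>
struct Holder {
    using element_type = T;
    static constexpr bool isPointerSpecialization = false;
};

// Partial specialization for all pointer types, in the same form as Grid<T*>.
// For Holder<int*>, the pattern T* is matched against int*, so T is int.
template <typename T>
struct Holder<T*> {
    using element_type = T;
    static constexpr bool isPointerSpecialization = true;
};

static_assert(!Holder<int>::isPointerSpecialization);
static_assert(Holder<int*>::isPointerSpecialization);
static_assert(std::is_same_v<Holder<int*>::element_type, int>);
```

The same matching peels off exactly one level of pointer: for Holder<int**>, T is int*.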

The implementations of the methods are straightforward, except for the copy constructor, which uses the copy constructor of each individual element to make a deep copy:

template <typename T>
Grid<T *>::Grid(const Grid &src)
    : Grid(src.mWidth, src.mHeight)
{
    // The ctor-initializer of this constructor delegates first to the
    // non-copy constructor to allocate the proper amount of memory.

    // The next step is to copy the data.
    for (size_t i = 0; i < mWidth; i++) {
        for (size_t j = 0; j < mHeight; j++) {
            // Make a deep copy of the element by using its copy constructor.
            if (src.mCells[i][j]) {
                mCells[i][j].reset(new T(*(src.mCells[i][j])));
            }
        }
    }
}
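The deep-copy loop of this copy constructor can be tried outside the Grid class. In the sketch below, PtrCells and deepCopy() are hypothetical names, and the cells are assumed to be a plain vector of vectors of std::unique_ptr, which matches the std::unique_ptr<T>& returned by at() in the specialization above. Empty cells stay empty; non-empty cells get a fresh copy of the object they point to.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <vector>

// Hypothetical alias for a 2-D grid of owning pointers.
template <typename T>
using PtrCells = std::vector<std::vector<std::unique_ptr<T>>>;

// Hypothetical free-function version of the deep copy in Grid<T*>'s copy constructor.
template <typename T>
PtrCells<T> deepCopy(const PtrCells<T>& src)
{
    PtrCells<T> result(src.size());
    for (std::size_t i = 0; i < src.size(); ++i) {
        // New cells start out as nullptr (empty).
        result[i].resize(src[i].size());
        for (std::size_t j = 0; j < src[i].size(); ++j) {
            // Copy the pointee, not the pointer, so the two grids share nothing.
            if (src[i][j]) {
                result[i][j] = std::make_unique<T>(*src[i][j]);
            }
        }
    }
    return result;
}
```

Using std::make_unique<T>(*cell) has the same effect as the reset(new T(...)) call in the text, without a naked new.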

Emulating function partial specialization with overloading

The C++ standard does not allow partial specialization of function templates. Instead, you can overload the function with another template. The difference is subtle. Suppose you want to write a specialized Find() function template (see Chapter 12) that dereferences the pointers and calls operator== directly on the objects they point to. Following the syntax for partial specialization of class templates, you might be tempted to write this:

template <typename T>

size_t Find<T*>(T* const& value, T* const* arr, size_t size)

{

for (size_t i = 0; i < size; i++)

{

if (*arr[i] == *value)

{

return i; // Found it; return the index

}

}

return NOT_FOUND; // failed to find it; return NOT_FOUND

}

output

xz@xiaqiu:~/study/test/test$ g++ -o test test.cpp -std=c++17

test.cpp:10:12: error: expected initializer before '<' token

10 | size_t Find<T*>(T* const& value, T* const* arr, size_t size)

| ^

xz@xiaqiu:~/study/test/test$

However, the C++ standard does not allow this syntax for declaring a partial specialization of a function template. The correct way to get the desired behavior is to write a new template for Find(), that is, an overload. The difference might seem trivial and academic, but without it the code does not compile:

template <typename T>

size_t Find(T* const& value, T* const* arr, size_t size)

{

for (size_t i = 0; i < size; i++)

{

if (*arr[i] == *value)

{

return i; // Found it; return the index

}

}

return NOT_FOUND; // failed to find it; return NOT_FOUND

}

The first parameter of this version of Find() is T* const&, to be consistent with the original Find() function template, which takes const T& as its first parameter. However, it would also work to use T* instead of T* const& as the first parameter of this pointer overload of Find(). You can combine the original Find() template, the overload for pointer types, the full specialization for const char*, and the non-template overload just for const char* in one program. The compiler selects the appropriate version to call based on its deduction and overload resolution rules.

Note:

Among all overloaded versions, function template specializations, and specific function template instantiations, the compiler always selects the "most specific" version. If a non-template version is as good a match as a function template instantiation, the compiler prefers the non-template version.
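These selection rules can be observed with a small sketch. The describe() functions below are hypothetical stand-ins that mirror the four kinds of Find() declarations that follow (original template, pointer overload, full specialization for const char*, non-template overload); each one only reports which version the compiler picked.

```cpp
#include <cassert>
#include <string>

// 1. Original function template.
template <typename T>
std::string describe(const T&) { return "original"; }

// 2. Overload for all pointer types (an overload, not a partial specialization).
template <typename T>
std::string describe(T* const&) { return "ptr special"; }

// 3. Full specialization of template 1 for T = const char*.
//    (Template 2 with T = const char* would take const char** const&, so it does not match.)
template <>
std::string describe<const char*>(const char* const&) { return "Specialization"; }

// 4. Non-template overload for const char*.
std::string describe(const char* const&) { return "overload"; }
```

With this sketch, describe(42) returns "original", describe(p) for an int* p returns "ptr special" (template 2 is more specialized than template 1), describe(s) for a const char* s returns "overload" (the non-template wins the tie), and describe<const char*>(s) returns "Specialization", because explicit template arguments rule out the non-template function.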

Several specialized versions

static const size_t NOT_FOUND = static_cast<size_t>(-1);

template <typename T>
size_t Find(const T &value, const T *arr, size_t size)
{
    cout << "original" << endl;
    for (size_t i = 0; i < size; i++) {
        if (arr[i] == value) {
            return i; // found it; return the index
        }
    }
    return NOT_FOUND; // failed to find it; return NOT_FOUND
}

template <typename T>
size_t Find(T *const &value, T *const *arr, size_t size)
{
    cout << "ptr special" << endl;
    for (size_t i = 0; i < size; i++) {
        if (*arr[i] == *value) {
            return i; // found it; return the index
        }
    }
    return NOT_FOUND; // failed to find it; return NOT_FOUND
}

/*
// This does not work.
template <typename T>
size_t Find<T*>(T* const& value, T* const* arr, size_t size)
{
    cout << "ptr special" << endl;
    for (size_t i = 0; i < size; i++) {
        if (*arr[i] == *value) {
            return i; // found it; return the index
        }
    }
    return NOT_FOUND; // failed to find it; return NOT_FOUND
}
*/

template<>
size_t Find<const char *>(const char *const &value, const char *const *arr, size_t size)
{
    cout << "Specialization" << endl;
    for (size_t i = 0; i < size; i++) {
        if (strcmp(arr[i], value) == 0) {
            return i; // found it; return the index
        }
    }
    return NOT_FOUND; // failed to find it; return NOT_FOUND
}

size_t Find(const char *const &value, const char *const *arr, size_t size)
{
    cout << "overload" << endl;
    for (size_t i = 0; i < size; i++) {
        if (strcmp(arr[i], value) == 0) {
            return i; // found it; return the index
        }
    }
    return NOT_FOUND; // failed to find it; return NOT_FOUND
}

The following code calls Find() several times, and the comments inside explain which version of Find() is called:

int main()

{

size_t res = NOT_FOUND;

int myInt = 3, intArray[] = { 1, 2, 3, 4 };

size_t sizeArray = std::size(intArray);

res = Find(myInt, intArray, sizeArray); // calls Find<int> by deduction

res = Find<int>(myInt, intArray, sizeArray); // calls Find<int> explicitly

double myDouble = 5.6, doubleArray[] = { 1.2, 3.4, 5.7, 7.5 };

sizeArray = std::size(doubleArray);

res = Find(myDouble, doubleArray, sizeArray); // calls Find<double> by deduction

res = Find<double>(myDouble, doubleArray, sizeArray); // calls Find<double> explicitly

const char *word = "two";

const char *words[] = { "one", "two", "three", "four" };

sizeArray = std::size(words);

res = Find<const char *>(word, words, sizeArray); // calls template specialization for const char*s

res = Find(word, words, sizeArray); // calls overloaded Find for const char*s

int *intPointer = &myInt, *pointerArray[] = { &myInt, &myInt };

sizeArray = std::size(pointerArray);

res = Find(intPointer, pointerArray, sizeArray); // calls the overloaded Find for pointers

SpreadsheetCell cell1(10), cellArray[] = { SpreadsheetCell(4), SpreadsheetCell(10) };

sizeArray = std::size(cellArray);

res = Find(cell1, cellArray, sizeArray); // calls Find<SpreadsheetCell> by deduction

res = Find<SpreadsheetCell>(cell1, cellArray, sizeArray); // calls Find<SpreadsheetCell> explicitly

SpreadsheetCell *cellPointer = &cell1;

SpreadsheetCell *cellPointerArray[] = { &cell1, &cell1 };

sizeArray = std::size(cellPointerArray);

res = Find(cellPointer, cellPointerArray, sizeArray); // Calls the overloaded Find for pointers

return 0;

}

output

xz@xiaqiu:~/study/test/test$ ./test
original
original
original
original
Specialization
overload
ptr special
original
original
ptr special
xz@xiaqiu:~/study/test/test$

Template recursion

C++ templates offer capabilities that go far beyond the simple classes and functions described earlier in this chapter and in Chapter 12. One of these capabilities is template recursion. This section first explains the motivation for template recursion and then shows how to implement it. The section uses operator overloading, discussed in Chapter 15. If you skipped that chapter or are unfamiliar with the syntax for overloading operator[], consult Chapter 15 before continuing.

N-dimensional grid: first attempt

The Grid template examples so far support only two dimensions, which limits their usefulness. What if you wanted to write a 3-D tic-tac-toe game or a math program with four-dimensional matrices? You could, of course, write a template or non-template class for each of those dimensions, but that would repeat a lot of code. Another approach is to write only a one-dimensional grid. Then you can create a grid of any dimension by instantiating the Grid with another Grid as its element type. That Grid element type can itself be instantiated with a Grid as its element type, and so on. Here is the implementation of the OneDGrid class template. It is simply a one-dimensional version of the Grid template from the earlier examples, with a resize() method added and at() replaced by operator[]. Like standard library containers such as vector, the operator[] implementation does not perform bounds checking. Also, for this example, mElements stores plain instances of T instead of instances of std::optional<T>.

template <typename T>
class OneDGrid
{
public:
    explicit OneDGrid(size_t size = kDefaultSize);
    virtual ~OneDGrid() = default;

    T &operator[](size_t x);
    const T &operator[](size_t x) const;

    void resize(size_t newSize);
    size_t getSize() const { return mElements.size(); }

    static const size_t kDefaultSize = 10;

private:
    std::vector<T> mElements;
};

template <typename T>
OneDGrid<T>::OneDGrid(size_t size)
{
    resize(size);
}

template <typename T>
void OneDGrid<T>::resize(size_t newSize)
{
    mElements.resize(newSize);
}

template <typename T>
T &OneDGrid<T>::operator[](size_t x)
{
    return mElements[x];
}

template <typename T>
const T &OneDGrid<T>::operator[](size_t x) const
{
    return mElements[x];
}

With this implementation of OneDGrid, you can create multidimensional grids like this:

int main()
{
    OneDGrid<int> singleDGrid;
    OneDGrid<OneDGrid<int>> twoDGrid;
    OneDGrid<OneDGrid<OneDGrid<int>>> threeDGrid;

    singleDGrid[3] = 5;
    twoDGrid[3][3] = 5;
    threeDGrid[3][3][3] = 5;
    return 0;
}

This code works, but the declaration code looks a little messy. It is improved below.

Real N-dimensional grid

You can use template recursion to write a "real" N-dimensional grid, because the dimensionality of a grid is inherently recursive. You can see this in the following declaration: OneDGrid<OneDGrid<OneDGrid<int>>> threeDGrid;

You can think of each nesting level of OneDGrid as a recursive step, with the OneDGrid of int as the base case of the recursion. In other words, a three-dimensional grid is a one-dimensional grid of one-dimensional grids of one-dimensional grids of int. Instead of making the user write out this recursion, you can write a class template that performs it automatically. Then you can create N-dimensional grids like this:

NDGrid<int, 1> singleDGrid;
NDGrid<int, 2> twoDGrid;
NDGrid<int, 3> threeDGrid;

The NDGrid class template takes the element type and an integer specifying the dimensionality as its parameters. The key insight is that the element type of an NDGrid is not the element type specified in the template parameter list, but another NDGrid with one dimension fewer. In other words, a 3-D grid is a vector of 2-D grids, and each 2-D grid is a vector of 1-D grids. With recursion, you need a base case. You can write a partial specialization of NDGrid for dimension 1, in which the element type is not another NDGrid but the element type specified by the template parameter. Here is the general NDGrid template definition; the main differences from OneDGrid are the second template parameter N, the return types of operator[], and the element type of mElements:

template <typename T, size_t N>

class NDGrid

{

public:

explicit NDGrid(size_t size = kDefaultSize);

virtual ~NDGrid() = default;

NDGrid<T, N - 1>& operator[](size_t x);

const NDGrid<T, N - 1>& operator[](size_t x) const;

void resize(size_t newSize);

size_t getSize() const { return mElements.size(); }

static const size_t kDefaultSize = 10;

private:

std::vector<NDGrid<T, N - 1>> mElements;

};

Note that mElements is a vector of NDGrid<T, N - 1>; this is the recursive step. Also, operator[] returns a reference to the element type, which again is NDGrid<T, N - 1>, not T. The template definition for the base case is a partial specialization for dimension 1:

template <typename T>

class NDGrid<T, 1>

{

public:

explicit NDGrid(size_t size = kDefaultSize);

virtual ~NDGrid() = default;

T &operator[](size_t x);

const T &operator[](size_t x) const;

void resize(size_t newSize);

size_t getSize() const { return mElements.size(); }

static const size_t kDefaultSize = 10;

private:

std::vector<T> mElements;

};

This is where the recursion ends: the element type is T, not another template instantiation. The trickiest part of the implementation, apart from the template recursion itself, is sizing each dimension of the grid correctly. This implementation creates an N-dimensional grid in which every dimension has the same size; specifying a separate size for each dimension is much more difficult. Even with this simplification, there is still a problem: the user should be able to create a grid of a specified size, such as 20 or 50, so the constructor takes an integer size parameter. However, when you resize the vector of subgrids, you cannot pass this size on to the subgrid elements, because vector creates the new objects with their default constructor. Therefore, you must explicitly call resize() on each grid element of the vector. The base case does not need to resize its elements, because they are of type T, not grids.

Here is the implementation of the primary NDGrid template; the main difference from OneDGrid is that resize() also resizes every element recursively:

template <typename T, size_t N>

NDGrid<T, N>::NDGrid(size_t size)

{

resize(size);

}

template <typename T, size_t N>

void NDGrid<T, N>::resize(size_t newSize)

{

mElements.resize(newSize);

// Resizing the vector calls the 0-argument constructor for

// the NDGrid<T, N-1> elements, which constructs

// them with the default size. Thus, we must explicitly call

// resize() on each of the elements to recursively resize all

// nested Grid elements.

for (auto &element : mElements)

{

element.resize(newSize);

}

}

template <typename T, size_t N>

NDGrid<T, N - 1>& NDGrid<T, N>::operator[](size_t x)

{

return mElements[x];

}

template <typename T, size_t N>

const NDGrid<T, N - 1>& NDGrid<T, N>::operator[](size_t x) const

{

return mElements[x];

}

Here is the implementation of the partial specialization (the base case). Note that you have to rewrite a lot of code, because a specialization does not inherit any implementation from the primary template. Compared with the primary NDGrid template, resize() no longer resizes the elements, and operator[] returns T& instead of a subgrid:

template <typename T>
NDGrid<T, 1>::NDGrid(size_t size)
{
    resize(size);
}

template <typename T>
void NDGrid<T, 1>::resize(size_t newSize)
{
    mElements.resize(newSize);
}

template <typename T>
T &NDGrid<T, 1>::operator[](size_t x)
{
    return mElements[x];
}

template <typename T>
const T &NDGrid<T, 1>::operator[](size_t x) const
{
    return mElements[x];
}

Now you can write code like this:

int main()
{
    NDGrid<int, 3> my3DGrid;
    my3DGrid[2][1][2] = 5;
    my3DGrid[1][1][1] = 5;
    cout << my3DGrid[2][1][2] << endl;
    return 0;
}

output

xz@xiaqiu:~/study/test/test$ ./test
5
xz@xiaqiu:~/study/test/test$
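For experimenting, the two NDGrid templates above can be condensed into one self-contained listing with the member functions defined inline. This is a restatement of the code above rather than new behavior; the virtual destructors are left out for brevity. It makes it easy to confirm that every dimension ends up with the requested size, which is exactly what the recursive resize() calls are for.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Primary template: an N-dimensional grid is a vector of (N-1)-dimensional grids.
template <typename T, std::size_t N>
class NDGrid {
public:
    explicit NDGrid(std::size_t size = kDefaultSize) { resize(size); }
    NDGrid<T, N - 1>& operator[](std::size_t x) { return mElements[x]; }
    const NDGrid<T, N - 1>& operator[](std::size_t x) const { return mElements[x]; }
    void resize(std::size_t newSize)
    {
        mElements.resize(newSize);
        // New subgrids were default-constructed with kDefaultSize, so resize each one.
        for (auto& element : mElements) {
            element.resize(newSize);
        }
    }
    std::size_t getSize() const { return mElements.size(); }
    static const std::size_t kDefaultSize = 10;
private:
    std::vector<NDGrid<T, N - 1>> mElements;
};

// Base case: a 1-D grid stores T directly, so nothing needs a recursive resize.
template <typename T>
class NDGrid<T, 1> {
public:
    explicit NDGrid(std::size_t size = kDefaultSize) { resize(size); }
    T& operator[](std::size_t x) { return mElements[x]; }
    const T& operator[](std::size_t x) const { return mElements[x]; }
    void resize(std::size_t newSize) { mElements.resize(newSize); }
    std::size_t getSize() const { return mElements.size(); }
    static const std::size_t kDefaultSize = 10;
private:
    std::vector<T> mElements;
};
```

For example, after NDGrid<int, 3> grid(4);, the calls grid.getSize(), grid[3].getSize(), and grid[3][3].getSize() all return 4, and a default-constructed NDGrid<int, 2> has 10 elements in each dimension.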

Variadic templates

Normal templates can take only a fixed number of template parameters. Variadic templates can take a variable number of template parameters. For example, the following code defines a template that accepts any number of template parameters, using a parameter pack called Types:

template<typename... Types>
class MyVariadicTemplate { };

Note:

The three dots after typename are not an error. This is the syntax for defining a parameter pack for a variadic template. A parameter pack can accept a variable number of arguments. Spaces are allowed before and after the three dots.

You can instantiate MyVariadicTemplate with any number of types, for example:

MyVariadicTemplate<int> instance1;
MyVariadicTemplate<string, double, list<int>> instance2;

You can even instantiate MyVariadicTemplate with zero template parameters:

MyVariadicTemplate<> instance3;

To prevent a variadic template from being instantiated with zero template arguments, you can write the template as follows:

template<typename T1, typename... Types>
class MyVariadicTemplate { };

With this definition, trying to instantiate MyVariadicTemplate with zero template arguments results in a compilation error. For example, Microsoft Visual C++ gives the following error:

error C2976: 'MyVariadicTemplate' : too few template arguments
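The difference between the two definitions can be made visible with the sizeof... operator, which reports the number of arguments in a pack. In this sketch, CountingTemplate and AtLeastOne are hypothetical variants of the two MyVariadicTemplate definitions above, each recording how many template arguments it received.

```cpp
#include <cstddef>
#include <list>
#include <string>

// Variant of the first definition: accepts zero or more template arguments.
template <typename... Types>
class CountingTemplate {
public:
    static constexpr std::size_t count = sizeof...(Types);
};

// Variant of the second definition: T1 is mandatory, the pack after it may be empty.
template <typename T1, typename... Types>
class AtLeastOne {
public:
    static constexpr std::size_t count = 1 + sizeof...(Types);
};

static_assert(CountingTemplate<>::count == 0);
static_assert(CountingTemplate<std::string, double, std::list<int>>::count == 3);
static_assert(AtLeastOne<int>::count == 1);
// AtLeastOne<> would not compile: too few template arguments.
```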

You cannot directly iterate over the different arguments passed to a variadic template. With the techniques covered so far, the only way to process them is with template recursion (C++17 fold expressions, discussed later in this chapter, offer another way). The following two examples show how to use variadic templates.

Type-safe variable-length argument lists

Variadic templates allow you to create type-safe variable-length argument lists. The following example defines a variadic template called processValues() that accepts a variable number of arguments of different types in a type-safe manner. The processValues() function processes each value in the variable-length argument list and calls handleValue() for each argument. This means you have to write a handleValue() function for each type you want to handle, such as int, double, and string in this example:

void handleValue(int value) { cout << "Integer: " << value << endl; }
void handleValue(double value) { cout << "Double: " << value << endl; }
void handleValue(string_view value) { cout << "String: " << value << endl; }

void processValues() { /* Nothing to do in this base case.*/ }

template<typename T1, typename... Tn>
void processValues(T1 arg1, Tn... args)
{
    handleValue(arg1);
    processValues(args...);
}

The preceding example uses the triple-dot operator, ..., in three places, with two different meanings. First, it appears after typename in the template parameter list and after the type Tn in the function parameter list. In both cases, it denotes a parameter pack, which can accept a variable number of arguments. The second use of ... is after the parameter name args in the function body. Here it denotes a parameter pack expansion: the operator unpacks, or expands, the parameter pack into its separate arguments. Basically, it takes whatever is on its left and repeats it for every template argument in the pack, separated by commas. Take the following line from the previous example:

processValues(args...);

This line unpacks (expands) the args parameter pack into its separate arguments, separated by commas, and then calls processValues() with that expanded list. The template always requires at least one template argument, T1. Calling processValues() recursively with args... means that each recursive call has one argument fewer. Because processValues() is implemented recursively, you need a way to stop the recursion; this is done by implementing a processValues() function that takes zero arguments. You can test the processValues() variadic template with the following code:

processValues(1, 2, 3.56, "test", 1.1f);

The recursive call generated by this example is:

processValues(1, 2, 3.56, "test", 1.1f);
  handleValue(1);
  processValues(2, 3.56, "test", 1.1f);
    handleValue(2);
    processValues(3.56, "test", 1.1f);
      handleValue(3.56);
      processValues("test", 1.1f);
        handleValue("test");
        processValues(1.1f);
          handleValue(1.1f);
          processValues();

It is important to remember that this variable-length argument list is fully type-safe. The processValues() function automatically calls the correct handleValue() overload based on the actual type, and C++ performs the usual implicit conversions. For example, 1.1f in the preceding example is a float; processValues() calls handleValue(double value), because converting a float to a double loses no information. However, if you call processValues() with an argument of a type for which there is no suitable handleValue() function, the compiler generates an error.

There is a small problem with the preceding implementation. Because it is recursive, the arguments are copied on every recursive call to processValues(), which can be costly depending on their types. You might think you can avoid the copying by passing references to processValues() instead of passing by value. Unfortunately, that would make it impossible to call processValues() with literals, because a reference to a literal value is not allowed unless it is a reference-to-const. To accept literals while still using non-const references, you can use forwarding references. The following implementation uses forwarding references, T&&, together with std::forward() to perfectly forward all arguments: if an rvalue is passed to processValues(), it is forwarded as an rvalue reference, and if an lvalue or lvalue reference is passed, it is forwarded as an lvalue reference.

void processValues() { /* Nothing to do in this base case.*/ }

template<typename T1, typename... Tn>
void processValues(T1&& arg1, Tn&&... args)
{
    handleValue(std::forward<T1>(arg1));
    processValues(std::forward<Tn>(args)...);
}

One line of code needs further explanation:

processValues(std::forward<Tn>(args)...);

The ... operator unpacks the parameter pack: it applies std::forward() to each argument in the pack and separates the results with commas. For example, suppose args is a parameter pack with three arguments, a1, a2, and a3, of types A1, A2, and A3. The expanded call then looks like this:

processValues(std::forward<A1>(a1), std::forward<A2>(a2), std::forward<A3>(a3));
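The effect of std::forward() can be observed with a small sketch. The names category() and categories() are hypothetical: category() is overloaded for const int& and int&& so that it reports the value category it receives. Unlike processValues(), categories() does not recurse; it expands the forwarded pack directly inside a braced initializer list, whose elements are evaluated left to right.

```cpp
#include <cassert>
#include <string>
#include <utility>
#include <vector>

// Hypothetical probes: each overload reports the value category it received.
std::string category(const int&) { return "lvalue"; }
std::string category(int&&) { return "rvalue"; }

// Forwards every argument unchanged and records what category() saw for each one.
template <typename... Tn>
std::vector<std::string> categories(Tn&&... args)
{
    return { category(std::forward<Tn>(args))... };
}
```

With int x = 1;, the call categories(x, 2, x) yields "lvalue", "rvalue", "lvalue". If std::forward() were dropped and args passed as-is, every entry would be "lvalue", because a named parameter is an lvalue even when its type is int&&.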

In the function body that uses the parameter package, the number of parameters in the parameter package can be obtained by the following methods:

int numOfArgs = sizeof...(args);

A practical use of variadic templates is writing a secure, type-safe function template similar to printf(). This is a good exercise for practicing variadic templates. Here is the complete program for both versions of processValues():

#include <iostream>
#include <string>
#include <string_view>
#include <utility>

using namespace std;

void handleValue(int value)
{
    cout << "Integer: " << value << endl;
}

void handleValue(double value)
{
    cout << "Double: " << value << endl;
}

void handleValue(string_view value)
{
    cout << "String: " << value << endl;
}

// First version using pass-by-value
void processValues() // Base case
{
    // Nothing to do in this base case.
}

template<typename T1, typename... Tn>
void processValues(T1 arg1, Tn... args)
{
    handleValue(arg1);
    processValues(args...);
}

// Second version using forwarding references and perfect forwarding
void processValuesRValueRefs() // Base case
{
    // Nothing to do in this base case.
}

template<typename T1, typename... Tn>
void processValuesRValueRefs(T1 &&arg1, Tn &&... args)
{
    handleValue(std::forward<T1>(arg1));
    processValuesRValueRefs(std::forward<Tn>(args)...);
}

int main()
{
    processValues(1, 2, 3.56, "test", 1.1f);
    cout << endl;
    processValuesRValueRefs(1, 2, 3.56, "test", 1.1f);
    return 0;
}

output

xz@xiaqiu:~/study/test/test$ ./test
Integer: 1
Integer: 2
Double: 3.56
String: test
Double: 1.1

Integer: 1
Integer: 2
Double: 3.56
String: test
Double: 1.1
xz@xiaqiu:~/study/test/test$
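As a starting point for the printf()-like exercise mentioned above, here is one possible sketch. The name safeFormat(), the use of a bare % as the placeholder, and the choice to throw std::invalid_argument when the number of placeholders and arguments disagree are all assumptions of this sketch. Each argument is written with its own operator<<, so there is no format specifier that could contradict the argument's type; like processValues(), the recursion peels off one argument per call.

```cpp
#include <cassert>
#include <sstream>
#include <stdexcept>
#include <string>
#include <string_view>

// Base case: no arguments left, so copy the rest of the format string as-is.
inline void formatImpl(std::ostringstream& out, std::string_view fmt)
{
    for (char c : fmt) {
        if (c == '%') {
            throw std::invalid_argument("safeFormat: too few arguments");
        }
        out << c;
    }
}

// Recursive case: copy text up to the next '%', print one argument, recurse on the rest.
template <typename T1, typename... Tn>
void formatImpl(std::ostringstream& out, std::string_view fmt,
                const T1& arg1, const Tn&... args)
{
    const auto pos = fmt.find('%');
    if (pos == std::string_view::npos) {
        throw std::invalid_argument("safeFormat: too many arguments");
    }
    out << fmt.substr(0, pos) << arg1;
    formatImpl(out, fmt.substr(pos + 1), args...);
}

// Hypothetical type-safe printf()-like helper: each '%' is replaced by the next argument.
template <typename... Args>
std::string safeFormat(std::string_view fmt, const Args&... args)
{
    std::ostringstream out;
    formatImpl(out, fmt, args...);
    return out.str();
}
```

For example, safeFormat("% + % = %", 1, 2.5, 3.5) returns "1 + 2.5 = 3.5".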

Variadic mixin classes

Parameter packs can be used almost everywhere. For example, the following code uses a parameter pack to define a variable number of mixin classes for the MyClass class. Chapter 5 discusses the concept of mixin classes.

class Mixin1

{

public:

Mixin1(int i) : mValue(i) {}

virtual void Mixin1Func() { cout << "Mixin1: " << mValue << endl; }

private:

int mValue;

};

class Mixin2

{

public:

Mixin2(int i) : mValue(i) {}

virtual void Mixin2Func() { cout << "Mixin2: " << mValue << endl; }

private:

int mValue;

};

template<typename... Mixins>

class MyClass : public Mixins...

{

public:

MyClass(const Mixins&... mixins) : Mixins(mixins)... {}

virtual ~MyClass() = default;

};

The code first defines two mixin classes, Mixin1 and Mixin2. They are kept simple in this example: their constructors take an integer and store it, and each class has a function that prints information about that specific instance. The MyClass variadic template uses the parameter pack typename... Mixins to accept a variable number of mixin classes. MyClass then inherits from all of those mixin classes, and its constructor takes the same number of arguments to initialize each inherited mixin class. Remember that the ... expansion operator basically takes whatever is on its left and repeats it for every template argument in the parameter pack, separated by commas. MyClass can be used as follows:

MyClass<Mixin1, Mixin2> a(Mixin1(11), Mixin2(22));
a.Mixin1Func();
a.Mixin2Func();

MyClass<Mixin1> b(Mixin1(33));
b.Mixin1Func();
//b.Mixin2Func(); // Error: does not compile.

MyClass<> c;
//c.Mixin1Func(); // Error: does not compile.
//c.Mixin2Func(); // Error: does not compile.

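Attempting to call Mixin2Func() on b results in a compilation error, because b does not inherit from the Mixin2 class. With that line uncommented, the compiler reports the first error below; with the erroneous calls commented out, the program produces the second output: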

output

xz@xiaqiu:~/study/test/test$ g++ -o test test.cpp -std=c++17
test.cpp: In function 'int main()':
test.cpp:35:7: error: 'class MyClass<Mixin1>' has no member named 'Mixin2Func'; did you mean 'Mixin1Func'?
   35 |     b.Mixin2Func(); // Error: does not compile.
      |       ^~~~~~~~~~
      |       Mixin1Func
xz@xiaqiu:~/study/test/test$

output

xz@xiaqiu:~/study/test/test$ ./test
Mixin1: 11
Mixin2: 22
Mixin1: 33
xz@xiaqiu:~/study/test/test$
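The same pattern works for any set of mixins. In this sketch, Named, Counted, and Composite are hypothetical names; Composite follows the MyClass pattern, and std::is_base_of_v confirms at compile time which mixins a given instantiation inherits from.

```cpp
#include <cassert>
#include <string>
#include <type_traits>
#include <utility>

// Hypothetical mixin that contributes a name() accessor.
class Named {
public:
    explicit Named(std::string value) : mName(std::move(value)) {}
    const std::string& name() const { return mName; }
private:
    std::string mName;
};

// Hypothetical mixin that contributes a count() accessor.
class Counted {
public:
    explicit Counted(int value) : mCount(value) {}
    int count() const { return mCount; }
private:
    int mCount;
};

// Same pattern as MyClass: inherit from every type in the pack and
// copy-construct each base from the matching constructor argument.
template <typename... Mixins>
class Composite : public Mixins... {
public:
    explicit Composite(const Mixins&... mixins) : Mixins(mixins)... {}
};

// Inheritance can be checked at compile time.
static_assert(std::is_base_of_v<Named, Composite<Named, Counted>>);
static_assert(!std::is_base_of_v<Counted, Composite<Named>>);
```

For example, Composite<Named, Counted> both(Named("grid"), Counted(3)); gives both.name() == "grid" and both.count() == 3.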

Fold expressions

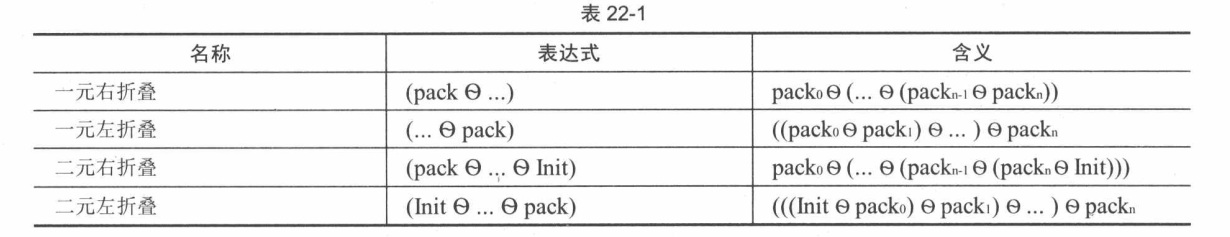

C++17 adds support for fold expressions, which make it much easier to work with parameter packs in variadic templates. Table 22-1 lists the four supported kinds of folds. In this table, the operator placeholder can be any of the following operators: +, -, *, /, %, ^, &, |, <<, >>, +=, -=, *=, /=, %=, ^=, &=, |=, <<=, >>=, =, ==, !=, <, >, <=, >=, &&, ||, the comma operator (,), .*, and ->*.

Let's look at some examples. Earlier, the processValues() function template was defined recursively, as follows:

void processValues() { /* Nothing to do in this base case. */ }

template<typename T1, typename... Tn>
void processValues(T1 arg1, Tn... args)
{
    handleValue(arg1);
    processValues(args...);
}

Because it is defined recursively, it needs a base case to stop the recursion. With a fold expression, specifically a unary right fold, it can be implemented as a single function template, and no base case is needed:

template<typename... Tn>
void processValues(const Tn&... args)
{
    (handleValue(args), ...);
}

Basically, the three dots in the function body trigger the fold. That line is expanded to call handleValue() for each argument in the parameter pack, with the calls separated by commas. For example, suppose args is a parameter pack with three arguments, a1, a2, and a3. The unary right fold then expands as follows:

(handleValue(a1), (handleValue(a2), handleValue(a3)));

Here is another example. The printValues() function template writes all arguments to the console, separated by line breaks.

template<typename... Values>

void printValues(const Values&... values)

{

((cout << values << endl), ...);

}

Suppose values is a parameter pack with three arguments, v1, v2, and v3. The unary right fold then expands as follows:

((cout << v1 << endl), ((cout << v2 << endl), (cout << v3 << endl)));

Any number of arguments can be used when calling printValues(), as follows:

printValues(1, "test", 2.34);
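To check the order in which the comma fold writes its output, the stream can be made a parameter. The sketch below is a hypothetical variant: printValuesTo() is printValues() with a std::ostream parameter added, and printValuesToString() captures the result in a std::ostringstream. It writes '\n' instead of endl, which produces the same text without flushing.

```cpp
#include <cassert>
#include <ostream>
#include <sstream>
#include <string>

// printValues() with the target stream as a parameter (an assumption of this sketch).
// The unary right fold over the comma operator writes the values in order, one per line.
template <typename... Values>
void printValuesTo(std::ostream& os, const Values&... values)
{
    ((os << values << '\n'), ...);
}

// Hypothetical convenience wrapper that captures the output in a string.
template <typename... Values>
std::string printValuesToString(const Values&... values)
{
    std::ostringstream out;
    printValuesTo(out, values...);
    return out.str();
}
```

For example, printValuesToString(1, "test", 2.34) returns "1\ntest\n2.34\n".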

In these examples, the fold is used with the comma operator, but it can be used with any of the operators listed earlier. For example, the following code defines a variadic function template that uses a binary left fold to calculate the sum of all the values passed to it. A binary left fold always requires an Init value (see Table 22-1). Therefore, sumValues() has two template type parameters: a normal one that specifies the type of Init, and a parameter pack that can accept zero or more arguments.

template<typename T, typename... Values>
double sumValues(const T& init, const Values&... values)
{
    return (init + ... + values);
}

Suppose values is a parameter pack with three arguments, v1, v2, and v3. The binary left fold then expands as follows:

return (((init + v1) + v2) + v3);

The usage of sumValues() function template is as follows:

cout << sumValues(1, 2, 3.3) << endl;
cout << sumValues(1) << endl;

The function template requires at least one argument, so the following code does not compile:

cout << sumValues() << endl;
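Because fold expressions are ordinary expressions, they also work in constexpr functions, so their expansions can be checked at compile time with static_assert. The helpers below are hypothetical: sumLeft() is sumValues() with a deduced return type instead of double, allPositive() folds over &&, and the two subtract functions show that left and right folds give different results for a non-associative operator such as -.

```cpp
// Binary left fold, same shape as sumValues(): (((init + v1) + v2) + v3).
template <typename T, typename... Values>
constexpr auto sumLeft(const T& init, const Values&... values)
{
    return (init + ... + values);
}

// Unary right fold over &&. For && an empty pack is allowed and yields true.
template <typename... Values>
constexpr bool allPositive(const Values&... values)
{
    return ((values > 0) && ...);
}

// Unary left fold: ((v1 - v2) - v3).
template <typename... Values>
constexpr int subtractLeft(const Values&... values)
{
    return (... - values);
}

// Unary right fold: (v1 - (v2 - v3)).
template <typename... Values>
constexpr int subtractRight(const Values&... values)
{
    return (values - ...);
}

static_assert(sumLeft(1, 2, 3) == 6);
static_assert(subtractLeft(10, 3, 2) == 5);
static_assert(subtractRight(10, 3, 2) == 9);
```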

Template metaprogramming

This section touches on template metaprogramming. It is a very complex subject, and there are entire books about it that explain all the details. This book does not have the space to cover all of them; instead, this section explains the most important concepts with the help of a few examples. The goal of template metaprogramming is to perform some computations at compile time instead of at run time. Template metaprogramming is basically a small programming language on top of C++. The first example is a simple one: it calculates the factorial of a number at compile time and makes the result available as a simple constant at run time.

Compile time factorial

The following code demonstrates how to calculate the factorial of a number at compile time. It uses template recursion, described earlier in this chapter, so it needs a recursive template and a base template that stops the recursion. By mathematical definition, the factorial of 0 is 1, so that is the base case:

template<unsigned char f>
class Factorial
{
public:
    static const unsigned long long val = (f * Factorial<f - 1>::val);
};

template<>
class Factorial<0>
{
public:
    static const unsigned long long val = 1;
};

int main()
{
    cout << Factorial<6>::val << endl;
    return 0;
}

This calculates the factorial of 6, mathematically written as 6!, which is 1×2×3×4×5×6, or 720.

Note:

Keep in mind that the factorial is calculated at compile time. At run time, you access the compile-time result through ::val, which is just a static constant value.

This specific example calculates the factorial of a number at compile time, but template metaprogramming is not required for that. Since the introduction of constexpr, it can be written without templates, as follows. The template implementation is nevertheless an excellent example of implementing recursive templates.

constexpr unsigned long long factorial(unsigned char f)
{
    if (f == 0) {
        return 1;
    } else {
        return f * factorial(f - 1);
    }
}

If you call it as follows, the value is calculated at compile time:

constexpr auto f1 = factorial(6);

However, don't forget the constexpr in this statement. If you write the following instead, the calculation is no longer guaranteed to happen at compile time; it can be done at run time!

auto f1 = factorial(6);

With the template metaprogramming version, you cannot make such a mistake: the calculation always happens at compile time.
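One way to make sure a value really is computed at compile time, whichever version you use, is to use it where only a constant expression is allowed, such as in a static_assert. The following sketch restates both versions from above (the constexpr function is written with a conditional operator and an explicit cast, a stylistic choice) and checks them that way; the code does not compile if either value cannot be computed at compile time.

```cpp
// Template-recursion version, as in the text.
template <unsigned char f>
class Factorial {
public:
    static const unsigned long long val = f * Factorial<f - 1>::val;
};

// Base case stops the recursion.
template <>
class Factorial<0> {
public:
    static const unsigned long long val = 1;
};

// constexpr-function version.
constexpr unsigned long long factorial(unsigned char f)
{
    return f == 0 ? 1 : f * factorial(static_cast<unsigned char>(f - 1));
}

// static_assert requires constant expressions, so both results
// must be available at compile time for these lines to compile.
static_assert(Factorial<6>::val == 720);
static_assert(factorial(6) == 720);
```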

Loop unrolling

The second example of template metaprogramming unrolls a loop at compile time instead of executing the loop at run time. Note that loop unrolling should be used only when you really need it, because the compiler is usually smart enough to unroll loops that can be unrolled on its own. This example again uses template recursion, because it needs to do something in a loop at compile time. On each recursion, the Loop template instantiates itself with i - 1. When it reaches 0, the recursion stops.

template<int i>

class Loop

{

public:

template <typename FuncType>

static inline void Do(FuncType func)

{

Loop<i - 1>::Do(func);

func(i);

}

};

template<>

class Loop<0>

{

public:

template <typename FuncType>

static inline void Do(FuncType /* func */) { }

};

You can use the Loop template as follows:

void DoWork(int i) { cout << "DoWork(" << i << ")" << endl; }

int main()
{
    Loop<3>::Do(DoWork);
}

This code causes the compiler to unroll the loop and call DoWork() three times in a row. The output of this program is as follows:

DoWork(1)
DoWork(2)
DoWork(3)

With a lambda expression, you can use a version of DoWork() that accepts more than one parameter:

void DoWork2(string str, int i)
{
    cout << "DoWork2(" << str << ", " << i << ")" << endl;
}

int main()
{
    Loop<2>::Do([](int i) { DoWork2("TestStr", i); });
}

The code first implements a function that accepts a string and an int. The main() function uses a lambda expression that calls DoWork2() on each iteration, passing the fixed string "TestStr" as the first argument. If you compile and run this code, the output should be as follows:

DoWork2(TestStr, 1)
DoWork2(TestStr, 2)

Print tuple

This example uses template metaprogramming to print the elements of a std::tuple. Tuples are explained in Chapter 20. A tuple can store any number of values, each with its own specific type. A tuple has a fixed size and fixed value types, both determined at compile time. However, tuples do not provide any built-in mechanism to iterate over their elements. The following example shows how to iterate over the elements of a tuple at compile time using template metaprogramming. As with most template metaprogramming, this example uses template recursion. The tuple_print class template has two template parameters: the tuple type, and an integer initialized to the size of the tuple. Its constructor recursively instantiates the template, decrementing the integer at each step. When the integer reaches 0, a partial specialization of tuple_print stops the recursion. The main() function demonstrates how to use this tuple_print class template.

template<typename TupleType, int n>

class tuple_print

{

public:

tuple_print(const TupleType& t)

{

tuple_print<TupleType, n - 1> tp(t);

cout << get<n - 1>(t) << endl;

}

};

template<typename TupleType>

class tuple_print<TupleType, 0>

{

public:

tuple_print(const TupleType&) { }

};

int main()

{

using MyTuple = tuple<int, string, bool>;

MyTuple t1(16, "Test", true);

tuple_print<MyTuple, tuple_size<MyTuple>::value> tp(t1);

}

If you look at the main() function, you will notice that the line using the tuple_print class template is a bit complicated. It requires both the exact type of the tuple and its size as template arguments. You can simplify this by introducing a helper function template that deduces the template arguments automatically. The simplified implementation is as follows:

template<typename TupleType, int n>

class tuple_print_helper

{

public:

tuple_print_helper(const TupleType& t)

{

tuple_print_helper<TupleType, n - 1> tp(t);

cout << get<n - 1>(t) << endl;

}

};

template<typename TupleType>

class tuple_print_helper<TupleType, 0>

{

public:

tuple_print_helper(const TupleType&) { }

};

template<typename T>

void tuple_print(const T& t)

{

tuple_print_helper<T, tuple_size<T>::value> tph(t);

}

int main()

{

auto t1 = make_tuple(167, "Testing", false, 2.3);

tuple_print(t1);

}

The first change is that the original tuple_print class template is renamed to tuple_print_helper. The code then implements a small function template called tuple_print(). It accepts the tuple's type as a template type parameter and a reference to the tuple itself as a function parameter. The body of that function instantiates the tuple_print_helper class template. The main() function shows how to use this simplified version. Because you no longer need to spell out the exact type of the tuple, you can use make_tuple() together with the auto keyword. Calling the tuple_print() function template is very simple:

tuple_print(t1);

You don't need to specify the function template's template arguments, because the compiler deduces them automatically from the argument you pass.

- constexpr if

C++17 introduces constexpr if. These are if statements whose condition is evaluated at compile time instead of at run time. If a branch of a constexpr if statement is never taken, it is discarded; inside a template, a discarded branch is not even instantiated. This can simplify many template metaprogramming techniques, as well as the SFINAE technique discussed later in this chapter. For example, you can use constexpr if to simplify the previous code for printing the elements of a tuple, as follows. Note that the base case of the template recursion is no longer needed, because the constexpr if statement stops the recursion.

template<typename TupleType, int n>
class tuple_print_helper
{
public:
    tuple_print_helper(const TupleType& t)
    {
        if constexpr(n > 1) {
            tuple_print_helper<TupleType, n - 1> tp(t);
        }
        cout << get<n - 1>(t) << endl;
    }
};

template<typename T>
void tuple_print(const T& t)
{
    tuple_print_helper<T, tuple_size<T>::value> tph(t);
}

You can even get rid of the class template altogether and replace it with a simple function template called tuple_print_helper():

template<typename TupleType, int n>
void tuple_print_helper(const TupleType& t)
{
    if constexpr(n > 1) {
        tuple_print_helper<TupleType, n - 1>(t);
    }
    cout << get<n - 1>(t) << endl;
}

template<typename T>
void tuple_print(const T& t)
{
    tuple_print_helper<T, tuple_size<T>::value>(t);
}

This can be simplified even further by combining the two functions into one:

template<typename TupleType, int n = tuple_size<TupleType>::value>
void tuple_print(const TupleType& t)
{
    if constexpr(n > 1) {
        tuple_print<TupleType, n - 1>(t);
    }
    cout << get<n - 1>(t) << endl;
}

You call it exactly as before:

auto t1 = make_tuple(167, "Testing", false, 2.3);
tuple_print(t1);

- Using compile-time integer sequences with folding

C++ supports compile-time integer sequences with std::integer_sequence, defined in <utility>. A common use case in template metaprogramming is to generate a compile-time sequence of indices, that is, a sequence of integers of type size_t. For this, you can use the helper std::index_sequence. You can use std::index_sequence_for to generate an index sequence with the same length as a given parameter pack. The following implementation of the tuple printer uses a variadic template, a compile-time index sequence, and a C++17 fold expression:

template<typename Tuple, size_t... Indices>

void tuple_print_helper(const Tuple& t, index_sequence<Indices...>)

{

((cout << get<Indices>(t) << endl), ...);

}

template<typename... Args>

void tuple_print(const tuple<Args...>& t)

{

tuple_print_helper(t, index_sequence_for<Args...>());

}

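Take a four-element tuple such as t1 = make_tuple(167, "Testing", false, 2.3). When tuple_print() is called with it, Indices is the pack 0, 1, 2, 3. The unary right fold expression in the tuple_print_helper() function template then expands to the following:

((cout << get<0>(t) << endl), ((cout << get<1>(t) << endl), ((cout << get<2>(t) << endl), (cout << get<3>(t) << endl))));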

Type traits

Type traits allow you to make decisions based on types at compile time. For example, you can write a template that requires a type derived from a certain type, a type convertible to a certain type, an integral type, and so on. The C++ standard defines a number of helper classes for this purpose. All type-trait-related functionality is defined in the <type_traits> header file. Type traits are divided into several categories. The following list shows some examples of the available type traits in each category. For a complete list, consult a Standard Library reference (see Appendix B).

➤ primary type categories

o is_void

o is_integral

o is_floating_point

o is_pointer

o ...

➤ type properties

o is_const

o is_literal_type

o is_polymorphic

o is_unsigned

o is_constructible

o is_copy_constructible

o is_move_constructible

o is_assignable

o is_trivially_copyable

o is_swappable*

o is_nothrow_swappable*

o has_virtual_destructor

o has_unique_object_representations*

o ...

➤ reference modification

o remove_reference

o add_lvalue_reference

o add_rvalue_reference

➤ pointer modification

o remove_pointer

o add_pointer

➤ composite type category

o is_reference

o is_object

o is_scalar

o ...

➤ type relations

o is_same

o is_base_of

o is_convertible

o is_invocable*

o is_nothrow_invocable*

o ...

➤ const-volatile modifications

o remove_const

o add_const

o ...

➤ sign modifications

o make_signed

o make_unsigned

➤ array modification

o remove_extent

o remove_all_extents

➤ logical operator traits

o conjunction*

o disjunction*

o negation*

➤ other transformations

o enable_if

o conditional

o invoke_result*

o ...

Type traits marked with an asterisk are available only in C++17 and later. Type traits are a fairly advanced C++ feature. The list above shows only some of the type traits defined by the C++ standard, yet it already makes clear that this book cannot explain every detail of type traits. This section presents just a few use cases to show how type traits can be used.

- Using type categories

Before giving an example of a template that uses type traits, you should first understand how classes such as is_integral work. The C++ standard defines an integral_constant class as follows:

template <class T, T v>
struct integral_constant {
    static constexpr T value = v;
    using value_type = T;
    using type = integral_constant<T, v>;
    constexpr operator value_type() const noexcept { return value; }
    constexpr value_type operator()() const noexcept { return value; }
};

It also defines the bool_constant, true_type, and false_type aliases:

template <bool B>
using bool_constant = integral_constant<bool, B>;
using true_type = bool_constant<true>;
using false_type = bool_constant<false>;

This defines two types: true_type and false_type. Accessing true_type::value yields true, and accessing false_type::value yields false. You can also access true_type::type, which is true_type itself; the same holds for false_type. Classes such as is_integral and is_class derive from either true_type or false_type. For example, is_integral is specialized for bool as follows:

template<> struct is_integral<bool> : public true_type { };

This allows you to write is_integral<bool>::value, which evaluates to true. Note that you don't need to write these specializations yourself; they are part of the Standard Library. The following code demonstrates the simplest use of type categories:

if (is_integral<int>::value) {
    cout << "int is integral" << endl;
} else {
    cout << "int is not integral" << endl;
}

if (is_class<string>::value) {
    cout << "string is a class" << endl;
} else {
    cout << "string is not a class" << endl;
}

This example uses is_integral to check whether int is an integral type, and is_class to check whether string is a class. The output is as follows:

int is integral
string is a class

For every trait that has a value member, C++17 adds a variable template with the same name as the trait followed by _v. Instead of writing some_trait<T>::value, you can write some_trait_v<T>. Examples are is_integral_v<T>, is_const_v<T>, and so on. Here is the previous example rewritten to use these variable templates:

if (is_integral_v<int>) {
    cout << "int is integral" << endl;
} else {
    cout << "int is not integral" << endl;
}

if (is_class_v<string>) {
    cout << "string is a class" << endl;
} else {
    cout << "string is not a class" << endl;
}