Catalog

1. Three devices for high concurrency systems

2. Current Limiting Algorithms

Comparison of 2.5 Leakage Bucket and Token Bucket Algorithms

3. Scenarios for application of current-limiting algorithms

3.4 Nginx Current Limiting Module

1. Three devices for high concurrency systems

Three devices for protecting high-concurrency systems: current limiting, fuse downgrading, and caching.

Limiting current is a means of restricting the availability of a system in the face of huge instantaneous traffic (commodity seconds killing, etc.).

Fuse downgrades are commonly used together to ensure solutions that are available to the core business in some high-traffic business scenarios (double 11 double 12, etc.). (Downgrading is active, and fusing is passive. Fusing means temporarily cutting off requests and preventing system avalanches when upstream services call downstream services when they become unavailable; Downgrading means in some cases guaranteeing core business and temporarily closing edge business services.)

Caching is used to alleviate the query pressure on the database by adding a cache layer to access some hot data and core business data. Redis is often used as the cache layer in high concurrent systems. (redundant data, space for time)

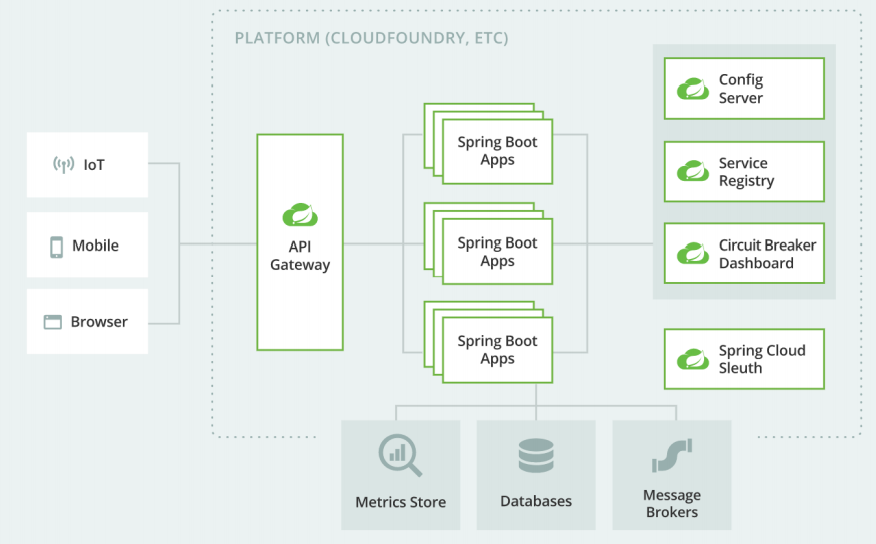

For a microservice architecture, two aspects of service invocation and external service provision are considered.

Intra-service calls can use thread pools to isolate resources, limit flow to callers, and downgrade services to ensure that services are available. (For example, a Hystrix fuse not only satisfies the degradation of the fuse, but also sets the number of threads at call time as a solution for resource isolation and in-service throttling)

Service providers can use either an application gateway or a global gateway to restrict flow to the system as a whole. (For example, SpringGateway acts as an application gateway to do some flow-limiting operations through a Filter; Nginx acts as a global gateway and reverse proxy server to load-balance and limit the application gateway)

2. Current Limiting Algorithms

For a microservice architecture, the overall throttling is arranged in a global gateway, where the global gateway can use Sentinel, Nginx, Kong, and so on. The underlying implementation is actually based on the current-limiting algorithm.

2.1 Fixed Window algorithm

The fixed-window algorithm is also known as the counter algorithm, which maintains the count value in a unit time (such as per second), increments the count value by one whenever a request passes, and rejects other requests in a unit time when the count value exceeds a preset threshold. If the unit time has ended, the counter is cleared and the next round of counts is turned on.

For example, to limit 100 requests per second, the Java code is as follows:

// Fixed window algorithm Java code implementation

public class FixedWindow {

private long time = new Date().getTime();

private Integer count = 0; // Counter

private final Integer max = 100; // Request Threshold

private final Integer interval = 1000; // Window Size (Fixed Pane)

public boolean trafficMonitoring() {

long nowTime = new Date().getTime();

if (nowTime < time + interval) {

// Within the time window

count++;

return max > count;

} else {

time = nowTime; // Open a new window

count = 1; // Initialize counter, this request belongs to the currently open window

return true;

}

}

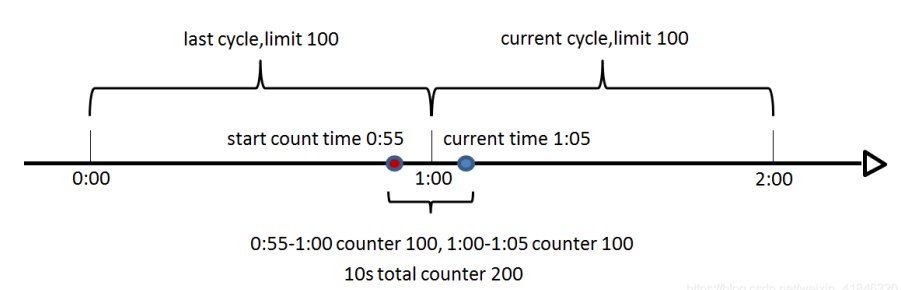

}However, there is a problem here. We set the threshold of 100 requests allowed to pass in one second. If a user sends 100 requests in the last few milliseconds of the time window and then sends 100 requests at the beginning of the next time window, the user actually requests 200 requests in one second, obviously exceeding the threshold but not being streamed. In fact, this is the flow spike problem, so how to solve the critical value problem?

2.2 Sliding Window algorithm

Counter sliding window method was born to solve the above problem of fixed window count. Sliding window divides windows based on time. (equivalent to dividing a fixed window)

As mentioned earlier, fixed windows have a critical value problem. To solve this critical value problem, it is obvious that only one window can not solve the problem. Let's say we still have 200 requests allowed in one second, but here we need to divide the time of one second into multiple squares, let's say five squares (the more squares there are, the smoother the traffic transitions). The time size of each window is 200 milliseconds, and every 200 milliseconds, we move the window forward one space.

Sliding window restriction is actually a variant of counter fixed window algorithm. The smoothness of the flow transition depends on the number of window cells we set, that is, the statistical time interval. The more cells there are, the more accurate the statistics will be. However, the more partitioned windows are, the more time consuming maintenance windows will be, which does not solve the problem of traffic spikes very well.

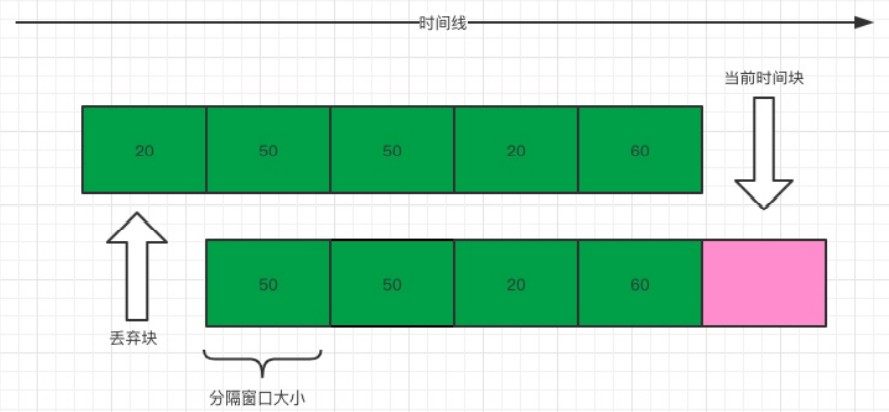

2.3 Leaky Bucket algorithm

To better solve the traffic spike problem, the leaky bucket algorithm can be used. The name of the funnel algorithm is very impressive. The algorithm has a container inside, similar to the funnel used in daily life. When requested, it is equivalent to water pouring into the funnel, and then flowing out slowly and evenly from the lower small opening. No matter how much traffic is above, the flow rate below will remain constant.

Because the processing speed is fixed and the speed of requests coming in is unknown, there may be many requests coming in suddenly. Requests that cannot be processed before they are put in the bucket. Since it is a bucket, there must be a capacity limit. If the bucket is full, new requests will be discarded.

In terms of algorithm implementation, you can prepare a queue to hold requests, and periodically get requests from the queue and execute them through a thread pool to get multiple concurrent executions at once.

However, this algorithm also has some drawbacks: it cannot handle short burst traffic.

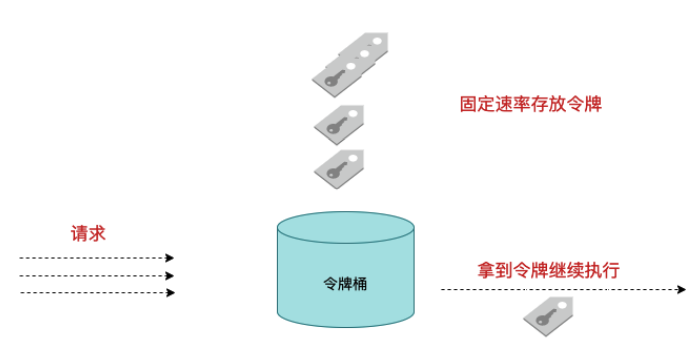

2.4 Token Bucket algorithm

The Token Bucket algorithm is an improvement of the leaky bucket algorithm, which can limit the rate of request calls, while the Token Bucket algorithm can limit the average rate of calls (the rate at which tokens are generated is fixed) and also allow some burst calls.

In the Token Bucket algorithm, a bucket exists to hold a fixed number of tokens. There is a mechanism in the algorithm to place tokens in buckets at a certain rate. Each request invocation requires a token to be acquired first, and only when a token is acquired will there be a chance to continue execution; otherwise, you choose to wait for the available token or reject it directly.

The Token Bucket algorithm generates tokens at a certain rate and puts them in the Token Bucket. When accessing to enter the system, tokens need to be obtained from the Token Bucket. Tokens can be entered without being discarded. Since the number of tokens in a token bucket is continuously generated, when the amount of access is small, tokens can be retained up to the maximum of the token bucket, so that when a short burst of access comes in, the accumulated number of tokens can handle this problem. When the amount of access continues to flow in a large amount, the rate at which tokens are generated is fixed and eventually becomes a fixed-flow process similar to the funnel algorithm.

Comparison of 2.5 Leakage Bucket and Token Bucket Algorithms

| Leaky bucket | Token Bucket | |

| Algorithm implementation | Fixed-rate outflow requests, and incoming requests reject new requests when they reach the bucket capacity. | Add tokens at a fixed rate, and process requests by looking at the number of tokens in the bucket, which is 0 to reject new requests. |

| Burst Access | Not allowed, only smooth access is supported | Allow some burst traffic |

3. Scenarios for application of current-limiting algorithms

Flow-limiting algorithms are implemented in several frameworks, and several common frameworks and code implementations are described here. Currently, the main stream limiting algorithms are leak buckets and token buckets.

3.1 Google Guava

Google Guava is similar to the Apache Commons toolset. The Guava project contains several core libraries that are extensively dependent on by Google's Java projects, such as collections, caching, native type support, concurrency libraries, common annotations, string processing, I/O, and so on.

Guava provides a current limiting tool, RateLimiter.

RateLimiter uses the token bucket algorithm. RateLimiter throws tokens into the bucket at a certain frequency so that the thread gets the token to execute. For example, if you want your application QPS not to exceed 1000, RateLimiter will throw 1000 tokens into the bucket every second after setting a rate of 1000. RateLimiter is often used to limit the rate of access to some physical or logical resources.

Java code implementation:

// Simulate RateLimiter Current Limit

public class TestRateLimiter {

public static void main(String[] args) {

// 2 licenses per second

RateLimiter rateLimiter = RateLimiter.create(2.0);

List<Runnable> tasks = new ArrayList<Runnable>();

for (int i = 0; i < 10; i++) {

tasks.add(new UserRequest(i));

}

ExecutorService threadPool = Executors.newCachedThreadPool();

for (Runnable runnable : tasks) {

System.out.println("Wait time:" + rateLimiter.acquire());

threadPool.execute(runnable);

}

}

// Simulate user request

private static class UserRequest implements Runnable {

private int id;

public UserRequest(int id) {

this.id = id;

}

@Override

public void run() {

System.out.println("userQuestID:"+id);

}

}

}Note here that there is a distinction between Semaphore. Semaphore limits the number of concurrent access rather than the usage rate.

3.2 SpringCloud Gateway

The Spring cloud gateway officially provides a token bucket-based throttling algorithm based on the internal filter factory RequestRateLimiterGatewayFilterFactory implementation. The current implementation of RequestRateLimiterGatewayFilterFactory relies on Redis, so we will also introduce Redis's dependencies. Next, take a look at the implementation steps.

application.yml:

spring:

application:

name: gateway-limiter

cloud:

gateway:

routes:

- id: limit_route

uri: http://127.0.0.1:80/get

# Predicate: Forward to user microservice only when and only when requested at the time After configured

predicates:

- After=2021-02-26T00:00:00+08:00[Asia/Shanghai]

filters:

- name: RequestRateLimiter

args:

key-resolver: '#{@userKeyResolver}'

redis-rate-limiter.replenishRate: 50

redis-rate-limiter.burstCapacity: 300

redis:

host: localhost

port: 6379

database: 0In the configuration file above, the redis information is configured and the RequestRateLimiter's restriction filter is configured, which requires three parameters:

- burstCapacity: Total capacity of the token bucket.

- replenishRate: Average rate of token bucket filling per second.

- key-resolver: The name of the Bean object of the parser used for the current-limiting key. It uses a SpEL expression to get Bean objects from the Spring container based on #{@beanName}.

Configuration class KeyResolverConfiguration.java: (Mono in responsive programming is used here)

// Current Limiting Configuration Class

@Configuration

public class KeyResolverConfiguration {

/**

* Interface Current Limit:

* Gets the uri of the request address as the flow-limiting key.

*/

@Bean

public KeyResolver pathKeyResolver() {

return new KeyResolver() {

@Override

public Mono<String> resolve(ServerWebExchange exchange) {

return Mono.just(exchange.getRequest().getPath().toString());

}

};

}

/**

* User Limit:

* Gets the request user id as a flow-limiting key.

*/

@Bean

public KeyResolver userKeyResolver() {

return exchange -> Mono.just(exchange.getRequest().getQueryParams().getFirst("userId"));

}

/**

* IP Limit current:

* Gets the requesting user ip as the flow-limiting key.

*/

@Bean

public KeyResolver hostAddrKeyResolver() {

return exchange -> Mono.just(exchange.getRequest().getRemoteAddress().getHostName());

}

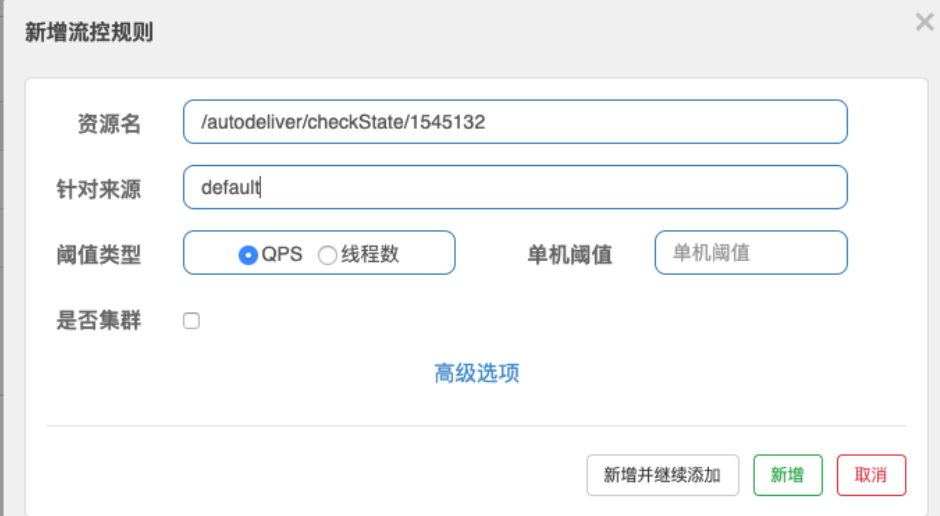

}3.3 Alibab Sentinel

Sentinel is Ali's open source current limiting framework and can be used as an application gateway instead of SpringCLoud Gateway. It implements service current limiting and fuse degradation internally, can also replace Hystrix, and provides a Dashboard dashboard Web interface.

Sentinel does not have to be complex to configure, but rather integrates existing frameworks to limit the flow of resources in the Dashboard dashboard.

There are two key concepts in Sentinel:

Resource: It can be anything in a Java application, such as services provided by the application, other services invoked by the application that should be provided, or even pieces of code. The API interface we are requesting is the resource.

Rules: Rules set around the real-time state of a resource can include traffic control rules, break downgrade rules, and system protection rules. All rules can be dynamically adjusted in real time.

Sentinel's throttling has two control dimensions: QPS and number of threads.

- QPS: (Number of requests per second) Limit flow when the QPS calling the resource reaches the threshold

- Number of threads: Limit flow when the number of threads invoking the resource reaches the threshold (threads take a long time to process requests, and when traffic peaks arrive, many thread resources are consumed, which can accumulate and ultimately render the service unavailable, the upstream service unavailable, and eventually the service avalanche)

Processing in Sentinel is a chain of responsibility where the logic of different functions is abstracted into different ProcessorSlot s, such as FlowSlot with limited streams, LogSot with logging, StatisticSlot with data statistics. Focus on stream-limiting com.alibaba.csp.sentinel.slots.block.flow.FlowSlot

public void entry(Context context, ResourceWrapper resourceWrapper, DefaultNode node, int count,

boolean prioritized, Object... args) throws Throwable {

// Whether to trigger current limiting check

checkFlow(resourceWrapper, context, node, count, prioritized);

// Continue to the next node

fireEntry(context, resourceWrapper, node, count, prioritized, args);

}

public void checkFlow(Function<String, Collection<FlowRule>> ruleProvider, ResourceWrapper resource,

Context context, DefaultNode node, int count, boolean prioritized) throws BlockException {

Collection<FlowRule> rules = ruleProvider.apply(resource.getName());

for (FlowRule rule : rules) { // Multiple Current Limiting Rule Checks

if (!canPassCheck(rule, context, node, count, prioritized)) {

throw new FlowException(rule.getLimitApp(), rule);

}

}

}

// canPassCheck -> passLocalCheck

private static boolean passLocalCheck(FlowRule rule, Context context, DefaultNode node, int acquireCount,

boolean prioritized) {

return rule.getRater().canPass(selectedNode, acquireCount, prioritized);

}The canPass check is a check of the restriction rules. There are several implementation classes:

- DefaultController: Token Bucket

- RateLimiterController: leaky bucket

- WarmUpController: token bucket in preheating mode

- WarmUpRateLimiterController: leaky bucket in preheating mode

When the system is idle for a long time, when the traffic increases suddenly, pulling the system up to the high water level may instantly crush the system, such as the seconds killing module of e-commerce websites. Through Warm Up mode (preheating mode), let the flow through slowly increase, after the set preheating time, reach the set value of the system processing request rate. Warm Up mode increases slowly from one-third of the set QPS threshold to the set QPS value by default.

The detailed implementation process is no longer analyzed, and its principle is the algorithm principle described earlier.

3.4 Nginx Current Limiting Module

Nginx is known to be modular, which facilitates the enrichment and expansion of functions, among which the current limiting function is the corresponding module.

Nginx provides two current limiting methods, one is to control the rate and the other is to control the number of concurrent connections. Similar to QPS and number of threads in Sentinel.

- ngx_http_limit_req_module: leaky bucket algorithm is used to control rate (support burst traffic)

- ngx_http_limit_conn_module: controls the number of concurrent connections

As for the use of child shoes in the current limiting module, they can Baidu or check the official documents: