1. Introduction to the scheduler

The scheduler uses the Kubernetes watch mechanism to discover newly created Pods in the cluster that have not yet been scheduled to a Node. It then schedules each unscheduled Pod it finds onto a suitable Node to run.

kube-scheduler is the default scheduler for Kubernetes clusters and is part of the cluster control plane.

If you really want or need to, the design of kube-scheduler allows you to write your own scheduling component and use it in place of the original kube-scheduler.
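As a minimal sketch of how a Pod could be handed to a self-written scheduler, the manifest below sets spec.schedulerName. The names nginx-custom-scheduler and my-scheduler are hypothetical, and the sketch assumes a scheduler registered under that name is already running in the cluster; if none is, the Pod simply stays Pending.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-custom-scheduler   # hypothetical Pod name
spec:
  # Hypothetical scheduler name. When this field is omitted,
  # the Pod is handled by the default scheduler (default-scheduler).
  schedulerName: my-scheduler
  containers:
  - name: nginx
    image: nginx
```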

Factors considered when making scheduling decisions include individual and collective resource requests, hardware / software / policy constraints, affinity and anti-affinity requirements, data locality, interference between workloads, and so on.

For the default scheduling policies, see: https://kubernetes.io/zh/docs/concepts/scheduling/kube-scheduler/

Scheduling framework: https://kubernetes.io/zh/docs/concepts/configuration/scheduling-framework/

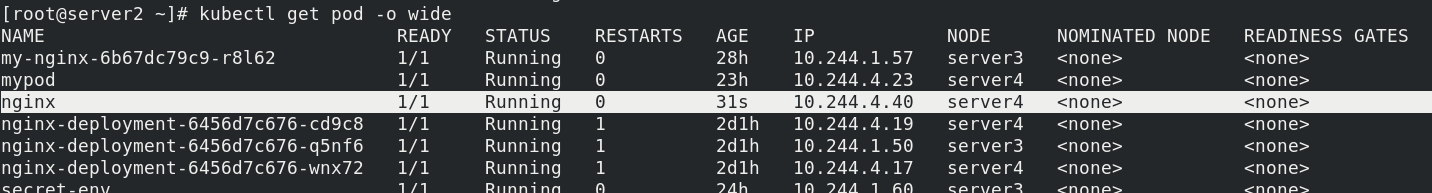

nodeName

nodeName is the simplest form of node selection constraint, but it is generally not recommended. If nodeName is specified in the PodSpec, it takes precedence over the other node selection methods.

Some limitations of using nodeName to select nodes:

- If the specified node does not exist, the Pod will not run.

- If the specified node does not have the resources to accommodate the Pod, the Pod fails.

- Node names in a cloud environment are not always predictable or stable.

Example:

vim pod.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
      nodeName: server4

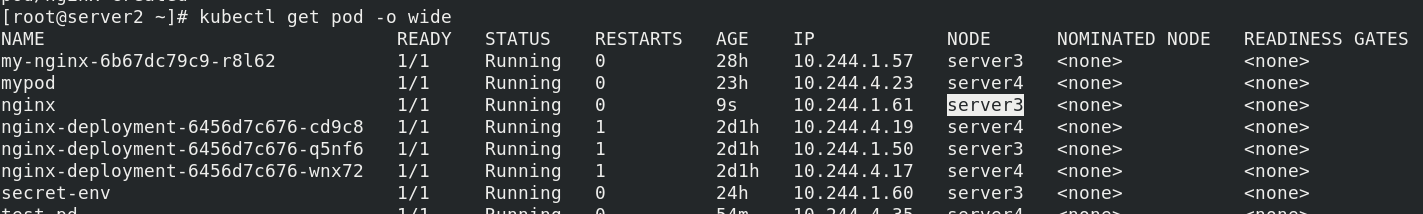

application

nodeSelector

nodeSelector is the simplest recommended form of node selection constraint.

Label the selected node:

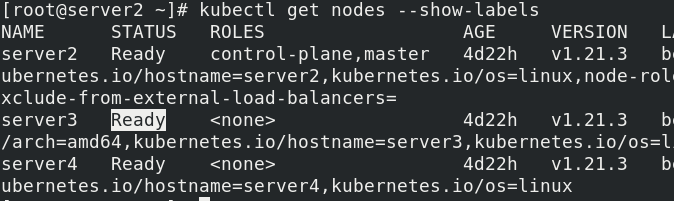

kubectl label nodes server3 disktype=ssd

kubectl get nodes --show-labels

Add a nodeSelector field to the Pod configuration:

vim pod1.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx
      labels:
        env: test
    spec:
      containers:
      - name: nginx
        image: nginx
        imagePullPolicy: IfNotPresent
      nodeSelector:
        disktype: ssd

Create the Pod and check which node it runs on.

Affinity and anti-affinity

nodeSelector provides a very simple way to constrain a Pod to nodes with specific labels. The affinity / anti-affinity feature greatly expands the types of constraints you can express.

A rule can be a "soft" preference rather than a hard requirement, so if the scheduler cannot satisfy it, the Pod is still scheduled.

You can also constrain against the labels of Pods already running on a node, rather than the labels of the node itself, to control which Pods can or cannot be placed together.

Node affinity pod example

vim pod2.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: node-affinity
    spec:
      containers:
      - name: nginx
        image: nginx
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/hostname
                operator: In
                values:
                - server4

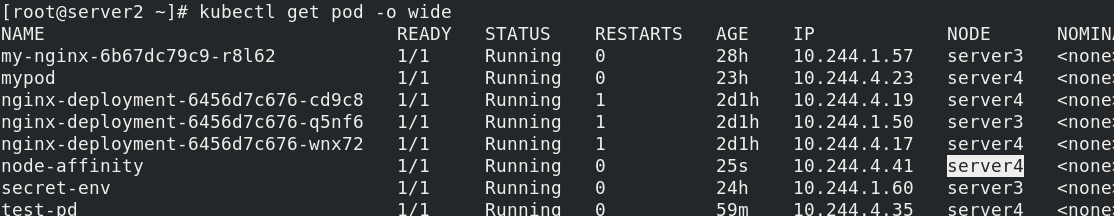

requiredDuringSchedulingIgnoredDuringExecution is a hard requirement that must be satisfied. Here the key is kubernetes.io/hostname and the value is server4, so you can see that this Pod is running on server4.

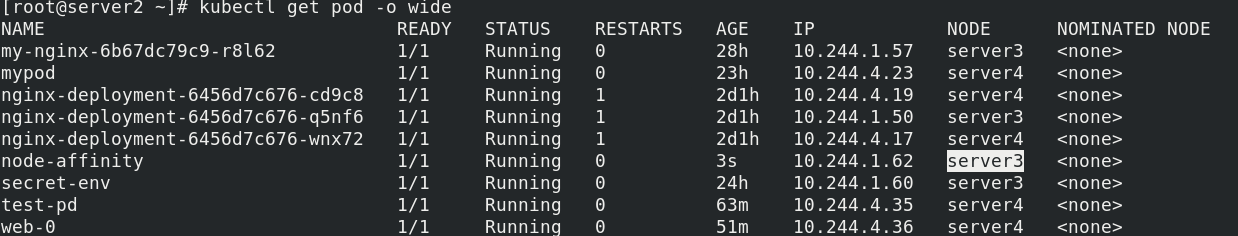

Next, in pod2.yaml, add server3 to the required values and append a preferred ("soft") rule after them:

                - server3
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 1
            preference:
              matchExpressions:
              - key: disktype
                operator: In
                values:
                - ssd

The required rule now uses the key kubernetes.io/hostname with the values server4 and server3, and the preferred rule uses the key disktype with the value ssd. Because server3 was given the label disktype=ssd in the earlier experiment, this Pod runs on server3. If you want to express several preferences, you can rank them by weight.
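To illustrate ranking by weight, here is a sketch with two preferred terms. The Pod name, the zone value zone-a, and the specific weights are assumptions chosen for illustration. Weights range from 1 to 100, and for each node the scheduler adds up the weights of the terms that node satisfies when scoring it.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: node-affinity-weighted     # hypothetical Pod name
spec:
  containers:
  - name: nginx
    image: nginx
  affinity:
    nodeAffinity:
      preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 80                 # stronger preference: SSD nodes
        preference:
          matchExpressions:
          - key: disktype
            operator: In
            values:
            - ssd
      - weight: 20                 # weaker preference: a particular zone
        preference:
          matchExpressions:
          - key: topology.kubernetes.io/zone
            operator: In
            values:
            - zone-a               # hypothetical zone name
```

Because both terms are preferences, the Pod is still scheduled even if no node satisfies either of them.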

nodeAffinity also supports several operators for matching rules:

• In: the label's value is in the list

• NotIn: the label's value is not in the list

• Gt: the label's value is greater than the given value (not supported by Pod affinity)

• Lt: the label's value is less than the given value (not supported by Pod affinity)

• Exists: the specified label exists

• DoesNotExist: the specified label does not exist
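The operators above could be combined as in the following sketch. The Pod name and the cpu-count label are hypothetical (the document only defines disktype), so treat this as an illustration of the syntax rather than a manifest for the cluster used here. All expressions inside one matchExpressions list must hold at the same time.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: node-affinity-operators    # hypothetical Pod name
spec:
  containers:
  - name: nginx
    image: nginx
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: disktype
            operator: Exists       # Exists / DoesNotExist take no values
          - key: cpu-count         # hypothetical label holding an integer
            operator: Gt
            values:
            - "4"                  # Gt / Lt take exactly one value, written as a string
          - key: kubernetes.io/hostname
            operator: NotIn
            values:
            - server4
```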

Pod affinity and anti-affinity

podAffinity addresses which Pods a Pod can be deployed with in the same topology domain (a topology domain is defined by node labels; it can be a single host, or a cluster, zone, etc. made up of multiple hosts).

podAntiAffinity addresses which Pods a Pod must not be deployed with in the same topology domain. Both deal with Pod-to-Pod relationships within the Kubernetes cluster.

Inter-Pod affinity and anti-affinity can be even more useful when used together with higher-level collections such as ReplicaSets, StatefulSets, and Deployments. You can easily configure a set of workloads that should be in the same defined topology (for example, the same node).
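As a sketch of how this might look with a higher-level collection, the Deployment below asks for every replica to land on a node that already runs a Pod labeled app: nginx. The Deployment name web-colocated and the label app: web are hypothetical, and the sketch assumes a Pod with the label app: nginx already exists; without one, the replicas would stay Pending because the rule is a hard requirement.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-colocated              # hypothetical Deployment name
spec:
  replicas: 2
  selector:
    matchLabels:
      app: web                     # hypothetical label
  template:
    metadata:
      labels:
        app: web
    spec:
      affinity:
        podAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - nginx            # co-locate with Pods labeled app=nginx
            topologyKey: kubernetes.io/hostname   # "same topology" means same node
      containers:
      - name: nginx
        image: nginx
```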

Pod affinity example:

vim pod3.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
    ---
    apiVersion: v1
    kind: Pod
    metadata:
      name: myapp
      labels:
        app: myapp
    spec:
      containers:
      - name: myapp
        image: myapp:v1
      affinity:
        podAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - nginx
            topologyKey: kubernetes.io/hostname

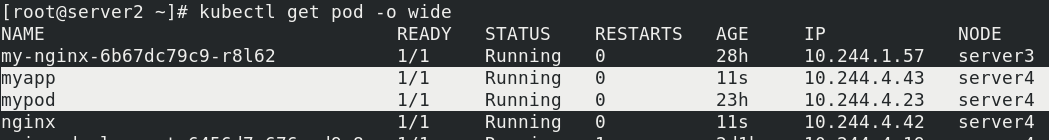

After applying this YAML file, you can see that the two Pods are on the same host.

Pod anti-affinity example:

vim pod3.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
    ---
    apiVersion: v1
    kind: Pod
    metadata:
      name: myapp
      labels:
        app: myapp
    spec:
      containers:
      - name: myapp
        image: myapp:v1
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - nginx
            topologyKey: kubernetes.io/hostname

Create the Pods again: the second Pod (a mysql Pod in this run) is not placed on the same node as the nginx Pod, which separates the service from its data.

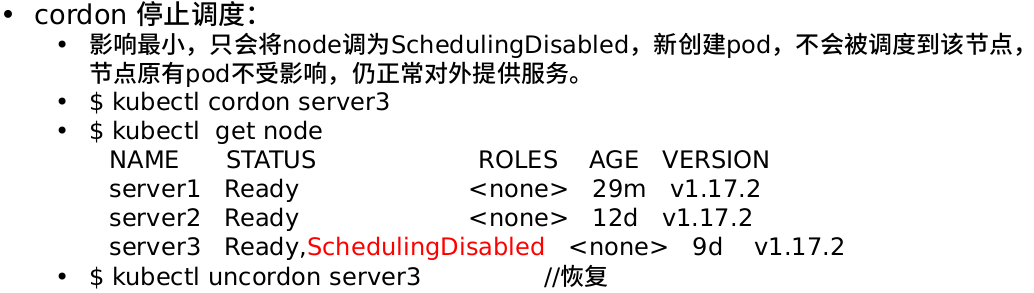

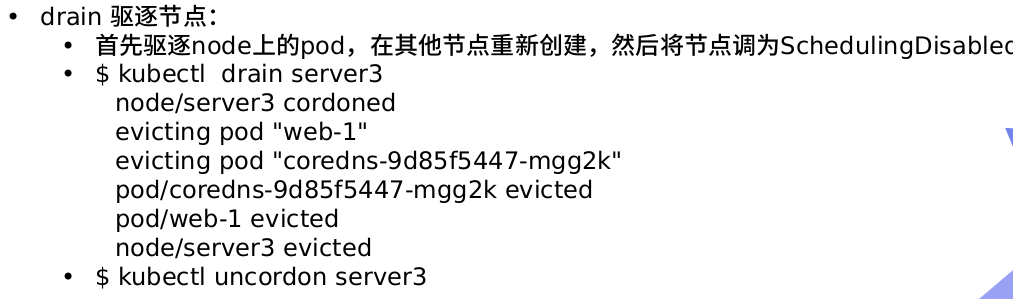

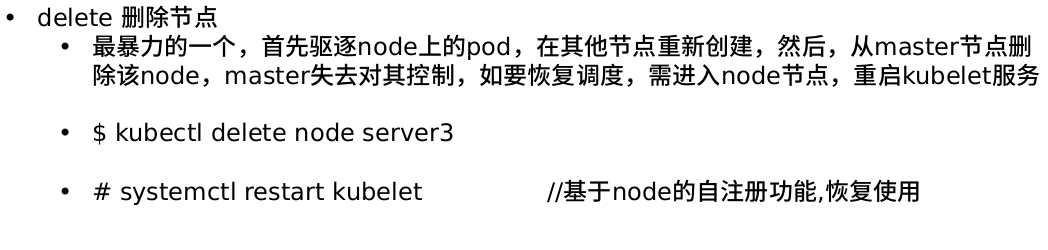

Taints

Taints are a property of a Node. Once a taint is set on a Node, Kubernetes will not schedule Pods onto that Node. Kubernetes therefore gives Pods a matching property, tolerations: as long as a Pod tolerates the taints on a Node, Kubernetes ignores those taints and can (but is not required to) schedule the Pod there.

You can use the command kubectl taint to add a taint to the node:

$ kubectl taint nodes node1 key=value:NoSchedule    # create

$ kubectl describe nodes server1 | grep Taints    # query

$ kubectl taint nodes node1 key:NoSchedule-    # delete

The effect can take one of the values [NoSchedule | PreferNoSchedule | NoExecute]:

• NoSchedule: Pods will not be scheduled onto nodes that carry the taint.

• PreferNoSchedule: the soft-policy version of NoSchedule; the scheduler tries to avoid the node but may still use it.

• NoExecute: once the taint takes effect, Pods already running on the node that have no matching toleration are evicted.

Example: deploying an nginx Deployment:

vim pod4.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: web-server
    spec:
      selector:
        matchLabels:
          app: nginx
      replicas: 3
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx

Apply the YAML file:

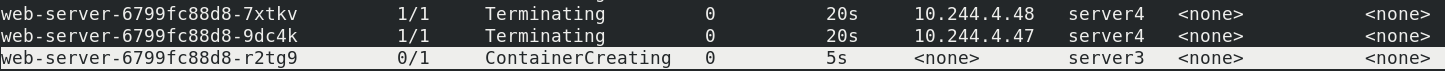

Pods are running on server4.

Add a NoExecute taint to server4:

kubectl get pod -o wide

kubectl taint node server4 key1=v1:NoExecute

The key, value, and effect defined in a toleration must match the taint set on the node:

If operator is Exists, value can be omitted.

If operator is Equal, the toleration's key and value must both equal those of the taint.

If operator is not specified, it defaults to Equal.

There are also two special cases:

When key is not specified and operator is Exists, the toleration matches every key and value, so all taints are tolerated.

When effect is not specified, the toleration matches all effects.
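The rules above can be sketched as a tolerations stanza in a Pod spec (for a Deployment it would sit under spec.template.spec). The active entry matches the key1=v1:NoExecute taint set on server4 earlier; the Pod name nginx-tolerant is hypothetical, and the commented-out entries show the Exists and match-everything variants.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-tolerant             # hypothetical Pod name
spec:
  containers:
  - name: nginx
    image: nginx
  tolerations:
  # Equal: key, value, and effect must all match the taint key1=v1:NoExecute.
  - key: key1
    operator: Equal
    value: v1
    effect: NoExecute
  # Exists: value is omitted; any value of key1 with this effect is tolerated.
  # - key: key1
  #   operator: Exists
  #   effect: NoExecute
  # No key with Exists, and no effect: every taint is tolerated.
  # - operator: Exists
```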

Commands that affect Pod scheduling