Next section https://blog.csdn.net/weixin_45730790/article/details/122521234

In order to simulate multitasking concurrent access to shared linked lists in the kernel, we need to complete the following tasks.

- First, we need to establish a shared list in the kernel and use the spin lock structure to protect its access

- Several kernel threads are established by using the work queue mechanism. Each kernel thread should insert / delete the shared linked list

- Create a kernel timer and write its callback function to delete the nodes in the shared list when it expires

- Destruction of linked list in module unloading function

This is our simulated system call task's access to the shared linked list

sharelist.c codes are as follows:

#include <linux/init.h>

#include <linux/module.h>

#include <linux/list.h>

#include <linux/semaphore.h>

#include <linux/sched.h>

#include <linux/timer.h>

#include <linux/spinlock_types.h>

#include <linux/workqueue.h>

#include <linux/slab. h> / * kmalloc header file*/

#include <linux/kthread.h>

#include <linux/kallsyms.h>

#Define ntheads 200 / * number of threads*/

struct my_struct {

struct list_head list;

int id;

int pid;

};

static struct work_struct queue;

static struct timer_list mytimer; /* Queue for timer */

static LIST_HEAD(mine); /* sharelist head */

static unsigned int list_len = 0;

static DEFINE_SEMAPHORE(sem); /* The semaphore for synchronization between kernel threads and initiators. 4.15 kernel is applicable*/

static DEFINE_SPINLOCK(my_lock); /* Protect the operation of linked list. 4.15 kernel is applicable */

static atomic_t my_count = ATOMIC_INIT(0); /* Append atomically */

static int count = 0;

static int sharelist(void *data);

static void start_kthread(void);

static void kthread_launcher(struct work_struct *q);

/* The kernel thread adds nodes to the linked list */

static int sharelist(void *data)

{

struct my_struct *p;

if (count++ % 4 == 0)

printk("\n");

spin_lock(&my_lock); /* Add locks to protect shared resources */

if (list_len < 50) {

if ((p = kmalloc(sizeof(struct my_struct), GFP_KERNEL)) == NULL)

return -ENOMEM;

p->id = atomic_read(&my_count); /* Atomic variable operation */

atomic_inc(&my_count);

p->pid = current->pid;

list_add(&p->list, &mine); /* Add new bytes to the queue */

list_len++;

printk("THREAD ADD:%-5d\t", p->id);

}

else { /* If the queue exceeds a fixed length, delete the node */

struct my_struct *my = NULL;

my = list_entry(mine.prev, struct my_struct, list);

list_del(mine.prev); /* Delete node from end of queue */

list_len--;

printk("THREAD DEL:%-5d\t", my->id);

kfree(my);

}

spin_unlock(&my_lock);

return 0;

}

/* Call keventd to run the kernel thread */

static void start_kthread(void)

{

down(&sem);

schedule_work(&queue);

}

static void kthread_launcher(struct work_struct *q)

{

kthread_run(sharelist, NULL, "%d", count);

up(&sem);

}

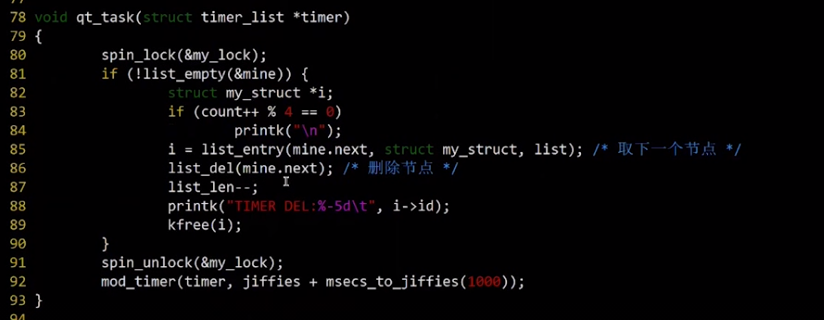

void qt_task(struct timer_list *timer)

{

spin_lock(&my_lock);

if (!list_empty(&mine)) {

struct my_struct *i;

if (count++ % 4 == 0)

printk("\n");

i = list_entry(mine.next, struct my_struct, list); /* Remove the next node */

list_del(mine.next); /* Delete node */

list_len--;

printk("TIMER DEL:%-5d\t", i->id);

kfree(i);

}

spin_unlock(&my_lock);

mod_timer(timer, jiffies + msecs_to_jiffies(1000));

}

static int share_init(void)

{

int i;

printk(KERN_INFO"share list enter\n");

INIT_WORK(&queue, kthread_launcher);

timer_setup(&mytimer, qt_task, 0);

add_timer(&mytimer);

for (i = 0; i < NTHREADS; i++)

start_kthread();

return 0;

}

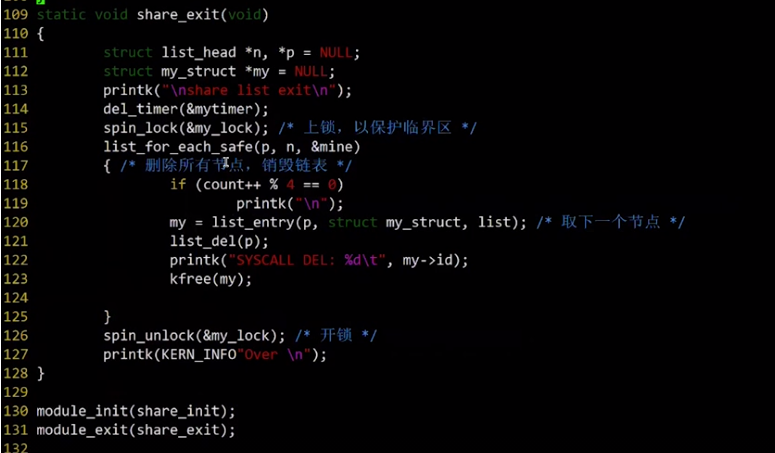

static void share_exit(void)

{

struct list_head *n, *p = NULL;

struct my_struct *my = NULL;

printk("\nshare list exit\n");

del_timer(&mytimer);

spin_lock(&my_lock); /* Lock out to protect critical areas */

list_for_each_safe(p, n, &mine)

{ /* Delete all nodes and destroy the linked list */

if (count++ % 4 == 0)

printk("\n");

my = list_entry(p, struct my_struct, list); /* Remove the next node */

list_del(p);

printk("SYSCALL DEL: %d\t", my->id);

kfree(my);

}

spin_unlock(&my_lock); /* Unlock */

printk(KERN_INFO"Over \n");

}

module_init(share_init);

module_exit(share_exit);

MODULE_LICENSE("GPL v2");

For the shared list, we use the list structure provided by the kernel_ Head to create its node, (line 16 of code) here will be the list provided by the kernel_ The head type linked list structure is included in the structure of the shared linked list node defined by us to complete the definition of the shared linked list node. Via kernel list_ The linked list established by head can directly use the functions in the kernel to insert / delete / traverse the linked list.

Next, we need to create a header node mine for the linked list (line 22 of code), where list is used_ The head macro is used to complete it. Note that the head node is a list_ Head type structure, not the shared linked list node we defined_ Struct structure, which needs special attention during operation

For spin locks, we use DEFINE_SPINLOCK macro to declare and initiate a spin lock_ Lock, so we have completed the first task. A shared linked list with the head node of mine is established in the kernel, and a spin lock is created for it_ Lock to protect its access.

Let's look at how to create a kernel thread using a work queue. For convenience, we use the kernel work queue kevent.

(line 20 of the code) the first step is to define a work_struct type work queue, followed by define_ The macro of semaphore declares a semaphore sem and initializes it to 1 (line 24 of code). The function of this semaphore will be described later.

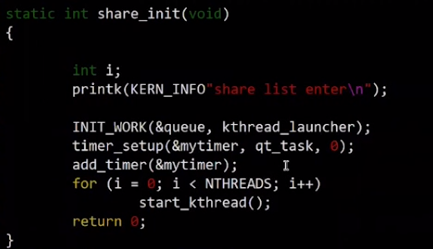

Let's look at the module loading function (line 102). In the module loading function, we use INT_WORK macro to initialize the work queue and specify the work processing function kthread for it_ Launcher, the kernel thread initiator.

After the initialization of the work queue is completed, we just need to insert it into the kernel work queue kevent at the appropriate time and wait for it to be executed

(line 105 of the code) for loop. NTHREADS is a predefined macro indicating the number of kernel threads created. Here, it is 200, (line 106 of the code) start_ The kthread function is executed cyclically to insert the work queue into the kernel work queue

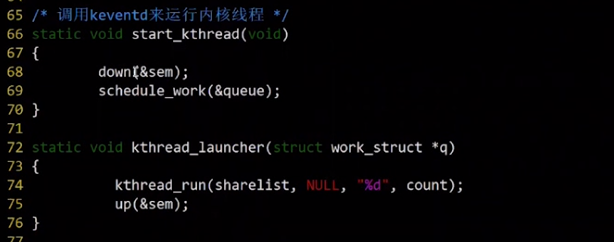

(lines 66-70 of code) kthread function. The code of this function is only 2 lines. First, reduce the semaphore sem by 1 (line 68 of code). If it is not blocked, execute schedule_ Work (line 69 of code), insert the work queue into the kernel work queue.

(lines 72-76 of code) kthread_ The launcher work processing function has only two lines of code. First, kthread_run creates and wakes up a kernel thread. The first parameter sharelist is a function pointer that specifies the function to be executed by the kernel thread. The second parameter is that the specified parameters will be automatically passed to the function. The last two items ('% d', count) are formatted and named for the thread, similar to the printf function. Count is a global variable used to record the sequence number of the kernel thread and then name it. After the thread is created, increase the semaphore sem by 1,

What's the use of semaphore sem here? We tried to cancel the semaphore sem. After execution, we found that the module can only create one kernel thread, but we explicitly called schedule 200 times_ The work function inserts 200 queue jobs into the work queue. Where are the other 199 jobs? The reason is that we execute the schedule_ When using the work function, it will check whether the work to be inserted is already in the work queue. If so, the execution will end. Therefore, before the first work is executed, the other 199 times of scheduling the same work are invalid operations. Therefore, we need to use semaphores to ensure that the next scheduling is performed after each scheduling job is executed, so as to realize the synchronization between thread startup functions.

In addition, we have to consider the question, why do we use work queues to create kernel threads? Instead of calling kthread 200 times directly_ Run function? Kernel queue keventd_wq's default worker thread is called events/n, where n is the processor number, and each processor corresponds to a thread. For example, in a single processor system, there is only one worker thread such as events/0, while in a dual processor system, there will be one more events/1 thread. Therefore, if we want to create a large number of threads, we can allocate this work to multiple CPUs for parallel execution, It will undoubtedly improve efficiency. Of course, the premise is that your system has multiple CPUs. If we don't want to use work queues to create threads, we can avoid semaphore sem.

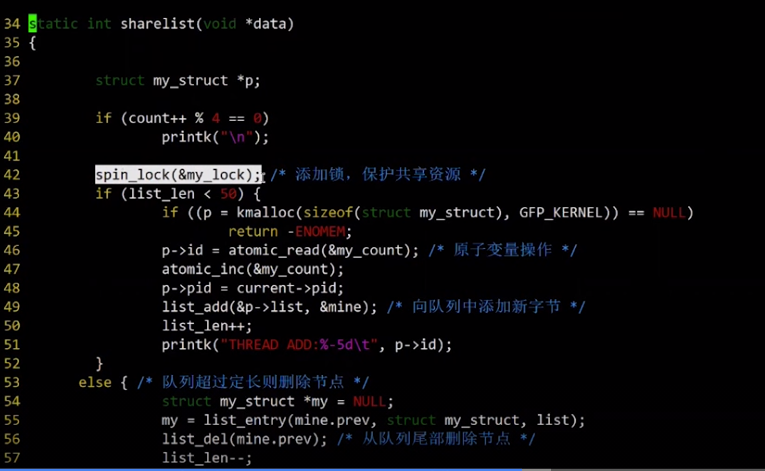

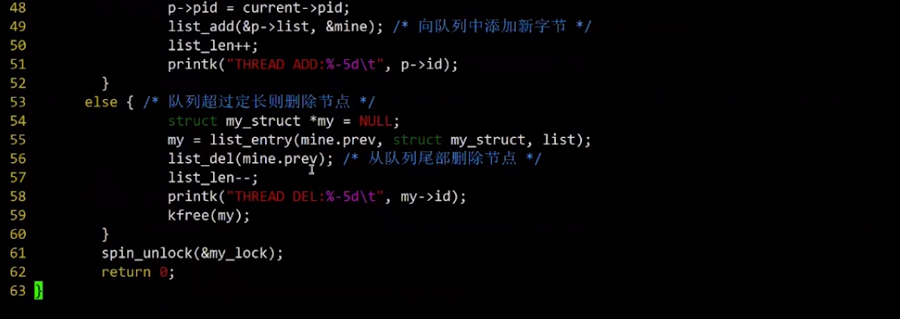

Now let's take a look at the sharelist function (line 34). It is a function that the kernel thread needs to execute. In sharelist, we complete the insertion or deletion of shared linked list nodes. We note that spin needs to be used before operating on the shared linked list_ Lock (42 rows), list_len is a global variable (line 43) used to record the length of the linked list. When the length of the linked list is less than 50, the kernel thread performs the node insertion operation, (line 46) an atomic variable my_count is used to record the node serial number. Here, we create a new shared linked list node (44 rows), assign values to it in order (46 rows), and use list (49 rows)_ The add function is inserted after the head node mine. When the length of the linked list is greater than 50, the thread performs the operation of deleting the node (line 53), and the list is used (line 56)_ Del function deletes the precursor of the head node, that is, the tail node of the linked list. In addition (line 55), there is a list_entry macro, which is used to find the my containing the end node of the linked list_ The address of the sturct structure, because we need to delete not only the pointer of the linked list, but also the shared node structure itself. (line 55) after we find the structure address, (line 59) destroy it with kfree to complete the deletion of the shared linked list node.

(line 61) after the operation is completed, use spin_unlock to ensure that only one thread or other task can access the linked list at a time. Here we have completed the second task. Using the work queue mechanism, 200 kernel threads are established, and each kernel thread can insert or delete the shared linked list.

Next, let's look at the use of the kernel timer (line 21). According to the use process mentioned in the previous section, first define a timer_list type timer mytimer, and then initialize it in the module loading function (line 103). Here, time is used_ The setup macro initializes mytimer and specifies a callback function QT for it_ Task, followed by add_timer activates it (line 104) and the timer can start working.

Callback function qt_task (line 78) needs to be locked before operating the shared linked list (line 80), (line 81). When the linked list is not empty, we need to delete (line 86) the subsequent nodes of the head node, and finally (line 92) use mod_timer modifies the expiration time of the timer and sets the next expiration time of the timer to 1000 milliseconds later. msec_to_jiffies is a function that converts millisecond values into beats. This completes the task of the kernel timer.

The last task is to destroy the linked list in the module unloading function (line 114). First, delete the timer mytimer. Similarly (line 115), lock the linked list before accessing it, and then use the list_for_each_safe macro to traverse the linked list from scratch, delete each node (121 rows) in turn, and destroy the whole linked list (123 rows). When we call the rmmod command to delete the module in the user state, we call delete through the system_ Module, delete_ The module system call will execute the code written in the module unloading function and destroy the linked list, thus simulating the access of the system call task to the shared linked list. This is the main content of the kernel multitasking concurrent instance.

The rest are some defined two macros (13 lines) and variables, including the number of threads, ntheads and the length of the linked list_len (line 23), atomic variable mycount (line 26),

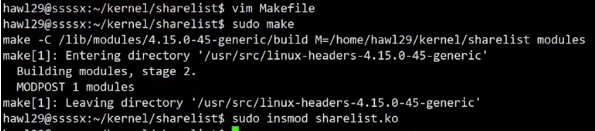

Makefile files are as follows:

obj-m :=sharelist.o CURRENT_PATH := $(shell pwd) LINUX_KERNEL := $(shell uname -r) LINUX_KERNEL_PATH := /lib/modules/$(shell uname -r)/build all: make -C $(LINUX_KERNEL_PATH) M=$(CURRENT_PATH) modules clean: @rm -rf .*.cmd *.o *.mod.c *.ko .tmp_versions Module.symvers .Makefile.swp modules.order *.o.ur-detected *.o.ur-safe

After Make, insert the module with insmod

The dmesg command views the execution results.

It can be seen that the kernel thread performs insert or delete operations in sequence, and can correctly delete the successor nodes of the head node when the timer expires,

Then use the rmmod command to delete the module, and execute the dmesg command again. You can see that the remaining nodes in the linked list have also been deleted.

If it helps you, please like, collect or pay attention to it~