OVS (Open vSwitch) is a production-quality, multilayer virtual switch. Compared with the Linux bridge, it has several advantages:

1) Easier network management and monitoring. With OVS, administrators can monitor network status and data traffic across the whole cloud environment. For example, they can analyze which VM, OS, and user the packets flowing through the network come from, using the tools that OVS provides.

2) Faster packet routing and forwarding. The Linux bridge uses simple forwarding rules based on MAC address learning. OVS adds a flow cache, which speeds up packet forwarding.

3) Built on the SDN idea of separating the control plane from the data plane. The two points above both follow from this. The OVS control plane learns flows and installs them into the flow table, while the data plane performs the actual forwarding. This separation also makes OVS highly extensible.

4) Tunnel protocol support. The Linux bridge only supports VXLAN, whereas OVS supports GRE, VXLAN, IPsec, and others.

5) Works with Xen, KVM, VirtualBox, VMware, and other hypervisors.

In recent years, however, the popularity of OpenFlow has declined significantly. SDN can be implemented in many ways, for example with segment routing, and it can also be realized well in combination with traditional MPLS/BGP. Compared with OpenFlow-based SDN, these approaches have better and more stable support from equipment vendors.

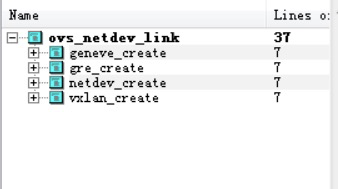

As with the Linux bridge, adding a member interface to an OVS bridge installs a packet receive function on the member interface's dev->rx_handler. ovs_vport_add calls the create function that matches the type of the interface being added, and every create function calls ovs_netdev_link, which registers netdev_frame_hook as the receive handler of the OVS member port.

Take vxlan_create as an example. Creating the VXLAN device itself works the same way as for a plain Linux VXLAN device, and the core function is also vxlan_dev_configure. Next, the private data structure vport of the OVS member port is created. Finally, ovs_netdev_link associates the VXLAN device with the vport and registers the receive handler together with its private data (netdev_frame_hook, vport).

struct vport *ovs_vport_add(const struct vport_parms *parms)

{

struct vport_ops *ops;

struct vport *vport;

ops = ovs_vport_lookup(parms);

if (ops) {

struct hlist_head *bucket;

if (!try_module_get(ops->owner))

return ERR_PTR(-EAFNOSUPPORT);

vport = ops->create(parms);

......

}

struct vport *ovs_netdev_link(struct vport *vport, const char *name)

{

......

err = netdev_rx_handler_register(vport->dev, netdev_frame_hook,

vport);

......

}

EXPORT_SYMBOL_GPL(ovs_netdev_link);
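The registration pattern described above can be illustrated in user space. This is only a minimal sketch under stated assumptions: `struct device`, `struct port`, `rx_handler_register`, `port_frame_hook`, and `device_receive` are hypothetical stand-ins for `net_device`, `vport`, `netdev_rx_handler_register`, `netdev_frame_hook`, and the kernel receive path, and they do not reproduce the kernel's real definitions, locking, or RCU handling.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>

/* Hypothetical user-space stand-ins for the kernel types. */
struct packet { int len; };

enum rx_result { RX_CONSUMED, RX_PASS };

typedef enum rx_result (*rx_handler_fn)(struct packet *pkt, void *priv);

struct device {
    const char *name;
    rx_handler_fn rx_handler;   /* plays the role of dev->rx_handler */
    void *rx_handler_data;      /* plays the role of dev->rx_handler_data */
};

struct port {
    int port_no;
    unsigned long rx_packets;
};

/* Attach a handler plus private data to a device.
 * Only one handler per device is allowed; a second attempt gets -EBUSY. */
int rx_handler_register(struct device *dev, rx_handler_fn fn, void *priv)
{
    assert(dev != NULL && fn != NULL);
    if (dev->rx_handler)
        return -EBUSY;
    dev->rx_handler_data = priv;
    dev->rx_handler = fn;
    return 0;
}

/* Stand-in for netdev_frame_hook: recover the port from the private
 * data, account for the packet, and report it as consumed. */
enum rx_result port_frame_hook(struct packet *pkt, void *priv)
{
    struct port *p = priv;

    (void)pkt;
    p->rx_packets++;
    return RX_CONSUMED;
}

/* Stand-in for the receive path: call the handler if one is attached,
 * otherwise let the packet continue to the normal stack. */
enum rx_result device_receive(struct device *dev, struct packet *pkt)
{
    if (dev->rx_handler)
        return dev->rx_handler(pkt, dev->rx_handler_data);
    return RX_PASS;
}
```

Before registration, `device_receive` returns `RX_PASS`; after `rx_handler_register(&dev, port_frame_hook, &port)` succeeds, every packet received on that device is handed to the port and consumed there.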

After the NIC receives a packet, processing reaches __netif_receive_skb_core, which strips the VLAN tag and finds the VLAN sub-interface (if any). If skb->dev is an OVS member port, the packet is passed to the netdev_frame_hook handler.

static int __netif_receive_skb_core(struct sk_buff *skb, bool pfmemalloc)

{

......

// rx_handler is the netdev_frame_hook function registered by ovs.

rx_handler = rcu_dereference(skb->dev->rx_handler);

if (rx_handler) {

if (pt_prev) {

ret = deliver_skb(skb, pt_prev, orig_dev);

pt_prev = NULL;

}

switch (rx_handler(&skb)) {

case RX_HANDLER_CONSUMED: // The packet has been consumed; stop processing

ret = NET_RX_SUCCESS;

goto out;

case RX_HANDLER_ANOTHER: // skb->dev was modified; run another round

goto another_round;

case RX_HANDLER_EXACT: /* Deliver only to handlers where ptype->dev == skb->dev */

deliver_exact = true;

case RX_HANDLER_PASS:

break;

default:

BUG();

}

}

......

}

struct vport is the core data structure of an OVS member port. It is stored in the rx_handler_data member of net_device. The OVS module uses this structure heavily, but it is not visible to other modules (it is a private data structure).

struct vport {

struct net_device *dev;

struct datapath *dp; // The datapath (bridge) this port belongs to; ovs can hold multiple bridges.

struct vport_portids __rcu *upcall_portids;

u16 port_no;

struct hlist_node hash_node;

struct hlist_node dp_hash_node;

const struct vport_ops *ops; // Operations for this interface type.

struct list_head detach_list;

struct rcu_head rcu;

};

The receive path is netdev_frame_hook --> netdev_port_receive --> ovs_vport_receive --> ovs_dp_process_packet. Before ovs_dp_process_packet is entered, the key information of the flow is extracted from tun_info and the skb, including the tunnel, layer 2, layer 3, and layer 4 header information. This key is what the OVS datapath uses to match the flow table.

static void netdev_port_receive(struct sk_buff *skb)

{

struct vport *vport;

vport = ovs_netdev_get_vport(skb->dev);

if (unlikely(!vport))

goto error;

if (unlikely(skb_warn_if_lro(skb)))

goto error;

skb = skb_share_check(skb, GFP_ATOMIC);

if (unlikely(!skb))

return;

// ovs is a layer 2 switch and works on whole Ethernet frames, so restore the Ethernet header that was pulled before the rx_handler ran

skb_push(skb, ETH_HLEN);

skb_postpush_rcsum(skb, skb->data, ETH_HLEN);

// Some tunnel ports, such as vxlan and gre, cache the tunnel key in the dst_entry of the skb

ovs_vport_receive(vport, skb, skb_tunnel_info(skb));

return;

error:

kfree_skb(skb);

}

int ovs_vport_receive(struct vport *vport, struct sk_buff *skb,

const struct ip_tunnel_info *tun_info)

{

struct sw_flow_key key;

int error;

OVS_CB(skb)->input_vport = vport;

OVS_CB(skb)->mru = 0;

OVS_CB(skb)->cutlen = 0;

if (unlikely(dev_net(skb->dev) != ovs_dp_get_net(vport->dp))) {

u32 mark;

mark = skb->mark;

skb_scrub_packet(skb, true);

skb->mark = mark;

tun_info = NULL;

}

/* Extract flow from 'skb' into 'key'. */

// Extract the key information of the flow from tun_info and skb. sw_flow_key holds the tunnel, layer 2, layer 3 and layer 4 header information, which the ovs datapath uses to match the flow table.

error = ovs_flow_key_extract(tun_info, skb, &key);

if (unlikely(error)) {

kfree_skb(skb);

return error;

}

ovs_dp_process_packet(skb, &key);

return 0;

}
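To make the idea of a flow key more concrete, here is a minimal user-space sketch of key extraction for an untagged Ethernet/IPv4 frame. `struct mini_flow_key` and `mini_key_extract` are hypothetical names invented for this illustration. The real `sw_flow_key` and `ovs_flow_key_extract` cover many more fields (tunnel metadata, VLAN, IPv6, ARP, conntrack state, and so on) and operate on an skb rather than a raw byte buffer.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical, heavily simplified flow key (all fields in host byte order). */
struct mini_flow_key {
    uint32_t in_port;
    uint8_t  eth_src[6], eth_dst[6];
    uint16_t eth_type;
    uint32_t ip_src, ip_dst;
    uint8_t  ip_proto;
    uint16_t tp_src, tp_dst;
};

static uint16_t rd16(const uint8_t *p)
{
    return (uint16_t)((p[0] << 8) | p[1]);
}

static uint32_t rd32(const uint8_t *p)
{
    return ((uint32_t)p[0] << 24) | ((uint32_t)p[1] << 16) |
           ((uint32_t)p[2] << 8) | p[3];
}

/* Fill 'key' from an untagged Ethernet frame.
 * Returns 0 on success and -1 if the frame is too short.
 * Non-IPv4 frames leave the layer 3 and layer 4 fields zeroed. */
int mini_key_extract(uint32_t in_port, const uint8_t *frame, size_t len,
                     struct mini_flow_key *key)
{
    assert(frame != NULL && key != NULL);

    /* Zero everything first so unused fields compare equal. */
    memset(key, 0, sizeof(*key));
    key->in_port = in_port;

    if (len < 14)
        return -1;
    memcpy(key->eth_dst, frame, 6);
    memcpy(key->eth_src, frame + 6, 6);
    key->eth_type = rd16(frame + 12);
    if (key->eth_type != 0x0800)        /* only IPv4 is parsed further */
        return 0;

    const uint8_t *ip = frame + 14;
    if (len < 14 + 20)
        return -1;
    size_t ihl = (size_t)(ip[0] & 0x0f) * 4;
    if (ihl < 20 || len < 14 + ihl)
        return -1;
    key->ip_proto = ip[9];
    key->ip_src = rd32(ip + 12);
    key->ip_dst = rd32(ip + 16);

    if (key->ip_proto == 6 || key->ip_proto == 17) {   /* TCP or UDP */
        const uint8_t *l4 = ip + ihl;
        if (len < 14 + ihl + 4)
            return -1;
        key->tp_src = rd16(l4);
        key->tp_dst = rd16(l4 + 2);
    }
    return 0;
}
```

Zeroing the key before filling it mirrors a common design choice for flow keys: fields that do not apply to a packet must have a defined value, otherwise two packets of the same flow could produce keys that do not compare equal.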

The ovs_dp_process_packet function matches the packet key against the flow table:

1. If no flow is found, the packet goes through an upcall: queue_userspace_packet sends the per-layer protocol header information (OVS_PACKET_ATTR_KEY) and the skb data (OVS_PACKET_ATTR_PACKET) to user space (upcall.cmd=OVS_PACKET_CMD_MISS). User-space threads listen for these messages: udpif_start_threads creates the threads that process upcalls, with handler = udpif_upcall_handler. udpif_upcall_handler waits on an fd with poll, and when an upcall arrives it enters the recv_upcalls processing function:

1) Receive the packet from the device and hand it to the event handler registered in advance;

2) After receiving the packet, determine whether it is an unknown packet (one with no matching flow); if so, hand it to upcall processing;

3) vswitchd looks up the flow rules for the unknown packet. The most important call is rule_dpif_lookup_from_table, which finds the matching flow table rule and then generates the actions.

rule_dpif_lookup_from_table searches the cascaded flow tables one by one, and calls rule_dpif_lookup_in_table for each individual table;

4) After process_upcall finishes, the cached flow entry for the upcall has been generated. Its key and actions are stored in struct upcall. The cached flow is then installed into the datapath: the kernel OVS module is asked to perform the put (OVS_FLOW_CMD_NEW) and execute (OVS_PACKET_CMD_EXECUTE) operations;

https://www.codenong.com/cs109398201/

2. If a matching flow is found, the actions of that flow are executed. There are many types of actions, but in the end there is usually an output action, which sends the packet out of an interface.
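The table-by-table search mentioned in step 3) of the upcall handling can be pictured with a small sketch. The names `lookup_in_table` and `lookup_from_table` and the structures below are hypothetical and only loosely modeled on rule_dpif_lookup_in_table and rule_dpif_lookup_from_table. The real functions take different parameters, match on full flow keys with wildcards and priorities, and decide whether to continue to the next table based on the table-miss configuration, none of which is modeled here.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical rule: match on a single destination value only. */
struct rule {
    uint32_t dst;
    uint32_t out_port;
};

struct table {
    const struct rule *rules;
    size_t n_rules;
};

/* Search one table; returns the first matching rule or NULL. */
const struct rule *lookup_in_table(const struct table *t, uint32_t dst)
{
    for (size_t i = 0; i < t->n_rules; i++)
        if (t->rules[i].dst == dst)
            return &t->rules[i];
    return NULL;
}

/* Search the tables in order, starting at 'first'. On a miss in one
 * table the search simply continues with the next one (an assumption
 * of this sketch). 'matched_table' may be NULL. */
const struct rule *lookup_from_table(const struct table *tables,
                                     size_t n_tables, size_t first,
                                     uint32_t dst, size_t *matched_table)
{
    assert(tables != NULL);
    for (size_t id = first; id < n_tables; id++) {
        const struct rule *r = lookup_in_table(&tables[id], dst);

        if (r) {
            if (matched_table)
                *matched_table = id;
            return r;
        }
    }
    return NULL;
}
```

A rule in an earlier table shadows a rule for the same destination in a later table, which is why the starting table passed as `first` matters.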

void ovs_dp_process_packet(struct sk_buff *skb, struct sw_flow_key *key)

{

const struct vport *p = OVS_CB(skb)->input_vport;

struct datapath *dp = p->dp;

struct sw_flow *flow;

struct sw_flow_actions *sf_acts;

struct dp_stats_percpu *stats;

u64 *stats_counter;

u32 n_mask_hit;

stats = this_cpu_ptr(dp->stats_percpu);

/* Look up flow. */

// The flow table query is based on the information extracted from the message

flow = ovs_flow_tbl_lookup_stats(&dp->table, key, &n_mask_hit);

if (unlikely(!flow)) {

struct dp_upcall_info upcall;

int error;

// No matching flow was found; do an upcall

memset(&upcall, 0, sizeof(upcall));

upcall.cmd = OVS_PACKET_CMD_MISS;

upcall.portid = ovs_vport_find_upcall_portid(p, skb);

upcall.mru = OVS_CB(skb)->mru;

error = ovs_dp_upcall(dp, skb, key, &upcall, 0);

if (unlikely(error))

kfree_skb(skb);

else

consume_skb(skb);

stats_counter = &stats->n_missed;

goto out;

}

// A matching flow was found; execute the actions of that flow.

ovs_flow_stats_update(flow, key->tp.flags, skb);

sf_acts = rcu_dereference(flow->sf_acts);

ovs_execute_actions(dp, skb, sf_acts, key);

stats_counter = &stats->n_hit;

out:

/* Update datapath statistics. */

u64_stats_update_begin(&stats->syncp);

(*stats_counter)++;

stats->n_mask_hit += n_mask_hit;

u64_stats_update_end(&stats->syncp);

}
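The miss-then-hit behavior of the function above can be sketched with a toy exact-match flow cache. Everything here is an assumption made for illustration: `struct key`, `struct flow`, `slow_path_decide`, and the fixed-size table are invented, and the slow path is called synchronously, whereas the real upcall is an asynchronous Netlink message to ovs-vswitchd, which later installs the flow and re-injects the packet.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define TABLE_SIZE 64   /* arbitrary size chosen for this sketch */

/* Hypothetical minimal key and flow entry. */
struct key { uint32_t in_port; uint32_t dst; };
struct flow { int used; struct key key; uint32_t out_port; };

struct datapath {
    struct flow table[TABLE_SIZE];
    unsigned long n_hit, n_missed;
};

static unsigned slot_of(const struct key *k)
{
    return (k->in_port * 31u + k->dst) % TABLE_SIZE;
}

/* Exact-match lookup with linear probing (entries are never deleted). */
struct flow *flow_lookup(struct datapath *dp, const struct key *k)
{
    for (unsigned i = 0; i < TABLE_SIZE; i++) {
        struct flow *f = &dp->table[(slot_of(k) + i) % TABLE_SIZE];

        if (!f->used)
            return NULL;
        if (f->key.in_port == k->in_port && f->key.dst == k->dst)
            return f;
    }
    return NULL;
}

/* Install a flow; returns -1 if the table is full. */
int flow_install(struct datapath *dp, const struct key *k, uint32_t out_port)
{
    for (unsigned i = 0; i < TABLE_SIZE; i++) {
        struct flow *f = &dp->table[(slot_of(k) + i) % TABLE_SIZE];

        if (!f->used) {
            f->used = 1;
            f->key = *k;
            f->out_port = out_port;
            return 0;
        }
    }
    return -1;
}

/* Stand-in for the user-space slow path; the policy is made up. */
uint32_t slow_path_decide(const struct key *k)
{
    return k->dst % 4;
}

/* Fast path: a hit uses the cached flow; a miss asks the slow path,
 * caches the answer, and counts the miss. Returns the output port. */
uint32_t process_packet(struct datapath *dp, const struct key *k)
{
    assert(dp != NULL && k != NULL);

    struct flow *f = flow_lookup(dp, k);
    if (!f) {
        uint32_t out = slow_path_decide(k);

        dp->n_missed++;
        flow_install(dp, k, out);
        return out;
    }
    dp->n_hit++;
    return f->out_port;
}
```

Only the first packet of a flow pays for the slow path. Later packets with the same key are answered from the cache, which is what the n_hit and n_missed counters in the datapath statistics reflect.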

int ovs_execute_actions(struct datapath *dp, struct sk_buff *skb,

const struct sw_flow_actions *acts,

struct sw_flow_key *key)

{

int err, level;

level = __this_cpu_inc_return(exec_actions_level);

if (unlikely(level > OVS_RECURSION_LIMIT)) {

net_crit_ratelimited("ovs: recursion limit reached on datapath %s, probable configuration error\n",

ovs_dp_name(dp));

kfree_skb(skb);

err = -ENETDOWN;

goto out;

}

OVS_CB(skb)->acts_origlen = acts->orig_len;

err = do_execute_actions(dp, skb, key,

acts->actions, acts->actions_len);

if (level == 1)

process_deferred_actions(dp);

out:

__this_cpu_dec(exec_actions_level);

return err;

}

// There are many types of actions, but in the end there is usually an output action, which sends the packet out of an interface

static int do_execute_actions(struct datapath *dp, struct sk_buff *skb,

struct sw_flow_key *key,

const struct nlattr *attr, int len)

{

/* Every output action needs a separate clone of 'skb', but the common

* case is just a single output action, so that doing a clone and

* then freeing the original skbuff is wasteful. So the following code

* is slightly obscure just to avoid that.

*/

int prev_port = -1;

const struct nlattr *a;

int rem;

for (a = attr, rem = len; rem > 0;

a = nla_next(a, &rem)) {

int err = 0;

if (unlikely(prev_port != -1)) {

struct sk_buff *out_skb = skb_clone(skb, GFP_ATOMIC);

if (out_skb)

do_output(dp, out_skb, prev_port, key);

OVS_CB(skb)->cutlen = 0;

prev_port = -1;

}

switch (nla_type(a)) {

case OVS_ACTION_ATTR_OUTPUT:

prev_port = nla_get_u32(a);

break;

case OVS_ACTION_ATTR_TRUNC: {

struct ovs_action_trunc *trunc = nla_data(a);

if (skb->len > trunc->max_len)

OVS_CB(skb)->cutlen = skb->len - trunc->max_len;

break;

}

case OVS_ACTION_ATTR_USERSPACE:

output_userspace(dp, skb, key, a, attr,

len, OVS_CB(skb)->cutlen);

OVS_CB(skb)->cutlen = 0;

break;

case OVS_ACTION_ATTR_HASH:

execute_hash(skb, key, a);

break;

case OVS_ACTION_ATTR_PUSH_MPLS:

err = push_mpls(skb, key, nla_data(a));

break;

case OVS_ACTION_ATTR_POP_MPLS:

err = pop_mpls(skb, key, nla_get_be16(a));

break;

case OVS_ACTION_ATTR_PUSH_VLAN:

err = push_vlan(skb, key, nla_data(a));

break;

case OVS_ACTION_ATTR_POP_VLAN:

err = pop_vlan(skb, key);

break;

case OVS_ACTION_ATTR_RECIRC:

err = execute_recirc(dp, skb, key, a, rem);

if (nla_is_last(a, rem)) {

/* If this is the last action, the skb has

* been consumed or freed.

* Return immediately.

*/

return err;

}

break;

case OVS_ACTION_ATTR_SET:

err = execute_set_action(skb, key, nla_data(a));

break;

case OVS_ACTION_ATTR_SET_MASKED:

case OVS_ACTION_ATTR_SET_TO_MASKED:

err = execute_masked_set_action(skb, key, nla_data(a));

break;

case OVS_ACTION_ATTR_SAMPLE:

err = sample(dp, skb, key, a, attr, len);

break;

case OVS_ACTION_ATTR_CT:

if (!is_flow_key_valid(key)) {

err = ovs_flow_key_update(skb, key);

if (err)

return err;

}

err = ovs_ct_execute(ovs_dp_get_net(dp), skb, key,

nla_data(a));

/* Hide stolen IP fragments from user space. */

if (err)

return err == -EINPROGRESS ? 0 : err;

break;

}

if (unlikely(err)) {

kfree_skb(skb);

return err;

}

}

if (prev_port != -1)

do_output(dp, skb, prev_port, key);

else

consume_skb(skb);

return 0;

}
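The prev_port handling in do_execute_actions is easy to misread, so here is a minimal user-space sketch of just that pattern. The action types, `struct result`, and `run_actions` are hypothetical. Instead of touching real packets, the sketch counts how many clones would be needed, to show that N output actions cost N-1 clones and that the common single-output case needs none.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical action kinds for this sketch. */
enum act_type { ACT_OUTPUT, ACT_SET_TAG };

struct action {
    enum act_type type;
    int arg;            /* port number for ACT_OUTPUT */
};

struct result {
    int clones;         /* how many packet copies were needed */
    int outputs;        /* how many times a packet was sent */
    int last_port;      /* port used by the most recent output */
};

/* Mirrors the prev_port pattern: an output action is only remembered.
 * It is carried out on a clone when a later action follows, or on the
 * original packet when the action list ends. */
struct result run_actions(const struct action *acts, size_t n)
{
    struct result r = { 0, 0, -1 };
    int prev_port = -1;

    assert(acts != NULL || n == 0);
    for (size_t i = 0; i < n; i++) {
        if (prev_port != -1) {
            r.clones++;             /* the kernel calls skb_clone() here */
            r.outputs++;
            r.last_port = prev_port;
            prev_port = -1;
        }
        if (acts[i].type == ACT_OUTPUT)
            prev_port = acts[i].arg;
        /* any other action would modify the packet in place */
    }
    if (prev_port != -1) {
        r.outputs++;                /* last output reuses the original */
        r.last_port = prev_port;
    }
    return r;
}
```

With the action list { set tag, output:1 } the function reports one output and zero clones, while { output:1, output:2, output:3 } reports three outputs and two clones.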

Finally, the output operation on an interface. The path is do_output --> ovs_vport_send --> the send function registered by the vport, which is simply dev_queue_xmit. Because packets enter OVS as layer 2 frames, the flow table only modifies the headers and forwards the packet out without going through the protocol stack.