References

http://cn.linux.vbird.org/linux_server/0330nfs.php#What_NFS_0

Introduction to NFS

Overview

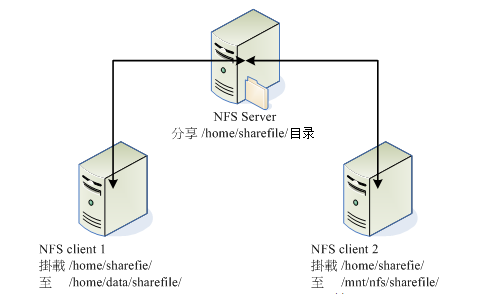

NFS is short for Network File System. Its main function is to let different machines, even ones running different operating systems, share specified files with each other over the network. It can simply be thought of as a file server: a directory shared by a remote NFS server can be mounted on the local machine, where it looks like one of the machine's own disk partitions, which is very convenient.

Once the NFS server in the figure above has set up the directory to share, other clients can mount that directory at a mount point on their own systems and then access the data in the server's shared directory directly through that mount point (provided their permissions are sufficient).

NFS data access process

Background

Because NFS supports many functions, and each function is started by a different program that opens its own ports to transmit data, the ports that correspond to NFS functions are not fixed; instead, unused ports below 1024 are picked at random. Clients therefore need the remote procedure call (RPC) service to find out which ports to use. When the NFS server starts, it registers the randomly acquired ports with RPC, so RPC knows which NFS function corresponds to each port. When a client sends a request to the server, the RPC service listening on port 111 only needs to return the correct port to the client.

**Note:** The RPC service must be started before NFS; otherwise NFS cannot register with RPC. Likewise, when RPC restarts, the old registration data is lost, so every service it manages must register with RPC again.

So, once the server has registered its ports with RPC, a client request for NFS data is handled as follows:

- The client asks the RPC service on the server (port 111) for the NFS file access function;

- The server looks up the corresponding registered port and reports it to the client;

- Once the client knows the correct port, it connects directly to the program listening on that port.
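The lookup in the steps above can be reproduced by hand with `rpcinfo -p`, which asks rpcbind on a host for its registration table. The following is a minimal sketch, assuming the usual five-column output of `rpcinfo -p` (program, version, protocol, port, service). The helper name `rpc_port` is made up for illustration, and the address is the example server used later in this article.

```shell
# rpc_port SERVICE PROTO   (hypothetical helper)
# Reads `rpcinfo -p` output on stdin and prints the first port registered
# for the given service name and protocol. Exits non-zero if none is found.
rpc_port() {
    awk -v svc="$1" -v proto="$2" '
        $5 == svc && $3 == proto { print $4; found = 1; exit }
        END { exit !found }
    '
}

# Usage against a live server (needs network access to port 111 on it):
#   rpcinfo -p 10.91.156.174 | rpc_port mountd tcp
```

The helper only reads text from standard input, so it can also be tried on saved `rpcinfo -p` output without touching a real server.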

Main configuration file

The default configuration file is /etc/exports. Its contents must be written by hand.

Syntax and parameters

    [root@suhw ~]# vim /etc/exports
    /tmp                192.168.100.0/24(ro)        localhost(rw)        *.ev.ncku.edu.tw(ro,sync)
    [Shared directory]  [First host (permissions)]  [Host name allowed]  [Wildcards allowed]

For detailed usage, see the examples further on.

The common permission options are listed here; for a complete reference, see man exports.

| Parameter | Description |
|---|---|
| ro | The host has read-only access to the shared directory |
| rw | The host has read-write access to the shared directory |
| root_squash | When a client accesses the share as root, root is mapped to the anonymous user |
| no_root_squash | When a client accesses the share as root, root is not mapped. To let a client operate on the server's file system as root, you must enable no_root_squash here! |
| all_squash | Every client user is mapped to the anonymous user when accessing the shared directory |
| anonuid | UID of the local account that anonymous (squashed) client users are mapped to |
| anongid | GID of the local group that anonymous (squashed) client users are mapped to |
| sync | Data is written synchronously to memory and to disk |
| async | Data is held in memory first instead of being written to disk immediately |
| insecure | Accept requests that originate from client ports above 1024 (by default only ports below 1024 are accepted) |

Examples

- Share /home/public, but let only hosts in 192.168.100.0/24 read and write; all other sources can only read

/home/public 192.168.100.0/24(rw) *(ro)

- Make the /home/iso directory public and allow everyone to read it

/home/iso *(ro,insecure,no_root_squash)

Note the effect of no_root_squash. In this example, if you are a client logged in to your Linux host as root, then when you mount my host's /home/iso you have root permissions in the mounted directory!
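To make the line format concrete, the sketch below splits one /etc/exports line into its directory, client, and option parts. It is a simplified illustration rather than a full parser: it assumes whitespace-separated fields with no quoted paths and no comments, and `parse_exports_line` is a made-up helper name.

```shell
# parse_exports_line LINE   (hypothetical helper)
# Prints one "directory client options" row per client entry on the line.
parse_exports_line() {
    printf '%s\n' "$1" | awk '{
        for (i = 2; i <= NF; i++) {
            client = $i
            opts = ""
            p = index($i, "(")                 # options start at the first "("
            if (p > 0) {
                client = substr($i, 1, p - 1)
                opts = substr($i, p + 1)
                sub(/\)$/, "", opts)           # drop the closing ")"
            }
            printf "%s %s %s\n", $1, client, opts
        }
    }'
}

parse_exports_line '/home/public 192.168.100.0/24(rw) *(ro)'
# prints:
#   /home/public 192.168.100.0/24 rw
#   /home/public * ro
```

Seeing each client on its own row makes it easier to spot an entry such as `*` that is paired with an option like no_root_squash.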

The exportfs command

With exportfs you can re-export directories after changing /etc/exports, unexport or re-export directories shared by the NFS server, and so on.

Syntax and parameters

| Option | Effect |
|---|---|
| -a | Export or unexport all directories |
| -o options | Specify a list of export options |
| -i | Ignore the /etc/exports file; use only the default options and those given on the command line |
| -r | Re-export all directories so that changes to /etc/exports take effect |
| -u | Unexport one or more directories |
| -v | Print verbose output |
| -s | Display the current export list in a format suitable for /etc/exports |
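As a sketch of how these options combine in practice, the commands below re-read /etc/exports, add a temporary export from the command line, list the exports, and remove the temporary one again. The `run` wrapper is a hypothetical helper that only prints each command unless DRY_RUN=0 is set, so the block can be tried on a machine without NFS. The 10.91.156.0/24 client range is an assumed example.

```shell
# Print commands by default; set DRY_RUN=0 (as root on the NFS server) to run them.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

run exportfs -r                                     # re-export everything in /etc/exports
run exportfs -o rw,sync 10.91.156.0/24:/home/test   # temporary export, not written to /etc/exports
run exportfs -v                                     # list current exports with their options
run exportfs -u 10.91.156.0/24:/home/test           # unexport that client:/path pair
```

An export added with -o lives only in the running export table, so it disappears the next time the exports are reloaded from /etc/exports.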

View shared resource records

The NFS server's record files are kept in the /var/lib/nfs directory; the most important one is etab.

etab records the complete permission settings of every directory shared by NFS

/home/iso *(ro,sync,wdelay,hide,nocrossmnt,insecure,no_root_squash,no_all_squash,no_subtree_check,secure_locks,acl,no_pnfs,anonuid=65534,anongid=65534,sec=sys,insecure,no_root_squash,no_all_squash)

The showmount command

exportfs is used on the NFS server to maintain shared resources, while showmount is mainly used on the NFS client. It lists the directory resources that an NFS server shares.

Syntax and parameters

showmount [ -adehv ] [ --all ] [ --directories ] [ --exports ] [ --help ] [ --version ] [ host ]

| Option | Effect |
|---|---|
| -d or --directories | List only the directories that NFS clients have mounted |
| -e or --exports | Show all directories exported by the NFS server |
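For scripting, the output of `showmount -e` can be reduced to just the exported paths. This is a minimal sketch, assuming the output starts with a single "Export list for ..." header line and that paths contain no spaces. `exported_dirs` and `is_exported` are made-up helper names.

```shell
# exported_dirs   (hypothetical helper)
# Reads `showmount -e HOST` output on stdin and prints only the exported
# directory paths, skipping the header line.
exported_dirs() {
    awk 'NR > 1 { print $1 }'
}

# is_exported DIR   (hypothetical helper)
# Succeeds if DIR appears exactly in the export list read from stdin.
is_exported() {
    exported_dirs | grep -qxF -- "$1"
}

# Usage from a client:
#   showmount -e 10.91.156.174 | is_exported /home/test && echo "share is available"
```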

Example of mounting a remote directory

NFS server configuration

Environment preparation

Configuring an NFS server requires two packages: rpcbind and nfs-utils.

Run rpm -qa | grep nfs-utils to check whether the package is installed. If it is not, run the following command:

[root@suhw ~]# yum install -y nfs-utils

rpcbind: NFS can be regarded as an RPC service, and rpcbind must be running before any RPC service is started.

    [root@suhw ~]# systemctl start rpcbind
    [root@suhw ~]# systemctl status rpcbind
    ● rpcbind.service - RPC bind service
       Loaded: loaded (/usr/lib/systemd/system/rpcbind.service; enabled; vendor preset: enabled)
       Active: active (running) since Tue 2020-06-16 10:26:08 CST; 2s ago
      Process: 29537 ExecStart=/sbin/rpcbind -w $RPCBIND_ARGS (code=exited, status=0/SUCCESS)
     Main PID: 29538 (rpcbind)
        Tasks: 1
       Memory: 660.0K
       CGroup: /system.slice/rpcbind.service
               └─29538 /sbin/rpcbind -w

    Jun 16 10:26:07 suhw systemd[1]: Starting RPC bind service...
    Jun 16 10:26:08 suhw systemd[1]: Started RPC bind service.

Because rpcbind always uses port 111, you can check directly whether port 111 is in the listening state

    [root@suhw ~]# netstat -tulnp | grep 111
    tcp6       0      0 :::111          :::*            LISTEN      29538/rpcbind
    udp        0      0 0.0.0.0:111     0.0.0.0:*                   29538/rpcbind
    udp6       0      0 :::111          :::*                        29538/rpcbind

Start service

Make sure rpcbind starts

    # Check rpcbind status
    [root@suhw ~]# systemctl status rpcbind
    # If rpcbind is not running, start it
    [root@suhw ~]# systemctl start rpcbind

Make sure nfs starts

    [root@suhw ~]# systemctl status nfs
    # If nfs is not running, start it
    [root@suhw ~]# systemctl start nfs
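The two checks can be combined into a small helper that starts each service only when it is not already active, keeping rpcbind ahead of nfs. This is a minimal sketch: `ensure_started` is a made-up name, the unit names are the ones used in this article (some distributions name the NFS unit nfs-server instead), and it has to be run as root.

```shell
# ensure_started UNIT...   (hypothetical helper)
# Starts each systemd unit in the order given unless it is already active.
ensure_started() {
    for svc in "$@"; do
        if systemctl is-active --quiet "$svc"; then
            echo "$svc: already running"
        elif systemctl start "$svc"; then
            echo "$svc: started"
        else
            echo "$svc: failed to start" >&2
            return 1
        fi
    done
}

# Usage (as root); order matters because NFS registers with rpcbind at startup:
#   ensure_started rpcbind nfs
```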

Writing the configuration file

Share the /home/test directory with read-write access for clients whose addresses begin with 10.91

    [root@suhw ~]# cat /etc/exports
    /home/test 10.91.*(rw,sync,no_root_squash)

Applying the configuration

Use the exportfs command described above to manage the list of file systems currently shared over NFS

    # Re-export so that the changes to /etc/exports take effect
    [root@suhw ~]# exportfs -r
    [root@suhw ~]# exportfs -s
    /home/test  10.91.*(sync,wdelay,hide,no_subtree_check,sec=sys,rw,secure,no_root_squash,no_all_squash)

Verification

After the NFS server is configured, first use the showmount command on the server itself to test whether the directory has been shared successfully

    [root@suhw ~]# showmount -e localhost
    Export list for localhost:
    /home/test 10.91.*

If you now look at the etab file mentioned earlier, you will find an additional record

    [root@suhw ~]# cat /var/lib/nfs/etab
    /home/test 10.91.*(rw,sync,wdelay,hide,nocrossmnt,secure,no_root_squash,no_all_squash,no_subtree_check,secure_locks,acl,no_pnfs,anonuid=65534,anongid=65534,sec=sys,rw,secure,no_root_squash,no_all_squash)

Create a file in the shared directory so that it can be observed later from the client

[root@suhw ~]# touch /home/test/test.txt

NFS client configuration

Environment check

1. Confirm that the rpcbind service is running on the local machine

    [root@nfs-client ~]# systemctl status rpcbind
    ● rpcbind.service - RPC bind service
       Loaded: loaded (/usr/lib/systemd/system/rpcbind.service; enabled; vendor preset: enabled)
       Active: active (running) since Mon 2020-06-15 18:11:40 CST; 20h ago
     Main PID: 1264 (rpcbind)
       CGroup: /system.slice/rpcbind.service
               └─1264 /sbin/rpcbind -w

    Jun 15 18:11:40 csmp-SP1Fusion systemd[1]: Starting RPC bind service...
    Jun 15 18:11:40 csmp-SP1Fusion systemd[1]: Started RPC bind service.

2. Make sure showmount is installed

View the remote server's shared resources

    [root@nfs-client ~]# showmount -e 10.91.156.174
    Export list for 10.91.156.174:
    /home/test 10.91.*/

Mount

1. Create the mount point directory on the local machine

[root@nfs-client ~]# mkdir -p /home/test

2. Mount the remote host's shared directory onto that directory with mount

[root@nfs-client ~]# mount 10.91.156.174:/home/test /home/test

3. View mount information

    [root@nfs-client ~]# df -h
    Filesystem                Size  Used Avail Use% Mounted on
    ...
    10.91.156.174:/home/test   17G  8.0G  9.1G  47% /home/test
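In a script, the same check can be made without reading df output by eye. The sketch below looks the mount point up in the kernel's mount table. It assumes a Linux client where /proc/mounts lists device, mount point, and file system type in its first three columns, and `is_nfs_mounted` is a made-up helper name.

```shell
# is_nfs_mounted MOUNTPOINT [MOUNTS_FILE]   (hypothetical helper)
# Succeeds if MOUNTPOINT is listed with file system type nfs or nfs4.
# MOUNTS_FILE defaults to /proc/mounts; another file can be given for testing.
is_nfs_mounted() {
    awk -v mp="$1" '
        $2 == mp && $3 ~ /^nfs4?$/ { found = 1 }
        END { exit !found }
    ' "${2:-/proc/mounts}"
}

# Usage:
#   is_nfs_mounted /home/test && echo "NFS share is mounted"
```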

Verification

Just view the contents of the local mount directory

    [root@nfs-client ~]# ll /home/test/
    total 0
    -rw-r--r-- 1 root root 0 Jun 16 14:47 test.txt

Unmount

When the mount is no longer needed, unmount it with the umount command, specifying the local mount directory

[root@nfs-client ~]# umount /home/test