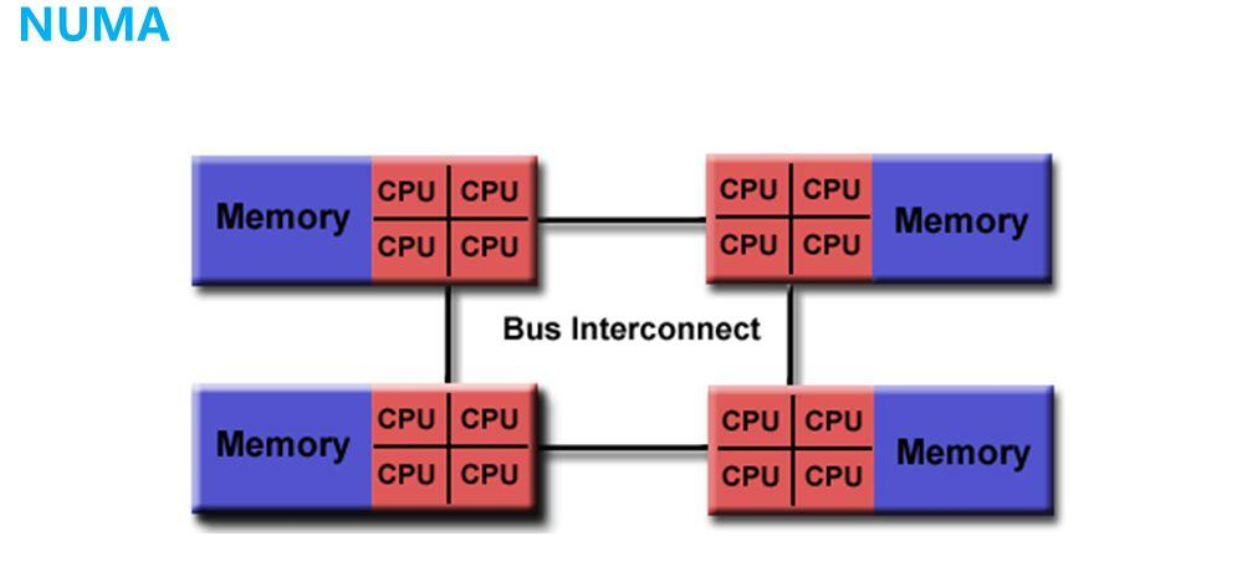

This article follows on from the previous one, "Linux Kernel Things: ZONE". In the Linux kernel, a node's physical memory is divided into zones as needed, and each node is managed by a pg_data_t. For details, see "Linux Kernel Things: Physical Memory Model, SPARSE (3)". On a NUMA system:

Each node has its own local memory, so each node also has its own pg_data_t and its own set of zones. To make memory on every node of the system easy to allocate from, the zones are organized into ordered zone lists (zonelists).

NUMA zonelist

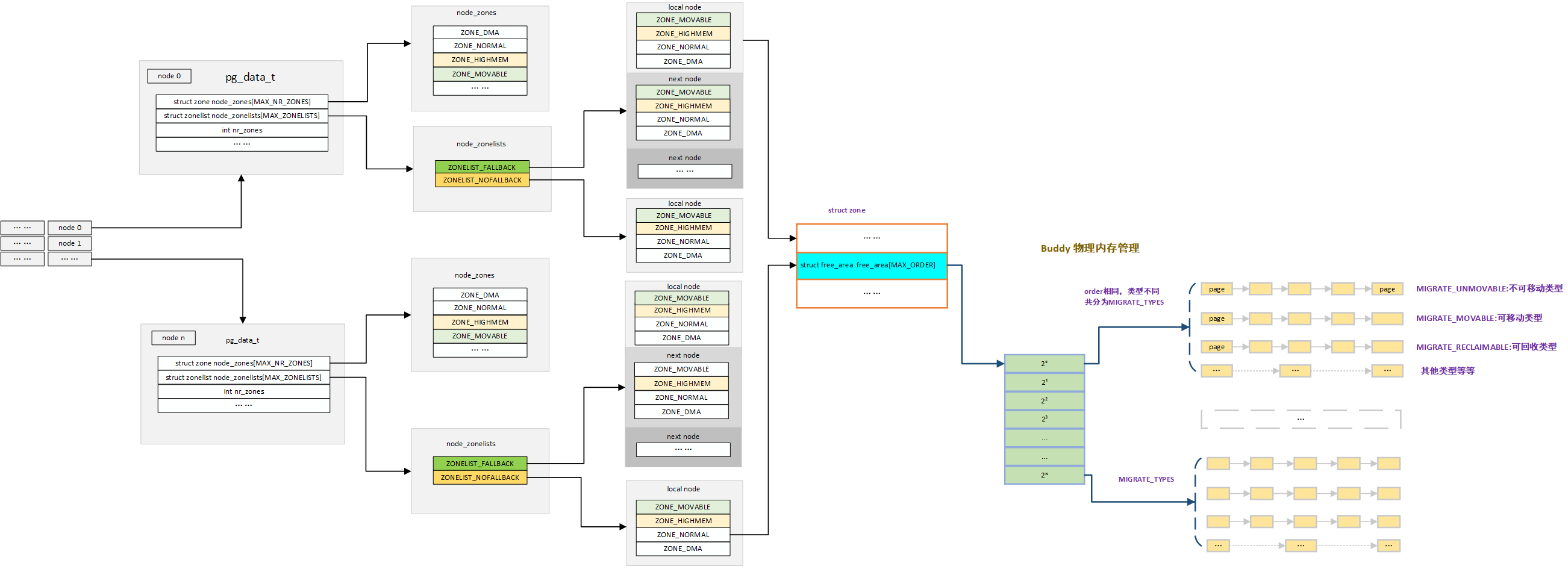

For the NUMA system above, the kernel's memory-management data structures are organized as follows.

The pg_data_t structure manages this node's zones. It also holds the ordered zonelists that the allocator uses to fall back to the next zone when an allocation request fails:

```c
typedef struct pglist_data {
    /*
     * node_zones contains just the zones for THIS node. Not all of the
     * zones may be populated, but it is the full list. It is referenced by
     * this node's node_zonelists as well as other node's node_zonelists.
     */
    struct zone node_zones[MAX_NR_ZONES];

    /*
     * node_zonelists contains references to all zones in all nodes.
     * Generally the first zones will be references to this node's
     * node_zones.
     */
    struct zonelist node_zonelists[MAX_ZONELISTS];

    int nr_zones; /* number of populated zones in this node */

    /* ... ... */
} pg_data_t;
```

In a NUMA system, the zone-related members of pg_data_t are:

- struct zone node_zones[MAX_NR_ZONES]: holds all possible zones of this node, indexed from low to high by zone type.

- int nr_zones: records the number of populated zones in this node.

- struct zonelist node_zonelists[MAX_ZONELISTS]: holds the ordered zone lists that memory allocation requests walk.

The following passage from the kernel documentation describes zonelists and is important for understanding their role:

For each node with memory, Linux constructs an independent memory management subsystem, complete with its own free page lists, in-use page lists, usage statistics and locks to mediate access. In addition, Linux constructs for each memory zone [one or more of DMA, DMA32, NORMAL, HIGH_MEMORY, MOVABLE], an ordered "zonelist". A zonelist specifies the zones/nodes to visit when a selected zone/node cannot satisfy the allocation request. This situation, when a zone has no available memory to satisfy a request, is called "overflow" or "fallback".
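To make overflow and fallback concrete, here is a minimal user-space model. It is not kernel code. A zonelist is represented as a NULL-terminated array of zone pointers, and an allocation walks that array until it reaches a zone with enough free pages. The type model_zone and the function alloc_from_zonelist are hypothetical simplifications. The real allocator also checks watermarks, cpusets and GFP flags, and none of that is modeled here.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical, simplified stand-in for struct zone. */
struct model_zone {
    const char *name;      /* e.g. "Node0/Normal" */
    unsigned long free;    /* free pages in this zone */
};

/*
 * Walk a NULL-terminated zonelist and take nr_pages from the first
 * zone that can satisfy the request. Returns the zone used, or NULL
 * if every zone in the list overflowed.
 */
struct model_zone *alloc_from_zonelist(struct model_zone **zonelist,
                                       unsigned long nr_pages)
{
    assert(zonelist != NULL);
    for (size_t i = 0; zonelist[i] != NULL; i++) {
        struct model_zone *z = zonelist[i];
        if (z->free >= nr_pages) {
            z->free -= nr_pages;   /* the allocation succeeds in this zone */
            return z;
        }
        /* Not enough memory here: fall back to the next zone in the list. */
    }
    return NULL;
}
```

Take a list {Normal with 2 free pages, DMA with 8 free pages}. A 1-page request is served from Normal. A 4-page request overflows Normal and falls back to DMA. A 100-page request fails on every zone.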

struct zonelist

node_zonelists is an array of MAX_ZONELISTS struct zonelist entries. MAX_ZONELISTS comes from the following enum:

```c
enum {
    ZONELIST_FALLBACK,      /* zonelist with fallback */
#ifdef CONFIG_NUMA
    /*
     * The NUMA zonelists are doubled because we need zonelists that
     * restrict the allocations to a single node for __GFP_THISNODE.
     */
    ZONELIST_NOFALLBACK,    /* zonelist without fallback (__GFP_THISNODE) */
#endif
    MAX_ZONELISTS
};
```

On NUMA, the array therefore has two entries, ZONELIST_FALLBACK and ZONELIST_NOFALLBACK. Without CONFIG_NUMA, only ZONELIST_FALLBACK exists.

- ZONELIST_FALLBACK: always present, on both NUMA and SMP systems. When an allocation from one zone fails, the allocator tries the next zone in this list. An SMP system has only one node, so the list simply runs from the highest zone type to the lowest, for example ZONE_HIGHMEM, ZONE_NORMAL, ZONE_DMA. On a NUMA system, the local node's zones come first. They are followed by the zones of the remote nodes, ordered from nearest to farthest according to the NUMA topology. When the local node runs out of memory, the allocator therefore falls back to the nearest remote node first.

- ZONELIST_NOFALLBACK: on NUMA systems, some allocations (those passing __GFP_THISNODE) want memory only from one particular node and never from a remote one. This zonelist therefore contains only that node's own zones, ordered from high to low.
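The choice between the two lists depends on the request's flags. The kernel has helpers named gfp_zonelist() and node_zonelist() that make this choice in a similar way. The sketch below is only a user-space model under stated assumptions. MODEL_GFP_THISNODE is a hypothetical stand-in for __GFP_THISNODE, and the model_* types are cut-down placeholders, not the kernel definitions.

```c
#include <assert.h>
#include <stddef.h>

/* Mirrors the enum above with CONFIG_NUMA enabled. */
enum { ZONELIST_FALLBACK, ZONELIST_NOFALLBACK, MAX_ZONELISTS };

/* Hypothetical flag bit standing in for __GFP_THISNODE. */
#define MODEL_GFP_THISNODE 0x1u

/* Placeholder: a real zonelist holds an array of zonerefs. */
struct model_zonelist { int nr_zonerefs; };

/* Cut-down node descriptor: only the zonelists matter here. */
struct model_pgdat {
    struct model_zonelist node_zonelists[MAX_ZONELISTS];
};

/* Pick the zonelist index from the request flags. */
int model_gfp_zonelist(unsigned int gfp_flags)
{
    if (gfp_flags & MODEL_GFP_THISNODE)
        return ZONELIST_NOFALLBACK;   /* this node's zones only */
    return ZONELIST_FALLBACK;         /* local zones, then remote nodes */
}

/* Return the zonelist that an allocation on this node should walk. */
struct model_zonelist *model_node_zonelist(struct model_pgdat *pgdat,
                                           unsigned int gfp_flags)
{
    assert(pgdat != NULL);
    return &pgdat->node_zonelists[model_gfp_zonelist(gfp_flags)];
}
```

Because both lists live in the same per-node array, the choice is a single index computation. The allocator never has to rebuild a list per request.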

The struct zonelist structure is defined as follows:

```c
/*
 * One allocation request operates on a zonelist. A zonelist
 * is a list of zones, the first one is the 'goal' of the
 * allocation, the other zones are fallback zones, in decreasing
 * priority.
 *
 * To speed the reading of the zonelist, the zonerefs contain the zone index
 * of the entry being read. Helper functions to access information given
 * a struct zoneref are
 *
 * zonelist_zone()     - Return the struct zone * for an entry in _zonerefs
 * zonelist_zone_idx() - Return the index of the zone for an entry
 * zonelist_node_idx() - Return the index of the node for an entry
 */
struct zonelist {
    struct zoneref _zonerefs[MAX_ZONES_PER_ZONELIST + 1];
};
```

struct zonelist stores the zones in fallback order. The kernel sorts them by proximity when it builds the lists during initialization. Each entry is a struct zoneref, which records the zone and its index:

```c
/*
 * This struct contains information about a zone in a zonelist. It is stored
 * here to avoid dereferences into large structures and lookups of tables
 */
struct zoneref {
    struct zone *zone;  /* Pointer to actual zone */
    int zone_idx;       /* zone_idx(zoneref->zone) */
};
```

- struct zone *zone: pointer to the actual zone.

- int zone_idx: the zone's index, i.e. zone_idx(zone). It is cached here so that it can be read without dereferencing the zone.
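Caching zone_idx pays off when a request is limited to zones up to some type, for example a request that must stay at or below ZONE_NORMAL. The walk can then filter entries by index alone and never touch the zone structure. The sketch below is a user-space model of that idea, roughly what the kernel's zonelist iterators do with the cached index. The enum values, the two-field struct zone and count_eligible_zones are hypothetical simplifications.

```c
#include <assert.h>
#include <stddef.h>

/* Simplified zone types, low to high (user-space model only). */
enum model_zone_type { ZONE_DMA, ZONE_NORMAL, ZONE_HIGHMEM, MODEL_NR_ZONES };

/* Cut-down zone: just enough to identify it. */
struct zone { int node; enum model_zone_type type; };

struct zoneref {
    struct zone *zone;  /* pointer to the actual zone, NULL terminates */
    int zone_idx;       /* cached zone type, so filtering needs no deref */
};

/*
 * Count the zones that a request limited to zone types <= highest_idx may
 * use, walking the zonerefs until the NULL terminator. Only the cached
 * zone_idx is consulted; the zone itself is never dereferenced.
 */
int count_eligible_zones(const struct zoneref *z, int highest_idx)
{
    int n = 0;
    for (; z->zone != NULL; z++) {
        if (z->zone_idx > highest_idx)
            continue;   /* skip zones above the request's limit */
        n++;
    }
    return n;
}
```

Consider a fallback list of Node0 HighMem, Node0 Normal, Node0 DMA and Node1 Normal. A request capped at ZONE_NORMAL sees three eligible zones, and a DMA-only request sees one.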

zonelist build

The zonelists are built early in kernel boot, ordered from near to far according to the system's NUMA topology. Every node gets its own zonelists, and these determine the order in which memory is allocated for requests made on that node.

```
start_kernel
 ---->build_all_zonelists
   ---->build_all_zonelists_init
     ---->__build_all_zonelists
       ---->build_zonelists
         ---->build_zonelists_in_node_order
         ---->build_thisnode_zonelists
```

At the end of this call chain, build_zonelists makes two calls. It calls build_zonelists_in_node_order to initialize the ZONELIST_FALLBACK zonelist, and build_thisnode_zonelists to initialize the ZONELIST_NOFALLBACK zonelist.

build_zonelists

build_zonelists has different implementations for NUMA and SMP systems. The NUMA version is:

```c
/*
 * Build zonelists ordered by zone and nodes within zones.
 * This results in conserving DMA zone[s] until all Normal memory is
 * exhausted, but results in overflowing to remote node while memory
 * may still exist in local DMA zone.
 */
static void build_zonelists(pg_data_t *pgdat)
{
    static int node_order[MAX_NUMNODES];
    int node, load, nr_nodes = 0;
    nodemask_t used_mask = NODE_MASK_NONE;
    int local_node, prev_node;

    /* NUMA-aware ordering of nodes */
    local_node = pgdat->node_id;
    load = nr_online_nodes;
    prev_node = local_node;

    memset(node_order, 0, sizeof(node_order));
    while ((node = find_next_best_node(local_node, &used_mask)) >= 0) {
        /*
         * We don't want to pressure a particular node.
         * So adding penalty to the first node in same
         * distance group to make it round-robin.
         */
        if (node_distance(local_node, node) !=
            node_distance(local_node, prev_node))
            node_load[node] = load;

        node_order[nr_nodes++] = node;
        prev_node = node;
        load--;
    }

    build_zonelists_in_node_order(pgdat, node_order, nr_nodes);
    build_thisnode_zonelists(pgdat);
}
```

- find_next_best_node is called repeatedly to walk the NUMA topology as seen from this pg_data_t's node. The node ids it returns are stored in node_order from nearest to farthest.

- build_zonelists_in_node_order: builds the ZONELIST_FALLBACK zonelist, following the node order in node_order.

- build_thisnode_zonelists: builds the ZONELIST_NOFALLBACK zonelist from this node's own zones, ordered from high to low.
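The node ordering loop can be illustrated with a simplified user-space model. It assumes a hypothetical SLIT-style distance table in which 10 means local, and it orders nodes purely by distance, with the lower node id winning ties. The real find_next_best_node() also weighs node_load[] (the round-robin penalty set in the loop above) and whether a node has CPUs. Those factors are deliberately left out here. All names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

#define NR_NODES 4

/*
 * Hypothetical NUMA distance table (SLIT-style: 10 = local).
 * Row i gives the distance from node i to every node.
 */
static const int distance[NR_NODES][NR_NODES] = {
    {10, 20, 30, 30},
    {20, 10, 30, 30},
    {30, 30, 10, 20},
    {30, 30, 20, 10},
};

/*
 * Simplified find_next_best_node(): return the unused node nearest to
 * local_node (lowest id wins ties) and mark it used, or -1 once every
 * node has been used. node_load[] and CPU penalties are omitted.
 */
int model_next_best_node(int local_node, bool used[NR_NODES])
{
    int best = -1;
    for (int n = 0; n < NR_NODES; n++) {
        if (used[n])
            continue;
        if (best < 0 || distance[local_node][n] < distance[local_node][best])
            best = n;
    }
    if (best >= 0)
        used[best] = true;
    return best;
}

/* Fill node_order[] nearest-first, like the while loop in build_zonelists. */
int model_node_order(int local_node, int node_order[NR_NODES])
{
    bool used[NR_NODES] = {false};
    int node, nr_nodes = 0;
    while ((node = model_next_best_node(local_node, used)) >= 0)
        node_order[nr_nodes++] = node;
    return nr_nodes;
}
```

With this table, node 2 gets the order 2, 3, 0, 1. Its own node comes first because its distance to itself is the smallest. Node 3 follows at distance 20, and then nodes 0 and 1 at distance 30.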

build_zonelists_in_node_order

build_zonelists_in_node_order builds the zonelist for ZONELIST_FALLBACK:

```c
/*
 * Build zonelists ordered by node and zones within node.
 * This results in maximum locality--normal zone overflows into local
 * DMA zone, if any--but risks exhausting DMA zone.
 */
static void build_zonelists_in_node_order(pg_data_t *pgdat, int *node_order,
                                          unsigned nr_nodes)
{
    struct zoneref *zonerefs;
    int i;

    zonerefs = pgdat->node_zonelists[ZONELIST_FALLBACK]._zonerefs;

    for (i = 0; i < nr_nodes; i++) {
        int nr_zones;

        pg_data_t *node = NODE_DATA(node_order[i]);

        nr_zones = build_zonerefs_node(node, zonerefs);
        zonerefs += nr_zones;
    }
    zonerefs->zone = NULL;
    zonerefs->zone_idx = 0;
}
```

- Following node_order, it calls build_zonerefs_node for each node in turn, which appends that node's zones to the zonelist. Finally it writes a terminating zoneref whose zone is NULL. The local node comes first in node_order, so an allocation that overflows its first zone falls back to the local node's other zones before anything else. Only when the local node cannot satisfy the request does it move on to other nodes, nearest first. Memory obtained from a remote node is, of course, slower to access.

By default, Linux will attempt to satisfy memory allocation requests from the node to which the CPU that executes the request is assigned. Specifically, Linux will attempt to allocate from the first node in the appropriate zonelist for the node where the request originates. This is called "local allocation." If the "local" node cannot satisfy the request, the kernel will examine other nodes' zones in the selected zonelist looking for the first zone in the list that can satisfy the request.

On architectures that do not hide memoryless nodes, Linux will include only zones [nodes] with memory in the zonelists. This means that for a memoryless node the "local memory node"--the node of the first zone in CPU's node's zonelist--will not be the node itself. Rather, it will be the node that the kernel selected as the nearest node with memory when it built the zonelists. So, by default, local allocations will succeed with the kernel supplying the closest available memory. This is a consequence of the same mechanism that allows such allocations to fallback to other nearby nodes when a node that does contain memory overflows.
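Here is a user-space model of build_zonelists_in_node_order. It concatenates each node's zones in node_order and then terminates the list. The per-node zone table is hypothetical, and copy_node_zones stands in for build_zonerefs_node. A NULL string pointer plays the role of the terminating zoneref with zone == NULL.

```c
#include <assert.h>
#include <stddef.h>

#define NR_NODES 3
#define MAX_ZONES_PER_NODE 3
#define MAX_ZONELIST (NR_NODES * MAX_ZONES_PER_NODE + 1)  /* +1 for terminator */

/*
 * Hypothetical per-node data: each node's populated zones, already ordered
 * high to low (what build_zonerefs_node would produce), NULL-terminated.
 */
static const char *node_zones[NR_NODES][MAX_ZONES_PER_NODE + 1] = {
    {"N0/Normal", "N0/DMA32", NULL},
    {"N1/Normal", NULL},
    {"N2/Normal", NULL},
};

/* Stand-in for build_zonerefs_node(): copy one node's zones, return count. */
static int copy_node_zones(int nid, const char **out)
{
    int nr = 0;
    while (node_zones[nid][nr] != NULL) {
        out[nr] = node_zones[nid][nr];
        nr++;
    }
    return nr;
}

/*
 * Model of build_zonelists_in_node_order(): append the zones of each node
 * in node_order[], advance past them, then write the NULL terminator.
 * Returns the number of zones in the list.
 */
int model_build_fallback(const int *node_order, int nr_nodes,
                         const char *zonelist[MAX_ZONELIST])
{
    const char **pos = zonelist;
    for (int i = 0; i < nr_nodes; i++)
        pos += copy_node_zones(node_order[i], pos);
    *pos = NULL;
    return (int)(pos - zonelist);
}
```

Suppose node 1 is local and node 0 is its nearest neighbor, giving node_order = {1, 0, 2}. The list is then N1/Normal, N0/Normal, N0/DMA32, N2/Normal, followed by the terminator. If node 1's row were empty (a memoryless node), the same call would produce a list starting at N0/Normal. That matches the memoryless-node behavior described above.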

build_thisnode_zonelists

build_thisnode_zonelists builds the zonelist for ZONELIST_NOFALLBACK:

```c
/*
 * Build gfp_thisnode zonelists
 */
static void build_thisnode_zonelists(pg_data_t *pgdat)
{
    struct zoneref *zonerefs;
    int nr_zones;

    zonerefs = pgdat->node_zonelists[ZONELIST_NOFALLBACK]._zonerefs;
    nr_zones = build_zonerefs_node(pgdat, zonerefs);
    zonerefs += nr_zones;
    zonerefs->zone = NULL;
    zonerefs->zone_idx = 0;
}
```

The ZONELIST_NOFALLBACK zonelist contains only this node's zones, sorted from the highest zone type to the lowest.

ZONELIST_NOFALLBACK serves allocations that want memory only from a specified node or from the local node. If that node does not have enough memory, the caller learns about the shortage immediately. The request does not silently fall back to other nodes. Such callers typically obtain the node id with numa_node_id() or cpu_to_node() and then allocate only from that node.

Some kernel allocations do not want or cannot tolerate this allocation fallback behavior. Rather they want to be sure they get memory from the specified node or get notified that the node has no free memory. This is usually the case when a subsystem allocates per CPU memory resources, for example.

A typical model for making such an allocation is to obtain the node id of the node to which the "current CPU" is attached using one of the kernel's numa_node_id() or cpu_to_node() functions and then request memory from only the node id returned. When such an allocation fails, the requesting subsystem may revert to its own fallback path. The slab kernel memory allocator is an example of this. Or, the subsystem may choose to disable or not to enable itself on allocation failure. The kernel profiling subsystem is an example of this.
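The pattern described above can be sketched as a user-space model. First comes a node-only request that reports failure immediately. Then the caller applies its own policy: it either runs a private fallback path or gives up. The free-page table and all function names are hypothetical, and allow_remote is an illustrative switch between the two policies mentioned in the text.

```c
#include <assert.h>

#define NR_NODES 2

/* Hypothetical free-page counts per node (user-space model only). */
static unsigned long node_free[NR_NODES] = {0, 64};

/*
 * Model of a __GFP_THISNODE-style request: only node nid is consulted,
 * and failure is reported immediately instead of falling back.
 * Returns the node used, or -1.
 */
static int alloc_this_node_only(int nid, unsigned long nr_pages)
{
    if (node_free[nid] < nr_pages)
        return -1;              /* the caller is told right away */
    node_free[nid] -= nr_pages;
    return nid;
}

/*
 * A subsystem that wants node-local memory (e.g. per-CPU data) and decides
 * for itself what happens on failure: with allow_remote it runs its own
 * fallback path; without it, it simply gives up (e.g. stays disabled).
 */
int subsystem_alloc(int local_nid, unsigned long nr_pages, int allow_remote)
{
    int got = alloc_this_node_only(local_nid, nr_pages);
    if (got >= 0 || !allow_remote)
        return got;

    /* The subsystem's own fallback path: try the other nodes in id order. */
    for (int n = 0; n < NR_NODES; n++) {
        if (n == local_nid)
            continue;
        got = alloc_this_node_only(n, nr_pages);
        if (got >= 0)
            return got;
    }
    return -1;
}
```

In this model, node 0 has no free pages. A request from node 0 with allow_remote == 0 therefore fails at once. With allow_remote set, the subsystem's own loop places the pages on node 1.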

build_zonerefs_node

build_zonerefs_node() adds zones from the specified node to the zone list from high to low:

```c
/*
 * Builds allocation fallback zone lists.
 *
 * Add all populated zones of a node to the zonelist.
 */
static int build_zonerefs_node(pg_data_t *pgdat, struct zoneref *zonerefs)
{
    struct zone *zone;
    enum zone_type zone_type = MAX_NR_ZONES;
    int nr_zones = 0;

    do {
        zone_type--;
        zone = pgdat->node_zones + zone_type;
        if (managed_zone(zone)) {
            zoneref_set_zone(zone, &zonerefs[nr_zones++]);
            check_highest_zone(zone_type);
        }
    } while (zone_type);

    return nr_zones;
}
```

The loop starts from the highest zone type the system supports. It adds the zones in the specified node's pg_data_t node_zones array to the zoneref array from high to low, skipping zones that have no managed pages (those for which managed_zone() returns false). As a result, allocations try the higher zone types first.
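The loop above can be reproduced as a user-space model to show the high-to-low walk and the skipping of unmanaged zones. The zone types (an x86_64-like DMA, DMA32, NORMAL, MOVABLE set), the cut-down structs and the function names are hypothetical simplifications. zone_is_managed mirrors the idea behind managed_zone(), which tests whether a zone has managed pages. As in the kernel code, zone_type starts at MAX_NR_ZONES and is decremented before use, so the last iteration handles type 0.

```c
#include <assert.h>

/* Simplified zone types, low to high (user-space model). */
enum zone_type { ZONE_DMA, ZONE_DMA32, ZONE_NORMAL, ZONE_MOVABLE, MAX_NR_ZONES };

struct zone { unsigned long managed_pages; };

struct zoneref { struct zone *zone; int zone_idx; };

/* Cut-down node descriptor holding only node_zones. */
struct pglist { struct zone node_zones[MAX_NR_ZONES]; };

/* Mirrors the idea of managed_zone(): the zone has managed pages. */
static int zone_is_managed(const struct zone *z)
{
    return z->managed_pages != 0;
}

/*
 * Model of build_zonerefs_node(): start one past the highest zone type and
 * count down, so zonerefs[] receives the node's managed zones high to low.
 */
int model_build_zonerefs_node(struct pglist *pgdat, struct zoneref *zonerefs)
{
    enum zone_type zone_type = MAX_NR_ZONES;
    int nr_zones = 0;

    do {
        zone_type--;
        struct zone *zone = &pgdat->node_zones[zone_type];
        if (zone_is_managed(zone)) {
            zonerefs[nr_zones].zone = zone;       /* like zoneref_set_zone() */
            zonerefs[nr_zones].zone_idx = zone_type;
            nr_zones++;
        }
    } while (zone_type);

    return nr_zones;
}
```

Consider a node that has managed pages only in ZONE_DMA and ZONE_NORMAL. The model returns 2, with ZONE_NORMAL in the first slot and ZONE_DMA in the second. The empty DMA32 and MOVABLE zones are skipped.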