Preface

In this article we take a brief look at LLVM, a compiler infrastructure, and analyze how it works. Once the LLVM compilation process is clear, we will go on to implement a simple Clang plug-in. Let's get started.

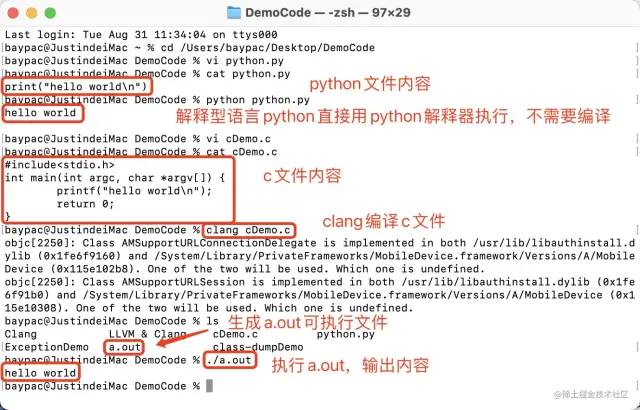

Before studying the compiler, first understand the difference between interpreted languages and compiled languages.

- Interpreted language: the program does not need to be compiled ahead of time. It is translated into machine language only while it runs, and it must be translated again on every execution. Efficiency is low and an interpreter is required, but cross-platform support is good.

- Compiled language: before the program is executed, a separate compilation step turns it into a machine language file. Nothing has to be re-translated at run time, and the compiled result is used directly. Execution efficiency is high, but a compiler is required and cross-platform support is poor.

So is there a way to make a program efficient and still keep good cross-platform support?

Of course there is. LLVM, the subject of today's article, offers one answer.

1: LLVM

1.1: LLVM overview

LLVM is a compiler infrastructure framework written in C++. It is designed to optimize programs written in arbitrary programming languages at compile time, link time, run time and idle time. It is open to developers and compatible with existing scripts.

The LLVM project was started in 2000, initially led by Chris Lattner at the University of Illinois at Urbana-Champaign (UIUC). In 2005, Chris Lattner joined Apple Inc. and worked on applying LLVM in Apple's development systems. Apple is also a major sponsor of the LLVM project.

LLVM is now used in Apple's iOS development tools and in Xilinx Vivado, and by Facebook, Google and other major companies.

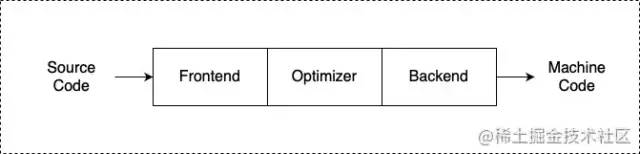

1.2: traditional compiler design

1.2.1: compiler front end

The task of the compiler front end is to parse the source code. It will perform lexical analysis, syntax analysis and semantic analysis, check the source code for errors, and then build an Abstract Syntax Tree (AST). The front end of LLVM will also generate intermediate representation (IR).

1.2.2: Optimizer

The optimizer is responsible for various optimizations: reducing the size of the binary (for example by stripping symbols) and improving the run time of the code (eliminating redundant calculations, reducing the number of pointer jumps, etc.).

1.2.3: Backend / CodeGenerator

The back end maps the code to the target instruction set. It generates machine language and performs machine-specific optimizations.

Because traditional compilers (such as GCC) are designed as one monolithic application whose parts are hard to reuse on their own, their use is greatly limited.

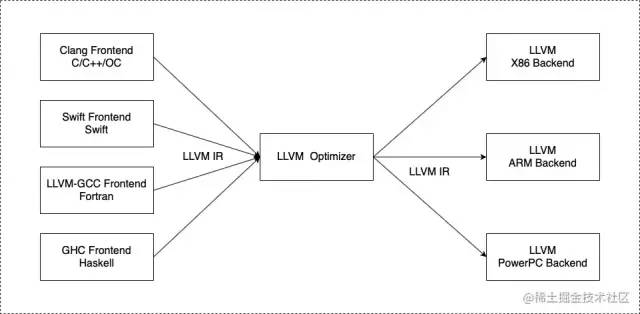

1.3: Design of LLVM

The value of LLVM's design shows when a compiler has to support multiple source languages or multiple hardware architectures.

The most important aspect of LLVM's design is a common code representation (IR), the form in which code is represented inside the compiler. With LLVM, a front end can be written independently for any programming language, and a back end can be written independently for any hardware architecture.

When you need to support a new language, you only need to write an independent front end that generates IR. When you need to support a new hardware architecture, you only need to write an independent back end that consumes IR.
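The effect of a shared IR can be sketched in a few lines of Python. This is a toy model under loud assumptions, not LLVM's API: the two front ends, the two back ends, the tuple-based "IR" and both output syntaxes are invented purely for illustration.

```python
# Toy model of the "N front ends + M back ends" design; nothing here is LLVM API.
# The "IR" is just a list of (op, operand) tuples for a tiny stack machine.

def frontend_infix(src):
    """Hypothetical front end for input such as '10 + 20'."""
    left, right = (int(part) for part in src.split("+"))
    return [("push", left), ("push", right), ("add", None)]

def frontend_prefix(src):
    """Hypothetical front end for input such as 'add 10 20'."""
    _, left, right = src.split()
    return [("push", int(left)), ("push", int(right)), ("add", None)]

def backend_alpha(ir):
    """Hypothetical back end that prints upper-case mnemonics."""
    return [op.upper() if arg is None else f"{op.upper()} {arg}" for op, arg in ir]

def backend_beta(ir):
    """Hypothetical back end with a different made-up syntax."""
    names = {"push": "ld", "add": "sum"}
    return [names[op] if arg is None else f"{names[op]} #{arg}" for op, arg in ir]

FRONTENDS = {"infix": frontend_infix, "prefix": frontend_prefix}
BACKENDS = {"alpha": backend_alpha, "beta": backend_beta}

def compile_source(language, target, src):
    # Any front end can be paired with any back end because both agree on the IR.
    return BACKENDS[target](FRONTENDS[language](src))

print(compile_source("infix", "alpha", "10 + 20"))
print(compile_source("prefix", "beta", "add 10 20"))
```

In this sketch, supporting a third input language means adding one more front-end function; both back ends keep working unchanged, which is the point the paragraph above makes about IR.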

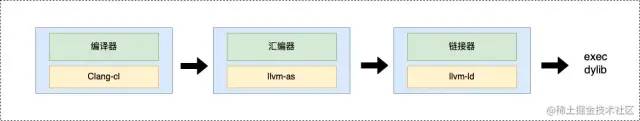

1.3.1: compiler architecture for iOS

Objective-C, C and C++ use Clang as the compiler front end, Swift uses the Swift compiler front end, and all of them use LLVM as the back end.

2: Clang

Clang is a subproject of the LLVM project. It is a lightweight compiler built on the LLVM architecture, created to replace GCC and to provide faster compilation. It is responsible for compiling C, C++ and Objective-C, and within the overall LLVM architecture it is the compiler front end. For developers, studying Clang brings many benefits.

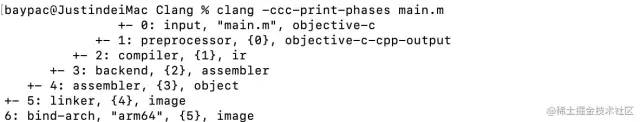

2.1: compilation process

You can print the compilation phases of a source file with the following command:

clang -ccc-print-phases main.m

The printed phases are as follows:

- Input file: the source file is located.

- Preprocessing stage: this includes macro replacement and header file import.

- Compilation stage: performs lexical analysis and syntax analysis, checks whether the syntax is correct, and finally generates IR (or bitcode).

- Back end: LLVM optimizes Pass by Pass (each Pass is one link or stage in the chain). Each Pass does one job, and assembly code is generated at the end.

- Generate the object file.

- Link: links the required dynamic and static libraries to generate an executable file.

- Generate the executable file for the target hardware architecture (here an M1 iMac, arm64).

The optimizer does not appear as a separate phase because optimization is distributed across the front end and the back end.

0: input source file

Source file found.

1: Preprocessing stage

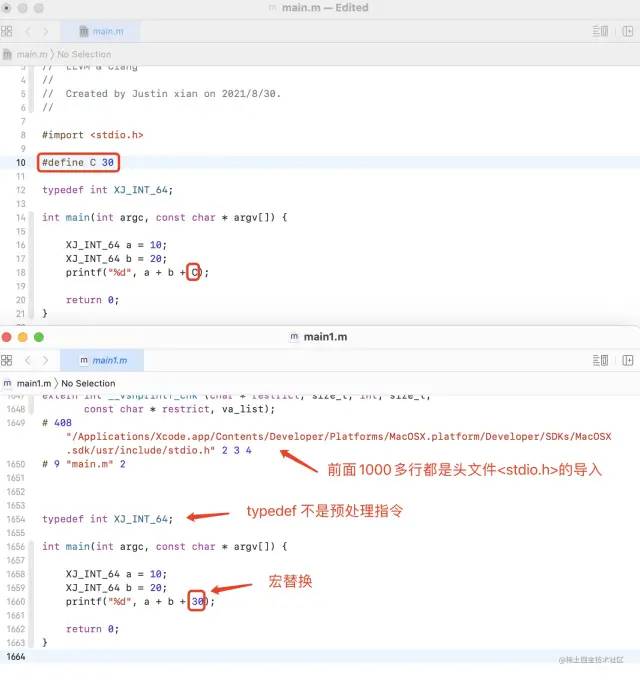

This stage executes the preprocessing directives, including macro replacement, header file import and conditional compilation, and hands the resulting source code to the compiler.

You can view the code produced by preprocessing with the following commands:

// View directly in the terminal

clang -E main.m

// Generate the file main1.m and view it there

clang -E main.m >> main1.m
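Macro replacement, the first item in that list, can be illustrated with a short Python sketch. It is a toy and not the C preprocessor: it assumes only object-like macros of the form `#define NAME value`, ignores header import and conditional compilation, and would also replace names inside string literals. The `preprocess` function and the sample source are made up for the example.

```python
import re

def preprocess(source):
    """Expand object-like '#define NAME value' macros; a toy, not the C preprocessor."""
    macros = {}
    output = []
    for line in source.splitlines():
        match = re.match(r"\s*#define\s+(\w+)\s+(.+)", line)
        if match:
            macros[match.group(1)] = match.group(2).strip()
            continue  # the directive itself is not passed on to the compiler
        for name, value in macros.items():
            # \b keeps 'C' from matching inside longer identifiers such as 'Count'
            line = re.sub(rf"\b{re.escape(name)}\b", lambda _m, v=value: v, line)
        output.append(line)
    return "\n".join(output)

SOURCE = """#define C 30
int total = a + b + C;
int Count = C;"""

print(preprocess(SOURCE))
```

The compiler stages that follow never see the name `C`, only the text `30`, which is why the AST later in this article contains a plain integer literal at that position.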

2: Compilation phase

Perform lexical analysis, syntax analysis and semantic analysis, check whether the syntax is correct, and generate the AST and then IR (.ll) or bitcode (.bc) files.

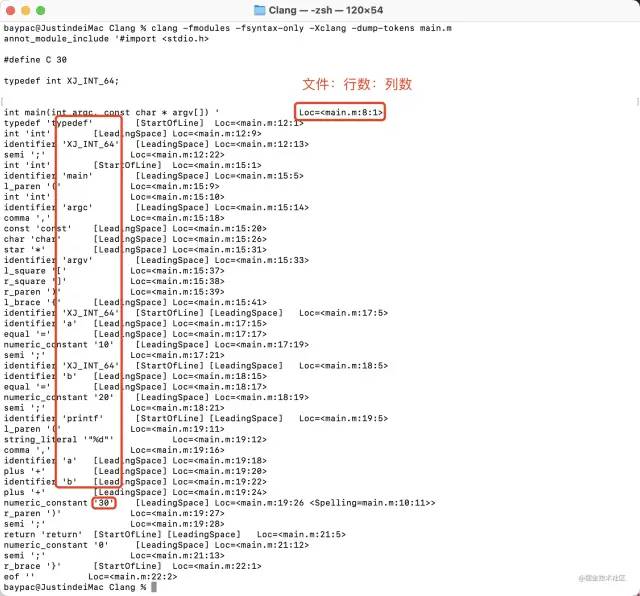

2.1: lexical analysis

After preprocessing, lexical analysis splits the code into tokens (keywords, class names, method names, variable names, brackets, operators, etc.) and records the line and column of each one.

You can see the result of lexical analysis by using the following command:

clang -fmodules -fsyntax-only -Xclang -dump-tokens main.m
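To make the idea of tokens with line and column numbers concrete, here is a minimal tokenizer sketch in Python. It is an assumption-laden toy: the token kinds, the tiny keyword set and the `tokenize` function are invented for illustration and do not match the token names Clang prints.

```python
import re

TOKEN_SPEC = [
    ("number", r"\d+"),
    ("identifier", r"[A-Za-z_]\w*"),
    ("punctuation", r"[=+;(){}]"),
    ("whitespace", r"[ \t]+"),
]
TOKEN_RE = re.compile("|".join(f"(?P<{kind}>{pattern})" for kind, pattern in TOKEN_SPEC))
KEYWORDS = {"int", "return", "typedef"}  # deliberately tiny, for the example only

def tokenize(source):
    """Yield (kind, text, line, column) tuples; line and column are 1-based."""
    for line_no, line in enumerate(source.splitlines(), start=1):
        pos = 0
        while pos < len(line):
            match = TOKEN_RE.match(line, pos)
            if match is None:
                raise SyntaxError(f"unexpected character {line[pos]!r} at {line_no}:{pos + 1}")
            kind, text = match.lastgroup, match.group()
            if kind == "identifier" and text in KEYWORDS:
                kind = "keyword"
            if kind != "whitespace":
                yield (kind, text, line_no, pos + 1)
            pos = match.end()

for token in tokenize("int a = 10;\nreturn a + 20;"):
    print(token)
```

Each tuple carries the same kind of information the paragraph above describes: what the token is, its text, and where it sits in the file.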


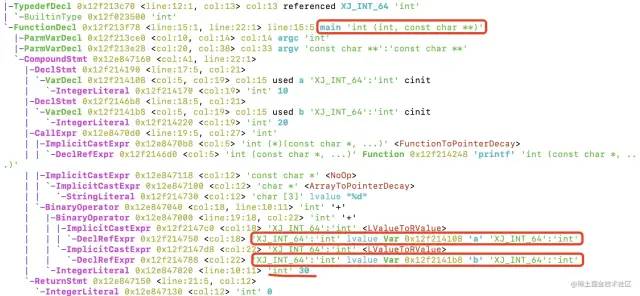

2.2: syntax analysis

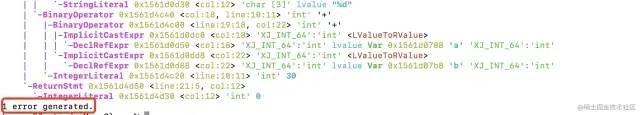

After lexical analysis comes syntax analysis. Its task is to verify that the syntactic structure of the source code is correct. Building on lexical analysis, it combines token sequences into grammatical phrases such as statements and expressions, and then assembles all the nodes into an Abstract Syntax Tree (AST).

You can view the result of parsing through the following command:

clang -fmodules -fsyntax-only -Xclang -ast-dump main.m

// If an imported header file cannot be found, you can specify the SDK (replace SDK_PATH with the path to your SDK)

clang -isysroot SDK_PATH -fmodules -fsyntax-only -Xclang -ast-dump main.m

Syntax tree analysis:

// The hexadecimal values here are the in-memory addresses of the AST nodes inside the compiler process; they only identify nodes within this dump

// They are not the virtual addresses that appear when a Mach-O file is disassembled

// typedef, node address 0x1298ad470

-TypedefDecl 0x1298ad470 <line:12:1, col:13> col:13 referenced XJ_INT_64 'int'

| `-BuiltinType 0x12a023500 'int'

// main function, return value int, first parameter int, second parameter const char**

`-FunctionDecl 0x1298ad778 <line:15:1, line:22:1> line:15:5 main 'int (int, const char **)'

// First parameter

|-ParmVarDecl 0x1298ad4e0 <col:10, col:14> col:14 argc 'int'

// Second parameter

|-ParmVarDecl 0x1298ad628 <col:20, col:38> col:33 argv 'const char **':'const char **'

// Compound statement, from column 41 of the current line to line 22, column 1

// That is, the main function {} range

/*

{

XJ_INT_64 a = 10;

XJ_INT_64 b = 20;

printf("%d", a + b + C);

return 0;

}

*/

`-CompoundStmt 0x12a1aa560 <col:41, line:22:1>

// Declare line 17, column 5 to column 21, i.e. XJ_INT_64 a = 10

|-DeclStmt 0x1298ad990 <line:17:5, col:21>

// Variable a, node address 0x1298ad908

| `-VarDecl 0x1298ad908 <col:5, col:19> col:15 used a 'XJ_INT_64':'int' cinit

// The value is 10

| `-IntegerLiteral 0x1298ad970 <col:19> 'int' 10

// Declare line 18, column 5 to column 21, i.e. XJ_INT_64 b = 20

|-DeclStmt 0x1298adeb8 <line:18:5, col:21>

// Variable b, node address 0x1298ad9b8

| `-VarDecl 0x1298ad9b8 <col:5, col:19> col:15 used b 'XJ_INT_64':'int' cinit

// The value is 20

| `-IntegerLiteral 0x1298ada20 <col:19> 'int' 20

// Call printf function

|-CallExpr 0x12a1aa4d0 <line:19:5, col:27> 'int'

// Function pointer type, i.e. int printf(const char * __restrict, ...)

| |-ImplicitCastExpr 0x12a1aa4b8 <col:5> 'int (*)(const char *, ...)' <FunctionToPointerDecay>

// printf function; 0x1298ada48 is the node address of the declaration being referenced

| | `-DeclRefExpr 0x1298aded0 <col:5> 'int (const char *, ...)' Function 0x1298ada48 'printf' 'int (const char *, ...)'

// The first argument is the string literal between the quotes

| |-ImplicitCastExpr 0x12a1aa518 <col:12> 'const char *' <NoOp>

// Type description

| | `-ImplicitCastExpr 0x12a1aa500 <col:12> 'char *' <ArrayToPointerDecay>

// %d

| | `-StringLiteral 0x1298adf30 <col:12> 'char [3]' lvalue "%d"

// Addition: the left operand is the value of a + b, the right operand is 30

| `-BinaryOperator 0x12a1aa440 <col:18, line:10:11> 'int' '+'

// Addition, a + b

| |-BinaryOperator 0x12a1aa400 <line:19:18, col:22> 'int' '+'

// Type description

| | |-ImplicitCastExpr 0x1298adfc0 <col:18> 'XJ_INT_64':'int' <LValueToRValue>

// a

| | | `-DeclRefExpr 0x1298adf50 <col:18> 'XJ_INT_64':'int' lvalue Var 0x1298ad908 'a' 'XJ_INT_64':'int'

// Type description

| | `-ImplicitCastExpr 0x1298adfd8 <col:22> 'XJ_INT_64':'int' <LValueToRValue>

// b

| | `-DeclRefExpr 0x1298adf88 <col:22> 'XJ_INT_64':'int' lvalue Var 0x1298ad9b8 'b' 'XJ_INT_64':'int'

// 30, the result of macro replacement (the literal comes from line 10, column 11, where the macro C is defined)

| `-IntegerLiteral 0x12a1aa420 <line:10:11> 'int' 30

// return 0

`-ReturnStmt 0x12a1aa550 <line:21:5, col:12>

`-IntegerLiteral 0x12a1aa530 <col:12> 'int' 0
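The nesting of the two BinaryOperator nodes in the dump above can be reproduced with a small hand-written parser. The sketch below is only an illustration under stated assumptions: it handles nothing but `+` between names and integers, it borrows the node names from the dump purely as labels, and it omits the implicit casts that Clang inserts. The functions `parse_sum`, `leaf` and `dump` are hypothetical.

```python
import re

def parse_sum(expression):
    """Parse 'x + y + ...' into a left-nested tree of ('+', left, right) tuples."""
    tokens = re.findall(r"\w+|\+", expression)
    if not tokens or tokens[0] == "+":
        raise SyntaxError("expected an operand at the start")
    tree = leaf(tokens[0])
    rest = tokens[1:]
    if len(rest) % 2 != 0:
        raise SyntaxError("operators and operands must alternate")
    for operator, operand in zip(rest[0::2], rest[1::2]):
        if operator != "+" or operand == "+":
            raise SyntaxError(f"unexpected token near {operator!r} {operand!r}")
        tree = ("+", tree, leaf(operand))  # '+' is left-associative
    return tree

def leaf(text):
    # Integer literals and variable references become different node kinds.
    return ("int", int(text)) if text.isdigit() else ("ref", text)

def dump(node, depth=0):
    """Return an indented listing of the tree, loosely styled after an AST dump."""
    pad = "  " * depth
    if node[0] == "+":
        return [f"{pad}BinaryOperator '+'"] + dump(node[1], depth + 1) + dump(node[2], depth + 1)
    label = "IntegerLiteral" if node[0] == "int" else "DeclRefExpr"
    return [f"{pad}{label} {node[1]}"]

print("\n".join(dump(parse_sum("a + b + 30"))))
```

As in the real dump, `a + b` forms the inner node and becomes the left operand of the outer addition with `30`. Malformed input such as `a +` raises a `SyntaxError`, which is the toy counterpart of the syntax errors the compiler reports.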


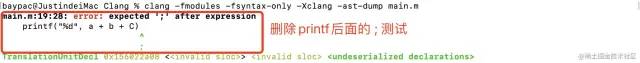

In case of syntax error, the corresponding error will be pointed out:

2.3: generate intermediate code IR (intermediate representation)

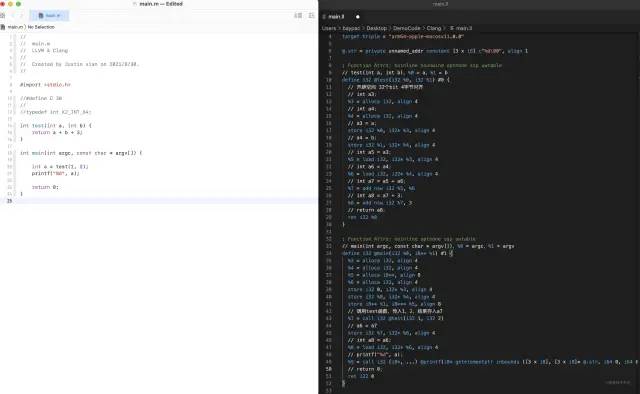

After the steps above, the intermediate code (IR) is generated. The code generator traverses the syntax tree from top to bottom and translates it step by step into LLVM IR. In this step, Objective-C code also gets its runtime bridging, such as property synthesis and ARC handling.

2.3.1: basic syntax of IR

- @: global identifier

- %: local identifier

- alloca: allocate stack space

- align: memory alignment

- i32: 32-bit integer, 4 bytes

- store: write to memory

- load: read from memory

- call: call a function

- ret: return
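To show how these keywords fit together, the following Python sketch prints IR-like text for a function that stores two integers and returns their sum. Treat it as an approximation: the output is modelled on the keywords listed above and has not been validated with LLVM tools, the `ptr` spelling assumes a recent LLVM release (older releases print pointer types such as `i32*`), and `emit_function` is a hypothetical helper.

```python
def emit_function(name, variables, result_terms):
    """Emit IR-like text for a function that stores ints, then returns their sum."""
    lines = [f"define i32 @{name}() {{"]                       # @ marks a global name
    for var, value in variables.items():
        lines.append(f"  %{var} = alloca i32, align 4")           # reserve stack space
        lines.append(f"  store i32 {value}, ptr %{var}, align 4")  # write the initial value
    temp = 0
    loaded = []
    for var in result_terms:
        temp += 1
        lines.append(f"  %{temp} = load i32, ptr %{var}, align 4")  # read the value back
        loaded.append(f"%{temp}")
    total = loaded[0]
    for operand in loaded[1:]:
        temp += 1
        lines.append(f"  %{temp} = add nsw i32 {total}, {operand}")
        total = f"%{temp}"
    lines.append(f"  ret i32 {total}")                          # return the final value
    lines.append("}")
    return "\n".join(lines)

print(emit_function("sum", {"a": 10, "b": 20}, ["a", "b"]))
```

The printed text has the same shape as unoptimized IR: every variable gets its own `alloca`, every use goes through a `load`, and nothing is simplified yet.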

You can generate a .ll text file with the following command to view the IR code:

clang -S -fobjc-arc -emit-llvm main.m


2.4: optimization of IR

In the IR code above, we can see that IR generated by translating the syntax tree piece by piece looks rather clumsy, but it can be optimized.

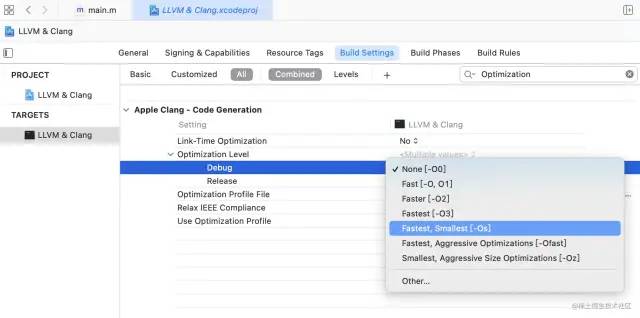

The optimization levels of LLVM are -O0, -O1, -O2, -O3, -Os, -Ofast and -Oz (the first character is the capital letter O).

You can optimize using the command:

clang -Os -S -fobjc-arc -emit-llvm main.m -o main.ll


The optimized IR code is concise and clear. A higher optimization level is not necessarily better: release mode uses -Os, which is also the most commonly recommended level.

In Xcode, you can also set this under Target -> Build Settings -> Optimization Level.
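One classic way of eliminating redundant calculations is constant folding: an expression whose operands are all known at compile time is replaced by its value. The Python sketch below shows the idea on a tiny expression tree. It is not LLVM's implementation; the tuple representation and the `fold_constants` function are assumptions made for the example.

```python
def fold_constants(node):
    """Replace ('+', int, int) subtrees with their computed value, bottom-up."""
    if not isinstance(node, tuple):
        return node  # a leaf: an int constant or a variable name
    op, left, right = node
    left, right = fold_constants(left), fold_constants(right)
    if op == "+" and isinstance(left, int) and isinstance(right, int):
        return left + right
    return (op, left, right)

# 10 + 20 + 30 is known entirely at compile time, so it collapses to one constant.
print(fold_constants(("+", ("+", 10, 20), 30)))
# With an unknown variable on the left, this simple pass cannot fold anything.
print(fold_constants(("+", ("+", "x", 20), 30)))
```

The second call shows a limit of such a simple pass: folding `x + 20 + 30` into `x + 50` would require reassociating the additions first, which is the kind of extra work a real optimizer performs in additional passes.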

2.5: bitcode

Since Xcode 7, if bitcode is enabled, Apple further optimizes the .ll IR file and generates intermediate code in a .bc file.

Use the following command to generate .bc bitcode from the optimized IR:

clang -emit-llvm -c main.ll -o main.bc


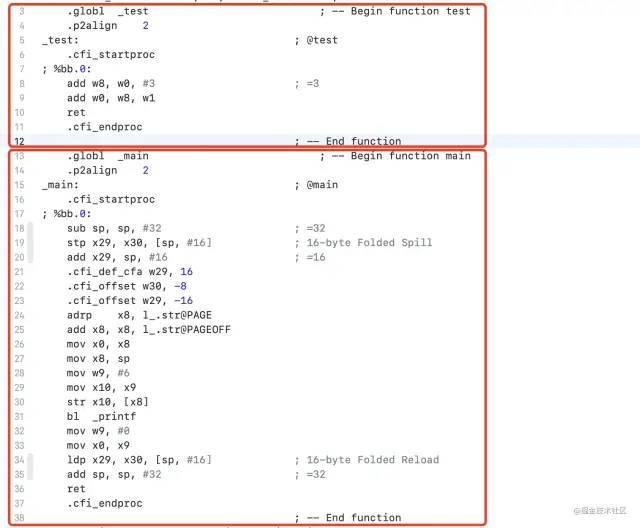

3: Back end stage (generate assembly .s)

The back end turns the IR it receives into different objects to process and implements each processing step as a Pass. Transformation, analysis and optimization of the IR are all carried out by running Passes, after which assembly code (.s) is generated.
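The "one Pass after another" structure can be sketched as a list of functions that each take a program and return a transformed program. Everything in this Python sketch is hypothetical: the instruction syntax is made up, the two passes are deliberately trivial, and LLVM's real pass infrastructure is far richer.

```python
def split_instruction(text):
    """Split 'op a, b' into ('op', ['a', 'b'])."""
    op, _, operands = text.partition(" ")
    return op, [part.strip() for part in operands.split(",") if part.strip()]

def is_self_move(ins):
    op, args = split_instruction(ins)
    return op == "mov" and len(args) == 2 and args[0] == args[1]

def is_add_zero(ins):
    op, args = split_instruction(ins)
    return op == "add" and len(args) == 3 and args[0] == args[1] and args[2] == "0"

def drop_self_moves(instructions):
    """Pass 1: remove 'mov x, x', which copies a register onto itself."""
    return [ins for ins in instructions if not is_self_move(ins)]

def drop_add_zero(instructions):
    """Pass 2: remove 'add x, x, 0', which leaves the register unchanged."""
    return [ins for ins in instructions if not is_add_zero(ins)]

def run_passes(instructions, passes):
    # Each pass receives the output of the previous one.
    for run_pass in passes:
        instructions = run_pass(instructions)
    return instructions

PROGRAM = ["mov r0, r0", "add r1, r1, 0", "add r1, r1, 5", "ret"]
print(run_passes(PROGRAM, [drop_self_moves, drop_add_zero]))
```

Because every pass has the same input and output shape, passes can be added, removed or reordered without touching one another, which is the design idea behind organizing the back end as a chain of Passes.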

Use the following commands to generate assembly code from .bc or .ll code:

// bitcode -> .s

clang -S -fobjc-arc main.bc -o main.s

// IR -> .s

clang -S -fobjc-arc main.ll -o main.s

// Assembly code can also be optimized

clang -Os -S -fobjc-arc main.ll -o main.s

4: Assembly phase (generate object file .o)

To produce the object file, the assembler takes the assembly code as input, converts it into machine code, and outputs an object file (.o).

The command is as follows:

clang -fmodules -c main.s -o main.o


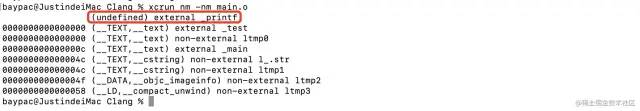

Use the nm command to view the symbols in main.o:

xcrun nm -nm main.o


The output results are as follows:

In the output, the symbol _printf is reported as an undefined external symbol. The function is imported from outside, so the library that provides it has to be linked in.

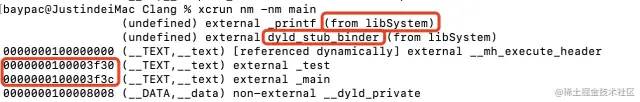

5: Link phase (generate executable Mach-O)

The linker links the compiled .o files together with the required dynamic libraries (.dylib) and static libraries (.a) to generate an executable file (a Mach-O file).

The command is as follows:

clang main.o -o main

To view symbols after a link:

In the output, _printf is still listed as an undefined external symbol, but it is now followed by (from libSystem), which indicates that the library containing _printf is libSystem. The symbol stays undefined in the file because libSystem is a dynamic library that has to be bound dynamically at run time.

The test function and the main function have also been assigned their offsets within the file, so this file is now a valid executable.

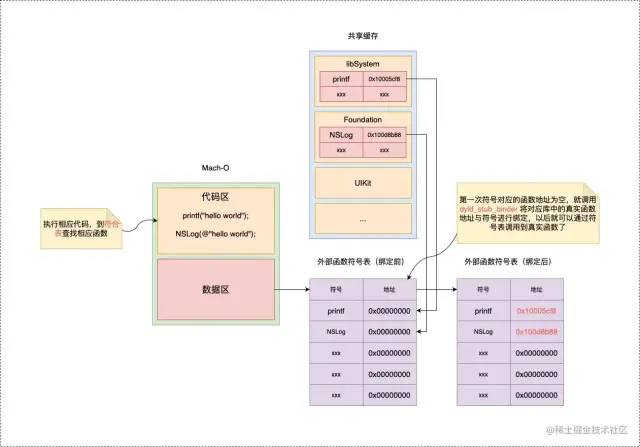

There is also a dyld_stub_binder symbol. It appears whenever linking takes place, and it is responsible for dynamic binding. Once the Mach-O file is loaded into memory (i.e. executed), dyld immediately binds the address of the dyld_stub_binder function in libSystem to the corresponding symbol in the Mach-O file.

The dyld_stub_binder symbol is bound non-lazily. Other symbols, such as _printf here, are bound lazily: the first time _printf is used, dyld_stub_binder binds the real function address to the symbol. After that, a call finds the function address in the corresponding library through the symbol and invokes it.

External function binding diagram:

Difference between link and binding:

- Link: at compile time, only a mark is made recording which library each symbol is in.

- Binding: when running, bind the external function address with the symbol in Mach-O.
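The difference between the two steps can be modelled in Python. This is a simulation under explicit assumptions, not how dyld is implemented: a "library" is just a dictionary, `link` and `LazyBinder` are hypothetical names, and the fake `_printf` merely returns a string.

```python
# Hypothetical stand-ins: a "library" is a dict mapping symbol names to functions.
LIB_SYSTEM = {"_printf": lambda text: f"printed {text}"}
LIBRARIES = {"libSystem": LIB_SYSTEM}

def link(undefined_symbols, libraries):
    """Link step: only record which library each external symbol comes from."""
    table = {}
    for symbol in undefined_symbols:
        for lib_name, exports in libraries.items():
            if symbol in exports:
                table[symbol] = lib_name
                break
        else:
            raise LookupError(f"undefined symbol {symbol}")
    return table

class LazyBinder:
    """Run-time step: resolve a symbol to a real function on its first call."""

    def __init__(self, link_table, libraries):
        self.link_table = link_table
        self.libraries = libraries
        self.bound = {}      # symbol -> resolved function
        self.bind_count = 0  # how many lookups were actually needed

    def call(self, symbol, *args):
        if symbol not in self.bound:
            library = self.libraries[self.link_table[symbol]]
            self.bound[symbol] = library[symbol]
            self.bind_count += 1
        return self.bound[symbol](*args)

table = link(["_printf"], LIBRARIES)
binder = LazyBinder(table, LIBRARIES)
print(table)
print(binder.call("_printf", "30"), binder.call("_printf", "60"), binder.bind_count)
```

After `link`, the table only says that `_printf` lives in libSystem. The function itself is looked up on the first call and reused on the second, so the binding happens once, which mirrors the lazy binding described above.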

Execute the Mach-O file with the following command:

./main

Execution results:

6: Binding hardware architecture

Generate the executable file for the target hardware architecture (here an M1 iMac, arm64).

2.2: summarize the compilation process

2.2.1: commands used in each stage

// ===== Front end start =====

// 1. Lexical analysis

clang -fmodules -fsyntax-only -Xclang -dump-tokens main.m

// 2. Syntax analysis

clang -fmodules -fsyntax-only -Xclang -ast-dump main.m

// 3. Generate the IR file

clang -S -fobjc-arc -emit-llvm main.m

// 3.1 Specify an optimization level when generating the IR file

clang -Os -S -fobjc-arc -emit-llvm main.m -o main.ll

// 3.2 (Depending on compiler settings) generate the bitcode file

clang -emit-llvm -c main.ll -o main.bc

// ===== Back end start =====

// 1. Generate the assembly file

// bitcode -> .s

clang -S -fobjc-arc main.bc -o main.s

// IR -> .s

clang -S -fobjc-arc main.ll -o main.s

// Specify an optimization level when generating the assembly file

clang -Os -S -fobjc-arc main.ll -o main.s

// 2. Generate the object file (Mach-O)

clang -fmodules -c main.s -o main.o

// 2.1 View the symbols in the object file

xcrun nm -nm main.o

// 3. Generate the executable Mach-O file

clang main.o -o main

// ===== Execution start =====

// 4. Execute the executable Mach-O file

./main

You may wonder: generating assembly files is already the job of the compiler back end, so why is the clang command still used? The reason is that we go through the interface clang provides, and clang invokes the corresponding back-end functionality for us. As for whether the back end has dedicated commands of its own, I am not sure. Explanations are welcome.

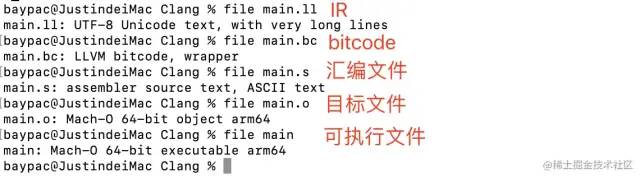

2.2.2: file types generated in each stage

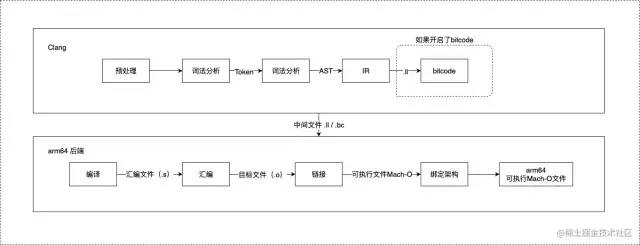

2.2.3: compilation flow chart

Summary and preview

- Interpreted languages & compiled languages

- LLVM compiler (emphasis):

  - Front end: reads the code, performs lexical analysis and syntax analysis, and generates the AST. Specific to LLVM: IR. Used by Apple: bitcode (.bc)

  - Optimizer: optimizes by running one Pass after another

  - Back end: generates assembly code, generates object files, links dynamic and static libraries, and generates the executable file for each target architecture

- What are the benefits of LLVM?

  - The front end and back end are separated, so it is highly extensible.

- LLVM compilation process (key points):

  - Input file: the source file is located.

  - Preprocessing stage: this includes macro replacement and header file import.

  - Compilation stage: performs lexical analysis and syntax analysis, checks whether the syntax is correct, and finally generates IR (or bitcode).

  - Back end: LLVM optimizes Pass by Pass (each Pass is one link or stage in the chain). Each Pass does one job, and assembly code is generated at the end.

  - Generate the object file.

  - Link: links the required dynamic and static libraries to generate an executable file.

  - Generate the executable file for the target hardware architecture (here an M1 iMac, arm64).

This article has introduced the concepts, design ideas and compilation process of LLVM and Clang. The next article will use LLVM and Clang to implement a simple plug-in. Stay tuned.

Original link:

https://juejin.cn/post/7002520678830342152