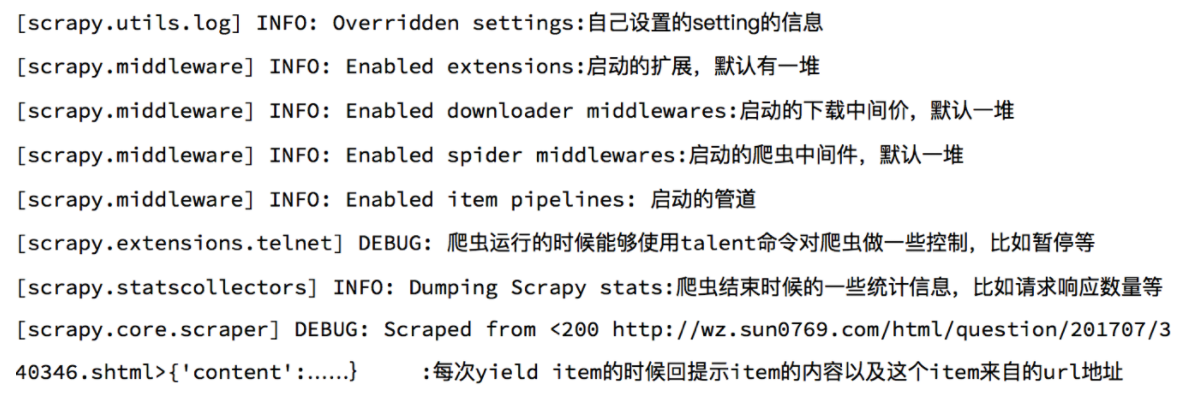

1. Log information of the sweep

2. Common configuration of scratch

- ROBOTSTXT_ Whether obey complies with the robots protocol. The default is compliance

- About robots protocol

- In Baidu search, you can't search the details page of a specific commodity on Taobao. This is the robots protocol at work

- Robots protocol: the website tells search engines which pages can be crawled and which pages cannot be crawled through robots protocol, but it is only a general agreement in the Internet

- For example: robots protocol of Taobao

- About robots protocol

- USER_AGENT settings ua

- DEFAULT_REQUEST_HEADERS sets the default request header, and user is added here_ Agent will not work

- ITEM_PIPELINES pipeline, left position right weight: the smaller the weight value, the more priority will be given to execution

- SPIDER_ The setting process of the middlewares crawler middleware is the same as that of the pipeline

- DOWNLOADER_MIDDLEWARES download Middleware

- COOKIES_ENABLED is True by default, which means that the cookie delivery function is enabled, that is, the previous cookie is brought with each request to maintain the status

- COOKIES_DEBUG defaults to False, indicating that the delivery process of cookie s is not displayed in the log

- LOG_LEVEL is DEBUG by default, which controls the level of logs

- LOG_LEVEL = "WARNING"

- LOG_FILE sets the save path of the log file. If this parameter is set, the log information will be written to the file, the terminal will no longer display, and will be affected by log_ Limit of level log level

- LOG_FILE = "./test.log"

- CONCURRENT_REQUESTS sets the number of concurrent requests. The default is 16

- DOWNLOAD_DELAY download delay, no delay by default, in seconds

3. scrapy_redis configuration

- DUPEFILTER_ Class = "scratch_redis. Dupefilter. Rfpdupefilter" # fingerprint generation and de duplication class

- Scheduler = "scenario_redis. Scheduler. Scheduler" # scheduler class

- SCHEDULER_PERSIST = True # persistent request queue and fingerprint collection

- ITEM_ Pipelines = {'scratch_redis. Pipelines. Redispipeline': 400}# data stored in redis pipeline

- REDIS_ url = " redis://host:port "# redis url

4. scrapy_splash configuration

SPLASH_URL = 'http://127.0.0.1:8050'

DOWNLOADER_MIDDLEWARES = {

'scrapy_splash.SplashCookiesMiddleware': 723,

'scrapy_splash.SplashMiddleware': 725,

'scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware': 810,

}

DUPEFILTER_CLASS = 'scrapy_splash.SplashAwareDupeFilter'

HTTPCACHE_STORAGE = 'scrapy_splash.SplashAwareFSCacheStorage'

5. scrapy_redis and scratch_ Splash configuration

5.1 principle

- "Dupefilter" is configured in scratch redis_ Class ":" scratch_redis.dupefilter.rfpdupefilter ", which is the same as dupefilter configured for scratch splash_ CLASS = ‘scrapy_splash.SplashAwareDupeFilter 'conflicts!

- Reviewed the script_ splash. After the source code of splashawardeupefilter, I found that it inherited the script dupefilter. Rfpdupefilter and rewritten the request_fingerprint() method.

- Compare the scene dupefilter. Rfpdupefilter and scratch_ redis. dupefilter. Request in rfpdupefilter_ After the fingerprint () method, it is found that it is the same, so a SplashAwareDupeFilter is rewritten to inherit the scratch_ redis. dupefilter. Rfpdupefilter, other codes remain unchanged.

5.2 rewrite dupefilter to remove duplicate classes, and in settings PY

5.2. 1 override de duplication class

from __future__ import absolute_import

from copy import deepcopy

from scrapy.utils.request import request_fingerprint

from scrapy.utils.url import canonicalize_url

from scrapy_splash.utils import dict_hash

from scrapy_redis.dupefilter import RFPDupeFilter

def splash_request_fingerprint(request, include_headers=None):

""" Request fingerprint which takes 'splash' meta key into account """

fp = request_fingerprint(request, include_headers=include_headers)

if 'splash' not in request.meta:

return fp

splash_options = deepcopy(request.meta['splash'])

args = splash_options.setdefault('args', {})

if 'url' in args:

args['url'] = canonicalize_url(args['url'], keep_fragments=True)

return dict_hash(splash_options, fp)

class SplashAwareDupeFilter(RFPDupeFilter):

"""

DupeFilter that takes 'splash' meta key in account.

It should be used with SplashMiddleware.

"""

def request_fingerprint(self, request):

return splash_request_fingerprint(request)

"""The above is the rewritten de duplication class, and the following is the crawler code"""

from scrapy_redis.spiders import RedisSpider

from scrapy_splash import SplashRequest

class SplashAndRedisSpider(RedisSpider):

name = 'splash_and_redis'

allowed_domains = ['baidu.com']

# start_urls = ['https://www.baidu.com/s?wd=13161933309']

redis_key = 'splash_and_redis'

# lpush splash_and_redis 'https://www.baidu.com'

# Distributed initial url cannot use splash service!

# You need to override dupefilter to duplicate classes!

def parse(self, response):

yield SplashRequest('https://www.baidu.com/s?wd=13161933309',

callback=self.parse_splash,

args={'wait': 10}, # Maximum timeout in seconds

endpoint='render.html') # Fixed parameters using splash service

def parse_splash(self, response):

with open('splash_and_redis.html', 'w') as f:

f.write(response.body.decode())

5.2.2 scrapy_redis and scratch_ Splash configuration

# url of the rendering service

SPLASH_URL = 'http://127.0.0.1:8050'

# Downloader Middleware

DOWNLOADER_MIDDLEWARES = {

'scrapy_splash.SplashCookiesMiddleware': 723,

'scrapy_splash.SplashMiddleware': 725,

'scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware': 810,

}

# Http cache using Splash

HTTPCACHE_STORAGE = 'scrapy_splash.SplashAwareFSCacheStorage'

# De duplication filter

# DUPEFILTER_CLASS = 'scrapy_splash.SplashAwareDupeFilter'

# DUPEFILTER_CLASS = "scrapy_redis.dupefilter.RFPDupeFilter" # Fingerprint generation and de duplication

DUPEFILTER_CLASS = 'test_splash.spiders.splash_and_redis.SplashAwareDupeFilter' # Location of mixed de duplication classes

SCHEDULER = "scrapy_redis.scheduler.Scheduler" # Scheduler class

SCHEDULER_PERSIST = True # Persistent request queue and fingerprint collection_ Redis and scratch_ Splash mix use splash's DupeFilter!

ITEM_PIPELINES = {'scrapy_redis.pipelines.RedisPipeline': 400} # Data is stored in the redis pipeline

REDIS_URL = "redis://127.0. 0.1:6379 "# redis url

be careful:

- scrapy_ The redis distributed crawler cannot automatically exit after the business logic ends

- The overridden dupefilter de duplication class can be located in a user-defined location, and the corresponding path must be written in the configuration file